Thinking out loud

Where we share the insights, questions, and observations that shape our approach.

What's new in the truck industry

64% of truck industry CEOs say the future success of their organization hinges upon the digital revolution. This should come as no surprise, as transportation as we knew it a decade or two ago is slowly fading into obscurity.

Operating standards in the industry are improving, and values such as speed, efficiency, eco-friendliness, and safety echo through announcements at industry conferences and in truck industry reports.

Self-driving, fully autonomous vehicles, mainly electrically powered and based on AI and the Internet of Things, are transforming 21st-century transportation. It is well worth looking at examples of solutions implemented by innovators with substantial development capital.

Solutions that improve the safety and driving performance of larger vehicles

Some innovations in particular are moving the industry forward, quite literally. Developments such as autonomous vehicles, electric trucks, Big Data, and cloud computing have changed the way goods and people are transported. Smart analytics not only makes supply chains more efficient but also enhances driving safety and the experience of traveling long distances.

High-tech trucks break down less often and cause fewer accidents. Self-driving technologies, which are still being developed, promise further savings in time and money.

AI, Big Data, Internet of Things

Better location tracking, improved ambient sensing, and enhanced fleet management: all of these benefits can be achieved by implementing IoT solutions.

Made up of devices and detectors in the vehicle and in the road infrastructure, the network continuously exchanges data in real time. It provides information about conditions on the route. It also reports whether the cargo is stable (tilt at the level of the pallet or package) and whether tire pressure is at the right level. This makes the work of drivers, shippers, and managers easier.
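The following minimal Java sketch makes the cargo-tilt and tire-pressure checks more concrete. It assumes a pallet-mounted 3-axis accelerometer and a tire-pressure sensor that report raw values. The class name CargoSensorCheck and all thresholds are hypothetical and are not taken from any real IoT platform.

```java
/**
 * Hypothetical sketch: turning raw readings from in-vehicle IoT sensors into
 * cargo-stability and tire-pressure checks. Thresholds are illustrative
 * assumptions, not values from any real product.
 */
final class CargoSensorCheck {

    // Assumed alert thresholds.
    static final double MAX_TILT_DEGREES = 15.0;
    static final double MIN_TIRE_BAR = 7.5;
    static final double MAX_TIRE_BAR = 9.0;

    /**
     * Tilt of a pallet-mounted 3-axis accelerometer relative to upright, in degrees.
     * At rest the sensor measures only gravity, so the angle between the measured
     * vector (ax, ay, az) and the sensor's z axis is the tilt.
     */
    static double tiltDegrees(double ax, double ay, double az) {
        double magnitude = Math.sqrt(ax * ax + ay * ay + az * az);
        if (magnitude == 0.0) {
            throw new IllegalArgumentException("no gravity reading");
        }
        // Clamp to [-1, 1] so rounding error can never make acos return NaN.
        double cosine = Math.max(-1.0, Math.min(1.0, az / magnitude));
        return Math.toDegrees(Math.acos(cosine));
    }

    /** True if the pallet leans further than the assumed limit. */
    static boolean cargoUnstable(double ax, double ay, double az) {
        return tiltDegrees(ax, ay, az) > MAX_TILT_DEGREES;
    }

    /** True if the tire pressure lies inside the assumed acceptable band. */
    static boolean tirePressureOk(double bar) {
        return bar >= MIN_TIRE_BAR && bar <= MAX_TIRE_BAR;
    }
}
```

For example, readings from a pallet leaning 20 degrees give a tilt of about 20 degrees. Under the assumed 15-degree limit, that pallet is flagged as unstable.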

This solution is used, for instance, by Kuehne + Nagel, one of the world's leading logistics companies. The company uses IoT sensors and a cloud-based platform in its daily work. It simply works.

The use of artificial intelligence algorithms is equally important. Advanced Big Data analytics, coupled with AI, allows companies to make decisions based on accurate, high-quality data. According to Supply Chain Management World research, 64 percent of executives believe that big data and related technologies will change the industry forever, because they will further improve performance forecasting and goal setting.

The logistics company Geodis measures its performance indicators this way. Its proprietary Neptune platform coordinates transportation activities in real time. With one app and a few clicks, carriers and customers can manage all activities during transport.

Failure prevention

Software-based solutions are eliminating a number of problems that have long been the bane of the trucking industry. These include breakdowns, which can take operationally important vehicles, a fleet's "arsenal", out of circulation. Increasingly, such incidents can be detected before they happen.

Drivers of the new Mercedes-Benz eActros, for example, can now use the intelligent Mercedes-Benz Uptime system. The service is based on more than 100 specific rules that continuously monitor processes such as charging. It also tracks the voltage history of the high-voltage battery.

All reliability-related information is available to customers via a dedicated cloud portal. In this way, the German manufacturer aims to keep unexpected faults to a minimum and make it easier to plan maintenance work for the fleet.
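A monitoring service built from many specific rules can be sketched in a few lines of Java. This is only an illustration under stated assumptions. The UptimeMonitor class, the telemetry fields, and the three example rules with their limits are hypothetical. They say nothing about how Mercedes-Benz Uptime is actually implemented.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

/**
 * Hypothetical sketch of rule-based uptime monitoring: each rule is a named
 * condition over one telemetry snapshot, and every rule that fires becomes an
 * alert. Fields, rules, and limits are illustrative assumptions.
 */
final class UptimeMonitor {

    /** One telemetry snapshot from an electric truck (assumed fields). */
    static final class Snapshot {
        final double packVoltage;     // high-voltage battery voltage, V
        final double chargingPowerKw; // current charging power, kW
        final boolean plugConnected;  // is a charging cable connected?
        final double maxCellTempC;    // hottest battery cell, deg C

        Snapshot(double packVoltage, double chargingPowerKw, boolean plugConnected, double maxCellTempC) {
            this.packVoltage = packVoltage;
            this.chargingPowerKw = chargingPowerKw;
            this.plugConnected = plugConnected;
            this.maxCellTempC = maxCellTempC;
        }
    }

    private final List<String> names = new ArrayList<>();
    private final List<Predicate<Snapshot>> conditions = new ArrayList<>();

    /** Registers a rule; returns this monitor so calls can be chained. */
    UptimeMonitor addRule(String name, Predicate<Snapshot> condition) {
        names.add(name);
        conditions.add(condition);
        return this;
    }

    /** Names of all rules that fire for the given snapshot, in registration order. */
    List<String> evaluate(Snapshot s) {
        List<String> alerts = new ArrayList<>();
        for (int i = 0; i < conditions.size(); i++) {
            if (conditions.get(i).test(s)) {
                alerts.add(names.get(i));
            }
        }
        return alerts;
    }

    /** Three hypothetical example rules; a real system would have many more. */
    static UptimeMonitor withExampleRules() {
        return new UptimeMonitor()
                .addRule("plugged in but not charging", s -> s.plugConnected && s.chargingPowerKw <= 0.0)
                .addRule("pack voltage out of range", s -> s.packVoltage < 550.0 || s.packVoltage > 850.0)
                .addRule("battery cell overheating", s -> s.maxCellTempC > 55.0);
    }
}
```

This design keeps rules as data rather than hard-coded if-statements. That is one way such a system could grow to a hundred or more rules without restructuring the code.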

Self-driving vehicles

Automated trucks equipped with short- and long-range radars, sensors, cameras, 3D mapping, and laser detection (lidar) are poised to revolutionize the industry. They could also help solve the driver shortage, although fully autonomous trucks are still some way off.

Still, there are many indications that investment in such solutions will grow. Just look at the proposals from US tech giants such as Tesla, Uber, Cruise, and Waymo.

Waymo offers its own Waymo Via solution, promising truck and van operators an unparalleled autonomous driving experience. Its intelligent driving system, the Waymo Driver, was developed using simulations of the most challenging driving scenarios and can already make accurate decisions on real roads. The Waymo Driver perceives what is happening on the road and handles complex tasks such as accelerating, braking, and making wide turns.

Sustainable drive

The sustainability trend is now powering multiple industries, with the truck industry being no exception. So it should come as no surprise that a rising number of large transport vehicles are being electrified.

Tesla is investing in electric trucks twice over. Besides making its Semi an electric truck, Elon Musk's company is building its own high-power charging infrastructure for it (Tesla calls the truck chargers Megachargers). As a result, the Semi can drive 800 km on a full charge, and 30 minutes of charging adds another 600 km of range.
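A quick calculation shows what the figures above imply, taking them at face value. The per-minute figure is only an average, because charging typically slows as the battery fills:

$$\frac{600\ \text{km}}{30\ \text{min}} = 20\ \text{km of range per minute of charging}, \qquad \frac{600\ \text{km}}{800\ \text{km}} = 75\%\ \text{of the full-charge range}$$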

Volkswagen, another giant, is taking a similar approach. It is investing in electric trucks with solid-state batteries, which offer greater safety and faster charging than conventional lithium-ion batteries with liquid electrolytes. In the long run, this is intended to increase driving range by up to 250%.

Volta Trucks is also actively promoting the mission to reduce CO2 emissions in truck transport. Its all-electric trucks are designed to cut tailpipe CO2 emissions by 1,191,000 tonnes by 2025. England's Tevva Electric Trucks has set an even larger, though longer-term, goal: its vehicles are expected to reduce CO2 emissions by 10 million tonnes by the next decade.

Giants already know what's at stake

Companies like Tesla, Nikola Corporation, Einride, Daimler, and Volkswagen already understand the need to enter the electric vehicle market with bold proposals. Major automotive players are also pursuing synergistic collaborations. For instance, BMW, Daimler, Ford, and Volkswagen are teaming up to build a high-powered European charging network. Each charging point will deliver 350 kW and use the Combined Charging System (CCS) standard to work with most electric vehicles, including trucks.

Another major collaboration involves Volkswagen Group Research and the American company QuantumScape, which is researching solid-state lithium metal batteries for large electric cars. The partnership is expected to enable industrial-scale production of solid-state batteries.

Smooth energy management

Electrifying trucks is not enough on its own. Electric vehicles also need adequate range and unhindered access to charging infrastructure. Optimizing energy consumption and increasing efficiency is another challenge.

With these needs in mind, Proterra developed Proterra APEX, connected-vehicle intelligence telematics software that helps electric fleets manage energy in real time. Batteries are monitored continuously, real-time alerts appear on dashboards, and fleet managers have access to configurable reports.

Meanwhile, Mercedes' Fleetboard Charge Management offers a comprehensive view of all interactions between e-trucks and the company's charging stations. Users can see charging times, monitor the current battery status, and view the history of previous events. They can also adjust individual settings such as departure times and the desired battery level at departure.
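Setting a departure time and a target battery level implies a simple calculation. The Java sketch below assumes that charging losses and the slowdown near a full battery can be ignored. ChargePlanner and its method names are hypothetical. This is not how Fleetboard Charge Management works internally, which the source does not describe.

```java
/**
 * Hypothetical sketch of departure-time charging arithmetic: how much average
 * power must a charger deliver to reach a target battery level before departure?
 * Ignores charging losses and the slowdown near full charge.
 */
final class ChargePlanner {

    /** Average charging power in kW needed to go from currentSoc to targetSoc (both 0..1). */
    static double requiredPowerKw(double capacityKwh, double currentSoc, double targetSoc,
                                  double hoursUntilDeparture) {
        if (hoursUntilDeparture <= 0.0) {
            throw new IllegalArgumentException("departure must be in the future");
        }
        // Energy still missing; zero if the battery is already at or above target.
        double energyNeededKwh = Math.max(0.0, targetSoc - currentSoc) * capacityKwh;
        return energyNeededKwh / hoursUntilDeparture;
    }

    /** True if a charger with the given maximum power can meet the plan. */
    static boolean feasible(double capacityKwh, double currentSoc, double targetSoc,
                            double hoursUntilDeparture, double chargerMaxKw) {
        return requiredPowerKw(capacityKwh, currentSoc, targetSoc, hoursUntilDeparture) <= chargerMaxKw;
    }
}
```

Consider a hypothetical 400 kWh battery at 20% that must reach 80% in four hours. requiredPowerKw(400, 0.2, 0.8, 4) returns about 60 kW, so a 50 kW charger would not be enough.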

Truck Platooning

Technologically advanced trucks can also be linked together. Platooning, in which connected vehicles travel in a single formation, allows for substantial savings. Instead of multiple trucks "scattered" across the road, the idea is a single, highly predictable string of vehicles moving efficiently and with low emissions.

How is this possible? The answer is simple: telematics. Telecommunication devices enable the seamless sending, receiving, and storing of information. Josh Switkes, a founder of Peloton, a leader in automated vehicles, explains how the system works: "We're sending information directly from the front truck to the rear truck, information like engine torque, vehicle speed, and brake application."
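The quote above describes the core of platooning: the follower reacts to what the leader reports, not only to what its own sensors see. A minimal Java sketch of that idea follows. The message fields mirror the quote. PlatoonFollower, the gap values, and the gain are hypothetical, and this is in no way Peloton's actual controller.

```java
/**
 * Hypothetical sketch of how a following truck might use data broadcast by the
 * lead truck (engine torque, speed, brake application). Gap values and gain are
 * illustrative assumptions; real platooning controllers are far more sophisticated.
 */
final class PlatoonFollower {

    /** Message broadcast by the lead truck over the vehicle-to-vehicle link. */
    static final class LeaderMessage {
        final double engineTorqueNm; // carried for feed-forward control; unused in this simplified sketch
        final double speedMps;
        final boolean braking;

        LeaderMessage(double engineTorqueNm, double speedMps, boolean braking) {
            this.engineTorqueNm = engineTorqueNm;
            this.speedMps = speedMps;
            this.braking = braking;
        }
    }

    static final double TARGET_GAP_M = 15.0;  // desired distance to the lead truck
    static final double MIN_SAFE_GAP_M = 8.0; // brake regardless below this gap
    static final double GAP_GAIN_PER_S = 0.2; // speed correction (m/s) per metre of gap error

    /**
     * Brake as soon as the leader reports braking, so the follower does not have
     * to wait for its own radar to see the gap shrink; also brake if too close.
     */
    static boolean shouldBrake(LeaderMessage leader, double gapM) {
        return leader.braking || gapM < MIN_SAFE_GAP_M;
    }

    /** Match the leader's speed, nudged up to close a large gap or down to open a small one. */
    static double targetSpeedMps(LeaderMessage leader, double gapM) {
        double correction = GAP_GAIN_PER_S * (gapM - TARGET_GAP_M);
        return Math.max(0.0, leader.speedMps + correction);
    }
}
```

With the leader cruising at 25 m/s and a 20 m gap, the follower targets 26 m/s to close up to the assumed 15 m spacing.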

Although platooning is not yet widespread, it may soon become a permanent fixture on European roads thanks to the ENSEMBLE project. As part of it, specialists working with DAF, Daimler, MAN, Iveco, Scania, and Volvo Group are analyzing the impact of platooning on infrastructure, road safety, and traffic flow. The fuel savings alone are reported to be 4.5% for the lead truck and 10% for the truck following it.
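As a rough illustration, assume both trucks would burn the same amount of fuel when driving alone. The two quoted figures then average out to the saving for a two-truck platoon as a whole:

$$\frac{4.5\% + 10\%}{2} = 7.25\%$$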

Smart sensors

Developers of automotive and truck industry technologies are focusing particularly on safety. Intelligent sensors can help here by allowing a vehicle to generate alerts and take proactive action. This is how Volvo Active Driver Assist (VADA) works; the system is already standard on the Volvo VNR and VNL models.

The advanced collision warning system, which combines radar sensors with a camera, alerts the driver seconds before an imminent collision. If the driver is too slow to react, the system can brake automatically to avoid a crash.
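Forward collision warnings of this kind are commonly built on time-to-collision (TTC): the gap to the vehicle ahead divided by the speed at which that gap is closing. The Java sketch below shows that decision logic under stated assumptions. CollisionWarning and both TTC thresholds are hypothetical and are not Volvo's calibration. The radar-camera fusion is not modeled.

```java
/**
 * Hypothetical sketch of forward-collision-warning logic: compute time-to-collision
 * from the measured gap and closing speed, warn first, and brake automatically only
 * if the driver has not reacted. Thresholds are illustrative assumptions.
 */
final class CollisionWarning {

    enum Response { NONE, WARN, BRAKE }

    static final double WARN_TTC_S = 2.5;
    static final double BRAKE_TTC_S = 1.2;

    /** Seconds until impact at the current closing speed; infinite if the gap is not shrinking. */
    static double timeToCollision(double gapM, double closingSpeedMps) {
        if (closingSpeedMps <= 0.0) {
            return Double.POSITIVE_INFINITY;
        }
        return gapM / closingSpeedMps;
    }

    static Response decide(double gapM, double closingSpeedMps, boolean driverIsBraking) {
        double ttc = timeToCollision(gapM, closingSpeedMps);
        if (ttc <= BRAKE_TTC_S && !driverIsBraking) {
            return Response.BRAKE; // driver too slow: intervene
        }
        if (ttc <= WARN_TTC_S) {
            return Response.WARN;  // alert the driver first
        }
        return Response.NONE;
    }
}
```

For instance, a 20 m gap closing at 10 m/s gives a TTC of 2 seconds. That triggers a warning but not yet automatic braking.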

Innovative design

Changes are also taking place at the design stage of large vehicles, a point the makers of these cutting-edge models particularly emphasize. One of the leaders in this field is Volta Trucks, which advertises its ability to create "the world's safest commercial vehicles".

The Volta Zero offers easy, low-level entry and exit from either side, directly onto the sidewalk. This is possible because the vehicle has no internal combustion engine, which allowed the engineers to overturn previously established design rules.

Dynamic route mapping and smart monitoring

While GPS is nothing new, the latest software uses it in more advanced ways. One example is so-called dynamic route mapping: selecting the shortest, most convenient route while allowing for possible congestion. Importantly, this works flexibly, adapting not only to road conditions but also, for example, to unexpected increases in load.
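Dynamic route mapping can be illustrated with Dijkstra's shortest-path algorithm over a road graph. Each edge cost is a travel time scaled by live congestion. The Java sketch below is an assumption-based illustration, not any vendor's routing engine. DynamicRouter, the congestion-factor model, and the example node names are hypothetical.

```java
import java.util.HashMap;
import java.util.LinkedList;
import java.util.List;
import java.util.Map;
import java.util.PriorityQueue;

/**
 * Hypothetical sketch of dynamic route selection: Dijkstra's algorithm over a road
 * graph whose edge costs are free-flow travel times multiplied by a live congestion
 * factor. Re-running the search after traffic updates yields a new route.
 */
final class DynamicRouter {

    private final Map<String, Map<String, Double>> freeFlowMinutes = new HashMap<>();
    private final Map<String, Double> congestionFactor = new HashMap<>(); // 1.0 = free flow

    /** Adds a two-way road with its free-flow travel time in minutes. */
    void addRoad(String a, String b, double minutes) {
        freeFlowMinutes.computeIfAbsent(a, k -> new HashMap<>()).put(b, minutes);
        freeFlowMinutes.computeIfAbsent(b, k -> new HashMap<>()).put(a, minutes);
    }

    /** Live traffic update, e.g. 3.0 = three times slower than free flow. */
    void setCongestion(String a, String b, double factor) {
        congestionFactor.put(a + "->" + b, factor);
        congestionFactor.put(b + "->" + a, factor);
    }

    private double currentMinutes(String from, String to) {
        return freeFlowMinutes.get(from).get(to) * congestionFactor.getOrDefault(from + "->" + to, 1.0);
    }

    /** Fastest route under current conditions, or an empty list if the goal is unreachable. */
    List<String> fastestRoute(String start, String goal) {
        Map<String, Double> best = new HashMap<>();
        Map<String, String> previous = new HashMap<>();
        PriorityQueue<Map.Entry<String, Double>> queue =
                new PriorityQueue<>(Map.Entry.<String, Double>comparingByValue());
        best.put(start, 0.0);
        queue.add(Map.entry(start, 0.0));

        while (!queue.isEmpty()) {
            Map.Entry<String, Double> current = queue.poll();
            String node = current.getKey();
            double minutes = current.getValue();
            if (minutes > best.get(node)) continue; // stale queue entry
            if (node.equals(goal)) break;
            for (String next : freeFlowMinutes.getOrDefault(node, Map.of()).keySet()) {
                double candidate = minutes + currentMinutes(node, next);
                if (candidate < best.getOrDefault(next, Double.POSITIVE_INFINITY)) {
                    best.put(next, candidate);
                    previous.put(next, node);
                    queue.add(Map.entry(next, candidate));
                }
            }
        }

        if (!best.containsKey(goal)) return List.of();
        LinkedList<String> route = new LinkedList<>();
        for (String n = goal; n != null; n = previous.get(n)) route.addFirst(n);
        return route;
    }
}
```

As an example, suppose roads A-B and B-D take 10 minutes each and A-C and C-D take 12. The router first picks A, B, D. After setCongestion("B", "D", 3.0), it switches to A, C, D (24 minutes instead of 40).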

The Volta Zero also relies on advanced route and vehicle monitoring. Using the Sibros OTA Deep Logger, operators can receive up-to-date information on individual vehicles and the entire fleet.

Shipping is not like it used to be

Alongside the changes in the passenger car market, a similar revolution is underway in the truck and van industry. The transformation is needed because the challenge is not only a shortage of professional drivers but also cutting transportation costs while volumes grow. That makes any loss-reduction initiative extremely valuable.

Not all of the solutions mentioned in this article will be widely implemented in the next few years. For example, it is hard to expect the roads to carry only electric autonomous trucks as early as 2027. Some things can be rolled out widely now. Examples are optimized cargo loading (by predicting when a truck will arrive), better route finding (via advanced GPS), and predictive maintenance (repairing early, before a fault generates logistics costs). Full electrification and autonomy will only come as the second step.

Regardless of how the truck industry evolves over the next few years, it is clear that the changes will be built on the digital revolution, advanced software, and smart components.

All this is geared toward enhancing mobility services and bringing driving comfort, business efficiency, and safety to a new level. The big OEMs know this well, as do the tech and automotive companies that compete on innovation year after year.

AAOS Hello World: How to build your first app for Android Automotive OS

Android Automotive OS is gaining recognition as automotive companies look to provide their customers with a more tailored experience. Here we share our guide to building your first app for AAOS.

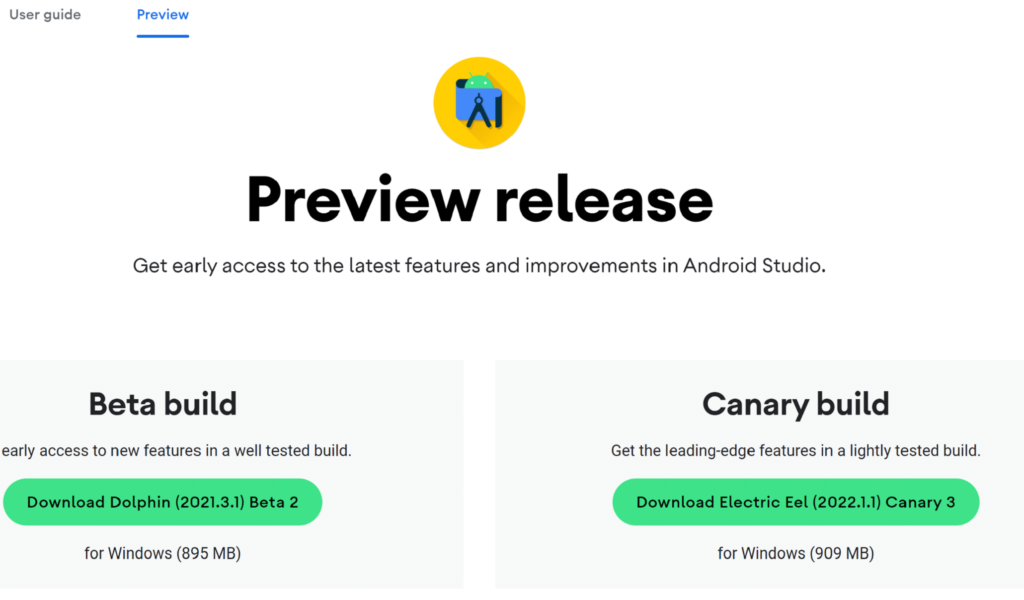

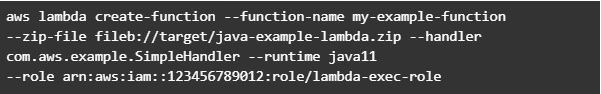

Before you start, read our first article about AAOS and our review so you know what to expect. Let's make a simple Hello World app for Android Automotive. For the IDE, go to the Android Studio Preview page on the Android Developers site and download a Canary build:

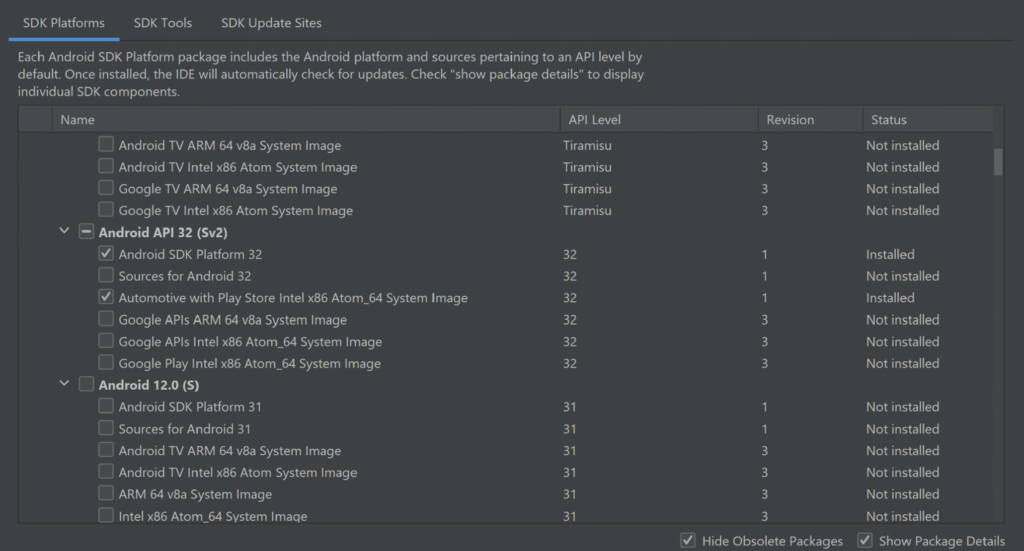

Next, prepare the SDK: in the SDK Manager, select and download an Automotive system image. Any of API 32, Android 9, or Android 10 will do. I do not recommend the newest one, though, as it is currently very laggy and crashes a lot. Volvo and Polestar images are also available.

To get those, add the following URLs to SDK Update Sites:

https://developer.volvocars.com/sdk/volvo-sys-img.xml

https://developer.polestar.com/sdk/polestar2-sys-img.xml

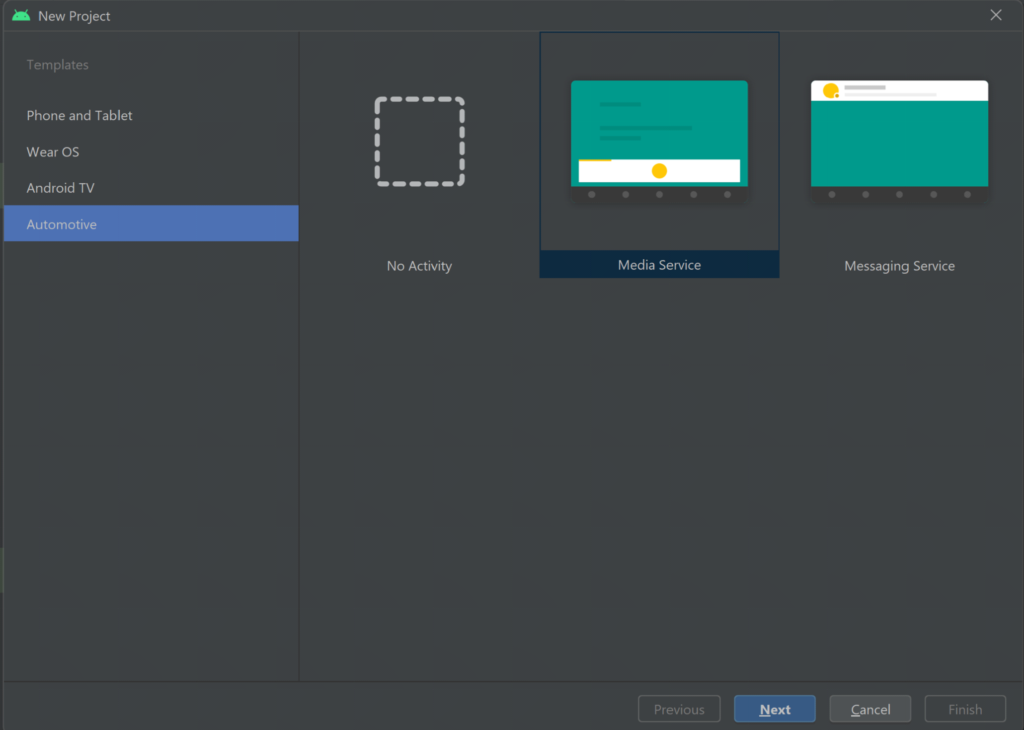

Start a new project: go to File > New Project and choose Automotive with No Activity.

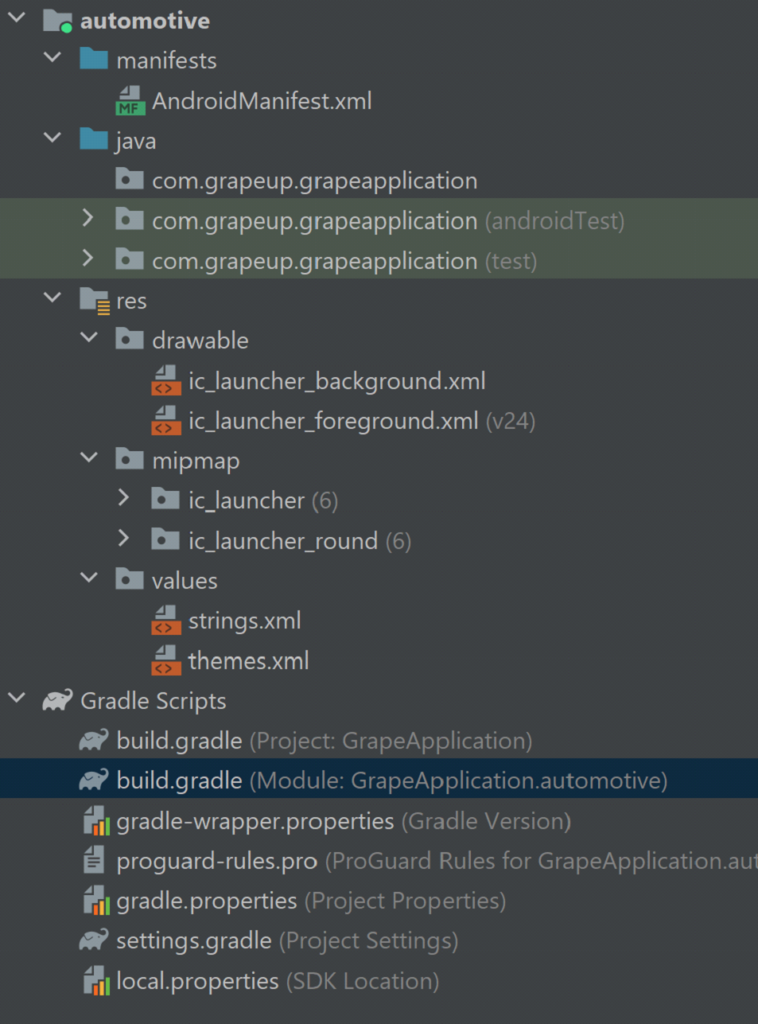

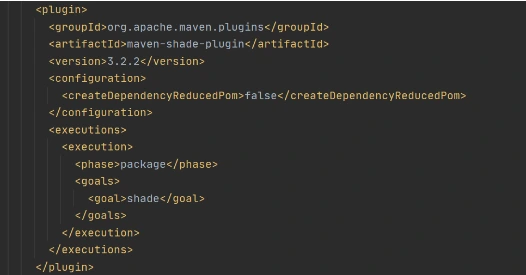

A nice, clean project without any classes should be created. Open build.gradle, add the Car App Library to the dependencies, and sync the project so Gradle fetches the new dependency:

```groovy
dependencies {
    implementation "androidx.car.app:app-automotive:1.2.0-rc01"
}
```

Now for some code, starting with our screen class. Name it as you like, make it extend the Screen class from the androidx.car.app package, and implement the required methods:

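```java
package com.grapeup.grapeapplication;

import androidx.annotation.NonNull;
import androidx.car.app.CarContext;
import androidx.car.app.Screen;
import androidx.car.app.model.Action;
import androidx.car.app.model.Pane;
import androidx.car.app.model.PaneTemplate;
import androidx.car.app.model.Row;
import androidx.car.app.model.Template;

public class GrapeAppScreen extends Screen {

    public GrapeAppScreen(@NonNull CarContext carContext) {
        super(carContext);
    }

    @NonNull
    @Override
    public Template onGetTemplate() {
        // A single row showing the app's title.
        Row row = new Row.Builder()
                .setTitle("That's our Grape App!")
                .build();

        // Show the row in a pane, with the app icon in the header.
        return new PaneTemplate.Builder(
                new Pane.Builder()
                        .addRow(row)
                        .build()
        ).setHeaderAction(Action.APP_ICON).build();
    }
}
```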

That creates a simple screen with our icon and title. Next, create another class that extends CarAppService from the same package and implement its required methods. For the purpose of this tutorial, createHostValidator() returns the static validator that allows all hosts. onCreateSession() returns a brand-new Session that creates our screen, passing it the CarContext obtained from the Session's getCarContext() method:

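```java
package com.grapeup.grapeapplication;

import android.content.Intent;
import androidx.annotation.NonNull;
import androidx.car.app.CarAppService;
import androidx.car.app.Screen;
import androidx.car.app.Session;
import androidx.car.app.validation.HostValidator;

public class GrapeAppService extends CarAppService {

    public GrapeAppService() {}

    @NonNull
    @Override
    public HostValidator createHostValidator() {
        // Accepts any host: fine for this tutorial, not for a released app.
        return HostValidator.ALLOW_ALL_HOSTS_VALIDATOR;
    }

    @NonNull
    @Override
    public Session onCreateSession() {
        return new Session() {
            @NonNull
            @Override
            public Screen onCreateScreen(@NonNull Intent intent) {
                // The session provides the CarContext our screen needs.
                return new GrapeAppScreen(getCarContext());
            }
        };
    }
}
```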

Next, open AndroidManifest.xml and declare the following features inside the root manifest tag:

```xml
<uses-feature
    android:name="android.hardware.type.automotive"
    android:required="true" />
<uses-feature
    android:name="android.software.car.templates_host"
    android:required="true" />
<uses-feature
    android:name="android.hardware.wifi"
    android:required="false" />
<uses-feature
    android:name="android.hardware.screen.portrait"
    android:required="false" />
<uses-feature
    android:name="android.hardware.screen.landscape"
    android:required="false" />
```

Inside the application tag, add our service and the activity. Don't forget the minCarApiLevel meta-data: without it, the app throws an exception on startup:

```xml
<application
    android:allowBackup="true"
    android:appCategory="audio"
    android:icon="@mipmap/ic_launcher"
    android:label="@string/app_name"
    android:roundIcon="@mipmap/ic_launcher_round"
    android:supportsRtl="true"
    android:theme="@style/Theme.GrapeApplication">

    <meta-data
        android:name="androidx.car.app.minCarApiLevel"
        android:value="1" />

    <service
        android:name="com.grapeup.grapeapplication.GrapeAppService"
        android:exported="true">
        <intent-filter>
            <action android:name="androidx.car.app.CarAppService" />
        </intent-filter>
    </service>

    <activity
        android:name="androidx.car.app.activity.CarAppActivity"
        android:exported="true"
        android:label="GrapeApp Starter"
        android:launchMode="singleTask"
        android:theme="@android:style/Theme.DeviceDefault.NoActionBar">
        <intent-filter>
            <action android:name="android.intent.action.MAIN" />
            <category android:name="android.intent.category.LAUNCHER" />
        </intent-filter>
        <meta-data
            android:name="distractionOptimized"
            android:value="true" />
    </activity>
</application>
```

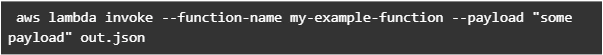

Now we can deploy the app. Make sure you have an Automotive emulator set up, select the automotive run configuration, and hit Run. The app runs inside the Google Automotive App Host. If this is the first such app on the device, you may need to install the host from the Play Store first.

That’s how it looks:

Finally, we'll add a button that pops up a toast. Modify onGetTemplate() in the Screen class by adding an Action and an ActionStrip (this needs imports for androidx.car.app.CarToast and androidx.car.app.model.ActionStrip):

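```java
// A button that shows a short toast when tapped.
Action action = new Action.Builder()
        .setOnClickListener(
                () -> CarToast.makeText(getCarContext(), "Hello!", CarToast.LENGTH_SHORT).show())
        .setTitle("Say hi!")
        .build();

// Wrap the action in an ActionStrip so the template can display it.
ActionStrip actionStrip = new ActionStrip.Builder()
        .addAction(action)
        .build();
```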

Then add the action strip to the PaneTemplate:

```java
return new PaneTemplate.Builder(
        new Pane.Builder()
                .addRow(row)
                .build()
).setActionStrip(actionStrip)
        .setHeaderAction(Action.APP_ICON)
        .build();
```

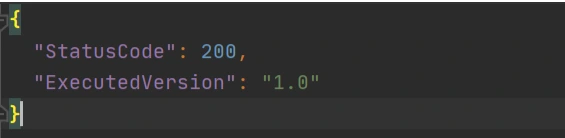

That’s our HelloWorld app:

Now you have a Hello World app up and running with the Car App Library. The library takes care of displaying and arranging everything on the screen for us. Our only job is to add the screens and actions we want (plus a bit of configuration). Explore the Car App Library to see what else it can do, experiment with your own app, and check our blog soon for more AAOS app development content.

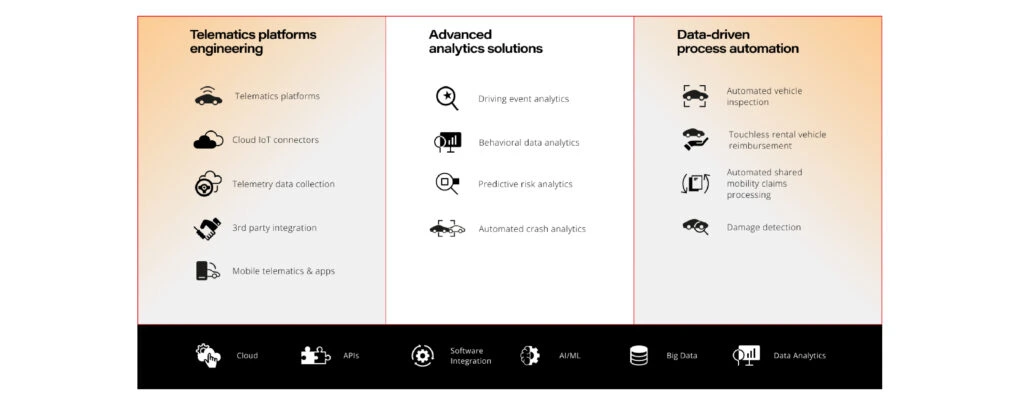

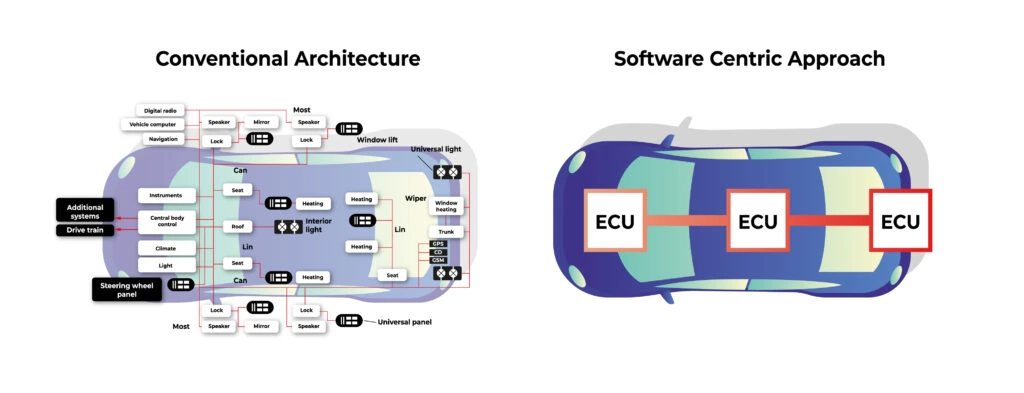

Software-defined vehicle and fleet management

With the development of artificial intelligence, the Internet of Things, and cloud solutions, the amount of data we can retrieve from a vehicle grows every year. Manufacturers are becoming more efficient at turning this data into new services and at enhancing their own offerings with information from connected car systems. Can software-defined vehicle solutions be applied successfully to fleet management systems covering hundreds or even thousands of vehicles? Of course they can, and they should! This is what today's market, which is increasingly based on car sharing and micromobility, expects and needs.

Netflix, Spotify, Glovo, and Revolut have taught us that entertainment, ordering food, and banking are now literally at our fingertips, available here and now, whenever we need or want them. Contactless, mobile-first processes that reduce queues and provide flexibility are now entering every area of the economy, including transportation and the automotive industry.

Three factors are dramatically changing attitudes toward owning a car and choosing a means of transport: saving time, saving money, and environmental trends. Companies such as Uber, Lyft, and Bird cater to the needs of a younger generation that prefers renting to owning.

The data-driven approach has become a cornerstone for automotive companies - both new, emerging startups and older, decades-old business models, such as car rental companies. None of the companies operating in this market can exist without a secure and well-thought-out IT platform for fleet management. At least if they want to stay relevant and compete.

It is the software, on an equal footing with the unique offer or even ahead of it, that determines the success of such a company. Software also allows the company to manage a fleet that sometimes includes hundreds, if not thousands, of vehicles.

Depending on the purpose of the vehicles, the business model, and the scale of operations, solutions based on software will obviously vary, but they will be beneficial to both the fleet manager and the vehicle renter. They allow you to have an overall view of the situation, extract more useful information from received data and reasonably scale costs.

Fleets that should be particularly interested in such improvements include:

- city e-scooters, bicycles, and scooters;

- car rentals;

- city bus fleets;

- tour operators;

- transport and logistics companies;

- cabs;

- public utility vehicles (e.g., fire departments, ambulances, or police cars) and government limousines;

- automobile mechanics;

- small private fleets (e.g., construction or haulage companies);

- insurers' fleets;

- automobile manufacturers' fleets (e.g., replacement or test vehicles).

The benefits of managing your fleet with cloud software and the Internet of Things (IoT)

Real-time vehicle monitoring (GPS)

A sizeable fleet means a lot of responsibility and potentially a ton of problems. That's why it's so important to locate each vehicle promptly and monitor it in real time, including:

- the distance traveled along the route,

- where the vehicle was parked,

- where a breakdown occurred.

This is especially useful for bus fleets, but also for sharing-economy vehicles: city e-scooters, bicycles, and scooters. It lets the business owner react quickly to problems.

Recovering lost or stolen vehicles

Real-time location updates, made possible by IoT and wireless connectivity, also help in emergencies, because they allow you to recover a stolen or abandoned vehicle.

These benefits will be appreciated, for example, by those in charge of logistics transport fleets, since vehicles can be stolen from overnight parking lots. Tackling abandoned electric two-wheelers will in turn interest e-scooter startups. They often receive complaints about scooters left outside the service zone in unusual places, such as fields or ditches where there is no longer a sidewalk.
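Detecting a scooter parked outside the zone comes down to a point-in-polygon test on its reported GPS position. A minimal Java sketch using the classic ray-casting method follows. The Geofence class and its methods are hypothetical. Treating latitude and longitude as flat coordinates is a simplifying assumption.

```java
/**
 * Hypothetical sketch of a geofence check for shared e-scooters: is the reported
 * parking position inside the allowed operating zone? Uses ray casting and treats
 * latitude/longitude as flat x/y coordinates, an approximation that is usually
 * acceptable for city-sized zones away from the poles and the 180th meridian.
 */
final class Geofence {

    private final double[] lats;
    private final double[] lons;

    /** Zone boundary as polygon vertices, listed in order around the edge. */
    Geofence(double[] lats, double[] lons) {
        if (lats.length != lons.length || lats.length < 3) {
            throw new IllegalArgumentException("need at least 3 matching vertices");
        }
        this.lats = lats.clone();
        this.lons = lons.clone();
    }

    /** Counts zone edges crossed by a ray running east from the point; an odd count means inside. */
    boolean contains(double lat, double lon) {
        boolean inside = false;
        for (int i = 0, j = lats.length - 1; i < lats.length; j = i++) {
            boolean straddles = (lats[i] > lat) != (lats[j] > lat);
            if (straddles) {
                // Longitude where this edge crosses the point's latitude.
                double edgeLon = lons[j] + (lat - lats[j]) * (lons[i] - lons[j]) / (lats[i] - lats[j]);
                if (lon < edgeLon) {
                    inside = !inside;
                }
            }
        }
        return inside;
    }

    /** A scooter whose ride has ended outside the zone needs to be collected. */
    boolean parkedOutsideZone(double lat, double lon, boolean rideEnded) {
        return rideEnded && !contains(lat, lon);
    }
}
```

In practice, the operator could run parkedOutsideZone on the last position reported when a ride ends and dispatch staff for flagged scooters.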

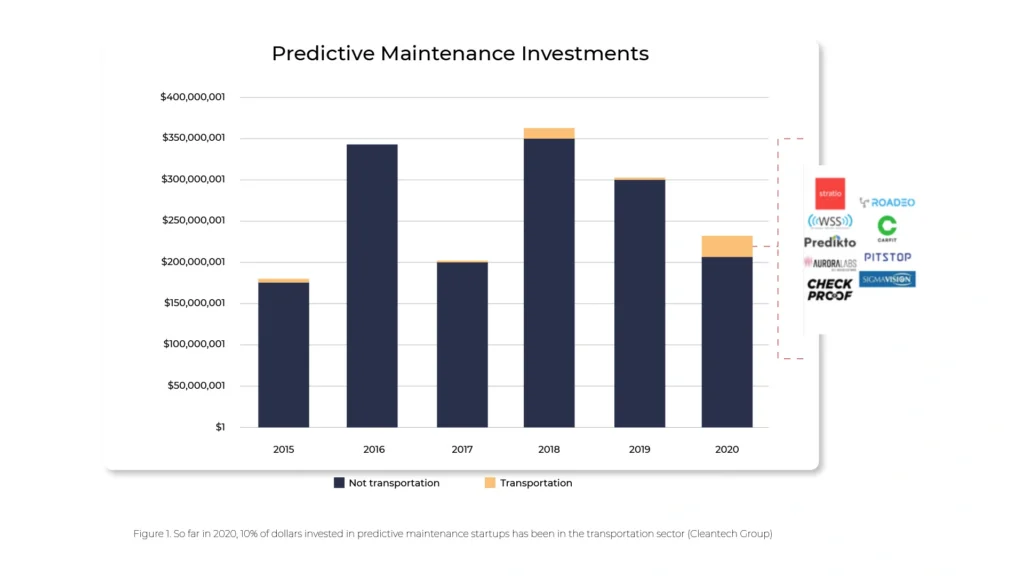

Predictive maintenance

We should also mention advanced predictive analytics for parts and components such as brakes, tires, and engines. The strength of such solutions is that you receive a warning (vehicle health alerts) even before a failure occurs.

The result? Reduced downtime, better resource planning, and streamlined decision-making. According to estimates, this translates into savings of $2,000 per vehicle per year.
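One simple way to turn wear measurements into an early warning is to fit a straight line to them and extrapolate. The Java sketch below assumes brake-pad thickness readings tagged with odometer values. It also assumes wear is linear, which is a simplification. WearForecast and the minimum-thickness parameter are hypothetical.

```java
/**
 * Hypothetical sketch of a predictive-maintenance forecast: fit a least-squares line
 * to brake-pad thickness versus odometer readings and extrapolate to the minimum
 * allowed thickness. The linear-wear assumption is for illustration only.
 */
final class WearForecast {

    /** Kilometers left until the pad reaches minMm, or infinity if no wear trend is visible. */
    static double kmUntilLimit(double[] odometerKm, double[] thicknessMm, double minMm) {
        int n = odometerKm.length;
        if (n < 2 || n != thicknessMm.length) {
            throw new IllegalArgumentException("need at least two paired readings");
        }
        double sumKm = 0, sumMm = 0, latestKm = Double.NEGATIVE_INFINITY;
        for (int i = 0; i < n; i++) {
            sumKm += odometerKm[i];
            sumMm += thicknessMm[i];
            latestKm = Math.max(latestKm, odometerKm[i]);
        }
        double meanKm = sumKm / n, meanMm = sumMm / n;

        // Least-squares slope of thickness versus distance.
        double sxy = 0, sxx = 0;
        for (int i = 0; i < n; i++) {
            double dx = odometerKm[i] - meanKm;
            sxy += dx * (thicknessMm[i] - meanMm);
            sxx += dx * dx;
        }
        if (sxx == 0) {
            throw new IllegalArgumentException("readings must come from different odometer values");
        }
        double slopeMmPerKm = sxy / sxx; // negative while the pad wears down
        if (slopeMmPerKm >= 0) {
            return Double.POSITIVE_INFINITY; // no measurable wear yet
        }
        // Odometer value at which the fitted line reaches the limit.
        double limitKm = meanKm + (minMm - meanMm) / slopeMmPerKm;
        return Math.max(0.0, limitKm - latestKm);
    }
}
```

Suppose pads are measured at 12, 11, and 10 mm at 0, 10,000, and 20,000 km, with a 3 mm limit. The sketch then predicts about 70,000 km of remaining life, early enough to schedule the repair before a breakdown.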

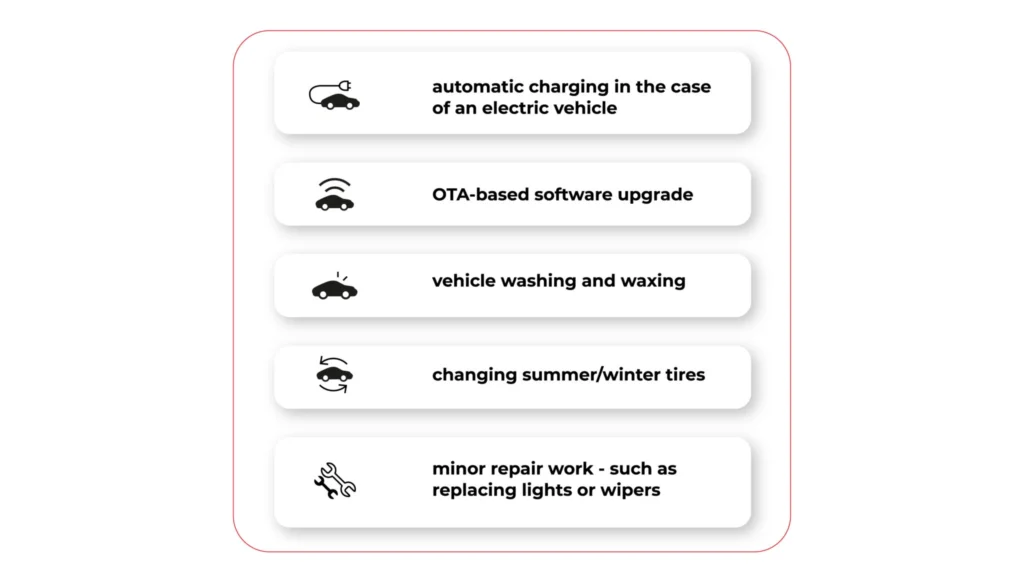

More convenient vehicle upgrades - comprehensive OTA (Over-the-Air)

Over-the-Air (OTA) car updates are vital for safety and usability. Connected vehicles can be updated simultaneously, in one go. This saves the time otherwise needed to configure each system manually, one by one. Updates can also reach vehicles that happen to be out of the country.

This capability benefits virtually all industries that rely on extensive fleets, especially logistics, transportation, and tourism.

Intermediation in renting

A growing number of services focus on being fast, simple, and preferably remote. For instance, many room and apartment rentals on Airbnb rely on self-service check-in and check-out using lockboxes and access codes.

Software-defined vehicles offer similar features: they can now be rented "off the street", without service staff. The customer simply selects a vehicle and unlocks it via a smartphone app. Quick, easy, and instant.

Loyalty scheme for large fleets

Vehicle and software providers are well aware that new technology comes with great benefits, but also with a degree of investment. In order to make such commitments easier to decide upon, attractive loyalty schemes are being rolled out for larger fleets.

As a business owner, you benefit twice over: you gain access to the technology and, on favorable terms, you can test which solutions work best for you.

Improved fleet utilization

Cloud and IoT software enables more practical use of the entire fleet of available vehicles and accurately pinpoints bottlenecks or areas where the most downtime occurs.

This is an invaluable asset in the context of productivity-driven businesses, where even a few hours of delay can result in significant losses.

In addition, artificial intelligence (AI)-based predictions, such as information about an impending failure, give commercial fleet managers more anticipatory data. This can significantly cut business costs. Other benefits include better emissions control and higher environmental standards.

Increasing safety

Keeping the risk of someone hacking into the system to a minimum contributes to the security of a fleet-based business.

Case study: Ford Pro™ Telematics

Revenue from software and digital services is an attractive prospect for well-informed players in this business environment. Some big players, such as Ford, have based an entire business model on this idea. With their Ford Pro™ series of solutions, they want to accelerate highly efficient and sustainable business. The offering is built on market-ready commercial vehicles that suit almost any business need, including all-electric trucks and vans. Ford is developing telematics in particular.

Ford Chief Executive Jim Farley puts it bluntly: "We are the Tesla of this industry."

Bold claims? Yes, but also equally bold execution. Ford Pro™, a standalone unit created in May 2021, focuses exclusively on commercial and government customers. The new model also serves as a prelude to expanding digital services for retail customers.

The objective is to increase Ford Pro's annual revenue to $45 billion by 2025, up 67% from 2019.

Streamlined vehicle repairs

Managing a large group of vehicles also necessitates regular inspections and repairs, and at different times for different vehicles. This entails the need to control each unit individually.

The risk is that information about a problem may not reach decision-makers in time. Executives may also end up constantly reacting to anomalies instead of focusing on the service and product. New technologies partially eliminate this problem.

As part of the Ford Pro Telematics Essentials package, vehicle owners receive real-time alerts on vehicle status in the form of engine diagnostic codes, vehicle recalls, and more. There's also a scheduled service tracking feature and, in the near future, remote locking/unlocking, which will further enhance fleet management.

Driver behavior insights

Human-centered technology can help improve driver performance and road safety. Various sensors and detectors inside Ford vehicles provide a lot of interesting information about the driver's behavior. They monitor the frequency and suddenness of actions such as braking or accelerating. Knowledge of this type of behavior allows for better fleet planning and improved driver safety.
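The frequency and suddenness of braking can be quantified from a vehicle's speed signal. In this hypothetical Java sketch, HarshBrakingDetector and its 4 m/s² threshold are assumptions made for illustration, not Ford's method.

```java
/**
 * Hypothetical sketch of driver-behavior analysis: count harsh-braking events in
 * speed samples taken at a fixed interval. The 4 m/s^2 threshold is an illustrative
 * assumption; consecutive harsh samples count as a single event.
 */
final class HarshBrakingDetector {

    static final double HARSH_DECEL_MPS2 = 4.0;

    static int countEvents(double[] speedsMps, double sampleIntervalS) {
        if (sampleIntervalS <= 0) {
            throw new IllegalArgumentException("interval must be positive");
        }
        int events = 0;
        boolean inEvent = false;
        for (int i = 1; i < speedsMps.length; i++) {
            double accel = (speedsMps[i] - speedsMps[i - 1]) / sampleIntervalS;
            boolean harsh = accel <= -HARSH_DECEL_MPS2;
            if (harsh && !inEvent) {
                events++; // a new braking episode starts
            }
            inEvent = harsh;
        }
        return events;
    }
}
```

A count like this, normalized per 100 km, is one simple metric a fleet manager could compare across drivers.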

Fuel efficiency analysis

Fuel is one of the major costs for companies managing a large number of vehicles. Ford Pro™ Telematics therefore offers customers a way to monitor fuel consumption and engine idle time.

This functionality is designed to optimize performance and reduce expenses. Better exhaust control also indirectly lowers operating costs.
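Engine idle time can be derived from two common signals, vehicle speed and engine RPM. The minimal sketch below assumes fixed-interval samples. IdleTracker and the 1 km/h standstill threshold are hypothetical.

```java
/**
 * Hypothetical sketch of engine idle-time tracking: a sample counts as idling when
 * the engine is running but the vehicle is (nearly) stationary. Signal names and
 * the 1 km/h standstill threshold are illustrative assumptions.
 */
final class IdleTracker {

    static final double STANDSTILL_KMH = 1.0;

    /** Total idle time in seconds for samples taken every sampleIntervalS seconds. */
    static double idleSeconds(double[] speedKmh, double[] engineRpm, double sampleIntervalS) {
        if (speedKmh.length != engineRpm.length) {
            throw new IllegalArgumentException("signals must have the same length");
        }
        double idle = 0.0;
        for (int i = 0; i < speedKmh.length; i++) {
            boolean engineRunning = engineRpm[i] > 0.0;
            if (engineRunning && speedKmh[i] < STANDSTILL_KMH) {
                idle += sampleIntervalS;
            }
        }
        return idle;
    }
}
```

For example, four 10-second samples in which the engine runs while stationary twice yield 20 seconds of idling.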

Manage all-electric vehicle charging with E-Telematics

Telematics also provides an efficient way to manage a fleet consisting of electric vehicles. There are many indications that due to increasingly stringent environmental standards, they will form the backbone of various operations.

That's why Ford has developed its own E-Telematics software. It enables comprehensive monitoring of the charging status of the electric vehicle fleet. In addition, it helps drivers find and pay for public charging points and facilitates reimbursement for charging at home.

The system also offers the ability to accurately compare the efficiency and economic benefits of electric vehicles versus gas-powered ones.
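Such a comparison comes down to energy cost per distance. Here is a worked example with purely hypothetical figures. An electric van uses 25 kWh per 100 km at 0.15 USD per kWh. A gasoline van uses 12 L per 100 km at 1.00 USD per liter. Both drive 30,000 km a year. Purchase price, maintenance, and taxes are ignored:

$$C_{\text{EV}} = 25 \times 0.15 = 3.75\ \text{USD per }100\ \text{km}, \qquad C_{\text{gas}} = 12 \times 1.00 = 12.00\ \text{USD per }100\ \text{km}$$

$$\text{annual saving} = \frac{30\,000}{100} \times (12.00 - 3.75) = 300 \times 8.25 = 2\,475\ \text{USD}$$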

Better cooperation with insurers

Cloud-based advanced telematics software does more than provide a better customer experience. It also streamlines collaboration with insurance providers and the delivery of rental vehicles to those insurers' clients.

This, of course, requires a special tool that enables:

- remote processing of the case reported by the customer,

- making the information available to the rental company,

- allowing rental company personnel to provide a vehicle that meets the driver's needs.

The goal is to provide replacement cars for the customers of partnering insurers.

Touchless and counter-less experience

This includes verifying the customer and unlocking the car with a mobile app. It translates into greater customer satisfaction and enables new business models. With mobile apps available in app stores, queues get shorter and the rental process becomes simpler. It is also more intuitive and more focused on user experience, because customers today expect mobile, contactless service.
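One common building block for unlocking a car without a counter is a short-lived, signed digital key. The Java sketch below uses an HMAC-SHA256 signature from the standard javax.crypto API. The token format, the UnlockToken class, and the shared-key arrangement between backend and car are hypothetical, not the platform of any particular rental company. The sketch omits replay protection and key management, so treat it as an illustration only.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.MessageDigest;
import java.util.Base64;
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;

/**
 * Hypothetical sketch of a time-limited digital key: the backend signs
 * "vehicle|customer|expiry" with a key shared with the car's telematics unit,
 * and the car checks signature, vehicle ID, and expiry before unlocking.
 * IDs must not contain the '|' separator. Not a production design.
 */
final class UnlockToken {

    private final byte[] sharedKey;

    UnlockToken(byte[] sharedKey) {
        this.sharedKey = sharedKey.clone();
    }

    /** Backend side: issue a token valid until expiresAtEpochS. */
    String issue(String vehicleId, String customerId, long expiresAtEpochS) {
        String payload = vehicleId + "|" + customerId + "|" + expiresAtEpochS;
        return payload + "|" + sign(payload);
    }

    /** Vehicle side: accept only an untampered, unexpired token issued for this vehicle. */
    boolean verify(String token, String vehicleId, long nowEpochS) {
        int cut = token.lastIndexOf('|');
        if (cut < 0) return false;
        String payload = token.substring(0, cut);
        byte[] given = token.substring(cut + 1).getBytes(StandardCharsets.UTF_8);
        byte[] expected = sign(payload).getBytes(StandardCharsets.UTF_8);
        if (!MessageDigest.isEqual(given, expected)) return false; // timing-safe comparison
        String[] parts = payload.split("\\|");
        if (parts.length != 3 || !parts[0].equals(vehicleId)) return false;
        try {
            return nowEpochS < Long.parseLong(parts[2]);
        } catch (NumberFormatException e) {
            return false;
        }
    }

    private String sign(String payload) {
        try {
            Mac mac = Mac.getInstance("HmacSHA256");
            mac.init(new SecretKeySpec(sharedKey, "HmacSHA256"));
            byte[] tag = mac.doFinal(payload.getBytes(StandardCharsets.UTF_8));
            return Base64.getUrlEncoder().withoutPadding().encodeToString(tag);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("HmacSHA256 not available", e);
        }
    }
}
```

The signature stops a customer from editing the vehicle ID or expiry in the token. The vehicle check ensures a key issued for one car cannot open another.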

Case study: car rental

A leading rental enterprise teamed up with Grape Up to provide counter-less rental services and a touchless experience for its customers. The company leveraged a powerful touchless platform and the telematics system it already used. This let it build a more customer-friendly solution and tackle further business challenges, such as efficient stolen car recovery and insurance replacement vehicles.

Software-defined vehicle solutions in vehicle fleets: how to implement them sensibly?

Technological changes that we are experiencing in the entertainment industry or e-commerce have also made their way into the automotive sector as well as micro-mobility and car rentals. There are many indications that there is no turning back.

Solutions such as real-time tracking, predictive maintenance, and driverless rental are the future. They help manufacturers execute their key processes more efficiently and track and manage their fleets effectively. In turn, the end customer receives an intuitive and convenient tool that fosters brand loyalty and makes life easier.

Of course, they need to be implemented properly, and software quality plays a large role. The key is an efficient flow of data and smooth cooperation with devices inside the vehicle. That is why it is worth partnering with a company that has the right technological competence. It should also bring knowledge and experience gained in similar projects and implementations for the automotive industry.

Cloud solutions and AI software to serve the transport in cities of the future

Cities of the future are spaces that are comfortable to live in, eco-friendly, safe, and intelligently managed. It's hard to imagine such a futuristic scenario without the use of advanced technology. Preferably one that combines various elements within one coherent data processing system. Especially great potential lies in solutions at the crossroads of automotive, telematics, and AI. Let's dive into the transport in cities of the future.

GPS data

GPS technology gives developers the ability to monitor a vehicle's position in real time and, on top of that, to generate data on parameters such as speed, distance, and travel time. By combining this telemetry with fuel level, speed limits, traffic information, and the estimated time of arrival, urban transport passengers can be instantly alerted to ongoing as well as predicted delays and problems on the route.
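To make this concrete, here is a minimal Python sketch of how distance, travel time, and average speed can be derived from raw GPS fixes, plus a simple delay check. It assumes fixes arrive as (timestamp, latitude, longitude) and uses the haversine formula on a spherical Earth; `GpsFix`, `trip_stats`, `delay_alert`, and the 120-second tolerance are hypothetical names and values for illustration, not part of any specific telematics product.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres (spherical approximation)


@dataclass
class GpsFix:
    t: float    # timestamp in seconds
    lat: float  # latitude in degrees
    lon: float  # longitude in degrees


def haversine_m(a: GpsFix, b: GpsFix) -> float:
    """Great-circle distance between two fixes, in metres."""
    phi1, phi2 = math.radians(a.lat), math.radians(b.lat)
    dphi = phi2 - phi1
    dlmb = math.radians(b.lon - a.lon)
    h = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(h))


def trip_stats(fixes):
    """Return (distance_m, travel_time_s, avg_speed_kmh) for an ordered list of fixes."""
    distance = sum(haversine_m(p, q) for p, q in zip(fixes, fixes[1:]))
    elapsed = fixes[-1].t - fixes[0].t
    avg_speed_kmh = distance / elapsed * 3.6 if elapsed > 0 else 0.0
    return distance, elapsed, avg_speed_kmh


def delay_alert(scheduled_arrival_s, now_s, remaining_m, current_speed_kmh, tolerance_s=120):
    """Hypothetical rule: alert when the projected arrival is more than tolerance_s late."""
    if current_speed_kmh <= 0:
        return True  # simplifying assumption: treat a stopped vehicle as delayed
    eta_s = now_s + remaining_m / (current_speed_kmh / 3.6)
    return eta_s - scheduled_arrival_s > tolerance_s
```

Real systems would also smooth noisy fixes and follow the road geometry rather than straight-line segments, but the core arithmetic is the same.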

An advanced version of this system can also propose alternative routes to avoid building up traffic in congested areas and to reduce passengers' average travel time, making everyone happy.
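A route planner like this can be sketched with Dijkstra's shortest-path algorithm, where each road segment's free-flow travel time is multiplied by a congestion factor fed by live traffic data. The graph format, node names, and congestion factors below are illustrative assumptions; the source does not specify how any particular system weights its roads.

```python
import heapq


def fastest_route(graph, source, target):
    """Dijkstra's algorithm with edge cost = free-flow minutes * congestion factor.

    graph: {node: [(neighbor, free_flow_minutes, congestion_factor), ...]}
    A congestion factor of 1.0 means free-flowing traffic; 2.0 means twice as slow.
    Returns (path, total_minutes), or (None, inf) if the target is unreachable.
    """
    best = {source: 0.0}
    previous = {}
    queue = [(0.0, source)]
    while queue:
        cost, node = heapq.heappop(queue)
        if node == target:
            break
        if cost > best.get(node, float("inf")):
            continue  # stale queue entry; a cheaper path was already found
        for neighbor, minutes, congestion in graph.get(node, []):
            new_cost = cost + minutes * congestion
            if new_cost < best.get(neighbor, float("inf")):
                best[neighbor] = new_cost
                previous[neighbor] = node
                heapq.heappush(queue, (new_cost, neighbor))
    if target not in best:
        return None, float("inf")
    path = [target]
    while path[-1] != source:
        path.append(previous[path[-1]])
    return path[::-1], best[target]
```

Because congestion factors change continuously, a production planner would rerun or incrementally update this search as new traffic data arrives.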

Sensors and detectors

Installed along roads, such elements collect data on traffic volumes, vehicle speeds, and which lanes are being occupied. Sensors embedded in roads are used today by about 25% of smart city stakeholders in the United States (Otonomo study).

Additionally, agglomerative clustering, a bottom-up hierarchical clustering algorithm, helps to identify clusters of places or destinations in this data.
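The idea behind agglomerative clustering fits in a few lines: every location starts as its own cluster, and the two closest clusters are merged repeatedly until none are closer than a chosen threshold. This sketch assumes single linkage, Euclidean distance on coordinates already projected to kilometres, and an illustrative 1 km threshold; none of these choices come from the source. For real datasets, a library implementation such as scikit-learn's `AgglomerativeClustering` (with its `distance_threshold` parameter) would be far more efficient than this naive loop.

```python
import math


def agglomerative_clusters(points, max_distance):
    """Bottom-up (agglomerative) clustering with single linkage.

    points: list of (x, y) tuples in a planar coordinate system (e.g. km).
    Starts with one cluster per point and repeatedly merges the two closest
    clusters until no pair is closer than max_distance.
    """
    clusters = [[p] for p in points]

    def linkage(a, b):
        # Single linkage: distance between the closest pair of members.
        return min(math.dist(p, q) for p in a for q in b)

    while len(clusters) > 1:
        best_pair, best_dist = None, float("inf")
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                d = linkage(clusters[i], clusters[j])
                if d < best_dist:
                    best_pair, best_dist = (i, j), d
        if best_dist > max_distance:
            break  # remaining clusters are too far apart to merge
        i, j = best_pair
        clusters[i].extend(clusters[j])
        del clusters[j]
    return clusters
```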

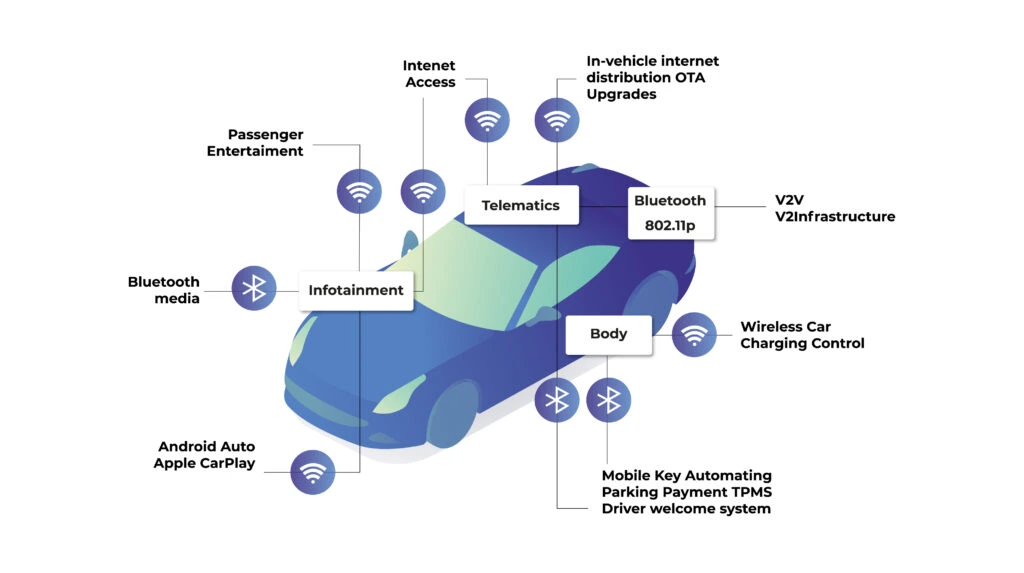

Connected Vehicles (CV)

The latest generation of intelligent transportation systems works closely with the Internet of Things (IoT), specifically the Internet of Vehicles (IoV). This allows for increased efficiency, mobility, and safety of autonomous cars.

Wireless connectivity provides communication in both indoor and outdoor environments. Possible types of interaction include:

- Vehicle-to-vehicle (V2V),

- Vehicle-to-sensor (V2S),

- Vehicle-to-road infrastructure (V2R),

- Vehicle-to-Internet (V2I).

In the case of vehicle-to-road infrastructure (V2R), the vehicle couples with ITS infrastructure: traffic signs, traffic lights, and road sensors.

Digital twin (case study: Antwerp)

A digital twin is a kind of bridge between the digital and physical worlds. It supports decision-makers in complex decisions about quality of life in the city and allows them to budget even more effectively.

In the Belgian city of Antwerp, a digital twin, a 3D digital replica of the city, was launched in 2018. The model features real-time values from air quality and traffic sensors.

City authorities can see exactly what the CO2 concentrations and noise levels are in the city center. They can also see to what extent restricting car traffic in certain areas of the city affects traffic emissions.

Automated Highway Systems (AHS)

Connected vehicles have opened the way for further innovations, and automated highway systems will be among them. Fully autonomous cars will move along designated lanes, and the flow of cars will be controlled by a central city system.

The new solution will make it possible to pinpoint the causes of congestion on highways and will reduce the likelihood of collisions.

Traffic zone division

Traditional traffic zoning takes into account the social and economic factors of an area. Today, however, zoning can be based on much better data collected in real time from smartphones. You can see exactly where most vehicles are accumulating at any given time. These are not always "obvious" places: at 3 p.m., for instance, there may be heavier traffic on a small street near the offices of a large company than on an exit road in the city center.

This modern categorization simplifies the city's complex road network, enabling more efficient traffic planning without artificial division into administrative boundaries.
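Data-driven zoning like this can start very simply: snap each smartphone location ping to a grid cell and count pings per cell and hour of day, so hotspots emerge regardless of administrative boundaries. The ping format, the 0.01-degree cell size, and the function name are hypothetical choices for illustration.

```python
import math
from collections import Counter
from datetime import datetime


def hotspots(pings, cell_size_deg=0.01, top_n=3):
    """Count location pings per (grid cell, hour of day) and return the busiest pairs.

    pings: iterable of (iso_timestamp, lat, lon) tuples (hypothetical format).
    Returns a list of ((cell, hour), count) pairs, busiest first.
    """
    counts = Counter()
    for ts, lat, lon in pings:
        hour = datetime.fromisoformat(ts).hour
        # Snap the position to a regular grid instead of administrative districts.
        cell = (math.floor(lat / cell_size_deg), math.floor(lon / cell_size_deg))
        counts[(cell, hour)] += 1
    return counts.most_common(top_n)
```

Comparing the results hour by hour shows how hotspots shift during the day, which is exactly what static administrative zoning misses.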

Possibilities vs. practice

A study conducted in 2021 by the analytics firm Lead to Market, which aimed to determine how cities are using vehicle data to enhance urban life, produced some interesting findings. Today, cities use this data for:

- alleviating bottlenecks in cities for business travelers and tourists (36%),

- better management of roads and infrastructure (18%),

- spatial and urban planning (18%),

- managing accident scenes (14%),

- improving parking (6%),

- mitigating environmental impacts (2%).

Surprisingly, however, only 22% of respondents use vehicle data for real-time daily traffic management. What could be the reason behind this? Ben Wolkow, CEO of Otonomo, points to one main reason: data dispersion. Today, the data comes from a variety of sources, yet for connected vehicle data to power smart city development in a meaningful way, cities need to shift to a single connected data source.

It is worth knowing that data from connected vehicles currently accounts for less than one-tenth of smart city analysis. But experts agree that this share will grow as new solutions emerge.

Technology that shapes the city

Vehicles are becoming increasingly intelligent and connected. Hardware, software, and sensors can now be fully integrated into the digital infrastructure. On top of that, full communication between vehicles and sensors on and off the road is now possible. Wireless connectivity, AI, edge computing, and IoT are supporting predictive and analytical processes in larger metropolitan areas.

The biggest challenge, however, is the skillful use of data and its uninterrupted retrieval. It is therefore crucial to find a partner with whom you can co-develop, for example, reliable traffic analysis software.

Vehicle automation - where we are today and what problems we are facing

Tesla has Autopilot, Cadillac has Super Cruise, and Audi uses Travel Assist. While there are many names, their functionality is essentially similar. ADAS (advanced driver-assistance systems) assist the driver on the road and pave the way toward autonomous driving. So where does your brand rank in terms of vehicle automation?

Consumer Reports data shows that 92 percent of new cars can automate speed with adaptive cruise control, and 50 percent can control both steering and speed. We are still two levels away from a vehicle fully controlled by algorithms (see the infographic below), which, according to independent experts, is unlikely to happen within the next 10 years (at least when it comes to traditional car traffic). Even so, ADAS systems are finding their way into new vehicles year after year, and drivers are slowly learning to use them wisely.

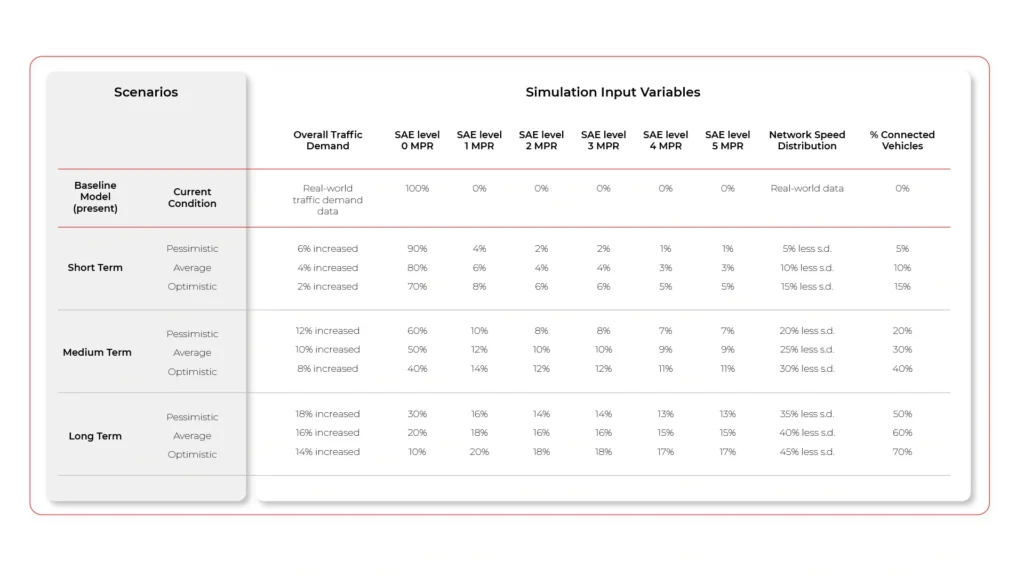

On the six-level scale of vehicle automation, which starts at level 0 (the vehicle is not equipped with any driving technology) and ends at level 5 (a fully self-driving vehicle), we are now at level 3. ADAS systems, which are in a way the foundation for a fully automated vehicle, combine automated steering, acceleration, and braking under one roof.

However, for this trend to be adopted by the market and grow dynamically year by year, we need to focus on functional software and on the challenges facing the automotive industry.

The main threats facing automated driving support systems

1. The absence of a driver monitoring system

When well designed in terms of functionality and UX, ADAS can effectively reduce driver fatigue and stress during extended journeys. For this to happen, however, it needs to be equipped with an effective driver monitoring system.

Why is this significant? When some driving responsibility is handed over to advanced technology, drivers may be tempted to "mind their own business", which often results in them scrolling through social media feeds on their smartphones. When automating driving, it is important to keep the driver involved: they must be constantly aware that their presence is essential to driving.
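One way to picture a driver monitoring system's job is as an escalation policy: the longer the driver's eyes stay off the road, the stronger the intervention. The sketch below assumes a camera-based gaze detector that outputs one boolean per 100 ms sample; the alert levels and the 2/4/8-second thresholds are hypothetical and not taken from any manufacturer's system.

```python
from enum import Enum


class Alert(Enum):
    NONE = 0
    VISUAL = 1    # e.g. a warning icon on the dashboard
    AUDIBLE = 2   # e.g. a chime
    HANDOVER = 3  # hypothetical final stage: demand that the driver take over


# Hypothetical thresholds, in seconds of continuous inattention, in ascending order.
THRESHOLDS = [(2.0, Alert.VISUAL), (4.0, Alert.AUDIBLE), (8.0, Alert.HANDOVER)]


def escalate(eyes_on_road_samples, sample_period_s=0.1):
    """Return the alert level after processing a stream of boolean gaze samples.

    The inattention timer resets as soon as the driver looks back at the road.
    """
    off_road_samples = 0
    level = Alert.NONE
    for eyes_on_road in eyes_on_road_samples:
        off_road_samples = 0 if eyes_on_road else off_road_samples + 1
        off_road_s = off_road_samples * sample_period_s
        level = Alert.NONE
        for threshold_s, alert in THRESHOLDS:
            if off_road_s >= threshold_s:
                level = alert  # keep the highest threshold that has been crossed
    return level
```

Counting samples as integers, rather than summing 0.1-second increments, avoids floating-point drift in the timer.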

Meanwhile, Consumer Reports, which surveyed dozens of such systems in vehicles from leading manufacturers, reports that just five manufacturers (BMW, Ford, Tesla, GM, and Subaru) have fitted their ADAS with such technology.

According to William Wallace, safety policy manager at Consumer Reports, "The evidence is clear: if a car facilitates people’s distraction from the road, they will do it - with potentially fatal consequences. It's critical that active driving assistance systems have safety features that actually verify that drivers are paying attention and are ready to take action at all times. Otherwise, the safety risks of these systems may ultimately outweigh their benefits."

2. Lack of response to unexpected situations

According to the same organization, none of the systems tested reacted well to unforeseen situations on the road, such as construction, potholes, or dangerous objects on the roadway. These functional deficiencies create a potential risk of accidents: even if the system guides the vehicle flawlessly along designated lanes (with intermittent or sustained lane keeping), it will not warn the driver in time to take control of the car when the route needs to be adjusted.

Solutions already on the market can effectively warn the driver of such occurrences, significantly increase driving comfort, and "delegate" some tasks to intelligent software. They are clearly among the upgrades worth adding to driving automation systems in the coming years.

3. Inadequate, non-intuitive UX

All technological innovations breed resistance and misunderstanding in the early stages of their development. It is up to manufacturers and the companies developing vehicle automation software to create systems that are straightforward and user-friendly. Simple controls, clear displays, and transparent feedback on what the system is doing with the vehicle are an absolute "must-have" for any system. From the outset, the driver needs to understand in which situations the system should be used, when to take control of the vehicle, and what the automation has to offer.

4. Lack of consistency in symbols and terminology

Understanding the benefits and functionality of ADAS systems is certainly not made easier by the lack of market consistency. Each of the leading vehicle manufacturers uses different terminology and symbols for displaying warnings. The buyer of a new vehicle cannot tell whether a system from Toyota offers the same benefits as a differently named system from Ford or BMW, or how far its automation goes.

Sensory overload leads to driver frustration, misunderstanding of automation, or outright resentment, and this is reflected in consumer purchasing decisions and, thus, in the development of the systems themselves. It is also difficult to track their impact on safety and driving convenience when the industry has not developed uniform naming and consistent labeling to help enforce the necessary safety features and components of such systems.

5. System errors

Automation systems in passenger cars are fairly new and still in development. It's natural that in the early stages they make mistakes and sometimes draw the wrong conclusions from the behavior of drivers or neighboring vehicles. Unfortunately, mistakes like the ones listed below cause drivers to disable parts of the system, or even all of it, because they simply don't know how to deal with them.

- Lane-keeping assist behaving erratically in poorer weather;

- Steering stiffening and the vehicle automatically slowing down when the driver tries to cross a lane line;

- Sudden acceleration or braking of a vehicle with active cruise control, for example during overtaking maneuvers, when entering a curve on a highway exit, or after misreading signs on truck trailers.

How can such errors be avoided? One solution is to develop more accurate models that detect which lanes are affected by signs or traffic lights.

Vehicle automation cannot happen automatically

Considering the number of potential challenges and risks that automakers face when automating vehicles, it's clear that we're only at the beginning of the road to the widespread adoption of these technologies. This is a defining moment that lays the foundation for their further development.

On the one hand, drivers are already beginning to trust these systems, use them more frequently, and expect them in new car models. On the other hand, many of these systems still have the typical flaws and shortcomings of "infancy," so misunderstanding them or overestimating their capabilities can lead to driver frustration or, in extreme cases, accidents. The role of automotive OEMs and software developers is to create solutions that are simple and intuitive and to listen to market feedback even more attentively than before. Introducing such solutions to the market gradually, so that consumers have time to learn and understand them, will certainly help automation spread and ultimately lead to fully automated vehicles. For now, the path leading to them is still long and bumpy.

How AI is transforming automotive and car insurance

The car insurance industry is experiencing a real revolution today. Insurers are targeting their offers ever more precisely using AI and machine learning. Such innovations significantly enhance business efficiency, reduce the risk of accidents and their consequences, and enable adaptation to modern realities.

Changes are needed today

Approximately $25 billion is "frozen" with insurers annually due to problems such as fraud, claims adjustment, and delays in service garages. At the same time, customers are not always happy with the payouts they receive and often have to accept undervalued rates. This is because, with limited data, it is difficult to accurately identify who caused an incident. Compensation is also often based on rates lower than the actual value of the damage.

Insurers today need to be aware of the ecosystem in which they operate. Clients are becoming more demanding and, according to an IBM Institute for Business Value (IBV) study, 50 percent of them prefer tailor-made products based on individual quotes. The very model of cooperation between businesses is also changing, as relations between insurance providers and car manufacturers grow tighter. All of this is linked to the fact that cars are becoming increasingly autonomous, which makes it possible to monitor traffic incidents and driver behavior more closely and to manage risk. Estimates suggest there will be as many as one trillion connected devices by 2025, and by 2030 an increasing percentage of vehicles will have automated features (ADAS).

No wonder there's an increasing buzz about changes in the car insurance industry, and these changes are based on technology. The use of artificial intelligence, machine learning, and advanced data analytics in the cloud will allow insurers to adapt seamlessly to market expectations.

CASE STUDY

SARA Assicurazioni and Automobile Club Italia are already encouraging drivers to install ADAS systems in exchange for a 20% discount on their insurance premiums. Indeed, it has been demonstrated that such systems can cut the rate of liability claims by 4-25% for personal injury and by 7-22% for property damage.

Why is this so important for insurers who want to face this new reality?

AI-based pricing models significantly reduce the time needed to launch new insurance products and to make optimal decisions. They also lower the risk of mispricing.

The new AI-based insurance reality is happening as we speak. Digital-first companies like Lemonade, which respond flexibly to market changes, are showing customers what solutions are feasible. In doing so, they put pressure on companies that still hesitate to test new models.

Areas of change in car insurance due to AI

Artificial intelligence and related technologies are having a huge impact on many aspects of the insurance industry: quoting, underwriting, distribution, risk and claims management, and more.

Changes in insurance distribution

Artificial intelligence algorithms create risk profiles quickly, reducing the time required to purchase a policy to minutes. Smart contracts based on blockchain instantly authenticate payments from an online account. At the same time, contract processing and payment verification are vastly streamlined, reducing insurers' client acquisition costs.

Advanced risk assessment and reliable pricing

Traditionally, insurance premiums are determined using the "cost-plus" method. This includes an actuarial assessment of the risk premium, a component for direct and indirect costs, and a margin. Yet it has quite a few drawbacks.

These include the difficulty of accounting for non-technical price determinants and the inability to react quickly to shifting market conditions.
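The cost-plus method can be written as a short function. This sketch assumes the actuarial risk premium is estimated as claim frequency times average claim cost (the standard "frequency times severity" pure premium) and that the margin is a percentage added on top of total cost; both are simplifying assumptions, and any figures plugged in are illustrative. Note that nothing in the formula reacts to market conditions or non-technical factors, which is exactly the limitation described above.

```python
def cost_plus_premium(claim_frequency, avg_claim_cost, direct_costs, indirect_costs, margin_rate):
    """Cost-plus premium: risk premium + direct and indirect costs + margin.

    claim_frequency: expected number of claims per policy per year.
    avg_claim_cost: expected cost of one claim (severity).
    margin_rate: margin as a share of total cost (assumption; conventions differ).
    """
    risk_premium = claim_frequency * avg_claim_cost  # pure premium = frequency x severity
    total_cost = risk_premium + direct_costs + indirect_costs
    return round(total_cost * (1 + margin_rate), 2)
```

For example, `cost_plus_premium(0.06, 7000, 60, 40, 0.10)` gives 572.0: a risk premium of 420 plus 100 in costs, marked up by 10%.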

How is risk calculated? For car insurance companies, the assessment covers accidents, road crashes, breakdowns, theft, and fatalities.

These days, all these aspects can be assessed by leveraging AI coupled with IoT data that provides real-time insights. Customized policy pricing, for instance, can take into account GPS device data on a vehicle’s location, speed, and distance traveled. This way, you can see whether the vehicle spends most of its time in the driveway or, conversely, frequently travels on highways, particularly at excessive speeds.
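A minimal sketch of how GPS data can feed usage-based pricing: compute the share of moving time spent above the posted limit, then load the premium accordingly. It assumes speed samples taken at a fixed interval have already been matched to road speed limits; the 5 km/h tolerance, the linear loading of up to 30%, and the function names are hypothetical, not an actuarial recommendation.

```python
def speeding_share(samples, tolerance_kmh=5):
    """Share of moving samples in which the vehicle exceeded the limit.

    samples: list of (speed_kmh, speed_limit_kmh) taken at a fixed interval
    (hypothetical output of GPS tracking plus map matching).
    """
    moving = [(v, limit) for v, limit in samples if v > 0]  # ignore time parked
    if not moving:
        return 0.0
    over = sum(1 for v, limit in moving if v > limit + tolerance_kmh)
    return over / len(moving)


def usage_based_premium(base_premium, share, max_loading=0.3):
    """Hypothetical rule: load the premium linearly, up to +30% for constant speeding."""
    return round(base_premium * (1 + max_loading * share), 2)
```

For instance, a driver speeding in two of four moving samples gets a share of 0.5, so a base premium of 500 becomes 575.0.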

In addition, insurance companies can use a host of other sensor and camera data, as well as reports and documents from previous claims. With all this information gathered, algorithms are able to reliably determine risk profiles.

CASE STUDY

Ant Financial, a Chinese company that offers an ecosystem of integrated digital products and services, specializes in creating highly detailed customer profiles. Its technology is based on artificial intelligence algorithms that assign car insurance points to each customer, similarly to credit scoring, taking into account such detailed factors as lifestyle and habits. Based on this, the app shows an individual score and assigns a product that matches the specific policyholder.

An in-depth analysis of claims

The cooperation between an insurance company and its client is based on the premise that both parties seek to avoid potential losses. Unfortunately, accidents, breakdowns, or thefts sometimes occur, and a claims process must be initiated. Artificial intelligence, integrated IoT data, and telematics come in handy regardless of the type of claim being handled.

- Among other things, these technologies can automatically generate not only damage information but also repair cost estimates.

- Machine learning techniques can estimate the average cost of claims for various client segments.

- Sending real-time alerts, in turn, enables the implementation of predictive maintenance.

- Uploaded images can be used to build an extensive database of parts and prices.
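The source does not say which machine learning technique is used to estimate average claim costs per segment. As a transparent baseline that any ML model would need to beat, this sketch blends each segment's mean claim cost with the portfolio mean using a credibility weight Z = n / (n + k), so segments with few claims are not over-trusted. The claim format, segment labels, and the constant k = 5 are illustrative assumptions.

```python
from collections import defaultdict


def segment_claim_costs(claims, k=5.0):
    """Credibility-weighted average claim cost per client segment.

    claims: list of (segment, cost) pairs.
    Each segment mean is blended with the overall mean using Z = n / (n + k),
    where n is the number of claims in the segment and k is a tuning constant.
    """
    by_segment = defaultdict(list)
    for segment, cost in claims:
        by_segment[segment].append(cost)
    overall_mean = sum(cost for _, cost in claims) / len(claims)
    estimates = {}
    for segment, costs in by_segment.items():
        n = len(costs)
        z = n / (n + k)  # more claims -> more weight on the segment's own data
        estimates[segment] = z * (sum(costs) / n) + (1 - z) * overall_mean
    return estimates
```

With five urban claims of 3,000 and five rural claims of 1,000, both segments get Z = 0.5 and are pulled halfway toward the 2,000 portfolio mean, giving estimates of 2,500 and 1,500.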

The drivers themselves gain control, as they can carry out the damage registration process from A to Z: take a photo, upload it to the insurer's platform, and get an instant quote for the repair costs. They are no longer reliant on workshop quotes, which were often heavily inflated on the principle that "the insurer will pay anyway".

Fraud prevention

Auto insurers lose 29 billion dollars annually to fraud. Fraudsters try to scam companies out of insurance money through illegally orchestrated events. How can this be prevented? The answer is AI.

Analysis of data retrieved from cameras and sensors can reconstruct the details of a car accident with high precision. An accident timeline generated by artificial intelligence therefore facilitates accident investigation and claims management.

CASE STUDY

An advanced AI-based incident reconstruction system has recently been tested on 200,000 vehicles as part of a collaboration between Israel's Project Nexar and a Japanese insurance company.

Assistance in the event of accidents

According to data from the OECD, car accident fatalities could be reduced by 44 percent if emergency medical services had access to real-time information about the injuries of involved parties.

Real-time assistance has great potential not only for public services but also in the context of auto insurance.

By leveraging AI, insurers can provide drivers with quick, semi-automated responses during collisions and accidents. For example, a chatbot can instruct the driver on how to behave, how to call for help, or how to help fellow passengers. All this is essential for saving lives, and at the same time it is a way of reducing the consequences of an accident.

Transparent decision making (client perspective)

New technologies offer solutions to many problems not only for insurers but also for clients, who often complain about discrimination and what they see as unfair calculation of policies and compensation.

"Smart automated gatekeepers" are superior in multiple ways to the imperfect solutions of traditional models because, based on a number of reliable parameters, they facilitate the creation of more authoritative and personalized pricing policies. Data-rich, automated risk and damage assessments pay off for consumers because they gain decision-making power: they can see how their own actions affect their insurance coverage.

The opportunities and future of AI in car insurance

According to McKinsey's analysis, AI investments across functions and use cases are worth $1.1 trillion in potential annual value for the insurance industry.

The direction of change is shaped in two ways: first, by increasingly connected, software-equipped vehicles with more sensors; second, by the evolving analytical skills of insurers. Data-driven vehicles will make repair costs, and consequently claims payments, more reliable and consistent in real time. And when it comes to planning offers and understanding the client, AI enables personalized, real-time service (24/7 virtual assistance) and flexible policies. All signs indicate that such "abstract" parameters as education or earnings will cease to play a major role in this regard.

As can be inferred from the diagram above, the greater the impact of a given technology on an insurance company's business, the longer the time required for its implementation. Therefore, it is vital to consider the future on a macro scale by planning the strategy not for 2 years, but for 10.

The decisions you make today have a bearing on improving operational efficiency, minimizing costs, and opening up to individual client needs, which are becoming more and more coupled with digital technologies.

Predictive transport model and automotive. How can smart cities use data from connected vehicles?

There are many indications that the future lies in technology. Specifically, it belongs to connected and autonomous vehicles (CAVs), which, combined with 5G, AI, and machine learning, will form the backbone of the smart cities of the future. How will data from vehicles revolutionize city life as we've known it so far?

Data is "fuel" for the modern, smart cities

The UN estimates that by 2050, about 68 percent of the global population will live in urban areas. This raises challenges that we are already trying to address as a society.

Technology will significantly support connected, secure, and intelligent vehicle communication using vehicle-to-vehicle (V2V), vehicle-to-infrastructure (V2I), and vehicle-to-everything (V2X) protocols. All of this is intended to promote better management of city transport, fewer delays, and environmental protection.

This is not just empty talk: in the next few years, 75% of all cars will be connected to the internet, generating massive amounts of data. One could even say that data will be a kind of "fuel" for mobility in the modern city. It is worth tapping into this potential. There is much to suggest that cities and municipalities will find that such innovative traffic management, routing, and congestion reduction translate into better accessibility and increased safety. In this way, many potential crises related to overpopulation and an excess of cars in urban areas will be counteracted.

What can smart cities use connected car data for?

Traffic estimation and prediction systems

Data from connected cars, in conjunction with Internet of Things (IoT) sensor networks, will help forecast traffic volumes. Based on these forecasts, alerts about traffic congestion and road conditions can also be issued.
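As a sketch of the forecasting step, simple exponential smoothing produces a one-step-ahead traffic volume forecast from recent counts, and a threshold on that forecast can trigger a congestion alert. The smoothing factor, the 90%-of-capacity rule, and the function names are assumptions for illustration; real traffic prediction systems typically also model daily and weekly seasonality.

```python
def forecast_volume(counts, alpha=0.5):
    """One-step-ahead forecast of vehicles per interval (simple exponential smoothing).

    counts: observed vehicle counts per interval, oldest first.
    alpha: weight of the newest observation (0 < alpha <= 1).
    """
    level = counts[0]
    for observed in counts[1:]:
        level = alpha * observed + (1 - alpha) * level
    return level


def congestion_alert(counts, capacity, alpha=0.5, threshold=0.9):
    """Hypothetical rule: alert when the forecast reaches `threshold` of road capacity."""
    forecast = forecast_volume(counts, alpha)
    return forecast >= threshold * capacity, forecast
```

With counts of 400, 500, 600, and 700 vehicles per interval and alpha = 0.5, the forecast is 612.5, which triggers an alert on a road with a capacity of 650 (threshold 585).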

Parking and signalization management

High-performance computing and high-speed transmission platforms using industrial AI, 5G, and edge computing technologies help, among other things, to control traffic lights efficiently and identify parking spaces, reducing the time vehicles spend circling in search of a space and the fuel wasted in the process.

Responding to accidents and collisions

Real-time processed data can also be used to protect the lives and health of city traffic users. Based on data from connected cars, accident detection systems can determine what action needs to be taken (repair, call an ambulance, block traffic). In addition, GPS coordinates can be sent immediately to emergency services, without delays or telephone miscommunication.

Such solutions are already used in European warning systems, the recently launched eCall system being one example. It works in vehicles across the EU and, in the event of a serious accident, automatically connects to the nearest emergency network, transmits data (e.g. exact location, time of the accident, vehicle registration number, and direction of travel), and dials the free 112 emergency number. This enables the emergency services to assess the situation and take appropriate action. If eCall fails, a warning is displayed.
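To illustrate the kind of record an eCall-style system sends, here is a small data structure carrying the items listed: location, time, a vehicle identifier, and direction of travel. It is a hypothetical, simplified layout serialized as JSON for readability; the actual eCall minimum set of data is defined by the EN 15722 standard and uses its own compact encoding, which this sketch does not reproduce.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class CrashReport:
    # Hypothetical, simplified field set; NOT the EN 15722 minimum set of data encoding.
    latitude: float
    longitude: float
    timestamp_utc: str
    vehicle_id: str      # e.g. the registration number mentioned in the text
    heading_deg: float   # direction of travel in degrees, 0 = north, clockwise
    automatic: bool      # True if triggered by crash sensors, False for a manual SOS call


def build_report(lat, lon, vehicle_id, heading_deg, automatic=True,
                 now: Optional[datetime] = None) -> CrashReport:
    """Assemble a report, normalising the heading into the 0-360 degree range."""
    if now is None:
        now = datetime.now(timezone.utc)
    return CrashReport(lat, lon, now.isoformat(timespec="seconds"), vehicle_id,
                       heading_deg % 360, automatic)


def to_payload(report: CrashReport) -> str:
    """Serialise the report; JSON is used here only for readability."""
    return json.dumps(asdict(report), sort_keys=True)
```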

Reducing emissions

Reduced or more sustainable car traffic means fewer harmful emissions into the atmosphere. Besides, data-driven simulations enable short- and long-term planning, which is essential for low-carbon strategies.

Improved throughput and reduced travel time

Research clearly shows that connected and automated vehicles add to driving comfort. The more such cars there are on the road, the greater the road capacity on highways.

As this happens, travel time also decreases, by about 17-20 percent. Less congestion means fewer minutes spent in traffic jams. Of course, this generates savings for drivers (lower fuel consumption) and for the environment (lower emissions).

Traffic management (case studies: Hangzhou and Tallinn)

Intelligent traffic management systems (ITS) today benefit from AI. This is apparent in the Chinese city of Hangzhou, which, prior to the technology-transportation revolution, ranked fifth among the most congested cities in China.

In Hangzhou, data from connected vehicles helps to manage traffic efficiently and reduce congestion in the city's most vulnerable districts. It also notifies local authorities of traffic violations, such as running red lights. All this is achieved without investing in costly municipal infrastructure over a large area. Plus, built-in vehicle telematics requires no maintenance, which also reduces operating costs.

A similar model was developed in Estonia by the Tallinn Transport Authority together with the German software company PTV Group. A continuously updated map illustrates, among other things, the road network and traffic frequency in the city.

Predictive maintenance in public transport

Estimated downtime costs for high-utilization fleets, such as buses and trucks, range from $448 to $760 per day. Consider what happens when a single bus breaks down in a city: all of a sudden, delays affect not just one line, but many. Chaos ensues and services come to a standstill.

Fortunately, as more and more vehicles are equipped with telematics systems, predictive maintenance will become easier to implement. This will significantly increase the usability and safety of networked buses, while maintenance time and costs will drop.
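Predictive maintenance systems range from simple rules to trained models. One very simple building block is spotting anomalies in a sensor stream before they turn into failures: compare each new reading with a rolling baseline and flag large deviations. The window size, the 3-sigma limit, and the idea of applying it to a single sensor such as coolant temperature are illustrative assumptions, not a description of any particular fleet system.

```python
from statistics import mean, stdev


def maintenance_flags(readings, window=5, z_limit=3.0):
    """Return indices of readings that deviate sharply from the recent rolling baseline.

    readings: time series from one telematics sensor (e.g. coolant temperature),
    sampled at a fixed interval.
    """
    flagged = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # Skip perfectly flat windows, where the z-score is undefined.
        if sigma > 0 and abs(readings[i] - mu) / sigma > z_limit:
            flagged.append(i)
    return flagged
```

In the series 90, 91, 90, 89, 90, 91, 90, 104, 90, only the 104 reading (index 7) is flagged; a workshop visit could then be scheduled before the component actually fails.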

Creating smart cities that are ahead of their time

"Connected vehicle data not only make smart cities much smarter, but when leveraged for real-time safety, emergency planning, and reducing congestion, it saves countless lives and enables a better, cleaner urban experience," said Ben Wolkow, CEO of Otonomo.

The digitization of the automotive sector is accelerating the trend of smart and automated city traffic management. A digital transport model can forecast and analyze the city’s mobility needs to improve urban planning.

How insurers can use telematics technology to improve customer experience and increase market competitiveness

As technology evolves in the car insurance and data-defined vehicle markets, the generational profile of the people who use them is also changing. Currently, two generations in particular are coming to the fore: Millennials and Gen Z. They are interested in service and product offerings that are as personalized and tailor-made as possible, rather than generic. Add to that the rapid development of vehicle connectivity, and now is the perfect time for insurers to roll out and scale up their telematics product offerings.

Based on research by Allison+Partners, consumers within the youngest generation view the car simply as yet another life-enhancing device. What's more, about 70% of Generation Z consumers don't have a driver's license, and 30% of this group have no intention or desire to get one. This makes them more interested in carpooling in autonomous vehicles, and more than 45 percent of respondents feel comfortable with this. Thus, "urban mobility" players such as Uber and Lyft, as well as e-scooter providers and on-demand car rental services, are in demand. All of them are capable of filling the gaps in public transport infrastructure.

There is another interesting point. Those who do decide to get a driver's license and buy a car, however, face the hassle of costly insurance. The amounts are especially exorbitant for the least experienced drivers, who in effect pay extra for the year of birth stamped on their ID cards. There is even talk of an "age tax". Interestingly, young age does not always go hand in hand with traffic violations or dangerous driving behavior. Generations Y and Z are therefore advocating that car insurance be reassessed, with more emphasis on personalization.

Telematics - technology that supports contemporary society's needs

Consumers today, particularly younger ones, expect cars to be as innovative as possible, as this directly translates into comfort and safety. Above all, personalized experiences, reliable connectivity, and comfort count. All this is a recipe for success in the future of mobility as a service.

Individual online services should be consolidated into comprehensive mobility platforms so that users no longer have to switch between applications. Meanwhile, autonomous driving will generate new opportunities for innovative business models.

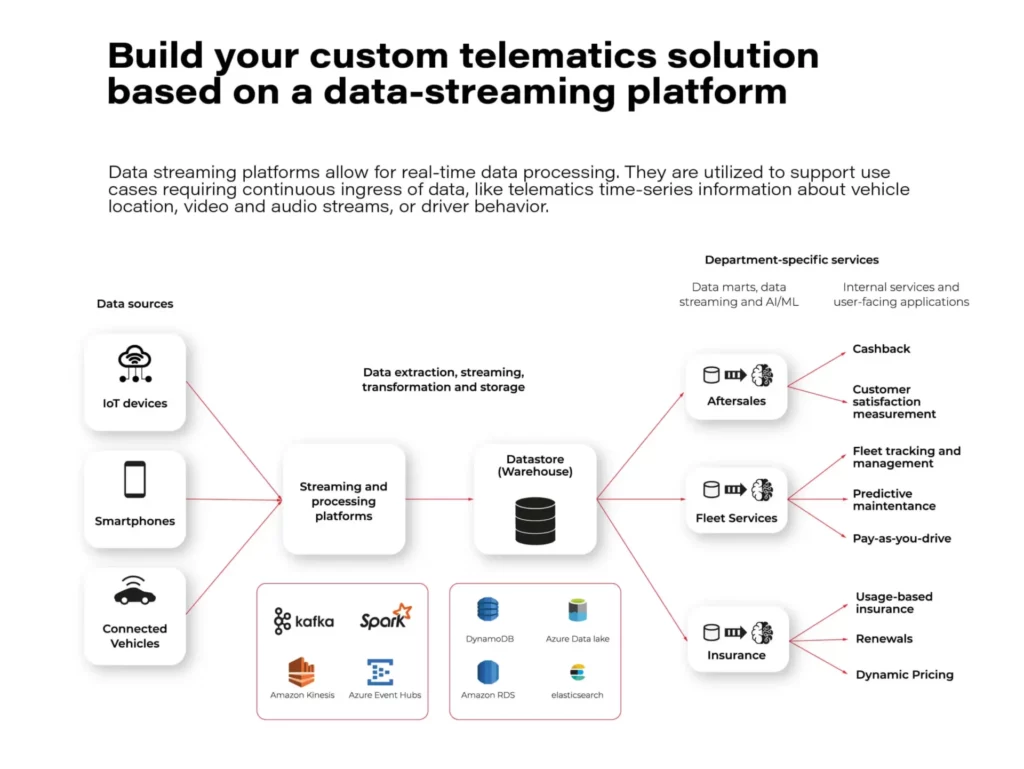

Utilizing telematics data opens the door to improving customer experience, unlocking new revenue streams, and increasing market competitiveness.

A modular and extensible telematics architecture provides tremendous opportunities for all organizations driving change.

Such an architecture allows you to organize data handling, filter data, and add missing information at various stages of processing. And it is no overstatement to say that in a technology-oriented information society, data is the most valuable resource these days.
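A modular architecture of this kind can be sketched as a list of independent stages, each of which may filter a record out or enrich it with missing information, so new stages can be added without touching the others. The record fields, stage names, and validation rules below are hypothetical examples.

```python
from typing import Callable, Iterable, List, Optional

Record = dict
Stage = Callable[[Record], Optional[Record]]  # a stage returns None to drop the record


def run_pipeline(records: Iterable[Record], stages: List[Stage]) -> List[Record]:
    """Pass each record through the stages in order; any stage may drop it."""
    output = []
    for record in records:
        for stage in stages:
            record = stage(record)
            if record is None:
                break  # filtered out; skip the remaining stages
        else:
            output.append(record)  # survived every stage
    return output


def drop_invalid_gps(record: Record) -> Optional[Record]:
    # Filter stage: discard records without a plausible position.
    lat, lon = record.get("lat"), record.get("lon")
    if lat is None or lon is None or not (-90 <= lat <= 90 and -180 <= lon <= 180):
        return None
    return record


def add_speed_kmh(record: Record) -> Record:
    # Enrichment stage: derive a missing field from an existing one (new dict, input untouched).
    if "speed_kmh" not in record and "speed_ms" in record:
        record = {**record, "speed_kmh": record["speed_ms"] * 3.6}
    return record
```

Adding, say, a stage that attaches road speed limits or anonymizes driver IDs means appending one more function to the `stages` list.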

Data can be used in all business processes, shaping the customer experience and responding to customers' needs, especially those of the younger generations of the future, namely Millennials and Gen Z.

Telematics platforms and the future of the automotive industry

The processed information is also used to train AI/ML models and to monitor system behavior. Because this information is extracted and incorporated in real time, it also adds the value of rapid response, which results in service satisfaction and sustained business performance.

Moreover, driving data collected from various sources offers full insight into what is actually happening on the road, how drivers behave, and what decisions they make behind the wheel. Insurers benefit from this, but so do car manufacturers and companies involved in shared mobility in the broader sense.

All these advantages seem to speak for themselves. And this is just the beginning, because the future of telematics looks very bright. Research by IoT Analytics indicates that by 2025 the total number of IoT devices worldwide will exceed 27 billion. For comparison, there are currently 1.06 billion passenger cars on the roads around the world. Specialists predict that within just a few years, this figure will grow by more than 400 million connected vehicles.

What will the end customer and the insurers themselves gain from applying telematics technology in automotive insurance?

Crash detection

The real-time capabilities of a telematics platform make it possible to detect accidents instantly and take proactive action to mitigate the damage. Car location and sensor data can be used to trigger crash alerts, coordinate the dispatch of emergency services, and reconstruct the crash timeline.

Such solutions are already being implemented, for example by IBM, whose Telematics Hub enables the management of crash and accident data in real time with a low probability of error. The tool can distinguish a false event from a real one, generate incident reports, and evaluate driver behavior.
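As a rough illustration of how location and sensor data might trigger a crash alert while filtering out false events, here is a minimal Python sketch. It is not how IBM's Telematics Hub or any specific product works: it simply assumes that a crash shows up as an acceleration spike followed by a sharp drop in speed, and the thresholds (`PEAK_G_THRESHOLD`, `SPEED_DROP_KMH`, `WINDOW_S`) are hypothetical values chosen for illustration.

```python
import math
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical thresholds for illustration only; real systems calibrate these per vehicle and sensor.
PEAK_G_THRESHOLD = 4.0   # acceleration magnitude (in g) that counts as a spike
SPEED_DROP_KMH = 20.0    # minimum speed loss around the spike to treat it as a crash
WINDOW_S = 2.0           # seconds before/after the spike used for checks and the timeline


@dataclass
class Sample:
    t: float          # seconds since trip start
    ax: float         # acceleration along each axis, in g
    ay: float
    az: float
    speed_kmh: float
    lat: float
    lon: float


@dataclass
class CrashAlert:
    t: float
    lat: float
    lon: float
    peak_g: float
    timeline: List[Sample]  # samples around the impact, for reconstructing the event


def detect_crash(samples: List[Sample]) -> Optional[CrashAlert]:
    """Return an alert for the first spike followed by a sharp speed drop, else None.

    Assumes samples are sorted by time.
    """
    for i, s in enumerate(samples):
        # The magnitude includes gravity, so a vehicle at rest reads about 1 g.
        g = math.sqrt(s.ax ** 2 + s.ay ** 2 + s.az ** 2)
        if g < PEAK_G_THRESHOLD:
            continue
        before = [x for x in samples[: i + 1] if s.t - x.t <= WINDOW_S]
        after = [x for x in samples[i:] if x.t - s.t <= WINDOW_S]
        # A pothole or a dropped phone also spikes, but the car keeps moving.
        if before[0].speed_kmh - after[-1].speed_kmh >= SPEED_DROP_KMH:
            timeline = [x for x in samples if abs(x.t - s.t) <= WINDOW_S]
            return CrashAlert(s.t, s.lat, s.lon, g, timeline)
    return None
```

Requiring two independent signals (impact force and loss of speed) is one simple way to cut down on false alarms; the returned location and timeline are what an operations center would need to dispatch help and reconstruct the event.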

Roadside assistance

According to Highways England, there are over 224,000 car breakdowns a year on England's busiest roads. That's an average of more than 25 cars per hour. Meanwhile, in another Anglo-Saxon country, the U.S., there are 1.76 million calls for roadside assistance per year.

Roadside assistance is an optional add-on to drivers' personal car insurance. It is popular among drivers, but it needs further development, which requires leveraging real-time data processing. Insurance providers that offer remote service or minimize the time customers spend at the side of the road are gaining a competitive advantage.

Monitoring vehicle activity makes it possible to pinpoint the vehicle's location in an emergency. Once notified of a breakdown, the provider can dispatch assistance to the customer's position and book the nearest available replacement vehicle.
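Booking the nearest available replacement vehicle boils down to a nearest-neighbor search over a fleet. The minimal Python sketch below assumes a small, hypothetical in-memory list of replacement cars and uses the haversine formula for great-circle distance; a production system would more likely query a geospatial index and consider road distance or travel time rather than straight-line distance.

```python
import math
from dataclasses import dataclass
from typing import List, Optional

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


@dataclass
class ReplacementCar:
    car_id: str
    lat: float
    lon: float
    available: bool


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearest_available(lat: float, lon: float, fleet: List[ReplacementCar]) -> Optional[ReplacementCar]:
    """Pick the closest car that is free to book, or None if none is available."""
    candidates = [car for car in fleet if car.available]
    if not candidates:
        return None
    return min(candidates, key=lambda car: haversine_km(lat, lon, car.lat, car.lon))


if __name__ == "__main__":
    fleet = [  # hypothetical fleet positions
        ReplacementCar("oxford-01", 51.7520, -1.2577, True),
        ReplacementCar("reading-07", 51.4543, -0.9781, True),
        ReplacementCar("london-03", 51.5100, -0.1300, False),  # closest, but already booked
    ]
    breakdown = (51.5074, -0.1278)  # central London
    print(nearest_available(*breakdown, fleet).car_id)
```

In this toy example the booked car in London is skipped, so the search falls back to the closest car that is actually free.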

UBI & BBI

Behavior-based insurance (BBI, pay-how-you-drive) and usage-based insurance (UBI, pay-as-you-drive) are the future of car insurance programs. Together with value-added services such as automated crash detection or roadside assistance, they will determine the competitiveness and market share of insurance companies.

Handling large volumes of real-time data from telematics sources such as connected cars, mobile apps, and black boxes, extracting crucial information from it, and offering insights to customers requires a robust and scalable telematics platform.

Experts point out many advantages of UBI schemes over the conventional solutions offered so far. The most important of these are:

- Potential discounts on premiums.

- Easier accident investigations for the authorities and insurance claims adjusters.

- Motivation for drivers to improve their performance and eliminate risky driving behaviors and unsafe habits.

- Greater customer loyalty.

- Personalized, value-added services in insurance plans that serve customer interests more effectively.
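To show how UBI and BBI pricing differ in principle, here is a deliberately simple Python sketch: the usage factor scales with distance driven (pay-as-you-drive), while the behavior factor depends on a driving score built from harsh braking and speeding (pay-how-you-drive). The reference mileage, score weights, and factor ranges are invented for illustration; real insurers use actuarially calibrated models that are not described here.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical pricing parameter, for illustration only.
REFERENCE_KM_PER_YEAR = 12_000


@dataclass
class TripSummary:
    km: float
    harsh_brakes: int
    speeding_km: float  # distance driven above the speed limit


def behavior_score(trips: List[TripSummary]) -> float:
    """Score from 0 (risky) to 100 (safe) based on harsh braking and speeding."""
    total_km = sum(t.km for t in trips)
    if total_km == 0:
        return 100.0
    brakes_per_100km = 100 * sum(t.harsh_brakes for t in trips) / total_km
    speeding_share = sum(t.speeding_km for t in trips) / total_km
    score = 100 - 10 * brakes_per_100km - 100 * speeding_share
    return max(0.0, min(100.0, score))


def monthly_premium(base_annual: float, trips: List[TripSummary], months_observed: int = 1) -> float:
    """Combine a usage factor (UBI) and a behavior factor (BBI) into a monthly premium."""
    annualized_km = sum(t.km for t in trips) * 12 / months_observed
    # UBI: 0.5x with no driving, 1.0x at the reference mileage, growing linearly beyond it.
    usage_factor = 0.5 + 0.5 * annualized_km / REFERENCE_KM_PER_YEAR
    # BBI: 1.3x for a score of 0, falling linearly to 0.8x for a score of 100.
    behavior_factor = 1.3 - 0.5 * behavior_score(trips) / 100
    return round(base_annual / 12 * usage_factor * behavior_factor, 2)
```

For example, with a hypothetical base premium of 1,200 per year, a driver who covers 1,000 km in a month with no harsh braking or speeding gets a usage factor of 1.0 and a behavior factor of 0.8, which works out to a monthly premium of 80.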

Stolen vehicle recovery

Targeted vehicle tracking and recovery technologies come in handy for insurance companies, which face the burden of paying out sizable amounts of compensation for stolen vehicles on a daily basis.

It's not true that younger generations are fickle and unwilling to take out insurance. Generation Z consumers and Millennials accounted for 39% of consumers buying auto insurance in 2018. This figure is increasing year on year and applies not only to compulsory insurance but also to additional plans. The problem is that in many cases theft insurance offers, if there are any, are based on statistical indicators, for instance, a high rate of this type of crime in a given area, rather than on actual data. Besides, customers are often dissatisfied with payouts based on market values that turn out lower than they expected.

So here, too, data-driven individualization is needed, and that's what telematics provides.

For instance, by gathering data about customer behavior, insurers can build driver profiles and set up alerts that are triggered by unusual or suspicious activity. Another option is real-time vehicle tracking: the alarm service can be activated on demand or automatically, and the car then establishes a connection to the operations center. It is also possible to document the theft: information recorded by the vehicle is collected, exported, and made available for viewing by authorized personnel.
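The profile-based alerts mentioned above can be sketched in a few lines of Python. This is a minimal, assumption-laden example rather than a description of any insurer's system: the hypothetical profile records only the hours at which a vehicle usually starts trips and the area it is usually driven in, and a new trip is flagged if it starts at a rarely used hour or far outside that area. The `min_hour_share` and `radius_margin_km` defaults are hypothetical.

```python
import math
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class TripStart:
    hour: int  # local hour of day, 0-23
    lat: float
    lon: float


@dataclass
class DriverProfile:
    hour_share: Dict[int, float]  # fraction of past trips started at each hour
    center_lat: float
    center_lon: float
    radius_km: float              # farthest past trip start from the center


def distance_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Equirectangular approximation; reasonable over city- to region-scale distances."""
    x = math.radians(lon2 - lon1) * math.cos(math.radians((lat1 + lat2) / 2))
    y = math.radians(lat2 - lat1)
    return 6371.0 * math.hypot(x, y)


def build_profile(history: List[TripStart]) -> DriverProfile:
    """Summarize past trips; assumes a non-empty history."""
    n = len(history)
    counts = Counter(trip.hour for trip in history)
    lat = sum(trip.lat for trip in history) / n
    lon = sum(trip.lon for trip in history) / n
    radius = max(distance_km(lat, lon, trip.lat, trip.lon) for trip in history)
    return DriverProfile({h: c / n for h, c in counts.items()}, lat, lon, radius)


def suspicious_reasons(profile: DriverProfile, trip: TripStart,
                       min_hour_share: float = 0.02, radius_margin_km: float = 10.0) -> List[str]:
    """Return the reasons a trip looks unusual; an empty list means no alert."""
    reasons = []
    if profile.hour_share.get(trip.hour, 0.0) < min_hour_share:
        reasons.append("unusual hour")
    distance = distance_km(profile.center_lat, profile.center_lon, trip.lat, trip.lon)
    if distance > profile.radius_km + radius_margin_km:
        reasons.append("outside usual area")
    return reasons
```

Since unusual trips are often legitimate, a flag like this would more plausibly prompt a verification step, such as contacting the owner, than an automatic theft report.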

Telematics technology is an answer to a need, but also a challenge

Millennials and Generation Z expect a holistic customer experience. Digital offerings must bring together a variety of products designed to make life easier and accommodate each individual's consumer personality.

This is exactly the task facing telematics today. Far from being an incomprehensible, distant technology, it is essentially a means of adapting to society's changing expectations regarding services and experiences.

However, the new expectations regarding shared mobility, autonomous vehicles, and personalized, data-driven insurance offers come with new sacrifices that end customers must be prepared to make. These include, for example, the need to share more and more data. Yet the younger generations are already declaring their readiness. According to a Majesco survey, almost half of Generation Z respondents are willing to share data if they see value in doing so. The survey questions also covered insurance based on car and driver data.

Insurers themselves also face massive challenges, as their data processing is still at an early stage. The technological capabilities of individual insurance companies need to be continuously developed. Ideally, driving data and the software used to collect and process it should not be scattered but planned holistically. This ultimately leads to the conscious use of telematics and to better management of situations that require insurance payouts.

Grape Up helps you realize the potential of telematics by applying automotive and insurance industry expertise to create scalable, cloud-native solutions.

Mood-focused car enhancement - driving experience coupled with technological sense

Driving a car must evoke certain emotions and associations. Without them, a vehicle loses its soul, becomes a machine like any other, and finds it extremely hard to win popularity in a market filled to the brim. For years, brands have been striving to build their individual character and stand out with features such as unique design, performance, safety, or high-quality workmanship. With the proliferation of digital technologies, there is now one more element in OEMs' toolkit: mood-building. From now on, drivers themselves can decide how they want to feel at a given moment. It's time for mood-focused car enhancement. Digital technology will allow them to attain this state.

Until now, manufacturers have typically achieved remarkable driving sensations through smooth driving, luxurious interior design, or high-end sound systems. With modern technology, all of these elements can be combined into one seamless, sensory experience. In the vehicles of the future, the installed software will enable holistic experiences that engage different senses, addressing the driver's sensations at both the functional level of the vehicle and the emotional level. Sound, color, scent, temperature, mood lighting, and tactile experiences (such as a massaging driver's seat) can all combine into a one-of-a-kind experience that distinguishes the brand and offers the driver something other manufacturers cannot.

This suggests that sensor technology will become an important differentiator in the user experience, allowing brands to influence purchase decisions more effectively and build consumer loyalty. According to PwC research, 86 percent of buyers are willing to pay more for a better customer experience.

Contextualizing the vehicle according to driving time, who is driving, or what mood they are in is already emerging as a trend set by major car brands.

Just as we personalize our own cell phones or computer accounts, we are beginning to personalize and contextualize our own vehicles. The implementations outlined below are real-life examples of this trend.

Manufacturers are using cloud solutions and AI not only to create new vehicle functionality but also to put us in a specific mood and make driving more enjoyable.

BMW My Modes use case

BMW has recently ventured into a whole new dimension of personalization and driving experience. With its BMW iX model, it is promoting a solution called "My Modes". It offers different color schemes and layouts for the infotainment system, which features a curved display and a digital cockpit. Depending on their mood, users can change the color and sound theme (BMW IconicSounds Electric) in their vehicle.

Two popular modes are worth examining, namely Expressive and Relax. The former focuses on an active driving experience. Abstract patterns and vibrant colors stimulate action, inspire, and encourage broader thinking. The experience is enhanced by interior audio that reflects the context of where you are at a given moment.

The Relax mode, as the name suggests, is designed to promote tranquility and well-being. The images displayed on the screens are inspired by nature and evoke associations of bliss and harmony. This is accompanied by discreet, serene sounds in the background.

https://youtu.be/vg6B0FY3mc4?t=266

Ford Mindfulness concept: Attention (to) safety

Mindfulness. A keyword in automotive safety in the broadest sense, but also, increasingly, in vehicle design. Focusing attention on the present and on real needs is becoming the norm. This approach to on-board technology helps create electrified and autonomous vehicles in which the driver and passengers can travel safely and pleasantly while staying present in the moment. Ford is developing this approach with its Mindfulness Concept Car.

According to Mark Higbie, senior advisor at Ford Motor Company, who helped introduce mindfulness into the Ford workplace: "A car by itself is not mindful. But how a car is used and the behaviors that it supports, can be. Ford’s goal with this concept is to create experiences that encourage greater awareness. With unique features and embedded technologies, Ford is providing drivers and passengers with new ways to be mindful while in a Ford vehicle, anywhere along the road of life."

Features perfectly suited to your needs

The Mindfulness Concept Car is a vehicle that helps reduce distractions and stress, enhance travelers' well-being, and improve hygiene. The latter is especially important given the pandemic reality.

Hygienic = safe

The remote-activated Unlock Purge air conditioning system is designed to give the cabin a shot of clean, fresh air even before you enter the car. A more hygienic environment inside the car is also ensured by UV-C light diodes, which stop viruses and germs from multiplying.

Clean air is facilitated by a premium filter that removes almost all dust, odors, smog, allergens and bacteria-sized particles. It's an option specifically designed with allergy sufferers in mind.

The car that takes care of your health

Modern Ford cars take individual driver characteristics into account, including anything happening in the driver's body that could affect travel safety.

The Mindfulness Concept Car uses data from external measuring devices that collect the driver's physiological data in real time. Feedback on selected health parameters is then displayed on an in-car screen.

Additionally, an electrically activated driver's seat has a stimulating effect on breathing and heart rate.

Relaxing "here and now"

Ford's new concept lets you fully indulge in an experience of tranquility and harmony. Mood lighting combined with climate control creates specific moods inside the cockpit, such as "refreshing dawn", "relaxing blue sky", and "starry night".

The new concept car also provides mindful driving guides. For instance, when the car is parked, the driver is guided through short yoga-based exercises that help relax the body and mind. The Powernap function, on the other hand, comes in handy during breaks on long journeys: a reclining seat, neck support, and soothing sounds help drivers fall asleep in a less stressful environment between stops.

Speaking of relaxation, the adaptive air conditioning also provides calming cool air and simulates deep breathing, especially after a dangerous incident such as emergency braking (which is also supported by smart technology).

Personalized premium audio

Ford's newly developed vehicle hosts a range of new technologies that improve the existing driving experience. This applies, for instance, to the loudspeakers, including the B&O headrest speakers and the overhead speakers, which together provide the finest possible listening experience.