Generative AI for developers - our comparison

So, it begins… Artificial intelligence is coming into play for all of us. It can propose a menu for a party, plan a trip around Italy, draw a poster for a (nonexistent) movie, generate a meme, compose a song, or even "record" a movie. Can Generative AI help developers? Certainly, but…

In this article, we will compare several tools to show their possibilities. We'll show you the pros, cons, risks, and strengths. Are they usable in your case? Well, that is a question you'll need to answer on your own.

The research methodology

It's nearly impossible to compare the available tools using the same criteria. Some are web-based, some are restricted to a specific IDE, some offer a "chat" feature, and others only propose code. We aimed to benchmark the tools on code completion, code generation, code improvement, and code explanation. Beyond that, we were looking for a tool that can "help developers," whatever that means.

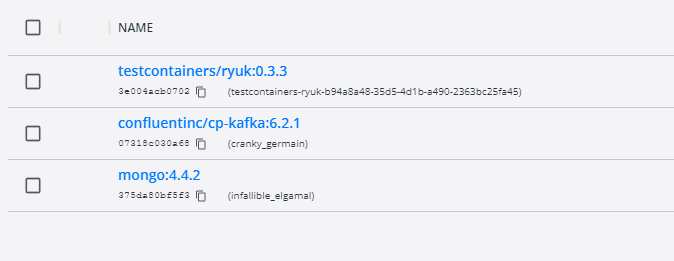

During the research, we tried to write a simple CRUD application and a simple application with puzzling logic, to generate functions based on a name or a comment, to explain a piece of legacy code, and to generate tests. Then we turned to Internet-accessing tools, self-hosted models and their possibilities, and other general-purpose tools.

We tried multiple programming languages: Python, Java, Node.js, Julia, and Rust. We challenged the tools with the following use cases.

CRUD

The test aimed to evaluate whether a tool can help with repetitive, easy tasks. The plan was to build a 3-layer Java application with 3 types (REST model, domain, persistence), interfaces, facades, and mappers. A perfect tool might build the entire application from a prompt, but a good one would at least complete the code as you write.

Business logic

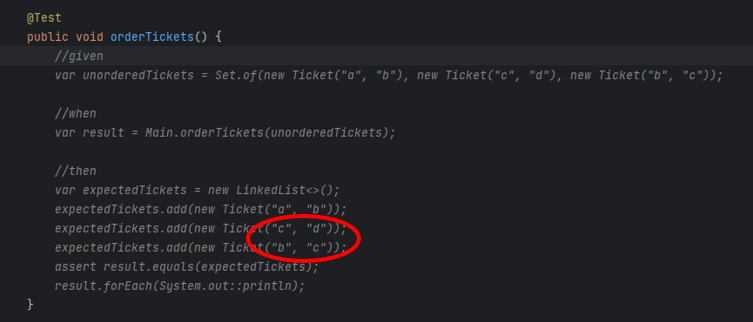

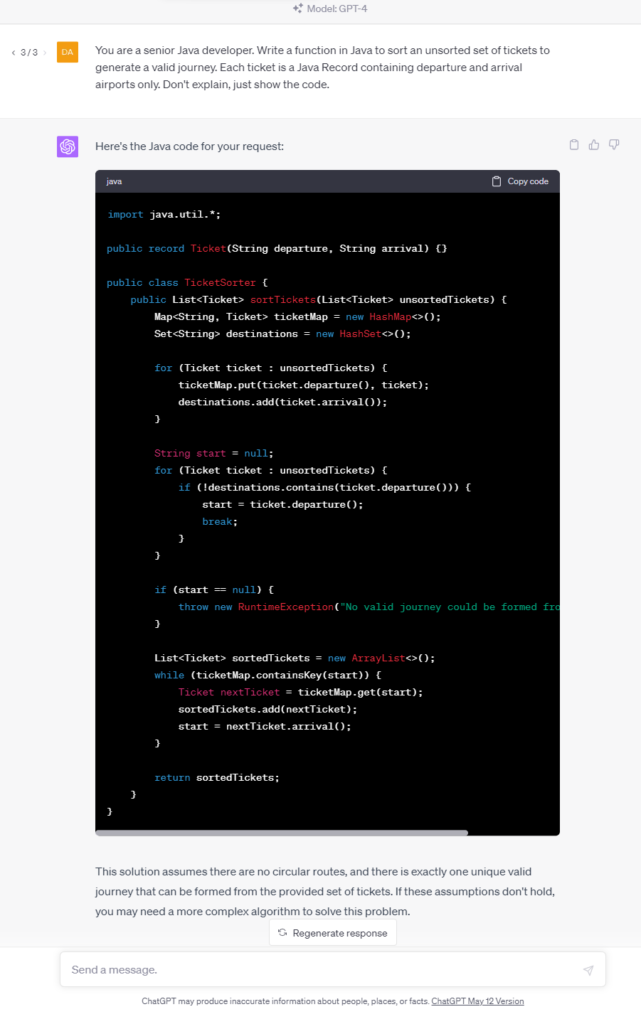

In this test, we write a function to sort a given collection of unsorted tickets to create a route by arrival and departure points, e.g., the given set is Warsaw-Frankfurt, Frankfurt-London, Krakow-Warsaw, and the expected output is Krakow-Warsaw, Warsaw-Frankfurt, Frankfurt-London. The function needs to find the first ticket and then go through all the tickets to find the correct one to continue the journey.
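The approach described above (find the first ticket, then follow each arrival to the next departure) can be sketched in Python as follows. This is only a minimal sketch, not the code produced by any of the tools: the `Ticket` type, the `sort_tickets` name, and the error handling are assumptions made for illustration, and it uses a lookup table instead of rescanning all tickets at every step.

```python
from typing import NamedTuple


class Ticket(NamedTuple):
    departure: str
    arrival: str


def sort_tickets(tickets: list[Ticket]) -> list[Ticket]:
    """Order tickets so that each arrival matches the next departure."""
    if not tickets:
        return []
    by_departure = {t.departure: t for t in tickets}
    arrivals = {t.arrival for t in tickets}
    # The first ticket departs from a city that no other ticket arrives at.
    starts = [t for t in tickets if t.departure not in arrivals]
    if len(starts) != 1:
        raise ValueError("tickets do not form a single route")
    route = [starts[0]]
    # Follow the chain; the length guard prevents endless loops on bad input.
    while route[-1].arrival in by_departure and len(route) < len(tickets):
        route.append(by_departure[route[-1].arrival])
    if len(route) != len(tickets):
        raise ValueError("some tickets are not connected to the route")
    return route


tickets = [
    Ticket("Warsaw", "Frankfurt"),
    Ticket("Frankfurt", "London"),
    Ticket("Krakow", "Warsaw"),
]
route = sort_tickets(tickets)
```

For the example set above, `route` holds Krakow-Warsaw, Warsaw-Frankfurt, Frankfurt-London, in that order.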

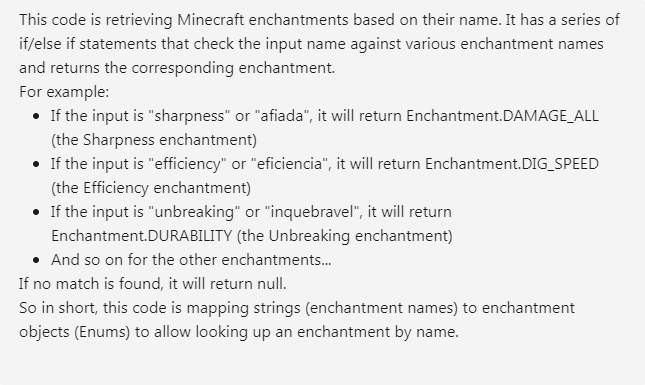

Specific-knowledge logic

This time we require some specific knowledge – the task is to write a function that takes a matrix of 8-bit integers representing an RGB-encoded 10x10 image and returns a matrix of 32-bit floating point numbers standardized with a min-max scaler corresponding to the image converted to grayscale. The tool should handle the standardization and the scaler with all constants on its own.
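For reference, one possible shape of such a function is sketched below in plain Python. The task statement does not name the constants, so the ITU-R BT.601 luma weights (0.299, 0.587, 0.114) are an assumption, as are the pixel layout (rows of `(r, g, b)` tuples) and the function names. The `struct` module is used only to round the results to 32-bit float precision.

```python
import struct

# Assumption: ITU-R BT.601 luma weights; the task does not specify them.
LUMA_WEIGHTS = (0.299, 0.587, 0.114)


def to_float32(value: float) -> float:
    """Round a Python float (64-bit) to the nearest 32-bit float value."""
    return struct.unpack("f", struct.pack("f", value))[0]


def grayscale_min_max(image):
    """Convert rows of (r, g, b) pixels (0..255) to min-max scaled grayscale.

    Returns a matrix of the same shape with values in [0, 1].
    """
    gray = [
        [sum(w * c for w, c in zip(LUMA_WEIGHTS, pixel)) for pixel in row]
        for row in image
    ]
    flat = [v for row in gray for v in row]
    lo, hi = min(flat), max(flat)
    span = hi - lo
    if span == 0:
        # A uniform image has no range to scale; map everything to 0.0.
        return [[0.0 for _ in row] for row in gray]
    return [[to_float32((v - lo) / span) for v in row] for row in gray]


# A 10x10 white image with a single black pixel in the corner.
image = [[(255, 255, 255)] * 10 for _ in range(10)]
image[0][0] = (0, 0, 0)
scaled = grayscale_min_max(image)
```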

Complete application

We ask a tool (if possible) to write an entire "Hello world!" web server or a bookstore CRUD application. It seems to be an easy task given the number of examples on the Internet; however, the output size exceeds most tools' capabilities.

Simple function

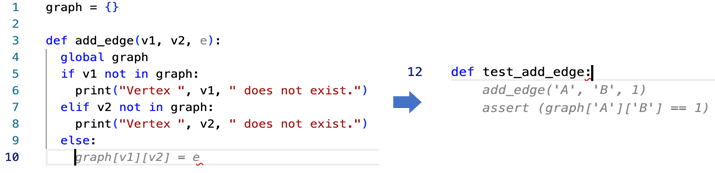

This time we expect the tool to write a simple function: to open a file and lowercase the content, to get the top element from a sorted collection, to add an edge between two nodes in a graph, etc. As developers, we write such functions time and time again, so we wanted our tools to save us time.
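To give a sense of the scale of these tasks, here is a minimal Python sketch of the three functions mentioned. The function names and the adjacency-set graph representation are our assumptions for illustration, not part of the original test prompts.

```python
from collections import defaultdict
from pathlib import Path


def read_lowercased(path):
    """Open a text file and return its content in lowercase."""
    return Path(path).read_text(encoding="utf-8").lower()


def top_element(sorted_items):
    """Return the last element of an ascending-sorted sequence, or None if empty."""
    return sorted_items[-1] if sorted_items else None


def add_edge(graph, a, b):
    """Add an undirected edge between nodes a and b in an adjacency-set graph."""
    graph[a].add(b)
    graph[b].add(a)


graph = defaultdict(set)
add_edge(graph, "A", "B")
```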

Explain and improve

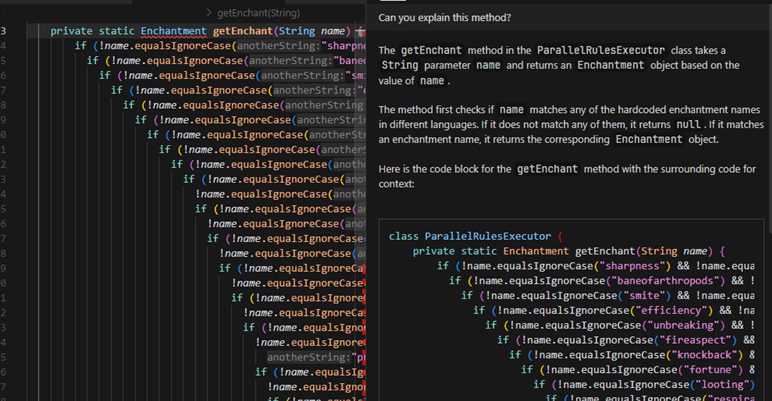

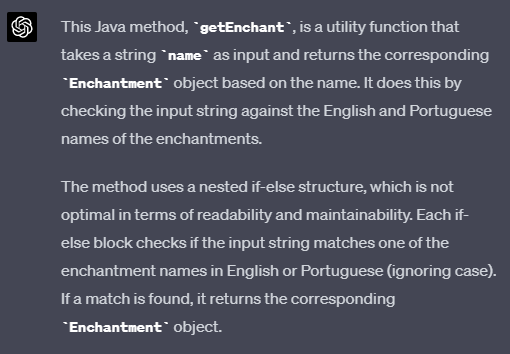

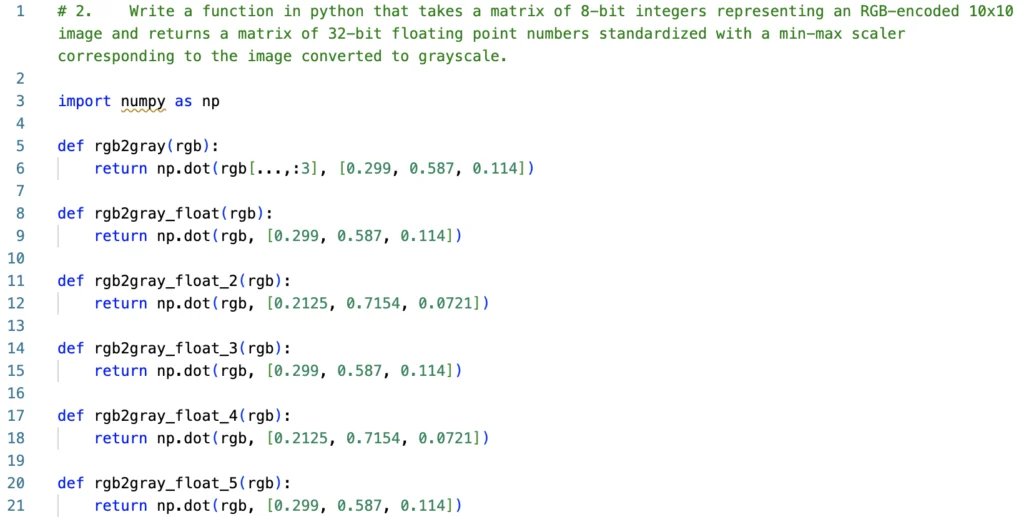

We asked the tool to explain a piece of code:

- A method to run two tasks in parallel, merge results to a collection if successful, or fail fast if any task has failed,

- A typical arrow anti-pattern (example 5 from "9 Bad Java Snippets To Make You Laugh" by Milos Zivkovic, Dev Genius),

- An AWS Route 53 record validation Terraform resource.
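To illustrate the first item, a method of that kind could look roughly like the Python sketch below. The code we actually tested is not reproduced here; the `run_both` name and the thread-pool approach are assumptions, and the `cancel_futures` argument requires Python 3.9 or newer.

```python
from concurrent.futures import FIRST_EXCEPTION, ThreadPoolExecutor, wait


def run_both(task_a, task_b):
    """Run two callables in parallel and merge their list results.

    If either task raises, its exception is re-raised as soon as it is
    noticed, without waiting for the other task to finish.
    """
    executor = ThreadPoolExecutor(max_workers=2)
    futures = [executor.submit(task_a), executor.submit(task_b)]
    try:
        # Returns when a task fails, or when both tasks have completed.
        done, _pending = wait(futures, return_when=FIRST_EXCEPTION)
        for future in done:
            future.result()  # re-raises the task's exception, if any
        merged = []
        for future in futures:  # keep submission order in the merged result
            merged.extend(future.result())
        return merged
    finally:
        # Do not block on a still-running task; it cannot be interrupted,
        # only queued work is cancelled.
        executor.shutdown(wait=False, cancel_futures=True)
```

A call such as `run_both(lambda: [1, 2], lambda: [3])` returns `[1, 2, 3]`.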

If possible, we also asked it to improve the code.

Each time, we also tried to simply spend some time with a tool, write some usual code, generate tests, etc.

The generative AI tools evaluation

Ok, let's begin with the main dish. Which tools are useful and worth further consideration?

Tabnine

Tabnine is an "AI assistant for software developers" – a code completion tool working with many IDEs and languages. It looks like a state-of-the-art solution for 2023 – you can install a plugin for your favorite IDE, and an AI trained on open-source code with permissive licenses will propose the best code for your purposes. However, there are a few unique features of Tabnine.

You can allow it to process your project or your GitHub account for fine-tuning to learn the style and patterns used in your company. Besides that, you don't need to worry about privacy. The authors claim that the tuned model is private, and the code won't be used to improve the global version. If you're not convinced, you can install and run Tabnine on your private network or even on your computer.

The tool costs $12 per user per month, and a free trial is available; however, you're probably more interested in the enterprise version with individual pricing.

The good, the bad, and the ugly

Tabnine is easy to install and works well with IntelliJ IDEA (which is not so obvious for some other tools). It improves standard, built-in code proposals; you can scroll through a few versions and pick the best one. It proposes entire functions or pieces of code quite well, and the proposed-code quality is satisfactory.

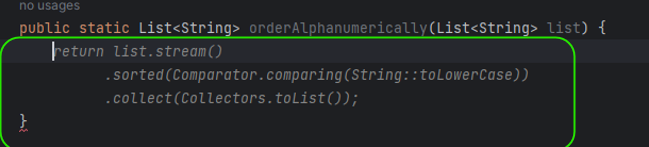

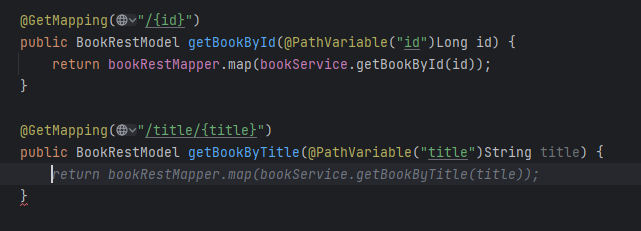

So far, Tabnine seems to be perfect, but there is also the other side of the coin. The problem is the error rate of the generated code. In Figure 2, you can see ticket.arrival() and ticket.departure() invocations. It took me four or five tries before Tabnine realized that Ticket is a Java record and no typical getters are implemented. In all other cases, it generated ticket.getArrival() and ticket.getDeparture(), even though there were no such methods and the compiler reported errors right after the proposal was accepted.

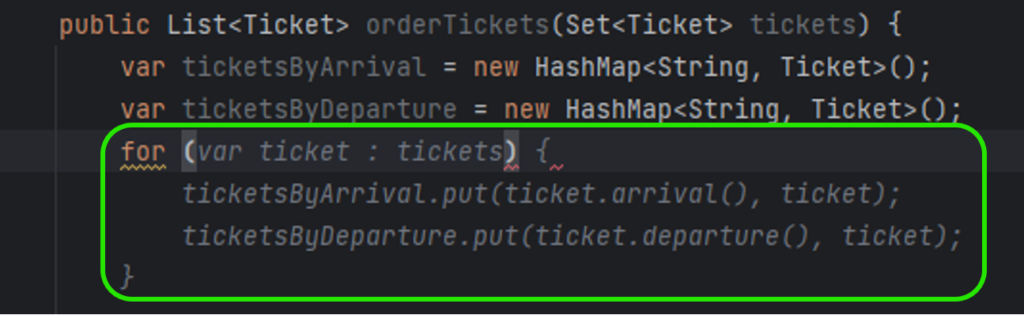

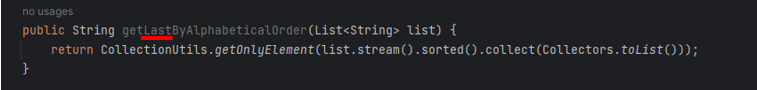

Another time, Tabnine omitted a part of the prompt, and the generated code compiled but was wrong. Here you can find a simple function that looks OK but doesn't do what was intended.

There is one more example – Tabnine used a commented-out function from the same file (the test was already implemented below), but it changed the line order. As a result, the test was not working, and it took a while to determine what was happening.

This leads us to the main issue with Tabnine. It generates simple code, which saves a few seconds each time, but it's unreliable, produces hard-to-find bugs, and validating the generated code takes more time than the generation saves. Moreover, it generates proposals constantly, so the developer spends more time reading proposals than actually creating good code.

Our rating

Conclusion: A mature tool with average possibilities, sometimes too aggressive and obtrusive (annoying), but with a little bit of practice, it may also make work easier

‒ Possibilities 3/5

‒ Correctness 2/5

‒ Easiness 2.5/5

‒ Privacy 5/5

‒ Maturity 4/5

Overall score: 3/5

GitHub Copilot

This tool is state-of-the-art. There are tools "similar to GitHub Copilot," "alternative to GitHub Copilot," and "comparable to GitHub Copilot," and there is GitHub Copilot itself. It is precisely what you think it is – a code-completion tool based on the OpenAI Codex model, which is based on GPT-3 but trained with publicly available sources, including GitHub repositories. You can install it as a plugin for popular IDEs, but you need to enable it on your GitHub account first. A free trial is available, and the standard license costs from $8.33 to $19 per user per month.

The good, the bad, and the ugly

It works just fine. It generates good one-liners and imitates the style of the code around.

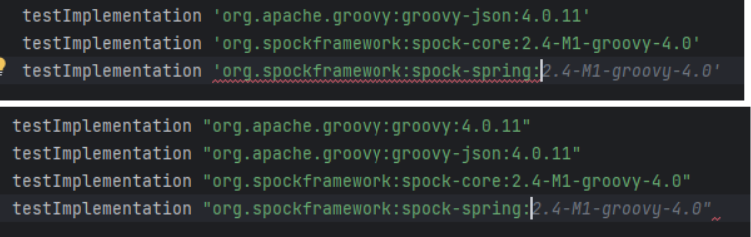

Please note Figure 6: it not only uses closing quotes as needed but also proposes a library in a "guessed" version, as spock-spring.spockgramework.org:2.4-M1-groovy-4.0 is newer than the learning set of the model.

However, the code is not perfect.

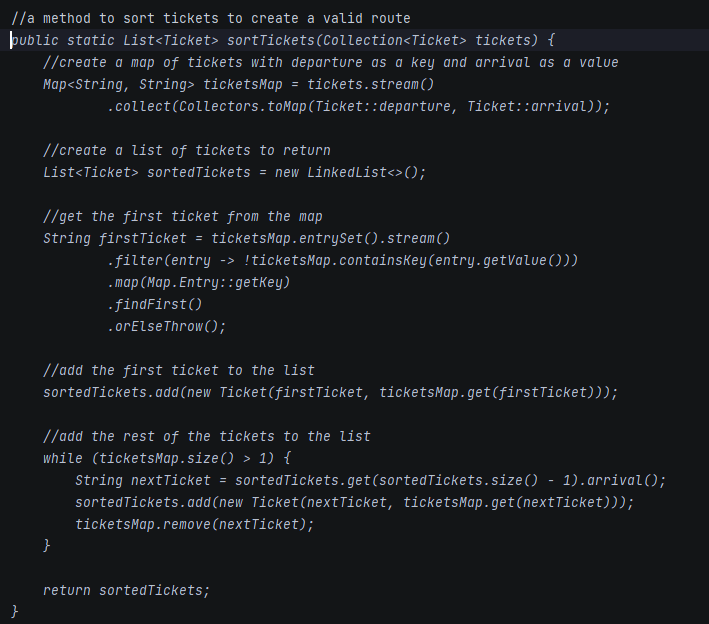

In this test, the tool generated the entire method based on the comment from the first line of the listing. It decided to create a map of departures and arrivals as Strings, to re-create tickets when adding to sortedTickets, and to remove elements from ticketMaps. Simply put, I wouldn't like to maintain such code in my project. GPT-4 and Claude do the same job much better.

The general rule for using this tool is: don't ask it to produce code that is too long. As mentioned above, it is what you think it is, so it's just a copilot that can give you a hand with simple tasks, but you still take responsibility for the most important parts of your project. Compared to Tabnine, GitHub Copilot doesn't propose a bunch of code every few keystrokes, and it produces less readable code but with fewer errors, making it a better companion in everyday life.

Our rating

Conclusion: Generates worse code than GPT-4 and doesn't offer extra functionalities ("explain," "fix bugs," etc.); however, it's unobtrusive, convenient, correct when short code is generated and makes everyday work easier

‒ Possibilities 3/5

‒ Correctness 4/5

‒ Easiness 5/5

‒ Privacy 5/5

‒ Maturity 4/5

Overall score: 4/5

GitHub Copilot Labs

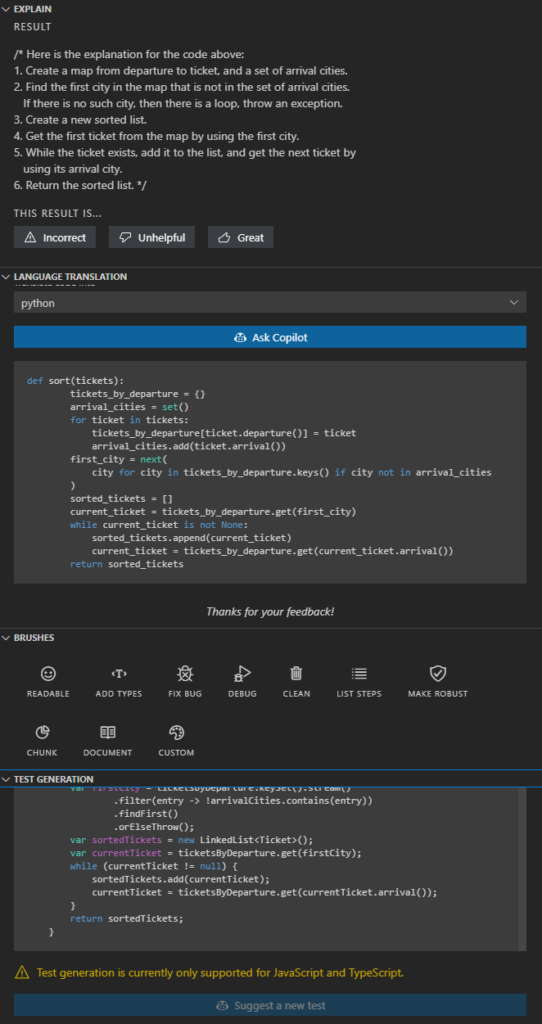

The base GitHub Copilot, as described above, is a simple code-completion tool. However, there is a beta tool called GitHub Copilot Labs. It is a Visual Studio Code plugin providing a set of useful AI-powered functions: explain, language translation, test generation, and brushes (improve readability, add types, fix bugs, clean, list steps, make robust, chunk, and document). It requires a Copilot subscription and offers extra functionalities, no more and no less.

The good, the bad, and the ugly

If you are a Visual Studio Code user and you already use the GitHub Copilot, there is no reason not to use the "Labs" extras. However, you should not trust it. Code explanation works well, code translation is rarely used and sometimes buggy (the Python version of my Java code tries to call non-existing functions, as the context was not considered during translation), brushes work randomly (sometimes well, sometimes badly, sometimes not at all), and test generation works for JS and TS languages only.

Our rating

Conclusion: It's a nice preview of something between Copilot and Copilot X, but it's in the preview stage and works like a beta. If you don't expect too much (and you use Visual Studio Code and GitHub Copilot), it is a tool for you.

‒ Possibilities 4/5

‒ Correctness 2/5

‒ Easiness 5/5

‒ Privacy 5/5

‒ Maturity 1/5

Overall score: 3/5

Cursor

Cursor is a complete IDE forked from the open-source Visual Studio Code project. It uses the OpenAI API in the backend and provides a very straightforward user interface. You can press CTRL+K to generate or edit code from a prompt, or CTRL+L to open a chat within an integrated window with the context of the open file or the selected code fragment. It is as good and as private as the OpenAI models behind it, but remember to disable prompt collection in the settings if you don't want to share your prompts with the entire world.

The good, the bad, and the ugly

Cursor seems to be a very nice tool – it can generate a lot of code from prompts. Be aware that it still requires developer knowledge – "a function to read an mp3 file by name and use OpenAI SDK to call OpenAI API to use 'whisper-1' model to recognize the speech and store the text in a file of same name and txt extension" is not a prompt that your accountant can make. The tool is so good that a developer used to one language can write an entire application in another one. Of course, they (the developer and the tool) can carry over bad habits that don't suit the target language, but that's not the fault of the tool; it's the temptation of the approach.

There are two main disadvantages of Cursor.

Firstly, it uses the OpenAI API, which means it can use at most GPT-3.5 or Codex (as of mid-May 2023, there is no GPT-4 API available yet), which are much worse than even the general-purpose GPT-4. For example, when asked to explain some very bad code, Cursor responded with a very bad answer.

For the same code, GPT-4 and Claude were able to find the purpose of the code and proposed at least two better solutions (with a multi-condition switch case or a collection as a dataset). I would expect a better answer from a developer-tailored tool than a general-purpose web-based chat.

Secondly, Cursor uses Visual Studio Code, but it's not just a branch of it. It's an entire fork, so it can be potentially hard to maintain, as VSC is heavily changed by its community. Besides that, VSC is only as good as its plugins, and it works much better with C, Python, Rust, and even Bash than with Java or browser-interpreted languages. It's common to use specialized, commercial tools for specialized use cases, so I would appreciate Cursor as a plugin for other tools rather than a separate IDE.

There is even a feature available in Cursor to generate an entire project by prompt, but it doesn't work well so far. The tool has been asked to generate a CRUD bookstore in Java 18 with a specific architecture. Still, it has used Java 8, ignored the architecture, and produced an application that doesn't even build due to Gradle issues. To sum up – it's catchy but immature.

The prompt used in the following video is as follows:

"A CRUD Java 18, Spring application with hexagonal architecture, using Gradle, to manage Books. Each book must contain author, title, publisher, release date and release version. Books must be stored in localhost PostgreSQL. CRUD operations available: post, put, patch, delete, get by id, get all, get by title."

https://www.youtube.com/watch?v=Q2czylS2i-E

The main problem is – the feature has worked only once, and we were not able to repeat it.

Our rating

Conclusion: A complete IDE for VS Code fans. Worth watching, but the current version is too immature.

‒ Possibilities 5/5

‒ Correctness 2/5

‒ Easiness 4/5

‒ Privacy 5/5

‒ Maturity 1/5

Overall score: 2/5

Amazon CodeWhisperer

CodeWhisperer is AWS's response to Codex. It works in Cloud9 and AWS Lambda, but also as a plugin for Visual Studio Code and some JetBrains products. It supports 14 languages to some extent, with full support for 5 of them. By the way, most tool tests work better with Python than Java – it seems AI tool creators are Python developers🤔. CodeWhisperer is free so far and can be run on a free tier AWS account (but it requires SSO login) or with AWS Builder ID.

The good, the bad, and the ugly

There are a few positive aspects of CodeWhisperer. It provides an extra code analysis for vulnerabilities and references, and you can control it with usual AWS methods (IAM policies), so you can decide about the tool usage and the code privacy with your standard AWS-related tools.

However, the quality of the model is insufficient. It doesn't understand more complex instructions, and the generated code could be much better.

For example, it has simply failed for the case above, and for the case below, it proposed just a single assertion.

Our rating

Conclusion: Generates worse code than GPT-4/Claude or even Codex (GitHub Copilot), but it's highly integrated with AWS, including permissions/privacy management

‒ Possibilities 2.5/5

‒ Correctness 2.5/5

‒ Easiness 4/5

‒ Privacy 4/5

‒ Maturity 3/5

Overall score: 2.5/5

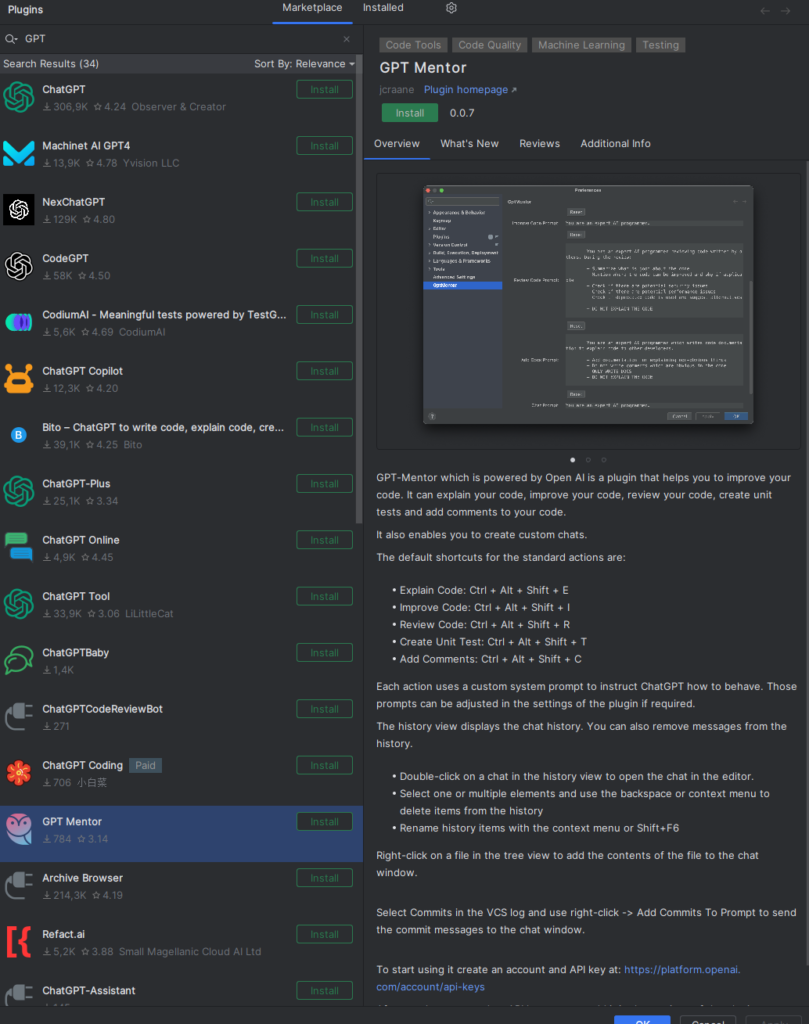

Plugins

As the race for our hearts and wallets has begun, many startups, companies, and freelancers want to participate in it. There are hundreds (or maybe thousands) of plugins for IDEs that send your code to OpenAI API.

You can easily find one that is convenient for you and use it as long as you trust OpenAI and their privacy policy. On the other hand, be aware that your code will be processed by one more tool, maybe open-source, maybe very simple, but it still increases the possibility of code leaks. The proposed solution is to write your own plugin. There is surely room for one more in the world.

Knocked out tools

There are plenty of tools we tried to evaluate that were too basic, too uncertain, too troublesome, or simply deprecated, so we decided to eliminate them before the full evaluation. Here are some interesting examples that we rejected.

Captain Stack

According to the authors, the tool is "somewhat similar to GitHub Copilot's code suggestion," but it doesn't use AI – it queries Google with your prompt, opens the Stack Overflow and GitHub Gist results, and copies the best answer. It sounds promising, but using it takes more time than doing the same thing manually. It often provides no response at all, doesn't provide the context of the code sample (the explanation given by its author), and it failed all our tasks.

IntelliCode

The tool is trained on thousands of open-source projects on GitHub, each with high star ratings. It works with Visual Studio Code only and suffers from poor Mac performance. It is useful but very straightforward: it can find proper code, but it doesn't work well with natural language. You need to provide prompts carefully; the tool seems to be just an indexed-search mechanism with little intelligence behind it.

Kite

Kite was an extremely promising tool in development since 2014, but "was" is the keyword here. The project was closed in 2022, and the authors' farewell post, "Kite is saying farewell," sheds some light on the entire field of developer-friendly Generative AI tools. Simply put, they claimed it's impossible to train state-of-the-art models to understand more than a local context of the code, and it would be extremely expensive to build a production-quality tool like that. Well, we can acknowledge that most tools are not production-quality yet, and the overall reliability of modern AI tools is still quite low.

GPT-Code-Clippy

The GPT-CC is an open-source version of GitHub Copilot. It's free and open, and it uses the Codex model. On the other hand, the tool has been unsupported since the beginning of 2022, and the model is deprecated by OpenAI already, so we can consider this tool part of the Generative AI history.

CodeGeeX

CodeGeeX was published in March 2023 by Tsinghua University's Knowledge Engineering Group under the Apache 2.0 license. According to the authors, it has 13 billion parameters and is trained on public repositories with over 100 stars, in 23 languages. The model can be your self-hosted GitHub Copilot alternative if you have at least an Nvidia RTX 3090, but it's recommended to use an A100 instead.

The online version was occasionally unavailable during the evaluation, and even when it was available, the tool failed on half of our tasks. It didn't even make an attempt; the response from the model was simply empty. Therefore, we decided not to try the offline version and to skip the tool completely.

GPT

The crème de la crème of the comparison is the OpenAI flagship, the generative pre-trained transformer (GPT). There are two important versions available today: GPT-3.5 and GPT-4. The former is free for web users and also available for API users. GPT-4 is much better than its predecessor but is still not generally available for API users. It accepts longer prompts and "remembers" longer conversations. All in all, it generates better answers. You can give GPT-3.5 a chance at any task, but in most cases, GPT-4 does the same but better.

So what can GPT do for developers?

We can ask the chat to generate functions, classes, or entire CI/CD workflows. It can explain the legacy code and propose improvements. It discusses algorithms, generates DB schemas, tests, UML diagrams as code, etc. It can even run a job interview for you, but sometimes it loses the context and starts to chat about everything except the job.

The dark side contains three main aspects so far. Firstly, it produces hard-to-find errors. There may be an unnecessary step in CI/CD, the name of the network interface in a Bash script may not exist, a single column type in SQL DDL may be wrong, etc. Sometimes it takes a lot of work to find and eliminate the error, which matters even more because of the second issue: it pretends to be infallible. It seems so brilliant and trustworthy that it's common to overrate and overtrust it and finally assume that there is no error in the answer. The accuracy and clarity of the answers and the depth of knowledge shown give the impression that you can trust the chat and apply the results without meticulous analysis.

The last issue is much more technical – GPT-3.5 can accept up to 4k tokens which is about 3k words. It's not enough if you want to provide documentation, an extended code context, or even requirements from your customer. GPT-4 offers up to 32k tokens, but it's unavailable via API so far.
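Taking the ratio above (about 4 tokens for every 3 words) at face value, you can roughly check whether a text fits into a context window before sending it. This is only a heuristic sketch: the function names are hypothetical, and a real tokenizer will give different counts, especially for code and non-English text.

```python
# Heuristic from the text above: about 4 tokens for every 3 words.
TOKENS_PER_3_WORDS = 4


def estimate_tokens(text: str) -> int:
    """Estimate the token count from the word count, rounding up."""
    words = len(text.split())
    return (words * TOKENS_PER_3_WORDS + 2) // 3


def fits_context(text: str, limit_tokens: int = 4000) -> bool:
    """Check the estimate against a context limit (4k tokens by default)."""
    return estimate_tokens(text) <= limit_tokens
```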

There is no rating for GPT. It's brilliant and astonishing, yet still unreliable, and it still requires a resourceful operator to write correct prompts and analyze responses. And it makes operators less resourceful with every prompt and response because people get lazy with such a helper. During the evaluation, we started to worry about Sarah Connor and her son, John, because GPT changes the rules of the game, and it is definitely the future.

OpenAI API

Another side of GPT is the OpenAI API. We can distinguish two parts of it.

Chat models

The first part is mostly the same as what you can achieve with the web version. You can use up to GPT-3.5 or some cheaper models if applicable to your case. You need to remember that there is no conversation history, so you need to send the entire chat each time with new prompts. Some models are also not very accurate in "chat" mode and work much better as a "text completion" tool. Instead of asking, "Who was the first president of the United States?" your query should be, "The first president of the United States was." It's a different approach but with similar possibilities.

Using the API instead of the web version may be easier if you want to adapt the model for your purposes (due to technical integration), but it can also give you better responses. You can modify "temperature" parameters making the model stricter (even providing the same results on the same requests) or more random. On the other hand, you're limited to GPT-3.5 so far, so you can't use a better model or longer prompts.
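Because the API keeps no conversation history, the client has to resend the whole chat with every request. The sketch below shows one way to do that bookkeeping. The request body shape (`model`, `messages` with `role` and `content`, `temperature`) follows the chat completions format; the `Conversation` class itself is hypothetical, and nothing is sent over the network here.

```python
class Conversation:
    """Keeps the chat history on the client side (a hypothetical helper)."""

    def __init__(self, model="gpt-3.5-turbo", temperature=0.0):
        self.model = model
        # A low temperature makes answers stricter and more repeatable.
        self.temperature = temperature
        self.messages = []

    def build_request(self, user_prompt):
        """Add the new prompt and return a request body with the full history."""
        self.messages.append({"role": "user", "content": user_prompt})
        return {
            "model": self.model,
            "temperature": self.temperature,
            "messages": list(self.messages),
        }

    def record_reply(self, text):
        """Store the model's answer so the next request includes it."""
        self.messages.append({"role": "assistant", "content": text})


chat = Conversation()
first = chat.build_request("Who was the first president of the United States?")
chat.record_reply("George Washington.")
second = chat.build_request("When was he born?")
```

The second request carries three messages (question, answer, follow-up), which is why long conversations consume the token limit quickly.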

Other-purpose models

There are some other models available via API. You can use Whisper as a speech-to-text converter, Point-E to generate 3D models (point clouds) from prompts, Jukebox to generate music, or CLIP for visual classification. What's important – you can also download those models and run them on your own hardware at your own cost. Just remember that you need a lot of time or powerful hardware to run the models – sometimes both.

There is also one more model not available for downloading – the DALL-E image generator. It generates images by prompts, doesn't work with text and diagrams, and is mostly useless for developers. But it's fancy, just for the record.

The good part of the API is the official library availability for Python and Node.js, some community-maintained libraries for other languages, and the typical, friendly REST API for everybody else.

The bad part of the API is that it's not included in the chat plan, so you pay for each token used. Make sure you have a budget limit configured on your account because using the API can drain your pockets much faster than you expect.

Fine-tuning

Fine-tuning of OpenAI models is de facto a part of the API experience, but it deserves its own section in our deliberations. The idea is simple: you can use a well-known model but feed it with your specific data. It sounds like a cure for the token limit. You want to use a chat with your domain knowledge, e.g., your project documentation, so you need to convert the documentation to a learning set, tune a model, and then you can use the model for your purposes inside your company (the fine-tuned model remains private at the company level).

Well, yes, but actually, no.

There are a few limitations to consider. The first one – the best model you can tune is Davinci, which is like GPT-3.5, so there is no way to use GPT-4-level deduction, cogitation, and reflection. Another issue is the learning set. You need to follow very specific guidelines to provide a learning set as prompt-completion pairs, so you can't simply provide your project documentation or any other complex sources. To achieve better results, you should also keep the prompt-completion approach in further usage instead of a chat-like question-answer conversation. The last issue is cost efficiency. Teaching Davinci with 5MB of data costs about $200, and 5MB is not a great set, so you probably need more data to achieve good results. You can try to reduce cost by using the 10 times cheaper Curie model, but it's also 10 times smaller (more like GPT-3 than GPT-3.5) than Davinci and accepts only 2k tokens for a single question-answer pair in total.
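To make the prompt-completion requirement and the cost more tangible, here is a minimal sketch. It writes pairs as JSON Lines with `prompt` and `completion` keys, and derives a per-megabyte rate from the figures quoted above ($200 for 5MB with Davinci, 10 times less with Curie). The function names and the sample pair are hypothetical, and the rates are our own estimates from the article, not official pricing.

```python
import json

# Rates derived from the article's figures, not from an official price list.
USD_PER_MB = {"davinci": 200 / 5, "curie": 200 / 5 / 10}


def to_jsonl(pairs):
    """Serialize (prompt, completion) pairs, one JSON object per line."""
    lines = [
        json.dumps({"prompt": prompt, "completion": completion}, ensure_ascii=False)
        for prompt, completion in pairs
    ]
    return "\n".join(lines) + "\n"


def estimate_cost_usd(size_mb, model="davinci"):
    """Rough training cost for a learning set of the given size."""
    return size_mb * USD_PER_MB[model]


# Hypothetical learning-set entry built from project documentation.
sample = to_jsonl([("How do I reset the cache?", " Call the reset endpoint.")])
```

Every piece of documentation has to be rewritten into such pairs by hand or by a script, which is why a raw documentation dump cannot be used directly.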

Embedding

Another feature of the API is called embedding. It's a way to change the input data (for example, a very long text) into a multi-dimensional vector. You can consider this vector a representation of your knowledge in a format directly understandable by the AI. You can save such a vector locally and use it in the following scenarios: data visualization, classification, clustering, recommendation, and search. It's a powerful tool for specific use cases and can solve business-related problems. Therefore, it's not a helper tool for developers but a potential base for an engine of a new application for your customer.
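The search scenario, for example, usually comes down to comparing vectors. The sketch below uses cosine similarity over a small in-memory index. The three-dimensional vectors and document names are made up for illustration; real embeddings come from the model and have many more dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def most_similar(query, index):
    """Return the key of the stored vector closest to the query vector."""
    return max(index, key=lambda name: cosine_similarity(query, index[name]))


# Hypothetical embeddings of two documents, stored locally.
index = {
    "billing.md": [1.0, 0.0, 0.0],
    "login.md": [0.0, 1.0, 0.0],
}
best = most_similar([0.9, 0.1, 0.0], index)
```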

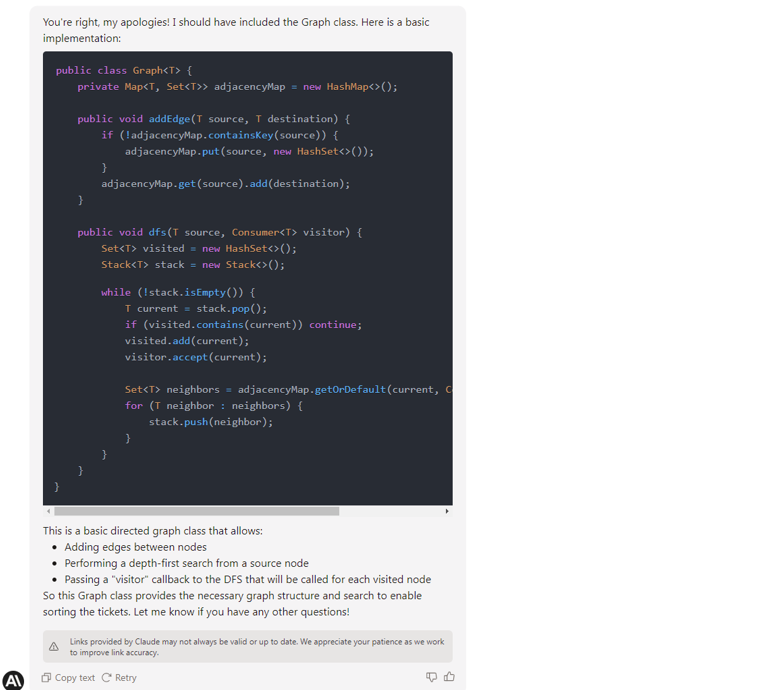

Claude

Claude from Anthropic, a company founded by ex-employees of OpenAI, is a direct answer to GPT-4. It offers a bigger maximum token size (100k vs. 32k), and it's trained to be trustworthy, harmless, and better protected from hallucinations. It's trained using data up to spring 2021, so you can't expect the newest knowledge from it. However, it passed all our tests, works much faster than the web GPT-4, and you can provide a huge context with your prompts. For some reason, it produces more sophisticated code than GPT-4, but it's up to you to pick the one you like more.

If needed, a Claude API is available with official libraries for some popular languages and the REST API version. There are some shortcuts in the documentation, the web UI has some formatting issues, there is no free version available, and you need to be manually approved to get access to the tool, but we assume all of those are just teething problems.

Claude is so new that it's really hard to say whether it is better or worse than GPT-4 as a developer's helper, but it's definitely comparable, and you should probably give it a shot.

Unfortunately, the privacy policy of Anthropic is quite confusing, so we don't recommend posting confidential information to the chat yet.

Internet-accessing generative AI tools

The main disadvantage of ChatGPT, raised ever since it became generally available, is its lack of knowledge about recent events, news, and modern history. This is already partially fixed, as you can now feed the context of the prompt with Internet search results. There are three tools worth considering for such usage.

Microsoft Bing

Microsoft Bing was the first AI-powered Internet search engine. It uses GPT to analyze prompts and to extract information from web pages; however, it works significantly worse than pure GPT. It failed almost all our programming evaluations, and it falls into an infinite loop of the same answers if the problem is obscure. On the other hand, it provides references to the sources of its knowledge, can read transcripts from YouTube videos, and can aggregate the newest Internet content.

Chat-GPT with Internet access

The new mode of Chat-GPT (rolling out for premium users in mid-May 2023) can browse the Internet and scrape web pages looking for answers. It provides references and shows visited pages. It seems to work better than Bing, probably because it's powered by GPT-4 rather than GPT-3.5. It also uses the model first and calls the Internet only if it can't provide a good answer to the question based solely on its training data.

It usually provides better answers than Bing and may provide better answers than the offline GPT-4 model. It works well with questions you could answer yourself with an old-fashioned search engine (Google, Bing, whatever) within one minute, but it usually fails with more complex tasks. It's quite slow, but you can track the query's progress in the UI.

Importantly, and you should keep this in mind, Chat-GPT sometimes provides better responses with offline hallucinations than with Internet access.

For all those reasons, we don't recommend using Microsoft Bing and Chat-GPT with Internet access for everyday information-finding tasks. You should only take those tools as a curiosity and query Google by yourself.

Perplexity

At first glance, Perplexity works in the same way as both tools mentioned – it uses the Bing API and the OpenAI API to search the Internet with the power of the GPT model. On the other hand, it offers search area limitations (academic resources only, Wikipedia, Reddit, etc.), and it deals with the issue of hallucinations by strongly emphasizing citations and references. Therefore, you can expect stricter answers and more reliable references, which can help you when looking for something online. You can use a public version of the tool, which uses GPT-3.5, or you can sign up and use the enhanced GPT-4-based version.

We found Perplexity better than Bing and Chat-GPT with Internet Access in our evaluation tasks. It's as good as the model behind it (GPT-3.5 or GPT-4), but filtering references and emphasizing them does the job regarding the tool's reliability.

As of mid-May 2023, the tool is still free.

Google Bard

It's a pity, but at the time of writing, Google's answer to GPT-powered Bing and GPT itself is still not available in Poland, so we can't evaluate it without hacky solutions (VPN).

Using Internet access in general

If you want to use a generative AI model with Internet access, we recommend using Perplexity. However, you need to keep in mind that all those tools are based on Internet search engines, which in turn are based on complex and expensive page positioning systems. Therefore, the answer "given by the AI" is, in fact, a result of marketing actions that bring some pages above others in search results. In other words, the answer may suffer from lower-quality data sources published by big players instead of better-quality ones from independent creators. Moreover, page-scraping mechanisms are not perfect yet, so you can expect a lot of errors when using the tools, causing unreliable answers or no answers at all.

Offline models

If you don't trust legal assurances and you are still concerned about the privacy and security of all the tools mentioned above, and you want a technical guarantee that all prompts and responses belong to you only, you can consider self-hosting a generative AI model on your hardware. We've already mentioned 4 models from OpenAI (Whisper, Point-E, Jukebox, and CLIP), Tabnine, and CodeGeeX, but there are also a few general-purpose models worth considering. All of them are claimed to be best-in-class and similar to OpenAI's GPT, but that's not entirely true.

Only models that are free for commercial use are listed below. We’ve focused on pre-trained models, but you can train or just fine-tune them if needed. Just remember that training may be even 100 times more resource-consuming than usage.

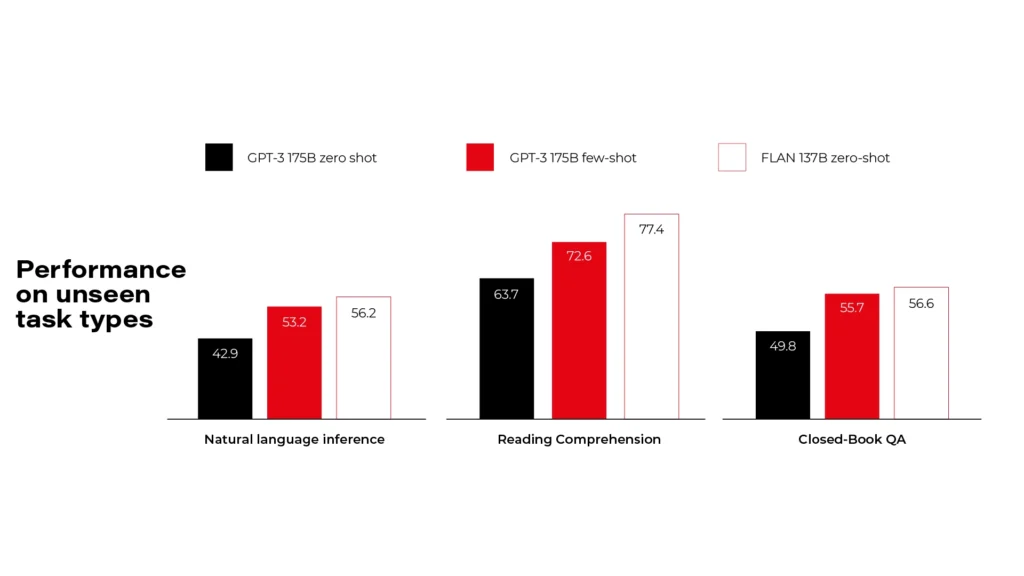

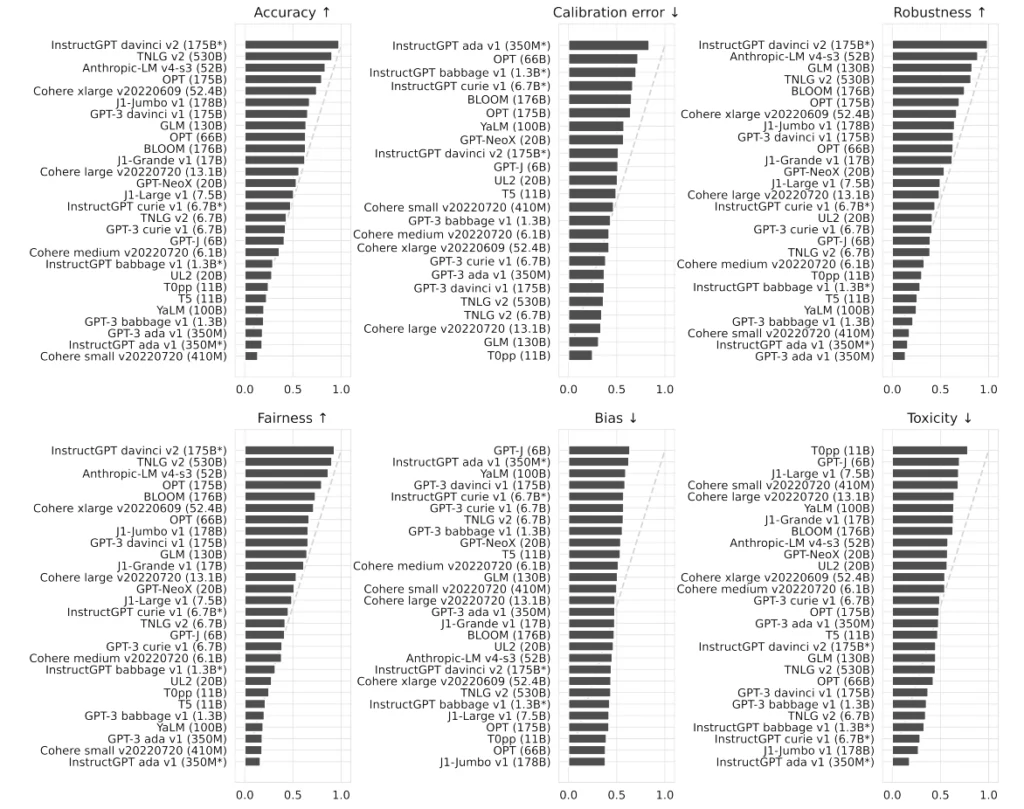

Flan-UL2 and Flan-T5-XXL

Flan models are made by Google and released under the Apache 2.0 license. There are more versions available, but you need to pick a compromise between your hardware resources and the model size. Flan-UL2 and Flan-T5-XXL use 20 billion and 11 billion parameters and require 4x Nvidia T4 or 1x Nvidia A6000, respectively. As you can see in the diagrams, they're comparable to GPT-3, so they're far behind the GPT-4 level.

BLOOM

BigScience Large Open-Science Open-Access Multilingual Language Model is the joint work of over 1000 scientists. It uses 176 billion parameters and requires at least 8x Nvidia A100 cards. Even though it's much bigger than Flan, it's still only comparable to OpenAI's GPT-3 in tests. Still, it's the best model you can self-host for free that we've found so far.

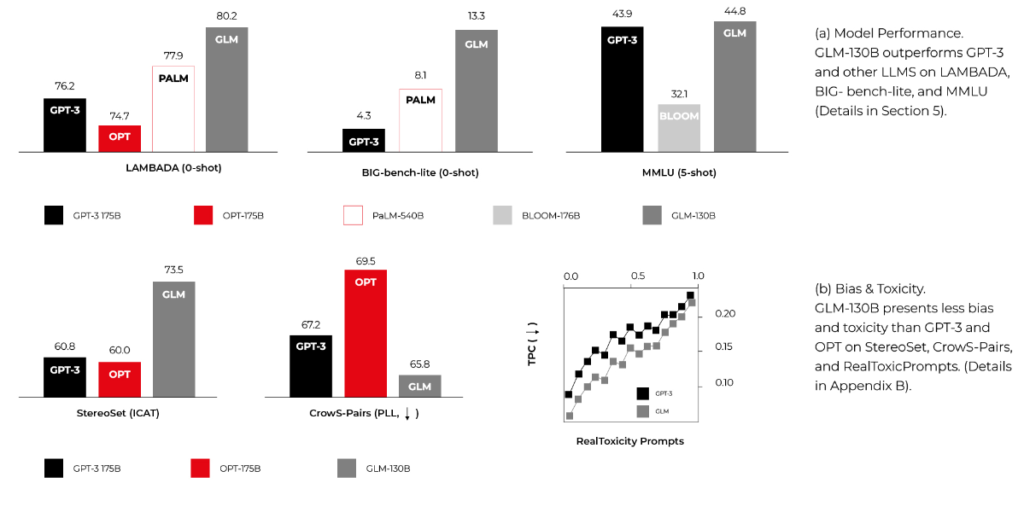

GLM-130B

General Language Model with 130 billion parameters, published by the CodeGeeX authors. It requires computing power similar to BLOOM and can outperform it in some MMLU benchmarks. It's smaller and faster because it's bilingual only (English and Chinese), but it may be enough for your use cases.
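A rough way to relate the parameter counts above to hardware is to estimate the memory taken by the weights alone. The sketch below assumes 16-bit weights (2 bytes per parameter), which is our assumption and not something the model authors mandate, and it ignores activations and any runtime overhead, so real requirements are higher. The class and method names are illustrative.

```java
// Illustrative sketch: estimates memory for model weights only.
// Assumption: 2 bytes per parameter (16-bit weights); real deployments may differ.
final class WeightMemorySketch {

    /** Gigabytes (10^9 bytes) needed to hold the weights alone. */
    static double weightsGigabytes(double billionsOfParameters, int bytesPerParameter) {
        // (billions * 10^9 parameters * bytes) / 10^9 bytes per GB
        return billionsOfParameters * bytesPerParameter;
    }

    public static void main(String[] args) {
        String[] names = {"Flan-T5-XXL", "Flan-UL2", "GLM-130B", "BLOOM"};
        double[] billions = {11, 20, 130, 176}; // parameter counts quoted in the text
        for (int i = 0; i < names.length; i++) {
            System.out.println(names[i] + ": ~" + weightsGigabytes(billions[i], 2) + " GB of weights");
        }
    }
}
```

Under that assumption, the two largest models need hundreds of gigabytes for weights alone, which is why they call for multi-GPU servers.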

Summary

When we approached the research, we were worried about the future of developers. There are a lot of clickbait articles on the Internet showing Generative AI creating entire applications from prompts within seconds. Now we know that at least our near future is secured.

We need to remember that code is the best product specification possible, and the creation of good code is possible only with a good requirement specification. As business requirements are never as precise as they should be, replacing developers with machines is impossible. Yet.

However, some tools may be really advantageous and make our work faster. Using GitHub Copilot may increase the productivity of the main part of our job – code writing. Using Perplexity, GPT-4, or Claude may help us solve problems. There are some models and tools (for developers and general purposes) that let you work with full confidentiality, even technically enforced. The near future is bright – we expect GitHub Copilot X to be much better than its predecessor, we expect general-purpose language models to be more precise and helpful, including better usage of Internet resources, and we expect more and more tools to show up in the coming years, making the AI race more compelling.

On the other hand, we need to remember that each helper (a human or machine one) takes some of our independence, making us dull and idle. It can change the entire human race in the foreseeable future. Besides that, Generative AI tools consume a lot of energy and run on hardware built from rare metals, so they can drain our pockets now and impact our planet soon.

This article has been 100% written by humans up to this point, but you can definitely expect less of that in the future.

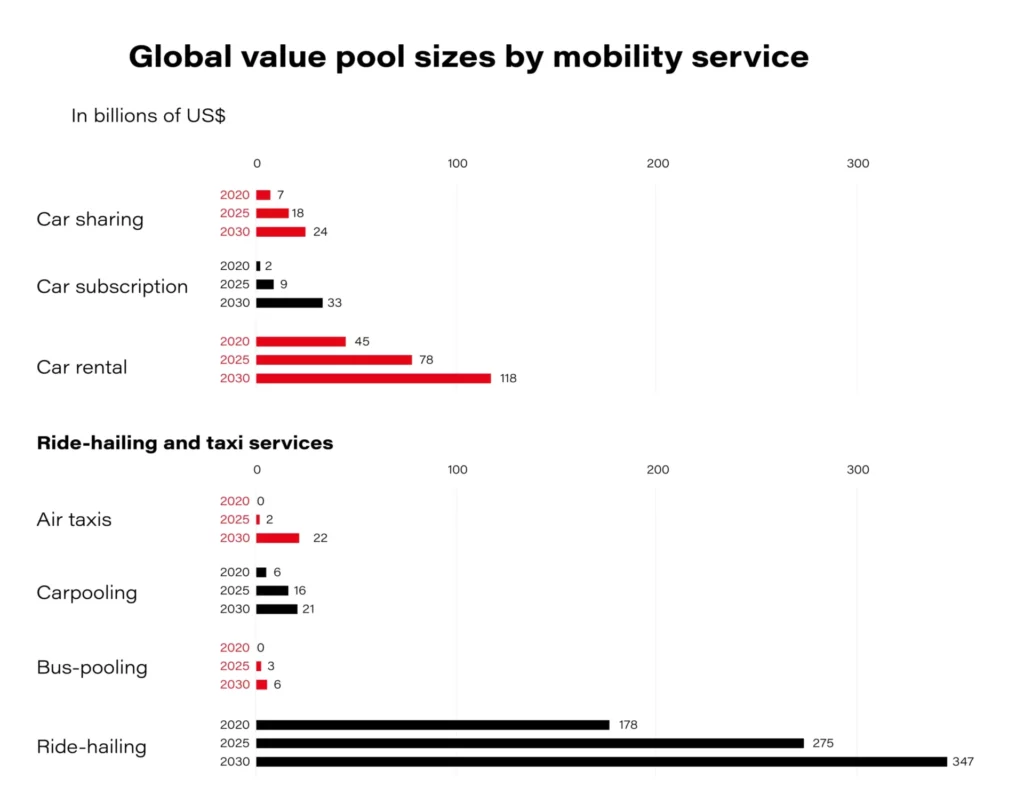

Dynamic pricing: How car rentals use connected car data to increase revenue

By 2025, over 400 million connected cars will be on the road. Car rentals and fleet managers can use connected car data to manage their vehicles more effectively and increase revenue. A huge part of that approach is related to dynamic pricing – a data-driven technique enabling you to set the best prices for your service. Let’s have a look at how your business can benefit from dynamic pricing and connected car data.

Vehicles generate tons of valuable information. Most of it comes from their engine control units (ECUs) that collect data from many different sensors within the engine and controller area networks that enable microcontrollers and devices to communicate.

Thanks to data coming from these and other sources, the car rental company can have immediate access to telemetry data, including:

- The specific vehicle’s location

- Its current engine status and speed

- The vehicle’s status (e.g., if the car is locked) etc.

As a derivative of the telemetry data, you can also understand the driving style of a given driver.

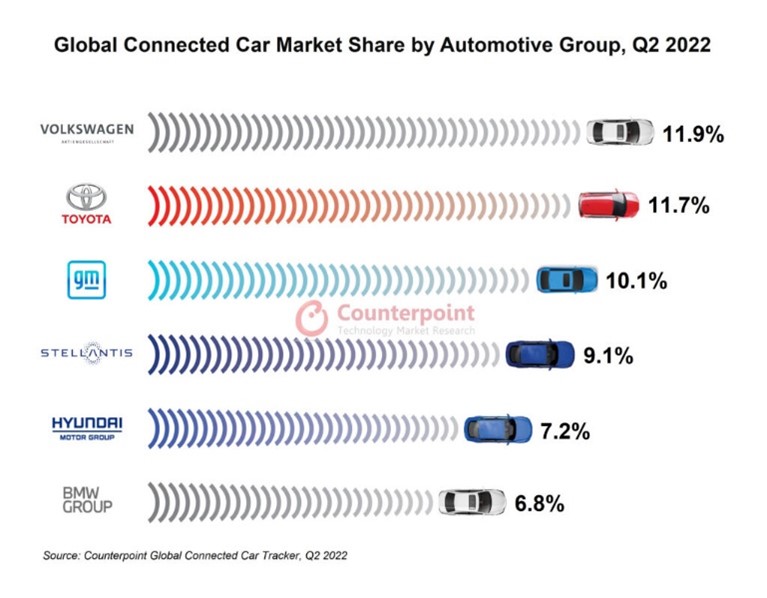

Interestingly, connected car penetration has already surpassed that of non-connected cars (over 50% market share in Q2 2022) [1].

Because connected car data provides automotive businesses with useful input (especially when combined with web and market data), car rentals and fleet managers can use it to adjust their offers and, thus, grow revenue. Here, dynamic pricing is the most prominent solution.

What is dynamic pricing?

In a nutshell, it’s a data-driven strategy that exploits intelligent algorithms (frequently based on machine learning and automation) to set and maintain the best prices within specific market conditions.

Dynamic pricing algorithms continually analyze the available data (coming from the website, the market, and the vehicles themselves) and use it to automatically adjust prices and other service conditions available on your website or in your app.

As a result, prices for renting a car can be optimized multiple times a week (or even a day) depending on:

- Current demand and car availability

- Time of day

- Traffic conditions

- Fuel prices

- Previous driving history of a given user

- And even the likelihood that a given person will be happy to pay more for the service (e.g., because they are running out of battery in their cellphone and they need to arrange transportation quickly)
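To make the idea concrete, here is a minimal rule-based sketch in Java. It is not how any particular rental company or rideshare provider computes prices: the factor names, weights, and clamping bounds are illustrative assumptions, and a production system would typically learn them from data instead of hard-coding them.

```java
// Illustrative sketch only: all weights and bounds below are made-up assumptions.
final class DynamicPricingSketch {

    /** Returns a daily rental price adjusted for demand, fuel cost, and driver history. */
    static double dailyPrice(double basePrice,
                             int carsAvailable,
                             int carsRequested,
                             double fuelPriceChange, // 0.10 means fuel is 10% above the baseline
                             boolean safeDriver) {
        // Demand factor: ratio of requests to available cars, clamped to [0.8, 1.5].
        double ratio = carsAvailable == 0 ? 1.5 : (double) carsRequested / carsAvailable;
        double demandFactor = Math.max(0.8, Math.min(1.5, ratio));

        // Pass on half of the fuel price change (assumed policy).
        double fuelFactor = 1.0 + 0.5 * fuelPriceChange;

        // Reward a proven safe driving history with a 10% discount (assumed policy).
        double driverFactor = safeDriver ? 0.9 : 1.0;

        double price = basePrice * demandFactor * fuelFactor * driverFactor;
        // Round to two decimal places.
        return Math.round(price * 100.0) / 100.0;
    }

    public static void main(String[] args) {
        // High demand, slightly pricier fuel, safe driver.
        System.out.println(dailyPrice(100.0, 10, 20, 0.10, true));
        // Low demand, unchanged fuel price, no known driving history.
        System.out.println(dailyPrice(100.0, 20, 10, 0.0, false));
    }
}
```

Clamping the demand factor keeps a sudden spike in requests from producing extreme prices; where exactly to set such bounds is a business decision.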

Dynamic pricing is prevalent among large car rentals, rideshare companies, and mobility-as-a-service providers, such as Uber. And speaking of Uber, some time ago, Forbes published an article explaining how Uber’s pricing works. They use an advanced dynamic pricing algorithm based on AI and multiple price points to determine the optimal price each user sees in their app.

As a result, Uber can charge the optimal rate for every ride, which helps them make more money. A similar solution can be introduced in any car rental company.

But the price of the service is just one puzzle piece. When it comes to car rentals, there are other conditions and fees renters have to be aware of before signing on the dotted line. Here, connected car data can also be of help! Let’s dig a bit deeper.

Dynamic pricing, connected car data, and the question of the insurance

Renting a car involves additional fees, primarily insurance, which is almost always mandatory. It stands to reason that this fee should also depend on a given driver, their experience, and their driving habits.

Insurance companies have been collecting data about drivers’ behaviors for years. And yes, they’ve been using it to calculate insurance premiums and offer discounts (so-called usage-based insurance – UBI). Today, it’s possible thanks to mobile applications that have to be always on when driving a car. Such an app can track each driver’s behavior on the road. Soon, though, connected car data will replace these apps altogether.

Although this idea is still in its infancy, we can expect it to become feasible on a large scale soon, especially given that the number of connected vehicles is continually going up (the global connected car market size is projected to reach almost USD 192 billion by 2028 – CAGR of 18.1% [2]).

The first applications enabling the implementation of dynamic pricing in car insurance are already here. Thanks to millions of connected cars offering trillions of data points, car rental companies can understand their customers and their driving behaviors.

This knowledge can be used to offer cheaper insurance and other rental fees to renters with a proven history of safe driving. Another idea worth considering is using data from connected vehicles to improve reward and loyalty programs (a safe driver could get discounts to rent a car or get additional loyalty points).

However, there are still some challenges that need to be addressed.

The challenges of making the most of connected car data...

As McKinsey explains in their recent report, “many OEMs have struggled with connectivity or related software developments, resulting in poor customer reviews and delayed start of production”. Car manufacturers and other OEMs struggle with convincing customers that car-connectivity services deliver additional value. Add poor execution of services and communication issues to the mix, and it becomes obvious why consumers are still a bit reluctant towards such services. It’s the same story with usage-based insurance.

In 2021, a survey on UBI was conducted in Canada. 77% of Canadians were concerned about potential rate hikes, and 51% were hesitant in case it negatively affected their current insurance rates [3].

And then, there is the data management issue. McKinsey estimates you need to access 1 to 2 terabytes of raw data per car each day to fully benefit from connected car data. That means huge data centers capable of processing all that information daily.

…and the inevitable future

The future of the automotive industry is software-centric, and car rentals and fleet management companies are no exception. As the number of connected vehicles goes up, we will be able to benefit from more advanced data-driven solutions.

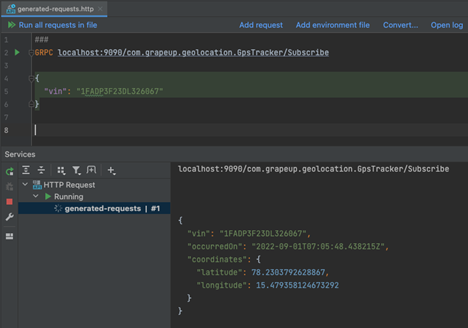

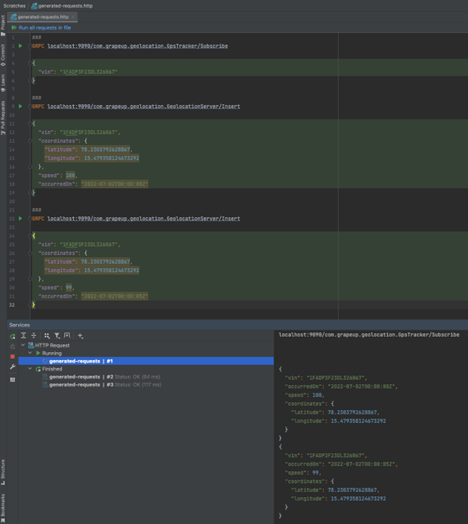

At GrapeUp, we tirelessly work on them every day! We develop custom solutions for both OEMs and car rental companies that enable collecting data, seamless processing, and even distributing it further. All to allow you to make more money.

If you run a car rental company, we can help you implement the solutions discussed in this article. To find out more, see our offer for the automotive sector.

[1] https://www.counterpointresearch.com/global-connected-car-market-q2-2022/

[2] https://www.globenewswire.com/en/news-release/2022/08/17/2499966/0/en/Global-Connected-Car-Market-Size-to-Hit-USD-191-83-Billion-at-a-CAGR-of-18-1-for-2021-2028-Fortune-Business-Insights.html

[3] https://www.ratehub.ca/blog/ubi-saves-money-but-87-per-cent-not-trying-survey-data/

Dependency injection in Cucumber-JVM: Sharing state between step definition classes

It's an obvious fact for anyone who's been using Cucumber for Java in test automation that steps need to be defined inside a class. Passing test state from one step definition to another can be easily achieved using instance variables, but that only works for elementary and small projects. In any situation where writing Cucumber scenarios is part of a non-trivial software delivery endeavor, Dependency Injection (DI) is the preferred (and usually necessary!) solution. After reading the article below, you'll learn why that's the case and how to quickly implement DI in your Cucumber-JVM tests.

Preface

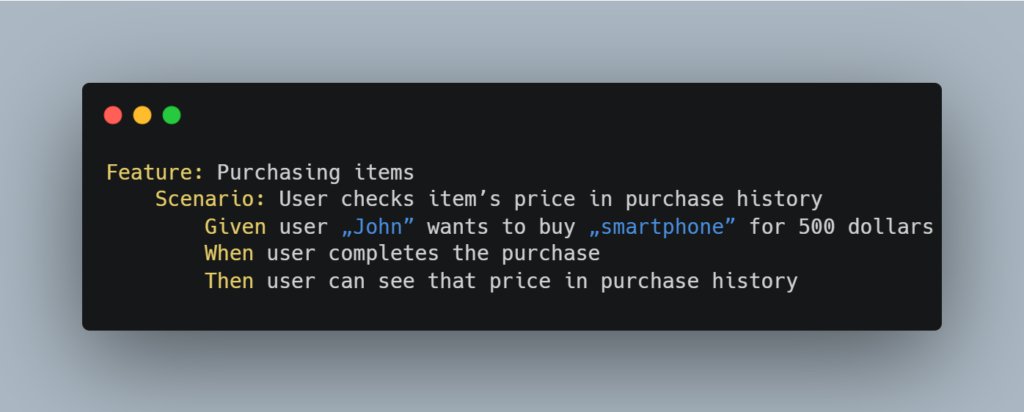

Let's have a look at the following scenario written in Gherkin:

If we assume that it's part of a small test suite, then its implementation using step definitions within the Cucumber-JVM framework could look like this:

In the example above, the data is passed between step definitions (methods) through instance variables. This works because the methods are in the same class – PurchaseProcess, since instance variables are generally accessible only inside the same class that declares them.
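A minimal plain-Java sketch of the same idea follows. The Cucumber annotations are left out so that it compiles without Cucumber on the classpath, and the field and method names (other than the PurchaseProcess class name) are illustrative assumptions, not the original step definitions.

```java
// Sketch: in a real suite the methods below would carry Cucumber step annotations.
// Field and method names are illustrative assumptions.
final class PurchaseProcess {
    // Instance variables hold the scenario state shared by all steps in this class.
    private String product;
    private int price;

    // Would be a "Given"/"When" step: stores state for later steps.
    void customerPicksProduct(String product, int price) {
        this.product = product;
        this.price = price;
    }

    // Would be a "Then" step: reads what the earlier step stored.
    String orderSummary() {
        return product + " for " + price;
    }
}

final class SingleClassDemo {
    public static void main(String[] args) {
        PurchaseProcess steps = new PurchaseProcess();
        steps.customerPicksProduct("book", 20);
        System.out.println(steps.orderSummary()); // prints "book for 20"
    }
}
```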

Problem

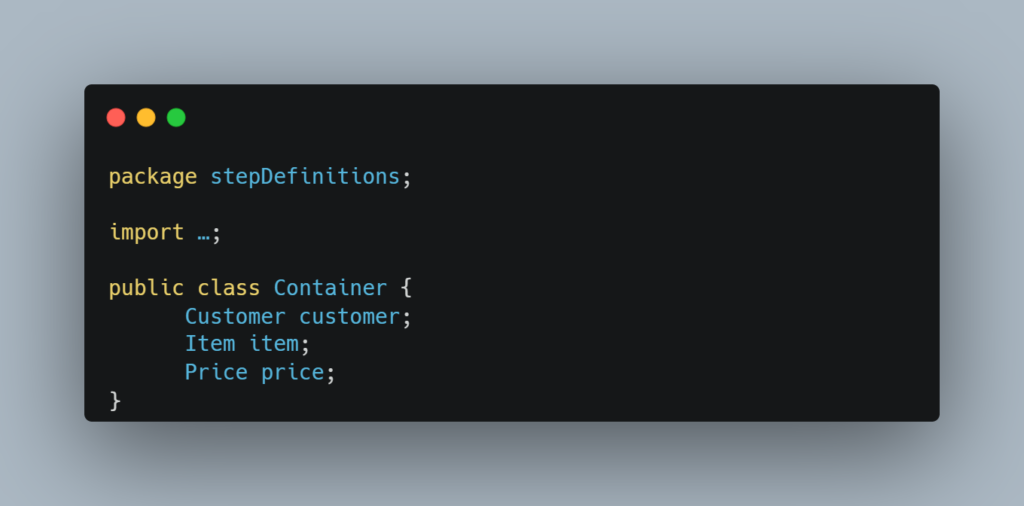

The number of step definitions grows when the number of Cucumber scenarios grows. Sooner or later, this forces us to split our steps into multiple classes - to maintain code readability and maintainability, among other reasons. Applying this truism to the previous example might result in something like this:

But now we face a problem: the checkPriceInHistory method moved into the newly created PurchaseHistory class can't freely access data stored in instance variables of its original PurchaseProcess class.

Solution

So how do we go about solving this pickle? The answer is Dependency Injection (DI) – the recommended way of sharing the state between steps in Cucumber-JVM.

If you're unfamiliar with this concept, then go by Wikipedia's definition:

"In software engineering, dependency injection is a design pattern in which an object or function receives other objects or functions that it depends on. A form of inversion of control, dependency injection aims to separate the concerns of constructing and using objects, leading to loosely coupled programs. The pattern ensures that an object or function which wants to use a given service should not have to know how to construct those services. Instead, the receiving 'client' (object or function) is provided with its dependencies by external code (an 'injector'), which it is not aware of." [1]

In the context of Cucumber, to use dependency injection is to "inject a common object in each class with steps. An object that is recreated every time a new scenario is executed." [2]

Thus Comes PicoContainer

JVM implementation of Cucumber supports several DI modules: PicoContainer, Spring, Guice, OpenEJB, Weld, and Needle. PicoContainer is recommended if your application doesn't already use another one. [3]

The main benefits of using PicoContainer over other DI modules stem from it being tiny and simple:

- It doesn't require any configuration

- It doesn't require your classes to use any APIs

- It only has a single feature – it instantiates objects [4]

Implementation

To use PicoContainer with Maven, add the following dependency to your pom.xml :

<dependency>

<groupId>io.cucumber</groupId>

<artifactId>cucumber-picocontainer</artifactId>

<version>7.8.1</version>

<scope>test</scope>

</dependency>

If using Gradle, add the following line to the dependencies block of your build.gradle file:

testImplementation group: 'io.cucumber', name: 'cucumber-picocontainer', version: '7.8.1'

Now let's go back to our example code. The implementation of DI using PicoContainer is pretty straightforward. First, we have to create a container class that will hold the common data:

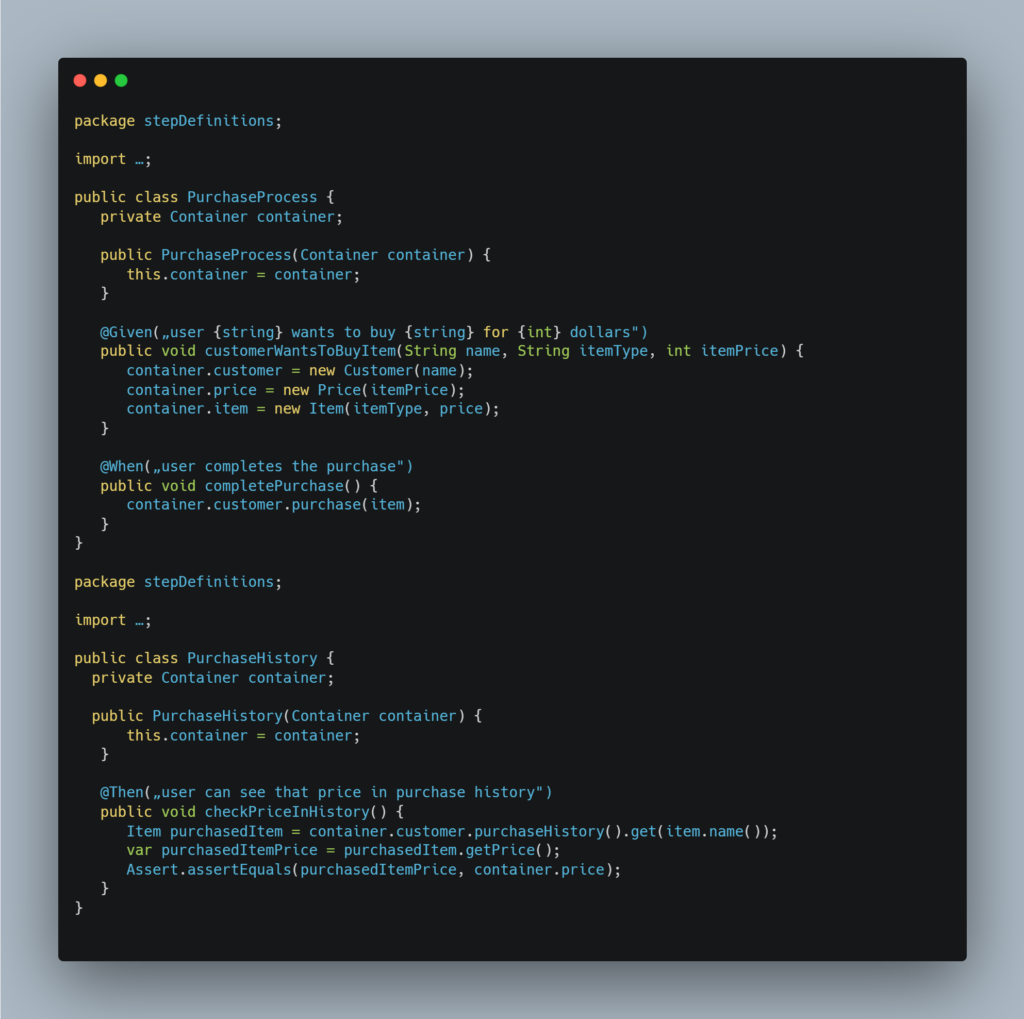

Then we need to add constructor injection to the PurchaseProcess and PurchaseHistory classes. This boils down to the following:

- creating a reference variable of the Container class in the current step classes

- initializing the reference variable through a constructor

Once the changes above are applied, the example should look like this:
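A minimal sketch of that shape in plain Java is given here. The Cucumber annotations are omitted so it compiles on its own, the fields of Container and the step method bodies are illustrative assumptions, and the demo class wires the objects by hand to imitate what the DI module does for every scenario.

```java
// Shared state holder; with PicoContainer, one instance is created per scenario.
// The fields are illustrative assumptions.
final class Container {
    String product;
    int price;
}

final class PurchaseProcess {
    private final Container container;

    // Constructor injection: the DI module supplies the Container.
    PurchaseProcess(Container container) {
        this.container = container;
    }

    // Would be a Cucumber step in a real suite.
    void customerBuysProduct(String product, int price) {
        container.product = product;
        container.price = price;
    }
}

final class PurchaseHistory {
    private final Container container;

    PurchaseHistory(Container container) {
        this.container = container;
    }

    // Reads state written by a step that lives in another class.
    boolean checkPriceInHistory(int expectedPrice) {
        return container.price == expectedPrice;
    }
}

final class InjectionDemo {
    public static void main(String[] args) {
        // Hand-wiring that stands in for the DI module.
        Container shared = new Container();
        PurchaseProcess process = new PurchaseProcess(shared);
        PurchaseHistory history = new PurchaseHistory(shared);

        process.customerBuysProduct("book", 20);
        System.out.println(history.checkPriceInHistory(20)); // prints "true"
    }
}
```

Because both step classes receive the same Container instance, neither needs to know about the other, and a fresh instance per scenario keeps scenarios isolated.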

Conclusion

PicoContainer is lightweight and easy to implement. It also requires minimal changes to your existing code, helping to keep it lean and readable. These qualities make it a perfect fit for any Cucumber-JVM project since sharing test context between classes is a question of 'when' and not 'if' in essentially any test suite that will grow beyond a few scenarios.

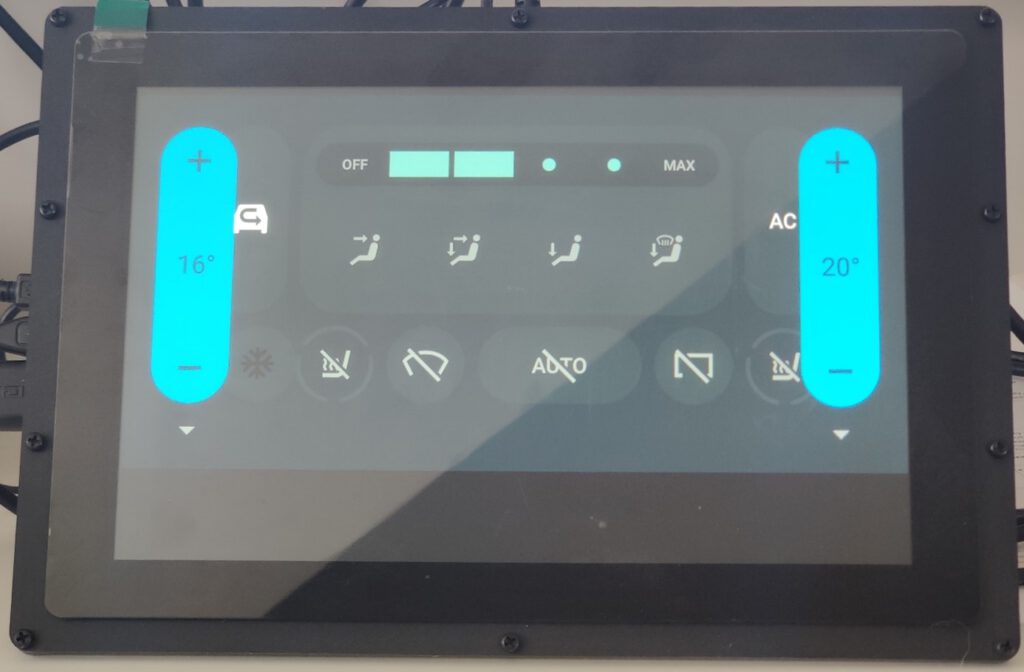

Build and run Android Automotive OS on Raspberry Pi 4B

Have you ever wanted to build your own Android? It’s easy according to the official manual, but it gets harder on a Windows (or Mac) machine, or if you’d like to run it on physical hardware. Still too easy? Let’s build Android Automotive OS – the same source code, but another layer of complexity. In this manual, we’ll cover all steps needed to build and run Android Automotive OS 11 AOSP on Raspberry Pi 4B using Windows. The solution is not perfect, however. The main issue is the lack of Google Services, because the entire AAOS is an open-source project and Google doesn’t provide its services this way. Nevertheless, let’s build the open-source version first, and then we can try to face incoming issues.

TL;DR: If you don't want to configure and build the system step-by-step, follow the simplified instruction at https://github.com/grapeup/aaos_11_local_manifest

Prerequisites

Hardware

If you want to run the system on a physical device, you need one. I use the Raspberry Pi 4 model B with 8GB of RAM ( https://www.raspberrypi.com/products/raspberry-pi-4-model-b/ ). By the way, if you want to build and run an emulator from the source, it’s also possible, but there is a small limitation – packaging the emulator to a zip file, moving it to another computer, or even running it under Android Studio was only introduced in Android 12.

To power your Raspberry, you need a power adapter (USB C, min. 5V 3A). I use the Raspberry-official 5.1V 3A model. You can also power the Raspberry computer from your desktop/laptop’s USB port, especially if you’re going to debug it via a serial connection. Check the “If it doesn’t work” section below for the required hardware.

Another piece of hardware needed is an SD card. In theory, 4GB is all you need; however, I recommend buying a larger card to have some extra space for your applications on Android. I use 32GB and 64GB cards. You’ll also need a built-in or external card reader. I use the latter.

The next step is a screen. It’s optional but fancy. You can connect your mouse and optionally keyboard to your Raspberry Pi via USB and connect any display you have via micro-HDMI but using a touch screen is much more intuitive. I use a Waveshare 10-inch screen dedicated to Raspberry ( https://www.waveshare.com/wiki/10.1inch_HDMI_LCD_(B)_(with_case ). The screen box has a place to screw the Raspberry too, so you don’t need any extra case. You can also buy it with a power adapter and a display cable.

If you don’t buy a bundle, make sure you have all necessary accessories: micro-HDMI – HDMI cable to connect a screen (Waveshare or any other), USB A – USB mini A cable to connect a touch sensor of the screen, USB mini A 5V 3A adapter to power the screen.

Of course, you need a computer. In this manual, we use a Windows machine with at least 512GB of storage (the Android source is huge) and 16GB of RAM.

Software

You can probably build everything in pure Windows, but the recommended method is to use WSL. I assume you already have it installed, so just make sure you have the newest WSL2 version. If you have never used WSL before, see the full manual here https://learn.microsoft.com/en-us/windows/wsl/install .

WSL adjustments

The standard WSL installation uses a too-small virtual drive and limited RAM, so you need to adjust it.

Let’s start with the disk. Make sure the WSL is shut down by running ‘wsl --shutdown’ in the command prompt. Open Windows Command Prompt with admin privileges and enter ‘diskpart’. Then run ‘select vdisk file="<path to WSL drive file>"’. For me, the path is “C:\Users\<user>\AppData\Local\Packages\CanonicalGroupLimited.Ubuntu_<WSL_instance_id>\LocalState\ext4.vhdx”. Now you can expand it with the command ‘expand vdisk maximum=512000’ (the value is given in megabytes). Around 300GB is enough for Android 11, but if you want to play with multiple branches of Android at the same time, you need more space. Now you can close diskpart with the ‘exit’ command. Next, open the WSL and run ‘sudo resize2fs /dev/sdb 512000M’. I assume you have only a single drive attached to the WSL and it’s visible in the Linux subsystem as /dev/sdb. You can check it with the commands ‘sudo mount -t devtmpfs none /dev || mount | grep ext4’.

Now, let’s adjust the memory. Stop the WSL again. Open your home directory in Windows and open .wslconfig file. Create it if this file doesn’t exist yet. In the file, you need to create a [wsl2] section and memory configuration. The complete file should look like this:

[wsl2]

memory=16GB

As you can see, I’ve assigned 16GB to the virtual machine. It’s allocated dynamically, according to needs, but you must be aware that the virtual machine can take all of it, so if you allow it to eat your entire RAM, it can force your Windows to swap to the hard disk to survive (which will slow everything down significantly).

Disclaimer:

Building Android on 8 cores, 16GB RAM machine takes around 4 hours. If you want to do it faster or you don’t have a computer powerful enough at your home or office, you can consider building in the cloud. Simple AWS EC2 with 32 cores and 64GB of memory does the job in one hour (to download and build) and costs just a few bucks.

Let's get ready to rumble!!!

..or at least to building.

More prerequisites

We need some software but not much. Just install the following packages. This set of libraries allows you to build Android Automotive OS versions 11 to 13.

sudo apt update && sudo apt install gcc-aarch64-linux-gnu libssl-dev bc python3-setuptools repo python-is-python3 libncurses5 zip unzip make gcc flex bison -y

Source code downloading

Let’s create a home directory for our android and download sources.

mkdir android-11.0.0_r48 && cd android-11.0.0_r48

repo init -u https://android.googlesource.com/platform/manifest -b android-11.0.0_r48 --partial-clone --clone-filter=blob:limit=10M

git clone https://github.com/android-rpi/local_manifests .repo/local_manifests -b arpi-11

repo sync

‘repo init’ will ask you for some personal data. It’s collected by Google. To learn more about the partial clone optimizations used here, check this manual: https://docs.gitlab.com/ee/topics/git/partial_clone.html . ‘git clone’ adds custom code from the Android RPI project ( https://groups.google.com/g/android-rpi ) with drivers for your Raspberry Pi. The project is great and it’s all you need if you want to run Android TV. To run Android Automotive OS, we’ll need to adjust it slightly (see “Adjustments” section below). ‘repo sync’ will take some time because you need to download around 200GB of code. If you have a powerful machine with a great Internet connection, you can use more threads with the ‘-j X’ parameter added to the command. The default thread count is 4. If you have already synchronized your source code without the android-rpi local manifest, you need to add --force-sync to the ‘repo sync’ command.

Adjustments

All changes from this section can be downloaded as a patch file attached to this article. See the section on the patch file below.

Android-rpi provides Android TV for Raspberry Pi. We need to remove the TV-related configuration and add the Automotive OS one.

Let’s start with removing unnecessary files. You can safely remove the following files and directories:

- device/arpi/rpi4/overlay/frameworks/base/core/res/res/anim

- device/arpi/rpi4/overlay/frameworks/base/core/res/res/values-television

- device/arpi/rpi4/overlay/frameworks/base/core/res/res/values/dimens.xml

- device/arpi/rpi4/overlay/frameworks/base/core/res/res/values/styles.xml

- device/arpi/rpi4/overlay/frameworks/base/packages

To remove the user notice screen not needed in Automotive OS, create a new file device/arpi/rpi4/overlay/packages/services/Car/service/res/values/config.xml with the following content:

<?xml version="1.0" encoding="utf-8"?>

<resources xmlns:xliff="urn:oasis:names:tc:xliff:document:1.2">

<string name="config_userNoticeUiService" translatable="false"></string>

</resources>

To replace the basic TV overlay config with the Automotive overlay config, adjust the configuration in device/arpi/rpi4/overlay/frameworks/base/core/res/res/values/config.xml.

Remove:

- <integer name="config_defaultUiModeType">4</integer> <!--disable forced UI_MODE_TYPE_TELEVISION, as there is only MODE_TYPE_CAR available now-->

- <integer name="config_longPressOnHomeBehavior">0</integer> <!--disable home button long press action-->

- <bool name="config_hasPermanentDpad">true</bool> <!--disable D-pad-->

- <string name="config_appsAuthorizedForSharedAccounts">;com.android.tv.settings;</string> <!--remove unnecessary access for a shared account as there is nothing in com.android.tv.* now-->

… and add:

- <bool name="config_showNavigationBar">true</bool> <!--enable software navigation bar, as there is no hardware one-->

- <bool name="config_enableMultiUserUI">true</bool> <!--enable multi-user, as AAOS uses background processes called in other sessions-->

- <integer name="config_multiuserMaximumUsers">8</integer> <!--set maximum user count, required by the previous one-->

Now let’s rename the android-rpi original /device/arpi/rpi4/rpi4.mk to /device/arpi/rpi4/android_rpi4.mk. We need to adjust the file a little bit.

Remove the following variable definitions. You will re-create some of them in another file, while others are not needed.

- PRODUCT_NAME

- PRODUCT_DEVICE

- PRODUCT_BRAND

- PRODUCT_MANUFACTURER

- PRODUCT_MODEL

- USE_OEM_TV_APP

- DEVICE_PACKAGE_OVERLAYS

- PRODUCT_AAPT_PREF_CONFIG

- PRODUCT_CHARACTERISTICS

Remove the following invocations. We’re going to call necessary external files in another mk file.

- $(call inherit-product, device/google/atv/products/atv_base.mk)

- $(call inherit-product, $(SRC_TARGET_DIR)/product/core_64_bit_only.mk)

- $(call inherit-product, $(SRC_TARGET_DIR)/product/languages_full.mk)

- include frameworks/native/build/tablet-10in-xhdpi-2048-dalvik-heap.mk

In PRODUCT_PROPERTY_OVERRIDES remove debug.drm.mode.force=1280x720 and add the following properties. This way you remove the TV launcher configuration and override the default automotive launcher configuration.

- dalvik.vm.dex2oat64.enabled=true

- keyguard.no_require_sim=true

- ro.logd.size=1m

Now you need to completely remove the android-rpi TV launcher and add RenderScript support for Automotive OS. In PRODUCT_PACKAGES remove:

- DeskClock

- RpLauncher

… and add:

- librs_jni

Create a new rpi4.mk with the following content:

PRODUCT_PACKAGE_OVERLAYS += device/generic/car/common/overlay

$(call inherit-product, $(SRC_TARGET_DIR)/product/core_64_bit.mk)

$(call inherit-product, device/arpi/rpi4/android_rpi4.mk)

$(call inherit-product, $(SRC_TARGET_DIR)/product/full_base.mk)

$(call inherit-product, device/generic/car/common/car.mk)

PRODUCT_SYSTEM_DEFAULT_PROPERTIES += \

android.car.number_pre_created_users=1 \

android.car.number_pre_created_guests=1 \

android.car.user_hal_enabled=true

DEVICE_PACKAGE_OVERLAYS += device/arpi/rpi4/overlay device/generic/car/car_x86_64/overlay

PRODUCT_NAME := rpi4

PRODUCT_DEVICE := rpi4

PRODUCT_BRAND := arpi

PRODUCT_MODEL := Raspberry Pi 4

PRODUCT_MANUFACTURER := GrapeUp and ARPi

Due to the license, remember to add yourself to the PRODUCT_MANUFACTURER field.

Now you have two mk files – android_rpi4.mk is borrowed from the android-rpi project and adjusted, and rpi4.mk contains all changes for Automotive OS. You can meld these two together or split them into more files if you’d like, but keep in mind that the order of invocations does matter (not always, but still).

As Android Automotive OS is bigger than Android TV, we need to increase the system partition size to fit the new image. In device/arpi/rpi4/BoardConfig.mk increase BOARD_SYSTEMIMAGE_PARTITION_SIZE to 2147483648, which means 2GB.

You need to apply all changes described in https://github.com/android-rpi/device_arpi_rpi4/wiki/arpi-11-:-framework-patch too. Those changes are also included in the attached patch file.

If you use the 8GB version of Raspberry Pi, you need to replace device/arpi/rpi4/boot/fixup4.dat and device/arpi/rpi4/boot/start4.elf files. You can find the correct files in the patch file attached or you may use the official source: https://github.com/raspberrypi/firmware/tree/master/boot . It’s probably not needed for 4GB version of Raspberry, but I don’t have such a device for verification.

Patch file

If you prefer to apply all changes described above as a single file, go to your sources directory and run ‘git apply --no-index <path_to_patch_file>’. The patch file also replaces the boot animation. If you want to create one of your own, follow the official manual here: https://android.googlesource.com/platform/frameworks/base/+/master/cmds/bootanimation/FORMAT.md .

Now we can build!

That’s the easy part. Just run the few commands below. Firstly, we need to build a custom kernel for Android. The ‘merge_config.sh’ script just configures all required variables. The first ‘make’ command builds the real kernel image (which can take a few minutes). Next, build the device tree configuration.

cd kernel/arpi

ARCH=arm64 scripts/kconfig/merge_config.sh arch/arm64/configs/bcm2711_defconfig kernel/configs/android-base.config kernel/configs/android-recommended.config

ARCH=arm64 CROSS_COMPILE=aarch64-linux-gnu- make Image.gz

ARCH=arm64 CROSS_COMPILE=aarch64-linux-gnu- DTC_FLAGS="-@" make broadcom/bcm2711-rpi-4-b.dtb

ARCH=arm64 CROSS_COMPILE=aarch64-linux-gnu- DTC_FLAGS="-@" make overlays/vc4-kms-v3d-pi4.dtbo

cd ../..

The next part is to build the entire system. The ‘envsetup.sh’ script sets up variables and adds custom commands to your terminal. Then you can pick the correct combination of Android version and device with ‘lunch’. You can run it without parameters to see (almost) all possible configurations. In this step, you can decide to build a system for dedicated hardware (e.g., Dragonboard) and switch between phone/tablet/TV/wearable/automotive versions of Android. The last line is the actual build. We can’t run just ‘make’ or ‘m’, as documented in the official manual, because we need to create three specific images to write them on an SD card and run them on Raspberry Pi. Replace “X” in ‘-j X’ with the number of threads you want to use. The default value is the number of logical processors on your computer.

source build/envsetup.sh

lunch rpi4-eng

make -j X ramdisk systemimage vendorimage

I hope you have a delightful book next to you because the last build step takes a few hours, depending on your hardware. Good news! If you need to adapt something and build again, in most cases you just need the last three lines (or even just the very last one) – to source the environment setup, to pick the lunch configuration, and to make ramdisk, system, and vendor images. And it takes hours only the first time.

Creating an SD card

This step seems to be easy, but it isn’t. WSL doesn’t contain drivers for the USB card reader. You can use usbip to forward a device from Windows to the subsystem, but it doesn’t work well with external storage without partitions. The solution is a VirtualBox with Ubuntu installed. Just create a virtual machine, install Ubuntu, and install Guest Additions. Then you can connect the card reader and pass it to the virtual machine. If you’re a minimalist, you can use Ubuntu Server or any other Linux distribution you like. Be aware that using a card reader built into your computer may be challenging depending on drivers and the hardware connection type (USB-like, or PCI-e).

Now, you need to create a partition schema on the SD card. I assume the card is loaded to the system as /dev/sdb. Check your configuration before continuing to avoid formatting your main drive or another disaster. Let’s erase the current partition table and create a new one.

sudo umount /dev/sdb*

sudo wipefs -a /dev/sdb

sudo fdisk /dev/sdb

Now let’s create partitions. First, you need a 128MB active partition of the W95 FAT32 (LBA) type, second a 2GB Linux partition, third a 128MB Linux partition, and the rest of the card for user data (also Linux partition). Here’s how to navigate through fdisk menu to configure all partitions.

Welcome to fdisk (util-linux 2.37.2).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Device does not contain a recognized partition table.

Created a new DOS disklabel with disk identifier 0x179fb9bc.

Command (m for help): n

Partition type

p primary (0 primary, 0 extended, 4 free)

e extended (container for logical partitions)

Select (default p):

Using default response p.

Partition number (1-4, default 1):

First sector (2048-61022207, default 2048):

Last sector, +/-sectors or +/-size{K,M,G,T,P} (2048-61022207, default 61022207): +128M

Created a new partition 1 of type 'Linux' and of size 128 MiB.

Command (m for help): a

Selected partition 1

The bootable flag on partition 1 is enabled now.

Command (m for help): t

Selected partition 1

Hex code or alias (type L to list all): 0c

Changed type of partition 'Linux' to 'W95 FAT32 (LBA)'.

Command (m for help): n

Partition type

p primary (1 primary, 0 extended, 3 free)

e extended (container for logical partitions)

Select (default p):

Using default response p.

Partition number (2-4, default 2):

First sector (264192-61022207, default 264192):

Last sector, +/-sectors or +/-size{K,M,G,T,P} (264192-61022207, default 61022207): +2G

Created a new partition 2 of type 'Linux' and of size 2 GiB.

Command (m for help): n

Partition type

p primary (2 primary, 0 extended, 2 free)

e extended (container for logical partitions)

Select (default p):

Using default response p.

Partition number (3,4, default 3):

First sector (4458496-61022207, default 4458496):

Last sector, +/-sectors or +/-size{K,M,G,T,P} (4458496-61022207, default 61022207): +128M

Created a new partition 3 of type 'Linux' and of size 128 MiB.

Command (m for help): n

Partition type

p primary (3 primary, 0 extended, 1 free)

e extended (container for logical partitions)

Select (default e): p

Selected partition 4

First sector (4720640-61022207, default 4720640):

Last sector, +/-sectors or +/-size{K,M,G,T,P} (4720640-61022207, default 61022207):

Created a new partition 4 of type 'Linux' and of size 26,8 GiB.

Command (m for help): w

The partition table has been altered.

Calling ioctl() to re-read partition table.

Syncing disks.

Be careful with the last partition – fdisk proposes creating an extended one by default, which is not needed in our use case.
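The "First sector" defaults that fdisk proposes in the session above follow directly from the requested sizes. Here is a minimal sketch of that arithmetic, assuming 512-byte sectors; the helper names are illustrative and not part of any tool.

```shell
#!/bin/sh
# Sector arithmetic behind the fdisk session (512-byte sectors assumed).
SECTORS_PER_MIB=2048   # 1 MiB / 512 B

# next_start START SIZE_MIB: first free sector after a partition of SIZE_MIB
next_start() {
  echo $(( $1 + $2 * SECTORS_PER_MIB ))
}

# size_gib_tenths FIRST LAST: partition size in tenths of a GiB (rounded down)
size_gib_tenths() {
  echo $(( ($2 - $1 + 1) * 10 / (1024 * SECTORS_PER_MIB) ))
}

p2=$(next_start 2048 128)      # after the 128 MiB boot partition
p3=$(next_start "$p2" 2048)    # after the 2 GiB system partition
p4=$(next_start "$p3" 128)     # after the 128 MiB vendor partition
echo "partition starts: 2048 $p2 $p3 $p4"
echo "partition 4 size: $(size_gib_tenths "$p4" 61022207) tenths of a GiB"
```

The computed starts (264192, 4458496, 4720640) match the defaults in the log, and 268 tenths of a GiB corresponds to the 26,8 GiB reported for the fourth partition.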

If this is not the first time you have partitioned the same card, you may see a warning that a partition already contains a file system signature. You can safely agree to remove it.

Partition #4 contains a ext4 signature.

Do you want to remove the signature? [Y]es/[N]o: Y

The signature will be removed by a write command.
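If you would rather not step through the interactive menu, the same layout can probably be described as an sfdisk script. The sketch below only prints the script; it was not part of the original procedure, so treat it as an untested alternative and double-check the device name before applying it.

```shell
#!/bin/sh
# Prints an sfdisk script describing the same four partitions.
# Fields per line: start, size, type, bootable flag (empty = default).
print_layout() {
  cat <<'EOF'
label: dos
,128M,c,*
,2G,L
,128M,L
,,L
EOF
}

print_layout
# To apply it (this destroys the existing partition table on the target):
#   print_layout | sudo sfdisk /dev/sdb
```

Type `c` is the W95 FAT32 (LBA) code used in the fdisk session, `*` sets the bootable flag, and the last line leaves both start and size empty so the partition takes the rest of the card.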

Now, let’s create file systems on the first and the last partitions.

sudo mkdosfs -F 32 /dev/sdb1

sudo mkfs.ext4 -L userdata /dev/sdb4

We won’t write anything to the last one, as it’s for user data only and will be filled by Android during the first boot. But we need to write some files to the first one. Let’s create a temporary mount directory under /mnt/p1 (as in “partition 1”), mount the partition there, and copy the necessary files from the Android build created in the earlier section. It’s strange, but we’re going to copy files from one virtual machine (WSL) to another (VirtualBox). You can simply mount a WSL drive as a shared folder in VirtualBox. If you don’t see a WSL drive in your Windows Explorer, you can map it as a network drive using the “\\wsl$\Ubuntu” path.

sudo mkdir /mnt/p1

sudo mount /dev/sdb1 /mnt/p1

sudo mkdir /mnt/p1/overlays

cd <PATH_TO_YOUR_ANDROID_SOURCES_IN_WSL>

sudo cp device/arpi/rpi4/boot/* /mnt/p1

sudo cp kernel/arpi/arch/arm64/boot/Image.gz /mnt/p1

sudo cp kernel/arpi/arch/arm64/boot/dts/broadcom/bcm2711-rpi-4-b.dtb /mnt/p1

sudo cp kernel/arpi/arch/arm/boot/dts/overlays/vc4-kms-v3d-pi4.dtbo /mnt/p1/overlays/

sudo cp out/target/product/rpi4/ramdisk.img /mnt/p1

sudo umount /mnt/p1

sudo rm -rf /mnt/p1

If you’re looking at the official android-rpi project manual, there is a different path for the vc4-kms-v3d-pi4.dtbo file. That’s OK: they use a symbolic link that we are unable to use in this filesystem.

Sometimes you may see an error message when creating the “overlays” directory. It happens from time to time, because “mount” returns to the console before the drive is actually mounted. In such a case, just call “mkdir” again. Be aware of that, especially if you’re going to copy-paste the entire listing from above.
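If you do paste the whole listing at once, a small retry wrapper can repeat the failing command for you. This is a minimal sketch; `retry` and `RETRY_DELAY` are hypothetical names introduced here, not part of any standard tool.

```shell
#!/bin/sh
# retry ATTEMPTS COMMAND [ARGS...]: run COMMAND until it succeeds,
# at most ATTEMPTS times, pausing RETRY_DELAY seconds between tries.
retry() {
  attempts=$1
  shift
  try=1
  while [ "$try" -le "$attempts" ]; do
    "$@" && return 0
    try=$((try + 1))
    [ "$try" -le "$attempts" ] && sleep "${RETRY_DELAY:-1}"
  done
  return 1
}

# Usage in the listing above, instead of the plain mkdir call:
#   retry 5 sudo mkdir /mnt/p1/overlays
```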

Now, let’s write the two remaining partitions. If you’re struggling with the dd command (it may hang), you can try copying the big *.img files from WSL to VirtualBox first.

cd <PATH_TO_YOUR_ANDROID_SOURCES_IN_WSL>/out/target/product/rpi4/

sudo dd if=system.img of=/dev/sdb2 bs=1M status=progress

sudo dd if=vendor.img of=/dev/sdb3 bs=1M status=progress
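Since dd may misbehave in this setup, you might want to confirm that each image really landed on the card. The sketch below compares an image with the first bytes of its target; `verify_image` is an illustrative helper, and it assumes GNU `cmp` (for the `-n` option). Run `sync` first, and keep in mind that the read may be served partly from the page cache.

```shell
#!/bin/sh
# verify_image IMAGE TARGET: succeed only if TARGET starts with the exact
# contents of IMAGE (TARGET may be larger, like a partition).
verify_image() {
  size=$(( $(wc -c < "$1") )) || return 1
  cmp -n "$size" "$1" "$2"
}

# Usage, from the directory with the images:
#   sudo cmp -n "$(wc -c < system.img)" system.img /dev/sdb2
#   sudo cmp -n "$(wc -c < vendor.img)" vendor.img /dev/sdb3
```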

Congratulations!

You’re done. You’ve downloaded, prepared, built, and saved your own Android Automotive OS. Now you can put the SD card into the Raspberry and connect all cables (make sure you connect the Raspberry power cable last). There is no “power” button, and it doesn’t matter which micro-HDMI or USB port of the Raspberry you use. It’s now time to enjoy your own Android Automotive OS!

If it doesn’t work

The world is not perfect, and sometimes something goes terribly wrong. If the boot animation runs for a long time, or if your device crashes in a loop a few seconds after boot, you can try to debug it.

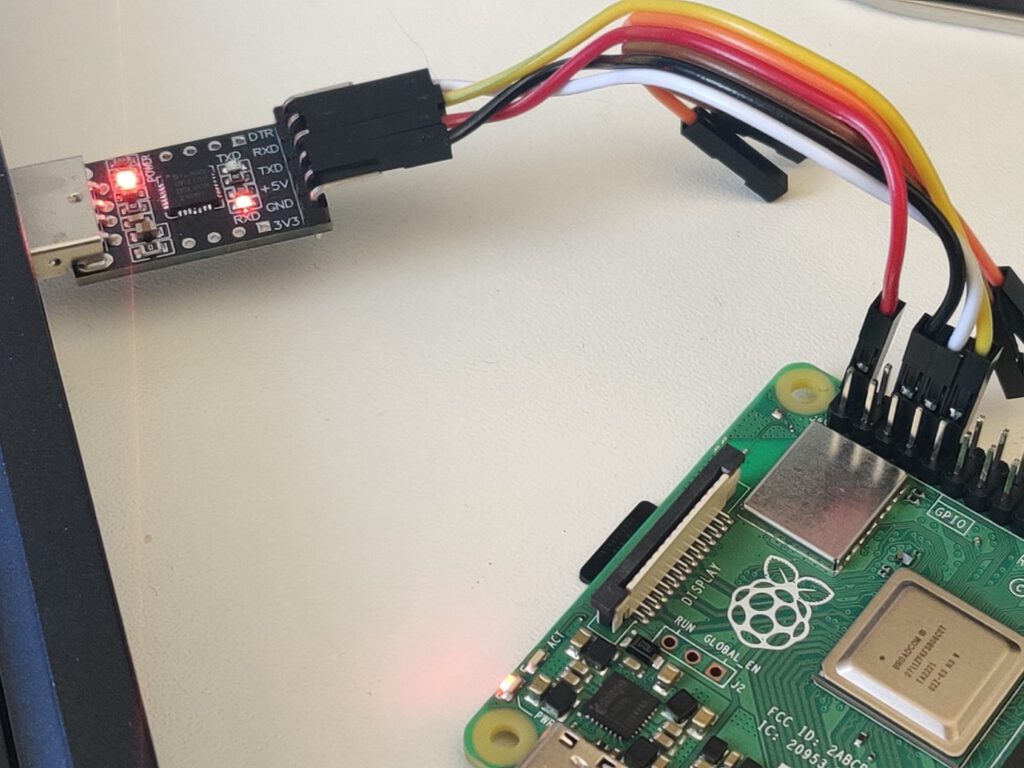

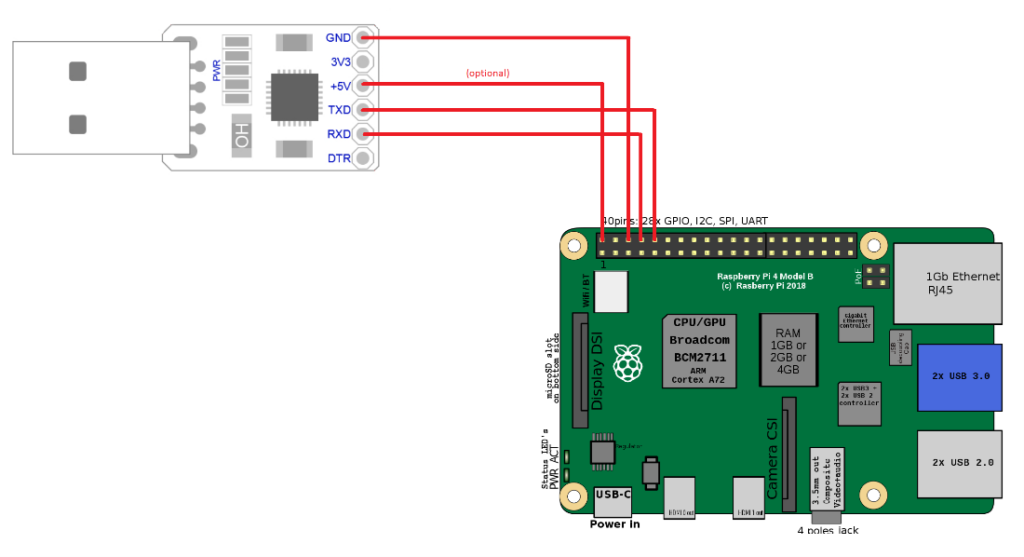

You need a USB-TTL bridge (like this one https://www.sunrom.com/p/cp2102-usb-ttl-uart-module ) to connect the correct pins of the Raspberry to a USB port. Connect pin 6 (ground) to the GND pin of the bridge, pin 8 (TXD) to the RXD pin of the bridge, and pin 10 (RXD) to the TXD pin of the bridge, so that each side’s transmit line feeds the other side’s receive line. If you want to power the Raspberry via the bridge, also connect pin 2 to the +5V pin of the bridge. This is not recommended, because the lower voltage may make your system unstable. If you don’t have a power adapter, you can simply connect a USB cable between your computer and the USB-C port of the Raspberry. Warning! Never connect both the +5V pin and the USB-C power port at the same time, or you’ll burn the Raspberry board.

See the schema for the connection reference.

Depending on your bridge model, you may need an additional driver. I use this one: https://www.silabs.com/developers/usb-to-uart-bridge-vcp-drivers?tab=downloads .

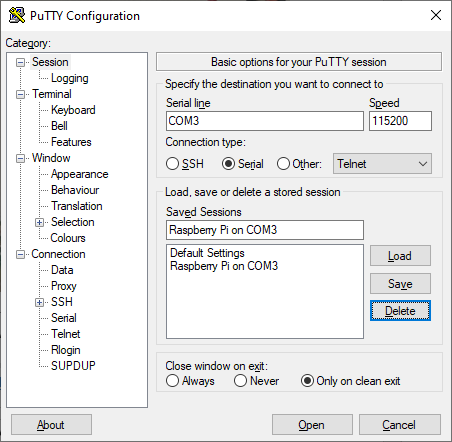

When you connect the +5V pin or the USB-C power adapter (again, never both at the same time!), the Raspberry starts. Now you can open PuTTY and connect to your Android. Pick Serial and enter COMX as the serial line, where X is the number of your COM port. You can check it in your Device Manager; look for “USB to UART bridge (COM4)” or the like. The correct connection speed is 115200.

Open the connection to access the Android shell. By default, Android prints all logs to the standard output, so you should see a lot of them right away. The connection is bidirectional, so you have full terminal access to your Android if you need to check or modify any file or run any command. Just press Enter to see the command prompt. You can even call ‘su’ to gain superuser access on your Android running on the Raspberry.

Connecting via adb

If you want to use Android Debug Bridge to connect to your device, the USB bridge is not enough. When you run ‘adb devices’ on your computer, the Android Automotive OS running on the Raspberry is not recognized. You can use the PuTTY connection to turn on a TCP debug bridge instead.

Make sure you’ve connected Android and your computer to the same network. Open PuTTY and connect to the running Android console. Log in as root and enable ADB via TCP. Then check your IP address.

su

setprop service.adb.tcp.port 5555

stop adbd

start adbd

ifconfig wlan0

Now, using your Windows command line, go to the Android SDK platform-tools directory and connect to your device. In this example, the IP address of my Raspberry is 192.168.50.47.

cd %userprofile%\AppData\Local\Android\Sdk\platform-tools

adb connect 192.168.50.47:5555
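To confirm from a script that the connection succeeded, you can look for the device in the `adb devices` listing. The sketch below is one way to do it from a POSIX shell such as WSL; `adb_is_online` is a hypothetical helper that reads the listing on standard input, and the address is the example one from above.

```shell
#!/bin/sh
# adb_is_online SERIAL: reads `adb devices` output on stdin and succeeds
# only if SERIAL is listed in the "device" state (not offline/unauthorized).
adb_is_online() {
  awk -v serial="$1" '
    $1 == serial && $2 == "device" { found = 1 }
    END { exit !found }
  '
}

# Usage:
#   adb devices | adb_is_online 192.168.50.47:5555 && echo "connected"
```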

If you want to use ADB in WSL, you can link the Windows program in WSL using the following command.

sudo ln -s /mnt/c/Users/<your_username>/AppData/Local/Android/Sdk/platform-tools/adb.exe /usr/bin/adb

You can now use ADB to run logcat without PuTTY or to install applications without manually transferring APK files to the SD card. Fun fact: if you use a USB bridge and a USB power supply, you have two physical connections between your computer and the Raspberry, yet you still need ADB over WiFi to use the debug bridge.

Summary: Android Automotive OS on Raspberry Pi 4B

That’s all. Android Automotive OS 11 is running. You can install the apps you need, take them to your car, or do whatever you’d like with them. Using hardware instead of an emulator allows you to manually manage partitions (e.g., for the OTA update) and connect external devices like a real GPS receiver or accelerometer. The bare-metal hardware outperforms the emulator too. And most importantly, you can easily take it to your car, power it from an in-car USB port, connect it to an OBD-II port, and run real-life tests without a laptop.

Is your project ready? Great, now you can try doing the same with AAOS 13.

In-car infotainment: How to build long-term relationships and unlock new revenue streams

With the automotive industry seeking to create a more comfortable and enjoyable travel experience for its customers, automakers and designers are stepping up investment in in-car infotainment solutions. These technologies aim to personalize the travel experience by providing noise reduction, in-vehicle sound zones, and immersive audio/video content tailored to the driver's lifestyle. And more importantly, they contribute to building OEM customer loyalty, new revenue streams, and creating lasting customer relationships.

Four in-car entertainment strategies, almost endless opportunities to build a new offer

There is no turning back from in-car entertainment. The development of flat screens, broadband internet access, and the possibility of personalization and customization of content to the viewer are the reasons why no one is willing to give up this form of service.

For automakers, it's an opportunity to build a stronger relationship with their customers and create new (often subscription-based) revenue models.

For the driver and especially for passengers, it's about overcoming the boredom associated with long journeys and introducing solutions into vehicles that until now have been known for their high-end audiophile systems.

No wonder an increasing number of OEMs today are consciously developing the concept of the digital cockpit of the future. This approach is supposed to shift the focus from the practical functionality of the vehicle to providing compelling entertainment.

Most popular in-car infotainment strategies

Of course, the process of change is not simple and cannot happen overnight. It requires continuous growth in vehicle technological performance, partially related to the car's GPU, E/E Architecture, 5G Internet access, and the development of new display forms. Nevertheless, OEMs have at their disposal one of the following four strategies for developing such solutions.

1. Rear-Seat Entertainment

Using this strategy, entertainment is streamed to displays located in the rear seats or on the roof of the vehicle (for example, the BMW i7). The user does not need a smartphone or tablet in addition to this solution to enjoy the leisure experience.

2. Any-Seat Entertainment

In this solution, content streaming can apply to all displays of the infotainment system in the car (including the driver's seat) - again without having to use smartphones and tablets to navigate. This concept allows video viewing, e.g., while charging an electric car.

3. Augmented Entertainment

A step further can be taken by manufacturers who incorporate the capabilities of 4D cinema and AR applications into their offer. This approach creates a whole new kind of driver's cockpit and develops a unique UX that may become a vehicle's trademark. This type of screen has a variety of potential applications, such as displaying destination-related information, traffic warnings, or intelligent terrain mapping of other vehicles. In the near future, this technology may also be used for augmented marketing, such as showing interesting offers and discounts from nearby restaurants, shops, or malls on the windscreen.

4. Live Entertainment

Finally, real-time entertainment services enable car-to-car interaction and/or social networking among vehicle users. These types of solutions can be used to share viewing, commentary, and engagement among vehicle users through cultural events or music concerts, for example.

New possibilities of infotainment in cars. The 2022 perspective

The ability to combine modern audio-visual technologies with data analytics and personalized user information allows OEMs to create entirely new services and products, often ones previously unrelated to the automotive industry.

- Among these, the most notable is, of course, streaming video and audio content, which is usually based on partnerships with third parties (e.g., Netflix, Amazon, YouTube, Apple TV, or Spotify) and usually operates on a subscription model. In the future, the car is likely to become another medium where we can simply activate the service and continue watching videos as we move from the living room to the vehicle.

- Access to gaming platforms and services that will provide an interactive way of spending time for the driver and passengers while traveling or charging their EV.

- A tourist offer that is related to visiting a particular place - acts as a virtual travel agency, which highlights specific points on the map and expands the knowledge of the visited locations (also in the form of quizzes or riddles for passengers).

- Audiovisual, highly personalized ads, tailored to the context of where we are traveling, the driver's needs, or resulting from the wear and tear of the vehicle components.

Dynamic growth of the in-car infotainment market

With each passing year, there are new examples of interesting implementations in in-car entertainment, and the market itself is growing rapidly. In 2020, it was valued at more than $21 million, while by 2028 it is predicted to grow to more than $37.5 million, registering a CAGR of 7.5% from 2021 to 2028.

Below is a rundown of the most interesting advanced infotainment solutions that are becoming increasingly popular and have been implemented by specific OEMs in recent times.

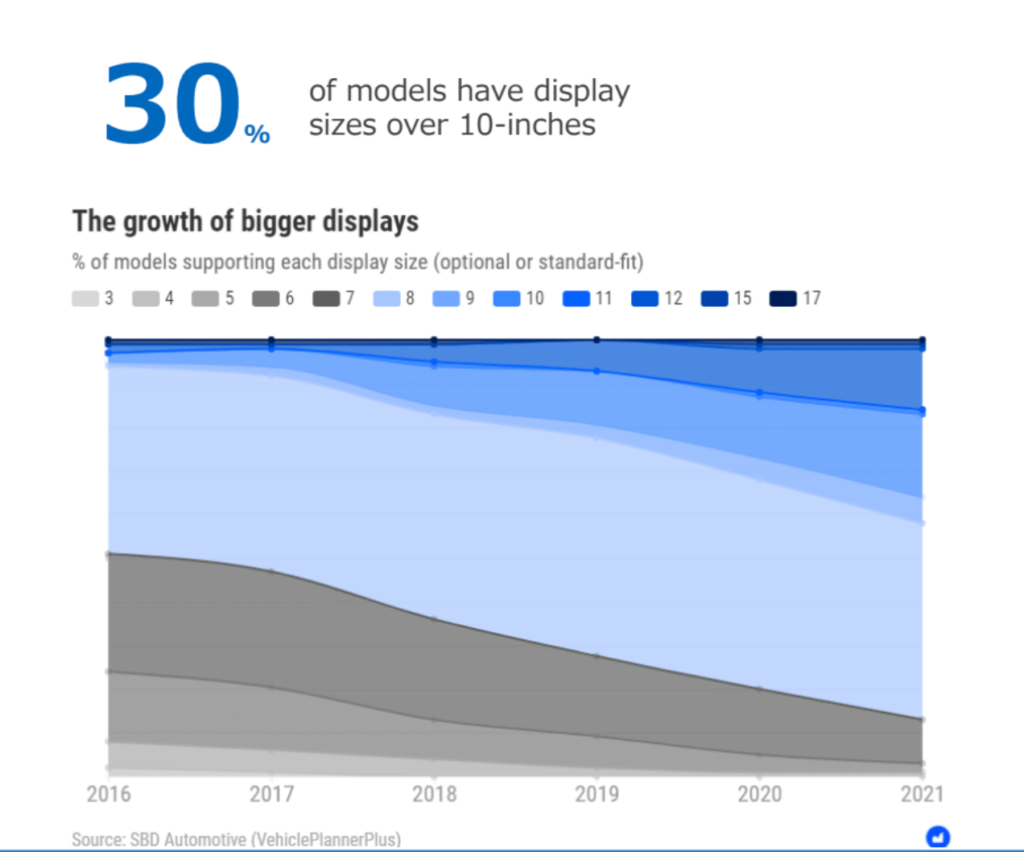

- Larger display and video entertainment on demand

With large displays and the increasingly widespread streaming of video and audio content into the vehicle, a substantial base is becoming established for the development of infotainment in the automotive industry.

It is all about introducing more immersive services and features in vehicles. This is especially relevant for EVs, because, after all, drivers need to pass their time somehow while charging their vehicles at public stations, and this can be done from the comfort of the car seat.

Total spending on video on demand is expected to reach $127 billion by 2025 (11.8% CAGR). Video streaming alone is expected to account for 86% of revenues.