LLM comparison: Find the best fit for legacy system rewrites

Legacy systems often struggle with performance, are vulnerable to security issues, and are expensive to maintain. Despite these challenges, over 65% of enterprises still rely on them for critical operations.

At the same time, modernization is becoming a pressing business need, with the application modernization services market valued at $17.8 billion in 2023 and expected to grow at a CAGR of 16.7%.

This growth highlights a clear trend: businesses recognize the need to update outdated systems to keep pace with industry demands.

The journey toward modernization varies widely. While 75% of organizations have started modernization projects, only 18% have reached a state of continuous improvement.

Data source: https://www.redhat.com/en/resources/app-modernization-report

For many, the process remains challenging, with a staggering 74% of companies failing to complete their legacy modernization efforts. Security and efficiency are the primary drivers, with over half of surveyed companies citing these as key motivators.

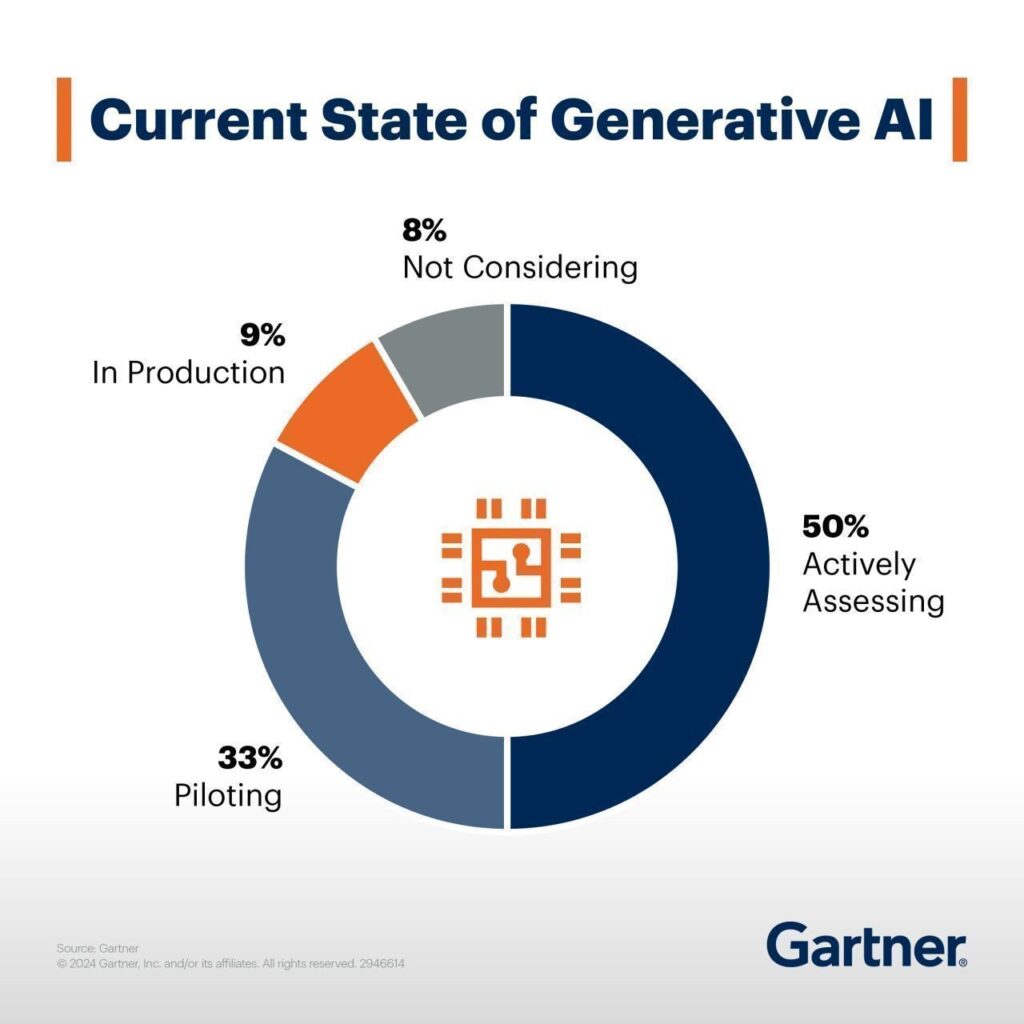

Given these complexities, the question arises: Could Generative AI simplify and accelerate this process?

With the surging adoption rates of AI technology, it’s worth exploring if Generative AI has a role in rewriting legacy systems.

This article compares six LLMs, evaluating the strengths, weaknesses, and potential risks of each GenAI tool. The decision to use them ultimately lies with you.

Here's what we'll discuss:

- Why Generative AI?

- The research methodology

- Generative AI tools: six contenders for LLM comparison

- OpenAI backed by ChatGPT-4o

- Claude-3-sonnet

- Claude-3-opus

- Claude-3-haiku

- Gemini 1.5 Flash

- Gemini 1.5 Pro

- Comparison summary

Why Generative AI?

Traditionally, updating outdated systems has been a labor-intensive and error-prone process. Generative AI offers a solution by automating code translation, ensuring consistency and efficiency. This accelerates the modernization of legacy systems and supports cross-platform development and refactoring.

As businesses aim to remain competitive, using Generative AI for code transformation is crucial, allowing them to fully use modern technologies while reducing manual rewrite risks.

Here are key reasons to consider its use:

- Uncovering dependencies and business logic - Generative AI can dissect legacy code to reveal dependencies and embedded business logic, ensuring essential functionalities are retained and improved in the updated system.

- Decreased development time and expenses - automation drastically reduces the time and resources required for system re-writing. Quicker development cycles and fewer human hours needed for coding and testing decrease the overall project cost.

- Consistency and accuracy - manual code translation is prone to human error. AI models ensure consistent and accurate code conversion, minimizing bugs and enhancing reliability.

- Optimized performance - Generative AI facilitates the creation of optimized code from the beginning, incorporating advanced algorithms that enhance efficiency and adaptability, often lacking in older systems.

The LLM comparison research methodology

Comparing Generative AI models with each other can be tough, because it is hard to apply the same criteria to all available tools. Some are web-based, some are restricted to a specific IDE, some offer a “chat” feature, and others only suggest code.

As our goal was the re-writing of existing projects, we aimed to create an LLM comparison based on the following six main challenges of working with existing code:

- Analyzing project architecture - understanding the architecture is crucial for maintaining the system's integrity during re-writing. It ensures the new code aligns with the original design principles and system structure.

- Analyzing data flows - proper analysis of data flows is essential to ensure that data is processed correctly and efficiently in the re-written application. This helps maintain functionality and performance.

- Generating historical backlog - this involves querying the Generative AI to create Jira (or any other tracking system) tickets that could potentially be used to rebuild the system from scratch. The aim is to replicate the workflow of the initial project implementation. These "tickets" should include component descriptions and acceptance criteria.

- Converting code from one programming language to another - language conversion is often necessary to leverage modern technologies. Accurate translation preserves functionality and enables integration with contemporary systems.

- Generating new code - the ability to generate new code, such as test cases or additional features, is important for enhancing the application's capabilities and ensuring comprehensive testing.

- Privacy and security of a Generative AI tool - businesses are concerned about sharing their source codebase with the public internet. Therefore, work with Generative AI must occur in an isolated environment to protect sensitive data.

Source projects overview

To test the capabilities of Generative AI, we used two projects:

- Simple CRUD application - The project uses .NET Core as its framework, with Entity Framework Core serving as the ORM and SQL Server as the relational database. The target application is a backend system built with Java 17 and Spring Boot 3.

- Microservice-based application - The application is developed with .NET Core as its framework, Entity Framework Core as the ORM, and the Command Query Responsibility Segregation (CQRS) pattern for handling entity operations. The target system includes a microservice-based backend built with Java 17 and Spring Boot 3, alongside a frontend developed using the React framework.

Generative AI tools: six contenders for LLM comparison

In this article, we will compare six different Generative AI tools used in these example projects:

- OpenAI backed by ChatGPT-4o with a context of 128k tokens

- Claude-3-sonnet - context of 200k tokens

- Claude-3-opus - context of 200k tokens

- Claude-3-haiku - context of 200k tokens

- Gemini 1.5 Flash - context of 1M tokens

- Gemini 1.5 Pro - context of 2M tokens
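
Whether a codebase fits into a single prompt depends on these context limits. The following is a rough sketch for a first estimate, not something taken from our test setup. It assumes about four characters per token, which is only a rule of thumb (each model's tokenizer counts differently), and all function names are hypothetical.

```python
# Context window sizes (in tokens) as listed above.
CONTEXT_WINDOWS = {
    "ChatGPT-4o": 128_000,
    "Claude-3-sonnet": 200_000,
    "Claude-3-opus": 200_000,
    "Claude-3-haiku": 200_000,
    "Gemini 1.5 Flash": 1_000_000,
    "Gemini 1.5 Pro": 2_000_000,
}

# Assumption: roughly 4 characters per token. Real tokenizers differ per model.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count."""
    return len(text) // CHARS_PER_TOKEN + 1


def models_that_fit(source_texts: list[str], reserve_for_answer: int = 8_000) -> list[str]:
    """Return the models whose context could hold all sources plus room for a reply."""
    needed = sum(estimate_tokens(t) for t in source_texts) + reserve_for_answer
    return [name for name, limit in CONTEXT_WINDOWS.items() if needed <= limit]


# Example: a 600,000-character codebase (about 150k estimated tokens).
candidates = models_that_fit(["x" * 600_000])
```

In this example the estimate exceeds the 128k window, so only the models with 200k tokens or more remain as candidates. For real decisions, count tokens with the tokenizer of the model you plan to use.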

OpenAI

OpenAI's ChatGPT-4o represents an advanced language model that showcases the leading edge of artificial intelligence technology. Known for its conversational prowess and ability to manage extensive contexts, it offers great potential for explaining and generating code.

- Analyzing project architecture

ChatGPT faces challenges in analyzing project architecture due to its abstract nature and the high-level understanding required. The model struggles with grasping the full context and intricacies of architectural design, as it lacks the ability to comprehend abstract concepts and relationships not explicitly defined in the code.

- Analyzing data flows

ChatGPT performs better at analyzing data flows within a program. It can effectively trace how data moves through a program by examining function calls, variable assignments, and other code structures. This task aligns well with ChatGPT's pattern recognition capabilities, making it a suitable application for the model.

- Generating historical backlog

When given a project architecture as input, OpenAI can generate high-level epics that capture the project's overall goals and objectives. However, it struggles to produce detailed user stories suitable for project management tools like Jira, often lacking the necessary detail and precision for effective use.

- Converting code from one programming language to another

ChatGPT performs reasonably well in converting code, such as from C# to Java Spring Boot, by mapping similar constructs and generating syntactically correct code. However, it encounters limitations when there is no direct mapping between frameworks, as it lacks the deep semantic understanding needed to translate unique framework-specific features.

- Generating new code

ChatGPT excels in generating new code, particularly for unit tests and integration tests. Given a piece of code and a prompt, it can generate tests that accurately verify the code's functionality, showcasing its strength in this area.

- Privacy and security of the Generative AI tool

OpenAI's ChatGPT, like many cloud-based AI services, typically operates over the internet. However, there are ways to use it in an isolated private environment without sharing code or sensitive data on the public internet. One option is a private deployment such as Azure OpenAI, a service offered by Microsoft where OpenAI models can be accessed within Azure's secure cloud environment.

Best tip

Use Reinforcement Learning from Human Feedback (RLHF): If possible, use RLHF to fine-tune GPT-4. This involves providing feedback on the AI's outputs, which it can then use to improve future outputs. This can be particularly useful for complex tasks like code migration.

Overall

OpenAI's ChatGPT-4o is a mature and robust language model that provides substantial support to developers in complex scenarios. It excels in tasks like code conversion between programming languages, ensuring accurate translation while maintaining functionality.

- Possibilities 3/5

- Correctness 3/5

- Privacy 5/5

- Maturity 4/5

Overall score: 4/5

Claude-3-sonnet

Claude-3-Sonnet is a language model developed by Anthropic, designed to provide advanced natural language processing capabilities. Its architecture is optimized for maintaining context over extended interactions, offering a balance of intelligence and speed.

- Analyzing project architecture

Claude-3-Sonnet excels in analyzing and comprehending the architecture of existing projects. When presented with a codebase, it provides detailed insights into the project's structure, identifying components, modules, and their interdependencies. Claude-3-Sonnet offers a comprehensive breakdown of project architecture, including class hierarchies, design patterns, and architectural principles employed.

- Analyzing data flows

Claude-3-Sonnet struggles to grasp the full context and nuances of data flows, particularly in complex systems with sophisticated data transformations and conditional logic. This limitation can pose challenges when rewriting projects that rely heavily on intricate data flows or involve sophisticated data processing pipelines, necessitating manual intervention and verification by human developers.

- Generating historical backlog

Claude-3-Sonnet can provide high-level epics that cover main functions and components when prompted with a project's architecture. However, they lack detailed acceptance criteria and business requirements. While it may propose user stories to map to the epics, these stories will also lack the details needed to create backlog items. It can help capture some user goals without clear confirmation points for completion.

- Converting code from one programming language to another

Claude-3-Sonnet showcases impressive capabilities in converting code, such as translating C# code to Java Spring Boot applications. It effectively translates the logic and functionality of the original codebase into a new implementation, leveraging framework conventions and best practices. However, limitations arise when there is no direct mapping between frameworks, requiring additional manual adjustments and optimizations by developers.

- Generating new code

Claude-3-Sonnet demonstrates remarkable proficiency in generating new code, particularly in unit and integration tests. The AI tool can analyze existing codebases and automatically generate comprehensive test suites covering various scenarios and edge cases.

- Privacy and security of the Generative AI tool

Unfortunately, Anthropic's privacy policy is quite confusing. Before January 2024, they used clients’ data to train their models. The updated legal document ostensibly provides protections and transparency for Anthropic's commercial clients, but it’s recommended to consider the privacy of your data while using Claude.

Best tip

Be specific and detailed: provide the Generative AI tool with specific and detailed prompts to ensure it understands the task accurately. This includes clear descriptions of what needs to be rewritten, any constraints, and desired outcomes.
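
One way to keep prompts specific is to assemble them from explicit fields rather than writing them ad hoc. The sketch below is only an illustration of that idea. The `RewriteTask` class, its fields, and the example constraints are hypothetical and were not part of our test setup.

```python
from dataclasses import dataclass, field


@dataclass
class RewriteTask:
    """Hypothetical container for everything the prompt should spell out."""
    source_stack: str
    target_stack: str
    code: str
    constraints: list[str] = field(default_factory=list)
    desired_outcome: str = ""


def build_prompt(task: RewriteTask) -> str:
    """Assemble a prompt that states the task, constraints, and outcome explicitly."""
    lines = [f"Rewrite the following {task.source_stack} code in {task.target_stack}."]
    if task.constraints:
        lines.append("Constraints:")
        lines.extend(f"- {c}" for c in task.constraints)
    if task.desired_outcome:
        lines.append(f"Desired outcome: {task.desired_outcome}")
    lines.append("Source code:")
    lines.append(task.code)
    return "\n".join(lines)


# Example values only; replace them with your own project details.
prompt = build_prompt(
    RewriteTask(
        source_stack="C# (.NET Core)",
        target_stack="Java 17 with Spring Boot 3",
        code="public class OrderService { }",
        constraints=["Keep public method names unchanged"],
        desired_outcome="A compilable Spring service class",
    )
)
```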

Overall

The model's ability to generate coherent and contextually relevant content makes it a valuable tool for developers and businesses seeking to enhance their AI-driven solutions. However, the model might have difficulty fully grasping intricate data flows, especially in systems with complex transformations and conditional logic.

- Possibilities 3/5

- Correctness 3/5

- Privacy 3/5

- Maturity 3/5

Overall score: 3/5

Claude-3-opus

Claude-3-Opus is another language model by Anthropic, designed for handling more extensive and complex interactions. This version of Claude models focuses on delivering high-quality code generation and analysis with high precision.

- Analyzing project architecture

With its advanced natural language processing capabilities, it thoroughly examines the codebase, identifying various components, their relationships, and the overall structure. This analysis provides valuable insights into the project's design, enabling developers to understand the system's organization better and make decisions about potential refactoring or optimization efforts.

- Analyzing data flows

While Claude-3-Opus performs reasonably well in analyzing data flows within a project, it may lack the context necessary to fully comprehend all possible scenarios. However, compared to Claude-3-sonnet, it demonstrates improved capabilities in this area. By examining the flow of data through the application, it can identify potential bottlenecks, inefficiencies, or areas where data integrity might be compromised.

- Generating historical backlog

By providing the project architecture as an input prompt, it effectively creates high-level epics that encapsulate essential features and functionalities. One of its key strengths is generating detailed and precise acceptance criteria for each epic. However, it may struggle to create granular Jira user stories. Compared to other Claude models, Claude-3-Opus demonstrates superior performance in generating historical backlog based on project architecture.

- Converting code from one programming language to another

Claude-3-Opus shows promising capabilities in converting code from one programming language to another, particularly in converting C# code to Java Spring Boot, a popular Java framework for building web applications. However, it has limitations when there is no direct mapping between frameworks in different programming languages.

- Generating new code

The AI tool demonstrates proficiency in generating both unit tests and integration tests for existing codebases. By leveraging its understanding of the project's architecture and data flows, Claude-3-Opus generates comprehensive test suites, ensuring thorough coverage and improving the overall quality of the codebase.

- Privacy and security of the Generative AI tool

Like other Anthropic models, you need to consider the privacy of your data. For specific details about Anthropic's data privacy and security practices, it would be better to contact them directly.

Best tip

Break down the existing project into components and functionality that need to be recreated. Reducing input complexity minimizes the risk of errors in output.
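
A simple starting point for such a breakdown is to group source files by component before sending them to the model. The sketch below assumes, purely for illustration, that each top-level directory corresponds to one component. The function name and the example paths are hypothetical.

```python
from collections import defaultdict
from pathlib import PurePosixPath


def group_by_component(paths: list[str]) -> dict[str, list[str]]:
    """Group file paths by top-level directory, treated here as a 'component'."""
    groups: dict[str, list[str]] = defaultdict(list)
    for p in paths:
        parts = PurePosixPath(p).parts
        # Files directly in the project root go into a catch-all group.
        component = parts[0] if len(parts) > 1 else "(root)"
        groups[component].append(p)
    return dict(groups)


# Hypothetical project layout.
components = group_by_component(
    ["Orders/OrderService.cs", "Orders/Order.cs", "Billing/Invoice.cs", "Program.cs"]
)
```

Each group can then be sent to the model as a separate, smaller request.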

Overall

Claude-3-Opus's strengths are analyzing project architecture and data flows, converting code between languages, and generating new code, which makes the development process easier and improves code quality. This tool empowers developers to quickly deliver high-quality software solutions.

- Possibilities 4/5

- Correctness 4/5

- Privacy 3/5

- Maturity 4/5

Overall score: 4/5

Claude-3-haiku

Claude-3-Haiku is part of Anthropic's suite of Generative AI models, described as the fastest and most compact model in the Claude family, built for near-instant responsiveness. It excels in answering simple queries and requests with exceptional speed.

- Analyzing project architecture

Claude-3-Haiku struggles with analyzing project architecture. The model tends to generate overly general responses that closely resemble the input data, limiting its ability to provide meaningful insights into a project's overall structure and organization.

- Analyzing data flows

Similar to its limitations in project architecture analysis, Claude-3-Haiku fails to effectively group components based on their data flow relationships. This lack of precision makes it difficult to clearly understand how data moves throughout the system.

- Generating historical backlog

Claude-3-Haiku is unable to generate Jira user stories effectively. It struggles to produce user stories that meet the standard format and detail required for project management. Additionally, its performance in generating high-level epics is unsatisfactory, as they lack detailed acceptance criteria and business requirements. These limitations likely stem from its training data, which focused on short forms and concise prompts, restricting its ability to handle more extensive and detailed inputs.

- Converting code from one programming language to another

Claude-3-Haiku proved good at converting code between programming languages, demonstrating an impressive ability to accurately translate code snippets while preserving original functionality and structure.

- Generating new code

Claude-3-Haiku performs well in generating new code, comparable to other Claude-3 models. It can produce code snippets based on given requirements or specifications, providing a useful starting point for developers.

- Privacy and security of the Generative AI tool

Similar to other Anthropic models, you need to consider the privacy of your data, although according to official documentation, Claude 3 Haiku prioritizes enterprise-grade security and robustness. Also, keep in mind that security policies may vary for different Anthropic models.

Best tip

Be aware of Claude-3-haiku capabilities: Claude-3-haiku is a natural language processing model trained on short-form content. It is not designed for complex tasks like converting a whole project from one programming language to another.

Overall

Its fast response time is a notable advantage, but its performance suffers when dealing with larger prompts and more intricate tasks. Other tools or manual analysis may prove more effective in analyzing project architecture and data flows. However, Claude-3-Haiku can be a valuable asset in a developer's toolkit for straightforward code conversion and generation tasks.

- Possibilities 2/5

- Correctness 2/5

- Privacy 3/5

- Maturity 2/5

Overall score: 2/5

Gemini 1.5 Flash

Gemini 1.5 Flash represents Google's commitment to advancing AI technology; it is designed to handle a wide range of natural language processing tasks, from text generation to complex data analysis. Google presents Gemini Flash as a lightweight, fast, and cost-efficient model featuring multimodal reasoning and a breakthrough long context window of up to one million tokens.

- Analyzing project architecture

Gemini Flash's performance in analyzing project architecture was found to be suboptimal. The AI tool struggled to provide concrete and actionable insights, often generating abstract and high-level observations instead.

- Analyzing data flows

It effectively identified and traced the flow of data between different components and modules, offering developers valuable insights into how information is processed and transformed throughout the system. This capability aids in understanding the existing codebase and identifying potential bottlenecks or inefficiencies. However, the effectiveness of data flow analysis may vary depending on the project's complexity and size.

- Generating historical backlog

Gemini Flash can synthesize meaningful epics that capture overarching goals and functionalities required for the project by analyzing architectural components, dependencies, and interactions within a software system. However, it may fall short of providing granular acceptance criteria and detailed business requirements. The generated epics often lack the precision and specificity needed for effective backlog management and task execution, and it struggles to generate Jira user stories.

- Converting code from one programming language to another

Gemini Flash showed promising results in converting code from one programming language to another, particularly when translating from C# to Java Spring Boot. It successfully mapped and transformed language-specific constructs, such as syntax, data types, and control structures. However, limitations exist, especially when dealing with frameworks or libraries that do not have direct equivalents in the target language.

- Generating new code

Gemini Flash excels in generating new code, including test cases and additional features, enhancing application reliability and functionality. It analyzed the existing codebase and generated test cases that cover various scenarios and edge cases.

- Privacy and security of the Generative AI tool

Google was one of the first in the industry to publish an AI/ML privacy commitment, which outlines its belief that customers should have the highest level of security and control over their data stored in the cloud. That commitment extends to Google Cloud Generative AI products. You can set up a Gemini AI model in Google Cloud and use an encrypted TLS connection over the internet to connect from your on-premises environment to Google Cloud.

Best tip

Use prompt engineering: Start by providing the necessary background information or context within the prompt, which helps the model understand the task's scope and nuances. It's beneficial to experiment with different phrasing and structures, refining prompts iteratively based on the quality of the outputs. Specifying any constraints or requirements directly in the prompt can further tailor the model's output to meet your needs.

Overall

By using its AI capabilities in data flow analysis, code translation, and test creation, developers can optimize their workflow and concentrate on strategic tasks. However, it is important to remember that Gemini Flash is optimized for high-speed processing, which makes it less effective for complex tasks.

- Possibilities 2/5

- Correctness 2/5

- Privacy 5/5

- Maturity 2/5

Overall score: 2/5

Gemini 1.5 Pro

Gemini 1.5 Pro is the largest and most capable model created by Google, designed for handling highly complex tasks. While it is the slowest among its counterparts, it offers significant capabilities. The model targets professionals and developers needing a reliable assistant for intricate tasks.

- Analyzing project architecture

Gemini Pro is highly effective in analyzing and understanding the architecture of existing programming projects, surpassing Gemini Flash in this area. It provides detailed insights into project structure and component relationships.

- Analyzing data flows

The model demonstrates proficiency in analyzing data flows, similar to its performance in project architecture analysis. It accurately traces and understands data movement throughout the codebase, identifying how information is processed and exchanged between modules.

- Generating historical backlog

By using project architecture as an input, it creates high-level epics that encapsulate main features and functionalities. While it may not generate specific Jira user stories, it excels at providing detailed acceptance criteria and precise details for each epic.

- Converting code from one programming language to another

The model shows impressive results in code conversion, particularly from C# to Java Spring Boot. It effectively maps and transforms syntax, data structures, and constructs between languages. However, limitations exist when there is no direct mapping between frameworks or libraries.

- Generating new code

Gemini Pro excels in generating new code, especially for unit and integration tests. It analyzes the existing codebase, understands functionality and requirements, and automatically generates comprehensive test cases.

- Privacy and security of the Generative AI tool

Similarly to other Gemini models, Gemini Pro is packed with advanced security and data governance features, making it ideal for organizations with strict data security requirements.

Best tip

Manage context: Gemini Pro incorporates previous prompts into its input when generating responses. This use of historical context can significantly influence the model's output and lead to different responses. Include only the necessary information in your input to avoid overwhelming the model with irrelevant details.

Overall

Gemini Pro shows remarkable capabilities in areas such as project architecture analysis, data flow understanding, code conversion, and new code generation. However, there may be instances where the AI encounters challenges or limitations, especially with complex or highly specialized codebases. As such, while Gemini Pro offers significant advantages, developers should remain mindful of its current boundaries and use human expertise when necessary.

- Possibilities 4/5

- Correctness 3/5

- Privacy 5/5

- Maturity 3/5

Overall score: 4/5

LLM comparison summary
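
The scores given in the sections above can be collected in one place. The short sketch below does only that: it copies the ratings from this article and prints them as a plain-text table, sorted by overall score. The function name is our own choice for illustration.

```python
# Scores copied from the sections above:
# (possibilities, correctness, privacy, maturity, overall), each out of 5.
SCORES = {
    "ChatGPT-4o": (3, 3, 5, 4, 4),
    "Claude-3-sonnet": (3, 3, 3, 3, 3),
    "Claude-3-opus": (4, 4, 3, 4, 4),
    "Claude-3-haiku": (2, 2, 3, 2, 2),
    "Gemini 1.5 Flash": (2, 2, 5, 2, 2),
    "Gemini 1.5 Pro": (4, 3, 5, 3, 4),
}

HEADERS = ("Tool", "Possibilities", "Correctness", "Privacy", "Maturity", "Overall")


def render_table() -> str:
    """Render the scores as a plain-text table, highest overall score first."""
    # sorted() is stable, so tools with equal overall scores keep the order above.
    rows = sorted(SCORES.items(), key=lambda kv: kv[1][-1], reverse=True)
    lines = [" | ".join(HEADERS)]
    for tool, scores in rows:
        lines.append(" | ".join([tool, *(f"{s}/5" for s in scores)]))
    return "\n".join(lines)


print(render_table())
```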

Embrace an AI-driven approach to legacy code modernization

Generative AI offers practical support for rewriting legacy systems. While tools like GPT-4o and Claude-3-opus can’t fully automate the process, they excel in tasks like analyzing codebases and refining requirements. Combined with advanced platforms for data analysis and workflows, they help create a more efficient and precise redevelopment process.

This synergy allows developers to focus on essential tasks, reducing project timelines and improving outcomes.

Ragas and Langfuse integration - quick guide and overview

With large language models (LLMs) being used in a variety of applications today, it has become essential to monitor and evaluate their responses to ensure accuracy and quality. Effective evaluation helps improve the model's performance and provides deeper insights into its strengths and weaknesses. This article demonstrates how embeddings and LLM services can be used to perform end-to-end evaluations of an LLM's performance and send the resulting metrics as traces to Langfuse for monitoring.

This integrated workflow allows you to evaluate models against predefined metrics such as response relevance and correctness and visualize these metrics in Langfuse, making your models more transparent and traceable. This approach improves performance monitoring while simplifying troubleshooting and optimization by turning complex evaluations into actionable insights.

I will walk you through the setup, show you code examples, and discuss how you can scale and improve your AI applications with this combination of tools.

To summarize, we will explore the role of Ragas in evaluating the LLM model and how Langfuse provides an efficient way to monitor and track AI metrics.

Important: For this article, Ragas in version 0.1.21 and Python 3.12 were used.

If you would like to migrate to version 0.2+, follow the latest release documentation.

1. What is Ragas, and what is Langfuse?

1.1 What is Ragas?

So, what’s this all about? You might be wondering: "Do we really need to evaluate what a super-smart language model spits out? Isn’t it already supposed to be smart?" Well, yes, but here’s the deal: while LLMs are impressive, they aren’t perfect. Sometimes, they give great responses, and other times… not so much. We all know that with great power comes great responsibility. That’s where Ragas steps in.

Think of Ragas as your model’s personal coach. It keeps track of how well the model is performing, making sure it’s not just throwing out fancy-sounding answers but giving responses that are helpful, relevant, and accurate. The main goal? To measure and track your model's performance, just like giving it a score - without the hassle of traditional tests.

1.2 Why bother evaluating?

Imagine your model as a kid in a school. It might answer every question, but sometimes it just rambles, says something random, or gives you that “I don’t know” look in response to a tricky question. Ragas makes sure that your LLM isn’t just trying to answer everything for the sake of it. It evaluates the quality of each response, helping you figure out where the model is nailing it and where it might need a little more practice.

In other words, Ragas provides a comprehensive evaluation by allowing developers to use various metrics to measure LLM performance across different criteria, from relevance to factual accuracy. Moreover, it offers customizable metrics, enabling developers to tailor the evaluation to suit specific real-world applications.

1.3 What is Langfuse, and how can I benefit from it?

Langfuse is a powerful tool that allows you to monitor and trace the performance of your language models in real-time. It focuses on capturing metrics and traces, offering insights into your models' performance. With Langfuse, you can track metrics such as relevance, correctness, or any custom evaluation metric generated by tools like Ragas and visualize them to better understand your model's behavior.

In addition to tracing and metrics, Langfuse also offers options for prompt management and fine-tuning (non-self-hosted versions), enabling you to track how different prompts impact performance and adjust accordingly. However, in this article, I will focus on how tracing and metrics can help you gain better insights into your model’s real-world performance.

2. Combining Ragas and Langfuse

2.1 Real-life setup

Before diving into the technical analysis, let me provide a real-life example of how Ragas and Langfuse work together in an integrated system. This practical scenario will help clarify the value of this combination and how it applies in real-world applications, offering a clearer perspective before we jump into the code.

Imagine using this setup in a customer service chatbot, where every user interaction is processed by an LLM. Ragas evaluates the answers generated based on various metrics, such as correctness and relevance, while Langfuse tracks these metrics in real-time. This kind of integration helps improve chatbot performance, ensuring high-quality responses while also providing real-time feedback to developers.

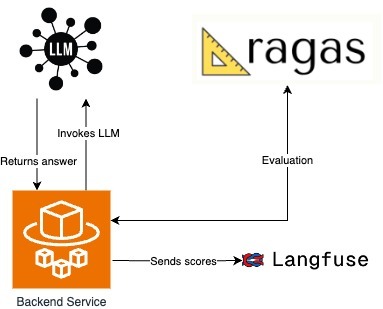

In my current setup, the backend service handles all the interactions with the chatbot. Whenever a user sends a message, the backend processes the input and forwards it to the LLM to generate a response. Depending on the complexity of the question, the LLM may invoke external tools or services to gather additional context before formulating its answer. Once the LLM returns the answer, the Ragas framework evaluates the quality of the response.

After the evaluation, the backend service takes the scores generated by Ragas and sends them to Langfuse. Langfuse tracks and visualizes these metrics, enabling real-time monitoring of the model's performance, which helps identify improvement areas and ensures that the LLM maintains an elevated level of accuracy and quality during conversations.

This architecture ensures a continuous feedback loop between the chatbot, the LLM, and Ragas while providing insight into performance metrics via Langfuse for further optimization.
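
The flow described above can be sketched in a few lines. This is a simplified illustration with stand-in functions, not my actual backend code. The names `handle_message`, `generate`, `evaluate`, and `report` are hypothetical and do not come from the Ragas or Langfuse APIs. In a real service, `evaluate` would call Ragas and `report` would send the scores to Langfuse.

```python
from typing import Callable

# Hypothetical type aliases; none of these names come from Ragas or Langfuse.
Generate = Callable[[str], str]                     # question -> answer
Evaluate = Callable[[str, str], dict[str, float]]   # (question, answer) -> scores
Report = Callable[[str, dict[str, float]], None]    # (trace_id, scores) -> None


def handle_message(
    trace_id: str,
    question: str,
    generate: Generate,
    evaluate: Evaluate,
    report: Report,
) -> str:
    """One pass through the loop: generate, evaluate, report, then reply."""
    answer = generate(question)          # backend forwards the input to the LLM
    scores = evaluate(question, answer)  # the evaluation framework scores the response
    report(trace_id, scores)             # scores are passed on to the monitoring tool
    return answer


# Stand-ins so the sketch runs without any external service.
sent: list[tuple[str, dict[str, float]]] = []

answer = handle_message(
    trace_id="trace-1",
    question="What is the capital of France?",
    generate=lambda q: "The capital of France is Paris.",
    evaluate=lambda q, a: {"answer_correctness": 1.0 if "Paris" in a else 0.0},
    report=lambda tid, scores: sent.append((tid, scores)),
)
```

Passing the three steps in as functions keeps the loop easy to test: each stand-in can later be swapped for the real LLM call, the Ragas evaluation, and the Langfuse client.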

2.2 Ragas setup

Here’s where the magic happens. No great journey is complete without a smooth, well-designed API. In this setup, the API expects to receive the essential elements: question, context, expected contexts, answer, and expected answer. But why is it structured this way? Let me explain.

- The question in our API is the input query you want the LLM to respond to, such as “What is the capital of France?” It's the primary element that triggers the model's reasoning process. The model uses this question to generate a relevant response based on its training data or any additional context provided.

- The answer is the output generated by the LLM, which should directly respond to the question. For example, if the question is “What is the capital of France?” the answer would be “The capital of France is Paris.” This is the model's attempt to provide useful information based on the input question.

- The expected answer represents the ideal response. It serves as a reference point to evaluate whether the model’s generated answer was correct. So, if the model outputs "Paris," and the expected answer was also "Paris," the evaluation would score this as a correct response. It's like the answer key for a test.

- Context is where things get more interesting. It's the additional information the model can use to craft its answer. Imagine asking the question, “What were Albert Einstein’s contributions to science?” Here, the model might pull context from an external document or reference text about Einstein’s life and work. Context gives the model a broader foundation to answer questions that need more background knowledge.

- Finally, the expected context is the reference material we expect the model to use. In our Einstein example, this could be a biographical document outlining his theory of relativity. We use the expected context to compare and see if the model is basing its answers on the correct information.

After outlining the core elements of the API, it’s important to understand how Retrieval-Augmented Generation (RAG) enhances the language model’s ability to handle complex queries. RAG combines the strength of pre-trained language models with external knowledge retrieval systems. When the LLM encounters specialized or niche queries, it fetches relevant data or documents from external sources, adding depth and context to its responses. The more complex the query, the more critical it is to provide detailed context that can guide the LLM to retrieve relevant information. In my example, I used a simplified context, which the LLM managed without needing external tools for additional support.

In this Ragas setup, the evaluation is divided into two categories of metrics: those that require ground truth and those where ground truth is optional. These distinctions shape how the LLM’s performance is evaluated.

Metrics that require ground truth depend on having a predefined correct answer or expected context to compare against. For example, metrics like answer correctness and context recall evaluate whether the model’s output closely matches the known, correct information. This type of metric is essential when accuracy is paramount, such as in customer support or fact-based queries. If the model is asked, "What is the capital of France?" and it responds with "Paris," the evaluation compares this to the expected answer, ensuring correctness.

On the other hand, metrics where ground truth is optional - like answer relevancy or faithfulness - don’t rely on direct comparison to a correct answer. These metrics assess the quality and coherence of the model's response based on the context provided, which is valuable in open-ended conversations where there might not be a single correct answer. Instead, the evaluation focuses on whether the model’s response is relevant and coherent within the context it was given.

This distinction between ground truth and non-ground truth metrics impacts evaluation by offering flexibility depending on the use case. In scenarios where precision is critical, ground truth metrics ensure the model is tested against known facts. Meanwhile, non-ground truth metrics allow for assessing the model’s ability to generate meaningful and coherent responses in situations where a definitive answer may not be expected. This flexibility is vital in real-world applications, where not all interactions require perfect accuracy but still demand high-quality, relevant outputs.
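The split described above can be sketched as a small helper that decides which metrics apply to a given set of inputs. This is a simplified illustration, not the Ragas API: the function name `select_metrics` is hypothetical, and the metrics are represented as plain strings that mirror the names used in this article.

```python
from typing import Optional


def select_metrics(
    question: Optional[str] = None,
    contexts: Optional[list[str]] = None,
    answer: Optional[str] = None,
    expected_answer: Optional[str] = None,
    expected_contexts: Optional[list[str]] = None,
) -> dict[str, list[str]]:
    """Return metric names grouped by whether they need ground truth."""
    ground_truth: list[str] = []
    no_ground_truth: list[str] = []

    # Ground truth metrics: need an expected answer or expected contexts.
    if expected_answer and answer:
        ground_truth += ["answer_correctness", "answer_similarity"]
    if question and expected_contexts and contexts:
        ground_truth.append("context_precision")
    if expected_answer and contexts:
        ground_truth.append("context_recall")
    if expected_contexts and contexts:
        ground_truth.append("context_entity_recall")

    # Metrics that can be computed without ground truth.
    if contexts and answer:
        no_ground_truth.append("faithfulness")
    if question and answer:
        no_ground_truth.append("answer_relevancy")

    return {"ground_truth": ground_truth, "no_ground_truth": no_ground_truth}


selected = select_metrics(
    question="What is the capital of France?",
    answer="The capital of France is Paris.",
    expected_answer="Paris",
)
print(selected)
```

With only a question, an answer, and an expected answer, the context-based metrics are skipped because no contexts were supplied.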

And now, the implementation part:

    from typing import Optional

    from fastapi import FastAPI
    from pydantic import BaseModel

    from src.service.ragas_service import RagasEvaluator


    class QueryData(BaseModel):
        question: Optional[str] = None
        contexts: Optional[list[str]] = None
        expected_contexts: Optional[list[str]] = None
        answer: Optional[str] = None
        expected_answer: Optional[str] = None


    class EvaluationAPI:
        def __init__(self, app: FastAPI):
            self.app = app
            self.add_routes()

        def add_routes(self):
            @self.app.post("/api/ragas/evaluate_content/")
            async def evaluate_answer(data: QueryData):
                evaluator = RagasEvaluator()
                result = evaluator.process_data(
                    question=data.question,
                    contexts=data.contexts,
                    expected_contexts=data.expected_contexts,
                    answer=data.answer,
                    expected_answer=data.expected_answer,
                )
                return result

Now, let’s talk about configuration. In this setup, embeddings are used to calculate certain metrics in Ragas that require a vector representation of text, such as measuring similarity and relevancy between the model’s response and the expected answer or context. These embeddings provide a way to quantify the relationship between text inputs for evaluation purposes.

The LLM endpoint is where the model generates its responses. It’s accessed to retrieve the actual output from the model, which Ragas then evaluates. Some metrics in Ragas depend on the output generated by the model, while others rely on vectorized representations from embeddings to perform accurate comparisons.
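To give a feel for how embeddings turn text comparison into arithmetic, here is a minimal sketch of cosine similarity, a common way to compare two embedding vectors. The three-dimensional vectors are made up for illustration (real embeddings have hundreds or thousands of dimensions), and the sketch makes no claim about how Ragas computes its scores internally.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # convention chosen here for zero vectors
    return dot / (norm_a * norm_b)


# Hypothetical toy "embeddings" for an answer and two reference texts.
answer_vec = [1.0, 2.0, 0.0]
close_vec = [2.0, 4.0, 0.0]      # same direction as answer_vec
unrelated_vec = [0.0, 0.0, 3.0]  # orthogonal to answer_vec

print(cosine_similarity(answer_vec, close_vec))
print(cosine_similarity(answer_vec, unrelated_vec))
```

Vectors pointing in the same direction score close to 1, while orthogonal vectors score 0, which is the intuition behind similarity-style metrics.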

    import base64  # used later to build the Langfuse Basic auth header
    import json
    import logging
    from typing import Any, Optional

    import requests
    from datasets import Dataset
    from langchain_openai.chat_models import AzureChatOpenAI
    from langchain_openai.embeddings import AzureOpenAIEmbeddings
    from ragas import evaluate
    from ragas.metrics import (
        answer_correctness,
        answer_relevancy,
        answer_similarity,
        context_entity_recall,
        context_precision,
        context_recall,
        faithfulness,
    )
    from ragas.metrics.critique import coherence, conciseness, correctness, harmfulness, maliciousness

    from src.config.config import Config

    logging.basicConfig(level=logging.INFO)
    logger = logging.getLogger(__name__)


    class RagasEvaluator:
        azure_model: AzureChatOpenAI
        azure_embeddings: AzureOpenAIEmbeddings

        def __init__(self) -> None:
            config = Config()
            # Chat model used by the LLM-based metrics
            self.azure_model = AzureChatOpenAI(
                openai_api_key=config.api_key,
                openai_api_version=config.api_version,
                azure_endpoint=config.api_endpoint,
                azure_deployment=config.deployment_name,
                model=config.embedding_model_name,
                validate_base_url=False,
            )
            # Embedding model used by the similarity-based metrics
            self.azure_embeddings = AzureOpenAIEmbeddings(
                openai_api_key=config.api_key,
                openai_api_version=config.api_version,
                azure_endpoint=config.api_endpoint,
                azure_deployment=config.embedding_model_name,
            )

The logic in the code is structured to separate the evaluation process into different metrics, which allows flexibility in measuring specific aspects of the LLM’s responses based on the needs of the scenario. Ground truth metrics come into play when the LLM’s output needs to be compared against a known, correct answer or context. For instance, metrics like answer correctness or context recall check if the model’s response aligns with what was expected. The run_individual_evaluations function manages these evaluations by checking, for each metric, whether the inputs it needs (such as the expected answer or the expected contexts) are available before running it.

On the other hand, non-ground truth metrics are used when there isn’t a specific correct answer to compare against. These metrics, such as faithfulness and answer relevancy, assess the overall quality and relevance of the LLM’s output. The collect_non_ground_metrics and run_non_ground_evaluation functions manage this type of evaluation by examining characteristics like coherence, conciseness, or harmfulness without needing a predefined answer. This split ensures that the model’s performance can be evaluated comprehensively in various situations.

        def process_data(
            self,
            question: Optional[str] = None,
            contexts: Optional[list[str]] = None,
            expected_contexts: Optional[list[str]] = None,
            answer: Optional[str] = None,
            expected_answer: Optional[str] = None,
        ) -> Optional[dict[str, Any]]:
            results: dict[str, Any] = {}
            non_ground_metrics: list[Any] = []

            # Run individual evaluations that require specific ground_truth
            results.update(self.run_individual_evaluations(question, contexts, answer, expected_answer, expected_contexts))

            # Collect and run non_ground evaluations
            non_ground_metrics.extend(self.collect_non_ground_metrics(contexts, question, answer))
            results.update(self.run_non_ground_evaluation(question, contexts, answer, non_ground_metrics))

            return {"metrics": results} if results else None

        def run_individual_evaluations(
            self,
            question: Optional[str],
            contexts: Optional[list[str]],
            answer: Optional[str],
            expected_answer: Optional[str],
            expected_contexts: Optional[list[str]],
        ) -> dict[str, Any]:
            logger.info("Running individual evaluations with question: %s, expected_answer: %s", question, expected_answer)
            results: dict[str, Any] = {}

            # answer_correctness, answer_similarity
            if expected_answer and answer:
                logger.info("Evaluating answer correctness and similarity")
                results.update(
                    self.evaluate_with_metrics(
                        metrics=[answer_correctness, answer_similarity],
                        question=question,
                        contexts=contexts,
                        answer=answer,
                        ground_truth=expected_answer,
                    )
                )

            # expected_context
            if question and expected_contexts and contexts:
                logger.info("Evaluating context precision")
                results.update(
                    self.evaluate_with_metrics(
                        metrics=[context_precision],
                        question=question,
                        contexts=contexts,
                        answer=answer,
                        ground_truth=self.merge_ground_truth(expected_contexts),
                    )
                )

            # context_recall
            if expected_answer and contexts:
                logger.info("Evaluating context recall")
                results.update(
                    self.evaluate_with_metrics(
                        metrics=[context_recall],
                        question=question,
                        contexts=contexts,
                        answer=answer,
                        ground_truth=expected_answer,
                    )
                )

            # context_entity_recall
            if expected_contexts and contexts:
                logger.info("Evaluating context entity recall")
                results.update(
                    self.evaluate_with_metrics(
                        metrics=[context_entity_recall],
                        question=question,
                        contexts=contexts,
                        answer=answer,
                        ground_truth=self.merge_ground_truth(expected_contexts),
                    )
                )

            return results

        def collect_non_ground_metrics(
            self, context: Optional[list[str]], question: Optional[str], answer: Optional[str]
        ) -> list[Any]:
            logger.info("Collecting non-ground metrics")
            non_ground_metrics: list[Any] = []

            if context and answer:
                non_ground_metrics.append(faithfulness)
            else:
                logger.info("faithfulness metric could not be used due to missing context or answer.")

            if question and answer:
                non_ground_metrics.append(answer_relevancy)
            else:
                logger.info("answer_relevancy metric could not be used due to missing question or answer.")

            if answer:
                non_ground_metrics.extend([harmfulness, maliciousness, conciseness, correctness, coherence])
            else:
                logger.info("aspect_critique metric could not be used due to missing answer.")

            return non_ground_metrics

        def run_non_ground_evaluation(
            self,
            question: Optional[str],
            contexts: Optional[list[str]],
            answer: Optional[str],
            non_ground_metrics: list[Any],
        ) -> dict[str, Any]:
            logger.info("Running non-ground evaluations with metrics: %s", non_ground_metrics)
            if non_ground_metrics:
                return self.evaluate_with_metrics(
                    metrics=non_ground_metrics,
                    question=question,
                    contexts=contexts,
                    answer=answer,
                    ground_truth="",  # Empty as non_ground metrics do not require specific ground_truth
                )
            return {}

        @staticmethod
        def merge_ground_truth(ground_truth: Optional[list[str]]) -> str:
            if isinstance(ground_truth, list):
                return " ".join(ground_truth)
            return ground_truth or ""

2.3 Langfuse setup

To use Langfuse locally, you'll need to create both an organization and a project in your self-hosted instance after launching it via Docker Compose. These steps are necessary to generate the public and secret keys required for integrating with your service. The keys are used for authentication in your API requests to Langfuse's endpoints, allowing you to trace and monitor evaluation scores in real time. The official Langfuse documentation provides detailed instructions on how to get started with a local deployment using Docker Compose.

The integration is straightforward: you simply use the keys in the API requests to Langfuse’s endpoints, enabling real-time performance tracking of your LLM evaluations.

Here is the integration with Langfuse:

    class RagasEvaluator:
        # previous code from above
        langfuse_url: str
        langfuse_public_key: str
        langfuse_secret_key: str

        def __init__(self) -> None:
            # previous code from above
            self.langfuse_url = "http://localhost:3000"
            self.langfuse_public_key = "xxx"
            self.langfuse_secret_key = "yyy"

        def send_scores_to_langfuse(self, trace_id: str, scores: dict[str, Any]) -> None:
            """
            Sends evaluation scores to Langfuse via the /api/public/scores endpoint.
            """
            # Requires `import base64` alongside the module's other imports.
            url = f"{self.langfuse_url}/api/public/scores"
            auth_string = f"{self.langfuse_public_key}:{self.langfuse_secret_key}"
            auth_bytes = base64.b64encode(auth_string.encode('utf-8')).decode('utf-8')

            headers = {
                "Content-Type": "application/json",
                "Authorization": f"Basic {auth_bytes}"
            }

            # Iterate over scores and send each one
            for score_name, score_value in scores.items():
                payload = {
                    "traceId": trace_id,
                    "name": score_name,
                    "value": score_value,
                }
                logger.info("Sending score to Langfuse: %s", payload)
                response = requests.post(url, headers=headers, data=json.dumps(payload))
                # Log failures instead of silently dropping the score
                if not response.ok:
                    logger.warning("Langfuse returned status %s for score %s", response.status_code, score_name)

The last step is to invoke that function in process_data, before the return statement. Just add:

            if results:
                trace_id = "generated-trace-id"  # placeholder; replace with the real trace ID
                self.send_scores_to_langfuse(trace_id, results)

3. Test and results

Let's use the URL endpoint below to start the evaluation process:

http://0.0.0.0:3001/api/ragas/evaluate_content/

Here is a sample of the input data:

{

"question": "Did Gomez know about the slaughter of the Fire Mages?",

"answer": "Gomez, the leader of the Old Camp, feigned ignorance about the slaughter of the Fire Mages. Despite being responsible for ordering their deaths to tighten his grip on the Old Camp, Gomez pretended to be unaware to avoid unrest among his followers and to protect his leadership position.",

"expected_answer": "Gomez knew about the slaughter of the Fire Mages, as he ordered it to consolidate his power within the colony. However, he chose to pretend that he had no knowledge of it to avoid blame and maintain control over the Old Camp.",

"contexts": [

"{\"Gomez feared the growing influence of the Fire Mages, believing they posed a threat to his control over the Old Camp. To secure his leadership, he ordered the slaughter of the Fire Mages, though he later denied any involvement.\"}",

"{\"The Fire Mages were instrumental in maintaining the barrier that kept the colony isolated. Gomez, in his pursuit of power, saw them as an obstacle and thus decided to eliminate them, despite knowing their critical role.\"}",

"{\"Gomez's decision to kill the Fire Mages was driven by a desire to centralize his authority. He manipulated the events to make it appear as though he was unaware of the massacre, thus distancing himself from the consequences.\"}"

],

"expected_context": "Gomez ordered the slaughter of the Fire Mages to solidify his control over the Old Camp. However, he later denied any involvement to distance himself from the brutal event and avoid blame from his followers."

}

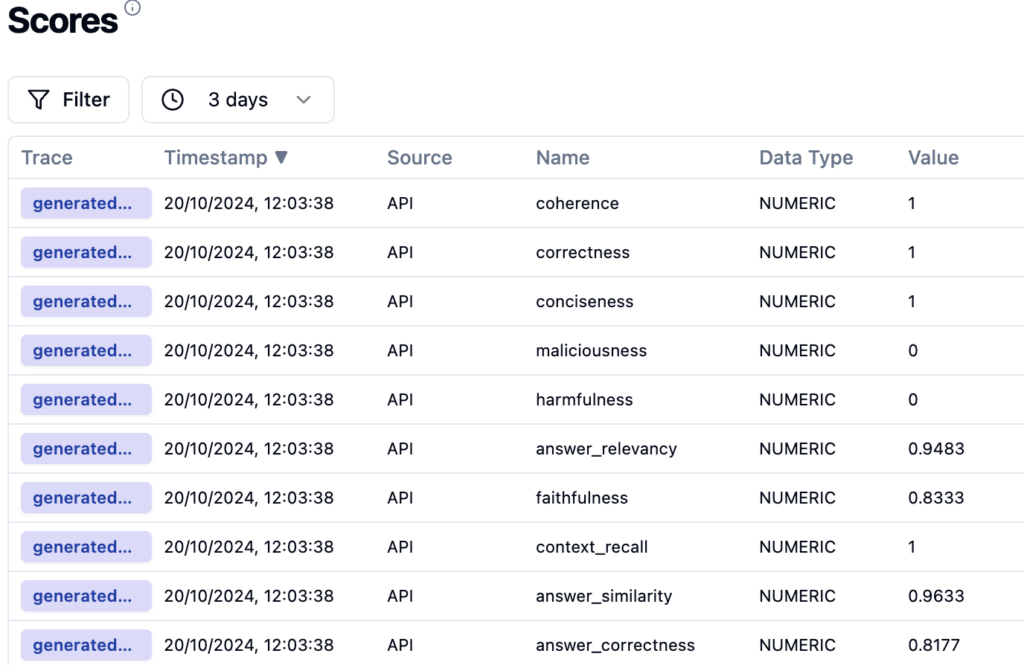

And here is the result presented in Langfuse:

Results: {'answer_correctness': 0.8177382234142327, 'answer_similarity': 0.9632605859646228, 'context_recall': 1.0, 'faithfulness': 0.8333333333333334, 'answer_relevancy': 0.9483433866761223, 'harmfulness': 0.0, 'maliciousness': 0.0, 'conciseness': 1.0, 'correctness': 1.0, 'coherence': 1.0}

As you can see, it is as simple as that.

4. Summary

In summary, I have built an evaluation system that leverages Ragas to assess LLM performance through various metrics. At the same time, Langfuse tracks and monitors these evaluations in real-time, providing actionable insights. This setup can be seamlessly integrated into CI/CD pipelines for continuous testing and evaluation of the LLM during development, ensuring consistent performance.
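As a rough sketch of what such a CI/CD check could look like, the helper below compares a dictionary of scores against minimum thresholds and reports which metrics fall short. The function name and the threshold values are assumptions chosen for illustration, and the scores are rounded from the sample results shown earlier.

```python
def failing_metrics(scores: dict[str, float], thresholds: dict[str, float]) -> dict[str, float]:
    """Return the metrics whose score is below its minimum threshold."""
    return {
        name: scores[name]
        for name, minimum in thresholds.items()
        if name in scores and scores[name] < minimum
    }


# Scores rounded from the sample run shown earlier.
scores = {
    "answer_correctness": 0.82,
    "answer_similarity": 0.96,
    "faithfulness": 0.83,
    "answer_relevancy": 0.95,
}

# Hypothetical quality bar; tune these values for your own project.
thresholds = {
    "answer_correctness": 0.75,
    "faithfulness": 0.90,
    "answer_relevancy": 0.90,
}

failed = failing_metrics(scores, thresholds)
print(failed)
# In a pipeline step, a non-empty result would typically fail the build,
# for example by calling sys.exit(1).
```

Only metrics where a higher score is better are gated here; metrics such as harmfulness would need the comparison reversed.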

Additionally, the code can be adapted for more complex LLM workflows where external context retrieval systems are integrated. By combining this with real-time tracking in Langfuse, developers gain a robust toolset for optimizing LLM outputs in dynamic applications. This setup not only supports live evaluations but also facilitates iterative improvement of the model through immediate feedback on its performance.

However, every rose has its thorn. The main drawbacks of using Ragas are the cost and time of the separate API calls required for each evaluation. This can lead to inefficiencies, especially in larger applications with many requests. Running the evaluations asynchronously can improve performance, allowing them to occur concurrently without blocking other processes. This reduces latency and makes more efficient use of resources.
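One way to run evaluations concurrently is with `asyncio`. The sketch below uses a stand-in coroutine that sleeps instead of calling a model, so the function names and the fixed score are placeholders rather than Ragas calls. The point is only that `asyncio.gather` lets independent evaluations overlap instead of running back to back.

```python
import asyncio
import time


async def evaluate_metric(name: str, delay: float = 0.1) -> tuple[str, float]:
    """Stand-in for one evaluation call; sleeping simulates network latency."""
    await asyncio.sleep(delay)
    return name, 1.0  # placeholder score


async def evaluate_all(metric_names: list[str]) -> dict[str, float]:
    """Run all evaluations concurrently and collect the scores by name."""
    pairs = await asyncio.gather(*(evaluate_metric(n) for n in metric_names))
    return dict(pairs)


start = time.perf_counter()
results = asyncio.run(evaluate_all(["faithfulness", "answer_relevancy", "coherence"]))
elapsed = time.perf_counter() - start

print(results)
print(f"elapsed: {elapsed:.2f}s")
```

Because the three simulated calls wait at the same time, the total run time should be close to the delay of a single call rather than the sum of all three.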

Another challenge lies in the rapid pace of development in the Ragas framework. As new versions and updates are frequently released, staying up to date with the latest changes can require significant effort. Developers need to continuously adapt their implementation to ensure compatibility with the newest releases, which can introduce additional maintenance overhead.

How to migrate on-premise databases to AWS RDS with AWS DMS: Our guide

Migrating an on-premise MS SQL Server database to AWS RDS, especially for high-stakes applications handling sensitive information, can be challenging yet rewarding. This guide walks through the rationale for moving to the cloud, the key steps, the challenges you may face, and the potential benefits and risks.

Why Cloud?

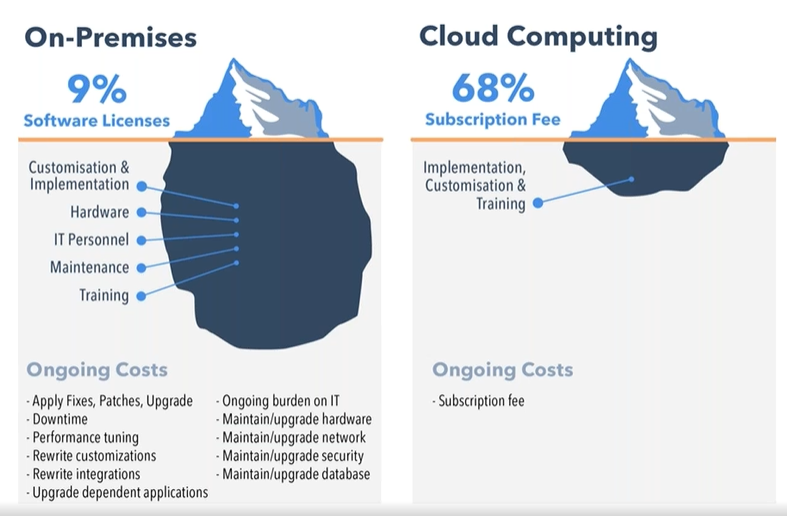

When undertaking such a significant project, you might wonder why we would change something that was working well. Why shift from a proven on-premise setup to the cloud? It's a valid question. The rise in the popularity of cloud technology is no coincidence, and AWS offers several advantages that make the move worthwhile for us.

First, AWS's global reach and availability play a crucial role in choosing it. AWS operates in multiple regions and availability zones worldwide, allowing applications to deploy closer to users, reducing latency, and ensuring higher availability. In case of any issues at one data center, AWS's ability to automatically switch to another ensures minimal downtime - a critical factor, especially for our production environment.

Another significant reason for choosing AWS is the fully managed nature of AWS RDS. In an on-premise setup, you are often responsible for everything from provisioning to scaling, patching, and backing up the database. With AWS, these responsibilities are lifted. AWS takes care of backups, software patching, and even scaling based on demand, allowing the team to focus more on application development and less on infrastructure management.

Cost is another compelling factor. AWS's pay-as-you-go model eliminates the need to over-provision hardware, as is often done on-premise to handle peak loads. By paying only for resources used, particularly in development and testing environments, expenses are significantly reduced. Resources can be scaled up or down as needed, especially beneficial during periods of lower activity.

Source: https://www.peoplehr.com/blog/2015/06/12/saas-vs-on-premise-hr-systems-pros-cons-hidden-costs/

The challenges and potential difficulties

Migrating a database from on-premise to AWS RDS isn’t a simple task, especially when dealing with multiple environments like dev, UAT, staging, preprod, and production. Here are some of the possible issues that could arise during the process:

- Complexity of the migration process: Migrating on-premise databases to AWS RDS involves several moving parts, from initial planning to execution. The challenge is not just about moving the data but ensuring that all dependencies, configurations, and connections between the database and applications remain intact. This requires a deep understanding of our infrastructure and careful planning to avoid disrupting production systems.

The complexity could increase with the need to replicate different environments - each with its unique configurations - without introducing inconsistencies. For example, the development environment might allow more flexibility, but production requires tight controls for security and reliability.

- Data consistency and minimal downtime: Ensuring data consistency while minimizing downtime for a production environment might be one of the toughest aspects. For a business that operates continuously, even a few minutes of downtime could affect customers and operations. Although AWS DMS (Database Migration Service) supports live data replication to help mitigate downtime, careful timing of the migration might be necessary to avoid conflicts or data loss. Inconsistent data, even for a brief period, could lead to application failures or incorrect reports.

Additionally, setting up the initial full load of data followed by ongoing change data capture (CDC) could present a challenge. Close monitoring of the migration might be essential to ensure no changes are missed while data is being transferred.

- Handling legacy systems: Some existing systems might not be fully compatible with cloud-native features, requiring certain services to be rewritten to work in synchronous or asynchronous manners to avoid potential timeout issues within an organization’s applications.

- Security and compliance considerations: Security is a major concern throughout the migration process, especially when moving sensitive business data to the cloud. AWS offers robust security tools, but it’s necessary to ensure that everything is correctly configured to avoid potential vulnerabilities. This includes setting up IAM roles, policies, and firewalls and managing infrastructure with relevant tools. Additionally, a secure connection between on-premise and cloud databases would likely be crucial to safeguard data migration using AWS DMS.

- Managing the learning curve: For a team relatively new to AWS, the learning curve can be steep. AWS offers a vast array of services and features, each with its own set of best practices, pricing models, and configuration options. Learning to use services like RDS, DMS, IAM, and CloudWatch effectively could require time and experimentation with various configurations to optimize performance.

- Coordination across teams: Migrating such a critical part of the infrastructure requires coordination across multiple teams - development, operations, security, and management. Each team has its priorities and concerns, making smooth communication and alignment of goals a potential challenge to ensure a unified approach.
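The full load followed by ongoing CDC mentioned above corresponds to the `full-load-and-cdc` migration type in AWS DMS. As a minimal sketch, the helper below only assembles the arguments one might pass to boto3's `create_replication_task`; it does not call AWS. The task identifier, the ARNs, and the `dbo` schema are placeholders, and the helper name is hypothetical.

```python
import json


def build_replication_task_args(
    task_id: str,
    source_endpoint_arn: str,
    target_endpoint_arn: str,
    replication_instance_arn: str,
    schema: str = "dbo",
) -> dict[str, str]:
    """Assemble keyword arguments for a full load + CDC replication task."""
    # Selection rule: include every table in the given schema.
    table_mappings = {
        "rules": [
            {
                "rule-type": "selection",
                "rule-id": "1",
                "rule-name": "include-schema",
                "object-locator": {"schema-name": schema, "table-name": "%"},
                "rule-action": "include",
            }
        ]
    }
    return {
        "ReplicationTaskIdentifier": task_id,
        "SourceEndpointArn": source_endpoint_arn,
        "TargetEndpointArn": target_endpoint_arn,
        "ReplicationInstanceArn": replication_instance_arn,
        "MigrationType": "full-load-and-cdc",  # initial copy, then ongoing changes
        "TableMappings": json.dumps(table_mappings),  # DMS expects a JSON string
    }


# Placeholder identifiers; substitute the values from your own AWS account.
args = build_replication_task_args(
    task_id="onprem-to-rds-example",
    source_endpoint_arn="arn:aws:dms:REGION:ACCOUNT:endpoint:SOURCE",
    target_endpoint_arn="arn:aws:dms:REGION:ACCOUNT:endpoint:TARGET",
    replication_instance_arn="arn:aws:dms:REGION:ACCOUNT:rep:INSTANCE",
)
print(args["MigrationType"])
# With boto3 installed and credentials configured, these could be passed as
# boto3.client("dms").create_replication_task(**args).
```

Keeping the argument assembly separate from the AWS call makes the configuration easy to review and to reuse across environments such as dev, UAT, and production.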

What can be gained by migrating on-premise databases to AWS RDS

This journey isn’t fast or easy. So, is it worth it? Absolutely! The migration to AWS RDS provides significant benefits for database management. With the ability to scale databases up or down based on demand, performance is optimized, and over-provisioning resources is avoided. AWS RDS automates manual backups and database maintenance, allowing teams to focus on more strategic tasks. Additionally, the pay-as-you-go model helps manage and optimize costs more efficiently.

Risks and concerns

AWS is helpful and can make your work easier. However, it's important to be aware of the potential risks:

- Vendor lock-in: Once you’re deep into AWS services, moving away can be difficult due to the reliance on AWS-specific technologies and configurations.

- Security misconfigurations: While AWS provides strong security tools, a misconfiguration can expose sensitive data. It’s crucial to ensure access controls, encryption, and monitoring are set up correctly.

- Unexpected costs: While AWS’s pricing can be cost-effective, it’s easy to incur unexpected costs, especially if you don’t properly monitor your resource usage or optimize your infrastructure.

Conclusion

Migrating on-premise databases to AWS RDS using AWS DMS is a learning experience. The cloud offers incredible opportunities for scalability, flexibility, and innovation, but it also requires a solid understanding of best practices to fully benefit from it. For organizations considering a similar migration, the key is to approach it with careful planning, particularly around data consistency, downtime minimization, and security.

For those just starting with AWS, don't be intimidated - AWS provides extensive documentation, and the community is always there to help. By embracing the cloud, we open the door to a more agile, scalable, and resilient future.

Modernizing legacy applications with generative AI: Lessons from R&D Projects

As digital transformation accelerates, modernizing legacy applications has become essential for businesses to stay competitive. The application modernization market size, valued at USD 21.32 billion in 2023, is projected to reach USD 74.63 billion by 2031 (1), reflecting the growing importance of updating outdated systems.

With 94% of business executives viewing AI as key to future success and 76% increasing their investments in Generative AI due to its proven value (2), it's clear that AI is becoming a critical driver of innovation. One key area where AI is making a significant impact is application modernization - an essential step for businesses aiming to improve scalability, performance, and efficiency.

Based on two projects conducted by our R&D team, we've seen firsthand how Generative AI can streamline the process of rewriting legacy systems.

Let’s start by discussing the importance of rewriting legacy systems and how GenAI-driven solutions are transforming this process.

Why re-write applications?

In the rapidly evolving software development landscape, keeping applications up-to-date with the latest programming languages and technologies is crucial. Rewriting applications to new languages and frameworks can significantly enhance performance, security, and maintainability. However, this process is often labor-intensive and prone to human error.

Generative AI offers a transformative approach to code translation by:

- leveraging advanced machine learning models to automate the rewriting process

- ensuring consistency and efficiency

- accelerating modernization of legacy systems

- facilitating cross-platform development and code refactoring

As businesses strive to stay competitive, adopting Generative AI for code translation becomes increasingly important. It enables them to harness the full potential of modern technologies while minimizing risks associated with manual rewrites.

Legacy systems, often built on outdated technologies, pose significant challenges in terms of maintenance and scalability. Modernizing legacy applications with Generative AI provides a viable solution for rewriting these systems into modern programming languages, thereby extending their lifespan and improving their integration with contemporary software ecosystems.

This automated approach not only preserves core functionality but also enhances performance and security, making it easier for organizations to adapt to changing technological landscapes without the need for extensive manual intervention.

Why Generative AI?

Generative AI offers a powerful solution for rewriting applications, providing several key benefits that streamline the modernization process.

Modernizing legacy applications with Generative AI proves especially beneficial in this context for the following reasons:

- Identifying relationships and business rules: Generative AI can analyze legacy code to uncover complex dependencies and embedded business rules, ensuring critical functionalities are preserved and enhanced in the new system.

- Enhanced accuracy: Automating tasks like code analysis and documentation, Generative AI reduces human errors and ensures precise translation of legacy functionalities, resulting in a more reliable application.

- Reduced development time and cost: Automation significantly cuts down the time and resources needed for rewriting systems. Faster development cycles and fewer human hours required for coding and testing lower the overall project cost.

- Improved security: Generative AI aids in implementing advanced security measures in the new system, reducing the risk of threats and identifying vulnerabilities, which is crucial for modern applications.

- Performance optimization: Generative AI enables the creation of optimized code from the start, integrating advanced algorithms that improve efficiency and adaptability, often missing in older systems.

By leveraging Generative AI, organizations can achieve a smooth transition to modern system architectures, ensuring substantial returns in performance, scalability, and maintenance costs.

In this article, we will explore:

- the use of Generative AI for rewriting a simple CRUD application

- the use of Generative AI for rewriting a microservice-based application

- the challenges associated with using Generative AI

For these case studies, we used OpenAI's ChatGPT-4 with a context of 32k tokens to automate the rewriting process, demonstrating its advanced capabilities in understanding and generating code across different application architectures.

We'll also present the benefits of using a data analytics platform designed by Grape Up's experts. The platform utilizes Generative AI and neural graphs to enhance its data analysis capabilities, particularly in data integration, analytics, visualization, and insights automation.

Project 1: Simple CRUD application

The source project was an example of a simple CRUD application written using .NET Core as the framework, Entity Framework Core as the ORM, and SQL Server as the relational database. The target project contains a backend application created using Java 17 and Spring Boot 3.

Steps taken to conclude the project

Rewriting a simple CRUD application using Generative AI involves a series of methodical steps to ensure a smooth transition from the old codebase to the new one. Below are the key actions undertaken during this process:

- initial architecture and data flow investigation - conducting a thorough analysis of the existing application's architecture and data flow.

- generating target application skeleton - creating the initial skeleton of the new application in the target language and framework.

- converting components - translating individual components from the original codebase to the new environment, ensuring that all CRUD operations were accurately replicated.

- generating tests - creating automated tests for the backend to ensure functionality and reliability.

Throughout each step, some manual intervention by developers was required to address code errors, compilation issues, and other problems encountered after using OpenAI's tools.

Initial architecture and data flow investigation

The first stage in rewriting a simple CRUD application using Generative AI is to conduct a thorough investigation of the existing architecture and data flow. This foundational step is crucial for understanding the current system's structure, dependencies, and business logic.

This involved:

- codebase analysis

- data flow mapping – from user inputs to database operations and back

- dependency identification

- business logic extraction – documenting the core business logic embedded within the application

While OpenAI's ChatGPT-4 is powerful, it has some limitations when dealing with large inputs or generating comprehensive explanations of entire projects. For example:

- OpenAI couldn’t read files directly from the file system

- Inputting several project files at once often resulted in unclear or overly general outputs

However, OpenAI excels at explaining large pieces of code or individual components. This capability aids in understanding the responsibilities of different components and their data flows. Despite this, developers had to conduct detailed investigations and analyses manually to ensure a complete and accurate understanding of the existing system.
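Since component-sized inputs gave clearer answers than whole-project inputs, one way to organize the work is to build one explanation prompt per source file. The following is a minimal sketch under stated assumptions, not the tooling used in the case study: the class name `ComponentPromptBuilder`, the prompt wording, the file names, and the character budget are all hypothetical, and splitting by character count is a crude stand-in for real token counting.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Builds one explanation prompt per source file instead of one prompt for the whole project. */
class ComponentPromptBuilder {

    // Assumed per-prompt budget in characters; tune it for the model in use.
    private final int maxCharsPerPrompt;

    ComponentPromptBuilder(int maxCharsPerPrompt) {
        this.maxCharsPerPrompt = maxCharsPerPrompt;
    }

    /** Returns one prompt per file; files over the budget are split into numbered parts. */
    List<String> buildPrompts(Map<String, String> sourcesByFileName) {
        List<String> prompts = new ArrayList<>();
        for (Map.Entry<String, String> entry : sourcesByFileName.entrySet()) {
            String source = entry.getValue();
            // Ceiling division, with at least one part so empty files still get a prompt.
            int parts = Math.max(1, (source.length() + maxCharsPerPrompt - 1) / maxCharsPerPrompt);
            for (int part = 0; part < parts; part++) {
                int start = part * maxCharsPerPrompt;
                int end = Math.min(source.length(), start + maxCharsPerPrompt);
                prompts.add("Explain the responsibilities and data flow of " + entry.getKey()
                        + " (part " + (part + 1) + " of " + parts + "):\n"
                        + source.substring(start, end));
            }
        }
        return prompts;
    }

    public static void main(String[] args) {
        // Hypothetical file names and contents, for illustration only.
        Map<String, String> sources = new LinkedHashMap<>();
        sources.put("OrdersController.cs", "public class OrdersController { /* ... */ }");
        sources.put("OrderService.cs", "public class OrderService { /* ... */ }");
        new ComponentPromptBuilder(4000).buildPrompts(sources).forEach(System.out::println);
    }
}
```

Each prompt can then be pasted into the chat one at a time, which keeps every request focused on a single component.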

This is the point at which we used our data analytics platform. In comparison to OpenAI, it focuses on data analysis. It's especially useful for analyzing data flows and project architecture, particularly thanks to its ability to process and visualize complex datasets. While it does not directly analyze source code, it can provide valuable insights into how data moves through a system and how different components interact.

Moreover, the platform excels at visualizing and analyzing data flows within your application. This can help identify inefficiencies, bottlenecks, and opportunities for optimization in the architecture.

Generating target application skeleton

Just as OpenAI could not analyze the entire project, it also failed to generate the skeleton of the target application, so the developer had to create it manually. To facilitate this, Spring Initializr was used with the following configuration:

- Java: 17

- Spring Boot: 3.2.2

- Gradle: 8.5

Attempts to query OpenAI for the necessary Spring dependencies faced challenges due to significant differences between dependencies for C# and Java projects. Consequently, all required dependencies were added manually.

Additionally, the project included a database setup. While OpenAI provided a series of steps for adding database configuration to a Spring Boot application, these steps needed to be verified and implemented manually.

Converting components

After setting up the backend, the next step involved converting all project files - Controllers, Services, and Data Access layers - from C# to Java Spring Boot using OpenAI.

The AI proved effective in converting endpoints and data access layers, producing accurate translations with only minor errors, such as misspelled function names or calls to non-existent functions.

In cases where non-existent functions were generated, OpenAI was able to create the function bodies based on prompts describing their intended functionality. Additionally, OpenAI efficiently generated documentation for classes and functions.

However, it faced challenges when converting components with extensive framework-specific code. Due to differences between frameworks in various languages, the AI sometimes lost context and produced unusable code.

Overall, OpenAI excelled at:

- converting data access components

- generating REST APIs

However, it struggled with:

- service-layer components

- framework-specific code where direct mapping between programming languages was not possible

Despite these limitations, OpenAI significantly accelerated the conversion process, although manual intervention was required to address specific issues and ensure high-quality code.
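To give a sense of the kind of data access code that tends to translate cleanly, here is a small sketch of a C# LINQ query expressed with Java streams. The `Product` record, the method name, and the C# snippet in the comment are hypothetical and do not come from the source project; the sketch deliberately avoids Spring and JPA so that it runs on a plain Java 17 installation.

```java
import java.math.BigDecimal;
import java.util.Comparator;
import java.util.List;

class ProductQueries {

    // Hypothetical entity, standing in for a class mapped by the ORM.
    record Product(long id, String name, BigDecimal price) {}

    /*
     * Hypothetical C# original:
     *   products.Where(p => p.Price > minPrice)
     *           .OrderBy(p => p.Name)
     *           .Select(p => p.Name)
     *           .ToList();
     */
    static List<String> namesAbovePrice(List<Product> products, BigDecimal minPrice) {
        return products.stream()
                .filter(p -> p.price().compareTo(minPrice) > 0) // BigDecimal has no > operator
                .sorted(Comparator.comparing(Product::name))    // ordinal String ordering
                .map(Product::name)
                .toList();                                       // Java 16+, unmodifiable list
    }

    public static void main(String[] args) {
        List<Product> products = List.of(
                new Product(1, "Keyboard", new BigDecimal("49.99")),
                new Product(2, "Cable", new BigDecimal("5.00")),
                new Product(3, "Monitor", new BigDecimal("199.00")));
        System.out.println(namesAbovePrice(products, new BigDecimal("10"))); // prints [Keyboard, Monitor]
    }
}
```

Even in a simple case like this, details deserve a manual review: for example, C# `OrderBy` on strings uses a culture-sensitive comparison by default, while `Comparator.comparing(Product::name)` sorts by character code, so the two versions can order some names differently.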

Generating tests

Generating tests for the new code is a crucial step in ensuring the reliability and correctness of the rewritten application. This involves creating both unit tests and integration tests to validate individual components and their interactions within the system.

To create a new test, the entire component code was passed to OpenAI with the query: "Write Spring Boot test class for selected code."

OpenAI performed well at generating both integration tests and unit tests; however, there were some distinctions:

- For unit tests, OpenAI generated a new test for each if-clause in the method under test by default.

- For integration tests, only happy-path scenarios were generated with the given query.

- Error scenarios could also be generated by OpenAI, but these required more manual fixes due to a higher number of code issues.

When the test name was self-descriptive, OpenAI generated unit tests with fewer errors.
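The "one test per if-clause" pattern with self-descriptive test names can be sketched as follows. `DiscountPolicy` and its rules are hypothetical and not taken from the project, and the tests are plain static methods rather than JUnit tests so that the example runs without any dependencies; in a real Spring Boot project, each method would typically be a JUnit 5 `@Test`.

```java
class DiscountPolicy {

    /** Hypothetical method under test: two if-clauses, so three paths in total. */
    static int discountPercent(int orderTotal, boolean loyalCustomer) {
        if (orderTotal < 0) {
            throw new IllegalArgumentException("orderTotal must not be negative");
        }
        if (loyalCustomer) {
            return 10;
        }
        return 0;
    }
}

class DiscountPolicyTest {

    // One test per branch; each name states the scenario and the expected outcome.
    static void rejectsNegativeOrderTotal() {
        try {
            DiscountPolicy.discountPercent(-1, false);
        } catch (IllegalArgumentException expected) {
            return; // the first if-clause fired as intended
        }
        throw new AssertionError("expected IllegalArgumentException");
    }

    static void returnsTenPercentForLoyalCustomer() {
        check(DiscountPolicy.discountPercent(100, true) == 10, "loyal customer discount");
    }

    static void returnsNoDiscountForRegularCustomer() {
        check(DiscountPolicy.discountPercent(100, false) == 0, "regular customer discount");
    }

    private static void check(boolean condition, String label) {
        if (!condition) {
            throw new AssertionError("failed: " + label);
        }
    }

    public static void main(String[] args) {
        rejectsNegativeOrderTotal();
        returnsTenPercentForLoyalCustomer();
        returnsNoDiscountForRegularCustomer();
        System.out.println("all tests passed");
    }
}
```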

Project 2: Microservice-based application

As an example of a microservice-based application, we used the source microservice project: an application built with .NET Core as the framework, Entity Framework Core as the ORM, and a Command Query Responsibility Segregation (CQRS) approach for managing and querying entities. RabbitMQ was used to implement the CQRS approach, and EventStore was used to store events and entity objects. Each microservice could be built using Docker, with docker-compose managing the dependencies between microservices and running them together.

The target project includes:

- a microservice-based backend application created with Java 17 and Spring Boot 3

- a frontend application using the React framework

- Docker support for each microservice

- docker-compose to run all microservices at once

Project stages

As with the CRUD application rewrite, converting a microservice-based application using Generative AI requires a series of steps to ensure a seamless transition from the old codebase to the new one. Below are the key steps undertaken during this process:

- initial architecture and data flow investigation - conducting a thorough analysis of the existing application's architecture and data flow.

- rewriting backend microservices - selecting an appropriate framework for implementing CQRS in Java, setting up a microservice skeleton, and translating the core business logic from the original language to Java Spring Boot.

- generating a new frontend application - developing a new frontend application using React to communicate with the backend microservices via REST APIs.

- generating tests for the frontend application - creating unit tests and integration tests to validate its functionality and interactions with the backend.

- containerizing new applications - generating Docker files for each microservice and a docker-compose file to manage the deployment and orchestration of the entire application stack.

Throughout each step, developers were required to intervene manually to address code errors, compilation issues, and other problems encountered after using OpenAI's tools. This approach ensured that the new application retained the functionality and reliability of the original system while leveraging modern technologies and best practices.

Initial architecture and data flow investigation

The first step in converting a microservice-based application using Generative AI is to conduct a thorough investigation of the existing architecture and data flows. This foundational step is crucial for understanding:

- the system’s structure

- its dependencies

- interactions between microservices

Challenges with OpenAI

As in the simple CRUD project, OpenAI at the time struggled with larger inputs and failed to generate a comprehensive explanation of the entire project. Attempts to describe the project or its data flows were unsuccessful because inputting several project files at once often resulted in unclear and overly general outputs.

OpenAI’s strengths

Despite these limitations, OpenAI proved effective in explaining large pieces of code or individual components. This capability helped in understanding:

- the responsibilities of different components

- their respective data flows

Developers can create a comprehensive blueprint for the new application by thoroughly investigating the initial architecture and data flows. This step ensures that all critical aspects of the existing system are understood and accounted for, paving the way for a successful transition to a modern microservice-based architecture using Generative AI.

Again, our data analytics platform was used in project architecture analysis. By identifying integration points between different application components, the platform helps ensure that the new application maintains necessary connections and data exchanges.

It can also provide a comprehensive view of your current architecture, highlighting interactions between different modules and services. This aids in planning the new architecture for efficiency and scalability. Furthermore, the platform's analytics capabilities support identifying potential risks in the rewriting process.

Rewriting backend microservices

Rewriting the backend of a microservice-based application involves several intricate steps, especially when working with specific architectural patterns like CQRS (Command Query Responsibility Segregation) and event sourcing. The source C# project uses the CQRS approach, implemented with frameworks such as NServiceBus and Aggregates, which facilitate message handling and event sourcing in the .NET ecosystem.
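For readers less familiar with the pattern, the sketch below shows the core idea of CQRS with event sourcing in plain Java 17. It is an illustration under stated assumptions, not the source project's code and not the API of any framework: the bank-account domain, the class names, and the in-memory list used as an event store are all hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

class EventSourcingSketch {

    // Commands express intent; events record what actually happened.
    record DepositMoney(String accountId, long amount) {}
    record MoneyDeposited(String accountId, long amount) {}

    /** Write side: validates commands and appends events; it never stores the balance itself. */
    static class AccountCommandHandler {
        private final List<MoneyDeposited> eventStore;

        AccountCommandHandler(List<MoneyDeposited> eventStore) {
            this.eventStore = eventStore;
        }

        void handle(DepositMoney command) {
            if (command.amount() <= 0) {
                throw new IllegalArgumentException("amount must be positive");
            }
            eventStore.add(new MoneyDeposited(command.accountId(), command.amount()));
        }
    }

    /** Read side: derives the current balance by replaying the stored events. */
    static long balanceOf(String accountId, List<MoneyDeposited> eventStore) {
        return eventStore.stream()
                .filter(event -> event.accountId().equals(accountId))
                .mapToLong(MoneyDeposited::amount)
                .sum();
    }

    public static void main(String[] args) {
        List<MoneyDeposited> eventStore = new ArrayList<>(); // in-memory stand-in for a real event store
        AccountCommandHandler handler = new AccountCommandHandler(eventStore);
        handler.handle(new DepositMoney("acc-1", 100));
        handler.handle(new DepositMoney("acc-2", 70));
        handler.handle(new DepositMoney("acc-1", 50));
        System.out.println(balanceOf("acc-1", eventStore)); // prints 150
    }
}
```

In a real system, the command and query sides usually live in separate components connected by a message broker, and the event store is durable. Frameworks exist largely to supply that plumbing, and their conventions are the part that does not map one-to-one between ecosystems.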

Challenges with OpenAI

Unfortunately, OpenAI struggled with converting framework-specific logic from C# to Java. When asked to convert components using NServiceBus, OpenAI responded:

"The provided C# code is using NServiceBus, a service bus for .NET, to handle messages. In Java Spring Boot, we don't have an exact equivalent of NServiceBus, but here's how you might convert the given C# code to Java Spring Boot..."

However, the generated code did not adequately cover the CQRS approach or event-sourcing mechanisms.

Choosing the Axon Framework

Due to these limitations, developers needed to investigate suitable Java frameworks. After thorough research, the Axon Framework was selected, as it offers comprehensive support for:

- domain-driven design

- CQRS

- event sourcing

Moreover, Axon provides out-of-the-box solutions for message brokering and event handling and has a Spring Boot integration library, making it a popular choice for building Java microservices based on CQRS.

Converting microservices

Each microservice from the source project could be converted to Java Spring Boot using a systematic approach, similar to converting a simple CRUD application. The process included:

- analyzing the data flow within each microservice to understand interactions and dependencies

- using Spring Initializr to create the initial skeleton for each microservice

- translating the core business logic, API endpoints, and data access layers from C# to Java

- creating unit and integration tests to validate each microservice’s functionality

- setting up the event sourcing mechanism and CQRS using the Axon Framework, including configuring Axon components and repositories for event sourcing

Manual intervention

Due to the lack of direct mapping between the source project's CQRS framework and the Axon Framework, manual intervention was necessary. Developers had to implement framework-specific logic manually to ensure the new system retained the original's functionality and reliability.

Generating a new frontend application

The source project included a frontend component written using aspnetcore-https and aspnetcore-react libraries, allowing for the development of frontend components in both C# and React.

However, OpenAI struggled to convert this mixed codebase into a React-only application due to the extensive use of C#.

Consequently, it proved faster and more efficient to generate a new frontend application from scratch, leveraging the existing REST endpoints on the backend.

Similar to the process for a simple CRUD application, when prompted with “Generate React application which is calling a given endpoint”, OpenAI provided a series of steps to create a React application from a template and offered sample code for the frontend.

- OpenAI successfully generated React components for each endpoint.

- The CSS files from the source project were reusable in the new frontend to maintain the same styling of the web application.

- However, the overall structure and architecture of the frontend application remained the developer's responsibility.

Despite its capabilities, OpenAI-generated components often exhibited issues such as: