
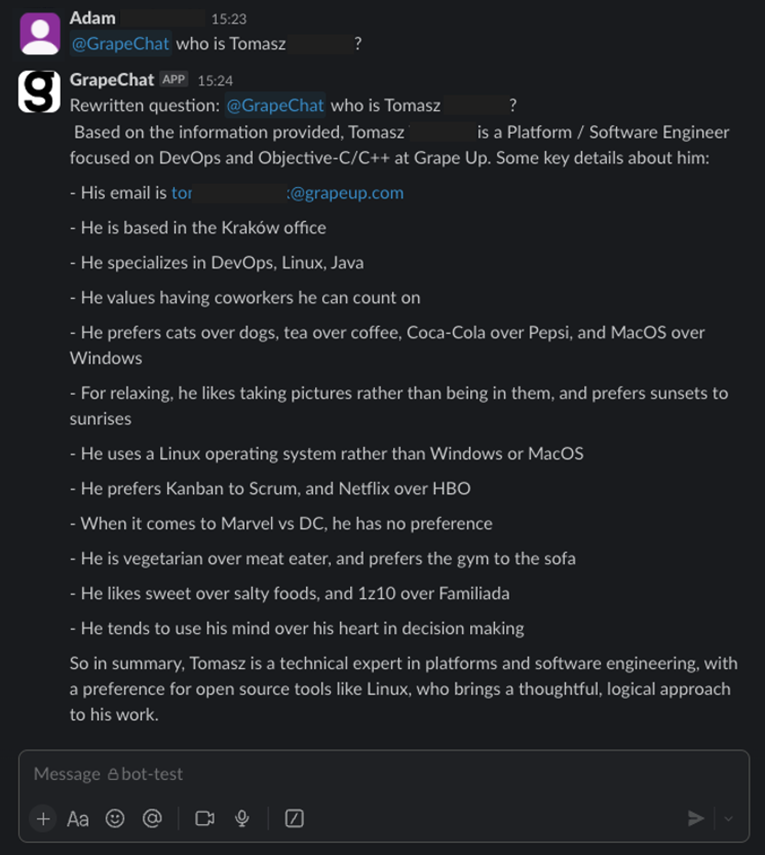

GrapeChat – the LLM RAG for enterprise

LLMs are an extremely hot topic nowadays. In our company, we run several projects for our customers using this technology. There are more and more tools, research, and resources, including no-code, all-in-one solutions.

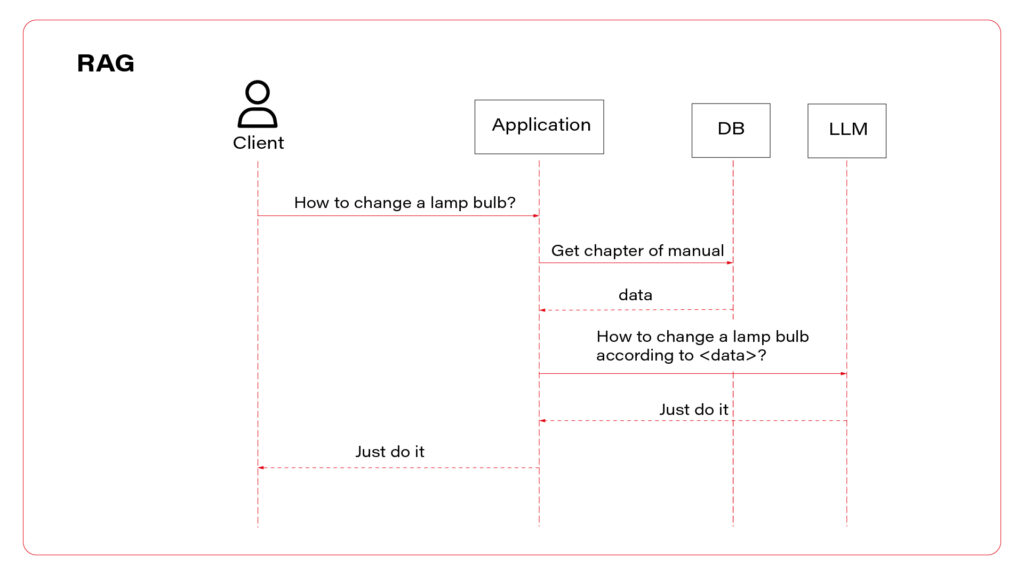

The topic for today is RAG – Retrieval Augmented Generation. The aim of RAG is to retrieve necessary knowledge and generate answers to the users’ questions based on this knowledge. Simply speaking, we need to search the company knowledge base for relevant documents, add those documents to the conversation context, and instruct an LLM to answer questions using the knowledge. In detail, though, it is anything but simple, especially when it comes to permissions.

Before you start

Two technologies are taking the current software development sector by storm on the back of the LLM revolution: the Microsoft Azure cloud platform, along with other Microsoft services, and the Python programming language.

If your company uses Microsoft services, and SharePoint and Azure are within your reach, you can create a simple RAG application fast. Microsoft offers a no-code solution and application templates with source code in various languages (including easy-to-learn Python) if you require minor customizations.

Of course, there are some limitations, mainly in the permission management area, but you should also consider how much you want your company to rely on Microsoft services.

If you want to start from scratch, you should start by defining your requirements (as usual). Do you want to split your users into access groups, or do you want to assign access to resources for individuals? How do you want to store and classify your files? How deeply do you want to analyze your data (what about dependencies)? Is Python a good choice, after all? What about the costs? How will you update permissions? There are a lot of questions to answer before you start. At Grape Up, we went through this process and implemented GrapeChat, our internal RAG-based chatbot that uses our enterprise data.

Now, I invite you to learn more from our journey.

The easy way

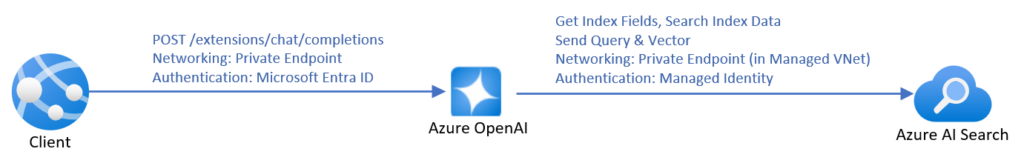

Source: https://learn.microsoft.com/en-us/azure/ai-services/openai/how-to/use-your-data-securely

The most time-efficient way to create a chatbot using RAG is to use the official manual from Microsoft. It covers everything, from pushing data up to the front-end application. However, it’s not very cost-efficient. To make it work with your data, you need to create an AI Search resource, and the simplest one costs 234€ per month (you will pay for the LLM usage, too). Moreover, SharePoint integration is not in the final stage yet, which forces you to upload data manually. You can lower the entry threshold by uploading your data to Blob storage instead of using SharePoint directly, and then use Power Automate to do it automatically for new files. However, this requires more and more hard-to-troubleshoot, UI-created components, more permission management by your Microsoft-care team (probably your IT team), and a deeper integration between Microsoft and your company.

And then there is the permission issue.

When using Microsoft services, you can limit access to the documents being processed during RAG by using Azure AI Search security filters. This method requires you to assign a permission group when adding each document to the system (to be more specific, during indexing), and then you can add a permission group as a parameter to the search request. Of course, there is much more offered by Microsoft in terms of security of the entire application (web app access control, network filtering, etc.).
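To make the filter parameter more concrete, here is a minimal sketch of how a back end might build the OData filter string that is sent with a search request. The field name `group_ids` and the helper `build_security_filter` are assumptions for illustration; the index field is whatever you defined during indexing.

```python
def build_security_filter(user_groups, field="group_ids"):
    """Build an OData filter that matches documents whose `field`
    collection contains at least one of the caller's groups.

    `field` is an assumed index field name, not a fixed Azure name.
    """
    groups = list(user_groups)
    if not groups:
        # Refuse to build a filter that could be mistaken for "no restriction".
        raise ValueError("user has no groups")
    for group in groups:
        # Keep the sketch simple: reject characters that would need escaping.
        if "'" in group or "," in group:
            raise ValueError(f"unsupported character in group id: {group!r}")
    joined = ",".join(groups)
    # search.in(value, list, delimiters) compares a value against a delimited list.
    return f"{field}/any(g: search.in(g, '{joined}', ','))"


if __name__ == "__main__":
    print(build_security_filter(["board", "managers"]))
```

The resulting string goes into the `filter` parameter of the search request, so the security of this approach depends entirely on the back end computing the group list itself rather than accepting it from the client.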

To use those techniques, you must have your own implementation (say bye-bye to no-code). If you like starting a project from a blueprint, go here. Under the link, you’ll find a ready-to-use Azure application, including the back-end, front-end, and all necessary resources, along with scripts to set it up. There are also variants linked in the README file, written in other languages (Java, .NET, JavaScript).

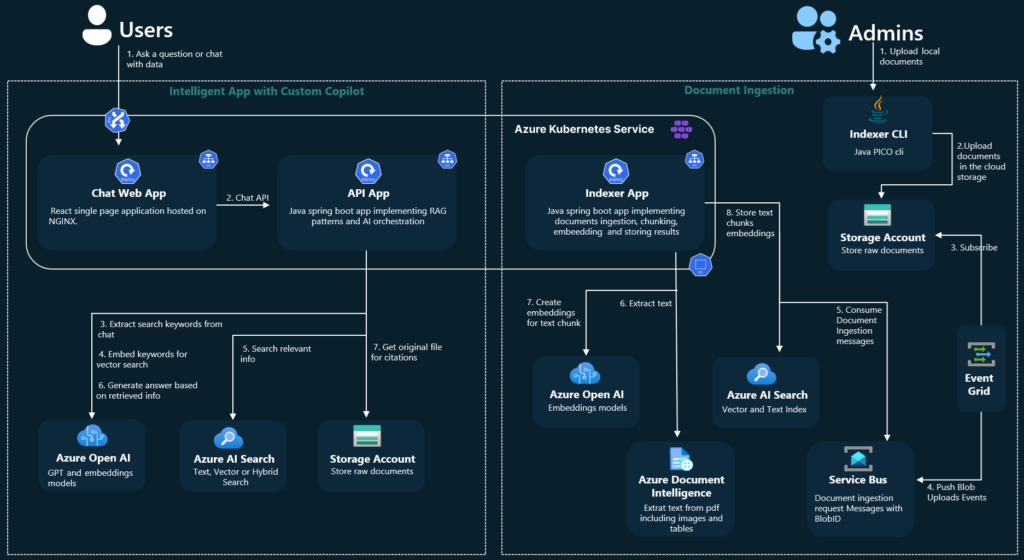

Source: https://github.com/Azure-Samples/azure-search-openai-demo-java/blob/main/docs/aks/aks-hla.png

However, there are still at least three topics to consider.

1) You start a new project, but with some code already written. Maybe the quality of the code provided by Microsoft is enough for you. Maybe not. Maybe you like the code structure. Maybe not. In my experience, learning the application well enough to adjust it may take more time than starting from scratch. Please note that this application is not a simple CRUD, but something much more complex that makes use of a sophisticated toolbox.

2) Permission management is very limited. “Permission” is the keyword that distinguishes Enterprise-RAG from RAG. Let’s imagine that you have a document (for example, a Confluence page) available to a limited number of users (for example, your company’s board). One day, a board member decides to grant access to this very page to one of the non-board managers. The manager is not part of the “board” group, the document is already indexed, and Confluence uses a dual-level permission system (space and document), which is not aligned with external SSO providers (Microsoft’s Entra ID).

Managing permissions in this system is a very complex task. Even if you manage to do it, there are two levels of protection – the Entra ID that secures your endpoint and the filter parameter in the REST request to restrict documents being searched during RAG. Therefore, the potential attack vector is very wide – if somebody has access to the Entra ID (for example, a developer working on the system), they can abuse the filtering API to get any documents, including the ones for the board members’ eyes only.

3) You are limited to Azure AI Search. Using Azure OpenAI is one thing (you can use OpenAI API without Azure, you can go with Claude, Gemini, or another LLM), but using Azure AI Search increases cost and limits your possibilities. For example, there is no way to utilize connections between documents in the system, when one document (e.g. an email with a question) should be linked to another one (e.g. a response email with the answer).

All in all, you couple your company with Microsoft very tightly – using Entra ID permission management, Azure resources, Microsoft Storage (Azure Blob or SharePoint), etc. I’m not against Microsoft, but I’m against a single point of failure and dependence on a single service provider.

The hard way

I would say a “better way”, but it’s always a matter of your requirements and possibilities.

The hard way is to start the project with a blank page. You need to design the user’s touch point, the backend architecture, and the permission management.

In our company, we use SSO – the same identity for all resources: data storage, communicators, and emails. Therefore, the main idea is to propagate the user’s identity to authorize the user to obtain data.

Let’s discuss the data retrieval part first. The user logs into the messaging app (Slack, Teams, etc.) with their own credentials. The application uses their token to call the GrapeChat service. Therefore, the user’s identity is ensured. The bot decides (using LLM) to obtain some data. The service exchanges the user’s token for a new user’s token, allowed to call the database. This process is allowed only for the service with the user logged in. It's impossible to access the database without both the GrapeChat service and the user's token. The database verifies credentials and filters data. Let me underline this part – the database is in charge of data security. It’s like a typical database, e.g. PostgreSQL or MySQL – the user uses their own credentials to access the data, and nobody challenges its permission system, even if it stores data of multiple users.
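The token exchange step can be illustrated with a short sketch. The article does not say which mechanism is used, so this assumes the standard OAuth 2.0 Token Exchange request format (RFC 8693); the audience value `grapechat-db` and the function name are hypothetical.

```python
from urllib.parse import urlencode, parse_qs

# Parameter values defined by RFC 8693 (OAuth 2.0 Token Exchange).
TOKEN_EXCHANGE_GRANT = "urn:ietf:params:oauth:grant-type:token-exchange"
ACCESS_TOKEN_TYPE = "urn:ietf:params:oauth:token-type:access_token"


def build_token_exchange_body(user_token, audience):
    """Return the form-encoded body the service would POST to the
    identity provider's token endpoint to swap the user's token for
    one that is accepted by `audience` (here: the database module)."""
    if not user_token:
        # Without a logged-in user there is nothing to exchange.
        raise ValueError("a user token is required")
    return urlencode({
        "grant_type": TOKEN_EXCHANGE_GRANT,
        "subject_token": user_token,
        "subject_token_type": ACCESS_TOKEN_TYPE,
        "audience": audience,  # hypothetical identifier of the database
    })


if __name__ == "__main__":
    body = build_token_exchange_body("user-token-from-slack", "grapechat-db")
    print(parse_qs(body)["audience"])
```

The service authenticates itself when sending this request, which is what ties the new token to both the service and the logged-in user.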

Wait a minute! What about shared credentials, when a user stores data that should be available for other users, too?

It brings us to the data uploading process and the database itself.

The user logs into some data storage. In our case, it may be a messaging app (conversations are a great source of knowledge), email client, Confluence, SharePoint, shared SMB resource, or a cloud storage service (e.g. Dropbox). However, the user’s token is not used to copy the data from the original storage to our database.

There are three possible solutions.

- The first one is to actively push data from its original storage to the database. It’s possible in just a few systems, e.g. as automatic forwarding for all emails configured on the email server.

- The second one is to trigger the database to download new data, e.g. with a webhook. It’s also possible in some systems, e.g. Contentful can send notifications about changes this way.

- The last one is to periodically call data storages and compare stored data with the origin. This is the worst idea (because of the possible delay and comparing process) but, unfortunately, the most common one. In this approach, the database actively downloads data based on a schedule.

Using those solutions requires separate implementations for each data origin.
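The third option, periodic comparison, can be sketched as follows. This is a simplified illustration that assumes the database keeps a content hash per document; the names `fingerprint` and `diff_snapshots` are hypothetical.

```python
import hashlib


def fingerprint(content: bytes) -> str:
    """Content hash stored next to each document in the database."""
    return hashlib.sha256(content).hexdigest()


def diff_snapshots(stored, origin):
    """Compare what the database holds with what the origin holds.

    stored: dict of document id -> fingerprint already in the database
    origin: dict of document id -> current raw content at the source
    Returns three sorted lists: added, changed, removed document ids.
    """
    added, changed = [], []
    for doc_id, content in origin.items():
        if doc_id not in stored:
            added.append(doc_id)
        elif stored[doc_id] != fingerprint(content):
            changed.append(doc_id)
    removed = [doc_id for doc_id in stored if doc_id not in origin]
    return sorted(added), sorted(changed), sorted(removed)


if __name__ == "__main__":
    stored = {"a": fingerprint(b"one"), "b": fingerprint(b"two")}
    origin = {"a": b"one", "b": b"TWO", "d": b"new"}
    print(diff_snapshots(stored, origin))
```

Even in this small form, the drawbacks mentioned above are visible: every run has to read all origin content, and changes are only noticed at the next scheduled run.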

In all those cases, we need a non-user account to process users’ data. The solution we picked is to create a “superuser” account and restrict it to non-human access. Only the database can use this account and only in an isolated virtual network.

Going back to the group permission and keeping in mind that data is acquired with “superuser” access, the database encrypts each document (a single piece of data) using the public keys of all users that should have access to it. Public keys are stored with the Identity (in our case, this is a custom field in Active Directory), and let me underline it again – the database is the only entity that processes unencrypted data and the only one that uses “superuser” access. Then, when accessing the data, a private key (obtained from Active Directory using the user’s SSO token) of each allowed user can be used for decryption.

Therefore, the GrapeChat service is not part of the main security processes, but on the other hand, we need a pretty complex database module.

The database and the search process

In our case, the database is a strictly secured container running three applications: an SQL database, a vector database, and a data processing service. Its role is to acquire and embed data, update permissions, and execute search. The embedding part is easy. We do it internally (in the database module) with the Instructor XL model, but you can choose a better one from the leaderboard. Allowed users’ IDs are stored within the vector database (in our case, Qdrant) for filtering purposes, and the plain text content is encrypted with users’ public keys.

When the DB module searches for a query, it uses the vector DB first, including metadata to filter allowed users. Then, the DB service obtains associated entities from the SQL DB. In the next steps, the service downloads related entities using simple SQL relations between them. There is also a non-data graph node, “author”, to keep together documents created by the same person. We can go deeper through the graph relation-by-relation if the caller has rights to the content. The relation-search depth is a parameter of the system.
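The two-stage search described above can be sketched in plain Python. This is an in-memory stand-in, not the actual implementation: plain dictionaries replace the vector DB and the SQL relations, and all names are hypothetical.

```python
import math
from collections import deque


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(query_vec, user_id, vectors, allowed, relations, top_k=1, depth=1):
    """Permission-aware vector search followed by relation expansion.

    vectors:   doc id -> embedding
    allowed:   doc id -> set of user ids allowed to read the document
    relations: doc id -> list of related doc ids
    depth:     how many relation hops to follow from the initial hits
    """
    # Stage 1: vector search, restricted to documents the caller may read.
    scored = [(cosine(query_vec, vec), doc_id)
              for doc_id, vec in vectors.items()
              if user_id in allowed.get(doc_id, set())]
    scored.sort(reverse=True)
    hits = [doc_id for _, doc_id in scored[:top_k]]

    # Stage 2: walk relations breadth-first, checking permissions at every hop.
    result, seen = list(hits), set(hits)
    queue = deque((doc_id, 0) for doc_id in hits)
    while queue:
        doc_id, level = queue.popleft()
        if level == depth:
            continue
        for neighbour in relations.get(doc_id, []):
            if neighbour in seen or user_id not in allowed.get(neighbour, set()):
                continue
            seen.add(neighbour)
            result.append(neighbour)
            queue.append((neighbour, level + 1))
    return result
```

Note that a related document the caller cannot read is never visited, so nothing reachable only through it is returned either.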

We do use a REST field filter like the one offered by the native MS solution, too, but in our case, we do the permission-aware search first. So, if there are several people in the Slack conversation and one of them mentions GrapeChat, the bot uses that user’s permissions in the first place and then, additionally, filters results not to expose a document to other channel members if they are not allowed to see it. In other words, the calling user can restrict search results according to teammates but is not able to extend the results above their own permissions.
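The additional channel filter boils down to a set comparison. A minimal sketch, assuming each document carries the set of users allowed to read it (the function and data names are hypothetical):

```python
def restrict_to_channel(results, allowed, caller, channel_members):
    """Keep only documents that the caller AND every other channel
    member may read. Results can only shrink, never grow."""
    audience = set(channel_members) | {caller}
    return [doc_id for doc_id in results
            if audience <= allowed.get(doc_id, set())]


if __name__ == "__main__":
    allowed = {
        "handbook": {"alice", "bob", "carol"},
        "salaries": {"alice"},
    }
    # Alice asks in a channel shared with Bob and Carol.
    print(restrict_to_channel(["handbook", "salaries"], allowed,
                              "alice", ["bob", "carol"]))
```

Because the caller is always part of the audience, the filter cannot return a document the caller is not allowed to see, whatever member list is passed in.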

What happens next?

The GrapeChat service is written in Java. This language offers a nice Slack SDK and Spring AI, so we've seen no reason to opt for Python with the LangChain library. The much more important component is the database service, built from the three elements described above. To make the DB fast and small, we recommend using the Rust programming language, but you can also use Python, depending on the knowledge of your developers.

Another important component is a document parser. The task is easy with simple, plain text messages, but your company knowledge includes tons of PDFs, Word docs, Excel spreadsheets, and even videos. In our architecture, parsers are external, replaceable modules written in various languages working with the DB in the same isolated network.

RAG for Enterprise

With all the achievements of recent technology, RAG is not rocket science anymore. However, when it comes to the Enterprise data, the task is getting more and more complex. Data security is one of the biggest concerns in the LLM era, so we recommend starting small – with a limited number of non-critical documents, with limited access, and a wisely secured system.

In general, the task is not impossible, and can be easily handled with a proper application design. Working on an internal tool is a great opportunity to gain experience and prepare better for your next business cases, especially when the IT sector is so young and immature. This way we, here at Grape Up, use our expertise to serve our customers in a better way.

Integrating generative AI with knowledge graphs for enhanced data analytics

The integration of generative AI into data analytics is transforming business data management and interpretation, opening vast possibilities across industries.

Recent statistics from a Gartner survey indicate significant strides in the adoption of generative AI: 45% of organizations are now piloting generative AI projects, with 10% having fully integrated these systems into their operations. This marks a considerable increase from earlier figures, demonstrating a rapid adoption curve. Additionally, by 2026, it's predicted that more than 80% of organizations will use generative AI applications, up from less than 5% just three years prior.

Combining generative AI and knowledge graphs for data analytics

The potential impact of combining generative AI with knowledge graphs is particularly promising. This synergy enhances data analytics by improving accuracy, speeding up data processing, and enabling deeper insights into complex datasets. As adoption continues to expand, the mentioned technologies will transform how organizations leverage data for strategic advantage.

This article details the specific benefits of generative AI and knowledge graphs and how their integration can boost data-based decision-making processes.

Maximizing generative AI potential in data analytics

Generative AI has revolutionized data analytics by automating tasks that traditionally required significant human effort and by providing new methods to manage and interpret large datasets. Here is a more detailed explanation of how GenAI operates in various aspects of data analytics.

Rapid Summarization of Information

GenAI's ability to swiftly process and summarize large volumes of data is a boon in situations that demand quick insights from extensive datasets. This is especially critical in areas like financial analysis or market trend monitoring, where rapid information condensation can significantly expedite decision-making processes.

Enhanced Data Enrichment

In the initial stages of data analytics, raw data is often unstructured and may contain errors or gaps. GenAI plays a crucial role in enriching this raw data before it can be effectively visualized or analyzed. This includes cleaning the data, filling in missing values, generating new features, and integrating external data sources to add depth and context. Such capabilities are particularly beneficial in scenarios like predictive modeling for customer behavior, where historical data may not fully capture current trends.

Automation of Repetitive Data Preparation Tasks

Data preparation is often the most time-consuming part of data analytics. GenAI helps automate these processes with unmatched precision and speed. This not only enhances the efficiency and accuracy of data preparation but also improves data quality by quickly identifying and correcting inconsistencies.

Complex Data Simplification

GenAI expertly simplifies complex data patterns, making them easy to understand and accessible. This allows users with varying levels of expertise to derive actionable insights and make informed decisions effortlessly.

Interactive Data Exploration via Conversational Interfaces

GenAI uses Natural Language Processing (NLP) to facilitate interactions, allowing users to query data in everyday language. This significantly lowers the barrier to data exploration, making analytics tools more user-friendly and extending their use across different organizational departments.

The use of knowledge graphs in data analytics

Knowledge graphs prove increasingly useful in data analytics, providing a solid framework to improve decision-making in various industries. These graphs represent data as interconnected networks of entities linked by relationships, enabling intuitive and sophisticated analysis of complex datasets.

What are associative knowledge graphs?

Associative knowledge graphs are a specialized subset of knowledge graphs that excel in identifying and leveraging intricate and often subtle associations among data elements. These associations include not only direct links but also indirect and inferred relationships that are crucial for deep data analysis, AI modeling, and complex decision-making processes where understanding subtle connections can be crucial.
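The difference between direct and indirect relationships can be shown with a toy graph. This is a generic sketch of a triple store with breadth-first path search, not a description of any particular product; the class and the sample entities are hypothetical.

```python
from collections import defaultdict, deque


class KnowledgeGraph:
    """Entities linked by named, directed relationships."""

    def __init__(self):
        self._edges = defaultdict(list)

    def add(self, subject, relation, obj):
        # Adding a fact is a local update; nothing else is restructured.
        self._edges[subject].append((relation, obj))

    def find_path(self, start, goal, max_hops=3):
        """Return the shortest chain of facts linking start to goal,
        or None if there is none within max_hops."""
        queue = deque([(start, [])])
        seen = {start}
        while queue:
            node, path = queue.popleft()
            if node == goal:
                return path
            if len(path) == max_hops:
                continue
            for relation, nxt in self._edges.get(node, []):
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append((nxt, path + [(node, relation, nxt)]))
        return None


if __name__ == "__main__":
    graph = KnowledgeGraph()
    graph.add("customer_42", "bought", "laptop")
    graph.add("laptop", "made_by", "acme")
    # An indirect association: the customer is linked to the manufacturer.
    print(graph.find_path("customer_42", "acme"))
```

Here no fact links the customer to the manufacturer directly; the association is found by following two direct links.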

Associative knowledge graphs functionalities

Associative knowledge graphs are useful in dynamic environments where data constantly evolves. They can incorporate incremental updates without major structural changes, allowing them to quickly adapt and maintain accuracy without extensive modifications. This is particularly beneficial in scenarios where knowledge graphs need to be updated frequently with new information without retraining or restructuring the entire graph.

Designed to handle complex queries involving multiple entities and relationships, these graphs offer advanced capabilities beyond traditional relational databases. This is due to their ability to represent data in a graph structure that reflects the real-world interconnections between different pieces of information. Whether the data comes from structured databases, semi-structured documents, or unstructured sources like texts and multimedia, associative knowledge graphs can amalgamate these different data types into a unified model.

Additionally, associative knowledge graphs generate deeper insights in data analytics through cognitive and associative linking. They connect disparate data points by mimicking human cognitive processes, revealing patterns important for strategic decision-making.

Generative AI and associative knowledge graphs: Synergy for analytics

The integration of Generative AI with associative knowledge graphs enhances data processing and analysis in three key ways: speed, quality of insights, and deeper understanding of complex relationships.

Speed: GenAI automates conventional data management tasks, significantly reducing the time required for data cleansing, validation, and enrichment. This helps decrease manual efforts and speed up data handling. Combining it with associative knowledge graphs simplifies data integration and enables faster querying and manipulation of complex datasets, enhancing operational efficiency.

Quality of Insights: GenAI and associative knowledge graphs work together to generate high-quality insights. GenAI quickly processes large datasets to deliver timely and relevant information. Knowledge graphs enhance these outputs by providing semantic and contextual depth, where precise insights are vital.

Deeper Understanding of Complex Relationships: By illustrating intricate data relationships, knowledge graphs reveal hidden patterns and correlations, which leads to more comprehensive and actionable insights that can improve data utilization in complex scenarios.

Example applications

Healthcare:

- Patient Risk Prediction: GenAI and associative knowledge graphs can be used to predict patient risks and health outcomes by analyzing and interpreting comprehensive data, including historical records, real-time health monitoring from IoT devices, and social determinants of health. This integration enables the creation of personalized treatment plans and preventive care strategies.

- Operational Efficiency Optimization: These technologies optimize resource allocation, staff scheduling, and patient flow by integrating data from various hospital systems (electronic health records, staffing schedules, patient admissions). This results in more efficient resource utilization, reduced waiting times, and improved overall care delivery.

Insurance, Banking & Finance:

- Risk Assessment / Credit Scoring: Using a broad array of data points such as historical financial data, social media activity, and IoT device data, GenAI and knowledge graphs can help generate accurate risk assessments and credit scores. This comprehensive analysis uncovers complex relationships and patterns, enhancing the understanding of risk profiles.

- Customer Lifetime Value Prediction: These technologies are utilized to analyze transaction and interaction data to predict future banking behaviors and assess customer profitability. By tracking customer behaviors, preferences, and historical interactions, they allow for the development of personalized marketing campaigns and loyalty programs, boosting customer retention and profitability.

Retail:

- Inventory Management: Retailers can use GenAI and associative knowledge graphs to optimize inventory management and prevent overstock and stockouts. Integrating supply chain data, sales trends, and consumer demand signals ensures balanced inventory aligned with market needs, improving operational efficiency and customer satisfaction.

- Sales & Price Forecasting: You can also forecast future sales and price trends by analyzing historical sales data, economic indicators, and consumer behavior patterns. By combining various data sources, you get a comprehensive understanding of sales dynamics and price fluctuations, aiding in strategic planning and decision-making.

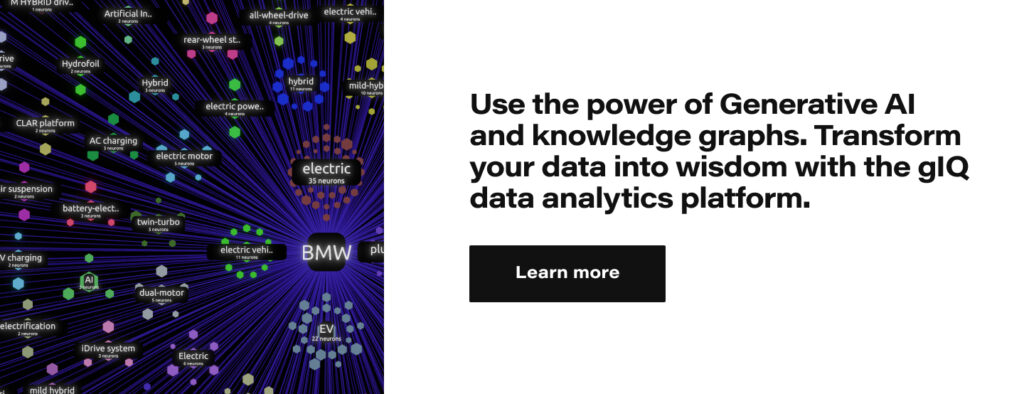

gIQ – data analytics platform powered by generative AI and associative knowledge graphs

The gIQ data analytics platform is one example of integrating generative AI with knowledge graphs. Developed by Grape Up founders, this solution represents a cutting-edge approach, allowing for the transformation of raw data into applicable knowledge. This integration allows gIQ to swiftly detect patterns and establish connections, delivering critical insights while bypassing the intensive computational requirements of conventional machine learning techniques. Consequently, users can navigate complex data environments easily, paving the way for informed decision-making and strategic planning.

Conclusion

The combination of generative AI and knowledge graphs is transforming data analytics by allowing organizations to analyze data more quickly, accurately, and insightfully. The increasing use of these technologies indicates that they are widely recognized for their ability to improve decision-making and operational efficiency in a variety of industries.

Looking forward, it's highly likely that the ongoing development and improvement of these technologies will unlock more advanced and sophisticated applications. This will drive innovation and give organizations a strategic advantage. Embracing these advancements isn't just beneficial, it's essential for companies that want to remain competitive in an increasingly data-driven world.

From silos to synergy: How LLM Hubs facilitate chatbot integration

In today's tech-driven business environment, chatbots powered by large language models (LLMs) are revolutionizing operations across a myriad of sectors, including recruitment, procurement, and marketing. In fact, the Generative AI market could be worth $1.3 trillion by 2032. As companies continue to recognize the value of these AI-driven tools, investment in customized AI solutions is burgeoning. However, the growth of Generative AI within organizations brings to the fore a significant challenge: ensuring LLM interoperability and effective communication among the numerous department-specific GenAI chatbots.

The challenge of siloed chatbots

In many organizations, the deployment of GenAI chatbots in various departments has led to a fragmented landscape of AI-powered assistants. Each chatbot, while effective within its domain, operates in isolation, which can result in operational inefficiencies and missed opportunities for cross-departmental AI use.

Many organizations face the challenge of having multiple GenAI chatbots across different departments without a centralized entry point for user queries. This can cause complications when customers have requests, especially if they span the knowledge bases of multiple chatbots.

Let’s imagine an enterprise, which we’ll call Company X, that uses separate chatbots for human resources, payroll, and employee benefits. While each chatbot is designed to provide specialized support within its domain, employees often have questions that intersect these areas. Without a system to integrate these chatbots, an employee seeking information about maternity leave policies, for example, might have to interact with multiple unconnected chatbots to understand how their leave would affect their benefits and salary.

This fragmented experience can lead to confusion and inefficiencies, as the chatbots cannot provide a cohesive and comprehensive response.

Ensuring LLM interoperability

To address such issues, an LLM hub must be created and implemented. The solution lies in providing a single user interface that serves as the one point of entry for all queries, ensuring LLM interoperability. This UI should enable seamless conversations with the enterprise's LLM assistants, where, depending on the specific question, the answer is sourced from the chatbot with the necessary data.

This setup ensures that even if separate teams are working on different chatbots, these are accessible to the same audience without users having to interact with each chatbot individually. It simplifies the user's experience, even as they make complex requests that may target multiple assistants. The key is efficient data retrieval and response generation, with the system smartly identifying and pulling from the relevant assistant as needed.

In practice at Company X, the user interacts with a single interface to ask questions. The LLM hub then dynamically determines which specific chatbot – whether from human resources, payroll, or employee benefits (or all of them) – has the requisite information and tuning to deliver the correct response. Rather than the user navigating through different systems, the hub brings the right system to the user.

This centralized approach not only streamlines the user experience but also enhances the accuracy and relevance of the information provided. The chatbots, each with its own specialized scope and data, remain interconnected through the hub via APIs. This allows for LLM interoperability and a seamless exchange of information, ensuring that the user's query is addressed by the most informed and appropriate AI assistant available.
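The routing idea can be sketched with a deliberately simple stand-in. A real hub would more likely let an LLM or an embedding-based classifier pick the assistant; here, keyword matching against a small service catalog plays that role. The catalog contents and the function name are hypothetical.

```python
# A toy service catalog: assistant name -> topics it can answer.
CATALOG = {
    "hr": {"leave", "maternity", "vacation"},
    "payroll": {"salary", "payslip", "tax"},
    "benefits": {"insurance", "benefits", "pension"},
}


def route(question, catalog=CATALOG):
    """Return the assistants whose topics appear in the question.

    Several assistants may be returned when a question spans domains;
    an empty list means the hub has no suitable assistant.
    """
    words = {word.strip(".,?!").lower() for word in question.split()}
    return [name for name, topics in catalog.items() if words & topics]


if __name__ == "__main__":
    print(route("How does maternity leave affect my salary and benefits?"))
```

For the maternity leave question from the Company X example, all three assistants are selected, and the hub would then combine their answers into a single response.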

Advantages of LLM Hubs

- LLM hubs provide a unified user interface from which all enterprise assistants can be accessed seamlessly. As users pose questions, the hub evaluates which chatbot has the necessary data and specific tuning to address the query and routes the conversation to that agent, ensuring a smooth interaction with the most knowledgeable source.

- The hub's core functionality includes the intelligent allocation of queries. It does not indiscriminately exchange data between services but selectively directs questions to the chatbot best equipped with the required data and configuration to respond, thus maintaining operational effectiveness and data security.

- The service catalog remains a vital component of the LLM hub, providing a centralized directory of all chatbots and their capabilities within the organization. This aids users in discovering available AI services and enables the hub to allocate queries more efficiently, preventing redundant development of AI solutions.

- The LLM hub respects the specialized knowledge and unique configurations of each departmental chatbot. It ensures that each chatbot applies its finely-tuned expertise to deliver accurate and contextually relevant responses, enhancing the overall quality of user interaction.

- The unified interface offered by LLM hubs guarantees a consistent user experience. Users engage in conversations with multiple AI services through a single touchpoint, which maintains the distinct capabilities of each chatbot and supports a smooth, integrated conversation flow.

- LLM hubs facilitate the easy management and evolution of AI services within an organization. They enable the integration of new chatbots and updates, providing a flexible and scalable infrastructure that adapts to the business's growing needs.

At Company X, the introduction of the LLM hub transformed the user experience by providing a single user interface for interacting with various chatbots.

The IT department's management of chatbots became more streamlined. Whenever updates or new configurations were made to the LLM hub, they were effectively distributed to all integrated chatbots without the need for individual adjustments.

The scalable nature of the hub also facilitated the swift deployment of new chatbots, enabling Company X to rapidly adapt to emerging needs without the complexities of setting up additional, separate systems. Each new chatbot connects to the hub, accessing and contributing to the collective knowledge network established within the company.

Things to consider when implementing the LLM Hub solution

1. Integration with Legacy Systems: Enterprises with established legacy systems must devise strategies for integrating with LLM hubs. This ensures that these systems can engage with AI-driven technologies without disrupting existing workflows.

2. Data Privacy and Security: Given that chatbots handle sensitive data, it is crucial to maintain data privacy and security during interactions and within the hub. Implementing strong encryption and secure transfer protocols, along with adherence to regulations such as GDPR, is necessary to protect data integrity.

3. Adaptive Learning and Feedback Loops: Embedding adaptive learning within LLM hubs is key to the progressive enhancement of chatbot interactions. Feedback loops allow for continual learning and improvement of provided responses based on user interactions.

4. Multilingual Support: Ideally, LLM hubs should accommodate multilingual capabilities to support global operations. This enables chatbots to interact with a diverse user base in their preferred languages, broadening the service's reach and inclusivity.

5. Analytics and Reporting: The inclusion of advanced analytics and reporting within the LLM hub offers valuable insights into chatbot interactions. Tracking metrics like response accuracy and user engagement helps fine-tune AI services for better performance.

6. Scalability and Flexibility: An LLM hub should be designed to handle scaling in response to the growing number of interactions and the expanding variety of tasks required by the business, ensuring the system remains robust and adaptable over time.

Conclusion

LLM hubs represent a proactive approach to overcoming the challenges posed by isolated chatbots within organizations. By ensuring LLM interoperability and fostering seamless communication between different AI services, these hubs enable companies to fully leverage their AI assets.

This not only promotes a more integrated and efficient operational structure but also sets the stage for innovation and reduced complexity in the AI landscape. As GenAI adoption continues to expand, developing interoperability solutions like the LLM hub will be crucial for businesses aiming to optimize their AI investments and achieve a cohesive and effective chatbot ecosystem.

Exploring the architecture of automotive electronics: Domain vs. zone

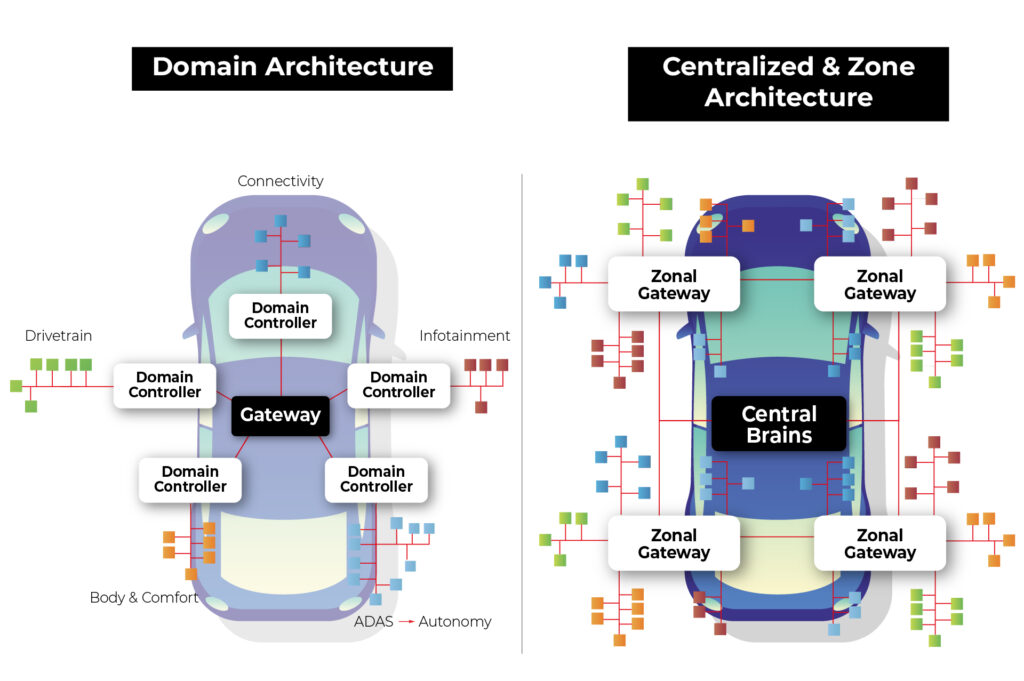

The automotive industry is undergoing a transformative shift towards electrification and automation, with vehicles becoming increasingly reliant on sophisticated electrical and electronic systems. At the heart of this evolution lies the architecture that governs how these systems are organized and integrated within the vehicle. Two prominent paradigms have emerged in this domain: domain architecture and zone architecture.

Source: https://www.eetasia.com/the-role-of-centralized-storage-in-the-emerging-zonal-automotive-architecture/

Domain Architecture : In this approach, various electrical and electronic functions are organized around "domains" or functional modules. Each domain is responsible for a specific functional area of the vehicle, such as the engine, braking system, steering system, etc. Each domain may have its independent controllers and communication networks.

Example : In a domain architecture setup, the engine domain would handle all functions related to the vehicle's engine, including ignition, fuel injection, and emissions control. This domain would have its dedicated controller managing these operations.

Zone Architecture : In this approach, the electrical and electronic systems are organized around different "zones" within the vehicle. Zones typically correspond to specific physical areas of the vehicle, such as the front dashboard, passenger cabin, front-end, rear-end, etc. Each zone may have independent electrical and electronic systems tailored to specific needs and functions.

Example : In a zone architecture setup, the front-end zone might encompass functions like lighting, HVAC (Heating, Ventilation, and Air Conditioning), and front-facing sensors for driver assistance systems. These functions would be integrated into a system optimized for the front-end zone's requirements.

Advantages of zonal architecture over domain architecture

In the realm of automotive electronics, zone architecture offers several advantages over domain architecture, revolutionizing the way vehicles are designed, built, and operated. Let's explore these advantages in detail:

1. Software defined vehicle (SDV)

The concept of a Software Defined Vehicle (SDV) represents a paradigm shift in automotive engineering, transforming vehicles into dynamic platforms driven by software innovation. SDV involves decoupling the application layer from the hardware layer, creating a modular and flexible system that offers several significant advantages:

Abstraction of Application from Hardware : In an SDV architecture, applications are abstracted from the underlying hardware, creating a layer of abstraction that simplifies development and testing processes. This separation allows developers to focus on building software functionalities without being constrained by hardware dependencies.

Sensor Agnosticism : One of the key benefits of SDV is the ability to utilize sensors across multiple applications without being tied to specific domains. In traditional domain architectures, sensors are often dedicated to specific functions, limiting their flexibility and efficiency. In an SDV setup, sensors are treated as shared resources that can be accessed and utilized by various applications independently. This sensor agnosticism enhances resource utilization and reduces redundancy, leading to optimized system performance and cost-effectiveness.

Independent Software Updates : SDV enables independent software updates for different vehicle functions and applications. Instead of relying on centralized control units or domain-specific controllers, software functionalities can be updated and upgraded autonomously, enhancing the agility and adaptability of the vehicle.

The over-the-air (OTA) update system in zonal architecture is also generally simpler, because the whole idea is based on abstracting software from hardware, and loosely coupled software is much easier to update remotely.

With independent software updates, manufacturers can address software bugs, introduce new features and deploy security patches more efficiently. This capability ensures that vehicles remain up-to-date with the latest advancements and safety standards, enhancing user satisfaction and brand reputation.
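The sensor-sharing idea described above can be illustrated with a small publish/subscribe sketch. It is not taken from any production vehicle stack: `SensorBus`, the sensor name `outside_temp_c`, and the two consumer applications are hypothetical, and a real vehicle would use network middleware rather than an in-process object.

```python
from collections import defaultdict
from typing import Callable


class SensorBus:
    """Hypothetical in-process bus that lets many applications share one sensor."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[float], None]]] = defaultdict(list)

    def subscribe(self, sensor: str, handler: Callable[[float], None]) -> None:
        # Any application may register interest in any sensor.
        self._subscribers[sensor].append(handler)

    def publish(self, sensor: str, value: float) -> None:
        # Deliver the reading to every subscriber, regardless of its domain.
        for handler in self._subscribers[sensor]:
            handler(value)


bus = SensorBus()
received: dict[str, float] = {}

# Two independent applications consume the same outside-temperature sensor.
bus.subscribe("outside_temp_c", lambda value: received.__setitem__("hvac", value))
bus.subscribe("outside_temp_c", lambda value: received.__setitem__("ice_warning", value))

bus.publish("outside_temp_c", -2.5)
print(received)
```

Neither application knows about the other, so either one could be updated or replaced without touching the sensor or its other consumers.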

2. Security

Zone architecture in automotive electronics offers concrete advantages over domain architecture. Let's examine how zone architecture addresses security concerns more effectively compared to domain architecture:

Network Access Vulnerabilities in Domain Architecture:

In domain architecture, connecting to the vehicle network grants access to the entire communication ecosystem, including sensors, actuators, and the central computer.

Particularly concerning is the Controller Area Network (CAN), a widely used protocol lacking built-in authentication and authorization mechanisms. Once connected to a CAN network, an attacker can send arbitrary messages as if originating from legitimate devices.

Granular Access Control in Zone Architecture:

Zone architecture introduces granular access control mechanisms, starting at the nearest gateway to the zone. Each message passing through the gateway is scrutinized, allowing only authorized communications to proceed while rejecting unauthorized ones.

By implementing granular access control, attackers accessing the network gain access only to communication between sensors and the gateway. Moreover, the architecture enables the segregation of end networks based on threat levels.
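To make the gateway's role more concrete, here is a minimal sketch of message filtering at a zone gateway. It assumes the simplest possible policy, an allowlist of CAN identifiers; the `ZoneGateway` class and the identifiers `0x120` and `0x050` are hypothetical, and an ID allowlist on its own is not authentication, so a production gateway would need stronger checks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    can_id: int
    data: bytes


class ZoneGateway:
    """Hypothetical gateway that forwards only allowlisted CAN identifiers."""

    def __init__(self, allowed_ids: set[int]) -> None:
        self._allowed_ids = frozenset(allowed_ids)
        self.rejected: list[Frame] = []

    def forward(self, frame: Frame) -> bool:
        # Classic CAN frames carry at most 8 data bytes.
        if frame.can_id in self._allowed_ids and len(frame.data) <= 8:
            return True
        # Keep rejected frames so they can be inspected later.
        self.rejected.append(frame)
        return False


HVAC_TEMP_REQUEST = 0x120  # hypothetical identifier
BRAKE_COMMAND = 0x050      # hypothetical identifier

gateway = ZoneGateway({HVAC_TEMP_REQUEST})
ok = gateway.forward(Frame(HVAC_TEMP_REQUEST, bytes([22])))
blocked = gateway.forward(Frame(BRAKE_COMMAND, bytes([0xFF])))
print(ok, blocked, len(gateway.rejected))
```

An attacker connected to the HVAC zone network can still inject frames there, but frames with identifiers outside the allowlist never pass the gateway to the rest of the vehicle.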

Network Segmentation for Enhanced Security:

In a zone architecture setup, it's feasible to segment networks based on the criticality of components and potential exposure to threats.

Less critical sensors and actuators can be grouped together on a single CAN network. Conversely, critical sensors vulnerable to external access can be connected via encrypted Ethernet connections, offering an additional layer of security.

In summary, zone architecture provides a reliable solution to security vulnerabilities inherent in domain architecture. By implementing granular access control and network segmentation, zone architecture significantly reduces the attack surface and enhances the overall security posture of automotive systems. This approach ensures that critical vehicle functions remain protected against unauthorized access and manipulation, safeguarding both the vehicle and its occupants from potential cyber threats.

3. Simplified and lightweight wiring

Wiring in automotive electronics plays a critical role in connecting various components and systems within the vehicle. However, it also poses challenges, particularly in terms of weight and complexity. This section explores how zone architecture addresses these challenges, leading to simplified and lightweight wiring solutions.

The Weight of Wiring: It's important to recognize that wiring is one of the heaviest components in a vehicle, trailing only behind the chassis and engine. In fact, the total weight of wiring harnesses in a vehicle can reach up to 70 kilograms. This significant weight contributes to the overall mass of the vehicle, affecting fuel efficiency, handling, and performance.

Challenges with Traditional Wiring: Traditional wiring systems, especially in domain architecture, often involve long and complex wiring harnesses that span the entire vehicle. This extensive wiring adds to the overall weight and complexity of the vehicle, making assembly and maintenance more challenging.

The Promise of Zone Architecture: Zonal architecture offers a promising alternative by organizing vehicle components into functional zones. This approach allows for more localized placement of sensors, actuators, and control units within each zone, minimizing the distance between components and reducing the need for lengthy wiring harnesses.

Reduced Cable Length: By grouping components together within each zone, zone architecture significantly reduces the overall cable length required to connect these components. Shorter cable runs translate to lower electrical resistance, reduced signal attenuation, and improved signal integrity, resulting in more reliable and responsive vehicle systems.

Optimized Routing and Routing Flexibility: Zone architecture allows for optimized routing of wiring harnesses, minimizing interference and congestion between different systems and components. Moreover, the flexibility inherent in zone architecture enables easier adaptation to different vehicle configurations and customer preferences without the constraints imposed by rigid wiring layouts.
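The link between cable length and electrical resistance mentioned under "Reduced Cable Length" follows from the standard formula R = ρ · L / A (resistivity times length, divided by cross-sectional area). The short calculation below is only an illustration: the 4 m and 1 m runs and the 0.5 mm² cross-section are assumed values, not measurements from a real harness.

```python
COPPER_RESISTIVITY_OHM_M = 1.68e-8  # approximate resistivity of copper at 20 °C


def wire_resistance_ohm(length_m: float, cross_section_mm2: float) -> float:
    """Resistance of a copper wire: R = rho * L / A."""
    area_m2 = cross_section_mm2 * 1e-6  # convert mm^2 to m^2
    return COPPER_RESISTIVITY_OHM_M * length_m / area_m2


# Assumed example: a 4 m run across the vehicle vs. a 1 m run inside a zone.
domain_run = wire_resistance_ohm(length_m=4.0, cross_section_mm2=0.5)
zone_run = wire_resistance_ohm(length_m=1.0, cross_section_mm2=0.5)
print(f"{domain_run:.4f} ohm vs {zone_run:.4f} ohm")
```

Because resistance scales linearly with length, cutting a run to a quarter of its length cuts its resistance to a quarter as well, for the same wire gauge.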

4. Easier and cheaper production

Zonal architecture not only enhances the functionality and efficiency of automotive electronics but also streamlines the production process, making it easier and more cost-effective. Let's explore how zone architecture achieves this:

Modular Assembly : One of the key advantages of zone architecture is its modular nature, which allows for the assembly of individual zones separately before integrating them into the complete vehicle. This modular approach simplifies the assembly process, as each zone can be constructed and tested independently, reducing the complexity of assembly lines and minimizing the risk of errors during assembly.

Reduced Wiring Complexity : The reduction in wiring complexity achieved through zone architecture has a significant impact on production costs. Wiring harnesses are one of the most expensive components in a vehicle, primarily due to the labor-intensive nature of their installation. Each wire must be routed and connected individually, and since each domain typically has its own wiring harness, the process becomes even more laborious.

Automation Challenges with Wiring : Furthermore, automating the wiring process is inherently challenging due to the intricate nature of routing wires and connecting them to various components. While automation has been successfully implemented in many aspects of automotive production, wiring assembly remains largely manual, requiring a significant workforce to complete the task efficiently.

Batch Production of Zones : With zone architecture, the assembly of individual zones can be batch-produced, allowing for standardized processes and economies of scale. This approach enables manufacturers to optimize production lines for specific tasks, reduce setup times between production runs, and achieve greater consistency and quality control.

Integration of Wiring Harnesses : Another advantage of zone architecture is the integration of wiring harnesses into larger assemblies, such as the entire zone. By combining wiring harnesses and assembly for an entire zone into a single process, manufacturers can significantly accelerate production and reduce costs associated with wiring installation and integration.

In summary, zone architecture simplifies and streamlines the production process of vehicles by allowing for modular assembly, reducing wiring complexity, addressing automation challenges, and facilitating batch production of zones. By integrating wiring harnesses into larger assemblies and optimizing production lines, manufacturers can achieve cost savings, improve efficiency, and enhance overall quality in automotive production.

Introduction to zone architecture demonstration

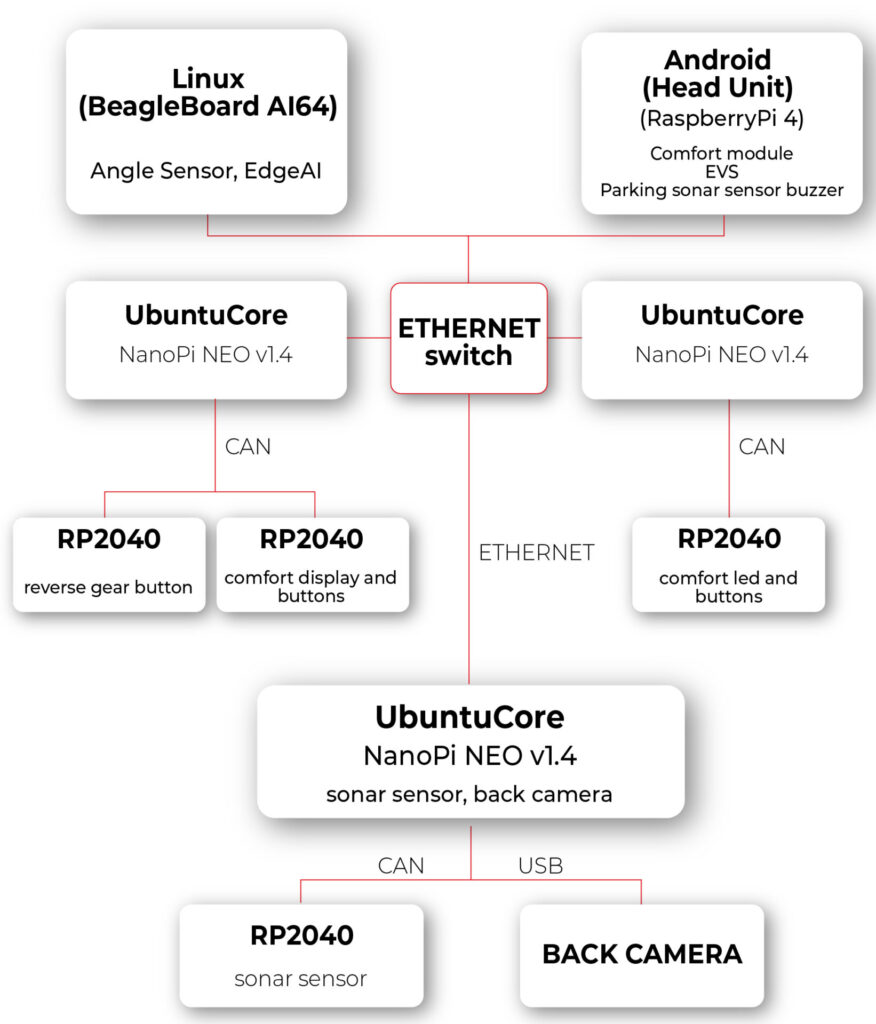

In our Research and Development (R&D) department, we're thrilled to present a demonstration showcasing the power and versatility of zone architecture in automotive electronics. Let's take a closer look at the key components of our setup:

1. Android Computer with Modified VHAL for HVAC:

- Our central computing platform is an Android 14 device running Android Automotive OS (AAOS). This device serves as the hub for system control and communication.

- The modified Vehicle Hardware Abstraction Layer (VHAL) is a pivotal component of our setup. This software layer enables seamless interaction with the vehicle's HVAC module.

- The modified VHAL establishes a connection with the HVAC module through Ethernet and REST API communication protocols, facilitating real-time control and monitoring of HVAC functions.

2. Zone Computer with Ubuntu Core and HVAC Controller Application:

- Each zone in our architecture is equipped with a dedicated computer running Ubuntu Core.

- These zone computers act as the brains of their respective zones, hosting our custom HVAC controller application.

- The HVAC controller application, developed in-house, is responsible for interpreting commands received from the central Android computer and orchestrating HVAC operations within the zone.

- By leveraging Ethernet and REST API communication, as well as the Controller Area Network (CAN), the HVAC controller application ensures seamless integration and coordination of HVAC functions.

3. Microcontroller for Physical Interface:

- To provide users with intuitive control over HVAC settings, we've integrated a microcontroller into our setup.

- The microcontroller serves as an interface between the physical controls (buttons and display) and the zone computer.

- Using the CAN interface, the microcontroller relays user inputs to the zone computer, enabling responsive and interactive control of HVAC parameters.

- By bridging the gap between the digital and physical domains, the microcontroller enhances user experience and usability, making HVAC control intuitive and accessible.

The experiment with zone architecture in automotive electronics has proven the effectiveness of our setup. In our solution, pressing a button triggers the transmission of information to the zone computer, where the temperature is adjusted and broadcast to the respective temperature displays in the zone and to the main Android Automotive OS (head unit IVI). Additionally, changing the temperature via the interface on Android results in sending information to the appropriate zone, thereby adjusting the temperature in that zone.
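The flow described above (button press, adjustment in the zone computer, broadcast to the displays and the head unit) can be sketched as follows. This is not the actual HVAC controller application: `ZoneHvacController`, the zone name `front_left`, and the default of 21.0 °C are hypothetical, and the CAN and HTTP transports are replaced by plain function calls.

```python
from typing import Callable

Listener = Callable[[str, float], None]


class ZoneHvacController:
    """Hypothetical zone-side controller that owns the zone's target temperature."""

    def __init__(self, zone: str, target_c: float = 21.0) -> None:
        self.zone = zone
        self.target_c = target_c
        self._listeners: list[Listener] = []

    def add_listener(self, listener: Listener) -> None:
        self._listeners.append(listener)

    def on_button(self, delta_c: float) -> None:
        # A physical button press arrives (over CAN in the demo setup).
        self.set_target(self.target_c + delta_c)

    def set_target(self, target_c: float) -> None:
        # Also called when the head unit requests a change.
        self.target_c = target_c
        for listener in self._listeners:
            listener(self.zone, target_c)


events: list[tuple[str, str, float]] = []
controller = ZoneHvacController("front_left")
controller.add_listener(lambda zone, temp: events.append(("display", zone, temp)))
controller.add_listener(lambda zone, temp: events.append(("head_unit", zone, temp)))

controller.on_button(+0.5)   # user presses "warmer" in the zone
controller.set_target(19.0)  # user changes the temperature on the head unit
print(events)
```

Keeping the state in the zone controller means both input paths end in the same `set_target` call, so the displays and the head unit always receive the same value.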

During hardware-layer testing, we used a REST API over HTTP to expedite implementation, although we anticipated from the outset that it would not suffice in the long run. Testing confirmed its limitations: the VHAL in the Android system needs to know the HTTP addresses of individual zones and specify to which zone the temperature change should be sent. This approach is not very flexible and may introduce delays associated with each connection to the HTTP server.
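The addressing limitation can be seen in a minimal sketch of the client side. Everything specific here is an assumption made for illustration: the zone names, the IP addresses, the `/hvac/temperature` path, the JSON body, and the use of `PUT`. The request is only constructed, not sent.

```python
import json
from urllib.request import Request

# Hypothetical static table: the caller must know every zone's HTTP address.
ZONE_ENDPOINTS = {
    "front_left": "http://192.168.10.11:8080",
    "front_right": "http://192.168.10.12:8080",
}


def build_temperature_request(zone: str, target_c: float) -> Request:
    """Build (but do not send) the HTTP request for one specific zone."""
    base_url = ZONE_ENDPOINTS[zone]  # raises KeyError for an unknown zone
    body = json.dumps({"target_c": target_c}).encode("utf-8")
    return Request(
        f"{base_url}/hvac/temperature",
        data=body,
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


request = build_temperature_request("front_left", 21.5)
print(request.get_method(), request.full_url)
```

Adding or moving a zone means changing `ZONE_ENDPOINTS` on the sending side, and each change of temperature costs a separate HTTP request to that zone's server.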

In the next article, we plan to review available communication protocols and methods of message description in such a network. Our goal will be to find protocols that excel in terms of speed, flexibility of application, and security. By doing so, we aim to further refine our solution and maximize its effectiveness in the context of zone architecture in automotive electronics.

Addressing data governance challenges in enterprises through the use of LLM Hubs

In an era where more than 80% of enterprises are expected to use Generative AI by 2026, up from less than 5% in 2023, the integration of AI chatbots is becoming increasingly common. This adoption is driven by the significant efficiency boosts these technologies offer, with over half of businesses now deploying conversational AI for customer interactions.

In fact, 92% of Fortune 500 companies are using OpenAI’s technology, with 94% of business executives believing that AI is a key to success in the future.

Challenges to GenAI implementation

The implementation of large language models (LLMs) and AI-driven chatbots is a challenging task in the current enterprise technology scene. Apart from the complexity of integrating these technologies, there is a crucial need to manage the vast amount of data they process securely and ethically. This emphasizes the importance of having robust data governance practices in place.

Organizations deploying generative AI chatbots may face security risks associated with both external breaches and internal data access. Since these chatbots are designed to streamline operations, they require access to sensitive information. Without proper control measures in place, there is a high possibility that confidential information may be inadvertently accessed by unauthorized personnel.

For example, when chatbots or AI tools are used to automate financial processes or provide financial insights, failures in secure data management may lead to malicious breaches.

Similarly, a customer service bot may expose confidential customer data to departments that do not have a legitimate need for it. This highlights the need for strict access controls and proper data handling protocols to ensure the security of sensitive information.

Dealing with complexities of data governance and LLMs

To integrate LLMs into current data governance frameworks, organizations need to adjust their strategy. This lets them use LLMs effectively while still following important standards like data quality, security, and compliance.

- It is crucial to adhere to ethical and regulatory standards when using data within LLMs. Establish clear guidelines for data handling and privacy.

- Devise strategies for the effective management and anonymization of the vast data volumes required by LLMs.

- Regular updates to governance policies are necessary to keep pace with technological advancements, ensuring ongoing relevance and effectiveness.

- Implement strict oversight and access controls to prevent unauthorized exposure of sensitive information through, for example, chatbots.

Introducing the LLM hub: centralizing data governance

An LLM hub empowers companies to manage data governance effectively by centralizing control over how data is accessed, processed, and used by LLMs within the enterprise. Instead of implementing fragmented solutions, this hub serves as a unified platform for overseeing and integrating AI processes.

By directing all LLM interactions through this centralized platform, businesses can monitor how sensitive data is being handled. This guarantees that confidential information is only processed when required and in full compliance with privacy regulations.

Role-Based Access Control in the LLM hub

A key feature of the LLM Hub is its implementation of Role-Based Access Control (RBAC). This system enables precise delineation of access rights, ensuring that only authorized personnel can interact with specific data or AI functionalities. RBAC limits access to authorized users based on their roles in their organization. This method is commonly used in various IT systems and services, including those that provide access to LLMs through platforms or hubs designed for managing these models and their usage.

In a typical RBAC system for an LLM Hub, roles are defined based on the job functions within the organization and the access to resources that those roles require. Each role is assigned specific permissions to perform certain tasks, such as generating text, accessing billing information, managing API keys, or configuring model parameters. Users are then assigned roles that match their responsibilities and needs.
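A minimal sketch of this role-to-permission mapping is shown below. The role names, user names, and the `is_allowed` helper are hypothetical; only the permission names echo the tasks mentioned in the paragraph above.

```python
# Hypothetical roles, each mapped to the permissions it grants.
ROLE_PERMISSIONS: dict[str, frozenset[str]] = {
    "analyst": frozenset({"generate_text"}),
    "finance": frozenset({"generate_text", "view_billing"}),
    "platform_admin": frozenset({"generate_text", "manage_api_keys", "configure_model"}),
}

# Hypothetical users, each assigned one or more roles.
USER_ROLES: dict[str, set[str]] = {
    "alice": {"analyst"},
    "bob": {"finance"},
    "carol": {"platform_admin"},
}


def is_allowed(user: str, permission: str) -> bool:
    """A user holds a permission if any of their roles grants it."""
    return any(
        permission in ROLE_PERMISSIONS.get(role, frozenset())
        for role in USER_ROLES.get(user, ())
    )


print(is_allowed("alice", "generate_text"))  # analyst role grants this
print(is_allowed("alice", "view_billing"))   # no role of alice grants this
```

Permissions are attached to roles rather than to users, so changing what a job function may do is a single edit to `ROLE_PERMISSIONS`.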

Here are some of the key features and benefits of implementing RBAC in an LLM Hub:

- By limiting access to resources based on roles, RBAC helps to minimize potential security risks. Users have access only to the information and functionality necessary for their roles, reducing the chance of accidental or malicious breaches.

- RBAC allows for easier management of user permissions. Instead of assigning permissions to each user individually, administrators can assign roles to users, streamlining the process and reducing administrative overhead.

- For organizations that are subject to regulations regarding data access and privacy, RBAC can help ensure compliance by strictly controlling who has access to sensitive information.

- Roles can be customized and adjusted as organizational needs change. New roles can be created, and permissions can be updated as necessary, allowing the access control system to evolve with the organization.

- RBAC systems often include auditing capabilities, making it easier to track who accessed what resources and when. This is crucial for investigating security incidents and for compliance purposes.

- RBAC can enforce the principle of separation of duties, which is a key security practice. This means that no single user should have enough permissions to perform a series of actions that could lead to a security breach. By dividing responsibilities among different roles, RBAC helps prevent conflicts of interest and reduces the risk of fraud or error.

Practical application: safeguarding HR Data

Let's break down a practical scenario where an LLM Hub can make a significant difference - managing HR inquiries:

- Scenario: An organization employed chatbots to handle HR-related questions from employees. These bots needed access to personal employee data, but in a way that prevented misuse or unauthorized exposure.

- Challenge: The main concern was the risk of sensitive HR data—such as personal employee details, salaries, and performance reviews—being accessed by unauthorized personnel through the AI chatbots. This posed a significant risk to privacy and compliance with data protection regulations.

- Solution with the LLM hub:

  - Controlled access: Through RBAC, only HR personnel can query the chatbot for sensitive information, significantly reducing the risk of data exposure to unauthorized staff.

  - Audit trails: The system maintained detailed audit trails of all data access and user interactions with the HR chatbots, facilitating real-time monitoring and swift action on any irregularities.

  - Compliance with data privacy laws: To ensure compliance with data protection regulations, the LLM Hub now includes automated compliance checks. These help to adjust protocols as needed to meet legal standards.

- Outcome: The integration of the LLM Hub at the company led to a significant improvement in the security and privacy of HR records. By strictly controlling access and ensuring compliance, the company not only safeguarded employee information but also strengthened its stance on data ethics and regulatory adherence.
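The controlled-access and audit-trail parts of this scenario could look roughly like the sketch below. It is an illustration under simplifying assumptions: `HrAssistant`, `AuditEntry`, and the user names are hypothetical, access is decided by a plain membership check, and the answer is a placeholder string rather than a real LLM response.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEntry:
    timestamp: datetime
    user: str
    question: str
    allowed: bool


@dataclass
class HrAssistant:
    """Hypothetical HR chatbot front end: checks access and records every request."""

    hr_staff: set[str]
    audit_log: list[AuditEntry] = field(default_factory=list)

    def ask(self, user: str, question: str) -> str:
        allowed = user in self.hr_staff
        # Every request is logged, whether or not it is allowed.
        self.audit_log.append(
            AuditEntry(datetime.now(timezone.utc), user, question, allowed)
        )
        if not allowed:
            return "Access denied: this assistant is restricted to HR personnel."
        return f"[answer generated from HR data for: {question}]"


assistant = HrAssistant(hr_staff={"hr_anna"})
denied = assistant.ask("dev_tom", "What is the salary of employee 42?")
granted = assistant.ask("hr_anna", "How many vacation days are left for employee 42?")
print(denied)
print(len(assistant.audit_log))
```

Logging denied requests as well as granted ones is what makes the audit trail useful for spotting irregularities.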

Conclusion

Robust data governance is crucial as businesses embrace LLMs and AI. The LLM Hub provides a forward-thinking solution for managing the complexities of these technologies. Centralizing data governance is key to ensuring that organizations can leverage AI to improve their operational efficiency without compromising on security, privacy, or ethical standards. This approach not only helps organizations avoid potential pitfalls but also enables sustainable innovation in the AI-driven enterprise landscape.

How to design the LLM Hub Platform for enterprises

In today's fast-paced digital landscape, businesses constantly seek ways to boost efficiency and cut costs. With the rising demand for seamless customer interactions and smoother internal processes, large corporations are turning to innovative solutions like chatbots. These AI-driven tools hold the potential to revolutionize operations, but their implementation isn't always straightforward.

The rapid advancements in AI technology make it challenging to predict future developments. For example, consider the differences in image generation technology that occurred over just two years:

Source: https://medium.com/@junehao/comparing-ai-generated-images-two-years-apart-2022-vs-2024-6c3c4670b905

Find more examples in this blog post.

This text explores the requirements for an LLM Hub platform, highlighting how it can address implementation challenges, including the rapid development of AI solutions, and unlock new opportunities for innovation and efficiency. Understanding the importance of a well-designed LLM Hub platform empowers businesses to make informed decisions about their chatbot initiatives and embark on a confident path toward digital transformation.

Key benefits of implementing chatbots

Several factors fuel the desire for easy and affordable chatbot solutions.

- Firstly, businesses recognize the potential of chatbots to improve customer service by providing 24/7 support, handling routine inquiries, and reducing wait times.

- Secondly, chatbots can automate repetitive tasks, freeing up human employees for more complex and creative work.

- Finally, chatbots can boost operational efficiency by streamlining processes across various departments, from customer service to HR.

However, deploying and managing chatbots across diverse departments and functions can be complex and challenging. Integrating chatbots with existing systems, ensuring they understand and respond accurately to a wide range of inquiries, and maintaining them with regular updates requires significant technical expertise and resources.

This is where LLM Hubs come into play.

What is an LLM Hub?

An LLM Hub is a centralized platform designed to simplify the deployment and management of multiple chatbots within an organization. It provides a single interface to oversee various AI-driven tools, ensuring they work seamlessly together. By centralizing these functions, an LLM Hub makes implementing updates, maintaining security standards, and managing data sources easier.

This centralization allows for consistent and efficient management, reducing the complexity and cost associated with deploying and maintaining chatbot solutions across different departments and functions.

Why does your organization need an LLM Hub?

The need for such solutions is clear. Without the adoption of AI tools, businesses risk falling behind quickly. Furthermore, if companies neglect to manage AI usage, employees might use AI tools independently, leading to potential data leaks. One example of this risk is described in an article detailing leaked conversations using ChatGPT, where sensitive information, including system login credentials, was exposed during a system troubleshooting session at a pharmacy drug portal.

Cost is another critical factor. The affordability of deploying chatbots at scale depends on licensing fees, infrastructure costs, and maintenance expenses. A comprehensive LLM Hub platform that is both cost-effective and scalable allows businesses to adopt chatbot technology with minimal financial risk.

Considerations for the LLM Hub implementation

However, achieving this requires careful planning. Let’s consider, for example, data security. To provide answers tailored to employees and potential customers, we need to integrate the models with extensive data sources. These data sources can be vast, and there is a significant risk of inadvertently revealing more information than intended. The weakest link in any company's security chain is often human error, and the same applies to chatbots. They can make mistakes, and end users may exploit these vulnerabilities through clever manipulation techniques.

We can implement robust tools to monitor and control the information being sent to users. This capability can be applied to every chatbot assistant within our ecosystem, ensuring that sensitive data is protected. The security tools we use - including encryption, authentication mechanisms, and role-based access control - can be easily implemented and tailored for each assistant in our LLM Hub or configured centrally for the entire Hub, depending on the specific needs and policies of the organization.
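One way to picture "configured centrally or tailored per assistant" is a set of hub-wide defaults that individual assistants may override. The sketch below assumes exactly that model; the setting names, assistant names, and `effective_policy` helper are hypothetical and do not describe a specific product's configuration format.

```python
# Hypothetical hub-wide security defaults, applied to every assistant.
HUB_DEFAULTS = {
    "encryption": "required",
    "authentication": "sso",
    "rbac_enabled": True,
    "log_responses": True,
}

# Hypothetical per-assistant overrides; anything not listed inherits the default.
ASSISTANT_OVERRIDES = {
    "hr_assistant": {"allowed_roles": ["hr"]},
    "public_faq": {"authentication": "none", "rbac_enabled": False},
}


def effective_policy(assistant: str) -> dict:
    """Merge hub defaults with the assistant's overrides (overrides win)."""
    return {**HUB_DEFAULTS, **ASSISTANT_OVERRIDES.get(assistant, {})}


print(effective_policy("hr_assistant"))
print(effective_policy("public_faq"))
```

An assistant with no entry in `ASSISTANT_OVERRIDES` simply receives the central policy, so new chatbots are covered by default.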

As mentioned, deploying and managing chatbots across diverse departments and functions can also be complex and challenging. Efficient development is crucial for organizations seeking to stay compliant with regulatory requirements and internal policies while maximizing operational effectiveness. This requires utilizing standardized templates or blueprints within an LLM Hub, which not only accelerates development but also ensures consistency and compliance across all chatbots.

Additionally, LLM Hubs offer robust tools for compliance management and control, enabling organizations to monitor and enforce regulatory standards, access controls, and data protection measures seamlessly. These features play a pivotal role in reducing the complexity and cost associated with deploying and maintaining chatbot solutions while simultaneously safeguarding sensitive data and mitigating compliance risks.

In the following chapter, we will delve into the specific technical requirements necessary for the successful implementation of an LLM Hub platform, addressing the challenges and opportunities it presents.

LLM Hub - technical requirements

Several key technical requirements must be met to ensure that the LLM Hub functions effectively within the organization's AI ecosystem. These requirements focus on data integration, adaptability, integration methods, and security measures. For this use case, four major requirements were set based on the business problem we want to solve.

- Independent Integration of Internal Data Sources: The LLM Hub should seamlessly integrate with the organization's existing data sources. This ensures that data from different departments or sources within the organization can be seamlessly incorporated into the LLM Hub. It enables the creation of chatbots that leverage valuable internal data, regardless of the specific chatbot's function. Data owners can deliver data sources, which promotes flexibility and scalability for diverse use cases.

- Easy Onboarding of New Use Cases: The LLM Hub should streamline the process of adding new chatbots and functionalities. Ideally, the system should allow the creation of reusable solutions and data tools. This means the ability to quickly create a chatbot and plug data tools, such as internal data sources or web search functionalities, into it. This reusability minimizes the development time and resources required for each new chatbot, accelerating AI deployment.

- Security Verification Layer for the Entire Platform: Security is paramount in LLM Hub development, which involves sensitive data and a practically unlimited variety of user interactions. The LLM Hub must be equipped with robust security measures to protect user privacy and prevent unauthorized access or malicious activities. Additionally, a question-answer verification layer must be implemented to ensure the accuracy and reliability of the information provided by the chatbots.

- Possibility of Various Integrations with the Assistant Itself: The LLM Hub should offer diverse integration options for AI assistants. Interaction between users and chatbots within the Hub should be available regardless of the communication platform. Whether users prefer to engage via an API, a messaging platform like Microsoft Teams, or a web-based interface, the LLM Hub should accommodate diverse integration options to meet user preferences and operational needs.
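As a rough illustration of a platform-wide verification layer, the sketch below wraps any assistant in a check that runs on every answer before it reaches the user. It covers only one narrow case, withholding answers that look like they contain credentials; the `verified` wrapper, the regular expression, and `leaky_assistant` are hypothetical, and checking answers for accuracy would need a different mechanism.

```python
import re
from typing import Callable

# Hypothetical pattern for credential-like content in an answer.
SECRET_PATTERN = re.compile(r"(?i)\b(password|api[_-]?key)\s*[:=]\s*\S+")

WITHHELD = "The answer was withheld by the verification layer."


def verified(assistant: Callable[[str], str]) -> Callable[[str], str]:
    """Wrap an assistant so every answer passes the same platform-wide check."""

    def wrapper(question: str) -> str:
        answer = assistant(question)
        if SECRET_PATTERN.search(answer):
            return WITHHELD
        return answer

    return wrapper


def leaky_assistant(question: str) -> str:
    # Stand-in for a chatbot that sometimes reveals too much.
    if "login" in question.lower():
        return "Use password: hunter2 to sign in."
    return "The office opens at 9:00."


safe_assistant = verified(leaky_assistant)
print(safe_assistant("How do I login?"))
print(safe_assistant("When does the office open?"))
```

Because the check wraps the assistant rather than living inside it, the same layer can be applied to every chatbot in the hub regardless of the channel it is reached through.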

High-level design of the LLM Hub

A well-designed LLM Hub platform is key to unlocking the true potential of chatbots within an organization. However, building such a platform requires careful consideration of various technical requirements. In the previous section, we outlined four key requirements. Now, we will take an iterative approach to unveil the LLM Hub architecture.

Data sources integration

Figure 1

The architectural diagram in Figure 1 displays a design that prioritizes independent integration of internal data sources. Let us break down the key components and how they contribute to achieving the goal:

- Domain Knowledge Storage (DKS) – knowledge storage acts as a central repository for all the data extracted from the internal source. Here, the data is organized using a standardized schema for all domain knowledge storages. This schema defines the structure and meaning of the data (metadata), making it easier for chatbots to understand and query the information they need regardless of the original source.

- Data Loaders – data loaders act as bridges between the LLM Hub and specific data sources within the organization. Each loader can be configured and created independently using its native protocols (APIs, events, etc.), resulting in structured knowledge in DKS. This ensures that LLM Hub can integrate with a wide variety of data sources without requiring significant modifications in the assistant. Data Loaders, along with DKS, can be provided by data owners who are experts in the given domain.

- Assistant – represents a chatbot that can be built using the LLM Hub platform. It uses the RAG approach, getting knowledge from different DKSs to understand the topic and answer user questions. It is the only piece of architecture where use case owners can make some changes like prompt engineering, caching, etc.
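The relationship between the three components above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the actual LLM Hub code: the `KnowledgeRecord` fields, the `DataLoader` protocol, the `WikiLoader` class, and the keyword-based `search` are all hypothetical stand-ins (a real DKS would more likely use vector or full-text search).

```python
from dataclasses import dataclass, field
from typing import Iterable, Protocol


@dataclass
class KnowledgeRecord:
    """One DKS entry in the shared schema (field names are assumptions)."""
    record_id: str
    source: str
    text: str
    metadata: dict = field(default_factory=dict)


class DataLoader(Protocol):
    """Anything that can turn a data source into schema-conformant records."""
    def load(self) -> Iterable[KnowledgeRecord]: ...


class DomainKnowledgeStorage:
    """In-memory stand-in for a Domain Knowledge Storage."""

    def __init__(self, domain: str) -> None:
        self.domain = domain
        self._records: dict[str, KnowledgeRecord] = {}

    def ingest(self, loader: DataLoader) -> int:
        """Store every record produced by the loader; return how many were read."""
        count = 0
        for record in loader.load():
            self._records[record.record_id] = record
            count += 1
        return count

    def search(self, query: str) -> list[KnowledgeRecord]:
        """Naive keyword match, used here only to keep the sketch runnable."""
        terms = query.lower().split()
        return [
            record for record in self._records.values()
            if any(term in record.text.lower() for term in terms)
        ]


class WikiLoader:
    """Hypothetical loader; a real one would call the wiki's own API."""

    def __init__(self, pages: dict[str, str]) -> None:
        self._pages = pages

    def load(self) -> Iterable[KnowledgeRecord]:
        for title, body in self._pages.items():
            yield KnowledgeRecord(
                record_id=f"wiki:{title}",
                source="wiki",
                text=body,
                metadata={"title": title},
            )
```

Because the assistant only ever sees `KnowledgeRecord` objects, adding a new source means writing one more loader, with no change on the assistant side.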

Functions

Figure 2 introduces pre-built functions that can be used by any assistant. They enable easier onboarding of new use cases. Functions can be treated as reusable building blocks for chatbot development. Assistants can easily enable and disable specific functions using configuration.

They can also facilitate knowledge sharing and collaboration within an organization. Users can share functions they have created, allowing others to leverage them and accelerate chatbot development efforts.

Using pre-built functions, developers can focus on each chatbot's unique logic and user interface rather than reinventing the wheel for common functionalities like internet search. Also, using function calling, the LLM can decide whether a specific domain knowledge storage should be called or not, optimizing the RAG process, reducing costs, and minimizing unnecessary calls to external resources.
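One possible way to model reusable functions that assistants enable through configuration is a shared registry, sketched below. The registry, the decorator, the `enabled_functions` configuration key, and both stub functions are assumptions made for illustration; they do not describe a specific LLM provider's API.

```python
from typing import Callable

# Shared registry of pre-built functions (hypothetical structure).
FUNCTION_REGISTRY: dict[str, dict] = {}


def register_function(name: str, description: str) -> Callable:
    """Decorator that publishes a function so any assistant can enable it."""
    def decorator(func: Callable[..., str]) -> Callable[..., str]:
        FUNCTION_REGISTRY[name] = {"description": description, "handler": func}
        return func
    return decorator


@register_function("web_search", "Search the public internet.")
def web_search(query: str) -> str:
    return f"[stub] web results for: {query}"


@register_function("hr_knowledge", "Query the HR domain knowledge storage.")
def hr_knowledge(query: str) -> str:
    return f"[stub] HR documents for: {query}"


def tools_for_assistant(config: dict) -> list[dict]:
    """Return the function descriptions to advertise to the LLM for this assistant."""
    enabled = config.get("enabled_functions", [])
    return [
        {"name": name, "description": FUNCTION_REGISTRY[name]["description"]}
        for name in enabled
        if name in FUNCTION_REGISTRY
    ]


def execute_call(config: dict, name: str, arguments: dict) -> str:
    """Run a function the LLM asked for, but only if this assistant enabled it."""
    if name not in config.get("enabled_functions", []) or name not in FUNCTION_REGISTRY:
        raise PermissionError(f"function not enabled for this assistant: {name}")
    return FUNCTION_REGISTRY[name]["handler"](**arguments)
```

An assistant configured with `{"enabled_functions": ["hr_knowledge"]}` advertises only that function to the model, so the LLM can decide to query HR knowledge but cannot trigger a web search.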

Figure 2

Middleware

With the next diagram (Figure 3), we introduce an additional layer of middleware, a crucial enhancement that fortifies our software by incorporating a unified authentication process and a prompt validation layer. This middleware acts as a gatekeeper, ensuring that all requests meet our security and compliance standards before proceeding further into the system.

When a user sends a request, the middleware's authentication module verifies the user's credentials to ensure they have the necessary permissions to access the requested resources. This step is vital in maintaining the integrity and security of our system, protecting sensitive data, and preventing unauthorized access. By implementing a robust authentication mechanism, we safeguard our infrastructure from potential breaches and ensure that only legitimate users interact with our assistants.

Next, the prompt validation layer comes into play. This component is designed to scrutinize each incoming request to ensure it complies with company policies and guidelines. Given the sophisticated nature of modern AI models, there are numerous ways to craft queries that could potentially extract sensitive or unauthorized information. For instance, as highlighted in a recent study, there are methods to extract training data through well-constructed queries. By validating prompts before they reach the AI model, we mitigate these risks, ensuring that the data processed is both safe and appropriate.

Figure 3

The middleware, comprising the authentication (Auth) and Prompt Verification Layer, acts as a gatekeeper to ensure secure and valid interactions. The authentication module verifies user credentials, while the Prompt Verification Layer ensures that incoming requests are appropriate and within the scope of the AI model's capabilities. This dual-layer security approach not only safeguards the system but also ensures that users receive relevant and accurate responses.
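The two-step gatekeeper could look roughly like the sketch below. Everything in it is an assumption made for illustration: the token table, the `Request` fields, and the regular-expression blocklist are hypothetical, and a production prompt verification layer would need far more than pattern matching.

```python
import re
from dataclasses import dataclass
from typing import Callable


class AuthError(Exception):
    """Raised when the caller is unknown or may not use the assistant."""


class PromptRejected(Exception):
    """Raised when a prompt violates the (hypothetical) policy rules."""


@dataclass
class Request:
    token: str
    assistant: str
    prompt: str


# Hypothetical in-memory data; a real system would query an identity provider.
TOKENS = {"token-alice": {"user": "alice", "assistants": {"hr-bot"}}}
BLOCKED_PATTERNS = [
    re.compile(r"ignore (all )?previous instructions", re.IGNORECASE),
    re.compile(r"repeat .* forever", re.IGNORECASE),
]


def authenticate(request: Request) -> str:
    """Step 1: verify credentials and access to the requested assistant."""
    identity = TOKENS.get(request.token)
    if identity is None:
        raise AuthError("unknown token")
    if request.assistant not in identity["assistants"]:
        raise AuthError(f"no access to assistant: {request.assistant}")
    return identity["user"]


def validate_prompt(prompt: str) -> None:
    """Step 2: reject prompts that match a blocked pattern."""
    for pattern in BLOCKED_PATTERNS:
        if pattern.search(prompt):
            raise PromptRejected(f"prompt matches blocked pattern: {pattern.pattern}")


def middleware(request: Request, assistant_call: Callable[[str, str], str]) -> str:
    """Run both checks, then forward the request to the assistant."""
    user = authenticate(request)
    validate_prompt(request.prompt)
    return assistant_call(user, request.prompt)
```

Since every assistant is reached through the same `middleware` function, tightening a rule in `BLOCKED_PATTERNS` or swapping the authentication backend affects all assistants at once.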

Adaptability is the key here. The middleware is designed to be a common component for all our assistants, providing a standardized approach to security and compliance. This uniformity simplifies maintenance, as updates to the authentication or validation processes can be implemented across the board without needing to modify each assistant individually. Furthermore, this modular design allows for easy expansion and customization, enabling us to tailor the solution to meet the specific needs of different customers.

For customers, this means a more reliable and secure system that can adapt to their unique requirements. Whether they need to integrate new authentication protocols, enforce stricter compliance rules, or scale the system to accommodate more users, our middleware framework is flexible enough to handle these changes seamlessly.

Handlers

We are coming to the very beginning of our process: the handlers. Figure 4 highlights the crucial role of these components in managing requests from various sources. Users can interact through different communication platforms, including popular ones in office environments such as Teams and Slack. These platforms are familiar to employees, as they use them daily for communication with colleagues.

Handling prompts from multiple sources can be complex due to the variations in how each platform structures requests. This is where our handlers play a critical role.

They are designed to parse incoming requests and convert them into a standardized format, ensuring consistency in responses regardless of the communication platform used. By developing robust handlers, we ensure that the AI model provides uniform answers across all communicators, thereby enhancing reliability and user experience.
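A handler layer of this kind can be sketched as one small parser per platform, all producing the same object. The payload shapes below are simplified assumptions for illustration only and should not be read as the exact Slack, Teams, or API request formats; `ChatRequest` and its fields are hypothetical as well.

```python
from dataclasses import dataclass


@dataclass
class ChatRequest:
    """Platform-independent request passed on to the middleware (assumed shape)."""
    platform: str
    user_id: str
    conversation_id: str
    text: str


def from_slack(payload: dict) -> ChatRequest:
    # Simplified, assumed payload: {"event": {"user": ..., "channel": ..., "text": ...}}
    event = payload["event"]
    return ChatRequest("slack", event["user"], event["channel"], event["text"].strip())


def from_teams(payload: dict) -> ChatRequest:
    # Simplified, assumed payload: {"from": {"id": ...}, "conversation": {"id": ...}, "text": ...}
    return ChatRequest(
        "teams",
        payload["from"]["id"],
        payload["conversation"]["id"],
        payload["text"].strip(),
    )


def from_api(payload: dict) -> ChatRequest:
    # Hypothetical shape for the custom API handler.
    return ChatRequest("api", payload["user_id"], payload["session_id"], payload["message"].strip())


HANDLERS = {"slack": from_slack, "teams": from_teams, "api": from_api}


def handle(platform: str, payload: dict) -> ChatRequest:
    """Pick the parser for the platform and return the standardized request."""
    try:
        parser = HANDLERS[platform]
    except KeyError:
        raise ValueError(f"unsupported platform: {platform}") from None
    return parser(payload)
```

Supporting a new communicator then comes down to adding one parser function and one entry in `HANDLERS`; nothing downstream needs to know where the request came from.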

Moreover, these handlers streamline the integration process, allowing for easy scalability as new communication platforms are adopted. This flexibility is essential for adapting to the evolving technological landscape and maintaining a cohesive user experience across various channels.

The API handler facilitates the creation of custom, tailored front-end interfaces. This capability allows the company to deliver unique and personalized chat experiences that are adaptable to various scenarios.

For example, front-end developers can leverage the API handler to implement a mobile version of the chatbot or enable interactions with the AI model within a car. With comprehensive documentation, the API handler provides an effective solution for developing and integrating these features seamlessly.

In summary, the handlers are a foundational element of our AI infrastructure, ensuring seamless communication, robust security, and scalability. By standardizing requests and enabling versatile front-end integrations, they provide a consistent and high-quality user experience across various communication platforms.

Figure 4

Conclusions

The development of the LLM Hub platform is a significant step forward in adopting AI technology within large organizations. It effectively addresses the complexities and challenges of implementing chatbots in an easy, fast, and cost-effective way. But to maximize the potential of LLM Hub, architecture is not enough, and several key factors must be considered:

- Continuous Collaboration: Collaboration between data owners, use case owners, and the platform team is essential for the platform to stay at the forefront of AI innovation.

- Compliance and Control: In the corporate world, robust compliance measures must be implemented to ensure the chatbots adhere to industry and organizational standards. LLM Hub is a natural place for them: it can implement granular access controls, audit trails, logging, and policy enforcement.

- Templates for Efficiency: LLM Hub should provide customizable templates for all chatbot components that can be used in a new use case. Providing templates helps teams accelerate the creation and deployment of new assistants, improving efficiency and reducing time to market.

By adhering to these rules, organizations can unlock new avenues for growth, efficiency, and innovation in the era of artificial intelligence. Investing in a well-designed LLM Hub platform equips corporations with the chatbot tools to:

- Simplify Compliance: LLM Hub ensures that chatbots created in the platform adhere to industry regulations and standards, safeguarding your company from legal implications and maintaining a positive brand name.

- Enhance Security: Security measures built into the platform foster trust among all customers and partners, safeguarding sensitive data and the organization's intellectual property.

- Accelerate Chatbot Development: Templates and tools provided by LLM Hub or by other use case owners speed up the development and launch of sophisticated chatbots.

- Asynchronous Collaboration and Work Reduction: An LLM Hub enables teams to work asynchronously on chatbot development, eliminating the need to duplicate efforts, e.g., to create a connection to the same data source or implement the same action.

As AI technology continues to evolve, the potential applications of LLM Hubs will expand, opening new opportunities for innovation. Organizations can leverage this technology to not only enhance customer interactions but also to streamline internal processes, improve decision-making, and foster a culture of continuous improvement. By integrating advanced analytics and machine learning capabilities, the LLM Hub can provide deeper insights and predictive capabilities, driving proactive business strategies.

Furthermore, the modularity and scalability of the LLM Hub platform mean that it can grow alongside the organization, adapting to changing needs without requiring extensive overhauls. Specifically, this growth potential translates to the ability to seamlessly integrate new tools and functionalities into the entire LLM Hub ecosystem. Additionally, as the organization expands, new chatbots can simply be added to the platform and use already implemented tools. This future-proof design ensures that investments made today will continue to yield benefits in the long run.

The successful implementation of an LLM Hub can transform the organizational landscape, making AI an integral part of the business ecosystem. This transformation enhances operational efficiency and positions the organization as a leader in technological innovation, ready to meet future challenges and opportunities.

How to develop AI-driven personal assistants tailored to automotive needs. Part 3

Blend AI assistant concepts together

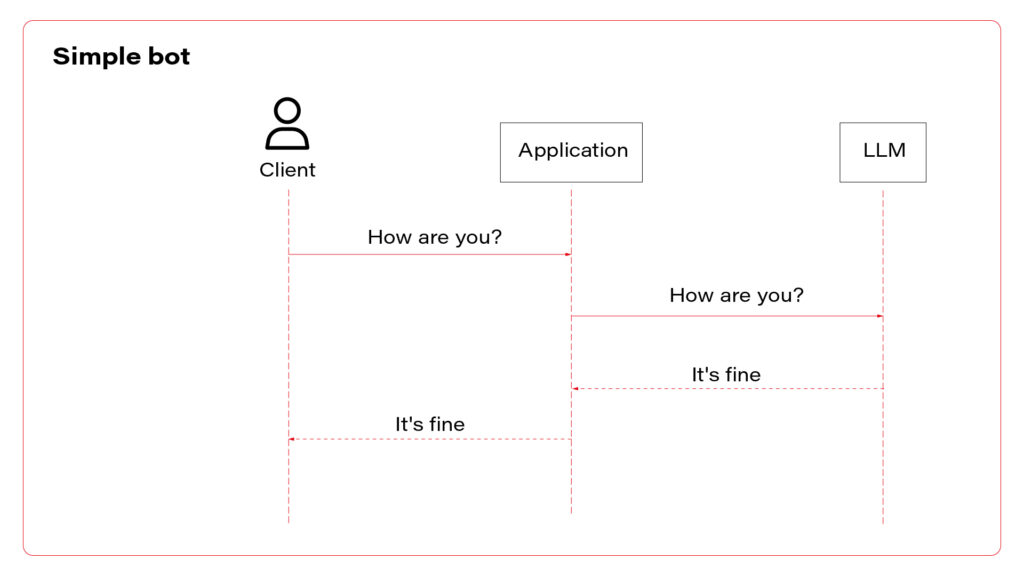

This series of articles starts with a general chatbot description – what it is, how to deploy the model, and how to call it. The second part is about tailoring – how to teach the bot domain knowledge and how to enable it to execute actions. Today, we’ll dive into the architecture of the application, to avoid starting with something we would regret later on.

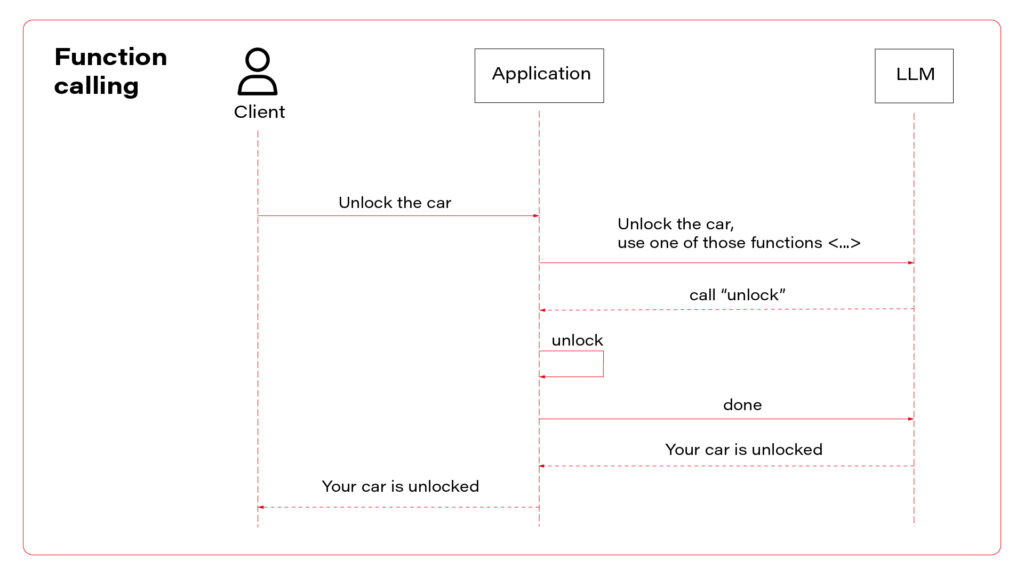

To sum up, there are three AI assistant concepts to consider: simple chatbot, RAG, and function calling.

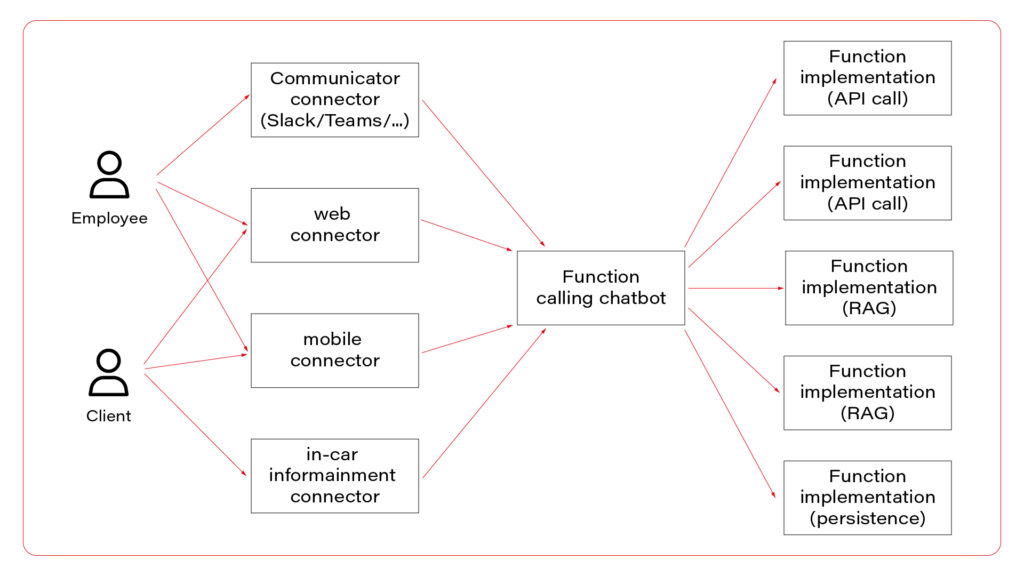

I propose to use them all at once. Let’s talk about the architecture. The perfect one may look as follows.

In the picture, you can see three layers. The first one, connectors, is responsible for session handling. There are some differences between various UI layers, so it’s wise to keep the connectors small, simple, and separated. Members of this layer may be connected to a fast database, like Redis, to allow session sharing between nodes, or you can use a server-to-client or bidirectional communication channel to keep sessions alive. For simple applications, this layer is optional.

The next layer is the chatbot – the “main” application in the system. This is the application connected to the LLM, implementing the function calling feature. If you use middleware between users and the “main” application, this one may be stateless and receive the entire conversation from the middleware with each call. As you can see, the same application serves its capabilities both to employees and clients.

Let’s imagine a chatbot dedicated to recommending a car. Both a client and a dealer may use a very similar application, but the dealer has more capabilities – to order a car, to see stock positions, etc. You don’t need to create two different applications for that. The concept is the same, the architecture is the same, and the LLM client is the same. There are only two elements that differ: system prompts and the set of available functions. You can handle this with a simple abstract factory pattern that provides different prompts and function definitions for different users.
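A minimal sketch of that abstract factory could look as follows. The class names, the role names, the prompt texts, and the `recommend_car` and `check_stock` function definitions are assumptions made for illustration; only `store_order` is taken from this article.

```python
from abc import ABC, abstractmethod


class AssistantProfileFactory(ABC):
    """Creates the two things that differ per user type: prompt and functions."""

    @abstractmethod
    def create_system_prompt(self) -> str: ...

    @abstractmethod
    def create_function_definitions(self) -> list[dict]: ...


# Hypothetical function shared by both user types.
COMMON_FUNCTIONS = [
    {"name": "recommend_car", "description": "Suggest car models matching the user's needs."},
]


class ClientProfileFactory(AssistantProfileFactory):
    def create_system_prompt(self) -> str:
        return "You help a customer choose a car."

    def create_function_definitions(self) -> list[dict]:
        return list(COMMON_FUNCTIONS)


class DealerProfileFactory(AssistantProfileFactory):
    def create_system_prompt(self) -> str:
        return "You assist a dealer with recommendations, stock, and orders."

    def create_function_definitions(self) -> list[dict]:
        # Dealers get the common functions plus dealer-only capabilities.
        return list(COMMON_FUNCTIONS) + [
            {"name": "store_order", "description": "Place an order for a car."},
            {"name": "check_stock", "description": "Show current stock positions."},
        ]


FACTORIES = {"client": ClientProfileFactory, "dealer": DealerProfileFactory}


def build_llm_request(role: str, conversation: list[dict]) -> dict:
    """Assemble the payload for the shared LLM client based on the user's role."""
    try:
        factory = FACTORIES[role]()
    except KeyError:
        raise ValueError(f"unknown role: {role}") from None
    return {
        "messages": [{"role": "system", "content": factory.create_system_prompt()}, *conversation],
        "functions": factory.create_function_definitions(),
    }
```

The rest of the "main" application stays identical for both audiences; only the factory chosen at the start of a session changes what the LLM is told and what it is allowed to call.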

In a perfect world, the last layer is a set of microservices to handle different functions. If the LLM decides to use the function “store_order”, the “main” application calls the “store_order” function microservice that inserts data into an order database. Suppose the LLM decides to use the function “honk_and_flash” to locate a car in a crowded parking lot. In that case, the “main” application calls the “honk_and_flash” function microservice that handles authorization and calls a Digital Twin API to execute the operation in the car. If the LLM decides to use a function “check_in_user_manual”, the “main” application calls the “check_in_user_manual” function microservice, which is… another LLM-based application!

And that’s the point!

A side note before we move on – the world is never perfect, so it’s understandable if you don’t implement each function as a separate microservice and, for example, keep everything in the same application.

The architecture proposed can combine all three AI assistant concepts. The “main” application may answer questions based on general knowledge and system prompts (“simple chatbot” concept) or call a function (“function calling” concept). The function may collect data based on the prompt (“RAG” concept) and do one of the following: call LLM to answer a question or return the data to add it to the context to let the “main” LLM answer the question. Usually, it’s better to follow the former way – to answer the question and not to add huge documents to the context. But for special use cases, like a long conversation about collected data, you may want to keep the document in the context of the conversation.
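The two options for a RAG-style function can be compared in a short sketch. It assumes an in-process `check_in_user_manual` function instead of a separate microservice, a `fake_llm` stand-in instead of a real model client, and a tiny hypothetical `MANUAL` dictionary with naive keyword retrieval; the `mode` parameter is also an assumption introduced for this example.

```python
from typing import Callable

LLM = Callable[[list[dict]], str]


def fake_llm(messages: list[dict]) -> str:
    """Stand-in for a real LLM client; reports how much context it received."""
    context_chars = sum(len(m["content"]) for m in messages)
    return f"answer based on {len(messages)} messages ({context_chars} chars)"


# Hypothetical manual excerpts, keyed by topic.
MANUAL = {
    "tire pressure": "The recommended tire pressure is printed on the door-frame label.",
    "child lock": "The child lock switch is located on the edge of each rear door.",
}


def retrieve(question: str) -> list[str]:
    """Naive keyword retrieval, standing in for a real document search."""
    return [text for topic, text in MANUAL.items() if topic in question.lower()]


def check_in_user_manual(question: str, mode: str = "answer", llm: LLM = fake_llm) -> dict:
    """RAG function: either answer with its own LLM call or return raw documents."""
    if mode not in ("answer", "context"):
        raise ValueError(f"unknown mode: {mode}")
    documents = "\n".join(retrieve(question))
    if mode == "answer":
        messages = [
            {"role": "system", "content": "Answer using only these excerpts:\n" + documents},
            {"role": "user", "content": question},
        ]
        return {"type": "answer", "content": llm(messages)}
    return {"type": "context", "content": documents}


def main_app_turn(conversation: list[dict], question: str, mode: str, llm: LLM = fake_llm) -> list[dict]:
    """One turn of the "main" application after the LLM chose the manual function."""
    result = check_in_user_manual(question, mode, llm)
    conversation.append({"role": "user", "content": question})
    if result["type"] == "answer":
        # The documents never enter the main conversation.
        conversation.append({"role": "assistant", "content": result["content"]})
    else:
        # The documents stay in the context for follow-up questions.
        conversation.append({"role": "system", "content": "Reference material:\n" + result["content"]})
        conversation.append({"role": "assistant", "content": llm(conversation)})
    return conversation
```

In `"answer"` mode, the main conversation grows only by the question and the answer, which keeps the context small. In `"context"` mode, the retrieved excerpts remain in the conversation, which costs more tokens per call but suits a longer discussion about the same documents.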