AAOS 14 - Surround view parking camera: How to configure and launch exterior view system

EVS - park mode

The Android Automotive Operating System (AAOS) 14 introduces significant advancements, including a Surround View Parking Camera system. This feature, part of the Exterior View System (EVS), provides a 360-degree view around the vehicle, making parking safer and easier. This article guides you through configuring and launching EVS on AAOS 14.

Structure of the EVS system in Android 14

The Exterior View System (EVS) in Android 14 combines feeds from multiple external cameras to improve driver awareness and safety. It consists of three primary components: the EVS Driver application, the Manager application, and the EVS App. Each component plays a distinct role in capturing, managing, and displaying the images needed for a complete view of the vehicle's surroundings.

EVS driver application

The EVS Driver application serves as the cornerstone of the EVS system, responsible for capturing images from the vehicle's cameras. These images are delivered as RGBA image buffers, which are essential for further processing and display. Typically, the Driver application is provided by the vehicle manufacturer, tailored to ensure compatibility with the specific hardware and camera setup of the vehicle.

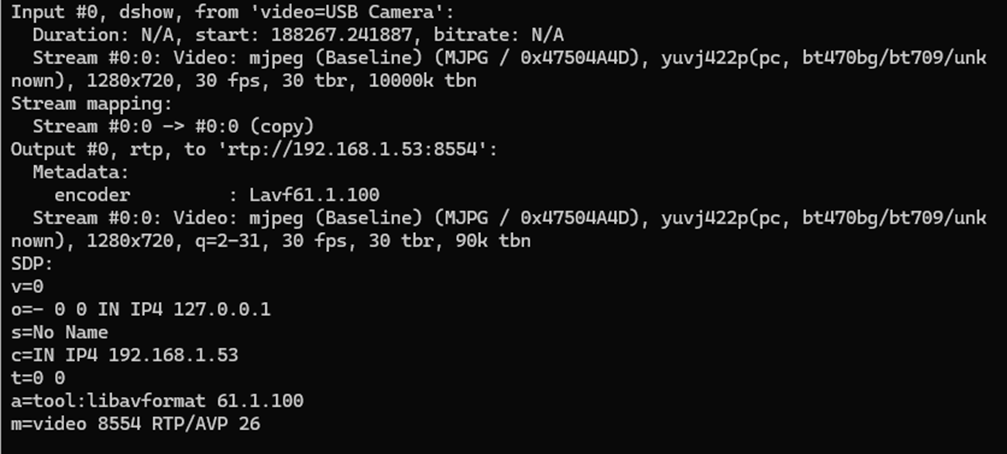

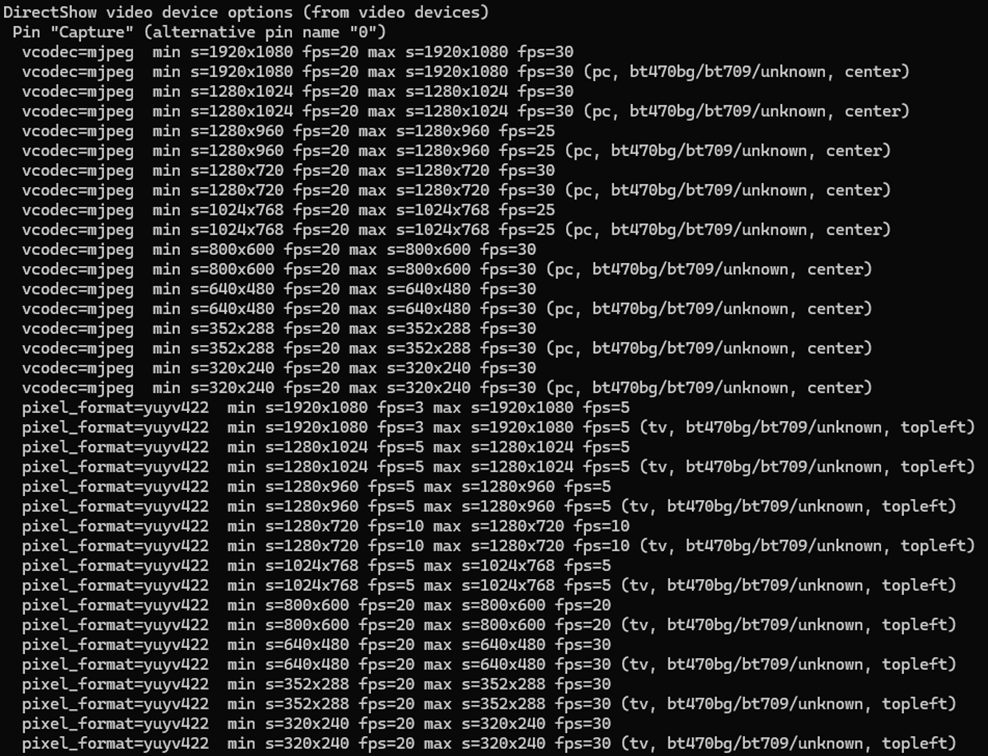
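To make the RGBA image buffers mentioned above more concrete: in an RGBA_8888 buffer each pixel takes four bytes (red, green, blue, alpha, one byte each), and rows may be padded, so the number of pixels per row in memory (the stride) can be larger than the visible width. The following is a minimal sketch of reading and writing one pixel in such a buffer. The RgbaBuffer type and the assumption that the stride is counted in pixels are illustrative only; this is not the EVS HAL's actual buffer descriptor.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical view of an RGBA_8888 image (not the EVS HAL buffer type).
struct RgbaBuffer {
    uint32_t width;             // visible pixels per row
    uint32_t height;            // number of rows
    uint32_t stride;            // pixels per row in memory (assumed >= width)
    std::vector<uint8_t> data;  // stride * height * 4 bytes
};

struct Rgba {
    uint8_t r, g, b, a;
};

constexpr std::size_t kBytesPerPixel = 4;  // R, G, B, A

RgbaBuffer makeBuffer(uint32_t width, uint32_t height, uint32_t stride) {
    assert(stride >= width);
    const std::size_t size = static_cast<std::size_t>(stride) * height * kBytesPerPixel;
    return {width, height, stride, std::vector<uint8_t>(size, 0)};
}

// Byte offset of pixel (x, y); row padding is skipped by multiplying y by the stride.
std::size_t pixelOffset(const RgbaBuffer& buf, uint32_t x, uint32_t y) {
    assert(x < buf.width && y < buf.height);
    return (static_cast<std::size_t>(y) * buf.stride + x) * kBytesPerPixel;
}

Rgba readPixel(const RgbaBuffer& buf, uint32_t x, uint32_t y) {
    const std::size_t off = pixelOffset(buf, x, y);
    return {buf.data[off], buf.data[off + 1], buf.data[off + 2], buf.data[off + 3]};
}

void writePixel(RgbaBuffer& buf, uint32_t x, uint32_t y, Rgba px) {
    const std::size_t off = pixelOffset(buf, x, y);
    buf.data[off] = px.r;
    buf.data[off + 1] = px.g;
    buf.data[off + 2] = px.b;
    buf.data[off + 3] = px.a;
}
```

Indexing through the stride rather than the width is what keeps the code correct when a driver hands out buffers whose rows are padded for alignment.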

To aid developers, Android 14 includes a sample implementation of the Driver application that uses the Linux V4L2 (Video for Linux 2) subsystem. This example demonstrates how to capture images from USB-connected cameras, offering a practical reference for creating compatible Driver applications. The sample implementation is located in the Android source code at packages/services/Car/cpp/evs/sampleDriver.
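When wiring up your own cameras, it helps to confirm which /dev/video* nodes actually deliver video before listing them in the driver configuration. The sketch below uses the standard V4L2 VIDIOC_QUERYCAP ioctl to check whether a node supports video capture with streaming I/O. It is a standalone diagnostic written for this article, not code from the sample driver, and it assumes it runs on a Linux system (for example, the target) with permission to open the device nodes.

```cpp
#include <cstdint>
#include <fcntl.h>
#include <linux/videodev2.h>
#include <sys/ioctl.h>
#include <unistd.h>

// Returns true if `path` is a V4L2 node that supports video capture with
// streaming I/O. Illustrative diagnostic helper, not sample driver code.
bool isStreamingCaptureNode(const char* path) {
    const int fd = open(path, O_RDWR);
    if (fd < 0) {
        return false;  // node does not exist or cannot be opened
    }
    v4l2_capability cap{};
    const bool queried = ioctl(fd, VIDIOC_QUERYCAP, &cap) == 0;
    close(fd);
    if (!queried) {
        return false;  // not a V4L2 device
    }
    // When V4L2_CAP_DEVICE_CAPS is set, device_caps describes this particular
    // node; otherwise fall back to the capabilities of the whole device.
    const uint32_t caps = (cap.capabilities & V4L2_CAP_DEVICE_CAPS) ? cap.device_caps
                                                                   : cap.capabilities;
    return (caps & V4L2_CAP_VIDEO_CAPTURE) != 0 && (caps & V4L2_CAP_STREAMING) != 0;
}
```

Calling it for /dev/video0 through /dev/video3 is a quick way to see which nodes are real capture devices; with UVC webcams, not every node a camera creates is necessarily a video capture node.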

Manager application

The Manager application acts as the intermediary between the Driver application and the EVS App. Its primary responsibilities include managing the connected cameras and displays within the system.

Key tasks:

- Camera management: Controls and coordinates the various cameras connected to the vehicle.

- Display management: Manages the display units, ensuring the correct images are shown based on the input from the Driver application.

- Communication: Facilitates communication between the Driver application and the EVS App, ensuring smooth data flow and integration.

EVS app

The EVS App is the central component of the EVS system, responsible for assembling the images from the various cameras and displaying them on the vehicle's screen. This application adapts the displayed content based on the vehicle's gear selection, providing relevant visual information to the driver.

For instance, when the vehicle is in reverse gear (VehicleGear::GEAR_REVERSE), the EVS App displays the rear camera feed to assist with reversing. When the vehicle is in park (VehicleGear::GEAR_PARK), the app shows a 360-degree view stitched from four cameras, giving an overview of the vehicle's surroundings. In other gear positions, the EVS App stops displaying images and remains in the background, ready to activate when the gear changes again.

The EVS App achieves this by subscribing to the VehicleProperty::GEAR_SELECTION property of the Vehicle Hardware Abstraction Layer (VHAL). This allows the app to adjust the displayed content in real time as the vehicle's gear changes.
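The gear handling described above can be modeled as a small state machine: on every GEAR_SELECTION update, map the gear to a view mode and reconfigure the display only when the mode actually changes. The following is a hypothetical sketch of that logic, not the EVS App's code. The Gear and ViewMode enums and the ViewController class are illustrative stand-ins; in a real system the gear values come from VHAL's VehicleGear enum.

```cpp
#include <optional>

// Illustrative stand-ins; real gear values come from VHAL's VehicleGear enum.
enum class Gear { Park, Reverse, Neutral, Drive };
enum class ViewMode { Off, RearCamera, SurroundView };

// Map a gear to what should be shown, following the behavior described above.
constexpr ViewMode modeForGear(Gear gear) {
    switch (gear) {
        case Gear::Reverse: return ViewMode::RearCamera;
        case Gear::Park:    return ViewMode::SurroundView;
        default:            return ViewMode::Off;  // stay in the background
    }
}

class ViewController {
public:
    // Called for every gear update. Returns the new mode if the display must
    // be reconfigured, or std::nullopt if the visible mode is unchanged.
    std::optional<ViewMode> onGearChanged(Gear gear) {
        const ViewMode next = modeForGear(gear);
        if (next == current_) {
            return std::nullopt;
        }
        current_ = next;
        return next;
    }

    ViewMode current() const { return current_; }

private:
    ViewMode current_ = ViewMode::Off;
};
```

Comparing modes rather than raw gears means that moving between, say, Drive and Neutral causes no display work, since both map to Off.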

Communication interface

Communication between the Driver application, Manager application, and EVS App is facilitated through the IEvsEnumerator HAL interface. This interface plays a crucial role in the EVS system, ensuring that image data is captured, managed, and displayed accurately. The IEvsEnumerator interface is defined in the Android source code at hardware/interfaces/automotive/evs/1.0/IEvsEnumerator.hal.

EVS subsystem update

The EVS source code is located in packages/services/Car/cpp/evs. Make sure you use the latest sources: earlier versions contained bugs that prevent EVS from working. Update and rebuild the EVS sources, then push the binaries to the device (the paths below are for the rpi4 product):

```shell
cd packages/services/Car/cpp/evs
git checkout main
git pull
mm

# Return to the top of the source tree so the out/ paths resolve
cd "$ANDROID_BUILD_TOP"
adb push out/target/product/rpi4/vendor/bin/hw/android.hardware.automotive.evs-default /vendor/bin/hw/
adb push out/target/product/rpi4/system/bin/evs_app /system/bin/
```

EVS driver configuration

To begin, we need to configure the EVS Driver. The configuration file is located at /vendor/etc/automotive/evs/evs_configuration_override.xml.

Here is an example of its content:

```xml
<configuration>
    <!-- system configuration -->
    <system>
        <!-- number of cameras available to EVS -->
        <num_cameras value='2'/>
    </system>

    <!-- camera device information -->
    <camera>
        <!-- camera device starts -->
        <device id='/dev/video0' position='rear'>
            <caps>
                <!-- list of supported controls -->
                <supported_controls>
                    <control name='BRIGHTNESS' min='0' max='255'/>
                    <control name='CONTRAST' min='0' max='255'/>
                    <control name='AUTO_WHITE_BALANCE' min='0' max='1'/>
                    <control name='WHITE_BALANCE_TEMPERATURE' min='2000' max='7500'/>
                    <control name='SHARPNESS' min='0' max='255'/>
                    <control name='AUTO_FOCUS' min='0' max='1'/>
                    <control name='ABSOLUTE_FOCUS' min='0' max='255' step='5'/>
                    <control name='ABSOLUTE_ZOOM' min='100' max='400'/>
                </supported_controls>

                <!-- list of supported stream configurations -->
                <!-- below configurations were taken from v4l2-ctl query on
                     Logitech Webcam C930e device -->
                <stream id='0' width='1280' height='720' format='RGBA_8888' framerate='30'/>
            </caps>

            <!-- list of parameters -->
            <characteristics>
            </characteristics>
        </device>

        <device id='/dev/video2' position='front'>
            <caps>
                <!-- list of supported controls -->
                <supported_controls>
                    <control name='BRIGHTNESS' min='0' max='255'/>
                    <control name='CONTRAST' min='0' max='255'/>
                    <control name='AUTO_WHITE_BALANCE' min='0' max='1'/>
                    <control name='WHITE_BALANCE_TEMPERATURE' min='2000' max='7500'/>
                    <control name='SHARPNESS' min='0' max='255'/>
                    <control name='AUTO_FOCUS' min='0' max='1'/>
                    <control name='ABSOLUTE_FOCUS' min='0' max='255' step='5'/>
                    <control name='ABSOLUTE_ZOOM' min='100' max='400'/>
                </supported_controls>

                <!-- list of supported stream configurations -->
                <!-- below configurations were taken from v4l2-ctl query on
                     Logitech Webcam C930e device -->
                <stream id='0' width='1280' height='720' format='RGBA_8888' framerate='30'/>
            </caps>

            <!-- list of parameters -->
            <characteristics>
            </characteristics>
        </device>
    </camera>

    <!-- display device starts -->
    <display>
        <device id='display0' position='driver'>
            <caps>
                <!-- list of supported input stream configurations -->
                <stream id='0' width='1280' height='800' format='RGBA_8888' framerate='30'/>
            </caps>
        </device>
    </display>
</configuration>
```

In this configuration, two cameras are defined: /dev/video0 (rear) and /dev/video2 (front). Each camera has one stream defined with a resolution of 1280 x 720, a frame rate of 30 fps, and the RGBA_8888 format.

Additionally, one display is defined with a resolution of 1280 x 800, a frame rate of 30 fps, and the RGBA_8888 format.
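The stream entries translate directly into buffer sizes. With RGBA_8888 (4 bytes per pixel) and assuming no row padding, one 1280 x 720 camera frame takes 1280 x 720 x 4 = 3,686,400 bytes, and at 30 fps a single camera stream produces 110,592,000 bytes (about 110.6 MB) of pixel data per second. The helpers below compute those figures for any stream entry; the StreamConfig struct is a hypothetical mirror of the XML attributes, not a type from the EVS sources.

```cpp
#include <cstdint>

// Hypothetical mirror of one <stream> element from the driver configuration.
struct StreamConfig {
    uint32_t width;      // pixels
    uint32_t height;     // pixels
    uint32_t framerate;  // frames per second
};

constexpr uint64_t kRgba8888BytesPerPixel = 4;

// Size of one tightly packed RGBA_8888 frame (no row padding assumed).
constexpr uint64_t bytesPerFrame(const StreamConfig& s) {
    return static_cast<uint64_t>(s.width) * s.height * kRgba8888BytesPerPixel;
}

// Pixel data produced per second by the stream.
constexpr uint64_t bytesPerSecond(const StreamConfig& s) {
    return bytesPerFrame(s) * s.framerate;
}
```

For the 1280 x 800 display stream, the same arithmetic gives 4,096,000 bytes per frame.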

Configuration details

The configuration file starts by specifying the number of cameras available to the EVS system. This is done within the <system> tag, where the <num_cameras> tag sets the number of cameras to 2.

Each camera device is defined within the <camera> tag. For example, the rear camera (/dev/video0) is defined with various capabilities such as brightness, contrast, auto white balance, and more. These capabilities are listed under the <supported_controls> tag. Similarly, the front camera (/dev/video2) is defined with the same set of controls.

Both cameras also have their supported stream configurations listed under the <stream> tag. These configurations specify the resolution, format, and frame rate of the video streams.

The display device is defined under the <display> tag. The display configuration includes supported input stream configurations, specifying the resolution, format, and frame rate.

EVS driver operation

When the EVS Driver starts, it reads this configuration file to understand the available cameras and display settings. It then sends this configuration information to the Manager application. The EVS Driver will wait for requests to open and read from the cameras, operating according to the defined configurations.

EVS app configuration

Configuring the EVS App is more complex. We need to determine how the images from individual cameras will be combined to create a 360-degree view. In the repository, the file packages/services/Car/cpp/evs/apps/default/res/config.json.readme contains a description of the configuration sections:

```jsonc
{
    "car" : {                 // This section describes the geometry of the car
        "width" : 76.7,       // The width of the car body
        "wheelBase" : 117.9,  // The distance between the front and rear axles
        "frontExtent" : 44.7, // The extent of the car body ahead of the front axle
        "rearExtent" : 40     // The extent of the car body behind the rear axle
    },

    "displays" : [            // This configures the dimensions of the surround view display
        {                     // The first display will be used as the default display
            "displayPort" : 1, // Display port number, the target display is connected to
            "frontRange" : 100, // How far to render the view in front of the front bumper
            "rearRange" : 100   // How far the view extends behind the rear bumper
        }
    ],

    "graphic" : {             // This maps the car texture into the projected view space
        "frontPixel" : 23,    // The pixel row in CarFromTop.png at which the front bumper appears
        "rearPixel" : 223     // The pixel row in CarFromTop.png at which the back bumper ends
    },

    "cameras" : [             // This describes the cameras potentially available on the car
        {
            "cameraId" : "/dev/video32", // Camera ID exposed by EVS HAL
            "function" : "reverse,park", // Set of modes to which this camera contributes
            "x" : 0.0,        // Optical center distance right of vehicle center
            "y" : -40.0,      // Optical center distance forward of rear axle
            "z" : 48,         // Optical center distance above ground
            "yaw" : 180,      // Optical axis degrees to the left of straight ahead
            "pitch" : -30,    // Optical axis degrees above the horizon
            "roll" : 0,       // Rotation degrees around the optical axis
            "hfov" : 125,     // Horizontal field of view in degrees
            "vfov" : 103,     // Vertical field of view in degrees
            "hflip" : true,   // Flip the view horizontally
            "vflip" : true    // Flip the view vertically
        }
    ]
}
```

The EVS App configuration file is crucial for adapting the system to a specific car. The comments make this example invalid JSON, but it illustrates the expected format of the configuration file. The system also requires an image named CarFromTop.png to represent the car.
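If you want to keep a commented copy of your own configuration, one option is to strip the comments before handing the text to a JSON parser. The function below is a minimal sketch of that idea, not something the EVS App does: it removes // line comments that appear outside string literals (so values like "/dev/video32" or URLs survive) and keeps the line breaks. It does not handle /* */ block comments.

```cpp
#include <cstddef>
#include <string>

// Removes `//` line comments that appear outside JSON string literals.
// Block comments (/* ... */) are not handled.
std::string stripLineComments(const std::string& input) {
    std::string out;
    out.reserve(input.size());
    bool inString = false;  // currently inside a "..." literal
    bool escaped = false;   // previous character was a backslash inside a string

    for (std::size_t i = 0; i < input.size(); ++i) {
        const char c = input[i];
        if (inString) {
            out += c;
            if (escaped) {
                escaped = false;
            } else if (c == '\\') {
                escaped = true;
            } else if (c == '"') {
                inString = false;
            }
            continue;
        }
        if (c == '"') {
            inString = true;
            out += c;
        } else if (c == '/' && i + 1 < input.size() && input[i + 1] == '/') {
            // Skip the rest of the line but keep the newline itself.
            while (i < input.size() && input[i] != '\n') {
                ++i;
            }
            if (i < input.size()) {
                out += '\n';
            }
        } else {
            out += c;
        }
    }
    return out;
}
```

Tracking string and escape state is what distinguishes this from a naive search for "//", which would cut "http://..." values in half.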

In the configuration, units of length are arbitrary but must remain consistent throughout the file. In this example, units of length are in inches.

The coordinate system is right-handed: X points to the right, Y forward, and Z up, with the origin at the center of the rear axle at ground level. Angles are in degrees, with yaw measured from the front of the car, positive to the left (positive Z rotation). Pitch is measured from the horizon, positive upward (positive X rotation), and roll is always assumed to be zero. Keep in mind that although angles are specified in degrees in the configuration file, they are converted to radians when the configuration is read, so use radians if you change them in the EVS App source code.
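To make these conventions concrete, the sketch below converts a camera's yaw and pitch (in degrees, as written in the configuration) to radians and then to a unit vector along the optical axis in the car frame described above. It follows the stated rules: yaw is measured from straight ahead and positive to the left, pitch is positive upward, and roll is ignored. The Vec3 type and opticalAxis function are illustrative, not taken from the EVS App source.

```cpp
#include <cmath>

struct Vec3 {
    double x;  // right
    double y;  // forward
    double z;  // up
};

constexpr double kPi = 3.14159265358979323846;

constexpr double degToRad(double degrees) { return degrees * kPi / 180.0; }

// Unit vector along the camera's optical axis in the car frame.
// yawDeg: degrees left of straight ahead; pitchDeg: degrees above the horizon.
Vec3 opticalAxis(double yawDeg, double pitchDeg) {
    const double yaw = degToRad(yawDeg);
    const double pitch = degToRad(pitchDeg);
    // Start from straight ahead (0, 1, 0), tilt by pitch about X, then rotate
    // about +Z by yaw (positive yaw turns the axis toward -X, i.e. left).
    return {-std::sin(yaw) * std::cos(pitch),
            std::cos(yaw) * std::cos(pitch),
            std::sin(pitch)};
}
```

For the rear camera in the example below (yaw 180, pitch -10), the axis points backward (negative Y) and slightly down (negative Z), as expected for a camera looking behind the car.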

This setup allows the EVS app to accurately interpret and render the camera images for the surround view parking system.

The configuration file for the EVS App is located at /vendor/etc/automotive/evs/config_override.json. Below is an example configuration with two cameras, front and rear, corresponding to our driver setup:

```json
{
    "car": {
        "width": 76.7,
        "wheelBase": 117.9,
        "frontExtent": 44.7,
        "rearExtent": 40
    },
    "displays": [
        {
            "_comment": "Display0",
            "displayPort": 0,
            "frontRange": 100,
            "rearRange": 100
        }
    ],
    "graphic": {
        "frontPixel": -20,
        "rearPixel": 260
    },
    "cameras": [
        {
            "cameraId": "/dev/video0",
            "function": "reverse,park",
            "x": 0.0,
            "y": 20.0,
            "z": 48,
            "yaw": 180,
            "pitch": -10,
            "roll": 0,
            "hfov": 115,
            "vfov": 80,
            "hflip": false,
            "vflip": false
        },
        {
            "cameraId": "/dev/video2",
            "function": "front,park",
            "x": 0.0,
            "y": 100.0,
            "z": 48,
            "yaw": 0,
            "pitch": -10,
            "roll": 0,
            "hfov": 115,
            "vfov": 80,
            "hflip": false,
            "vflip": false
        }
    ]
}
```
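The car section and the display ranges together determine how much ground the surround view covers. With the origin at the center of the rear axle and Y pointing forward, the front bumper sits at wheelBase + frontExtent and the rear bumper at -rearExtent; the rendered area then extends frontRange ahead of the front bumper and rearRange behind the rear bumper. The sketch below does that arithmetic; the CarGeometry, DisplayRange, and Extent types are hypothetical mirrors of the JSON fields, not EVS App types.

```cpp
// Hypothetical mirrors of the "car" and "displays" sections of the config.
struct CarGeometry {
    double width;
    double wheelBase;
    double frontExtent;
    double rearExtent;
};

struct DisplayRange {
    double frontRange;
    double rearRange;
};

// Span along the Y axis (origin at the rear axle, Y forward).
struct Extent {
    double rearY;
    double frontY;
    double length() const { return frontY - rearY; }
};

// Car body from rear bumper to front bumper.
Extent carBody(const CarGeometry& car) {
    return {-car.rearExtent, car.wheelBase + car.frontExtent};
}

// Area rendered by the surround view: the body plus the configured ranges.
Extent renderedArea(const CarGeometry& car, const DisplayRange& display) {
    const Extent body = carBody(car);
    return {body.rearY - display.rearRange, body.frontY + display.frontRange};
}
```

With the example values (in inches), the body spans from -40 to 162.6 (202.6 long), and the rendered area spans from -140 to 262.6 (402.6 long).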

Running EVS

Make sure all EVS processes are running:

```shell
ps -A | grep evs
```

The output should look similar to:

```text
automotive_evs 3722 1 11007600  6716 binder_thread_read 0 S evsmanagerd
graphics       3723 1 11362488 30868 binder_thread_read 0 S android.hardware.automotive.evs-default
automotive_evs 3736 1 11068388  9116 futex_wait         0 S evs_app
```

To simulate reverse gear, run:

```shell
evs_app --test --gear reverse
```

And for park:

```shell
evs_app --test --gear park
```

The EVS App view should now appear on the screen.

Troubleshooting

When configuring and launching the EVS (Exterior View System) for the Surround View Parking Camera in Android AAOS 14, you may encounter several issues.

To debug them, use the logs from the EVS components:

```shell
logcat EvsDriver:D EvsApp:D evsmanagerd:D *:S
```

Multiple USB cameras - image freeze

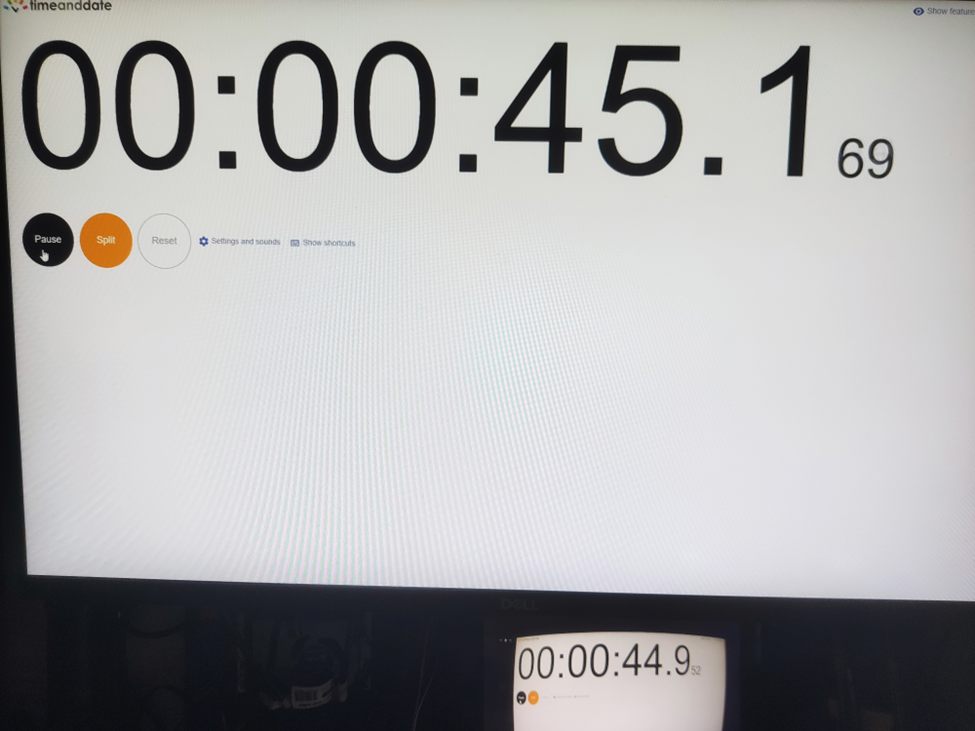

During the initialization of the EVS system, we encountered an issue with the image feed from two USB cameras. While the feed from one camera displayed smoothly, the feed from the second camera either did not appear at all or froze after displaying a few frames.

We discovered that the problem lay in the USB communication between the camera and the V4L2 uvcvideo driver. During the connection negotiation, the camera reserved all available USB bandwidth. To prevent this, the uvcvideo driver needs to be configured with the parameter quirks=128. This setting allows the driver to allocate the USB bandwidth based on the actual resolution and frame rate of the camera.
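To get a feel for the numbers, the sketch below estimates how many bytes per 125 µs USB 2.0 high-speed microframe (8000 per second) an uncompressed stream needs, which is the kind of resolution-and-frame-rate-based figure the quirk lets the driver use instead of the camera's maximum request. It assumes YUYV (2 bytes per pixel) and ignores packet headers and compression, so treat the result as a rough estimate; the function is illustrative and is not the uvcvideo driver's actual formula.

```cpp
#include <cstdint>

constexpr uint64_t kMicroframesPerSecond = 8000;  // USB 2.0 high speed, 125 us each

// Rough payload needed per microframe for an uncompressed stream.
// Rounds up so the estimate never undershoots the raw pixel data.
constexpr uint64_t bytesPerMicroframe(uint64_t width, uint64_t height,
                                      uint64_t bytesPerPixel, uint64_t fps) {
    const uint64_t bytesPerSecond = width * height * bytesPerPixel * fps;
    return (bytesPerSecond + kMicroframesPerSecond - 1) / kMicroframesPerSecond;
}
```

For example, a 640 x 480 YUYV stream at 30 fps needs 2,304 bytes per microframe. A single high-bandwidth isochronous endpoint can reserve up to 3 x 1024 = 3,072 bytes per microframe, so a camera that claims its largest allocation regardless of the negotiated format can leave too little periodic bandwidth for a second camera on the same bus.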

To implement this solution, the parameter should be set in the bootloader, within the kernel command line, for example:

```text
console=ttyS0,115200 no_console_suspend root=/dev/ram0 rootwait androidboot.hardware=rpi4 androidboot.selinux=permissive uvcvideo.quirks=128
```

After applying this setting, the image feed from both cameras should display smoothly, resolving the freezing issue.

Green frame around camera image

In the current implementation of the EVS system, the camera image is surrounded by a green frame, as illustrated in the following image:

To eliminate this green frame, you need to modify the implementation of the EVS Driver. Specifically, edit the GlWrapper.cpp file located at cpp/evs/sampleDriver/aidl/src/.

In the void GlWrapper::renderImageToScreen() function, change the following lines:

```cpp
-0.8,  0.8, 0.0f,  // left top in window space
 0.8,  0.8, 0.0f,  // right top
-0.8, -0.8, 0.0f,  // left bottom
 0.8, -0.8, 0.0f   // right bottom
```

to:

```cpp
-1.0,  1.0, 0.0f,  // left top in window space
 1.0,  1.0, 0.0f,  // right top
-1.0, -1.0, 0.0f,  // left bottom
 1.0, -1.0, 0.0f   // right bottom
```

After making this change, rebuild the EVS Driver and deploy it to your device. The camera image should now be displayed full screen without the green frame.
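Why does changing 0.8 to 1.0 remove the frame? The vertex positions are OpenGL normalized device coordinates, where -1.0 to 1.0 spans the full viewport on each axis. The sketch below maps NDC values to pixel positions, assuming the viewport covers the whole 1280 x 800 display from the driver configuration. With ±0.8, the image quad covers pixels 128 to 1152 horizontally and 80 to 720 vertically, leaving a 128-pixel border at the sides and an 80-pixel border at the top and bottom where whatever color the framebuffer was cleared to remains visible; with ±1.0, the quad reaches the viewport edges. The Viewport type and helper names are illustrative.

```cpp
struct Viewport {
    double width;   // pixels
    double height;  // pixels
};

// Map an NDC x in [-1, 1] to a pixel column (0 = left edge).
constexpr double ndcToPixelX(double ndcX, const Viewport& vp) {
    return (ndcX + 1.0) * 0.5 * vp.width;
}

// Map an NDC y in [-1, 1] to a pixel row (0 = top edge; NDC +1 is the top).
constexpr double ndcToPixelY(double ndcY, const Viewport& vp) {
    return (1.0 - ndcY) * 0.5 * vp.height;
}
```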

Conclusion

In this article, we walked through configuring and launching the EVS (Exterior View System) for the Surround View Parking Camera in Android AAOS 14. We explored the components that make up the EVS system: the EVS Driver, EVS Manager, and EVS App, detailing their roles and interactions.

The EVS Driver is responsible for providing image buffers from the vehicle's cameras, leveraging a sample implementation using the Linux V4L2 subsystem to handle USB-connected cameras. The EVS Manager acts as an intermediary, managing camera and display resources and facilitating communication between the EVS Driver and the EVS App. Finally, the EVS App compiles the images from various cameras, displaying a cohesive 360-degree view around the vehicle based on the gear selection and other signals from the Vehicle HAL.

Configuring the EVS system involves setting up the EVS Driver through a comprehensive XML configuration file, defining camera and display parameters. Additionally, the EVS App configuration, outlined in a JSON file, ensures the correct mapping and stitching of camera images to provide an accurate surround view.

By understanding and implementing these configurations, developers can harness the full potential of the Android AAOS 14 platform to enhance vehicle safety and driver assistance through an effective Surround View Parking Camera system. This comprehensive setup not only improves the parking experience but also sets a foundation for future advancements in automotive technology.

Grape Up guides enterprises on their data-driven transformation journey
