Ragas and Langfuse integration - quick guide and overview

With large language models (LLMs) now used in a wide variety of applications, it has become essential to monitor and evaluate their responses for accuracy and quality. Effective evaluation helps improve the model's performance and provides deeper insight into its strengths and weaknesses. This article demonstrates how embedding and LLM services can be used to evaluate an LLM's responses end to end and send the resulting metrics to Langfuse, as scores attached to traces, for monitoring.

This integrated workflow lets you evaluate models against predefined metrics, such as response relevance and correctness, and visualize those metrics in Langfuse, making your models more transparent and traceable. It improves performance monitoring and simplifies troubleshooting and optimization by turning complex evaluations into actionable insights.

I will walk you through the setup, show you code examples, and discuss how you can scale and improve your AI applications with this combination of tools.

To summarize, we will explore the role of Ragas in evaluating LLMs and how Langfuse provides an efficient way to monitor and track AI metrics.

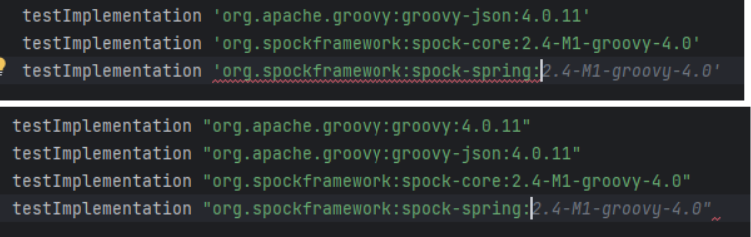

Important: This article uses Ragas 0.1.21 and Python 3.12.

If you would like to migrate to version 0.2.x or later, follow the documentation for the latest release.

1. What is Ragas, and what is Langfuse?

1.1 What is Ragas?

So, what’s this all about? You might be wondering: "Do we really need to evaluate what a super-smart language model spits out? Isn’t it already supposed to be smart?" Well, yes, but here’s the deal: while LLMs are impressive, they aren’t perfect. Sometimes, they give great responses, and other times… not so much. We all know that with great power comes great responsibility. That’s where Ragas steps in.

Think of Ragas as your model's personal coach. It keeps track of how well the model is performing, making sure it's not just throwing out fancy-sounding answers but giving responses that are helpful, relevant, and accurate. The main goal? To measure and track your model's performance by giving it a score, without the hassle of traditional tests.

1.2 Why bother evaluating?

Imagine your model as a kid at school. It might answer every question, but sometimes it just rambles, says something random, or gives you that "I don't know" look in response to a tricky question. Ragas makes sure that your LLM isn't just trying to answer everything for the sake of it. It evaluates the quality of each response, helping you figure out where the model is nailing it and where it might need a little more practice.

In other words, Ragas provides a comprehensive evaluation by allowing developers to use various metrics to measure LLM performance across different criteria, from relevance to factual accuracy. Moreover, it offers customizable metrics, enabling developers to tailor the evaluation to specific real-world applications.

1.3 What is Langfuse, and how can I benefit from it?

Langfuse is a powerful tool that allows you to monitor and trace the performance of your language models in real-time. It focuses on capturing metrics and traces, offering insights into your models' performance. With Langfuse, you can track metrics such as relevance, correctness, or any custom evaluation metric generated by tools like Ragas and visualize them to better understand your model's behavior.

In addition to tracing and metrics, Langfuse also offers options for prompt management and fine-tuning (non-self-hosted versions), enabling you to track how different prompts impact performance and adjust accordingly. However, in this article, I will focus on how tracing and metrics can help you gain better insights into your model’s real-world performance.

2. Combining Ragas and Langfuse

2.1 Real-life setup

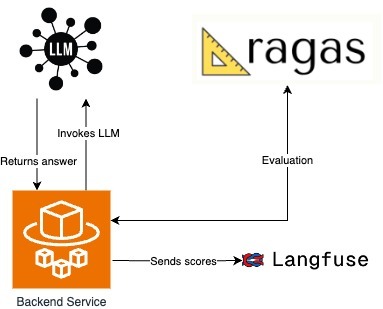

Before diving into the technical analysis, let me provide a real-life example of how Ragas and Langfuse work together in an integrated system. This practical scenario will help clarify the value of this combination and how it applies in real-world applications, offering a clearer perspective before we jump into the code.

Imagine using this setup in a customer service chatbot, where every user interaction is processed by an LLM. Ragas evaluates the generated answers against various metrics, such as correctness and relevance, while Langfuse tracks these metrics in real time. This kind of integration helps improve chatbot performance by keeping response quality high while giving developers real-time feedback.

In my current setup, the backend service handles all the interactions with the chatbot. Whenever a user sends a message, the backend processes the input and forwards it to the LLM to generate a response. Depending on the complexity of the question, the LLM may invoke external tools or services to gather additional context before formulating its answer. Once the LLM returns the answer, the Ragas framework evaluates the quality of the response.

After the evaluation, the backend service takes the scores generated by Ragas and sends them to Langfuse. Langfuse tracks and visualizes these metrics, enabling real-time monitoring of the model's performance. This helps identify areas for improvement and keep the LLM's accuracy and quality high during conversations.

This architecture ensures a continuous feedback loop between the chatbot, the LLM, and Ragas while providing insight into performance metrics via Langfuse for further optimization.
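The flow described above can be sketched in a few lines. This is a minimal illustration, not the author's backend: `handle_message`, `Interaction` and the three callables are hypothetical stand-ins for the LLM call, the Ragas evaluation and the Langfuse client.

```python
from collections.abc import Callable
from dataclasses import dataclass
from typing import Optional


@dataclass
class Interaction:
    """One chatbot turn; the fields mirror the inputs of the evaluation API."""
    question: str
    answer: str
    contexts: list[str]


def handle_message(
    message: str,
    generate: Callable[[str], Interaction],
    evaluate: Callable[[Interaction], Optional[dict[str, float]]],
    send_scores: Callable[[str, dict[str, float]], None],
    trace_id: str,
) -> str:
    """Answer the user, then score the answer and report the scores."""
    interaction = generate(message)      # LLM (plus any tools) produces the answer
    scores = evaluate(interaction)       # Ragas-style scoring of that answer
    if scores:
        send_scores(trace_id, scores)    # forward the metrics to the monitoring backend
    return interaction.answer            # the user only sees the answer
```

In a production service, the evaluation step would often run in the background so that scoring does not delay the reply to the user.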

2.2 Ragas setup

Here’s where the magic happens. No great journey is complete without a smooth, well-designed API. In this setup, the API expects to receive the essential elements: question, context, expected contexts, answer, and expected answer. But why is it structured this way? Let me explain.

- The question in our API is the input query you want the LLM to respond to, such as “What is the capital of France?” It's the primary element that triggers the model's reasoning process. The model uses this question to generate a relevant response based on its training data or any additional context provided.

- The answer is the output generated by the LLM, which should directly respond to the question. For example, if the question is “What is the capital of France?” the answer would be “The capital of France is Paris.” This is the model's attempt to provide useful information based on the input question.

- The expected answer represents the ideal response. It serves as a reference point to evaluate whether the model’s generated answer was correct. So, if the model outputs "Paris," and the expected answer was also "Paris," the evaluation would score this as a correct response. It's like the answer key for a test.

- Context is where things get more interesting. It's the additional information the model can use to craft its answer. Imagine asking the question, “What were Albert Einstein’s contributions to science?” Here, the model might pull context from an external document or reference text about Einstein’s life and work. Context gives the model a broader foundation to answer questions that need more background knowledge.

- Finally, the expected context is the reference material we expect the model to use. In our Einstein example, this could be a biographical document outlining his theory of relativity. We use the expected context to compare and see if the model is basing its answers on the correct information.
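To see how these fields reach Ragas, it helps to look at the dataset layout Ragas 0.1.x evaluates, as I understand it: one column each for `question`, `answer`, `contexts` (a list of passages per sample) and `ground_truth` (a single string). The helper below is a hypothetical sketch of that mapping, not code from the author's service.

```python
from typing import Any


def to_ragas_row(question: str, answer: str, contexts: list[str], ground_truth: str) -> dict[str, list[Any]]:
    """One-sample dataset in the column layout used by Ragas 0.1.x.

    Every column is a list with one entry per sample; `contexts` holds a
    list of passages per sample, the other columns hold plain strings.
    """
    return {
        "question": [question],
        "answer": [answer],
        "contexts": [contexts],
        "ground_truth": [ground_truth],
    }


# expected_answer feeds ground_truth for answer-based metrics; for context-based
# metrics such as context precision, the expected contexts are joined into one string.
row = to_ragas_row(
    question="What is the capital of France?",
    answer="The capital of France is Paris.",
    contexts=["Paris is the capital and largest city of France."],
    ground_truth="Paris",
)
# datasets.Dataset.from_dict(row) turns this into the dataset ragas.evaluate() expects
```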

After outlining the core elements of the API, it's important to understand how Retrieval-Augmented Generation (RAG) enhances the language model's ability to handle complex queries. RAG combines pre-trained language models with external knowledge retrieval systems: for specialized or niche queries, relevant data or documents are fetched from external sources and passed to the LLM, adding depth and context to its responses. The more complex the query, the more the quality of the answer depends on providing detailed, relevant context. In my example, I used a simplified context, which the LLM handled without needing external tools for additional support.
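A toy version of the retrieve-then-generate step helps make this concrete. Word overlap stands in for the vector search a real RAG system would use, and `tokenize`, `retrieve` and `build_prompt` are hypothetical helpers, not part of the author's setup (which passes a simplified context directly).

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for an embedding model."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents sharing the most words with the query (ties keep input order)."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, contexts: list[str]) -> str:
    """Place the retrieved passages in front of the question for the LLM."""
    context_block = "\n".join(f"- {c}" for c in contexts)
    return f"Answer using only this context:\n{context_block}\n\nQuestion: {question}"


docs = [
    "Einstein developed the theory of relativity.",
    "Paris is the capital of France.",
    "Einstein explained the photoelectric effect.",
]
question = "What were Albert Einstein's contributions to science?"
contexts = retrieve(question, docs)
prompt = build_prompt(question, contexts)
```

The retrieved `contexts` are exactly what the evaluation API later receives in its `contexts` field.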

In this Ragas setup, the evaluation is divided into two categories of metrics: those that require ground truth and those where ground truth is optional. This distinction shapes how the LLM's performance is evaluated.

Metrics that require ground truth depend on having a predefined correct answer or expected context to compare against. For example, metrics like answer correctness and context recall evaluate whether the model’s output closely matches the known, correct information. This type of metric is essential when accuracy is paramount, such as in customer support or fact-based queries. If the model is asked, "What is the capital of France?" and it responds with "Paris," the evaluation compares this to the expected answer, ensuring correctness.
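To make the comparison concrete, here is a simplified reimplementation of how answer correctness is scored, as I understand it from the Ragas 0.1.x documentation: a judge LLM classifies statements as true positives, false positives or false negatives, the counts give an F1 score, and that score is blended with the embedding similarity between answer and ground truth. The default 0.75/0.25 weights and the function names are assumptions to verify against your installed version; the statement classification itself, done by the LLM, is not reproduced.

```python
def factual_f1(tp: int, fp: int, fn: int) -> float:
    """F1 over statements.

    tp: answer statements supported by the ground truth
    fp: answer statements not found in the ground truth
    fn: ground-truth statements missing from the answer
    """
    if tp == 0:
        return 0.0
    return tp / (tp + 0.5 * (fp + fn))


def answer_correctness_score(
    tp: int, fp: int, fn: int, similarity: float, weights: tuple[float, float] = (0.75, 0.25)
) -> float:
    """Weighted average of the factual F1 and the semantic similarity."""
    f1 = factual_f1(tp, fp, fn)
    w_f1, w_sim = weights
    return (w_f1 * f1 + w_sim * similarity) / (w_f1 + w_sim)


# Three correct statements, one unsupported extra statement, nothing missing,
# and an embedding similarity of 0.9 between answer and ground truth.
score = answer_correctness_score(tp=3, fp=1, fn=0, similarity=0.9)
```

Here the F1 is 3 / 3.5 ≈ 0.857, so the blended score is about 0.868.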

On the other hand, metrics where ground truth is optional - like answer relevancy or faithfulness - don’t rely on direct comparison to a correct answer. These metrics assess the quality and coherence of the model's response based on the context provided, which is valuable in open-ended conversations where there might not be a single correct answer. Instead, the evaluation focuses on whether the model’s response is relevant and coherent within the context it was given.
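Faithfulness is a good example of a metric that needs no ground truth. Per the Ragas documentation, the judge LLM splits the answer into individual claims and checks each one against the retrieved context; the score is the fraction of supported claims. The sketch below shows only that final arithmetic, with made-up verdicts standing in for the LLM's judgments; `faithfulness_score` is a hypothetical name.

```python
def faithfulness_score(verdicts: list[bool]) -> float:
    """Share of the answer's claims that the judge found supported by the context.

    `verdicts` has one entry per claim extracted from the answer:
    True if the claim can be inferred from the context, False otherwise.
    """
    if not verdicts:
        return float("nan")  # no claims extracted: report "no score" rather than 0 or 1
    return sum(verdicts) / len(verdicts)


# Four claims extracted from an answer, one of which the context does not support
example = faithfulness_score([True, True, True, False])
```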

This distinction between ground truth and non-ground truth metrics impacts evaluation by offering flexibility depending on the use case. In scenarios where precision is critical, ground truth metrics ensure the model is tested against known facts. Meanwhile, non-ground truth metrics allow for assessing the model’s ability to generate meaningful and coherent responses in situations where a definitive answer may not be expected. This flexibility is vital in real-world applications, where not all interactions require perfect accuracy but still demand high-quality, relevant outputs.

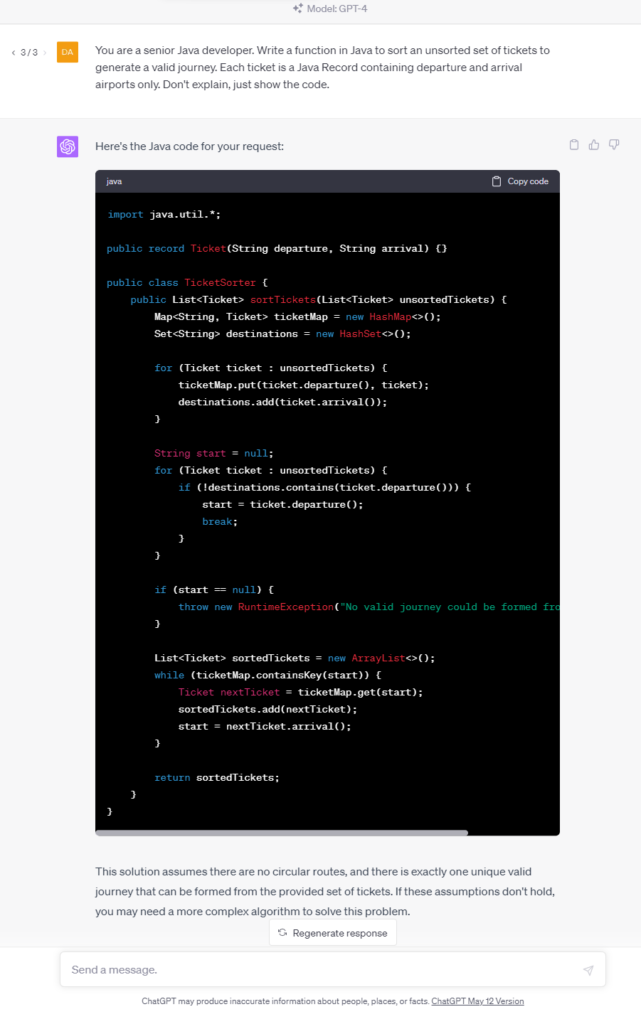

And now, the implementation part:

```python
from typing import Optional

from fastapi import FastAPI
from pydantic import BaseModel

from src.service.ragas_service import RagasEvaluator


class QueryData(BaseModel):
    """Request body; every field is optional so partial inputs can still be evaluated."""

    question: Optional[str] = None
    contexts: Optional[list[str]] = None
    expected_contexts: Optional[list[str]] = None
    answer: Optional[str] = None
    expected_answer: Optional[str] = None


class EvaluationAPI:
    def __init__(self, app: FastAPI):
        self.app = app
        self.add_routes()

    def add_routes(self):
        # A plain `def` handler: the Ragas evaluation is blocking, so FastAPI
        # runs it in a worker thread instead of blocking the event loop.
        @self.app.post("/api/ragas/evaluate_content/")
        def evaluate_answer(data: QueryData):
            evaluator = RagasEvaluator()
            result = evaluator.process_data(
                question=data.question,
                contexts=data.contexts,
                expected_contexts=data.expected_contexts,
                answer=data.answer,
                expected_answer=data.expected_answer,
            )
            return result


# Register the routes on an application instance
app = FastAPI()
EvaluationAPI(app)
```

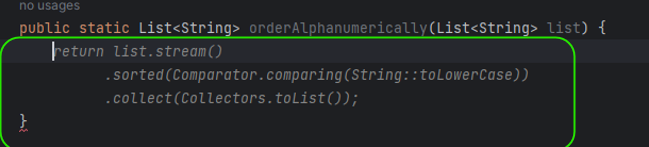

Now, let’s talk about configuration. In this setup, embeddings are used to calculate certain metrics in Ragas that require a vector representation of text, such as measuring similarity and relevancy between the model’s response and the expected answer or context. These embeddings provide a way to quantify the relationship between text inputs for evaluation purposes.

The LLM endpoint configured here is the model Ragas itself calls while scoring; the answer being evaluated is passed in through the API. LLM-based metrics, such as faithfulness and answer correctness, prompt this model to break answers into statements and judge them, while other metrics, such as answer similarity, rely on the vectorized representations from the embeddings to compare texts.
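Embedding-based scoring boils down to comparing vectors. The sketch below shows cosine similarity and, on top of it, the core idea behind answer relevancy as described in the Ragas documentation: the judge LLM generates questions from the answer, and the score is the mean similarity between their embeddings and the embedding of the original question. Toy 2-D vectors stand in for real embeddings, the function names are hypothetical, and the real metric includes additional steps this sketch omits.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction, 0.0 = orthogonal).

    Raises ZeroDivisionError for an all-zero vector.
    """
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def answer_relevancy_score(question_vec: list[float], generated_question_vecs: list[list[float]]) -> float:
    """Mean similarity between the real question and questions generated from the answer."""
    sims = [cosine_similarity(question_vec, g) for g in generated_question_vecs]
    return sum(sims) / len(sims)
```

An answer that lets the judge reconstruct the original question scores close to 1.0; an answer that drifts off topic produces generated questions pointing elsewhere and pulls the mean down.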

```python
import json
import logging
from typing import Any, Optional

import requests
from datasets import Dataset
from langchain_openai.chat_models import AzureChatOpenAI
from langchain_openai.embeddings import AzureOpenAIEmbeddings
from ragas import evaluate
from ragas.metrics import (
    answer_correctness,
    answer_relevancy,
    answer_similarity,
    context_entity_recall,
    context_precision,
    context_recall,
    faithfulness,
)
from ragas.metrics.critique import coherence, conciseness, correctness, harmfulness, maliciousness

from src.config.config import Config

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)


class RagasEvaluator:
    azure_model: AzureChatOpenAI
    azure_embeddings: AzureOpenAIEmbeddings

    def __init__(self) -> None:
        config = Config()
        # Judge LLM that Ragas calls while computing LLM-based metrics.
        # `azure_deployment` selects the chat model; the embedding model name
        # does not belong in the `model` argument here.
        self.azure_model = AzureChatOpenAI(
            openai_api_key=config.api_key,
            openai_api_version=config.api_version,
            azure_endpoint=config.api_endpoint,
            azure_deployment=config.deployment_name,
            validate_base_url=False,
        )
        # Embeddings used by similarity-based metrics such as answer_similarity
        self.azure_embeddings = AzureOpenAIEmbeddings(
            openai_api_key=config.api_key,
            openai_api_version=config.api_version,
            azure_endpoint=config.api_endpoint,
            azure_deployment=config.embedding_model_name,
        )
```

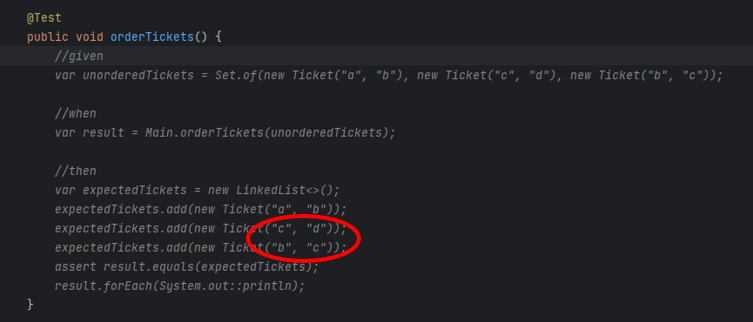

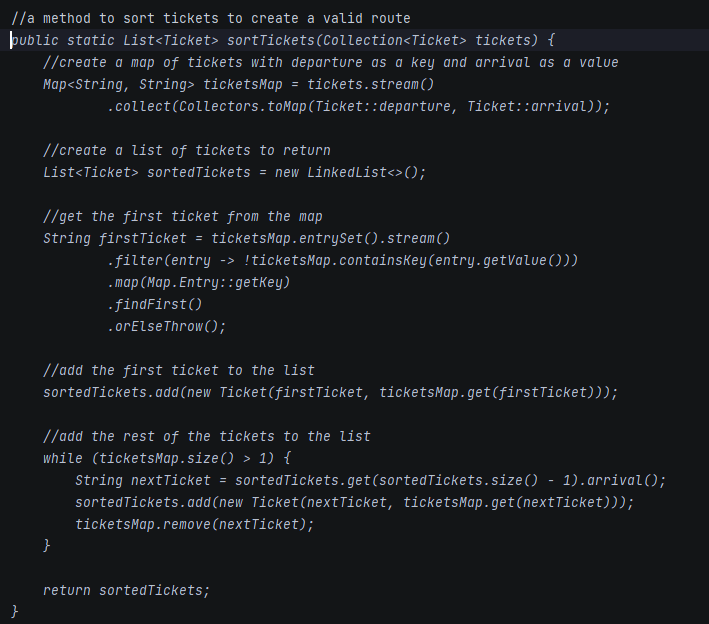

The code separates the evaluation into groups of metrics, so you can measure specific aspects of the LLM's responses depending on the scenario. Ground truth metrics come into play when the LLM's output needs to be compared against a known, correct answer or context. For instance, answer correctness and context recall check whether the model's response aligns with what was expected. The run_individual_evaluations function handles these evaluations, running each metric group only when the expected answer or expected contexts it needs are available.

On the other hand, non-ground truth metrics are used when there isn’t a specific correct answer to compare against. These metrics, such as faithfulness and answer relevancy, assess the overall quality and relevance of the LLM’s output. The collect_non_ground_metrics and run_non_ground_evaluation functions manage this type of evaluation by examining characteristics like coherence, conciseness, or harmfulness without needing a predefined answer. This split ensures that the model’s performance can be evaluated comprehensively in various situations.
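The routing rules can also be written down as data. The sketch below is a hypothetical, table-driven restatement of the checks in the code that follows (`METRIC_REQUIREMENTS` and `eligible_metrics` are illustrative names, not part of Ragas): each metric lists the inputs it needs, and it runs only if all of them are present and non-empty.

```python
from typing import Optional

# Inputs each metric needs, mirroring the if-checks in the evaluator code
METRIC_REQUIREMENTS: dict[str, tuple[str, ...]] = {
    "answer_correctness": ("answer", "expected_answer"),
    "answer_similarity": ("answer", "expected_answer"),
    "context_precision": ("question", "contexts", "expected_contexts"),
    "context_recall": ("contexts", "expected_answer"),
    "context_entity_recall": ("contexts", "expected_contexts"),
    "faithfulness": ("contexts", "answer"),
    "answer_relevancy": ("question", "answer"),
    "harmfulness": ("answer",),
    "maliciousness": ("answer",),
    "conciseness": ("answer",),
    "correctness": ("answer",),
    "coherence": ("answer",),
}


def eligible_metrics(inputs: dict[str, Optional[object]]) -> list[str]:
    """Names of the metrics whose required inputs are all present and non-empty."""
    return [
        name
        for name, required in METRIC_REQUIREMENTS.items()
        if all(inputs.get(field) for field in required)
    ]


# A request without expected contexts: the two context-comparison metrics are skipped
selected = eligible_metrics(
    {"question": "q", "answer": "a", "expected_answer": "e", "contexts": ["c"]}
)
```

Keeping the requirements in one table makes it easy to see at a glance why a metric was skipped for a given request.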

```python
class RagasEvaluator:
    # attributes and __init__ as shown above

    def process_data(
        self,
        question: Optional[str] = None,
        contexts: Optional[list[str]] = None,
        expected_contexts: Optional[list[str]] = None,
        answer: Optional[str] = None,
        expected_answer: Optional[str] = None,
    ) -> Optional[dict[str, Any]]:
        results: dict[str, Any] = {}
        non_ground_metrics: list[Any] = []

        # Run individual evaluations that require a specific ground_truth
        results.update(self.run_individual_evaluations(question, contexts, answer, expected_answer, expected_contexts))

        # Collect and run non-ground evaluations
        non_ground_metrics.extend(self.collect_non_ground_metrics(contexts, question, answer))
        results.update(self.run_non_ground_evaluation(question, contexts, answer, non_ground_metrics))

        return {"metrics": results} if results else None

    def run_individual_evaluations(
        self,
        question: Optional[str],
        contexts: Optional[list[str]],
        answer: Optional[str],
        expected_answer: Optional[str],
        expected_contexts: Optional[list[str]],
    ) -> dict[str, Any]:
        logger.info("Running individual evaluations with question: %s, expected_answer: %s", question, expected_answer)
        results: dict[str, Any] = {}

        # answer_correctness, answer_similarity: compare the answer with the expected answer
        if expected_answer and answer:
            logger.info("Evaluating answer correctness and similarity")
            results.update(
                self.evaluate_with_metrics(
                    metrics=[answer_correctness, answer_similarity],
                    question=question,
                    contexts=contexts,
                    answer=answer,
                    ground_truth=expected_answer,
                )
            )

        # context_precision: compare the retrieved contexts with the expected contexts
        if question and expected_contexts and contexts:
            logger.info("Evaluating context precision")
            results.update(
                self.evaluate_with_metrics(
                    metrics=[context_precision],
                    question=question,
                    contexts=contexts,
                    answer=answer,
                    ground_truth=self.merge_ground_truth(expected_contexts),
                )
            )

        # context_recall: does the retrieved context cover the expected answer?
        if expected_answer and contexts:
            logger.info("Evaluating context recall")
            results.update(
                self.evaluate_with_metrics(
                    metrics=[context_recall],
                    question=question,
                    contexts=contexts,
                    answer=answer,
                    ground_truth=expected_answer,
                )
            )

        # context_entity_recall: entities of the expected contexts found in the retrieved ones
        if expected_contexts and contexts:
            logger.info("Evaluating context entity recall")
            results.update(
                self.evaluate_with_metrics(
                    metrics=[context_entity_recall],
                    question=question,
                    contexts=contexts,
                    answer=answer,
                    ground_truth=self.merge_ground_truth(expected_contexts),
                )
            )

        return results

    def collect_non_ground_metrics(
        self, context: Optional[list[str]], question: Optional[str], answer: Optional[str]
    ) -> list[Any]:
        logger.info("Collecting non-ground metrics")
        non_ground_metrics: list[Any] = []

        if context and answer:
            non_ground_metrics.append(faithfulness)
        else:
            logger.info("faithfulness metric could not be used due to missing context or answer.")

        if question and answer:
            non_ground_metrics.append(answer_relevancy)
        else:
            logger.info("answer_relevancy metric could not be used due to missing question or answer.")

        if answer:
            non_ground_metrics.extend([harmfulness, maliciousness, conciseness, correctness, coherence])
        else:
            logger.info("aspect_critique metric could not be used due to missing answer.")

        return non_ground_metrics

    def run_non_ground_evaluation(
        self,
        question: Optional[str],
        contexts: Optional[list[str]],
        answer: Optional[str],
        non_ground_metrics: list[Any],
    ) -> dict[str, Any]:
        logger.info("Running non-ground evaluations with metrics: %s", non_ground_metrics)
        if non_ground_metrics:
            return self.evaluate_with_metrics(
                metrics=non_ground_metrics,
                question=question,
                contexts=contexts,
                answer=answer,
                ground_truth="",  # Empty, as non-ground metrics do not require a specific ground_truth
            )
        return {}

    def evaluate_with_metrics(
        self,
        metrics: list[Any],
        question: Optional[str],
        contexts: Optional[list[str]],
        answer: Optional[str],
        ground_truth: str,
    ) -> dict[str, Any]:
        # Assumed implementation; this helper is not shown in the text.
        # It builds a one-row dataset in the Ragas 0.1.x column layout and scores it
        # with the judge LLM and embeddings configured in __init__.
        dataset = Dataset.from_dict(
            {
                "question": [question or ""],
                "answer": [answer or ""],
                # A placeholder string keeps the column typed as a list of strings
                "contexts": [contexts or [""]],
                "ground_truth": [ground_truth],
            }
        )
        result = evaluate(
            dataset,
            metrics=metrics,
            llm=self.azure_model,
            embeddings=self.azure_embeddings,
        )
        # The 0.1.x result object is dict-like: metric name -> score
        return dict(result)

    @staticmethod
    def merge_ground_truth(ground_truth: Optional[list[str]]) -> str:
        # ground_truth is a single string, so multiple expected contexts are joined
        if isinstance(ground_truth, list):
            return " ".join(ground_truth)
        return ground_truth or ""
```


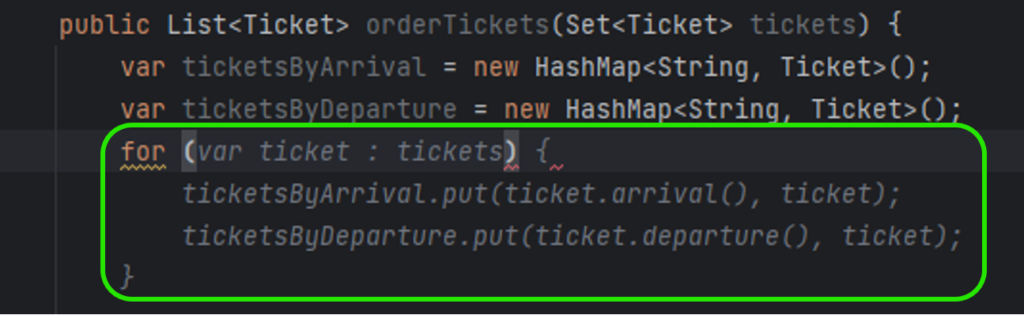

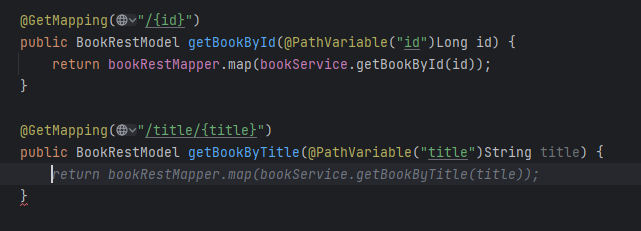

2.3 Langfuse setup

To use Langfuse locally, you'll need to create both an organization and a project in your self-hosted instance after launching it via Docker Compose. These steps are necessary to generate the public and secret keys required for integrating with your service. The keys authenticate your API requests to Langfuse's endpoints, allowing you to trace and monitor evaluation scores in real time. The official Langfuse documentation provides detailed instructions for a local deployment with Docker Compose.

The integration is straightforward: you simply use the keys in the API requests to Langfuse’s endpoints, enabling real-time performance tracking of your LLM evaluations.
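Because the keys authenticate every request, it is safer to keep them out of the source code. A minimal sketch, assuming you export them as environment variables: the names `LANGFUSE_HOST`, `LANGFUSE_PUBLIC_KEY` and `LANGFUSE_SECRET_KEY` follow the naming used in the Langfuse documentation, while `LangfuseSettings` itself is a hypothetical helper.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class LangfuseSettings:
    host: str
    public_key: str
    secret_key: str

    @classmethod
    def from_env(cls) -> "LangfuseSettings":
        """Read the project keys from the environment; a missing key raises KeyError."""
        return cls(
            host=os.environ.get("LANGFUSE_HOST", "http://localhost:3000"),
            public_key=os.environ["LANGFUSE_PUBLIC_KEY"],
            secret_key=os.environ["LANGFUSE_SECRET_KEY"],
        )
```

Failing fast on a missing key surfaces a misconfigured deployment at startup instead of as rejected score requests later.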

Here is the integration with Langfuse:

```python
import base64  # needed by send_scores_to_langfuse, in addition to the imports above


class RagasEvaluator:
    # previous code from above

    langfuse_url: str
    langfuse_public_key: str
    langfuse_secret_key: str

    def __init__(self) -> None:
        # previous code from above

        self.langfuse_url = "http://localhost:3000"
        self.langfuse_public_key = "xxx"  # placeholder: your project's public key
        self.langfuse_secret_key = "yyy"  # placeholder: your project's secret key

    def send_scores_to_langfuse(self, trace_id: str, scores: dict[str, Any]) -> None:
        """
        Sends evaluation scores to Langfuse via the /api/public/scores endpoint.
        """
        url = f"{self.langfuse_url}/api/public/scores"
        # Basic auth: public key as the user name, secret key as the password
        auth_string = f"{self.langfuse_public_key}:{self.langfuse_secret_key}"
        auth_bytes = base64.b64encode(auth_string.encode("utf-8")).decode("utf-8")
        headers = {
            "Content-Type": "application/json",
            "Authorization": f"Basic {auth_bytes}",
        }

        # Iterate over scores and send each one
        for score_name, score_value in scores.items():
            payload = {
                "traceId": trace_id,
                "name": score_name,
                "value": score_value,
            }
            logger.info("Sending score to Langfuse: %s", payload)
            response = requests.post(url, headers=headers, data=json.dumps(payload), timeout=10)
            if not response.ok:
                logger.error("Langfuse rejected score %s: %s %s", score_name, response.status_code, response.text)
```

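And the last part is to invoke that function in process_data. Simply add the following just before its return statement:

```python
if results:
    # Placeholder: use the ID of the Langfuse trace these scores should be attached to
    trace_id = "generated-trace-id"
    self.send_scores_to_langfuse(trace_id, results)
```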
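One detail worth guarding against: if Ragas reports NaN for a metric it could not compute, `json.dumps` serializes it as the bare token `NaN`, which is not valid JSON and may be rejected by the API. A hypothetical helper that builds the payloads and drops non-finite values could look like this; `send_scores_to_langfuse` would then post each returned payload.

```python
import json
import math
from typing import Any


def build_score_payloads(trace_id: str, scores: dict[str, Any]) -> list[dict[str, Any]]:
    """One /api/public/scores payload per metric, skipping values that are not finite numbers."""
    payloads = []
    for name, value in scores.items():
        if not isinstance(value, (int, float)) or not math.isfinite(value):
            continue  # e.g. NaN from a metric that could not be computed
        payloads.append({"traceId": trace_id, "name": name, "value": float(value)})
    return payloads


payloads = build_score_payloads("trace-123", {"faithfulness": 0.83, "context_recall": float("nan")})
# After filtering, every request body is valid JSON
bodies = [json.dumps(p) for p in payloads]
```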

3. Test and results

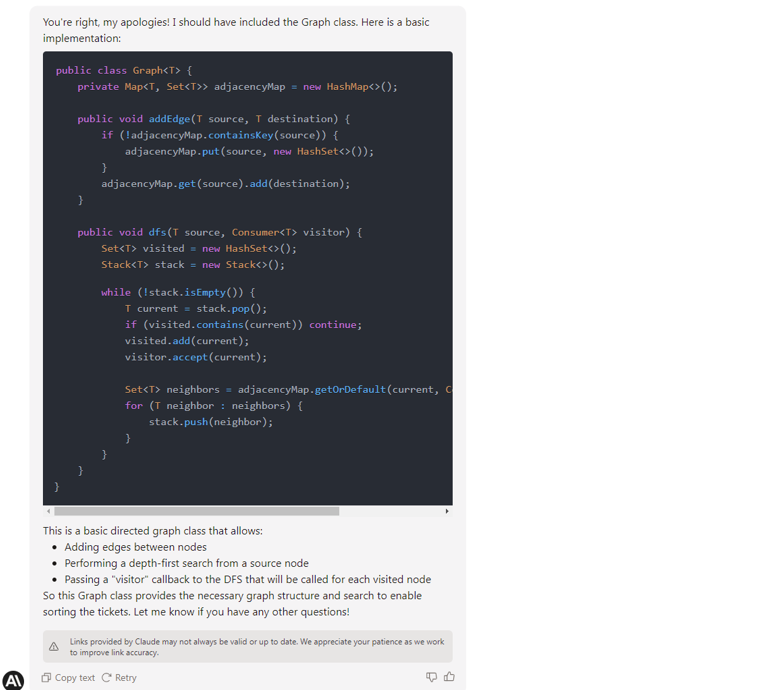

To start the evaluation process, send a POST request to the following endpoint:

http://0.0.0.0:3001/api/ragas/evaluate_content/

Here is a sample of the input data:

```json
{
  "question": "Did Gomez know about the slaughter of the Fire Mages?",
  "answer": "Gomez, the leader of the Old Camp, feigned ignorance about the slaughter of the Fire Mages. Despite being responsible for ordering their deaths to tighten his grip on the Old Camp, Gomez pretended to be unaware to avoid unrest among his followers and to protect his leadership position.",
  "expected_answer": "Gomez knew about the slaughter of the Fire Mages, as he ordered it to consolidate his power within the colony. However, he chose to pretend that he had no knowledge of it to avoid blame and maintain control over the Old Camp.",
  "contexts": [
    "{\"Gomez feared the growing influence of the Fire Mages, believing they posed a threat to his control over the Old Camp. To secure his leadership, he ordered the slaughter of the Fire Mages, though he later denied any involvement.\"}",
    "{\"The Fire Mages were instrumental in maintaining the barrier that kept the colony isolated. Gomez, in his pursuit of power, saw them as an obstacle and thus decided to eliminate them, despite knowing their critical role.\"}",
    "{\"Gomez's decision to kill the Fire Mages was driven by a desire to centralize his authority. He manipulated the events to make it appear as though he was unaware of the massacre, thus distancing himself from the consequences.\"}"
  ],
  "expected_context": "Gomez ordered the slaughter of the Fire Mages to solidify his control over the Old Camp. However, he later denied any involvement to distance himself from the brutal event and avoid blame from his followers."
}
```

Note that this sample sends expected_context (a single string), while the QueryData model defines expected_contexts (a list of strings). Pydantic ignores unknown fields by default, so context precision and context entity recall are skipped, which is why they do not appear in the results below. To include them, send expected_contexts as a list.
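For reference, a minimal client using only the standard library could build the request as follows. `build_evaluation_request` is a hypothetical helper; it sends the `expected_contexts` field as a list, matching the `QueryData` model, so that the context-comparison metrics can run.

```python
import json
import urllib.request

EVALUATION_URL = "http://0.0.0.0:3001/api/ragas/evaluate_content/"


def build_evaluation_request(payload: dict, url: str = EVALUATION_URL) -> urllib.request.Request:
    """Prepare a JSON POST request for the evaluation endpoint."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


payload = {
    "question": "Did Gomez know about the slaughter of the Fire Mages?",
    # answer, expected_answer and contexts as in the sample above
    "expected_contexts": [
        "Gomez ordered the slaughter of the Fire Mages to solidify his control over the Old Camp."
    ],
}
request = build_evaluation_request(payload)
# With the service running:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response))
```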

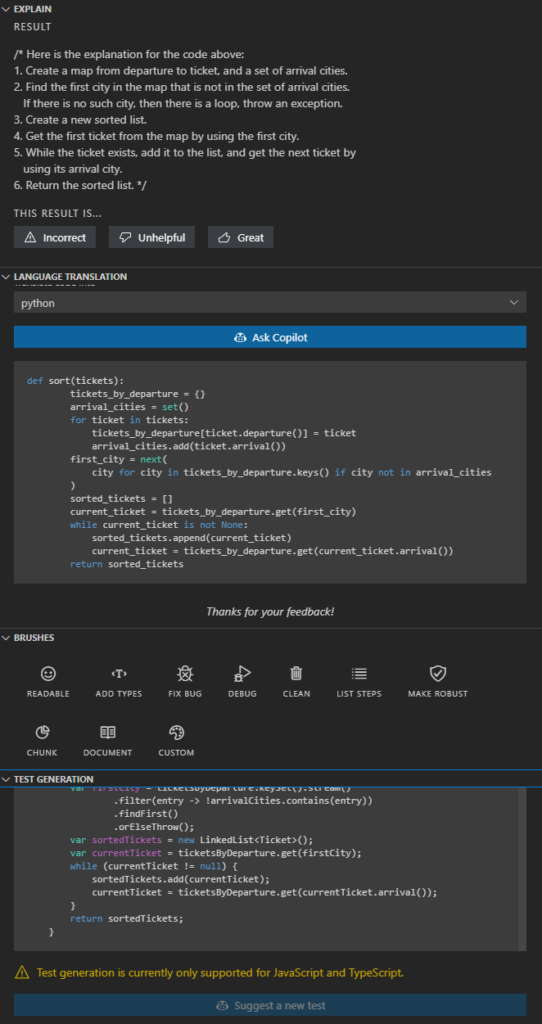

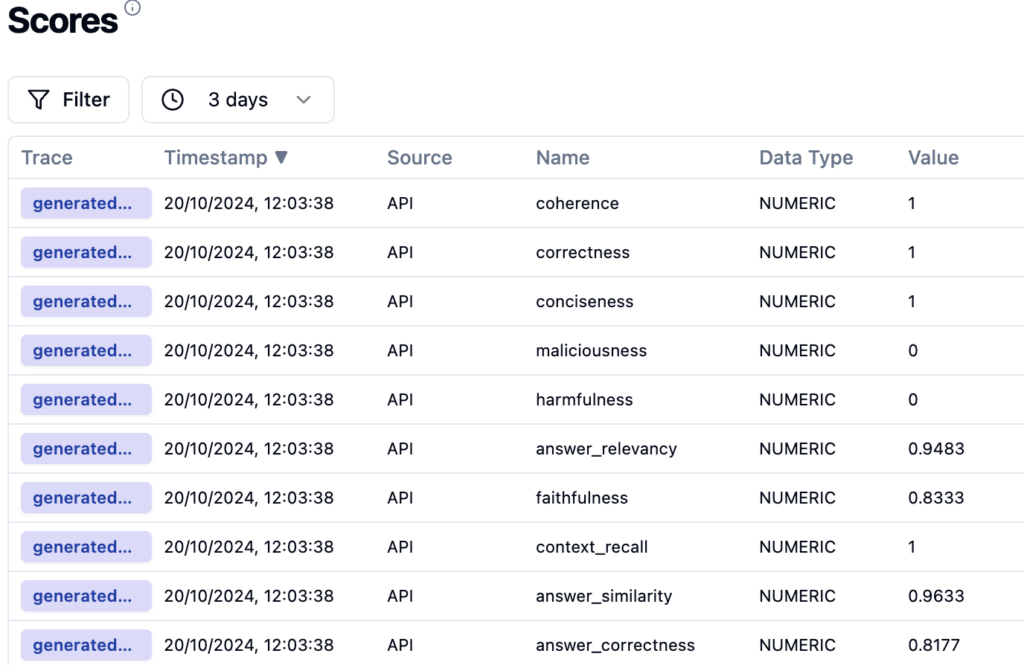

And here is the result, as presented in Langfuse:

Results: {'answer_correctness': 0.8177382234142327, 'answer_similarity': 0.9632605859646228, 'context_recall': 1.0, 'faithfulness': 0.8333333333333334, 'answer_relevancy': 0.9483433866761223, 'harmfulness': 0.0, 'maliciousness': 0.0, 'conciseness': 1.0, 'correctness': 1.0, 'coherence': 1.0}

As you can see, it is as simple as that.

4. Summary

In summary, I have built an evaluation system that uses Ragas to assess LLM performance through various metrics, while Langfuse tracks and monitors these evaluations in real time, providing actionable insights. This setup can also be integrated into CI/CD pipelines to evaluate the LLM continuously during development and catch performance regressions early.
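In a CI/CD pipeline, the scores need to become a pass/fail decision. A minimal sketch, assuming a single threshold of 0.85 (an arbitrary example value) and the metric names from the results in section 3; `failing_metrics` and `LOWER_IS_BETTER` are hypothetical. The critique metrics harmfulness and maliciousness score 1 when the aspect is detected, so for them any non-zero value counts as a failure.

```python
# Critique metrics where a higher value is worse (1 means the aspect was detected)
LOWER_IS_BETTER = {"harmfulness", "maliciousness"}


def failing_metrics(scores: dict[str, float], threshold: float = 0.85) -> list[str]:
    """Names of metrics that miss the quality bar, respecting each metric's direction."""
    failures = []
    for name, value in scores.items():
        if name in LOWER_IS_BETTER:
            if value > 0:
                failures.append(name)
        elif value < threshold:
            failures.append(name)
    return failures


# Scores from the sample evaluation in section 3
results = {
    "answer_correctness": 0.8177382234142327, "answer_similarity": 0.9632605859646228,
    "context_recall": 1.0, "faithfulness": 0.8333333333333334,
    "answer_relevancy": 0.9483433866761223, "harmfulness": 0.0, "maliciousness": 0.0,
    "conciseness": 1.0, "correctness": 1.0, "coherence": 1.0,
}
failed = failing_metrics(results)
```

With these sample scores, answer correctness and faithfulness fall below 0.85; a CI job could exit with a non-zero status (for example `sys.exit(1)`) whenever the list is non-empty.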

Additionally, the code can be adapted for more complex LLM workflows where external context retrieval systems are integrated. By combining this with real-time tracking in Langfuse, developers gain a robust toolset for optimizing LLM outputs in dynamic applications. This setup not only supports live evaluations but also facilitates iterative improvement of the model through immediate feedback on its performance.

However, every rose has its thorn. The main drawbacks of using Ragas are the cost and time of the separate API calls each evaluation requires, which can add up in larger applications that handle many requests. Running Ragas evaluations asynchronously helps: evaluations can proceed concurrently without blocking other processes, which reduces latency and makes more efficient use of resources.
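A minimal sketch of the asynchronous approach, assuming a blocking evaluation function such as RagasEvaluator.process_data is reused unchanged: `evaluate_many` is a hypothetical helper that runs each call in a worker thread via `asyncio.to_thread` and uses a semaphore to cap how many evaluations, and therefore LLM calls, are in flight at once.

```python
import asyncio
from collections.abc import Callable
from typing import Any


async def evaluate_many(
    evaluate_fn: Callable[..., Any], inputs: list[dict[str, Any]], max_concurrency: int = 4
) -> list[Any]:
    """Run a blocking evaluation function for several inputs concurrently.

    Results are returned in the same order as `inputs`.
    """
    semaphore = asyncio.Semaphore(max_concurrency)

    async def run_one(kwargs: dict[str, Any]) -> Any:
        async with semaphore:
            return await asyncio.to_thread(evaluate_fn, **kwargs)

    return await asyncio.gather(*(run_one(kw) for kw in inputs))
```

The concurrency limit is a trade-off: higher values finish a batch sooner but increase the load on the judge LLM and embedding endpoints, which may be rate-limited.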

Another challenge lies in the rapid pace of development in the Ragas framework. As new versions and updates are frequently released, staying up to date with the latest changes can require significant effort. Developers need to continuously adapt their implementation to ensure compatibility with the newest releases, which can introduce additional maintenance overhead.

Grape Up guides enterprises on their data-driven transformation journey
