Thinking out loud

Where we share the insights, questions, and observations that shape our approach.

Cloud native: What does it mean for your business?

We witness how the world of IT constantly changes. Today, like never before, it is more often defined as “being THE business” rather than just “supporting the business”. In other words, the conventional application architectures and development methods are slowly becoming inadequate in this new world. Grape Up, playing a key role in the cloud migration strategy, helps Fortune 1000 companies make a smooth transition. We build apps that support the business itself, we advocate the agile methodology, and implement DevOps to optimize performance.

To clarify the idea behind cloud native technologies, we’ve put together the most important insights to help you and your team understand the essentials and benefits of Cloud Native Applications:

Microservices architecture

First and foremost, one must come to terms with the fact that traditional application architecture means complex development, long testing cycles and new features released only within specific release windows. The microservices approach is nothing like that. It deconstructs an application into many functional components that can be updated and deployed separately without any impact on other parts of the application.

Depending on its functionality, every microservice can be updated as often as needed. For instance, a microservice that contains functionalities of a dynamic business offering will not affect other parts of the app that barely change at all. Thanks to this, an application can be developed without changing its fundamental architecture. Gone are the days when IT teams had to alter most of the application just to change one piece.

Operational design

One of the biggest issues that our customers face before the migration is the burden of moving new code releases into production. With monolithic architectures that combine all the code into one executable, every new release requires deploying the entire application. Because the production environment isn’t the same as the development environment, it often becomes impossible for developers to detect potential bugs before the release. Testing new features without moving the whole environment to the new app version can also become tricky, which in turn complicates releasing new code. Microservices solve this problem neatly. Since the application is divided, code changes are isolated in separate executables. Thanks to this, an update does not change the rest of the application, which is what clients are initially concerned about.

API

One of the indisputable advantages of microservices over traditional methods is that they communicate by means of APIs. You can therefore release new features step by step, each with a new API version. If any failures appear, you can shut off access to the new API while the previous version of your app stays operational. In the meantime, you can work on the new function.

DevOps

At Grape Up, we often work on on-site projects alongside our clients. On the first day of the project, we are introduced to multiple groups that are in charge of various parts of the app’s lifecycle such as operations, deployment, QA or development. Each of them has its own processes. This creates long gaps between tasks being handed over from one group to another. Such gaps result in ridiculously long deployment time frames which are very harmful to an IT business, especially when frequent releases are more than welcome. To efficiently get rid of these obstacles and improve the whole process, we introduce clients to DevOps.

By and large, DevOps is nothing but an attempt to eliminate the gaps between IT groups. It’s an engineering culture that Grape Up experts teach clients to use. If clients follow it properly, they are able to transform manual processes into automated ones and start getting things done faster and better. The most important thing is to find the pain point in the application’s lifecycle. Let’s say that the QA department doesn’t have enough resources to test software and delays the entire process. A solution can be either to migrate testing to a cloud-based environment or to put developers in charge of creating tests that analyze the code. By doing so, the QA stage can take place simultaneously with the development stage, not after it. And this is what it takes to understand DevOps.

The transition to cloud-native software development is no longer an option; it is a necessity. We hope that all the reasons mentioned above prompted you to embark on a journey called “Cloud-Native”, a promising opportunity for your company to grow in the years to come. And even if you’re still feeling hesitant, don’t think twice. Our expertise combined with your vision can be a great start to a brighter future for your enterprise.

In case of a broken repository, break glass: mitigating real-life Git goofs

Imagine that you’re busy with a project that’s become a lifeblood of your company. The release is just a few days away and the schedule is tight. You work overtime or spend your 9-5 switching back and forth between a multitude of JIRA tickets, pushing a lot of pull requests as new issues come and go.

Focusing on the task at hand between one coffee break and another is tedious, and once you finish and push that final patch to your remote, you stop for a second and get this tingle in your chest. “Is it just some random piece of code that was not supposed to be there? What, release branch? Oh, my gosh! So, what do I do now?”

As many of my co-workers and I have found ourselves in such a situation, I feel obligated to address this issue. Thankfully, the good folks at Git made sure that undoing something we have already committed to a remote is not impossible. In this article, I will explore the ways of recovering from your overtime mistakes as well as their potential drawbacks.

Depending on what your workflow looks like

Merge and rebase are examples of Git workflows that are used most often within corporate projects. For those who are not familiar with either of them:

- Merge workflow assumes that your team uses one or more branches that derive from the trunk (often indirectly, i.e. having been branched out from development/sprint branches), which are then merged into the parent branch using the classic Git mechanism of a merge commit. This has the advantage of showing clearly when a given functionality was introduced into the parent branch and of preserving commit hashes after the functionality is introduced. It is also easier for VCS tracking systems (like BitBucket) to make sense of the progress of your repository.

The drawback: your repository tree gets polluted with merge commits and the history graph (if you are into such things) becomes quite untidy.

- In a rebase workflow the features, after being branched out of their parents, become incorporated into the trunk seamlessly. The trunk log becomes straightforward and the history log gets easy to navigate. This, however, does not preserve commit hashes and, unless used in conjunction with pull request tracking systems, can easily result in the repository becoming difficult to maintain.

As it happens, there are many ways to fix your repository. These ways depend on your workflow and the kind of mistake. Let’s go through some of the most common errors that can take place. Without further ado…

I pushed a shiny, brand new feature without a pull request

As we all know, pull requests do matter – they help us avoid subtle bugs and it never hurts to have another pair of eyes to look at your code. You can of course do the review afterwards, but this would require some discipline to maintain. It is easier to stick to pull requests, really.

Working overtime or undercaffeinated often leads one to forget to create a feature branch before an actual feature is implemented. Rushing to push it and to test it leads to a mess.

Revert to the rescue

git revert

git revert is a powerful command that can safely undo code changes without rewriting the history. It is powerful enough to gain respect in the eyes of many developers who usually hesitate to use its true potential.

Revert works by replaying the given commits in reverse: it creates new commits that remove added lines of code, add back whatever was removed and undo modifications. Unlike git reset, reverting is a fast-forward change that involves no history rewriting. Thus, if somebody has already pulled down the tainted repository branch or introduced some changes of their own, it is straightforward to address that. Here’s an illustration:

Revert takes a single ref, a range of refs or several arbitrary (unrelated) refs as an argument. A ref can be anything that can be resolved to a commit: the branch name (local or remote), relative commit (using tilde operator) or the commit hash itself. Only the sky is the limit.

git revert 74e0028545d52b680d9ac59edd3aff0ac4

git revert 74e002..9839b2

git revert HEAD~3..HEAD~1

git revert origin/develop~1 origin/develop~3 origin/develop~4
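To see that a revert adds history instead of rewriting it, here is a minimal sketch that can be run in a throwaway directory. It assumes Git is installed; the file name, the commit messages and the identity configured below are made up for the example.

```shell
#!/bin/sh
set -eu

# Work in a temporary repository so nothing real can be damaged.
repo=$(mktemp -d)
cd "$repo"
git init -q
git config user.name "Example Dev"        # hypothetical identity, local to this repo
git config user.email "dev@example.com"

echo "stable code" > app.txt
git add app.txt
git commit -q -m "Add stable code"

echo "unreviewed feature" >> app.txt
git commit -q -am "Add feature without a pull request"

# Undo the newest commit by stacking a new commit on top of it.
# --no-edit accepts the default message instead of opening an editor.
git revert --no-edit HEAD >/dev/null

commit_count=$(git rev-list --count HEAD)
content=$(cat app.txt)

echo "commits in history: $commit_count"
echo "app.txt now contains: $content"
git log --oneline
```

Both earlier commits are still in the log, followed by the revert, so anyone who already pulled the branch can simply pull again.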

Reverting several changes at once

Normally, all commits in a range are reverted in order one by one. If you wish to make your log a little tidier and revert them all at once, use a no-commit flag and commit the result with a custom message:

git revert -n HEAD~3..HEAD~1

git commit -m "Revert commits x – y"
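As a rough, self-contained illustration of squashing several reverts into one commit, the sketch below builds four small commits in a temporary repository and reverts the two newest ones together. The file name, messages and identity are assumptions made for the demo.

```shell
#!/bin/sh
set -eu

repo=$(mktemp -d)
cd "$repo"
git init -q
git config user.name "Example Dev"        # hypothetical identity, local to this repo
git config user.email "dev@example.com"

# Four commits, each adding one line to the same file.
for n in 1 2 3 4; do
  echo "line $n" >> notes.txt
  git add notes.txt
  git commit -q -m "Add line $n"
done

# HEAD~2..HEAD selects the two newest commits ("Add line 3" and "Add line 4").
# -n applies both reverts to the index and working tree without committing.
git revert -n HEAD~2..HEAD

# One commit with a custom message records the combined revert.
git commit -q -m "Revert commits 3 - 4"

commit_count=$(git rev-list --count HEAD)
line_count=$(wc -l < notes.txt | tr -d ' ')
last_subject=$(git log -1 --format=%s)

echo "commits: $commit_count, lines left: $line_count, last commit: $last_subject"
```

The range is written oldest side first: the left-hand ref is excluded and the right-hand ref is included.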

Undoing merges – wanted and unwanted

When using a merge flow, as opposed to a rebase flow, reverting changes becomes slightly more complicated – it requires the programmer to explicitly choose the parent branch based on which the changes are reverted. This often means choosing a release branch – it doesn’t affect what is actually being reverted, but what’s preserved in the history log.

Let’s suppose you base your feature off the development branch and you name it feature-1 . You introduce some changes into your branch while somebody merges some of their changes into development . Both your branch and the parent branch undergo some changes and then you can proceed to merging.

After a while, the feature has to be pulled from the release because your team needs to work on it some more, which requires reverting the changes you introduced earlier. Some time has passed since then and many more features have been merged into the release.

Every merge commit has two parents. To revert yours into the state that preserves your and your team’s changes in the log, you would usually specify the mainline branch (-m) as the first one:

git revert 36bca8 -m 1
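The scenario can be reproduced in a temporary repository. The sketch below is only an approximation of the story above: the branch names follow the text, while the file names, messages and identity are invented for the demo.

```shell
#!/bin/sh
set -eu

repo=$(mktemp -d)
cd "$repo"
git init -q
git symbolic-ref HEAD refs/heads/development   # name the first branch "development"
git config user.name "Example Dev"             # hypothetical identity, local to this repo
git config user.email "dev@example.com"

echo "base" > base.txt
git add base.txt
git commit -q -m "Initial commit"

# Your feature branch.
git checkout -q -b feature-1
echo "feature" > feature.txt
git add feature.txt
git commit -q -m "Add feature 1"

# Meanwhile somebody else changes development.
git checkout -q development
echo "other" > other.txt
git add other.txt
git commit -q -m "Somebody else's change"

# Merge with an explicit merge commit; its first parent is development.
git merge -q --no-ff --no-edit feature-1
merge_commit=$(git rev-parse HEAD)

# -m 1 treats parent 1 (development) as the mainline,
# so only what feature-1 brought in is undone.
git revert --no-edit -m 1 "$merge_commit" >/dev/null

[ -e feature.txt ] && feature_present=yes || feature_present=no
[ -e other.txt ] && other_present=yes || other_present=no

echo "feature.txt present: $feature_present"
echo "other.txt present: $other_present"
```

The teammate's change survives, the feature is gone, and the merge commit itself stays in the log.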

However, once the team decides to reintroduce this change, you will be trying to merge in a set of commits that is already in the target branch. Git will answer with a succinct message that everything is up to date. To get around that, we can switch to our feature branch and amend the commit (in case there’s only one change to reintroduce) or use an interactive rebase:

git rebase -i HEAD~n

Here n is the number of introduced commits, each of them marked with the edit option. Amending the commits replays them without altering the changes or commit messages, but it makes the commits appear different to Git and thus allows us to reintroduce them as if they were fresh.
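For a feature that consists of a single commit, the whole round trip can be sketched as follows. This is an illustration under assumptions: the names are invented, and the one-second pause exists only so that the amended commit gets a later committer timestamp (and therefore a new hash) even in a fast script.

```shell
#!/bin/sh
set -eu

repo=$(mktemp -d)
cd "$repo"
git init -q
git symbolic-ref HEAD refs/heads/development
git config user.name "Example Dev"             # hypothetical identity, local to this repo
git config user.email "dev@example.com"

echo "base" > base.txt
git add base.txt
git commit -q -m "Initial commit"

git checkout -q -b feature-1
echo "feature" > feature.txt
git add feature.txt
git commit -q -m "Add feature 1"

# Merge the feature, then revert the merge.
git checkout -q development
git merge -q --no-ff --no-edit feature-1
git revert --no-edit -m 1 HEAD >/dev/null

# Merging again does nothing: the feature commit is already in the history.
head_before=$(git rev-parse HEAD)
git merge --no-edit feature-1 >/dev/null
head_after=$(git rev-parse HEAD)

# Amend the feature commit without changing its content or message.
git checkout -q feature-1
old_hash=$(git rev-parse HEAD)
sleep 1
git commit -q --amend --no-edit
new_hash=$(git rev-parse HEAD)

# The amended commit is new to development, so the merge goes through.
git checkout -q development
git merge -q --no-ff --no-edit feature-1
[ -e feature.txt ] && feature_present=yes || feature_present=no

echo "second merge moved HEAD: $([ "$head_before" = "$head_after" ] && echo no || echo yes)"
echo "feature.txt present after re-merge: $feature_present"
```

With several commits on the feature branch, the interactive rebase plays the same role as the amend does here.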

If you use rebasing, read this

The rebasing eases the burden of keeping track of the merge parents – because there are no merges to begin with. The history is linear and, as such, reverts of unwanted code are straightforward to perform.

It may be tempting to use rewriting in this case, but keep in mind the golden rule of rewriting – unless you are absolutely sure that it’s only you and nobody else using that branch, don’t rewrite the history.

How (not) to use the --force

Not everybody is born a Jedi, and most programmers are no different. Force pushing allows us to overwrite the remote history even if our branch does not perfectly fit into the scene, but it makes it dangerously easy to wipe out the changes somebody else made. A rule of thumb is to only use the force push on your own feature branches, and only when it is absolutely necessary.

When is it fine to rewrite Git history?

Put simply, as long as we haven’t published our branch yet for somebody else’s use. As long as our changes stay local, we’re fine to do as we please – provided we take responsibility for the data we manipulate. Some commands, such as git reset --hard, can lead to loss of data (which happens quite often, as one can infer from the multitude of Stack Overflow posts on the topic). For that reason, it’s always wise to create a backup branch (or otherwise remember the ref) before attempting such operations.
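A backup branch costs one command. The sketch below shows the habit in a temporary repository; the branch name backup-before-reset, the file name and the identity are hypothetical.

```shell
#!/bin/sh
set -eu

repo=$(mktemp -d)
cd "$repo"
git init -q
git config user.name "Example Dev"        # hypothetical identity, local to this repo
git config user.email "dev@example.com"

echo "keep me" > work.txt
git add work.txt
git commit -q -m "Keep me"

echo "experiment" >> work.txt
git commit -q -am "Local experiment"

# Remember where we were before rewriting local history.
git branch backup-before-reset

# Drop the newest commit from the current branch.
git reset -q --hard HEAD~1

current_subject=$(git log -1 --format=%s)
backup_subject=$(git log -1 --format=%s backup-before-reset)

echo "current branch tip: $current_subject"
echo "backup branch tip:  $backup_subject"
```

If the reset turns out to be a mistake, the dropped commit is still reachable through the backup branch.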

Goof Prevention Patrol

Other than using software solutions, it’s best to enforce the team discipline yourself – make fellow developers responsible for their mistakes and let them learn from practice.

Some points worth going over are:

- protecting main/release branches from accidental pushes using restrictions and rules

- establishing a naming convention for branches and commit messages

- using a CI/CD system for additional monitoring – this may help detect repository inconsistencies

Also, many Git tools and providers, such as BitBucket, allow one to specify branch restrictions, such as not allowing developers to push to a main or release branch without a pull request. This can be extended to matching multiple branches with a glob expression or a regex, which is very handy if your team names branches after a specific pattern.
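Provider-side restrictions are configured in the provider's own settings. As a purely local safety net, a client-side pre-push hook can apply the same glob idea. The sketch below is an assumption, not a BitBucket feature: the protected patterns and the function names are hypothetical, and in a real .git/hooks/pre-push file the script would end with a plain call to check_push followed by exiting with its status.

```shell
#!/bin/sh
# Git feeds a pre-push hook one line per pushed ref on standard input:
#   <local ref> <local sha> <remote ref> <remote sha>

# Succeeds when the given remote ref matches a protected pattern.
# The patterns are examples; adjust them to your naming convention.
is_protected_ref() {
  case "$1" in
    refs/heads/main|refs/heads/master|refs/heads/release/*) return 0 ;;
    *) return 1 ;;
  esac
}

# Reads pre-push lines from standard input and fails on the first protected ref.
check_push() {
  while read -r local_ref local_sha remote_ref remote_sha; do
    if is_protected_ref "$remote_ref"; then
      echo "Refusing direct push to $remote_ref; open a pull request instead." >&2
      return 1
    fi
  done
  return 0
}

# Demo with hand-written input lines instead of a real push.
if printf 'refs/heads/feature/login aaa refs/heads/feature/login bbb\n' | check_push; then
  echo "feature branch push: allowed"
fi
if ! printf 'refs/heads/release/1.2 aaa refs/heads/release/1.2 bbb\n' | check_push 2>/dev/null; then
  echo "release branch push: blocked"
fi
```

A local hook only protects the developers who installed it, so it complements server-side rules and does not replace them.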

Summary and further Git reads

Mistakes were made, are made and will be. Thankfully, Git is smart enough to include mechanisms for undoing them for the developers brave enough to use them.

Hopefully this article resolved some common misunderstandings about Revert and when to use it. Should that not prove enough, here are some helpful links:

A slippery slope from agile to fragile: treat your team with respect

“Great things in business are never done by one person, they’re done by a team of people.”

said Steve Jobs. Now imagine if he didn’t have such an Agile approach. Would the iPhone ever exist?

Agile development is a set of methods and practices where solutions evolve thanks to the collaboration between cross-functional teams. It is also pictured as a framework which helps to ‘get things done’ fast. On top of that, it helps to set a clear division of who is doing what, to spot blockers and to plan ahead. We’ve all heard of this, and most of us even know the definition of Agile by heart. In the last few decades, Agile has become the approach for modern product development. But despite its popularity, it is still misinterpreted quite often and its core values tend to get abused. This misinterpretation has become so common that it has earned a name of its own: frAgile. In other words, it is what your product and team become if you don’t follow the rules.

The thin line between Agile and frAgile

Working by the principles of Agile means you are flexible and able to deliver your product the way the customer wants it and on time. By and large, Agile teaches us how to work smarter and how to eliminate all barriers to working efficiently. However, there are times when the attempt to follow Agile isn’t made with enough care and the whole plan fails, just like trying to keep your balance the first time you are on ice skates.

With that said, I will explain step by step a few examples of how Agile can quickly and irreversibly become something it should never be. Later on, I will list tips on how to avoid stepping onto that slippery slope. So let’s take a look at the examples:

Your technical debt is going through the roof

Just like projects, sprints are used to accomplish a goal. Quite often though, when the sprint is already running, new decisions and changes keep flowing in. As a result, your team keeps restarting work over and over again and works all the time. Does it sound familiar?

Unfortunately, if this situation continues, everyone gets used to it and it becomes the norm. It usually leads to the biggest pile of technical debt you could ever imagine. Combine it with an endless list of defects caused by the lack of stability needed to nurture the code, and you are doomed to failure.

You should always respond to change wisely. Although the Agile methodology embraces change and advocates flexibility, you shouldn’t overdo it, especially with changes that impact your sprint on a daily basis. Every bigger change ought to be discussed between sprints and be based on the feedback received from users.

A big fish leaves the team and the project falls apart

Another unfortunate thing that can happen is a team member leaving in the middle of a long-term, complex project that consists of more unstructured processes than meets the eye. With the job rotation in the contemporary world of IT, it happens all the time.

Once a Product Owner or a Team Leader is gone, none of the team members will be able to properly describe the system behavior and what should be delivered. As a result, deadlines will be missed and product quality will be something you can only dream of.

Find the balance between individuals and processes. Most importantly, never underestimate how your scope of work is documented and how the team is managed. Otherwise, after an important team member is gone, the rest will be left in a difficult position. So prepare them for such events. Take your time to estimate what might hold back your team and what is absolutely necessary for the fast pace of world-class engineering.

The project is nearly finished but your customer is nowhere near Agile

You would be surprised at how many companies out there are Agile… in their dreams. Appreciating the flexibility that Agile gives, they often confuse Agile working with flexible working and still work with the waterfall methodology in their minds. This can be spotted especially when someone overuses terms like sprint or scrum. In reality, actions speak louder than words and one doesn’t have to show off their “rich” vocabulary.

Therefore, if you agree on a strict scope of delivery in your contract, you might regret it later on. We all know that IT is all about Agile because plans tend to change. The only problem is that the list of features in the contract doesn’t. If the customer doesn’t fully understand the values of Agile, the business relationship can be put at risk.

Prioritize the collaboration with your client over contract negotiations. Focus on clear communication from the beginning and make sure that your client grasps the principles of Agile. Also, if along the way any unexpected changes to the established scope of work appear, make sure to carry them out in front of your client’s eyes.

Save me from drowning in frAgility

With all the above, here is how you can avoid messing up your work environment:

- Prepare user stories before planning the sprint. You will thank yourself later. If they are written collaboratively as part of the product backlog grooming process, they will leverage creativity and the team’s knowledge. Not only will this reduce overhead, but it will also accelerate product delivery.

- Be careful with changes during the sprint. Or simply - avoid abrupt changes. Thanks to this, your code base will have its necessary stability for performance.

- Turn yourself into a true Agile evangelist. Face reality that not everyone understands the core values of the world’s most beloved methodology – not even your customers. So even if someone tells you that they use Agile, take it with a pinch of salt. Strong business partnerships are built upon expectations that are clear to both sides.

At Grape Up, we follow the principles behind the Agile Manifesto for software development. We believe that business people and developers must work together daily throughout the project. It helps us and our clients achieve mutual success on their way to becoming cloud-native enterprises.

Building an easy to maintain, reusable user interface on macOS

The value of the user interface

What you see inside an app is called the user interface (UI). Undoubtedly, it is an important part of every piece of software, and it’s no piece of cake to wrap the business logic in a fancy, self-explanatory and efficient visual representation.

How many times in your life have you heard not to judge the book by its cover? Probably quite a few. And how many times have you left an application and never come back just because you didn’t like the design at first sight? Probably more than just a few times.

At Grape Up, we achieve a successful UI through close cooperation between our software engineers (SE) and designers. And this article is about the software engineer’s part – programming UI for the macOS platform.

Programming user interface in Cocoa

Cocoa is the original name given to the Application Programming Interface (API) used for building Mac apps. It is fully responsible for the appearance of apps, their distinctive feel and responsiveness to user actions. It automates and simplifies many aspects of UI programming to comply with Apple's Human Interface Guidelines (AHIG). It provides software engineers with several tools for UI development:

- Storyboards

- XIBs

- Custom code

Each of them gives the possibility to implement not only a fully functional, but also an incredibly useful and captivating UI. Having used all three of them at Grape Up, we could give you a comparative analysis of each tool, but we will not be discussing it here.

Why is it better to use XIBs and Storyboards?

Of course, it would be wrong to state that XIBs or Storyboards are simply better than the Custom code. Like always, there is no “silver bullet” – no universal approach or solution for every software development problem. On some occasions the Interface Builder (IB) won’t be helpful to achieve the desired appearance. However, years of hands-on experience backed up by numerous projects have allowed us to draw the following conclusions as to why it’s better to use the Interface Builder on a regular basis:

It’s easy to understand and update

Let’s say that the software engineer needs to deliver an update to an already existing container view with a number of subviews. In what case will he spend less time getting familiar with the existing solution and applying the update? When using XIB with visualized UI elements and layout constraints or when the UI is scripted in several hundred lines of code?

Another example, let’s imagine a set of very similar but still different views. The software engineer needs to extend this set with another view. In what case will he not duplicate the already existing solution and reuse it instead? These are a few examples of regular SE tasks.

In practice, most of the time you would expect the UI to be available in XIB/Storyboard, and not in the code. We can consider XIBs/Storyboards as a public high-level API which encapsulates all the details underneath. IB design will provide a better general overview as well as decrease the chance of missing something.

One of the biggest arguments against XIBs is merge conflicts. Usually, they are caused by not paying enough attention to decomposing tasks during the project, which is exactly why we practice task decomposition, a project technique that breaks the workload down into smaller tasks before the actual work structure is created. Thanks to this, we are able to save a lot of time in the long run. By using IB, you can create UI elements that are as granular as you want. Moreover, this is a proper way of targeting a reusable and easy to update User Interface.

It helps avoid mistakes and saves time

Another great advantage of the Interface Builder is the fact that software engineers are spared plenty of the so-called “dirty work”, such as object initialization, configuration, basic constraints management, etc. The UI can be implemented faster and with less code. By using IB, the engineer simply follows the rule – “Less code produces less bugs”.

It generates warnings and errors

The Interface Builder generates warnings and errors when working with the Auto Layout engine: unsatisfiable layouts, ambiguous layouts, logical errors. The raised issues are quite descriptive and self-explanatory, and in many cases the IB even suggests a solution. Thanks to them, it is a lot easier to prepare a proper and functional UI before even building the application, which would hardly be possible when programming the UI in code. All in all, the IB is a great tool for both creating and testing the UI.

It accelerates the development cycle

The next useful feature that is available in IB is Previews. Thanks to it, the application interface can be previewed using the assistant editor, which displays a secondary editor side-by-side with the main one. It gives the possibility to check the designed Auto Layout on the fly and also significantly reduces development cycle time. Such a feature is especially helpful when supporting multiple localizations.

It helps educate new team members

As we all know, during the project lifetime engineers leave and join the team. To make the onboarding process smooth and effective, we introduce new team members to a UI which is organized in XIB files or in Storyboards. As a result, they are given a complete overview of the application’s functionalities. After all, we all know how the saying goes: “Human brains prefer a visual content over the textual one”.

XIBs and Storyboards extended use cases

Is there a better way of organizing your User Interface designs than just keeping them in your Resources folder? Of course. Why not organize them in a library or a framework? Having a UI framework with all the custom views (XIBs) along with controllers to manage them can be quite convenient. After all, having UI elements in visual representation and keeping them atomic enough guarantees extensibility, manageability and reusability. For those who work on big, long-running projects it might be really useful.

Conclusion - providing an easy to maintain user interface

The modern world is all about information and the ways of transferring, processing as well as selling it. Why bother yourself with some boring symbols if there already are more fascinating ways of dealing with it right around the corner? The Interface Builder delivers a quick, convenient and reliable way for programming your UI. So, let’s save us some time for a mutual discussion.

Native bindings for JavaScript - why should I care?

At the very beginning, let’s make one important statement: I am not a JavaScript Developer. I am a C++ Developer, and with my skill set I feel more like a guest in the world of JavaScript. If you’re also a Developer, you probably already know why companies today are on the constant lookout for web developers - the JavaScript language is flexible and has a quite easy learning curve. Also, it is present everywhere from computers, to mobile devices, embedded devices, cars, ATMs or washing machines - you name it. Writing portable code that may be run everywhere is tempting and that's why it gets more and more attention.

Desktop applications in JavaScript

In general, JavaScript is not the first or best fit for desktop applications. It was not created for that purpose and it lacks the GUI libraries that C++, C#, Java or even Python have. Even so, it has found its niche.

Just take a look at the Atom text editor. It is based on Electron [1], a framework for running JS code in a desktop application. The tool uses the Chromium engine internally, so it is more or less a browser in a window, but it is still quite an impressive achievement to have the same codebase for Windows, macOS and Linux. It is quite meaningful for those who want to use agile processes, especially because it is really easy to start with an MVP and ship frequent incremental updates with new features, as it is the same code.

Native code and JavaScript? Are you kidding?

Since JavaScript works for desktop applications, one may think: why bother with native bindings then? But before you also think so, consider the fact that there are a few reasons for that, usually performance issues:

- JavaScript is a scripted, virtualized language. In some scenarios, it may be an order of magnitude slower than its native code equivalent [2].

- JavaScript is garbage collected and has a hard time with memory-consuming algorithms and tasks.

- Access to hardware or native frameworks is sometimes only possible from a native, compiled code.

Of course, this is not always necessary. In fact, you may happily live your developer life writing JS code on a daily basis and never find yourself in a situation when creating or using native code in your application is unavoidable. Hardware performance is still on the rise and often there is no need to even profile an application. On the other hand, once it happens, every developer should know what options are available to them.

The strange and scary world of static typing

If a JavaScript Developer who uses Electron finds out that his great video encoding algorithm does not keep up with the framerate, is that the end of his application? Should he rewrite everything in assembler?

Obviously not. He might try to use the native library to do the hard work and leverage the environment without a garbage collector and with fast access to the hardware - or maybe even SIMD SSE extensions. The question that many may ask is: but isn’t it difficult and pointless?

Surprisingly not. Let’s dig deeper into the world of native bindings where, as opposed to JavaScript, you often have to specify the type of a variable or the return value of a function in your code.

First of all, if you want to use Electron or any framework based on NodeJS, you are not alone - you have a small friend called “nan” (if you have seen “wat”[3] you are probably already smiling). Nan is a “native abstraction for NodeJS” [4] and, with such a descriptive name, it is enough to say that it lets you create add-ons for NodeJS easily. Like literally everything today, you can install it using npm:

$ npm install --save nan

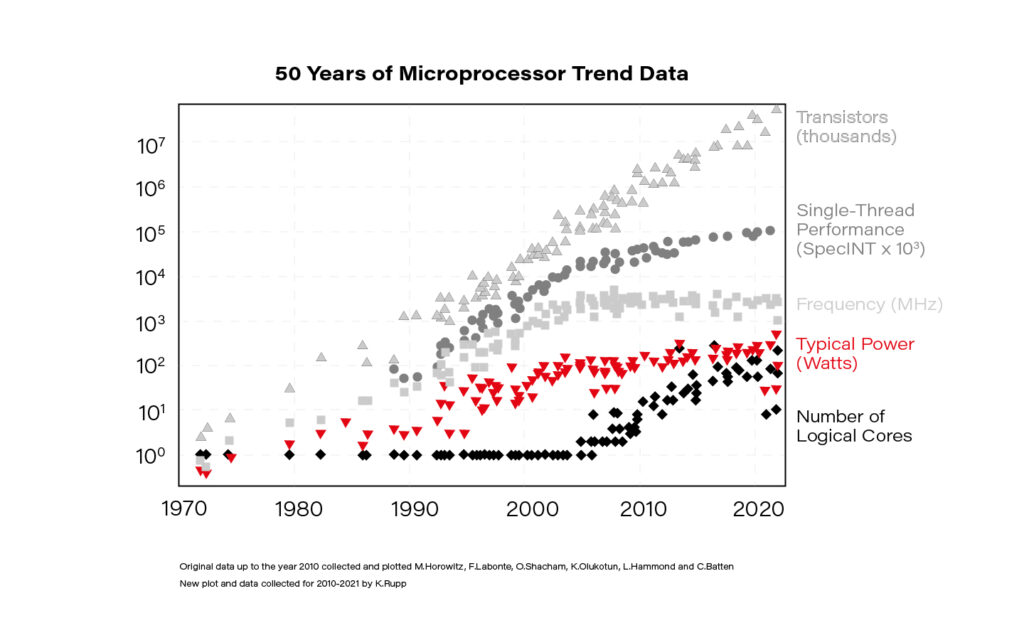

Without getting into boring, technical details, here is an example of wrapping C++ class to a JavaScript object:

Nothing really fancy and the code isn’t too complex either. Just a thin wrapping layer over the C++ class. Obviously, for bigger frameworks there will be much code and more problems to solve, but in general we have a native framework bound with the JavaScript application.

Summary

The bad part is that we, unfortunately, need to compile the code for each platform we want to use it on, and by each platform we usually mean Windows, Linux, macOS, Android and iOS, depending on our targets. Also, it is not rocket science, but for JavaScript developers who have never had a chance to compile code it may be too difficult.

On the other hand, we have a not so perfect, but working solution for writing applications that run on multiple platforms and handle even complex business logic. Programming has never been a perfect world; it has always been a world of trade-offs, beautiful solutions that never work and refactoring that never happens. In the end, when you are going to look for yet another compromise between your team’s skills and the client’s requirements for the next desktop or mobile application framework, you might consider using JavaScript.

Steps to successful application troubleshooting in a distributed cloud environment

At Grape Up, when we execute digital transformation, we need to take care of a lot of things. First of all, we need to pick a proper IaaS that meets our needs, such as AWS or GCP. Then, we need to choose a suitable platform that will run on top of this infrastructure. In our case, it is either Cloud Foundry or Kubernetes. Next, we need to automate this whole setup and provide an easy way to reconfigure it in the future. Once we have the cloud infrastructure ready, we should plan how and what kind of applications we want to migrate to the new environment. This step requires analyzing the current state of the application portfolio and answering the following:

- What is the technology stack?

- Which apps are critical for the business?

- What kind of effort is required for replatforming a particular app?

Any components that are particularly troublesome or carry serious technical debt should be considered for modernization. This process is called “breaking the monolith”: we iteratively decompose the app into smaller parts, where each new part can become a separate microservice. As a result, we end up with dozens of new or updated microservices running in the cloud.

So let’s assume that all the heavy lifting has been done. We have our new production-ready cloud platform up and running, we replatformed and/or modernized the apps and we have everything automated with the CI/CD pipelines. From now on, everything works as expected, can be easily scaled and the system is both highly available and resilient.

Application troubleshooting in a cloud environment

Unfortunately, quite often and soon enough we receive a report that some requests behave unusually in some scenarios. Of course, these kinds of problems are not unusual, no matter what kind of infrastructure, frameworks or languages we use. This is part of the standard maintenance and monitoring process that each computer system needs to take into account after it has been released to production.

Despite the fact that cloud environments and cloud-native apps improve a lot of things, application troubleshooting might be more complex in the new infrastructure compared to what the ‘old world’ represented.

Therefore, I would like to show you a few techniques that will help you with troubleshooting microservices problems in a distributed cloud environment. To exemplify everything, I will use Cloud Foundry as our cloud-native platform and Java/Spring microservices deployed on it. Some tips might be more general and can be applied in different scenarios.

Check if your app is running properly

There are two basic commands in the CF CLI to check if your app is running:

- `cf apps` - lists all applications deployed to the current space, along with their state and the number of instances currently running. Find your app and check that its state says “started”.

- `cf app <app_name>` - similar to the command above, but shows more detailed information about a particular app. Additionally, when the app is running, you can check its current CPU usage, memory usage and disk utilization.

This step should be first since it’s the fastest way to check if the application is running on the Cloud Foundry Platform.

Check logs & events

If your app is running, you can check its lifecycle events with:

`cf events <app_name>`

This will help you diagnose what was happening with the app. Cloud Foundry could have been reporting some errors before the app finally started. This might be a sign of a potential issue. Another example might be when events show that our app is being restarted repeatedly. This could indicate a shortage of memory which in turn causes the Cloud Foundry platform to destroy the app container.

Events give you just a broad view of what has happened with the app, but if you want more details you need to check your logs. Cloud Foundry helps a lot with handling your logs. There are three ways to check them:

- `cf logs <app_name> --recent` - dumps only recent logs. It outputs them to your console, so you can use Linux commands to filter them.

- `cf logs <app_name>` - returns a real-time stream of the application logs.

- Configure a syslog drain, which will stream logs to your external log management tool (e.g., Prometheus, Papertrail) - https://docs.cloudfoundry.org/devguide/services/log-management.html.

This method is only as good as the maturity and consistency of your logs, but the Cloud Foundry platform also helps by adding some standardization to them. Each log line will have the following info:

- Timestamp

- Log type – the CF component that is the origin of the log line

- Channel – either OUT (logs emitted on stdout) or ERR (logs emitted on stderr)

- Message
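To filter on these fields programmatically, a small parser can help. The sketch below assumes the line layout `<timestamp> [<log type>] <channel> <message>`; the sample line and the `parse_cf_log_line` helper are made up for illustration and are not part of the CF CLI.

```python
import re
from typing import NamedTuple, Optional

# Assumed layout: "<timestamp> [<log type>] <OUT|ERR> <message>"
LOG_LINE = re.compile(
    r"^\s*(?P<timestamp>\S+)\s+\[(?P<log_type>[^\]]+)\]\s+"
    r"(?P<channel>OUT|ERR)\s?(?P<message>.*)$"
)


class CfLogLine(NamedTuple):
    timestamp: str
    log_type: str
    channel: str
    message: str


def parse_cf_log_line(line: str) -> Optional[CfLogLine]:
    """Split one log line into its four fields; return None if it does not match."""
    match = LOG_LINE.match(line)
    if match is None:
        return None
    return CfLogLine(**match.groupdict())


# Hypothetical sample line, made up for illustration
sample = "2019-03-01T10:15:30.12+0100 [APP/PROC/WEB/0] ERR timeout when connecting to MySQL"
parsed = parse_cf_log_line(sample)
print(parsed.channel, "|", parsed.message)
```

With such a helper you could, for example, keep only the lines whose channel is `ERR` before reading through them.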

Check your configuration

If you have investigated your logs and found out that the connection to some external service is failing, you must check the configuration that your app uses in its cloud environment. There are a few places you should look into:

- Examine your environment variables with the `cf env <app_name>` command. This will list all environment variables (container variables) and the details of each bound service.

- `cf ssh <app_name> -i 0` enables you to SSH into the container hosting your app. With the `-i` parameter you can point to a particular instance. Now it is possible to check the files you are interested in to see if the configuration is set up properly.

- If you use any configuration server (like Spring Cloud Config), check if the connection to this server works. Make sure that the Spring profiles are set up correctly and double-check the content of your configuration files.
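Cloud Foundry passes the details of bound services to the app as JSON in the `VCAP_SERVICES` environment variable, which is also what `cf env` prints. As a minimal sketch, the lookup below shows how an app could read the credentials it was actually given; the service name `orders-db`, the label `p-mysql` and the credential values are hypothetical.

```python
import json
import os


def find_service_credentials(service_name, environ=os.environ):
    """Return the credentials of the bound service with the given name, or None."""
    services = json.loads(environ.get("VCAP_SERVICES", "{}"))
    # VCAP_SERVICES maps each service label to a list of bound instances
    for instances in services.values():
        for instance in instances:
            if instance.get("name") == service_name:
                return instance.get("credentials", {})
    return None


# Hypothetical environment, shaped like the VCAP_SERVICES section of `cf env`
fake_env = {
    "VCAP_SERVICES": json.dumps({
        "p-mysql": [{
            "name": "orders-db",
            "label": "p-mysql",
            "credentials": {"hostname": "10.0.0.5", "port": 3306},
        }]
    })
}
print(find_service_credentials("orders-db", fake_env))
```

Comparing these values with what your app logs at startup is a quick way to spot a wrong host, port or missing binding.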

Diagnose network traffic

There are cases in which your application runs properly, the entire configuration is correct, you don’t see anything extraordinary in your events and your logs don’t really show anything. This could be why:

- You don’t log enough information in your app

- There is a network related issue

- Request processing is blocked at some point in your web server

With the first one, you can’t really do much if your app is already in production. You can only prevent such situations in the future by putting more effort into implementing proper logging. To check if the second issue relates to you:

- SSH to the container/VM hosting your app and use the Linux `tcpdump` command. Tcpdump is a network packet analyzer which can help you check if the traffic on an expected port is flowing.

- Using `netstat -ln | grep <your_port>` you can check if there is a process that listens on your expected port (the `-n` flag keeps ports numeric so that `grep` can match them). If it exists, you can verify if this is the proper one (e.g., a Tomcat server).

- If your server listens on the proper port but you still don’t see the expected traffic with tcpdump, then you might check firewalls, security groups and ACLs. You can use Linux netcat (the `nc` command) to verify if TCP connections can be established between the container hosting your app and the target server.
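If netcat is not available where you run the check, the same TCP test can be sketched in a few lines of Python, assuming a Python interpreter is at hand. The `can_connect` helper is an illustrative name, and the demo deliberately targets a local listener so that it runs without any external host.

```python
import socket


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be established in time."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# Self-check against a local listener, so the sketch needs no external host
server = socket.socket()
server.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
server.listen(1)
open_port = server.getsockname()[1]
reachable = can_connect("127.0.0.1", open_port)
server.close()
print(reachable)
```

In a real investigation you would call `can_connect` with the host and port of the service your app depends on.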

Print your thread stack

Your app is running and listening on the proper port, the TCP traffic is flowing correctly and you have a well-designed logging system. But still, there are no new logs for a particular request, and you cannot diagnose where exactly your app’s processing has stopped.

In this scenario, it might be useful to print the current thread stack with a Java tool called jstack. It’s a very simple and handy tool, recommended for diagnosing what is currently happening on your Java server.

Once you have executed `jstack <pid>`, where `<pid>` is the process ID of the target JVM, you will see the stack traces of all Java threads that run within that JVM (on older JDKs, the `-F` option forces a dump when the process does not respond). This way you can check whether some threads are blocked and at what execution point they have stopped.

Implement /health endpoints in your apps

A good practice in microservice architecture is to implement the ‘/health’ endpoint in each application. Basically, the responsibility of this endpoint is to return the application health information in a short and concise way. For example, you can return a list of app external services with a status for each one: UP or DOWN. If the status is DOWN, you can tell what caused the error. For example, ‘timeout when connecting to MySQL’.

From the security perspective, we can return the global UP/DOWN information for all unauthenticated users. It will be used to quickly determine if something is wrong. The list of all services with error details will be accessible only for authenticated users with proper roles.
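The aggregation logic described above is independent of the framework. A minimal sketch, assuming each check is a plain function that raises an exception on failure (the check names and the `health_report` helper are hypothetical):

```python
from typing import Callable, Dict


def health_report(checks: Dict[str, Callable[[], None]], authenticated: bool) -> dict:
    """Run each check; a check signals failure by raising an exception."""
    details = {}
    for name, check in checks.items():
        try:
            check()
            details[name] = {"status": "UP"}
        except Exception as error:
            details[name] = {"status": "DOWN", "error": str(error)}
    all_up = all(entry["status"] == "UP" for entry in details.values())
    report = {"status": "UP" if all_up else "DOWN"}
    if authenticated:
        # Per-service details are exposed to authenticated callers only
        report["services"] = details
    return report


# Hypothetical checks for illustration
def check_mysql():
    raise TimeoutError("timeout when connecting to MySQL")


def check_cache():
    pass


checks = {"mysql": check_mysql, "cache": check_cache}
print(health_report(checks, authenticated=False))
print(health_report(checks, authenticated=True))
```

The unauthenticated call only reveals the global status, while the authenticated one also lists which dependency is down and why.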

In Spring Boot apps, adding the ‘spring-boot-starter-actuator’ dependency provides ‘/health’ endpoints out of the box. There is also a simple way to extend the default behavior: all you need to do is write custom health indicator classes that implement the `HealthIndicator` interface.

Use distributed HTTP tracing systems

If your system is composed of dozens of microservices and the interactions between them are getting more complex, then you might come across difficulties without any distributed tracing system. Fortunately, there are open source tools that solve such problems.

You can choose from HTrace, Zipkin or the Spring Cloud Sleuth library, which abstracts many concepts of distributed tracing. All these tools are based on the same concept of adding trace information to HTTP headers.

Certainly, a big advantage of using Spring Cloud Sleuth is that it is almost invisible for most users. Your interactions with external systems should be instrumented automatically by the framework. Trace information can be logged to standard output or sent to a remote collector service where you can visualize your requests better.
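To make the header-based idea concrete, here is a minimal sketch of trace propagation using the B3 header names (`X-B3-TraceId`, `X-B3-SpanId`, `X-B3-ParentSpanId`) known from Zipkin. The helper functions are hypothetical, and the sketch treats headers as a plain case-sensitive dictionary, which real HTTP libraries do not.

```python
import secrets


def new_id() -> str:
    """Random 64-bit identifier as 16 lowercase hex characters."""
    return secrets.token_hex(8)


def outgoing_headers(incoming: dict) -> dict:
    """Build trace headers for a downstream call from the incoming request headers."""
    # Reuse the trace id if the caller sent one, otherwise start a new trace
    trace_id = incoming.get("X-B3-TraceId") or new_id()
    headers = {"X-B3-TraceId": trace_id, "X-B3-SpanId": new_id()}
    parent_span = incoming.get("X-B3-SpanId")
    if parent_span:
        headers["X-B3-ParentSpanId"] = parent_span
    return headers


first_hop = outgoing_headers({})          # request entering the system
second_hop = outgoing_headers(first_hop)  # call made by the next service
print(first_hop["X-B3-TraceId"] == second_hop["X-B3-TraceId"])  # True
```

Because every service forwards the same trace id, a collector can later stitch the individual spans into one request timeline.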

Think about integrating APM tools

APM stands for Application Performance Management. These tools are often external services that help you monitor the health of your whole system and diagnose potential problems. In most cases, you will need to integrate them with your applications. For example, you might need to run an agent in parallel with your app, which will report your app’s diagnostics to an external APM server in the background.

Additionally, you will have rich dashboards for visualizing your system’s state and its health. You have many ways to adjust and customize those dashboards according to your needs.

APM examples: New Relic, Dynatrace, AppDynamics

These tools are must-haves for a highly available distributed environment.

Remote debugging in Cloud Foundry

Every developer is familiar with the concept of debugging, but most of the time we are talking about local debugging, where you run the code on your own machine. Sometimes, you receive a report that something doesn’t behave the way it should in one of your testing environments. Of course, you could deploy that particular version to your local environment, but it is hard to simulate all aspects of the target environment. In this case, it might be best to debug the application where it is actually running. To perform a remote debug procedure on Cloud Foundry, see below:

Please note that you must have the same source code version opened in your IDE. This method is very useful for development or testing environments. However, it shouldn’t be used on production environments.

Summary of application troubleshooting in cloud native

To sum up, I hope that all the above will help you with application troubleshooting problems with microservices in a distributed cloud environment and that everything will indeed work as expected, will be easily scaled and the system will be both highly available and resilient.

Cloud-native applications: 5 things you should know before you start

Introduction

Building cloud-native applications has become a direction in IT that companies like Facebook, Netflix or Amazon have been following for years. The trend allows enterprises to develop and deploy apps more efficiently by means of cloud services and provides all sorts of run-time platform capabilities like scalability, performance and security. Thanks to this, developers can actually spend more time delivering functionality and speed up innovation. What leaves the competition further behind than introducing new features at a global scale according to customer needs? You either keep up with the pace of the changing world or you don’t. The aim of this article is to present and explain the top 5 elements of cloud-native applications.

Why do you need cloud-native applications in the first place?

It is safe to say that the world we live in has gone digital. We communicate on Facebook, watch movies on Netflix, store our documents on Google Drive and at least a certain percentage of us shop on Amazon. It shouldn’t be a surprise that business demands are on a constant rise when it comes to customer expectations. Enterprises need a high-performance IT organization to be on top of this crowded marketplace.

Throughout the last 20 years the world has witnessed an array of developments in technology as well as people & culture. All these improvements took place in order to start delivering software faster. Everything from automation and continuous integration & delivery to DevOps and microservice architecture patterns serves that purpose. But still, quite frequently teams have to wait for infrastructure to become available, which significantly slows down the delivery line.

Some try to automate infrastructure provisioning or make an attempt towards DevOps. However, if the delivery of the infrastructure relies on a team that works remotely and can’t keep up with your speed, automated infrastructure provisioning will not be of much help. The recent rise of cloud computing has shown that infrastructure can be made available at a nearly infinite scale. IT departments are able to deliver their own infrastructure just as fast as if they were doing their regular online shopping on Amazon. On top of that, cloud infrastructure is simply cost efficient, as it doesn’t need tons of capital investment in advance. It represents a transparent pay-as-you-go model. Which is exactly why this kind of infrastructure has won its popularity among startups or innovation departments where a solution that tries out new products quickly is a golden ticket. So, what IS there to know before you dive in?

The ingredients of cloud-native apps, and what makes them native?

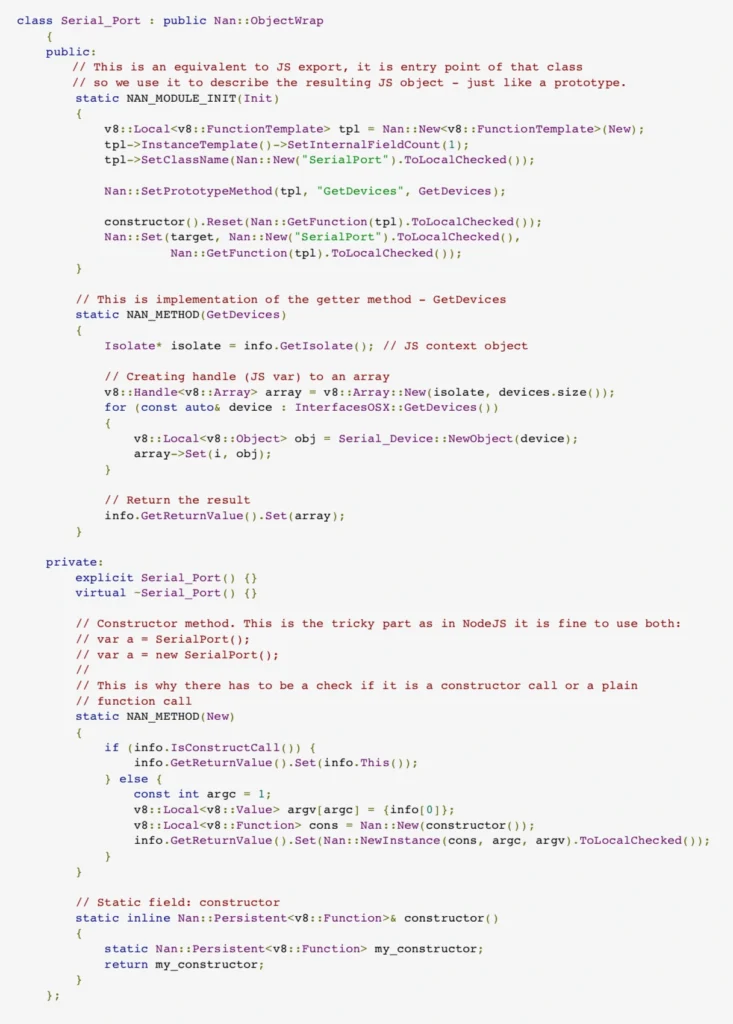

Now that we have explained the need for cloud-native apps, we can shed some light on the definition, especially because it doesn’t always go hand in hand with its popularity. Cloud-native apps are applications that have been built to live and run in the cloud. If you want to get a better understanding of what that means, I recommend reading on. There are 5 elements divided into 2 categories (excluding the application itself) that are essential for creating cloud-native environments.

Cloud platform automation

In other words, this is the natural habitat in which cloud-native applications live. It provides services that keep the application running and manage security and networking. As stated above, the flexibility of such a cloud platform and its cost-efficiency, thanks to the pay-as-you-go model, are perfect for enterprises that don’t want to pay through the nose for infrastructure from the very beginning.

Serverless functions

These are small, single-purpose functions that allow you to build asynchronous event-driven architectures by means of microservices. Don’t let the “serverless” part of their name mislead you, though. Your code WILL run on servers. The only difference is that it’s on the cloud provider’s side to handle spinning up instances to run your code and scaling out under load. There are plenty of serverless functions out there offered by cloud providers that can be used by cloud-native apps. Services like IoT, Big Data or data storage are built from open-source solutions. It means that you simply don’t have to take care of some complex platform, but can rather focus on the functionality itself without the pains of installation and configuration.

Microservices architecture pattern

This architecture pattern aims to provide self-contained software products, each implementing a single-purpose function that can be developed, tested and deployed separately to speed up the product’s time to market. This is exactly what cloud-native apps offer.

Once you get down to designing microservices, remember to do it in a way that lets them run cloud native. Most likely, one of the biggest challenges you will come across is the ability to run your app in a distributed environment across application instances. Luckily, there are the 12-factor app design principles, which you can follow with peace of mind. These principles will help you design microservices that can run cloud native.

DevOps culture

The journey with cloud-native applications comes not only with a change in technology, but also with a culture change. Following DevOps principles is essential for cloud-native apps. Getting the whole software delivery pipeline to work automatically will only be possible if development and operations teams cooperate closely. Development engineers are in charge of getting the application to run on the cloud platform, while operations engineers handle the development, operations and automation of that platform.

Cloud reliability engineering

And last but not least – cloud reliability engineering, which comes from Google’s Site Reliability Engineering (SRE) concept. It is based on approaching platform administration in the same way as software engineering. As stated by Ben Treynor, VP of Engineering at Google: “Fundamentally, it’s what happens when you ask a software engineer to design an operations function (…). So SRE is fundamentally doing work that has historically been done by an operations team, but using engineers with software expertise, and banking on the fact that these engineers are inherently both predisposed to, and have the ability to, substitute automation for human labor.”

Treynor also claims that the roles of many operations teams nowadays are similar, but the way SRE teams work is significantly different for several reasons. SRE people are software engineers with software development abilities and a proclivity for automation. They have enough knowledge about programming languages, data structures, algorithms and performance. The result? They can create software that is more effective.

Summary

Cloud-native applications are one of the reasons why big players such as Facebook or Amazon stay ahead of the competition. The article sums up the most important factors that comprise them. Of course, the process of building cloud-native apps goes far beyond choosing the tools. The importance of your team (People & Culture) can never be stressed enough. So what are the final thoughts? Advocate DevOps and follow Google’s Cloud Engineering principles to make your company efficient and your platform more reliable. You will be surprised at how fast cloud-native will help your organization become successful.

Accelerating data projects with parallel computing

Inspired by Petabyte Scale Solutions from CERN

The Large Hadron Collider (LHC) accelerator is the biggest device humankind has ever created. Handling the enormous amounts of data it produces has required one of the biggest computational infrastructures on Earth. However, it is quite easy to overwhelm even the best supercomputer with inefficient algorithms that do not correctly utilize the full power of the underlying, highly parallel hardware. In this article, I want to share insights born from my meeting with the CERN people, particularly how to validate and improve parallel computing in the data-driven world.

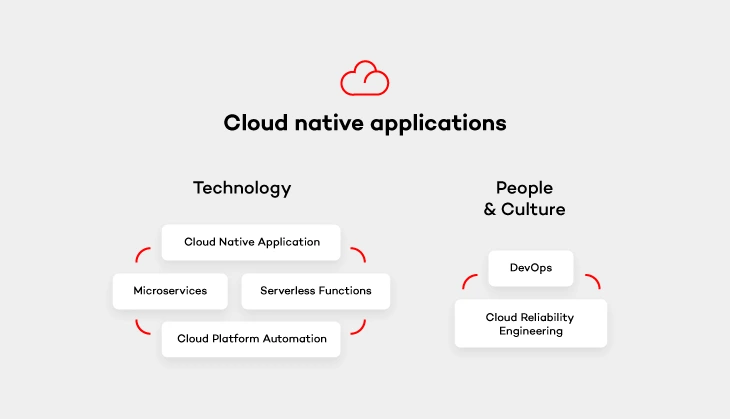

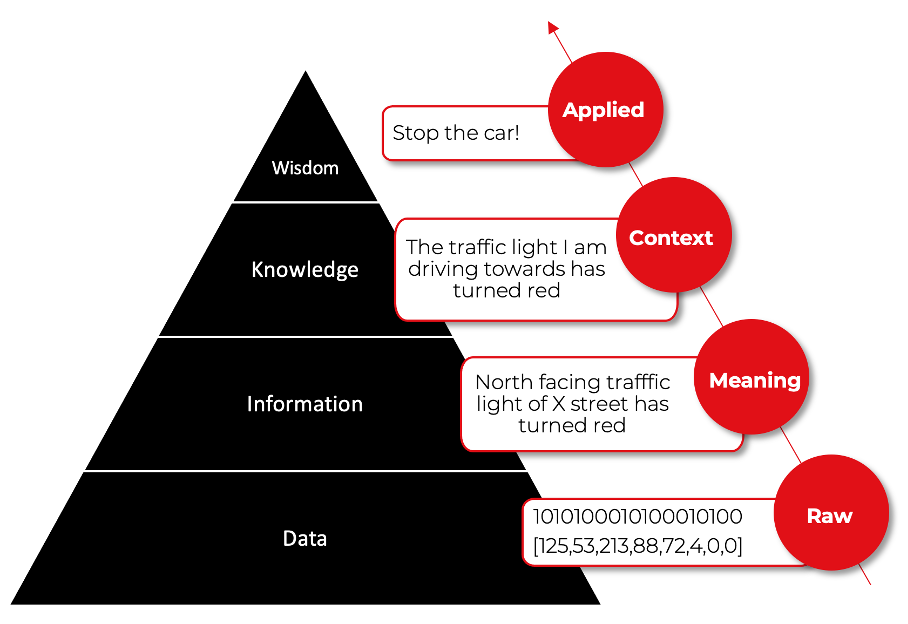

Struggling with data on the scale of megabytes (10^6 bytes) to gigabytes (10^9 bytes) is the bread and butter for data engineers, data scientists, or machine learning engineers. Moving forward, the terabyte (10^12 bytes) and petabyte (10^15 bytes) scale is becoming increasingly ordinary, and the chances of dealing with it in everyday data-related tasks keep growing. Although the claim "Moore's law is dead!" is quite a controversial one, the fact is that single-thread performance improvement has slowed down significantly since 2005. This is primarily due to the inability to increase the clock frequency indefinitely. The solution is parallelization, mainly by increasing the number of logical cores available in one processing unit.

Knowing this, the ability to properly parallelize computations is increasingly important.

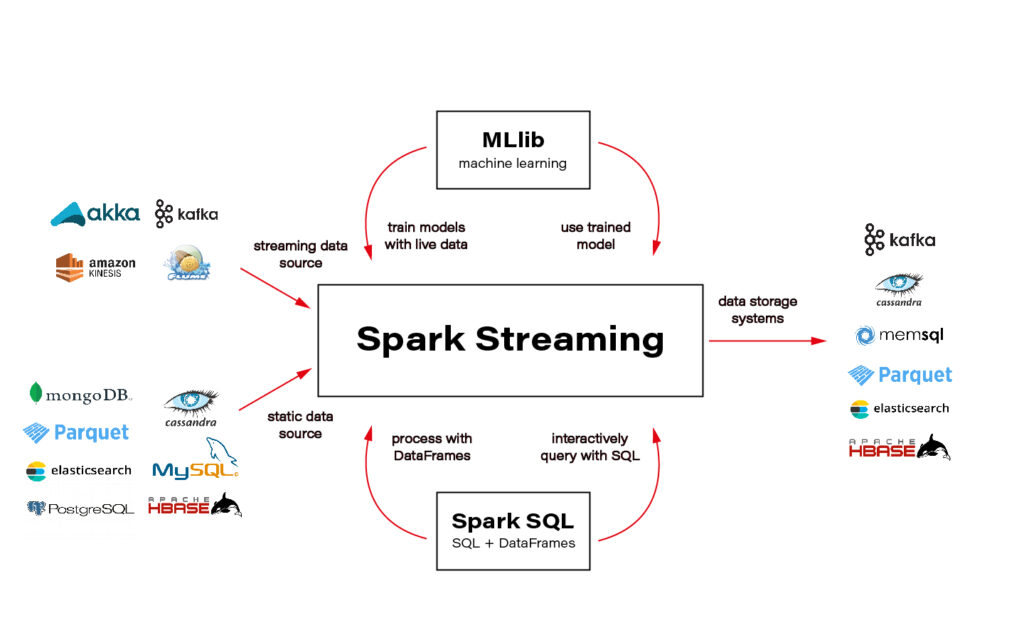

In a data-driven world, we have a lot of ready-to-use, very good solutions that do most of the parallel work for us on all possible levels and expose an easy-to-use API. For example, on a large scale, Spark or Metaflow are excellent tools for distributed computing; at the other end, NumPy enables Python users to do very efficient matrix operations on the CPU, something Python is not good at at all, by wrapping C, C++, and Fortran code in a friendly snake_case API. Do you think it is worth learning how it is done behind the scenes if you have packages that do all this for you? I honestly believe this knowledge can only help you use these tools more effectively and will allow you to work much faster and better in an unknown environment.

The LHC lies in a tunnel 27 kilometers (about 16.78 mi) in circumference, 175 meters (about 574.15 ft) under a small city built for that purpose on the France–Switzerland border. It has four main particle detectors that collect enormous amounts of data: ALICE, ATLAS, LHCb, and CMS. The LHCb detector alone collects about 40 TB of raw data every second. Many data points come in the form of images, since LHCb takes 41-megapixel photos every 25 ns. Such a huge amount of data must be compressed and filtered before permanent storage. From the initial 40 TB/s, only 10 GB/s are saved on disk – the compression ratio is 1:4000!
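The figures above can be checked with a few lines of integer arithmetic. This is only a back-of-the-envelope sketch that assumes decimal units and a perfectly steady data rate; the per-snapshot size is derived from the numbers quoted here and is not a figure published by CERN.

```python
TB = 10**12  # bytes, decimal units assumed
GB = 10**9

raw_rate = 40 * TB     # bytes per second coming out of the detector
stored_rate = 10 * GB  # bytes per second written to disk

reduction = raw_rate // stored_rate                    # 4000
snapshots_per_second = 10**9 // 25                     # one every 25 ns
bytes_per_snapshot = raw_rate // snapshots_per_second  # about 1 MB each

print(reduction, snapshots_per_second, bytes_per_snapshot)
# 4000 40000000 1000000
```

In other words, one snapshot every 25 ns means 40 million snapshots per second, each carrying roughly one megabyte of raw data.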

It was a surprise for me that about 90% of CPU usage in LHCb is spent on simulation. One may wonder why they simulate the detector. One of the reasons is that a particle detector is a complicated machine, and scientists at CERN use, e.g., Monte Carlo methods to understand the detector and its biases. Monte Carlo methods are well suited to massively parallel computing in physics.

Let us skip all the sophisticated techniques and algorithms used at CERN and focus on those aspects of parallel computing that are common regardless of the problem being solved. Let us divide the topic into four primary areas:
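Why Monte Carlo methods parallelize so well can be seen even in a toy problem that has nothing to do with detector physics. The sketch below estimates pi from random points; every chunk of samples is independent, so the chunks can be handed to separate workers and only the hit counts need to be combined. The function names, seeds and chunk sizes are illustrative assumptions.

```python
import random


def count_hits(task):
    """Count random points that fall inside the unit quarter circle."""
    seed, samples = task
    rng = random.Random(seed)  # independent generator for each chunk
    hits = 0
    for _ in range(samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            hits += 1
    return hits


def estimate_pi(chunks=4, samples_per_chunk=50_000, map_fn=map):
    """Estimate pi; map_fn can be the built-in map or any parallel map."""
    tasks = [(seed, samples_per_chunk) for seed in range(chunks)]
    total_hits = sum(map_fn(count_hits, tasks))
    return 4.0 * total_hits / (chunks * samples_per_chunk)


print(estimate_pi())
```

Passing a parallel map, for instance the `map` method of a `multiprocessing.Pool`, as `map_fn` would spread the chunks over several processes without changing the algorithm.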

- SIMD,

- multitasking and multiprocessing,

- GPGPU,

- and distributed computing.

The following sections will cover each of them in detail.

SIMD

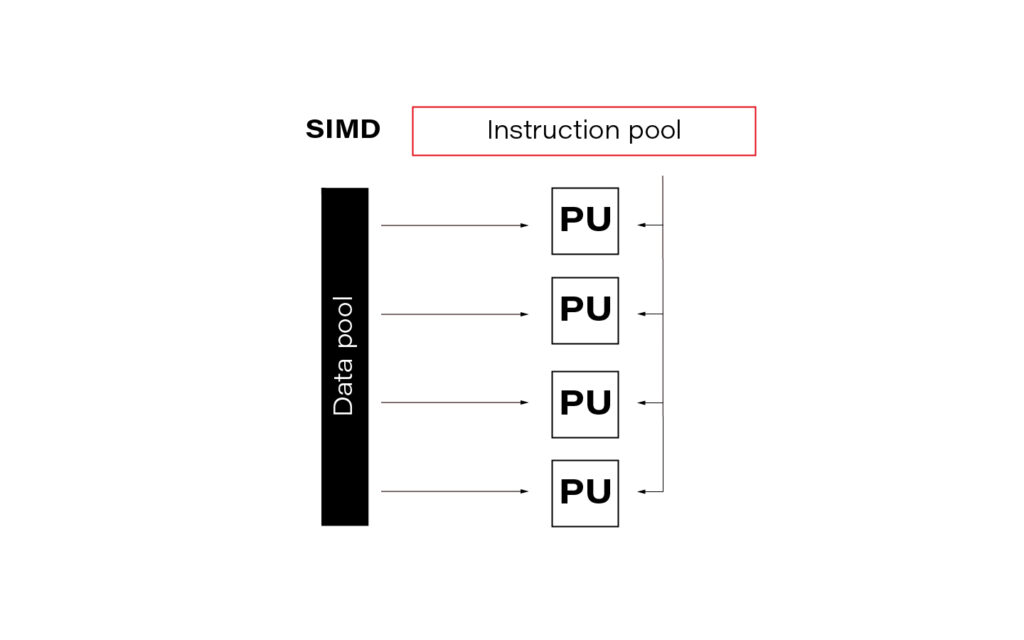

The acronym SIMD stands for Single Instruction Multiple Data and is a type of parallel processing in Flynn's taxonomy.

In the data science world, this is often called vectorization. In practice, it means simultaneously performing the same operation on multiple data points (usually represented as a matrix). Modern CPUs and GPGPUs often have dedicated instruction sets for SIMD; examples are SSE and MMX. SIMD vector size has significantly increased over time.

Publishers of the SIMD instruction sets often create language extensions (typically using C/C++) with intrinsic functions or special datatypes that guarantee vector code generation. A step further is abstracting them into a universal interface, e.g., std::experimental::simd from C++ standard library. LLVM's (Low Level Virtual Machine) libcxx implements it (at least partially), allowing languages based on LLVM (e.g., Julia, Rust) to use IR (Intermediate Representation – code language used internally for LLVM's purposes) code for implicit or explicit vectorization. For example, in Julia, you can, if you are determined enough, access LLVM IR using macro @code_llvm and check your code for potential automatic vectorization.

In general, there are two main ways to apply vectorization to the program:

- auto-vectorization handled by compilers,

- and rewriting algorithms and data structures.

For a dev team at CERN, the second option turned out to be better since auto-vectorization did not work as expected for them. One of the CERN software engineers claimed that "vectorization is a killer for the performance." They put a lot of effort into it, and it was worth it. It is worth noting here that in data teams at CERN, Python is the language of choice, while C++ is preferred for any performance-sensitive task.

How can you maximize the advantages of SIMD in everyday practice? It is difficult to answer; it depends, as always. Generally, the best approach is to be aware of this effect every time you run heavy computation. In modern languages like Julia, or with the best compilers like GCC, you can rely on auto-vectorization in many cases. In Python, the best bet is the second option, using dedicated libraries like NumPy. Here you can find some examples of how to do it.

Below you can find a simple benchmark showing clearly that vectorization is worth attention.

    import numpy as np
    from timeit import Timer

    # Use NumPy to create a large array of 10**6 random integers
    array = np.random.randint(1000, size=10**6)

    # Add 1 to every element with an explicit Python loop
    def add_forloop():
        new_array = [element + 1 for element in array]

    # Add 1 to every element with a vectorized NumPy operation (SIMD under the hood)
    def add_vectorized():
        new_array = array + 1

    # Measure the execution time of a single run of each function
    computation_time_forloop = Timer(add_forloop).timeit(1)
    computation_time_vectorized = Timer(add_vectorized).timeit(1)

    # Print the results in seconds
    print(computation_time_forloop)     # the original run reported 0.001202600
    print(computation_time_vectorized)  # the original run reported 0.000236700

Multitasking and Multiprocessing

Let us start with two confusing yet important terms which are common sources of misunderstanding:

- concurrency: one CPU, many tasks,

- parallelism: many CPUs, one task.

Multitasking is about executing multiple tasks concurrently on one CPU. A scheduler is a mechanism that decides what the CPU should focus on at each moment, giving the impression that multiple tasks are happening simultaneously. Schedulers can work in two modes:

- preemptive,

- and cooperative.

A preemptive scheduler can halt, run, and resume the execution of a task. This happens without the knowledge or agreement of the task being controlled.

On the other hand, a cooperative scheduler relies on the running tasks to yield control voluntarily, either explicitly or when they become idle or blocked, which lets multiple tasks make progress concurrently.

Switching context in cooperative multitasking can be cheap because parts of the context may remain on the stack and be stored on the higher levels in the memory hierarchy (e.g., L3 cache). Additionally, code can stay close to the CPU for as long as it needs without interruption.

On the other hand, the preemptive model is good when a controlled task behaves poorly and needs to be controlled externally. This may be especially useful when working with external libraries which are out of your control.
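The cooperative model can be illustrated with plain Python generators, where every `yield` is a voluntary hand-over of control. This is only a toy round-robin scheduler written for this explanation, not how `asyncio` is implemented internally.

```python
from collections import deque


def task(name, steps, log):
    """Do one unit of work per step, then yield control back to the scheduler."""
    for step in range(steps):
        log.append(f"{name}:{step}")
        yield  # voluntary yield point


def run_round_robin(tasks):
    """Resume the tasks in turn until all of them have finished."""
    queue = deque(tasks)
    while queue:
        current = queue.popleft()
        try:
            next(current)
            queue.append(current)  # not finished yet, back to the end of the queue
        except StopIteration:
            pass  # finished, drop it


log = []
run_round_robin([task("A", 2, log), task("B", 3, log)])
print(log)  # ['A:0', 'B:0', 'A:1', 'B:1', 'B:2']
```

Note that a task which never reaches a `yield` would starve all the others, which is exactly the weakness that preemptive scheduling addresses.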

Multiprocessing is the use of two or more CPUs within a single computer system. There are two types:

- Asymmetric - not all the processors are treated equally; only a master processor runs the tasks of the operating system.

- Symmetric - two or more processors are connected to a single, shared memory and have full access to all input and output devices.

I guess that symmetric multiprocessing is what many people intuitively understand as typical parallelism.

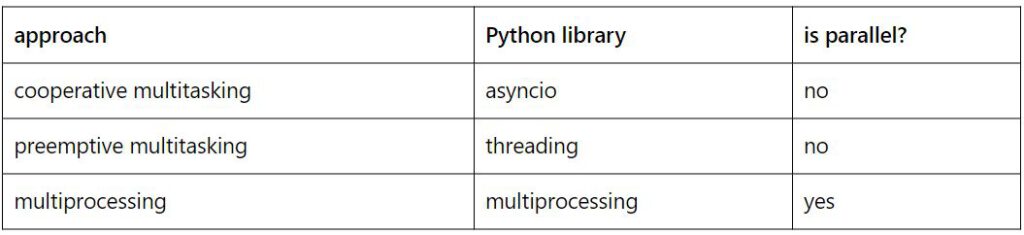

Below are some examples of how to do simple tasks using cooperative multitasking, preemptive multitasking, and multiprocessing in Python. The table below shows which library should be used for each purpose.

- Cooperative multitasking example:

      import asyncio
      import sys
      import time

      # Printing loop: yields control to the event loop while sleeping
      async def print_time():
          while True:
              print(f"hello again [{time.ctime()}]")
              await asyncio.sleep(5)

      # Stdin reader: called by the event loop when stdin has data
      def echo_input():
          print(input().upper())

      # Main function: register the stdin reader (Unix-like systems), then run the loop
      async def main():
          asyncio.get_running_loop().add_reader(sys.stdin, echo_input)
          await print_time()

      # Entry point
      asyncio.run(main())

Just type something and admire the uppercase response.

- Preemptive multitasking example:

      import threading
      import time

      # Printing loop
      def print_time():
          while True:
              print(f"hello again [{time.ctime()}]")
              time.sleep(5)

      # Stdin reader
      def echo_input():
          while True:
              message = input()
              print(message.upper())

      # Spawn threads
      threading.Thread(target=print_time).start()
      threading.Thread(target=echo_input).start()

The usage is the same as in the example above. However, the program may be less predictable due to the preemptive nature of the scheduler.

- Multiprocessing example:

      import time
      import sys
      from multiprocessing import Process

      # Printing loop
      def print_time():
          while True:
              print(f"hello again [{time.ctime()}]")
              time.sleep(5)

      # Stdin reader
      def echo_input():
          sys.stdin = open(0)  # reopen stdin inside the child process
          while True:
              message = input()
              print(message.upper())

      # Spawn processes (the guard is required when processes are spawned, e.g. on Windows and macOS)
      if __name__ == "__main__":
          Process(target=print_time).start()
          Process(target=echo_input).start()

Notice that we must reopen stdin in the echo_input process, because multiprocessing replaces the standard input of a child process with the null device by default.

In Python, it may be tempting to use multiprocessing any time you need to accelerate computations. But processes do not share memory by default, while threads and coroutines do. This is because each process has its own context and can run on a separate CPU, while threads and coroutines within one Python process effectively take turns on one CPU. The flip side is that code sharing state between threads must use synchronization primitives (e.g., mutexes or atomics), which complicates the source code. There is no clear winner here, only trade-offs to consider.

Although this is a complex topic, I will not cover it in detail, as data projects rarely work with these mechanisms directly. Usually, external libraries for data manipulation and data modeling encapsulate the appropriate code. However, I believe that being aware of these topics in contemporary software is particularly useful knowledge that can significantly accelerate your code in unconventional situations.
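As a minimal sketch of what a synchronization primitive looks like in practice, the example below protects a shared counter with a mutex from Python's `threading` module. The `SafeCounter` class and the numbers of workers and increments are illustrative assumptions.

```python
import threading


class SafeCounter:
    """A counter whose updates are serialized by a mutex."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment(self):
        with self._lock:  # only one thread at a time may update the value
            self.value += 1


def count_in_threads(workers=4, increments=10_000):
    """Increment one shared counter from several threads and return the result."""
    counter = SafeCounter()

    def work():
        for _ in range(increments):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(workers)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return counter.value


print(count_in_threads())  # 40000
```

The lock guarantees that no increment is lost, at the price of extra code and of threads occasionally waiting for each other.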

You may find other meanings of the terminology used here. After all, it is not so important what you call it but rather how to choose the right solution for the problem you are solving.

GPGPU

General-purpose computing on graphics processing units (GPGPU) utilizes shaders to perform massive parallel computations in applications traditionally handled by the central processing unit.

In 2006, Nvidia introduced Compute Unified Device Architecture (CUDA), which soon dominated the niche of accelerating machine learning models. CUDA is a computing platform that offers an API giving you direct access to the parallel computation elements of the GPU through the execution of compute kernels.

Returning to the LHCb detector, raw data is initially processed directly on CPUs operating on detectors to reduce network load. But the whole event may be processed on GPU if the CPU is busy. So, GPUs appear early in the data processing chain.

GPGPU's importance for data modeling and processing at CERN is still growing. The most popular machine learning models they use are decision trees (boosted or not, sometimes ensembled). Since deep learning models are harder to use, they are less popular at CERN, but their importance is still rising. However, I am quite sure that scientists worldwide who work with CERN's data use the full spectrum of machine learning models.

To accelerate machine learning training and prediction with GPGPU and CUDA, you need to create a computing kernel or leave that task to the libraries' creators and use a simple API instead. The choice, as always, depends on what goals you want to achieve.

For a typical machine learning task, you can use any machine learning framework that supports GPU acceleration; examples are TensorFlow, PyTorch, or cuML, whose API mirrors Sklearn's. Before you start accelerating your algorithms, ensure that the latest GPU driver and CUDA driver are installed on your computer and that the framework of choice is installed with an appropriate flag for GPU support. Once the initial setup is done, you may need to run a code snippet that switches computation from the CPU (typically the default) to the GPU. For instance, in the case of PyTorch, it may look like this:

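    import torch

    # Check whether a CUDA-capable GPU is visible to PyTorch
    print(torch.cuda.is_available())

    # Pick the GPU when CUDA is available, otherwise fall back to the CPU
    def get_default_device():
        if torch.cuda.is_available():
            return torch.device('cuda')
        else:
            return torch.device('cpu')

    device = get_default_device()
    print(device)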

Depending on the framework, at this point you may or may not be able to proceed as usual with your model. Some frameworks may require, e.g., an explicit transfer of the model to the GPU. In PyTorch, you can do it with the following code:

    # MobileNetV3 stands for a model class (a torch.nn.Module) defined or imported elsewhere
    net = MobileNetV3()
    net = net.cuda()  # move the model's parameters to the GPU

At this point, we usually should be able to run .fit(), .predict(), .eval(), or something similar. Looks simple, doesn't it?

Writing a computing kernel is much more challenging. However, there is nothing special about a computing kernel in this context; it is just a function that runs on the GPU.

Let's switch to Julia; it is a perfect language for learning GPU computing. You can read about why I prefer Julia for some machine learning projects here. Check this article if you need a brief introduction to the Julia programming language.

The data structures you use must have an appropriate memory layout to enable a performance boost. Computers love linear structures like vectors and matrices and hate pointers, e.g., in linked lists. So, the very first step to talking to your GPU is to present a data structure that it loves.

using CUDA

# Data structures for CPU
N = 2^20
x = fill(1.0f0, N) # a vector filled with 1.0
y = fill(2.0f0, N) # a vector filled with 2.0

# CPU parallel adder
function parallel_add!(y, x)
    Threads.@threads for i in eachindex(y, x)
        @inbounds y[i] += x[i]
    end
    return nothing
end

# Data structures for GPU
x_d = CUDA.fill(1.0f0, N) # a vector stored on the GPU filled with 1.0
y_d = CUDA.fill(2.0f0, N) # a vector stored on the GPU filled with 2.0

# GPU parallel adder
function gpu_add!(y, x)
    CUDA.@sync y .+= x
    return
end

GPU code in this example is about 4x faster than the parallel CPU version. Look how simple it is in Julia! To be honest, it is a kernel imitation on a very high level; a more real-life example may look like this:

function gpu_add_kernel!(y, x)
    index = (blockIdx().x - 1) * blockDim().x + threadIdx().x
    stride = gridDim().x * blockDim().x
    for i = index:stride:length(y)
        @inbounds y[i] += x[i]
    end
    return
end

The CUDA analogs of threadid and nthreads are called threadIdx and blockDim. GPUs run a limited number of threads on each streaming multiprocessor (SM). The recent NVIDIA RTX 6000 Ada Generation has 18,176 CUDA cores (streaming processors). Imagine how fast it can be, even compared to one of the best CPUs for multithreading, the AMD EPYC 7773X (128 independent threads). By the way, its 768 MB L3 cache (3D V-Cache technology) is amazing.
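The index arithmetic in the kernel above can be easier to follow when it is run on paper. Below is a minimal pure-Python sketch that simulates the grid-stride loop sequentially, one "thread" at a time. It is an illustration only and not GPU code: the function names and the tiny grid size are made up for the example, and the indices are 0-based, so the `- 1` needed in 1-based Julia disappears.

```python
def grid_stride_indices(block_idx, thread_idx, block_dim, grid_dim, n):
    """Return the 0-based element indices one simulated GPU thread would visit."""
    index = block_idx * block_dim + thread_idx  # first element owned by this thread
    stride = grid_dim * block_dim               # total number of threads in the grid
    return list(range(index, n, stride))


def simulate_gpu_add(y, x, block_dim=4, grid_dim=2):
    """Apply y[i] += x[i] thread by thread and count how often each element is touched."""
    visits = [0] * len(y)
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            for i in grid_stride_indices(block_idx, thread_idx, block_dim, grid_dim, len(y)):
                y[i] += x[i]
                visits[i] += 1
    return visits


x = [1.0] * 20
y = [2.0] * 20
visits = simulate_gpu_add(y, x)

# Thread 2 of block 1 starts at element 6 and jumps by 8 (2 blocks * 4 threads).
print(grid_stride_indices(block_idx=1, thread_idx=2, block_dim=4, grid_dim=2, n=20))  # [6, 14]
print(all(v == 1 for v in visits))  # True: every element is updated exactly once
```

The point of the stride is that the same kernel stays correct for any vector length, even when the vector has more elements than the grid has threads.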

Distributed Computing

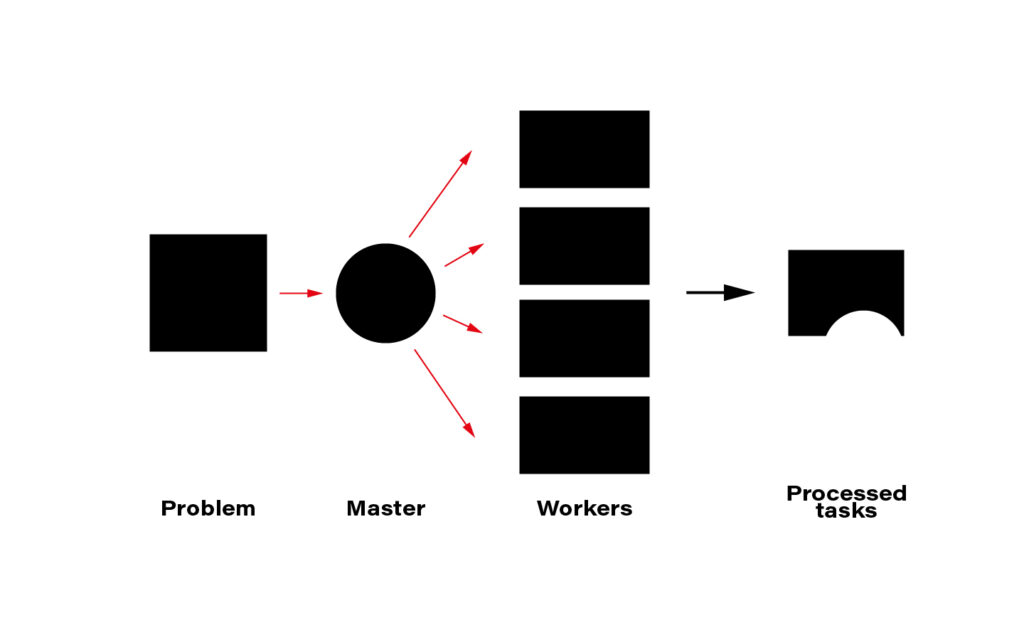

The term distributed computing, in simple words, means the interaction of computers in a network to achieve a common goal. The network elements communicate with each other by passing messages (welcome back, cooperative multitasking). Since every node in a network is usually at least a standalone virtual machine, and often separate hardware, computing may happen simultaneously. A master node can split the workload into independent pieces, send them to the workers, let them do their job, and concatenate the resulting pieces into the eventual answer.
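The split, send, and concatenate pattern described above can be sketched in a few lines of Python. This is only a local stand-in under loud assumptions: threads from the standard library play the role of worker nodes, there is no network or message passing involved, and the names `split`, `worker`, and `master` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data, n_chunks):
    """Cut `data` into at most `n_chunks` contiguous, independent pieces."""
    size = max(1, -(-len(data) // n_chunks))  # ceiling division, at least 1
    return [data[i:i + size] for i in range(0, len(data), size)]


def worker(chunk):
    """The job each worker does on its own piece (here: square every value)."""
    return [v * v for v in chunk]


def master(data, n_workers=4):
    """Split the workload, hand the pieces to the workers, concatenate the results."""
    chunks = split(data, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partial_results = pool.map(worker, chunks)  # results arrive in input order
        return [v for part in partial_results for v in part]


print(master(list(range(10))))  # [0, 1, 4, 9, 16, 25, 36, 49, 64, 81]
```

In a real cluster, the call that hands chunks to the pool is replaced by messages sent over the network, which is exactly where the cost discussed below comes from.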

The computer case is the symbolic border line between the methods presented above and distributed computing. The latter must rely on a network infrastructure to send messages between nodes, which is also a bottleneck. CERN uses thousands of kilometers of optical fiber to create a huge and super-fast network for that purpose. CERN's data center offers about 300,000 physical and hyper-threaded cores in a bare-metal-as-a-service model running on about seventeen thousand servers. A perfect environment for distributed computing.

Moreover, since most of the data CERN produces is public, LHC experiments are completely international: 1,400 scientists, 86 universities, and 18 countries together create a worldwide computing and storage grid. That enables scientists and companies to run distributed computing in many ways.

Although this is important, I will not cover distributed computing technologies and methods here. The topic is huge and very well covered on the internet. An excellent framework recommended and used by one of the CERN scientists is Spark with its Scala interface. You can solve almost every data-related task using Spark and execute the code in a cluster that distributes the computation across nodes for you.

One piece of advice, though: be aware of how much data you send to the cluster. Transferring big data can wipe out all the gains from distributing the calculations and cost you a lot of money.
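A rough back-of-the-envelope check can show when the transfer eats the benefit. The sketch below uses a deliberately simplistic model that is my assumption, not a measured result: the data is shipped once at a fixed bandwidth, the computation then scales perfectly with the number of workers, and latency, serialization, and returning the results are ignored. All names and numbers are hypothetical.

```python
def distributed_time(compute_s, n_workers, data_gb, bandwidth_gb_per_s):
    """Estimated wall time: ship the data once, then compute in parallel."""
    transfer_s = data_gb / bandwidth_gb_per_s
    return transfer_s + compute_s / n_workers


def worth_distributing(compute_s, n_workers, data_gb, bandwidth_gb_per_s):
    """True if the estimate beats running everything on a single machine."""
    return distributed_time(compute_s, n_workers, data_gb, bandwidth_gb_per_s) < compute_s


# A 600 s job on 10 workers over a 1 Gbit/s link (0.125 GB/s).
print(distributed_time(600, 10, 50, 0.125))     # 460.0 s: 400 s transfer + 60 s compute
print(worth_distributing(600, 10, 50, 0.125))   # True, but only barely
print(worth_distributing(600, 10, 100, 0.125))  # False: 800 s of transfer alone
```

Even in this optimistic model, doubling the data volume turns a modest gain into a loss, so it pays to move the computation to where the data already lives whenever possible.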

Another excellent tool for distributed computation in the cloud is Metaflow. I wrote two articles about Metaflow: an introduction and how to run a simple project. I encourage you to read them and try it.

Conclusions

CERN researchers have convinced me that wise parallelization is crucial to achieving complex goals in the contemporary Big Data world. I hope I managed to infect you with this belief. Happy coding!

Tips on mastering the art of learning new technologies and transitioning between them

Learning new technologies is always challenging, but it is also crucial for self-development and broadening professional skills in today's rapidly developing IT world.

The process may be split into two phases: learning the basics and increasing our expertise in areas where we already have the basic knowledge. The first phase is more challenging and demanding. It often involves some mind-shifting and requires us to build a new knowledge system for a domain we know nothing about yet. On the other hand, the second phase, often called "upskilling," is the most important as it gives us the ability to develop real-world projects.

To learn effectively, it's important to organize the process of acquiring knowledge properly. There are several key things to consider when planning day-to-day learning. Let's define the basic principles and key points of how to apprehend new programming technologies. First, we'll describe the structure of the learning process.

Structure of the process of learning new technologies

Set a global but concrete goal

A good goal helps you stay driven. It's important to keep motivation high, especially in the learning basics phase, where we acquire new knowledge, which is often tedious. The goal should be concrete, e.g., "Learn AWS to build more efficient infrastructure," "Learn Spring Boot to develop more robust web apps," or "Learn Kubernetes to work on modern and more interesting projects." Focusing on such goals gives us the feeling that we know precisely why we keep progressing. This approach is especially helpful when we work on a project while learning at the same time, and it helps keep us motivated.

Set a technical task

Practical skills are the whole point of learning new technologies. Training in a new programming language or theoretical framework is only a tool that lays the foundation for practical application.

The best way to learn new technology is by practicing it. For this purpose, it’s helpful to set up test projects. Imagine which real-world task fits the framework or language you’re learning and try to write test projects for such a task. It should be a simplified version of an actual hypothetical project.

Focus on the small, specific area that you are currently studying. Use it in the project, simplifying the rest of the setup or code. E.g., if you are learning a new database framework, you should design the entities, the code that runs queries, etc., precisely and with attention to detail. At the same time, do only the minimum for the rest of the app. E.g., application configuration may be a basic or out-of-the-box solution provided by the framework.

Use a local or in-memory database. Don't spend too much time on setup or writing complicated business logic code for the service or controller layer. This will allow you to focus on learning the subject you’re interested in. If possible, it's also a good idea to keep developing the test app by adding another functionality that utilizes new knowledge as you walk through the learning path (rather than writing another new test project from scratch for each topic).

Theoretical knowledge learning

Theoretical knowledge is the background for practical work with a framework or programming language. This means we should not neglect it. Some core theoretical knowledge is always required to use technology. This is especially important when we learn something completely new. We should first understand how to think in terms of the paradigms or rules of the technology we're learning. Do some mind-shifting to understand the technology and its key concepts.

You need to develop your understanding of the basis on which the technology is built to such a level that you will be able to associate new knowledge with that base and understand how it works in relation to it. For example, functional programming would be a completely different and unknown concept for someone who previously worked only using OOP. To start using functional programming, it's not enough to know what a function is; we have to understand the main concepts like lambda calculus, pure functions, higher-order functions, etc., at least at a basic level.

Create a detailed plan for learning new technologies

Since we learn step by step, we need to define how to decompose our learning path for technology into smaller chunks. Breaking down the topics into smaller pieces can make it easier to learn. Rather than "learn Spring Boot" or "learn DB access with Spring," we should follow more granular steps like "learn Spring IoC," "learn Spring AOP," and "learn JPA."

A more detailed plan can make the learning process easier. While it may be clear that the initial steps should cover the basics and become more advanced with each topic, determining the order in which to learn each topic can be tricky. A properly made learning plan guarantees that our knowledge will be well-structured, and all the important information will be covered. Having such a plan also helps track progress.

When planning, it is beneficial to utilize modern tools that can help visualize the structure of the learning path. Tools such as Trello, which organizes work into boards, task lists, and workspaces, can be especially helpful. These tools enable us to visualize learning paths and track progress as we learn.

Revisit previous topics

When learning about technologies, topics can often have complex dependencies, sometimes even cyclical. This means that to learn one topic, we may need to have some understanding of another. It sounds complicated, but don’t let it worry you – our brain can deal with way more complex things.

When we learn, we initially understand the first topic on a basic level, which allows us to move on to the next topics. However, as we continue to learn and acquire further knowledge, our understanding of the initial topic becomes deeper. Revisiting previous topics can help us achieve a deeper and better understanding of the technology.

Tips on learning strategies

Now, let's explore some helpful tips for learning new technologies. These suggestions are more general and aimed at making the learning process more efficient.

Overcome reluctance

Extending our thinking or adding new concepts to what we know requires much effort. So, it's natural for our brain to resist. When we learn something new, we may feel uncomfortable because we don't understand the whole technology, even in general, and we see how much knowledge there is to be acquired within it.

Here are some tips that may be useful to make the learning process easier and less tiring.

1) Don't try to learn as much as possible. Focus on the basics within each topic first. Then, learn the key things required for its practical application. As you study more advanced topics, revisit some of the previous ones. This allows you to get a better and deeper understanding of the material.

2) Try to find some interesting topic that you enjoy exploring at the moment. If you have multiple options, pick the one you like the most. Learning is much more efficient if we study something that interests us. While working on some feature or task in the current or past project, you were probably curious about how certain things worked. Or you just like the architectural approach or pattern to the specific task. Finding a similar topic or concept in your learning plan is the best way to utilize such curiosity. Even if it's a bit more advanced, your brain will show more enthusiasm for such material than for something that is simpler but seems dull.

3) Split the task into intermediate, smaller goals. Learning small steps rather than deep diving into large topics is easier. The feeling of having accomplished intermediate goals helps to retain motivation and move forward.

4) Track the progress. It's not just a formality. It helps you maintain your pace and keep track of timing. If some steps take more time, tracking progress will allow you to adjust the learning plan accordingly. Moreover, observing the progress provides a feeling of satisfaction about the intermediate achievements.

5) Ask questions, even about the basics. When you learn, there is no such thing as a stupid question. If you feel stuck with some step of the learning plan, searching for help from others will speed up the process.

Learn new technologies systematically and frequently