How to manage fire trucks – IoT architecture with isolated applications and centralized management system

Welcome to a short series of articles that shows how to combine networking techniques and AWS services in a mission-critical automotive system.

We’ll show you how to design and implement an IoT system with a complex edge architecture.

The series consists of three articles covering the architecture design, a step-by-step implementation guide, and some pitfalls along with ways to overcome them.

Let’s start!

AWS IoT usage to manage vehicle fleet

Let’s create an application. But this won’t be yet another typical CRUD-based e-commerce system. This time, we’d like to build an IoT-based, fleet-wide system with distributed (on-edge/in-cloud) computing.

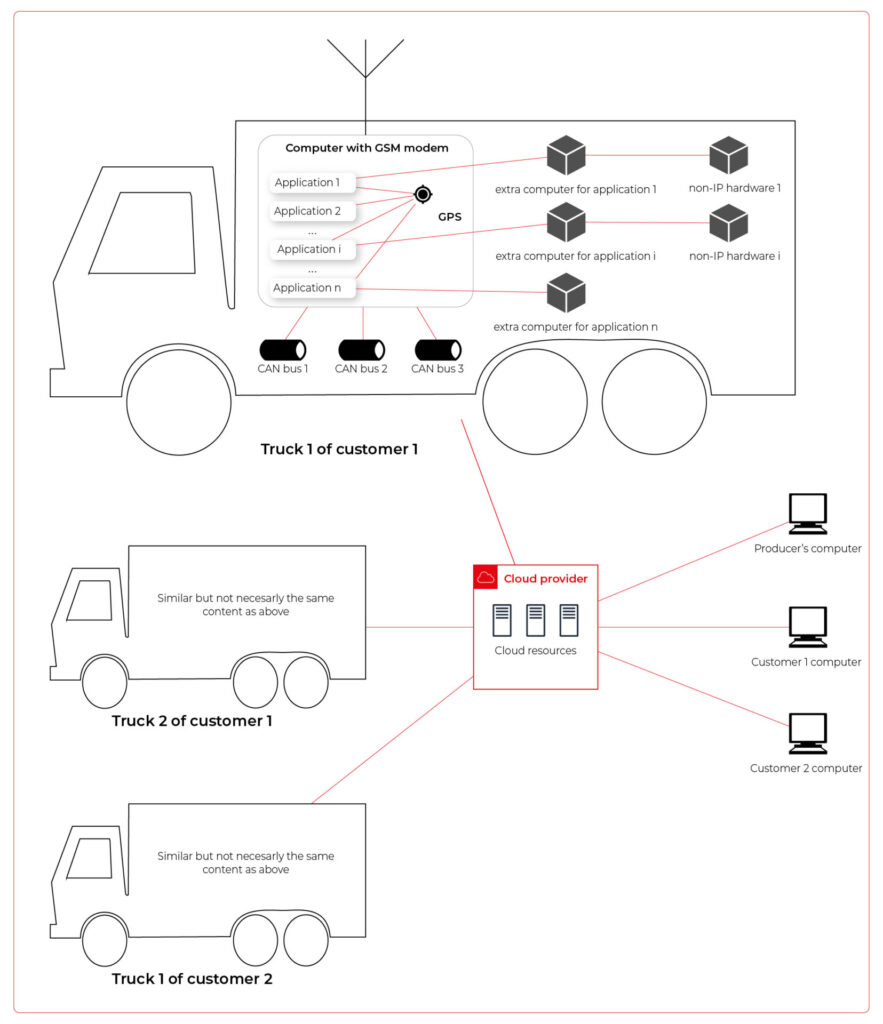

Our customer is an automotive company that produces fire trucks. We’re not interested in engine power, mechanical systems, and firefighters' equipment. We’re hired to manage the fleet of vehicles for both the producer and its customers.

Each truck is controlled by a central, “rule-them-all” computer connected to all of the vehicle’s CAN buses and to all the extra firefighting equipment. The computer sends basic vehicle data (fuel level, tire pressure, etc.) to the fire station and a central emergency service supervisor. It receives new orders, calculates the best route to targets, and controls all the vehicle equipment: pumps, lights, signals, and, of course, the ladder. It also sends some telemetry and usage statistics to the producer to help design even better trucks in the future.

However, those trucks are not the same. For instance, in certain regions, the cabin must be airtight, so extra sensors are used. Some cities integrate emergency vehicles with city traffic light systems to clear the route for a running truck. Some stations require specialized equipment like winches, extra lights, power generators, crew management systems, etc.

Moreover, we need to consider that those trucks often operate in unpleasant conditions, with a limited and unreliable Internet connection available.

Of course, the customer would like to have a cloud-based server to manage everything both for the producer and end users - to collect logs and metrics with low latency, to send commands with no delay, and with a colorful, web-based, easy-to-use GUI.

Does it sound challenging? Let's break it down!

Requirements

Based on a half-an-hour session with the customer, we've collected the following, a bit chaotic, set of business requirements:

- a star-like topology system, with a cloud in the center and trucks around it,

- groups of trucks are owned by customers - legal entities that should have access only to their trucks,

- each group that belongs to a customer may be customized by adding extra components, either hardware- or software-based,

- each truck is controlled by identical, custom, Linux-based computers running multiple applications provided by the customer or third parties,

- truck-controlling computers are small, ARM-based machines with limited hardware and direct Internet access via GSM,

- the Internet connection is usually limited, expensive, and unreliable,

- the main computer should host common services, like GPS or time service,

- some applications are built of multiple components (software and hardware-based) - hardware components communicate with the main computers via the in-vehicle IP network,

- the applications must communicate with their servers over the Internet, and we need to control (filter/whitelist) this traffic,

- each main computer is a router for the vehicle network,

- each application should be isolated to minimize a potential attack scope,

- components in trucks may be updated by adding new software or hardware components, even after leaving the production line,

- the cloud application should be easy - read-only dashboards, truck data dump, send order, both-way emergency messages broadcast,

- new trucks can be added to the system every day,

- class-leading security is required - user and privileges management, encrypted and signed communication, operations tracking, etc.

- provisioning new vehicles to the system should be as simple as possible to enable the factory workers to do it.

As we’ve learned so far, the basic architecture is as shown in the diagram below.

Our job is to propose a detailed architecture and prove the concept. Then, we’ll need a GPT-based instrument bench of developers to hammer it down.

The proposed architecture

There are two obvious parts of the architecture - the cloud one and the truck one. The cloud one is easy and mostly out-of-scope for the article. We need some frontend, some backend, and some database (well, as usual). In the trucks, we need to separate applications working on the same machine and then isolate traffic for each application. It sounds like containers and virtual networks. Before diving into each part, we need to solve the main issue - how to communicate between trucks and the cloud.

Selecting the technology

The star-like architecture of the system seems to be a very typical one - there is a server in the center with multiple clients using its services. However, in this situation, we can't distinguish between resources/services supplier (the server) and resources/services consumers (the clients). Instead, we need to consider the system as a complex, distributed structure with multiple working nodes, central management, and 3rd party integration. Due to the isolation, trucks’ main computers should containerize running applications. We could use Kubernetes clusters in trucks and another one in the cloud, but in that case, we need to implement everything manually – new truck onboarding, management at scale, resource limiting for applications, secured communication channels, and OTA updates. In the cloud, we would need to manage the cluster and pods, running even when there is no traffic.

An alternative way is the IoT. Well, as revealed in the title, this is the way that we have chosen. IoT provides a lot of services out-of-the-box - the communication channel, permissions management, OTA updates, components management, logs, metrics, and much more. Therefore, the main argument for using it was speeding up the deployment process.

However, we need to keep in mind that IoT architecture is not designed to be used with complex edge devices. This is our challenge, but fortunately, we are happy to solve it.

Selecting the cloud provider

The customer would like to use a leading provider, which reduces the choice to the top three in the world: AWS, MS Azure, and GCP.

The GCP IoT Core is the least advanced solution. It misses a lot of concepts and services available in the competitors, like a digital twin creation mechanism, complex permissions management, security evaluation, or a complex provisioning mechanism.

The Azure IoT is much more complex and powerful. However, it suffers from shortcomings in documentation, and, most importantly, some features are restricted to Microsoft instruments only (C#, Visual Studio, or PowerShell). It does provide seamless AI tool integration, but that is not relevant to our case for now.

But the last one – AWS IoT – fits all requirements and provides all the services needed. Two MQTT brokers are available, plenty of useful components (logs forwarding, direct tunnel for SSH access, complex permission management), and almost no limitation for IoT Core client devices. There is much more from AWS Greengrass - an extended version with higher requirements (vanilla C is not enough), but we can easily fulfill those requirements with our ARM-based trucks’ computers.

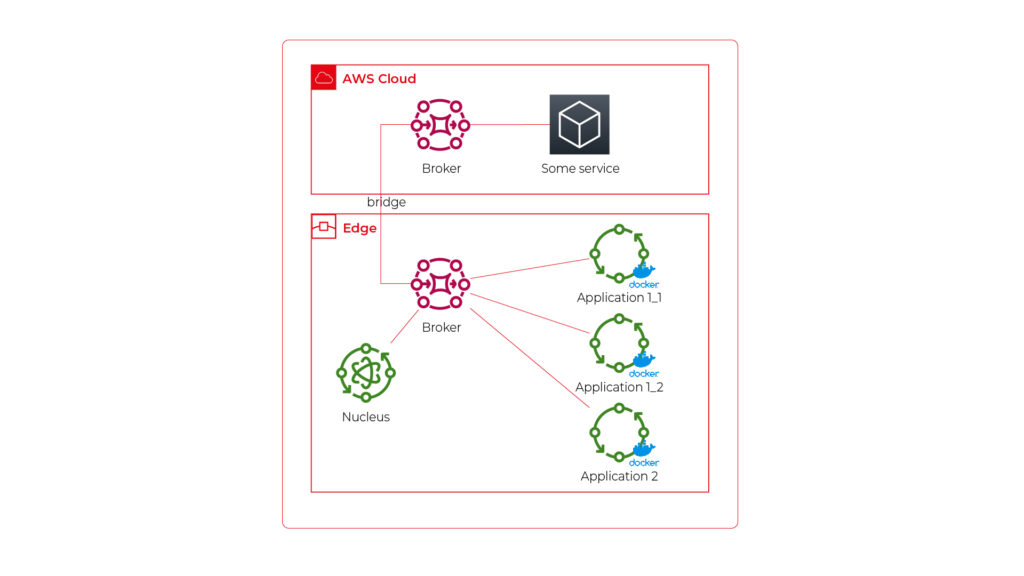

The basic architecture

Going back to the star-like topology, the most important part is the communication between multiple edge devices and the core. AWS IoT provides MQTT to enable a TCP-based, failure-resistant communication channel with a buffer that seamlessly preserves messages when the connection is lost. The concept offers two MQTT brokers (in the cloud and on the edge) connected via a secured bridge. This way, we can use MQTT as the main communication mechanism on the edge and decide which topics should be bridged and transferred to the cloud. We can also manage permissions for each topic on both sides as needed.
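
To make the "which topics should be bridged" decision more concrete, here is a minimal sketch in Python. It assumes the bridge is configured with a list of MQTT topic filters using the standard `+` (single-level) and `#` (multi-level) wildcards. The topic names and the helper names (`topic_matches`, `should_bridge`, `BRIDGED_TO_CLOUD`) are hypothetical and serve only as an illustration; they are not part of AWS IoT.

```python
from typing import List

# Hypothetical filters: topics matching any of these would be forwarded to the cloud.
BRIDGED_TO_CLOUD: List[str] = ["fleet/telemetry/#", "fleet/+/emergency"]


def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic matches a filter with + and # wildcards."""
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            # Multi-level wildcard: valid only as the last level, matches the rest.
            return i == len(filter_levels) - 1
        if i >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[i]:
            return False
    return len(filter_levels) == len(topic_levels)


def should_bridge(topic: str) -> bool:
    """Decide whether a locally published message should be sent to the cloud."""
    return any(topic_matches(f, topic) for f in BRIDGED_TO_CLOUD)
```

With these assumed filters, a message on `fleet/telemetry/fuel` would cross the bridge, while application-internal traffic such as `app1/internal/state` would stay on the edge and never consume the limited Internet connection.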

The cloud part is easy – we can synchronize the IoT MQTT broker with another messaging system (SNS/SQS, Kafka, whatever you like) or read/write it directly from our applications.

The edge part is much more complex. To begin with, let’s assume that there are two applications running as executable programs on the edge. Each of them uses its own certificate to connect to the edge broker, so we can distinguish between them and manage their permissions. This raises some basic questions: how to provide certificates and how to ensure that one application won’t steal credentials from another. Fortunately, AWS IoT Greengrass supplies a way to run components as Docker containers: it creates and provides certificates and uses IPC (inter-process communication) to allow containers to use the broker. Docker ensures isolation with low overhead, so each application is not aware of the other one. For details, see “Run a Docker container” in the official AWS IoT Greengrass documentation.

Please note the only requirement for the applications, which is, in fact, the requirement we make to applications’ providers: we need docker images with applications that use AWS IoT SDK for communication.

See the initial architecture in the picture below.

As you can see, Application 1 contains two programs (separate docker containers) communicating with each other via the broker: Application 1_1 and Application 1_2. Thanks to the privileges management, we are sure that Application 2 can’t impact or read this communication. If required, we can also configure a common topic accessible by both applications.
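
The privilege separation described above can be pictured with a small Python sketch. It is only a model of the idea, not the way AWS IoT evaluates policies: the client identifiers, topic prefixes, and the `is_allowed` helper are all assumptions made for this example.

```python
# Hypothetical access list: each client may use only the listed topic prefixes.
ACL = {
    "application1_1": ("app1/", "common/"),
    "application1_2": ("app1/", "common/"),
    "application2": ("app2/", "common/"),
}


def is_allowed(client_id: str, topic: str) -> bool:
    """Return True if the client may publish or subscribe to the topic."""
    prefixes = ACL.get(client_id, ())
    # str.startswith accepts a tuple; an empty tuple never matches.
    return topic.startswith(prefixes)
```

In this model, both containers of Application 1 can talk over `app1/...`, Application 2 is rejected on those topics, and everybody can use the shared `common/...` prefix.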

Please also note that there is one more component – Nucleus. You can consider it as an orchestrator required by AWS IoT to rule the system.

Of course, we can connect thousands of similar edges to the same cloud, but we are not going to show it on pictures for readability reasons. AWS IoT provides deployment groups with versioning for OTA updates based on typical AWS SDK. Therefore, we can expose a user-friendly management system (for our client and end users) to manage applications running on edge at scale.

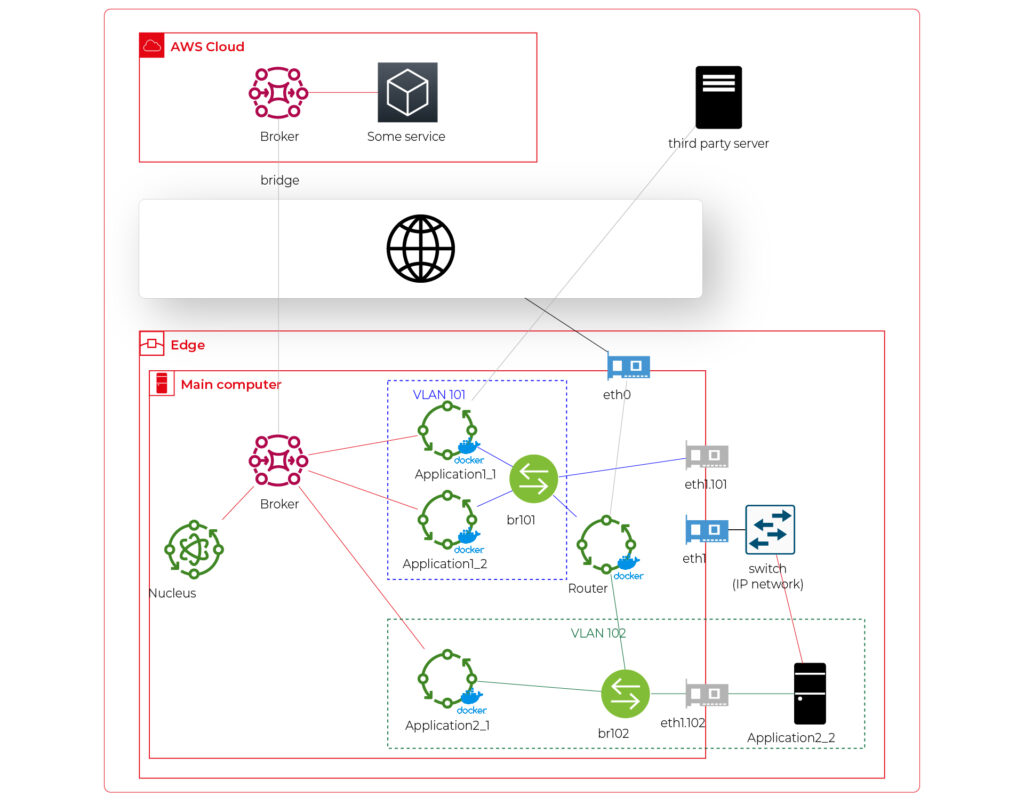

Virtual networks

Now, let’s challenge the architecture with a more complex scenario. Let’s assume that Application 2 communicates with an in-cabin air quality sensor – a separate computer that is in the same IP network. We can assume the sensor is a part of Application 2, and our aim is to enable such communication but also to hide it from Application 1. Let’s add some VLANs and utilize network interfaces.

Starting from the physical infrastructure, the main computer uses two interfaces – eth0 to connect to the Internet and eth1 connected to a physical, managed switch (the “in-vehicle IP network” mentioned above). The Application 2_2 computer (the air quality sensor) is connected to the switch to a port tagged as VLAN 102, and the switch is connected to eth1 via a trunk port.

The eth0 interface is used by the main computer (host) to communicate with the Internet, so the main MQTT bridging is realized via this interface. On the other hand, there is also a new Greengrass-docker component called router. It’s connected to eth0 and to two virtual bridges – br101 and br102. Those bridges are not the same as the MQTT bridge. This time, we need to use the kernel-based Linux feature “bridge,” which is a logical, virtual network hub. Those bridges are connected to virtual network interfaces eth1.101 and eth1.102 and to applications’ containers.

This way, Application 1 uses its own VLAN 101 (100% virtual), and Application 2 uses its own VLAN 102 (holding both virtual and physical nodes). The application separation is still ensured, and there is no logical difference between virtual and mixed VLANs. Applications running inside VLANs can’t distinguish between physical and virtual nodes, and all IP network features (like UDP broadcasting and multicasting) are allowed. Note that nodes belonging to the same application can communicate omitting the MQTT (which is fine because the MQTT may be a bottleneck for the system).
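
As an illustration of the interface layout, the sketch below generates the `ip link` commands (from the standard Linux iproute2 tools) that would create a VLAN sub-interface on `eth1` and attach it to a kernel bridge. The function name `vlan_bridge_commands` is hypothetical, and the code only builds the command strings; actually running them would require root privileges on the target machine and is not shown here.

```python
from typing import List


def vlan_bridge_commands(parent: str, vlan_id: int) -> List[str]:
    """Build iproute2 commands for one VLAN sub-interface and its bridge."""
    sub_if = f"{parent}.{vlan_id}"  # e.g. eth1.102
    bridge = f"br{vlan_id}"         # e.g. br102
    return [
        # Tagged sub-interface on top of the trunk port.
        f"ip link add link {parent} name {sub_if} type vlan id {vlan_id}",
        # Kernel bridge acting as the virtual hub for this application.
        f"ip link add name {bridge} type bridge",
        # Attach the VLAN sub-interface to the bridge.
        f"ip link set {sub_if} master {bridge}",
        f"ip link set {sub_if} up",
        f"ip link set {bridge} up",
    ]


if __name__ == "__main__":
    for vlan in (101, 102):
        print("\n".join(vlan_bridge_commands("eth1", vlan)))
```

The application containers and the router container would then be attached to `br101` or `br102`, which is a separate step that depends on the container runtime configuration.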

Moreover, there is a single security-configuration point for all applications. The router container is the main gateway for all virtual and physical application nodes, so we can configure a firewall on it or enable restricted routes between specific nodes of different applications if needed. This way, we can enable applications to communicate with third-party servers over the Internet (see Application 1_1 in the picture), to communicate with individual nodes of the applications without restrictions, and to control the entire application-related traffic in a single place. And this place, the router, is just another Greengrass component, ready to be redeployed as a part of the OTA update. Also, the router is a good candidate to serve traffic targeting all networks (and all applications), e.g., to broadcast GPS position via UDP or to act as the network time server.
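
The whitelist idea can be sketched as a simple lookup: for each VLAN, the router knows which destinations are permitted. The snippet below is a model of that decision in Python, not a firewall implementation. The table `EGRESS_WHITELIST`, the function `egress_allowed`, and the address (taken from the 203.0.113.0/24 documentation range) are assumptions for the example.

```python
import ipaddress
from typing import Dict, List, Tuple

# Hypothetical rules: VLAN id -> list of (allowed destination network, port).
EGRESS_WHITELIST: Dict[int, List[Tuple[str, int]]] = {
    101: [("203.0.113.10/32", 8883)],  # Application 1 may reach its own server
    102: [],                           # Application 2 has no Internet access
}


def egress_allowed(vlan_id: int, dst_ip: str, dst_port: int) -> bool:
    """Return True if traffic from the VLAN to dst_ip:dst_port is whitelisted."""
    addr = ipaddress.ip_address(dst_ip)
    for cidr, port in EGRESS_WHITELIST.get(vlan_id, []):
        if addr in ipaddress.ip_network(cidr) and dst_port == port:
            return True
    # Default deny: unknown VLANs and unlisted destinations are blocked.
    return False
```

In a real deployment, such a table would more likely be translated into firewall rules on the router container, but the default-deny principle stays the same.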

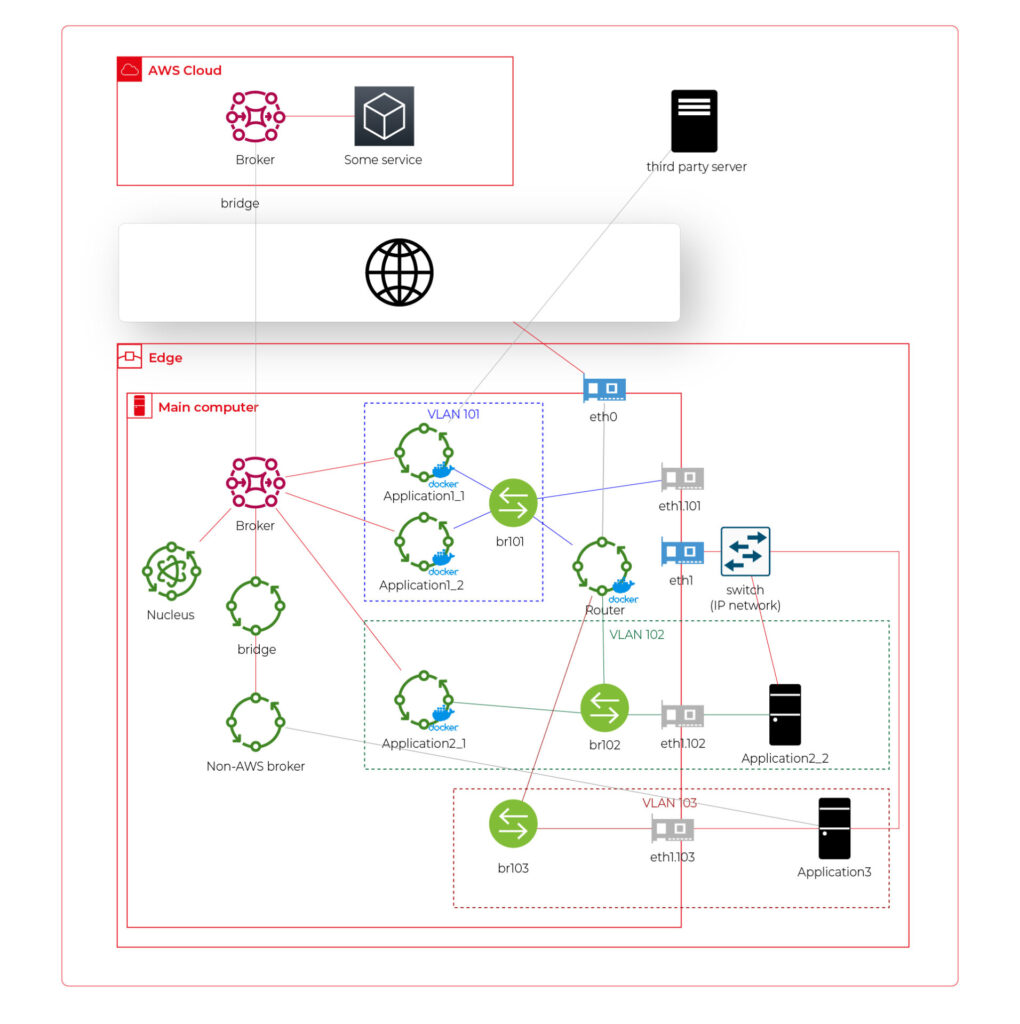

One more broker

What if… the application is provided as a physical machine only?

Well, as the main communication channel is MQTT, and the direct edge-to-Internet connection is available but limited, we would like to enable a physical application to use the MQTT. MQTT is a general standard for many integrated systems (small computers with limited purposes), but our edge MQTT broker is AWS-protected, so there are two options available. We can force the application supplier to be AWS-Greengrass compatible, or we need another broker. As we’re pacifists and we can’t stand forcing anybody to do anything, let’s add one more broker and one more bridge.

This time, there are two new components. The first one, an MQTT broker (Mosquitto or similar), interacts with Application 3. As we can’t configure the Mosquitto to act as a bridge for the AWS-managed broker, there is one more, custom application running on the server for this purpose only – a Greengrass component called “bridge”. This application connects to both local MQTT brokers and routes specific messages between them, as configured. Please note that Application 3 is connected to its own VLAN even if there are no virtual nodes. The reason is – there are no virtual nodes yet, but we’d like to keep the system future-proof and consistent. This way, we keep the virtual router as a network gateway for Application 3, too. Nevertheless, the non-AWS broker can listen to specific virtual interfaces, including eth1.103 in this case, so we can enable it for specific VLANs (application) if needed.
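
The routing logic of such a “bridge” component can be sketched as a small table of rules. The example below is a simplified model in Python that leaves out the actual MQTT client connections; the broker labels (`mosquitto`, `greengrass`), the topic prefixes, and the `route` helper are hypothetical.

```python
from typing import List, Optional, Tuple

# Hypothetical rules: (source broker, source prefix, target broker, target prefix).
ROUTES: List[Tuple[str, str, str, str]] = [
    ("mosquitto", "app3/out/", "greengrass", "bridged/app3/"),
    ("greengrass", "orders/app3/", "mosquitto", "app3/in/"),
]


def route(source: str, topic: str) -> Optional[Tuple[str, str]]:
    """Return (target broker, target topic) or None if the message stays local."""
    for src, src_prefix, dst, dst_prefix in ROUTES:
        if source == src and topic.startswith(src_prefix):
            # Rewrite the prefix so forwarded messages cannot loop back.
            return dst, dst_prefix + topic[len(src_prefix):]
    return None
```

Rewriting the prefix on the way through is a deliberate choice in this sketch: a message that was already forwarded does not match any rule on the other side, so it cannot bounce between the two brokers.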

Summary

The article shows how to combine AWS IoT, Docker, and virtual networks to achieve a future-proof fleet management system with hardware- and software-based applications at scale. We can use AWS tools to deliver new applications to edge devices and manage groups reflecting truck owners or truck models. Each vehicle can be equipped with an ARM computer that uses AWS-native fleet provisioning on OS initialization to join the system. The proposed structure may seem to be complex, but you need to configure it only once to fulfill all requirements specified by the client.

However, theory is sometimes easier than practice, so we encourage you to read the following article with implementation details.

Predictive maintenance in automotive manufacturing

Our initial article on predictive maintenance covered the definition of such a system, its construction, and the key implementation challenges. In this part, we'll delve into how PdM technology is transforming different facets of the automotive industry and its advantages for OEMs, insurers, car rental companies, and vehicle owners.

Best predictive maintenance techniques and where you can use them

In the first part of the article, we discussed the importance of sensors in a PdM system. These sensors are responsible for collecting data from machines and vehicles, and they can measure various variables like temperature, vibration, pressure, or noise. Placing these sensors properly on the machines and connecting them to IoT solutions enables the transfer of data to the system's central repository. After processing the data, we obtain information about specific machines or their parts that are prone to damage or downtime.

The automotive industry can benefit greatly from implementing these top predictive maintenance techniques.

Vibration analysis

How does it work?

Machinery used in the automotive industry and car components have a specific frequency of vibration. Deviations from this standard pattern can indicate "fatigue" of the material or interference from a third-party component that may affect the machine's operation. The PdM system enables you to detect these anomalies and alert the machine user before a failure occurs.
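
A very simplified way to picture this kind of anomaly detection is shown below. Note that the sketch swaps full frequency analysis for a plainer technique: it compares the RMS amplitude of the current vibration window with a baseline collected during healthy operation, using a z-score. The function names, the threshold of 3.0, and the sample values are assumptions for illustration only.

```python
import math
import statistics
from typing import Sequence


def rms(samples: Sequence[float]) -> float:
    """Root mean square amplitude of one window of vibration samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def is_anomalous(baseline_rms: Sequence[float], current_rms: float,
                 threshold: float = 3.0) -> bool:
    """Flag the current window if it deviates strongly from the baseline."""
    mean = statistics.mean(baseline_rms)
    stdev = statistics.stdev(baseline_rms)  # needs at least two baseline values
    if stdev == 0:
        return current_rms != mean
    return abs(current_rms - mean) / stdev > threshold


if __name__ == "__main__":
    healthy = [1.0, 1.1, 0.9, 1.05, 0.95]  # made-up baseline RMS values
    print(is_anomalous(healthy, 1.02))     # within the normal spread
    print(is_anomalous(healthy, 2.0))      # far outside the normal spread
```

A production PdM system would typically work on frequency spectra and trained models rather than a single threshold, but the principle of comparing current behavior with a known-good pattern is the same.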

What can be detected?

The technique is mainly applied to high-speed rotating equipment. Vibration and oscillation analysis can detect issues such as bent shafts, loose mechanical components, engine problems, misalignment, and worn bearings or shafts.

Infrared thermography analysis

How does it work?

The technique involves using infrared cameras to detect thermal anomalies. This technology can identify malfunctioning electrical circuits, sensors or components that are emitting excessive heat due to overheating or operating at increased speeds. With this advanced technology, it's possible to anticipate and prevent such faults, and even create heat maps that can be used in predictive models and maintenance of heating systems.

What can be detected?

Infrared analysis is a versatile and non-invasive method that can be used on a wide scale. It is suitable for individual components, parts, and entire industrial facilities, and can detect rust, delamination, wear, or heat loss on various types of equipment.

Acoustic analysis monitoring

How does it work?

Machines produce sound waves while operating, and these waves can indicate equipment failure or an approaching critical point. The amplitude and character of these waves are specific to each machine. Even if the sound is too quiet for humans to hear in the initial phase of malfunction, sensors can detect abnormalities and predict when a failure is likely to occur.

What can be detected?

This PdM technology is relatively cheap compared to others, but it does have some limitations in terms of usage. It is widely used in the oil and gas industry to detect gas and liquid leaks. In the automotive industry, it is commonly used for detecting vacuum leaks, unwanted friction, and stress on machine parts.

Motor circuit analysis

How does it work?

The technique works through electrical signature analysis (ESA). It involves measuring the supply voltage and operating current of an electric motor, which makes it possible to locate and identify problems related to the operation of the motor's components.

What can be detected?

Motor circuit analysis is a powerful tool that helps identify issues related to various components, such as bearings, rotor, clutch, stator winding, or system load irregularities. The main advantage of this technique is its short testing time and convenience for the operator, as it can be carried out in just two minutes while the machine is running.

PdM oil analysis

How does it work?

An effective method for predictive maintenance is to analyze oil samples from equipment without causing any damage. By analyzing the viscosity and size of the sample, along with detecting the presence or absence of foreign substances such as water, metals, acids or bases, we can obtain valuable information about mechanical damage, erosion or overheating of specific parts.

What can be detected?

Detecting anomalies early is crucial for hydraulic systems that consist of rotating and lubricating parts, such as pistons in a vehicle engine. By identifying issues promptly, effective solutions can be developed and potential damage to the equipment or a failure can be prevented.

Computer vision

How does it work?

Computer vision is revolutionizing the automotive industry by leveraging AI-based technology to enhance predictive maintenance processes. It achieves this by analyzing vast datasets, including real-time sensor data and historical performance records, to rapidly predict equipment wear and tear. By identifying patterns, detecting anomalies, and issuing early warnings for potential equipment issues, computer vision enables proactive maintenance scheduling.

What can be detected?

In the automotive industry, computer vision technology plays a crucial role in detecting equipment wear and tear patterns to predict maintenance requirements. It can also identify manufacturing defects such as scratches or flaws, welding defects in automotive components, part dimensions and volumes to ensure quality control, surface defects related to painting, tire patterns to match with wheels, and objects for robotic guidance and automation.

Who and how can benefit from predictive maintenance

Smart maintenance systems analyze multiple variables and provide a comprehensive overview, which can benefit several stakeholders in the automotive industry. These stakeholders range from vehicle manufacturing factories and the supply chain to service and dealerships, rental companies, insurance companies, and drivers.

Below, we have outlined the primary benefits that these stakeholders can enjoy. In the OEMs section, we have provided examples of specific implementations and case studies from the market.

Car rentals

Fleet health monitoring and better prediction of the service time

Managing service and repairs for a large number of vehicles can be costly and time-consuming for rental companies. When vehicles break down or are out of service while in the possession of customers, it can negatively impact the company’s revenue. To prevent this, car rental companies need constant insight into the condition of their vehicles and the ability to predict necessary maintenance. This allows them to manage their service plan more efficiently and minimize the risk of vehicle failure while on the road.

Car dealerships

Reducing breakdown scenarios

Car dealerships use predictive maintenance primarily to anticipate mechanical issues before they develop into serious problems. This approach helps in ensuring that vehicles sold or serviced by them are in optimal condition, which aids in preventing breakdowns or major faults for the customer down the line. By analyzing data from the vehicle's onboard sensors and historical maintenance records, dealerships can identify patterns that signify potential future failures. Predictive maintenance also benefits dealerships by allowing for proactive communication with vehicle owners, reducing breakdown scenarios, and enhancing customer satisfaction.

Vehicle owners

Peace of mind

Periodic maintenance recommendations for vehicles are traditionally based on analyzing historical data from a large population of vehicle owners. However, each vehicle is used differently and could benefit from a tailored maintenance approach. Vehicles with high mileage or heavy usage should undergo more frequent oil changes than those that are used less frequently. By monitoring the actual vehicle condition and wear, owners can ensure that their vehicles are always at 100% and can better manage and plan for maintenance expenses.

Insurance companies

Risk & fraud

By using data from smart maintenance systems, insurance companies can enhance their risk modeling. The analysis of this data allows insurers to identify the assets that are at higher risk of requiring maintenance or replacement and adjust their premiums accordingly. In addition, smart maintenance systems can detect any instances of tampering with the equipment or negligence in maintenance. This can aid insurers in recognizing fraudulent claims.

OEMs' successful development of PdM systems

BMW Group case study

The German brand implements various predictive maintenance tools and technologies, such as sensors, data analytics, and artificial intelligence, to prevent production downtime, promote sustainability, and ensure efficient resource utilization in its global manufacturing network. These innovative, cloud-based solutions are playing a vital role in enhancing their manufacturing processes and improving overall productivity.

The BMW Group's approach involves:

- Forecasting phenomena and anomalies using a cloud-based platform. Individual software modules within the platform can be easily switched on and off if necessary to instantly adapt to changing requirements. The high degree of standardization between individual components allows the system to be globally accessible. Moreover, it is highly scalable and allows new application scenarios to be easily implemented.

- Optimizing component replacements (this uses advanced real-time data analytics).

- Carrying out maintenance and service work in line with the requirements of the actual status of the system.

- Anomaly detection using advanced AI predictive algorithms.

Meanwhile, it should be taken into account that in BMW's body and paint shop alone, welding guns perform some 15,000 spot welds per day. At the BMW Group's plant in Regensburg, the conveyor systems' control units run 24/7. So any downtime is a huge loss.

→ SOURCE case study.

FORD case study

Predictive vehicle maintenance is one of the benefits offered to drivers and automotive service providers as part of Ford's partnerships with CARUSO and HIGH MOBILITY. In late 2020, Ford announced two new connected car agreements to potentially enable vehicle owners to benefit from a personalized third-party offer.

CARUSO and HIGH MOBILITY will function as an online data platform that is completely independent of Ford and allows third-party service providers secure and compliant access to vehicle-generated data. This access will, in turn, enable third-party providers to create personalized services for Ford vehicle owners. This will enable drivers to benefit from smarter insurance, technical maintenance and roadside recovery.

Sharing vehicle data (warning codes, GPS location, etc.) via an open platform is expected to be a way to maintain competitiveness in the connected mobility market.

→ SOURCE case study.

Predictive maintenance is the future of the automotive market

An effective PdM system means less time spent on equipment maintenance, savings on spare parts, elimination of unplanned downtime, and improved management of company resources. And with that comes more efficient production and greater customer and employee satisfaction.

As the data shows, organizations that have implemented a PdM system report an average decrease of 55% in unplanned equipment failures. Another upside is that, compared to other connected car systems (such as infotainment systems), PdM is relatively easy to monetize. Data here can remain anonymous, and all parties involved in the production and operation of the vehicle reap the benefits.

Organizations have come to recognize the hefty returns on investment provided by predictive maintenance solutions and have thus adopted them on a global scale. According to Market Research Future, the global Predictive Maintenance market is projected to grow to 111.30 billion by 2030, suggesting that further growth is possible in the future.

Unveiling the EU Data Act: Automotive industry implications

Fasten your seatbelts! The EU Data Act aims to drive a paradigm shift in the digital economy, and the automotive industry is about to experience a high-octane transformation. Get ready to explore the user-centric approach, new data-sharing mechanisms, and the roadmap for OEMs to adapt and thrive in the European data market. Are you prepared for this journey?

Key takeaways

- The EU Data Act grants users ownership and control of their data while introducing obligations for automotive OEMs to ensure fair competition.

- The Act facilitates data sharing between users, enterprises, and public sector bodies to promote innovation in the European automotive industry.

- Automotive OEMs must invest in resources and technologies to comply with the EU Data Act regulations for optimal growth opportunities.

The EU Data Act and its impact on the automotive industry

The EU Data Act applies to manufacturers, suppliers, and users of products or services placed on the market in the EU, as well as data holders and recipients based in the EU.

What is the EU Data Act regulation?

The EU Data Act is a proposed regulation that seeks to harmonize rules on fair access to and use of data in the European Union. The regulation sets out clear guidelines on who is obliged to surrender data, who can access it, how it can be used, and for what specific purposes it can be utilized.

In June 2023, the European Union took a significant step towards finalizing the Data Act, marking a pivotal moment in data governance. While the Act awaits formal adoption by the Council and Parliament following a legal-linguistic revision, the recent informal political agreement suggests its inevitability. This groundbreaking regulation will accelerate the monetization of industrial data while ensuring a harmonized playing field across the European Union.

User-centric approach

The European Data Act is revving up the engines of change in the automotive sector, putting users in the driver’s seat of their data and imposing specific obligations on OEMs. This means that connected products and related services must provide users with direct access to data generated in-vehicle, without any additional costs, and in a secure, structured, and machine-readable format.

Data handling by OEMs

A significant change is about to happen in data practices, particularly for OEMs operating in the automotive industry. Manufacturers and designers of smart products, such as smart cars, will be required to share data with users and authorized third parties. This shared data includes a wide range of information:

Included in the Sharing Obligation: The data collected during the user's interaction with the smart car that includes information about the car's operation and environment. This information is gathered from onboard applications such as GPS and sensor images, hardware status indications, as well as data generated during times of inaction by the user, such as when the car is on standby or switched off. Both raw and pre-processed data are collected and analyzed.

Excluded from the Sharing Obligation: Insights derived from raw data, any data produced when the user engages in activities like content recording or transmitting, and any data from products designed to be non-retrievable are not shared.

Sharing mechanisms and interactions

Data holders must make vehicle-generated data available (including associated metadata) promptly, without charge, and in a structured, commonly used, machine-readable format.

The legal basis for sharing personal data with connected vehicle users and legal entities or data recipients other than the user varies depending on the data subject and the sector-specific legislation to be presented.

Data access and third-party services

The Data Act identifies eligible entities for data sharing, encompassing both physical persons, such as individual vehicle owners or lessees, and legal persons, like organizations operating fleets of vehicles.

Requesting data sharing

Data can be accessed by users who are recipients either directly from the device's storage or from a remote server that captures the data. In cases where the data cannot be accessed directly, the manufacturers must promptly provide it.

The data must be free, straightforward, secure, and formatted for machine readability, and its quality should be maintained where necessary. There may be contracts that limit or deny access or further distribution of data if it breaches legal security requirements. This is a critical aspect for smart cars where sharing data might pose a risk to personal safety.

If the data recipient is a third party, it may use the data for purposes such as maintenance, but not to create competing products. It cannot share the data unless doing so is needed to provide a service to the user, and it cannot prevent users who are consumers from sharing the data with other parties.

Fair competition and trade secrets

The Data Act mandates that manufacturers share data, even when it is protected by trade secret laws. However, safeguards exist, allowing OEMs to impose confidentiality obligations and withhold data sharing in specific circumstances. These provisions ensure a balance between data access and trade secret protection. During the final negotiations on the Data Act, safeguarding trade secrets was a primary focus.

The Data Act now has provisions to prevent potential abusive behavior by data holders. It also includes an exception to data-sharing that permits manufacturers to reject certain data access requests if they can prove that such access would result in the disclosure of trade secrets, leading to severe and irreversible economic losses.

Connected vehicle data

Connected vehicle data takes the spotlight under the EU Data Act, empowering users with real-time access to their data and enabling data sharing with repair or service providers.

The implementation of the Data Act heavily involves connected cars. As per the Act, users, including companies, have the right to access the data collected by vehicles. However, manufacturers have the option to limit access under exceptional circumstances. This has a significant impact on data collection practices in the automotive sector.

Preparing for the EU Data Act: A guide for automotive OEMs

To stay ahead of the curve, OEMs must understand the business implications of the Data Act, adapt to new regulations, and invest in the necessary resources and technologies to ensure compliance.

As connected vehicles become the norm, OEMs that embrace the Data Act will be well-positioned to capitalize on new opportunities and drive growth in the European automotive sector.

Business implications

The EU Data Act has significant business implications for automotive OEMs, requiring changes in their data handling practices and adherence to new obligations. As the industry embraces the user-centric approach to data handling, OEMs must design connected products and related services that provide users with access to their in-vehicle data.

To ensure a smooth transition and maintain a competitive edge, automotive OEMs must undertake a tailored and strategic preparation process.

Adapting to new regulations

Failure to comply with the Data Act could result in legal and financial repercussions for automotive OEMs. In order to avoid any possible problems, they should invest in the necessary resources and technologies to ensure compliance with the regulations of the Data Act.

They should also engage proactively with the requirements of the Data Act and implement compliance measures strategically.

By taking the following steps, automotive OEMs can navigate the regulatory landscape effectively and seize growth opportunities in the European automotive sector:

In-Depth Knowledge: Dive deep into the EU Data Act, with a special focus on its impact on the automotive industry. Recognize that the automotive sector is central to this regulation, requiring industry-specific understanding.

Data Segmentation: Perform a comprehensive analysis of your data, categorizing it into distinct groups. Identify which data types fall within the purview of the EU Data Act.

Compliance Framework Development:

- Internal Compliance: Audit and update policies to comply with the EU Data Act. Develop a data governance framework for access, sharing, and privacy.

- Data Access Protocols: Establish unambiguous protocols for data access and sharing, including procedures for obtaining user consent, data retrieval, and sharing modalities.

Data Privacy and Security:

- Data Safeguards: Enhance data privacy and security, including encryption and access controls.

Data Utilization: Develop plans for leveraging this data to generate new revenue streams while adhering to the EU Data Act's mandates.

User Engagement and Consent:

- Transparency: Forge clear and transparent channels of communication with users. Keep users informed about data collection, sharing, and usage practices, and obtain well-informed consent.

- Consent Management: Implement robust consent management systems to efficiently monitor and administer user consent. Ensure that users maintain control over their data.

Legal Advisors: Engage legal experts well-versed in data protection and privacy laws, particularly those relevant to the automotive sector. Seek guidance for interpreting and implementing the EU Data Act within your specific industry context.

Data Access Enhancement: Invest in technology infrastructure to facilitate data access and sharing as per the EU Data Act's stipulations. Ensure that data can be easily and securely provided in the required format.

Employee Education: Educate your workforce on the intricacies of the EU Data Act and its implications for daily operations. Ensure that employees possess a strong understanding of data protection principles.

Ongoing Compliance Oversight: Establish mechanisms for continuous compliance monitoring. Regularly assess data practices, consent management systems, and data security protocols to identify and address compliance gaps.

Collaboration with Peers: Collaborate closely with industry associations, fellow automotive OEMs, and stakeholders to share insights, best practices, and strategies for addressing the specific challenges posed by the EU Data Act in the automotive sector.

Future-Ready Solutions: Develop adaptable and scalable solutions that accommodate potential regulatory landscape shifts. Remain agile and prepared to adjust strategies as needed.

Boosting innovation capabilities

The Data Act may bring some challenges, but it also creates a favorable environment for innovation. By making industrial data more accessible, the Act offers a huge potential for data-driven businesses to explore innovative business models. Adapting to the Act can improve a company's ability to innovate, allowing it to use data as a strategic asset for growth and differentiation.

Summary

The EU Data Act is driving a paradigm shift in the automotive sector, putting users in control of their data and revolutionizing the way OEMs handle, share, and access vehicle-generated data.

By embracing the user-centric approach, ensuring compliance with data sharing and processing provisions, and investing in innovation capabilities, data holders can unlock new opportunities and drive growth in the European automotive market.

It's time for OEMs to take actionable steps to comply with the new regulation. Read this guide on building EU Data Act-compliant connected car software to learn what they are.

Get prepared to meet the EU Data Act deadlines

Ready to turn compliance into a competitive advantage? We’re here to assist you, whether you need expert guidance on regulatory changes or customized data-sharing solutions.

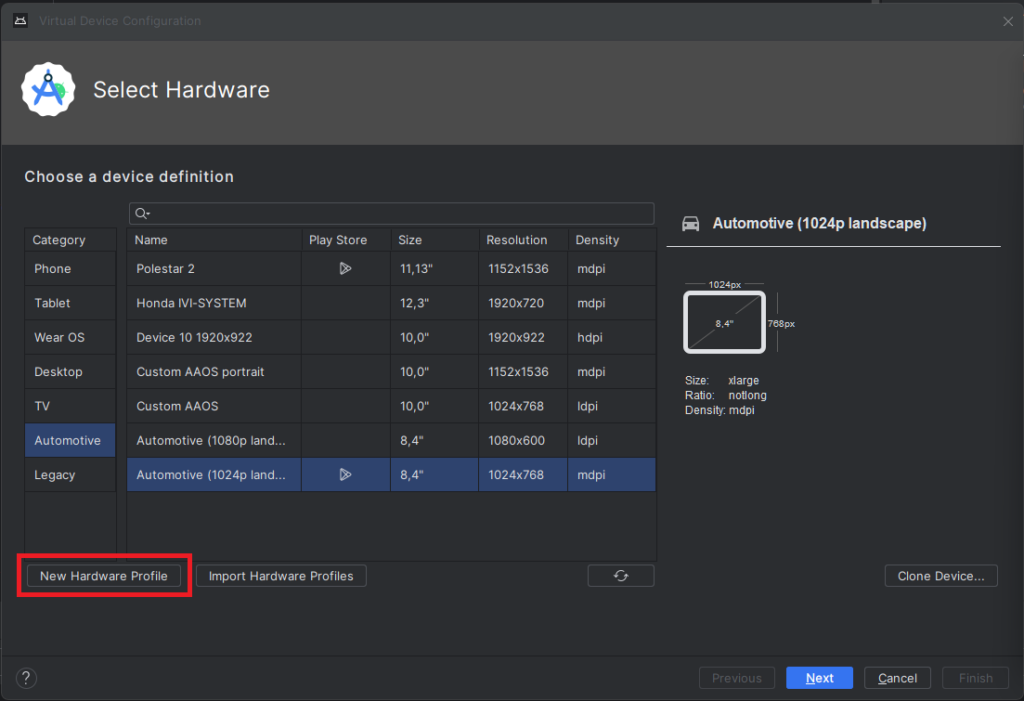

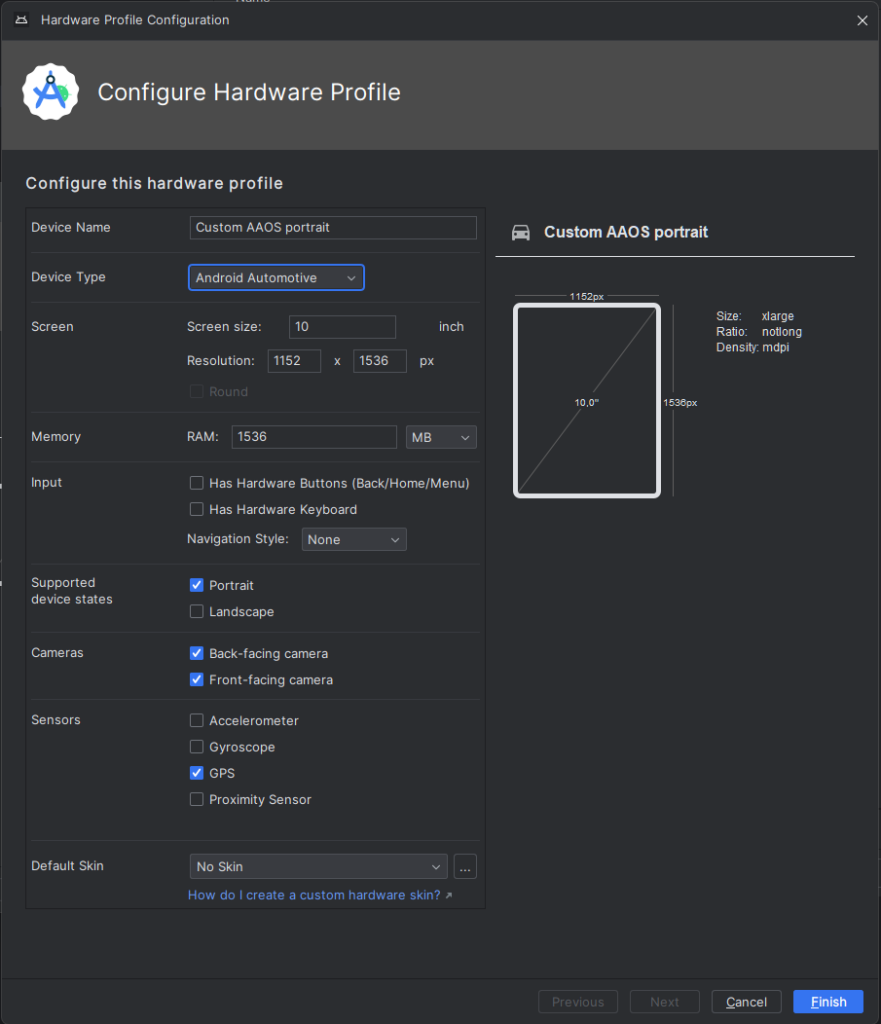

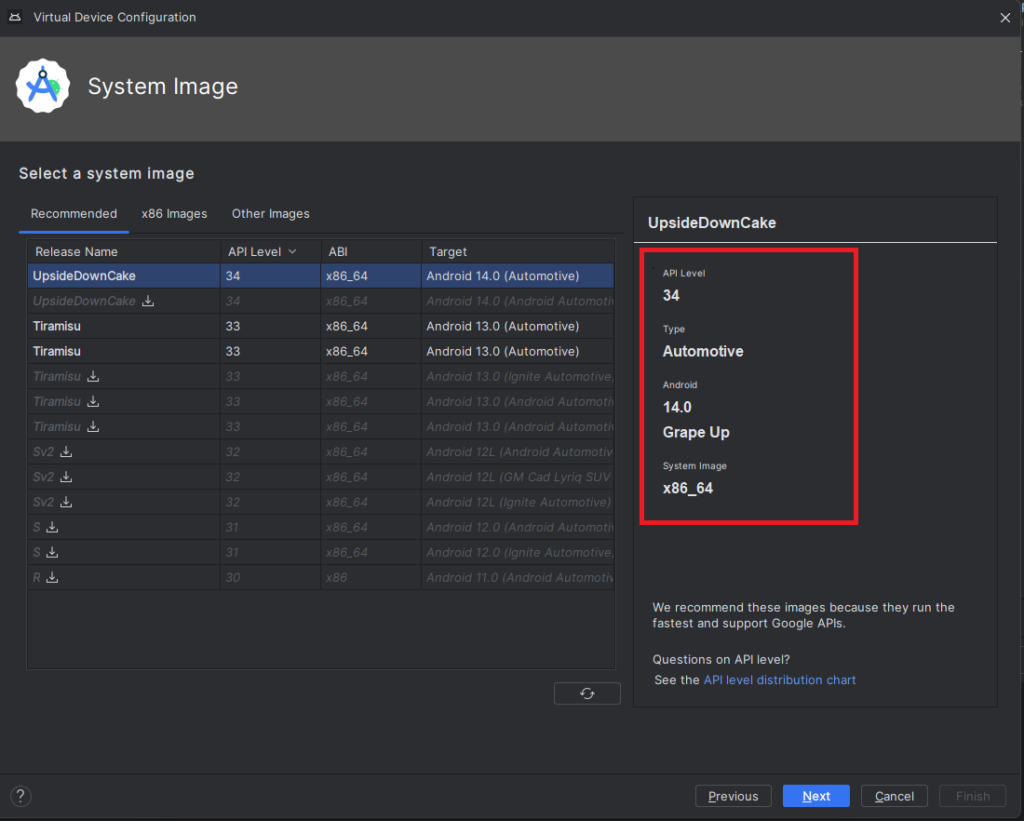

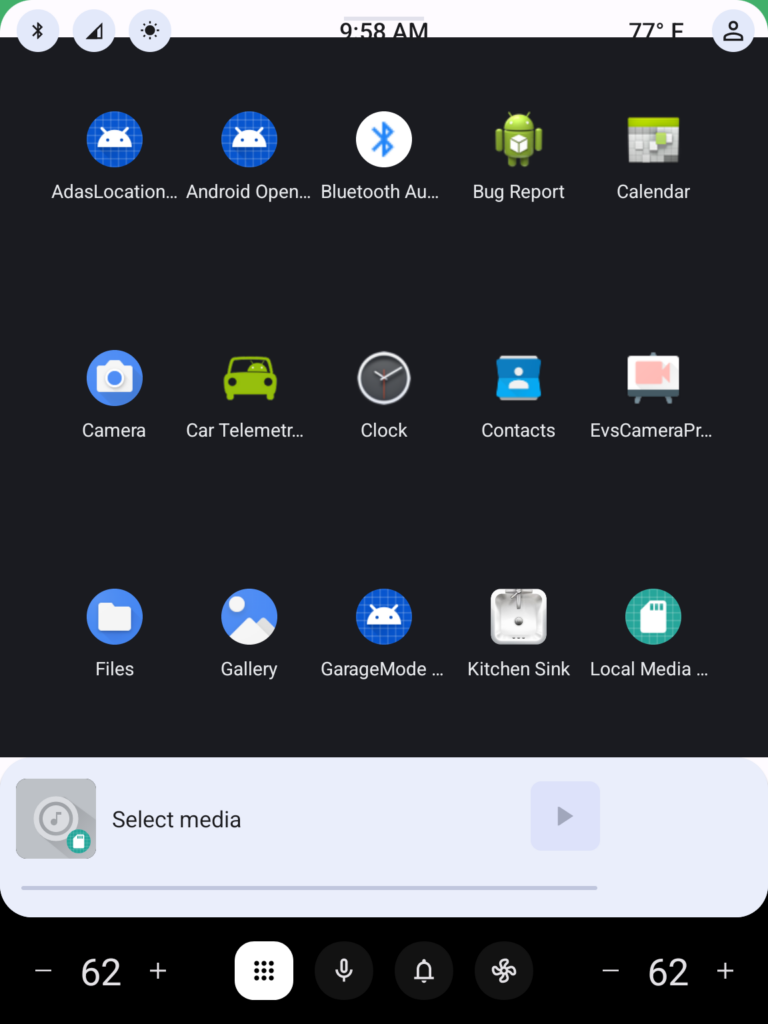

Android Automotive OS 11 Camera2 and EVS - Two different camera subsystems up and running

Android Automotive OS, AAOS in short, is a vehicle infotainment operating system that has gained a lot of traction recently, with most of the OEMs around the world openly announcing new versions of their infotainment based on Android. AAOS is based on the AOSP (Android Open Source Project) source code, which makes it fully compatible with Android, with additions that make it more useful in cars – different UI, integration with hardware layer, or vehicle-specific apps.

For OEMs and Tier1s, who are deeply accustomed to infotainment based on QNX/Autosar/Docker/Linux, and for software developers working on AAOS apps, it’s sometimes difficult to quickly spin up a development board or emulator that supports external hardware with no out-of-the-box emulation built by Google. A common example is camera access, which is currently missing in the official AAOS emulator even though the hardware itself is quite common in modern vehicles, which makes implementing applications similar to Zoom or MS Teams for AAOS tempting to app developers.

In this article, I will explain how to build a simple test bench based on a cost-effective Raspberry Pi board and AAOS for developers to test their camera application. Examples will be based on AAOS 11 running on Raspberry Pi 4 and our Grape Up repository. Please check our previous article, "Build and Run Android Automotive OS on Raspberry Pi 4B", for a detailed description of how to run AAOS on this board.

Android Automotive OS has two different subsystems for accessing platform cameras: Camera2 and EVS. In this article, I will explain how to use both of them and how to get them running on Android Automotive OS 11.

Exterior View System (EVS)

EVS is a subsystem for displaying parking and maneuvering camera images. It supports access to, and views from, multiple cameras. The main goal and advantage of this subsystem is that it boots quickly and should display a parking view within 2 seconds, which is required by law.

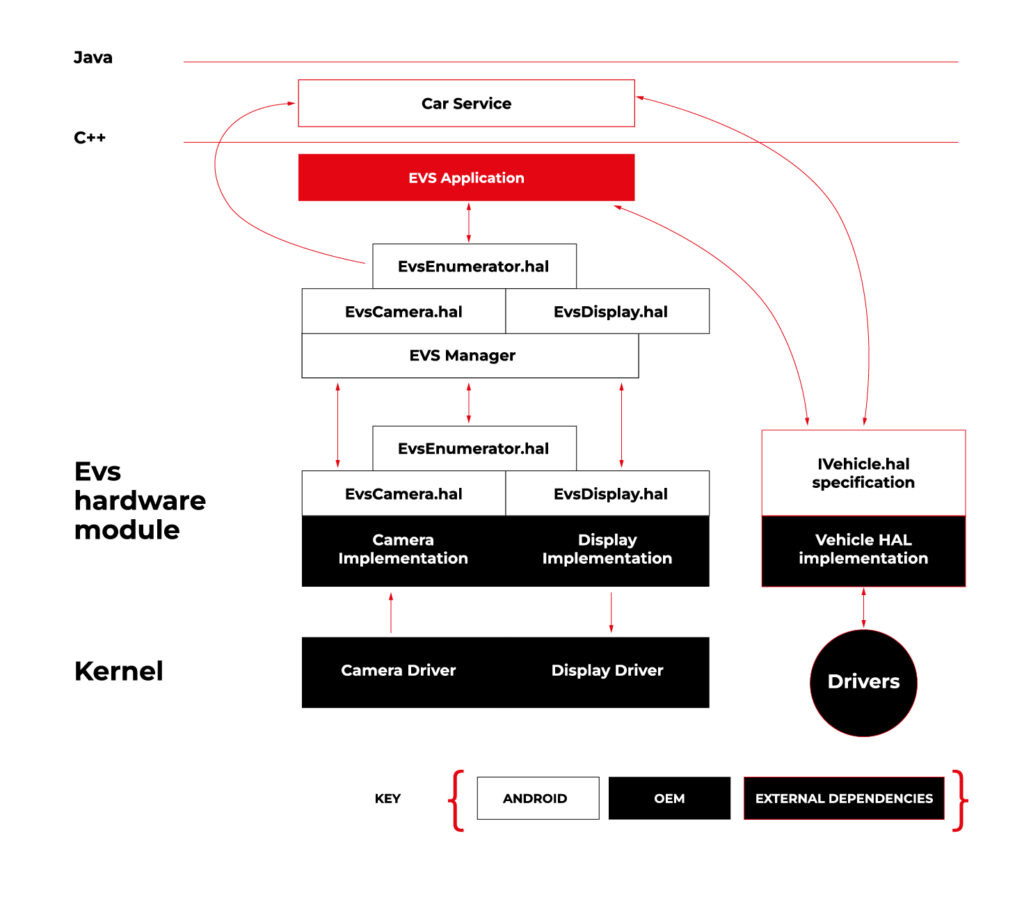

Source https://source.android.com/docs/automotive/camera-hal

As you can see in the attached diagram, the low layers of EVS depend on OEM source code. The OEM needs to deliver the Camera and Display implementations. However, Android delivers a sample application (/hardware/interfaces/automotive/evs/1.0), which uses Linux V4L2 and OpenGL to grab camera frames and display them. You can find more information about EVS at https://source.android.com/docs/automotive/camera-hal

In our example, we will use the samples from Android. Additionally, I assume you have built our Raspberry Pi image (see our article), as it has multiple changes that allow AAOS to run reliably on RPi4 and support its hardware.

You should have a camera connected to your board via USB. Please check if your camera is detected by V4L2. There should be a device file:

/dev/video0

Then, type on the console:

su

setprop persist.automotive.evs.mode 1

This will start the EVS system.

To display camera views:

evs_app

Type Ctrl-C to exit the app and go back to the normal Android view.

Camera2

Camera2 is a subsystem intended for camera access by “normal” Android applications (smartphones, tablets, etc.). It is common to all Android applications, although newer apps increasingly use the CameraX library, which is built on top of it. An Android app developer uses the Java camera API to gain access to the camera.

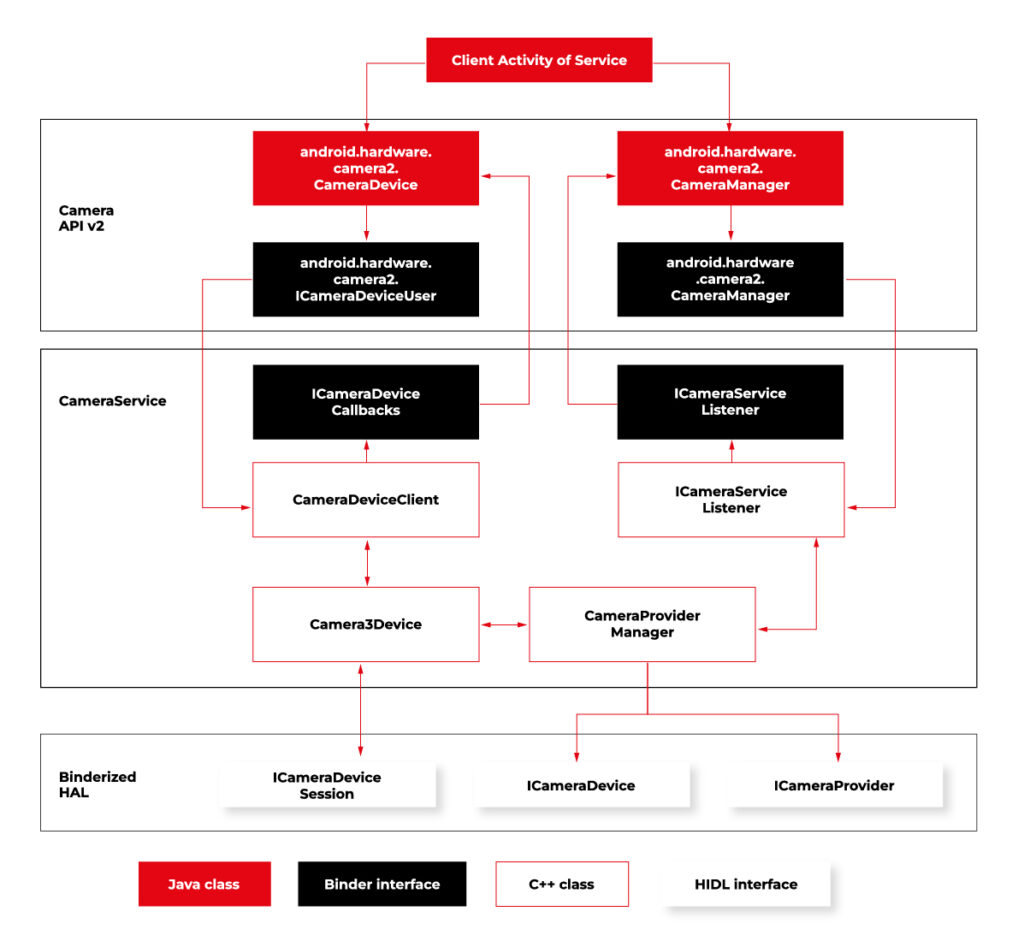

Camera2 has three main layers, which are shown in the diagram below:

Source https://source.android.com/docs/core/camera

Low-level camera access is implemented in CameraProvider. An OEM can implement its own provider, or a V4L2 camera driver can be used.

To get Camera2, you should enable it in the Car product makefile. In packages/services/Car/car_product/build/car_base.mk, change config.disable_cameraservice to false:

PRODUCT_PROPERTY_OVERRIDES += config.disable_cameraservice=false

After that, rebuild Android:

make ramdisk systemimage vendorimage

Write the image to the SD card and boot the RPi with it. You will be able to run the “Camera” application on the AAOS screen, see the camera output from the connected webcam, and run and debug applications that use the Camera API.

Summary

Now you know how to run both AAOS camera APIs on the RPi4 board. You can use both APIs to develop automotive applications leveraging cameras and test them using a simple USB webcam, which you may have somewhere on the shelf. If you found this article useful, you can also look at our previous articles about AAOS, both from the application development perspective and the OS perspective. Happy coding!

How to build software architecture for new mobility services - gathering telemetry data

In the modern world, tech companies strive to collect as much information as possible about the status of owned cars to enable proactive maintenance and rapid responses to any incidents that may occur. These incidents could involve theft, damage, or the cars simply getting lost. The only way to remotely monitor their status is by obtaining telemetry data sent by the vehicles and storing it on a server or in the cloud. There are numerous methods for gathering this data, but is there an optimal approach? Is there a blueprint for designing an architecture for such a system? Let's explore.

What does “telemetry” mean in a car?

This article is about gathering telemetry data, so let's begin with a quick reminder of what it is. Telemetry in cars refers to the technology that enables the remote collection and transmission of real-time data from various components of a vehicle to a central monitoring system. This data encompasses a wide range of parameters, including, for example:

- engine performance,

- fuel consumption,

- tire pressure,

- vehicle speed,

- electric vehicle battery charge level,

- braking activity,

- acceleration,

- GPS position,

- odometer

Collecting vehicle details is valuable, but what is the real purpose of this information?

Why collect telemetry data from a car?

The primary use of telemetry data is to monitor a car's status from anywhere in the world, and it's especially crucial for car rental firms such as Hertz or Europcar, as well as transportation companies like Uber. Here are some examples:

- Tracking Stolen Cars: Companies can quickly track a stolen vehicle if they store its GPS position.

- Accident Analysis: If a car is involved in an accident, the company can assess the likelihood of the event by analyzing data such as a sudden drop in speed to zero accompanied by a spike in measured acceleration. This allows companies to provide replacement cars promptly.

- Fuel or Charging Management: In cases where a rental car is returned without a full tank of fuel or not fully charged, the company can respond quickly to make the car available for the next rental.
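As an illustration of the accident analysis case, the sketch below flags moments where speed falls to zero with a harsh deceleration. The sample structure and the 8 m/s² threshold are assumptions chosen for this example, not values from a real crash-detection system.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    t: float          # seconds since the start of the trip
    speed_kmh: float  # vehicle speed reported by telemetry


def find_possible_collisions(samples, decel_threshold_ms2=8.0):
    """Return timestamps where speed drops to zero with harsh deceleration."""
    events = []
    for prev, curr in zip(samples, samples[1:]):
        dt = curr.t - prev.t
        if dt <= 0:
            continue  # skip out-of-order or duplicate samples
        # Convert the speed change from km/h to m/s, then divide by time.
        decel = (prev.speed_kmh - curr.speed_kmh) / 3.6 / dt
        if curr.speed_kmh == 0 and decel >= decel_threshold_ms2:
            events.append(curr.t)
    return events


# Hypothetical trips: an abrupt stop and a normal, gradual stop.
abrupt_stop = [Sample(0, 50), Sample(1, 50), Sample(2, 0), Sample(3, 0)]
normal_stop = [Sample(0, 50), Sample(5, 25), Sample(10, 0)]
```

A real system would likely combine this signal with accelerometer and airbag data before acting on it.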

These are just a few examples of how telemetry data can be utilized, with many more possibilities. Understanding the value of telemetry data, let's delve into the technical aspects of acquiring and using this data in the next part of the article.

To begin planning the architecture, we need answers to some fundamental questions

How will the telemetry data be used?

Architectural planning should commence with an understanding of the use cases for the collected telemetry data. This includes considering what the end user intends to do with the data and how they will access it. Common uses for this data include:

- Sharing data on a dashboard: To enable this, an architecture should be designed to support an API that retrieves data from databases and displays it on a dashboard,

- Data analytics: Depending on the specific needs, appropriate analytic tools should be planned. This can vary from real-time analysis (e.g. AWS Kinesis Data Analytics) to near real-time analysis (e.g. Kafka) or historical data analysis (e.g. AWS Athena),

- Sharing data with external clients: If external clients require real-time data, it's essential to incorporate a streaming mechanism into your architecture. If real-time access is not needed, a REST API should be part of the plan.

Can we collect the data from cars?

We should not collect any data from cars unless we either own the car or have a specific legal agreement to do so. This requires not only planning the architecture for acquiring access to the car but also for disposing of it. For example, if we collect telemetry or location data from a car through websockets and the company decides to sell the car, we should immediately cease tracking the car. Storing data from it, especially location data, might be illegal as it could potentially allow tracking of the location of a person inside the car.
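One way to make "cease tracking" enforceable is to check every incoming message against an authorization window before it is stored. The following is a minimal sketch under that assumption; the `Authorization` record, the registry dictionary, and the VINs are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Authorization:
    vin: str
    valid_from: int             # epoch seconds when tracking was permitted
    valid_until: Optional[int]  # None means the permission is still active


def may_store(auth_by_vin, vin, timestamp):
    """Return True only if the message falls inside the permitted window."""
    auth = auth_by_vin.get(vin)
    if auth is None:
        return False  # unknown vehicle: never store its data
    if timestamp < auth.valid_from:
        return False
    return auth.valid_until is None or timestamp < auth.valid_until


# Hypothetical registry: one car still owned, one sold on 2024-01-01 UTC.
registry = {
    "OWNEDVIN": Authorization("OWNEDVIN", 1_700_000_000, None),
    "SOLDVIN": Authorization("SOLDVIN", 1_700_000_000, 1_704_067_200),
}
```

Placing this check at the ingestion point means that data from a sold car is rejected even if the vehicle keeps sending it.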

How do we manage permissions to the car?

If we have legal permission to collect data from the car, we must include correct permission management in our architecture. Some key considerations include:

- Credential and token encryption,

- Secure storage of secrets, such as using AWS Secrets Manager,

- Regular rotation of credentials and tokens for security,

- Implementing minimum access levels for services and vehicles,

- Good management of certificates,

- Adhering to basic security best practices.

How do we collect the data?

Now that we have access to the data, it's time to consider how to collect it. There are several known methods to do this:

- Pull Through REST/gRPC API: In this scenario, you'll need to implement a data poller. This approach may introduce latency in data acquisition and is not the most scalable solution. Additionally, you may encounter request throttling issues due to hitting request limits.

- External Service Push Through REST/gRPC: Here, you should set up a listener, which is essentially a service exposed with an endpoint, such as an ECS task or a Lambda function on AWS. This method might incur some costs, and it's crucial to consider automatic scaling to ensure no data is lost. Keep in mind that the endpoint will be publicly exposed, so robust permission management is essential.

- Pulling From a Stream: This approach is often recommended as it's the most scalable and secure option. You can receive data in real-time or near real-time, making it highly efficient. The primary considerations are access to the stream and the service responsible for pulling data from it.

- Queues: Similar to streams, queues can be used for data collection, and they may offer better data ordering. Streams are typically faster but might be more expensive. This is another viable option for collecting vehicle data from external services.

- WebSockets: WebSockets are a suitable solution when bidirectional data flow is required, and they can be superior to REST/gRPC APIs in such cases. For example, they are an appropriate choice when a client needs confirmation that data has been successfully acquired. WebSockets also allow you to specify which telemetry data can be acquired and at what frequency. A notable example is Tesla's fleet telemetry ( https://github.com/teslamotors/fleet-telemetry/blob/main/protos/vehicle_data.proto ).
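For the pull approach, the throttling issue mentioned above is commonly handled with retries and exponential backoff. The sketch below assumes a hypothetical `fetch` callable that raises a `Throttled` exception when the remote API rejects a request; both names are illustrative and do not belong to any specific SDK.

```python
import time


class Throttled(Exception):
    """Raised by the fetch callable when the remote API rejects a request."""


def poll_with_backoff(fetch, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call fetch() until it succeeds, doubling the wait after each throttle."""
    for attempt in range(max_attempts):
        try:
            return fetch()
        except Throttled:
            if attempt == max_attempts - 1:
                raise  # give up after the last allowed attempt
            sleep(base_delay * 2 ** attempt)


# Simulated API: throttles twice, then returns a reading.
responses = iter([Throttled(), Throttled(), {"vin": "HYPOTHETICALVIN001", "speed": 42}])


def fake_fetch():
    item = next(responses)
    if isinstance(item, Exception):
        raise item
    return item


waits = []  # record the requested delays instead of actually sleeping
result = poll_with_backoff(fake_fetch, sleep=waits.append)
```

Injecting the `sleep` function keeps the poller easy to test. In production, adding random jitter to the delay helps avoid many pollers retrying at the same moment.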

Where to store the data?

After collecting the data, it's important to decide where to store it. There are various databases available, and the choice depends on your specific data use cases and access patterns. For instance:

- Commonly Used Data: For data that will be frequently accessed, you can opt for a traditional database like MongoDB or PostgreSQL.

- Low-Maintenance Database: If you prefer a database that requires minimal maintenance, AWS DynamoDB is a good choice.

- Infrequently Used Data for Analytics: When data won't be used frequently but will be utilized for occasional data analytics, you can consider using an AWS S3 bucket with the appropriate storage tier, coupled with AWS Athena for data analysis.

- Complex Data Analysis: If the data will be regularly analyzed with complex queries, AWS Redshift might be a suitable solution.

When planning your databases, don't forget to consider data retention. If historical data is no longer needed, it's advisable to remove it to avoid excessive storage costs.
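Retention can be built into each record by storing an expiry timestamp with it. DynamoDB, for example, can delete items automatically based on a TTL attribute holding epoch seconds. The sketch below shows only how such a timestamp could be computed and applied; the attribute name `expires_at` and the retention periods are assumptions.

```python
from datetime import datetime, timedelta, timezone


def with_expiry(item, recorded_at, retention_days=90):
    """Return a copy of item with an 'expires_at' epoch-seconds attribute."""
    expires = recorded_at + timedelta(days=retention_days)
    return {**item, "expires_at": int(expires.timestamp())}


def drop_expired(items, now):
    """Keep only items whose retention window has not passed yet."""
    cutoff = int(now.timestamp())
    return [i for i in items if i["expires_at"] > cutoff]


# Hypothetical record written on 2024-01-01 with a 30-day retention period.
recorded = datetime(2024, 1, 1, tzinfo=timezone.utc)
item = with_expiry(
    {"vin": "HYPOTHETICALVIN001", "fuel_level": 64}, recorded, retention_days=30
)
```

With a database-managed TTL, the `drop_expired` step happens on the database side. It is included here only to show the effect.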

Example

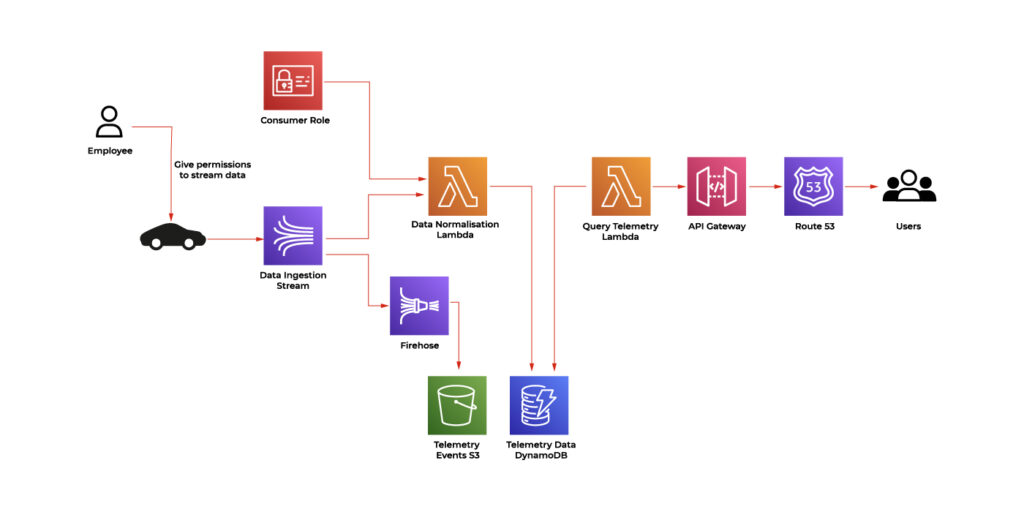

Here is an example of such an architecture on AWS in which:

- An employee grants permissions to the car to stream the data.

- The data is streamed through Amazon Kinesis Data Streams and saved to an S3 bucket by Kinesis Data Firehose for audit purposes.

- The data is also normalized by an AWS Lambda function and stored in AWS DynamoDB.

- The stored data is queried by another AWS Lambda function.

- The query Lambda is invoked through Amazon API Gateway, which adds protections such as limiting requests per second.

- The API is exposed via Route 53 to the end user, which can be, for example, a dashboard or an external API.
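The normalization step in this architecture could look roughly like the sketch below. It relies on the fact that Lambda receives Kinesis records with a base64-encoded `data` field. The incoming message fields (`ts`, `speed`, `fuel`), the output shape, and the optional `store` callback are assumptions made for illustration.

```python
import base64
import json


def normalize(raw):
    """Map a raw vehicle message onto the flat shape stored in the table."""
    return {
        "vin": raw["vin"],
        "timestamp": raw["ts"],
        "speed_kmh": round(float(raw.get("speed", 0.0)), 1),
        "fuel_percent": int(raw.get("fuel", 0)),
    }


def handler(event, context=None, store=None):
    """Lambda-style entry point: decode each Kinesis record and normalize it."""
    items = []
    for record in event["Records"]:
        payload = base64.b64decode(record["kinesis"]["data"])
        items.append(normalize(json.loads(payload)))
    if store is not None:
        for item in items:
            store(item)  # hypothetical wrapper around a database write
    return items


def make_record(message):
    """Build a test record shaped like the ones Kinesis delivers to Lambda."""
    data = base64.b64encode(json.dumps(message).encode()).decode()
    return {"kinesis": {"data": data}}


event = {"Records": [make_record(
    {"vin": "HYPOTHETICALVIN001", "ts": 1704067200, "speed": "87.46", "fuel": 55.9}
)]}
result = handler(event)
```

Keeping `normalize` a pure function makes it testable without any AWS resources. When writing to DynamoDB through boto3, note that numeric values generally need to be `Decimal` rather than `float`.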

Conclusion

In the modern tech landscape, the quest for complete vehicle data is a paramount objective. Tech companies seek to collect critical information about the status of owned cars to enable proactive maintenance and rapid responses to a spectrum of incidents, from theft and damage to simple misplacement. This imperative relies on the remote monitoring of vehicles through the collection and storage of data on servers or in the cloud, offering the capability to monitor a vehicle's status from any corner of the globe. This is especially essential for companies like car rental firms and transportation services, with applications ranging from tracking stolen cars through GPS data to analyzing accident events and managing fuel or charging for rental vehicles.

The core of this mission is to strike a balance between data collection, security, and architectural planning. The process involves careful consideration of data collection methods, adherence to legal and security best practices, and informed choices for data storage solutions. The evolving landscape of vehicle data offers endless possibilities for tech companies to harness the power of telemetry and deliver an enhanced experience for their customers.

Driving success in delivering innovation for automotive: Exploring various partnership models

The automotive sector has rapidly evolved, recognizing that cutting-edge software is now the primary factor differentiating car brands and models. Automotive leaders are reshaping the industry by creating innovative software-defined vehicles that seamlessly integrate advanced features, providing drivers with a genuinely captivating experience.

While some automotive manufacturers have begun building in-house expertise, others are turning to third-party software development companies for assistance. However, such partnerships can take on different forms depending on factors like the vendor’s size and specialization, which can sometimes lead to challenges in cooperation.

Still, success is possible if both parties adopt the right approach despite any problems that may arise. To ensure optimal collaboration, we recommend implementing the Value-Aligned Partnership concept.

By adopting this approach, vendors accept full accountability for delivery, match their contributions to the client's business goals, and use their experience to guarantee success. We use this strategy at Grape Up to ensure our clients receive the best possible results.

This article explores the advantages and challenges of working with service vendors of various sizes and specializations. It also highlights the Value-Aligned Partnership model as a way to overcome these challenges and maximize the benefits of client-vendor cooperation.

Large vendors and system integrators

Working with multi-client software vendors can pose unique challenges for automotive companies, as these companies typically operate on a body leasing model, providing temporary skilled personnel for development services.

Furthermore, these companies may lack specialized expertise, which is particularly true for large organizations with high turnover rates in their development teams. Despite having impressive case studies, the team assigned to a specific project doesn't always possess the necessary experience due to frequent personnel changes within the company.

Moreover, big vendors usually have numerous clients and projects to manage simultaneously. This may lead to limited personalized attention and cause potential delays in delivery. Outsourcing services to countries with different time zones, quality assurance procedures, and cultural norms can further complicate the situation.

However, despite these challenges, such partnerships have significant advantages, which should be considered in the decision-making process.

Advantages of partnering with large vendors and system integrators

- Access to diverse expertise: A wide array of specialists is available for various project aspects.

- Scalability advantage: Project resource requirements are met without bottlenecks.

- Accelerated time-to-market: Development speed is increased with skilled, available teams.

- Cost-efficiency: Vendor teams offer a cost-effective alternative that reduces overhead.

- Risk management: Turnover risks are handled by vendors to ensure continuity.

- Cross-industry insights application: Diverse sector practices are applied to automotive projects.

- Agile adaptation: Teams adjust efficiently to changing project needs.

- Enhanced global collaboration: Creativity is boosted by leveraging time zones and diverse perspectives from vendors.

Working with smaller vendors

Your other option is to work with smaller software companies, but you should be aware of the potential challenges of such collaboration as well. For example, while they are exceptionally well-versed in a particular field or technology, they might lack the breadth of knowledge necessary to meet the needs of the industry. Additionally, limited resources or difficulties in scaling could hinder their ability to keep pace with the growing demands of the sector.

Furthermore, although these vendors excel in specific technical areas, they may struggle to provide comprehensive end-to-end solutions, leaving gaps in the development process.

Adding to these challenges, smaller companies often have a more restricted perspective due to their limited engagement in partnerships, infrequent conference attendance, and a narrower appeal that doesn't span a global audience. This can result in a lack of exposure to diverse ideas and practices, hindering their ability to innovate and adapt.

Benefits of working with smaller vendors

- Tailored excellence: Small vendors often craft industry-specific, innovative solutions without unnecessary features.

- Personal priority: They prioritize each client, ensuring dedicated attention and satisfaction.

- Flexible negotiation: Smaller companies offer negotiable terms and pricing, unlike larger counterparts.

- Bespoke solutions: They customize offerings based on your unique needs, unlike a generic approach.

- Agile responsiveness: Quick release cycles, technical agility, and transparent interactions are their strengths.

- Meaningful connections: They deeply care about your success, fostering personal relationships.

- Accountable quality: They take responsibility for their products, as development and support are integrated.

Niche specialization companies

Collaborating with a company specializing in the automotive sector offers distinct advantages, addressing challenges some large and small vendors face. Their in-depth knowledge of the automotive industry ensures tailored solutions that meet specific requirements efficiently.

As opposed to vendors who work in several industries, they are quick to adjust to shifts in the market, allowing software development projects to be successful and meet the ever-changing needs of the automobile sector.

It is important, however, to consider the potential drawbacks when entering partnerships that rely heavily on a narrow area of expertise. Niche solutions may not be versatile, and specialization can lead to higher costs and resistance to innovation. Additionally, overreliance on a single source could lead to dependency concerns and a limited perspective on the market. It is important to weigh these risks against the benefits and ensure that partnerships are balanced to avoid stagnation and limited options.

When working with software vendors, no matter their size or specialization level, it is essential to adopt the right approach to cooperation to mitigate risks and challenges.

Value-aligned partnership model explained

This cooperation model prioritizes shared values, expertise, and cultural compatibility. It's important to note, in this context, that a company's size doesn't limit its abilities to become a successful partner. Both large and small vendors can be just as driven and invested in professional development.

What matters most is a company's mindset and commitment to continuous improvement. Small and large businesses can excel by prioritizing a robust organizational culture, regular training sessions, knowledge sharing among employees, and partnerships that leverage the strengths of both parties.

Thus, the Value-Aligned Partnership is a model that brings together the benefits of working with different types of companies. It combines the diverse expertise of large vendors with the agility and tailored solutions of small companies while incorporating vast industry-specific knowledge.

In a value-aligned partnership model, the vendor goes beyond simply providing software development services. They actively engage in the journey towards success by fully immersing themselves in the client's vision. By thoroughly comprehending the customer's values and goals, a partner ensures that every contribution they make aligns seamlessly with the overall direction of the business and can even foster innovation within the client's company.

Building a strong partnership based on shared values takes time and effort, but it's worth it for the exceptional outcomes that result. Open communication, collaboration, and mutual understanding are key factors in creating a foundation for long-term cooperation and shared success between the two parties.

In the fast-paced and ever-changing automotive industry, having expertise specific to the domain is crucial. A value-aligned partner recognizes the importance of retaining in-house knowledge and skills related to the sector. As a company that prioritizes this approach, Grape Up invests in measures to minimize turnover, provide ongoing training and education, and ensure that our team possesses deep domain expertise. This commitment to automotive know-how strengthens the partnership's reliability and establishes us as a trusted, long-term ally for the automotive company.

Conclusion

The automotive industry is transforming remarkably with the rise of software-defined vehicles. OEMs realize they can't tackle this revolution alone and seek the perfect collaborators to join them on this exciting journey. These partners bring a wealth of expertise in software development, cloud technologies, artificial intelligence, and more. With their finger on the pulse of the automotive industry, they understand the ever-changing trends and challenges. They take the time to comprehend the OEM's vision, objectives, and market positioning, enabling them to provide tailored solutions that address specific needs.

Unleashing the full potential of MongoDB in automotive applications

Welcome to the second part of our article series about MongoDB in automotive. In the previous installment, we explored the power of MongoDB as a versatile data management solution for the automotive industry, focusing on its flexible data model, scalability, querying capabilities, and optimization techniques.

In this continuation, we will delve into two advanced features of MongoDB that further enhance its capabilities in automotive applications: time-series data management and change streams. By harnessing the power of time-series collections and change streams, MongoDB opens up new possibilities for managing and analyzing real-time data in the automotive domain. Join us as we uncover the exciting potential of MongoDB's advanced features and their impact on driving success in automotive applications.

Time-series data management in MongoDB for automotive

Managing time-series data effectively is a critical aspect of many automotive applications. From sensor data to vehicle telemetry, capturing and analyzing time-stamped data is essential for monitoring performance, detecting anomalies, and making informed decisions. MongoDB offers robust features and capabilities for managing time-series data efficiently. This section will explore the key considerations and best practices for leveraging MongoDB's time-series collections.

Understanding time-series collections

Introduced in MongoDB version 5.0, time-series collections provide a specialized data organization scheme optimized for storing and retrieving time-series data. Time-series data consists of a sequence of data points collected at specific intervals, typically related to time. This could include information such as temperature readings, speed measurements, or fuel consumption over time.

MongoDB employs various optimizations in a time-series collection to enhance performance and storage efficiency. One of the notable features is the organization of data into buckets. Data points within a specific time range are grouped in these buckets. This time range, often referred to as granularity, determines the level of detail or resolution at which data points are stored within each bucket. This bucketing approach offers several advantages.

Firstly, it improves query performance by enabling efficient data retrieval within a particular time interval. With data organized into buckets, MongoDB can quickly identify and retrieve the relevant data points, reducing the time required for queries.

Secondly, bucketing allows for efficient storage and compression of data within each bucket. By grouping data points, MongoDB can apply compression techniques specific to each bucket, optimizing disk space utilization. This helps to minimize storage requirements, especially when dealing with large volumes of time-series data.
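
The bucketing idea can be illustrated with a small, self-contained sketch. It is a simplified conceptual model and not MongoDB's internal implementation: the function `bucketMeasurements`, the field names `vin` and `ts`, and the fixed one-hour window are all assumptions chosen for illustration.

```javascript
// Conceptual model of time-series bucketing (NOT MongoDB's actual internals).
// Measurements that share a source (a hypothetical `vin` field) and fall into
// the same fixed time window are stored together in one bucket.
function bucketMeasurements(measurements, windowMs) {
  const buckets = new Map();
  for (const m of measurements) {
    // Align the timestamp to the start of its time window.
    const windowStart = Math.floor(m.ts.getTime() / windowMs) * windowMs;
    const key = `${m.vin}|${windowStart}`;
    if (!buckets.has(key)) {
      buckets.set(key, { vin: m.vin, windowStart: new Date(windowStart), points: [] });
    }
    buckets.get(key).points.push(m);
  }
  return [...buckets.values()];
}

const HOUR_MS = 60 * 60 * 1000;
const sampleMeasurements = [
  { vin: 'VIN-001', ts: new Date('2023-05-01T10:05:00Z'), fuelLevel: 80 },
  { vin: 'VIN-001', ts: new Date('2023-05-01T10:45:00Z'), fuelLevel: 78 },
  { vin: 'VIN-001', ts: new Date('2023-05-01T11:10:00Z'), fuelLevel: 75 },
  { vin: 'VIN-002', ts: new Date('2023-05-01T10:20:00Z'), fuelLevel: 60 },
];

const sampleBuckets = bucketMeasurements(sampleMeasurements, HOUR_MS);
console.log(sampleBuckets.length); // 3
```

A query for one vehicle and one hour only needs to touch a single bucket in this model, which is the intuition behind the performance benefit described above.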

Choosing sharded or non-sharded collections

When working with time-series data, you have the option to choose between sharded and non-sharded collections. Sharded collections distribute data across multiple shards, enabling horizontal scalability and accommodating larger data volumes. However, it's essential to consider the trade-offs associated with sharding. While sharded collections offer increased storage capacity, they may introduce additional complexity and potentially impact performance compared to non-sharded collections.

In most cases, non-sharded collections are sufficient for managing time-series data, especially when proper indexing and data organization strategies are employed. Non-sharded collections provide simplicity and optimal performance for most time-series use cases, eliminating the need for managing a sharded environment.

Effective data compression for time-series collections

Given the potentially large volumes of data generated by time-series measurements, efficient data compression is crucial for optimizing storage and query performance. MongoDB provides built-in compression that reduces data size, minimizing storage requirements and disk I/O. Using compression, MongoDB significantly reduces the disk space consumed by time-series data while maintaining fast query performance.

This compression is provided by WiredTiger, MongoDB's default storage engine. WiredTiger supports several block compression algorithms (snappy, zlib, and zstd) that trade some CPU time for disk space, and time-series collections use zstd by default. Compression is particularly beneficial for time-series collections, where consecutive data points from the same source tend to be similar and therefore compress well.

By leveraging WiredTiger compression, MongoDB achieves a good balance between storage efficiency and query performance for time-series collections. The compressed data takes up less space on disk, resulting in reduced storage costs and improved overall system scalability. Note that storage compression does not shrink data sent over the network; traffic between the application and the database is compressed separately, through network compression negotiated between the driver and the server.

Considerations for granularity and data retention

When designing a time-series data model in MongoDB, granularity and data retention policies are important factors to consider. Granularity refers to the level of detail or resolution at which data points are stored, while data retention policies determine how long the data is retained in the collection.

Choosing the appropriate granularity is crucial for striking a balance between data precision and performance. MongoDB provides different granularity options, such as "seconds," "minutes," and "hours," each covering a specific time span. Selecting the granularity depends on the time interval between consecutive data points that share the same value of the meta field (the `metaField`, which identifies the data source). You can optimize storage and query performance by aligning the granularity with the rate at which a single data source sends data.

For example, if you collect temperature readings from weather sensors every five minutes, setting the granularity to "minutes" would be appropriate. This ensures that data points are grouped in buckets based on the specified time span, enabling efficient storage and retrieval of time-series data.
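
As a minimal sketch, the options for such a collection could be built as shown below. The `timeseries.timeField`, `timeseries.metaField`, and `timeseries.granularity` options are part of MongoDB's `createCollection` command; the helper functions, the field names, and the thresholds in `suggestGranularity` are assumptions made for this example, a rough heuristic rather than an official rule.

```javascript
// Granularity values accepted by time-series collections.
const GRANULARITIES = ['seconds', 'minutes', 'hours'];

// Hypothetical helper: builds the options object for createCollection.
function timeSeriesOptions(timeField, metaField, granularity) {
  if (!GRANULARITIES.includes(granularity)) {
    throw new Error(`Unsupported granularity: ${granularity}`);
  }
  return { timeseries: { timeField, metaField, granularity } };
}

// Rough heuristic (an assumption, not an official rule): choose the unit that
// is closest to the interval between readings coming from ONE data source.
function suggestGranularity(intervalSeconds) {
  if (intervalSeconds < 60) return 'seconds';
  if (intervalSeconds < 3600) return 'minutes';
  return 'hours';
}

// Sensors reporting every five minutes (300 seconds).
const weatherOptions = timeSeriesOptions('timestamp', 'metadata', suggestGranularity(300));
console.log(JSON.stringify(weatherOptions));

// With a connected driver or mongosh, the options would be passed like this:
// await db.createCollection('weatherReadings', weatherOptions);
```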

In addition to granularity, defining an effective data retention policy is essential for managing the size and relevance of the time-series collection over time. Consider factors such as the retention period for data points, the frequency of purging outdated data, and the impact on query performance.

MongoDB provides a Time to Live (TTL) mechanism, configured through the `expireAfterSeconds` option, that automatically removes expired data points from a time-series collection after a specified time interval. However, at the time of writing this article, there is a known issue related to TTL for very old records. It is described in detail in the MongoDB Jira ticket SERVER-76560.

The TTL behavior in time series collections differs from regular collections. In a time series collection, TTL expiration occurs at the bucket level rather than on individual documents within the bucket. Once all documents within a bucket have expired, the entire bucket is removed during the next run of the background task that removes expired buckets.

This bucket-level expiration behavior means that TTL may not work in the exact same way as with normal collections, where individual documents are removed as soon as they expire. It's important to be aware of this distinction and consider it when designing your data retention strategy for time series collections.
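
The difference can be sketched with a simplified model. The `expireAfterSeconds` option is a real `createCollection` option; the function `isBucketExpired`, the field names, and the sample data are assumptions for illustration only and do not mirror MongoDB's internal bookkeeping.

```javascript
// Options for a time-series collection whose data should expire after 24 hours.
// Field names are hypothetical.
const ttlOptions = {
  timeseries: { timeField: 'timestamp', metaField: 'metadata', granularity: 'minutes' },
  expireAfterSeconds: 24 * 60 * 60,
};

// Simplified model of the bucket-level rule: a bucket is removable only when
// ALL of its data points have expired, that is, when its newest point has expired.
function isBucketExpired(timestamps, expireAfterSeconds, now) {
  const newest = Math.max(...timestamps.map((t) => t.getTime()));
  return newest + expireAfterSeconds * 1000 <= now.getTime();
}

const bucketTimestamps = [
  new Date('2023-05-01T10:00:00Z'),
  new Date('2023-05-01T10:50:00Z'),
];

// The 10:00 point is older than 24 hours, but the 10:50 point is not yet.
console.log(isBucketExpired(bucketTimestamps, ttlOptions.expireAfterSeconds,
  new Date('2023-05-02T10:30:00Z'))); // false

// Now every point in the bucket is older than 24 hours.
console.log(isBucketExpired(bucketTimestamps, ttlOptions.expireAfterSeconds,
  new Date('2023-05-02T11:00:00Z'))); // true
```

In the first case, a regular collection with a TTL index would already have removed the older document, while the time series bucket keeps both.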

When considering granularity and data retention policies, evaluate the specific requirements of your automotive application. Consider the level of precision needed for analysis, the data ingestion rate, and the desired storage and query performance. By carefully evaluating these factors and understanding the behavior of TTL in time series collections, you can design a time-series data model in MongoDB that optimizes both storage efficiency and query performance while meeting your application's needs.

Retrieving latest documents

In automotive applications, retrieving the latest document for each unique meta key is a common requirement. MongoDB can serve this kind of query efficiently through `DISTINCT_SCAN`, a stage of the query execution plan (not a stage you write in an aggregation pipeline yourself). Let's explore how you can benefit from it, along with an automotive example.

A `DISTINCT_SCAN` walks an index and, because the index is sorted, can jump from one distinct key value to the next instead of reading every entry. This makes finding one document per distinct value much cheaper than scanning all the data.

To illustrate its usage, let's consider a scenario where you have a time series collection of vehicle data that includes meta information and timestamps. You want to retrieve the latest document for each unique vehicle model. Here's an example code snippet demonstrating how to accomplish this:

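```javascript
db.vehicleData.aggregate([
  // Sort by meta key, newest document first within each key
  { $sort: { metaField: 1, timestamp: -1 } },
  {
    // Keep the first (latest) document of each meta key
    $group: {
      _id: "$metaField",
      latestDocument: { $first: "$$ROOT" }
    }
  },
  // Promote the stored document back to the top level
  { $replaceRoot: { newRoot: "$latestDocument" } }
])
```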

In the above code, we first use the `$sort` stage to sort the documents based on the `metaField` field in ascending order and the `timestamp` field in descending order. This sorting ensures that the latest documents appear first within each group.

Next, we employ the `$group` stage to group the documents by the `metaField` field and select the first document using the `$first` operator. This operator retrieves the first document encountered in each group, corresponding to the latest document for each unique meta key.

Finally, we utilize the `$replaceRoot` stage to promote the `latestDocument` to the root level of the output, effectively removing the grouping and retaining only the latest documents.

By utilizing this approach, you can efficiently retrieve the latest document for each meta key in an automotive dataset. When the query planner can use `DISTINCT_SCAN`, it avoids reading every document, while the `$first` operator ensures accurate retrieval of the latest documents.

It's important to note that `DISTINCT_SCAN` is an internal optimization of MongoDB's query planner. It is automatically applied when the conditions are met, so you don't need to specify or enable it in your aggregation pipeline explicitly.
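
Because the optimization is applied automatically, the only way to know whether it was used is to inspect the query plan. The sketch below shows one possible check: a hypothetical helper, `planUsesStage`, that searches an explain result for a stage name. The `mockExplain` object is hand-written for illustration; the real explain output is larger and its exact shape varies between MongoDB versions.

```javascript
// Hypothetical helper: recursively searches an explain() result (or any nested
// object) for a plan node whose `stage` property equals the given name.
function planUsesStage(node, stageName) {
  if (node === null || typeof node !== 'object') return false;
  if (node.stage === stageName) return true;
  return Object.values(node).some((child) => planUsesStage(child, stageName));
}

// Hand-written, heavily shortened stand-in for an explain result.
const mockExplain = {
  queryPlanner: {
    winningPlan: {
      stage: 'PROJECTION_SIMPLE',
      inputStage: { stage: 'DISTINCT_SCAN', indexName: 'metaField_1_timestamp_-1' },
    },
  },
};

console.log(planUsesStage(mockExplain, 'DISTINCT_SCAN')); // true
console.log(planUsesStage(mockExplain, 'COLLSCAN')); // false

// With a connected Node.js driver, a real plan could be obtained with:
// const explain = await collection.aggregate(pipeline).explain();
```

If the check reports a collection scan instead, a compound index covering the meta field and the timestamp is the first thing to verify.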

Time series collection limitations

While MongoDB Time Series brings valuable features for managing time-series data, it also has certain limitations to consider. Understanding these limitations can help developers make informed decisions when utilizing MongoDB for time-series data storage:

● Unsupported Features: Time series collections in MongoDB do not support certain features, including transactions and change streams. These features are not available when working specifically with time series data.

● Aggregation $out and $merge: At the time of writing, the `$out` and `$merge` stages of the aggregation pipeline, commonly used to store aggregation results in a separate collection or merge them into an existing one, cannot write their results into a time series collection. This limits the ability to populate or update time series collections directly from aggregation results.

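● Updates and Deletes: Time series collections are designed for insert operations and read queries. In the initial 5.0 release, data inserted into a time series collection could not be modified or deleted on a per-document basis, and any update or manual delete operation resulted in an error. Later releases relax this, but with restrictions (for example, the query may only match on the `metaField`), so check the documentation for your server version before relying on updates or deletes.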

MongoDB change streams for real-time data monitoring

MongoDB Change Streams are a powerful feature for real-time data monitoring. They allow you to capture and react to changes in a MongoDB collection as they happen. This is particularly useful in scenarios where you need to track updates, insertions, or deletions in your data and take immediate action based on those changes.

Change Streams provide a unified and consistent way to subscribe to the database changes, making it easier to build reactive applications that respond to real-time data modifications.

```javascript
// MongoDB Change Streams for Real-Time Data Monitoring
const { MongoClient } = require('mongodb');

// Connection URL (change streams require a replica set or a sharded cluster)
const url = 'mongodb://localhost:27017';

// Database and collection names
const dbName = 'mydatabase';
const collectionName = 'mycollection';

async function main() {
  const client = await MongoClient.connect(url);
  const collection = client.db(dbName).collection(collectionName);

  // Create a change stream cursor that only reports delete operations
  const changeStream = collection.watch([{ $match: { operationType: 'delete' } }]);

  // Set up an event listener for change events
  changeStream.on('change', (change) => {
    // Process the delete event
    console.log('Delete Event:', change);
    // Perform further actions based on the delete event
  });

  // Log stream errors instead of leaving the 'error' event unhandled
  changeStream.on('error', (err) => console.error('Change stream error:', err));

  // When monitoring is no longer needed:
  // await changeStream.close();
  // await client.close();
}

main().catch(console.error);
```

In this example, we use the `$match` stage in the change stream pipeline to filter for delete operations only. The pipeline is passed to the `watch()` method as an array of stages. The `{ operationType: 'delete' }` filter ensures that only delete events will be captured by the change stream.

Now, when a delete operation occurs in the specified collection, the `'change'` event listener is triggered and the callback function executes. Inside the callback, you can process the delete event and perform additional actions based on your application's requirements. Note that, by default, the change stream provides only the document ID (in the `documentKey` field) for delete operations; the content of the document is no longer available. If you need the document content, retrieve it before the delete operation or store it separately for reference.
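
The filter passed to `watch()` can be generalized to several operation types, and the delete handler can be reduced to extracting the document ID. The following is a minimal sketch; `buildWatchPipeline` and `deletedDocumentId` are hypothetical helper names, while `operationType` and `documentKey` are fields of real change events.

```javascript
// Hypothetical helper: builds a change stream pipeline that only lets through
// the given operation types (for example 'insert', 'update', 'delete').
function buildWatchPipeline(operationTypes) {
  if (!Array.isArray(operationTypes) || operationTypes.length === 0) {
    return []; // An empty pipeline means "no filtering".
  }
  return [{ $match: { operationType: { $in: operationTypes } } }];
}

// Hypothetical helper: returns the ID of the removed document, or null if the
// event is not a delete. Only the ID is available for deletes by default.
function deletedDocumentId(change) {
  if (change.operationType !== 'delete') return null;
  return change.documentKey._id;
}

console.log(JSON.stringify(buildWatchPipeline(['insert', 'delete'])));

// With a connected driver, the pipeline would be used like this:
// const changeStream = collection.watch(buildWatchPipeline(['insert', 'delete']));
```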

One important limitation is that a change event does not say why a document was removed. The application cannot tell whether a document was deleted manually by a user or expired automatically through the Time to Live (TTL) mechanism. If this distinction is critical for the business requirements, the application needs additional logic to track it.
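
One possible way to add such logic is to record every deletion the application performs itself and treat all other delete events as TTL expirations or deletions by other clients. The sketch below is an assumption about how this could look, not a MongoDB feature: the `DeleteTracker` class is hypothetical, keeps its state in memory only, and therefore works only within a single process.

```javascript
// Hypothetical in-memory tracker for deletions issued by this application.
class DeleteTracker {
  constructor() {
    this.pendingManualDeletes = new Set();
  }

  // Call right BEFORE the application deletes a document itself.
  markManualDelete(id) {
    this.pendingManualDeletes.add(String(id));
  }

  // Call from the change stream listener for each delete event.
  classify(changeEvent) {
    const id = String(changeEvent.documentKey._id);
    // Set.delete returns true only if the ID was marked earlier.
    if (this.pendingManualDeletes.delete(id)) return 'manual';
    return 'ttl-or-external';
  }
}

const tracker = new DeleteTracker();
tracker.markManualDelete('vehicle-42');

const manualEvent = { operationType: 'delete', documentKey: { _id: 'vehicle-42' } };
const otherEvent = { operationType: 'delete', documentKey: { _id: 'vehicle-77' } };

console.log(tracker.classify(manualEvent)); // manual
console.log(tracker.classify(otherEvent)); // ttl-or-external
```

A production system would more likely persist this information, for example in a separate audit collection, so that it survives restarts and is shared between application instances.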

Here are some key aspects and benefits of using MongoDB Change Streams for real-time data monitoring:

● Real-Time Event Capture: Change Streams allow you to capture changes as they occur, providing a real-time view of the database activity. This enables you to monitor data modifications instantly and react to them in real-time.

● Flexibility in Filtering: You can specify filters and criteria to define the changes you want to capture. This gives you the flexibility to focus on specific documents, fields, or operations and filter out irrelevant changes, optimizing your monitoring process.