Developing software for connected cars - common challenges and how to tackle them

Automotive is transforming into a hyper-connected, software-driven industry that goes far beyond the driving experience. How do you build applications in such an innovative environment? What are the main challenges of providing software for connected cars, and how do you deal with them? Let’s dive into the process of utilizing the capabilities of the cloud to move automotive forward.

People have always aimed for the clouds. From Icarus in Greek mythology, first airplanes and spaceships to dreams about flying cars – our culture and history of technology development express a strong desire to go beyond our limits. Although the vision from Back to the Future and other Sci-Fi movies didn’t come true and our cars cannot be used as flying vehicles, our cars actually are in the cloud.

Meanwhile, the idea of the Internet of Things came true; our devices are connected to the Internet. We have smartphones, smartwatches, smart homes and, as it turns out, smart cars. We are able to communicate with them to gather data or even control them remotely. The possibilities are limited only by hardware, and even hardware is constantly improving to keep pace with the rapid changes triggered by software development.

Offerings on the automotive market are developing rapidly, with numerous features and experiences promised to the end customer. By using cutting-edge technologies, utilizing cloud platforms, and working with innovative software developers, automakers provide solutions to even the most demanding needs. And while our user experience is improving at an accelerated pace, there is still a broad list of challenges to tackle.

In this article, we dive into the technology behind the latest trends, look at the most demanding areas of developing software in the cloud, and explain how the right solution empowers the change that affects us all.

Challenging determinants of the cloud revolution in automotive

Connecting with your car through a smartphone or utilizing information about traffic provided to your vehicle thanks to the platforms that accumulate data registered by other drivers is extremely useful.

Those innovative changes wouldn’t be possible without cloud infrastructure. And as there is no way back from moving to the cloud, the transition creates challenges in various areas: safety, security, responsiveness, integrity, and more.

Safety in the automotive sector

How do you create a solution that doesn’t affect the safety of a driver? When developing new services, you cannot forget about the basics. Infotainment provided to vehicles becomes more advanced with every new release of a car and can be really engaging. The amount of delivered information combined with increasingly larger displays may lead to distraction and create dangerous situations. It’s worth mentioning that some colors may even impair the driver’s vision!

Integration with the cloud usually enables some remote commands. When implementing them, there are a lot of restrictions that need to be kept in mind. Some of them are obvious (you don’t want to disable the engine when a car is being driven at 100 km/h), but others may be much more complicated and not visible at first.
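One way to keep such restrictions explicit is to check every remote command against the last known vehicle state before forwarding it. The following is only a minimal sketch: the `VehicleState` fields, the command names, and the rules themselves are hypothetical and would in practice come from the manufacturer's safety requirements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VehicleState:
    """Snapshot of the vehicle as last reported (fields are illustrative)."""
    speed_kmh: float
    gear: str  # e.g. "P", "D", "R"


# Hypothetical rule table: each command maps to a predicate that must hold
# before the command may be forwarded to the car.
PRECONDITIONS = {
    "disable_engine": lambda s: s.speed_kmh == 0 and s.gear == "P",
    "unlock_doors": lambda s: s.speed_kmh == 0,
    "flash_lights": lambda s: True,
}


def is_command_allowed(command: str, state: VehicleState) -> bool:
    """Return True only if the command is known and its precondition holds."""
    check = PRECONDITIONS.get(command)
    if check is None:
        # Unknown commands are rejected rather than passed through.
        return False
    return check(state)
```

Keeping the rules in one table makes the less obvious restrictions easier to review and test than checks scattered across services.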

Providing security for car owners

Enabling services for your vehicle in the cloud, despite being extremely helpful in improving your experience, creates another way to break into your car. Everyone would like to open a car without keys, using a mobile phone, voice, or a fingerprint instead. And while these solutions seem modern and fancy, there is a big responsibility on the software side to implement them securely.

Responsiveness enabling the seamless user experience

Customer-facing services need to deliver a seamless experience to the end-user. The customer doesn’t want to wait a minute or even ten seconds to unlock a car door. These services need to respond immediately or not at all, as failing to open the doors just because the system had a ‘lag’ is not acceptable behavior.
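The "immediately or not at all" requirement can be expressed as a deadline around the call. Below is a minimal sketch using Python's `asyncio.wait_for`; the `send_unlock` callable and the two-second default are assumptions made for illustration, not values from any real vehicle API.

```python
import asyncio


async def unlock_with_deadline(send_unlock, deadline_s: float = 2.0) -> str:
    """Await the unlock call, but give up once the deadline passes."""
    try:
        await asyncio.wait_for(send_unlock(), timeout=deadline_s)
        return "unlocked"
    except asyncio.TimeoutError:
        # Fail fast: report the failure instead of leaving the user waiting.
        return "failed"
```

Note that a timeout on the caller's side does not tell you whether the command still reached the car, so the result shown to the user should be worded accordingly.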

Data integrity is a must

Another very important concept associated with providing solutions that utilize cloud technologies is data integrity. Information collected by your vehicle should be useful and up to date. You don’t want a situation in which the mobile application says that the car has a range of 100 km, but in the morning it turns out that the tank is almost empty and you need to refuel before going to work.

How to integrate and utilize mobile devices to connect with your vehicle?

When discussing how to use mobile phones to control cars, a very important question arises: how do you communicate with the car? There is no simple answer, as it depends on the model and version of the car, and vehicles are equipped with various technologies depending on the provider. Some of them are equipped with BLE, Wi-Fi hotspots, or RFID tags, while others don’t offer a direct connection to the car, and the only way is to go through the backend side. Most manufacturers expose some API over the Internet without providing a direct connection from mobile to the car. In such cases, it’s usually good practice to create your own backend that handles all the API flaws. To do so reliably, your system will need a platform.

When the hardware reaches its limits, there is always an option to equip the car with a custom device that exposes a proper communication channel and is integrated with the vehicle. To do so, it may use the OBD protocol. This gives us full control over the communication part; however, such a solution is expensive and hard to maintain.

Building a platform to solve the challenges

There is no simple answer on how to solve the mentioned challenges and implement a resilient system that will deliver all necessary functionalities with the highest quality. However, it’s very important to remember that such a solution should be scalable and utilize cloud-native patterns. When designing a system for connected cars, the natural choice is to go with the microservice architecture. The implementation of the system is one thing, and this topic was partly covered in the previous article, but an equally important aspect is the runtime, the platform. Choosing the wrong setup of virtual machines or having to deploy everything manually can lead to downtime of the system. Having a system that isn’t constantly available to the customer can damage your business.

Kubernetes to the rescue! As you probably know, Kubernetes is a container orchestration platform that allows running workloads in pods. The platform itself has helped us deliver many features to our clients faster and with ease. Nowadays, Kubernetes is so easily accessible that you can spin up a cluster in minutes using existing service providers like AWS or Azure. It allows you to increase the speed of delivery of new features, as they may be deployed immediately! What’s very important with Kubernetes is its abstraction from infrastructure. A development team with expertise in Kubernetes is able to work on any cloud provider. Furthermore, mission-critical systems can successfully implement Kubernetes for their use cases as well.

Automotive cloud beyond car manufacturers

Automotive cloud is not only a domain of car manufacturers. As mentioned earlier, they offer digital services to integrate with their cars, but numerous mobility service providers integrate with these APIs to implement their own use cases:

- Live notifications

- Online diagnostics

- Fleet management

- Vehicle recovery

- Remote access

- Car sharing

- Car rental

The best practices of providing cloud-native software for the automotive industry

Working with the leading automotive brands and being engaged in numerous projects meant to deliver innovative applications, our team has collected a group of helpful practices that make development easier and improve user experience. There are some must-have practices when it comes to delivering high-quality software, such as CI/CD, Agile, DevOps, etc. They are crucial yet well known to experienced development teams, so we don’t focus on them in this article. Here we share tips dedicated to teams working on app delivery for automotive.

Containerize your vehicle

One of the things we’ve learned collaborating with Porsche is that vehicles are equipped with ECUs and installing software on them isn’t easy. However, Kubernetes helps to mitigate that challenge, as we can mock the target ECU with a Docker image running a specialized operating system and install software directly in it. That’s a good approach to creating an integration environment that shortens the feedback loop and helps deliver software faster and better.

Asynchronous API

In the IoT ecosystem, you can’t rely too much on your connection with edge devices. There are a lot of connectivity challenges, for example, weak cellular coverage. You can’t guarantee when your command to the car will be delivered, or whether the car will respond in milliseconds or even at all. One of the best patterns here is to provide an asynchronous API. It doesn’t matter on which layer you’re building your software, whether it’s a connector between vehicle and cloud or a system communicating with the vehicle’s API provider. An asynchronous API allows you to limit your resource consumption and avoid timeouts that leave systems in an unknown state.

Let’s take a very simple example of a mobile application for locking the car remotely.

Synchronous API scenario

- A customer presses a button on the application to lock the car.

- The request is sent and is waiting for a response.

- The request needs to be delegated to the car which may take some time.

- The backend component crashes and starts without any knowledge about the previous request.

- The application gets a timeout.

- What now? Is the car locked? What should be displayed to the end-user?

Asynchronous API scenario

- The customer presses a button on the application to lock the car.

- The request is sent and completes immediately.

- The request needs to be delegated to the car which may take some time.

- The backend component crashes and starts without any knowledge about the previous request.

- The car sends a request with the command result through the backend to the application.

- Application displays: “Car is locked.”

With an asynchronous API, there’s always a way to resend the response. With a synchronous API, once you lose the connection, the system doesn’t know out of the box where to resend the response. As you can see, the asynchronous pattern handles this case well.
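The asynchronous scenario above can be sketched as follows. This is an illustration under stated assumptions, not a production design: `CommandService`, its method names, and the plain dictionary standing in for durable storage are all hypothetical.

```python
import uuid


class CommandService:
    """Accepts commands immediately and correlates results later by ID."""

    def __init__(self, store: dict):
        # `store` stands in for durable storage (a database or queue) that
        # survives a restart of the backend component.
        self.store = store

    def submit(self, vehicle_id: str, action: str) -> str:
        """Record the command and return its ID without waiting for the car."""
        command_id = str(uuid.uuid4())
        self.store[command_id] = {
            "vehicle_id": vehicle_id,
            "action": action,
            "status": "PENDING",
        }
        return command_id

    def on_vehicle_result(self, command_id: str, succeeded: bool) -> None:
        """Called when the car reports the outcome, possibly much later."""
        self.store[command_id]["status"] = "DONE" if succeeded else "FAILED"

    def status(self, command_id: str) -> str:
        """Return the current status so the app can display it."""
        return self.store[command_id]["status"]
```

Because the command ID and its state live outside the process, a new `CommandService` instance created after a crash can still match the car's late response to the original request.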

Digital Twin

A Digital Twin is a virtual model of a process, a product, or a service; in the case of automotive, a digital cockpit of a car. This pattern helps to ensure the integrity of data and simplifies the development of new systems through its abstraction over the vehicle. The concept is based on storing the actual state of the vehicle in the cloud and constantly updating it based on data sent from the car. Every feature requiring some property of the vehicle should be integrated with the Digital Twin to limit direct integrations with the car and improve the execution time of operations.

Implementing a Digital Twin may be tricky though, as it all depends on the vehicle manufacturer and the API it provides. Sometimes the API doesn’t expose enough properties or doesn’t provide real-time updates. In such cases, it may even be impossible to implement this pattern.
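A very small sketch of the idea, assuming the car reports individual properties with a timestamp. The class name, the property names, and the 60-second freshness window are assumptions chosen for illustration.

```python
import time
from typing import Optional


class DigitalTwin:
    """Cloud-side copy of the last known vehicle properties."""

    def __init__(self, max_age_s: float = 60.0):
        self.max_age_s = max_age_s
        self._properties = {}  # name -> (value, timestamp of the update)

    def apply_update(self, name: str, value, reported_at: float) -> None:
        """Store a property reported by the car, ignoring out-of-order data."""
        current = self._properties.get(name)
        if current is None or reported_at >= current[1]:
            self._properties[name] = (value, reported_at)

    def read(self, name: str, now: Optional[float] = None):
        """Return (value, is_fresh); value is None if never reported."""
        now = time.time() if now is None else now
        if name not in self._properties:
            return None, False
        value, reported_at = self._properties[name]
        return value, (now - reported_at) <= self.max_age_s
```

Returning a freshness flag together with the value lets a feature decide whether to show the stored range or fuel level, or to mark it as possibly outdated.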

Software for Connected Cars - Summary

We believe that the future will look more futuristic than we could have ever imagined. Autonomous cars, smart cars, smart homes: every device tries to make our lives easier. It’s not known when and how these solutions will fully utilize Artificial Intelligence to make this experience even better. Everything is becoming connected as the number of IoT devices grows, which opens up enormous possibilities.

The automotive industry is currently transforming, and it isn’t only focusing on the driving experience anymore. There is a serious focus on connected mobility and other customer-oriented services to enhance our daily routines and habits. However, as software providers, we should keep in mind that automotive is a mature industry. The first connected car solutions were built years ago, and it’s challenging to integrate with them. These best practices should help focus on customer experience. Unreliable systems won’t encourage anyone to use them, and bad reviews can easily destroy a brilliant idea.

The automotive industry is experiencing a challenging transformation. We can notice these changes with every new model of a car and with every new service released. However, to keep up with the pace of the changing world, the industry needs modern technologies and reliable solutions, such as Kubernetes. On top of that, it needs cloud-native applications: software created with best practices by experienced engineers who take a customer-first approach.

ASP.NET Core CI/CD on Azure Pipelines with Kubernetes and Helm

Due to the high entry threshold, it is not that easy to start a journey with Cloud Native. Developing apps focused on reliability and performance while meeting high SLAs can be challenging. Fortunately, there are tools like Istio which simplify our lives. In this article, we guide you through the steps needed to create CI/CD with Azure Pipelines for deploying microservices to Kubernetes using Helm charts. This example is a good starting point for preparing your development process. After this tutorial, you should have a basic idea of how Cloud Native apps should be developed and deployed.

Technology stack

- .NET Core 3.0 (preview)

- Kubernetes

- Helm

- Istio

- Docker

- Azure DevOps

Prerequisites

You need a Kubernetes cluster, a free Azure DevOps account, and a Docker registry. Also, it would be useful to have the kubectl and gcloud CLIs installed on your machine. Regarding the Kubernetes cluster, we will be using Google Kubernetes Engine from Google Cloud Platform, but you can use a different cloud provider based on your preferences. On GCP you can create a free account and create a Kubernetes cluster with Istio enabled (the Enable Istio checkbox). We suggest using a cluster with 3 standard nodes.

Connecting the cluster with Azure Pipelines

Once we have the cluster ready, we have to use kubectl to prepare the service account that Azure Pipelines needs in order to authenticate. First, authenticate yourself by including the necessary settings in kubeconfig. All cloud providers will guide you through this step. Then run the following commands:

    kubectl create serviceaccount azure-pipelines-deploy
    kubectl create clusterrolebinding azure-pipelines-deploy --clusterrole=cluster-admin --serviceaccount=default:azure-pipelines-deploy
    kubectl get secret $(kubectl get secrets -o custom-columns=":metadata.name" | grep azure-pipelines-deploy-token) -o yaml

We are creating a service account to which a cluster role is assigned. The cluster-admin role will allow us to use Helm without restrictions. If you are interested, you can read more about RBAC on the Kubernetes website. The last command retrieves the secret as YAML, which is needed to define the connection; save that output somewhere.

Now, in Azure DevOps, go to Project Settings -> Service Connections and add a new Kubernetes service connection. Choose service account for authentication and paste the YAML copied from the command executed in the previous step.

One more thing we need here is the cluster IP. It should be available on the cluster settings page, or it can be retrieved via the command line. For GCP, the command should be similar to this (replace the placeholders with your cluster name and zone):

    gcloud container clusters describe <CLUSTER_NAME> --format="value(endpoint)" --zone <ZONE>

Another service connection we have to define is for the Docker registry. For the sake of simplicity, we will use Docker Hub, where all you need is to create an account (if you don’t have one). Then just supply whatever is needed in the form, and we can carry on with the application part.

Preparing an application

One of the things we should take into account while implementing apps in the Cloud is the Twelve-Factor methodology. We are not going to describe the factors one by one, since they are explained well enough here, but a few of them will be mentioned throughout the article.

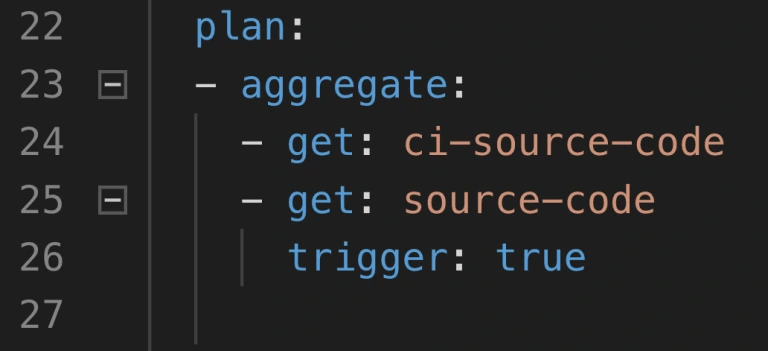

For tutorial purposes, we’ve prepared a sample ASP.NET Core Web Application containing a single controller and a database context. It also contains a simple Dockerfile and Helm charts. You can clone/fork the sample project from here. First, push it to a git repository (we will use Azure DevOps), because we will need it for CI. You can now add a new pipeline, choosing any of the available YAML definitions. Here we will define our build pipeline (CI), which looks like this:

    trigger:
    - master

    pool:
      vmImage: 'ubuntu-latest'

    variables:
      buildConfiguration: 'Release'

    steps:
    - task: Docker@2
      inputs:
        containerRegistry: 'dockerRegistry'
        repository: '$(dockerRegistry)/$(name)'
        command: 'buildAndPush'
        Dockerfile: '**/Dockerfile'
    - task: PublishBuildArtifacts@1
      inputs:
        PathtoPublish: '$(Build.SourcesDirectory)/charts'
        ArtifactName: 'charts'
        publishLocation: 'Container'

This definition builds a Docker image and publishes it to the predefined Docker registry. Two custom variables are used: dockerRegistry (for Docker Hub, replace it with your username) and name, which is just the image name (exampleApp in our case). The second task publishes an artifact with the Helm chart. These two (the Docker image and the Helm chart) will be used by the deployment pipeline.

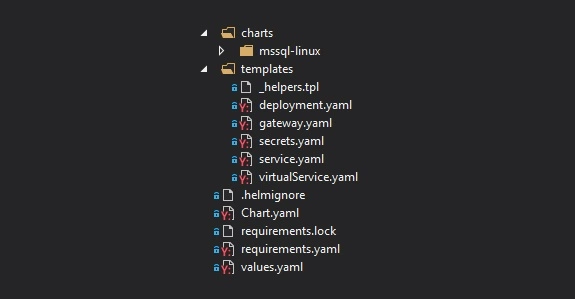

Helm charts

First, take a look at the file structure of our chart. In the main folder, we have Chart.yaml, which keeps chart metadata, requirements.yaml, in which we can specify dependencies, and values.yaml, which provides default configuration values. In the templates folder, we can find all Kubernetes objects that will be created along with the chart deployment. Then we have the nested charts folder, which is a collection of charts added as dependencies in requirements.yaml. All of them have the same file structure.

Let’s start with a focus on deployment.yaml, a definition of the Deployment controller, which provides declarative updates for Pods and ReplicaSets. It is parameterized with Helm templates, so you will see a lot of {{ template [...] }} in there. The definition of this Deployment itself is fairly standard, but we are adding a reference to the secret holding the SQL Server database password. We are hardcoding the ‘-mssql-linux-secret’ part because, at the time of writing this article, Helm doesn’t provide a straightforward way to access sub-chart properties.

    env:
    - name: sa_password
      valueFrom:
        secretKeyRef:
          name: {{ template "exampleapp.name" $root }}-mssql-linux-secret
          key: sapassword

As we mentioned previously, we have the SQL Server chart added as a dependency. Its definition is pretty simple. We have to define the name of the dependency, which will match the folder name in the charts subfolder, and the version we want to use.

    dependencies:
    - name: mssql-linux
      repository: https://kubernetes-charts.storage.googleapis.com
      version: 0.8.0
    [...]

For the mssql chart, there is one change that has to be applied in secret.yaml. Normally, this secret is recreated on each deployment (helm upgrade) and a new sapassword is generated, which is not what we want. The simplest way to adjust that is by modifying the metadata and adding a pre-install hook. This guarantees that the secret is created just once, when the release is installed.

    metadata:
      annotations:
        "helm.sh/hook": "pre-install"

A deployment pipeline

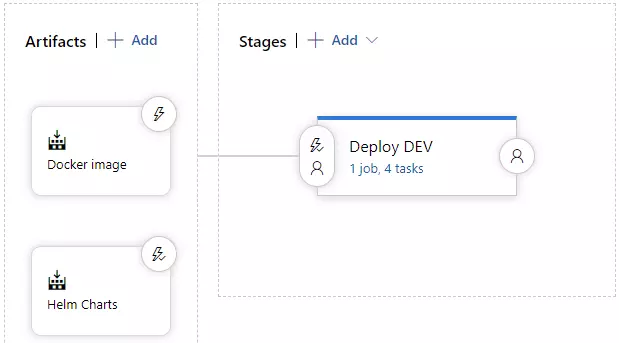

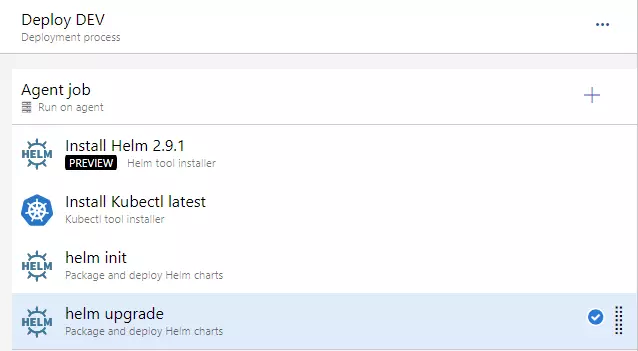

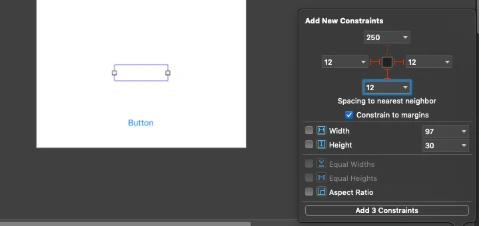

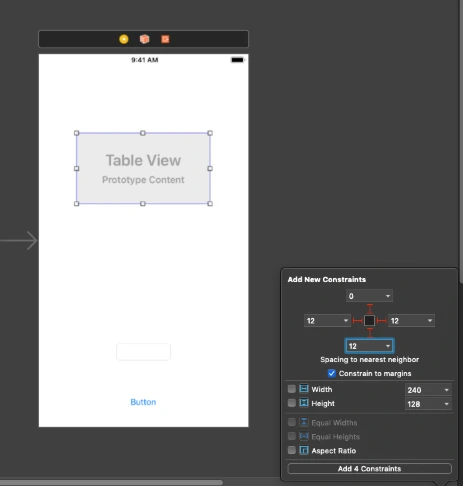

Let’s focus on deployment now. We will be using Helm to install and upgrade everything that will be needed in Kubernetes. Go to the Releases pipelines in Azure DevOps, where we will configure continuous delivery. You have to add two artifacts, one for the Docker image and a second for the charts artifact. It should look like the accompanying screenshot.

In the stages part, we could add a few more environments, which would be deployed in a similar manner but to a different cluster. As you can see, the Deploy DEV stage is simply responsible for running a helm upgrade command. Before that, we need to install helm and kubectl and run the helm init command.

For the helm upgrade task, we need to adjust a few things.

- set Chart Path, where you can browse into the Helm charts artifact (it should look like: “$(System.DefaultWorkingDirectory)/Helm charts/charts”)

- paste “image.tag=$(Build.BuildNumber)” into Set Values

- and check Install if release not present, or add --install as an argument. This will behave like helm install if the release doesn’t exist (i.e. on a clean cluster)
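To make the effect of these settings concrete, here is a hedged sketch of the command line they correspond to, built as an argument list in Python. The release name and chart path used in the usage note are hypothetical; `--install` and `--set` are standard `helm upgrade` flags.

```python
def helm_upgrade_args(release: str, chart_path: str, image_tag: str) -> list:
    """Build the argument list for `helm upgrade` with install-if-missing."""
    return [
        "helm", "upgrade", release, chart_path,
        # Behave like `helm install` when the release does not exist yet.
        "--install",
        # Override the chart's default image tag with the build number.
        "--set", f"image.tag={image_tag}",
    ]
```

For example, `helm_upgrade_args("exampleapp", "charts/exampleapp", "20191201.1")` yields a list that can be passed to `subprocess.run` on a machine where helm is installed and configured for the target cluster.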

At this point, we should be able to deploy the application: you can create a release and run the deployment. You should see green output :).

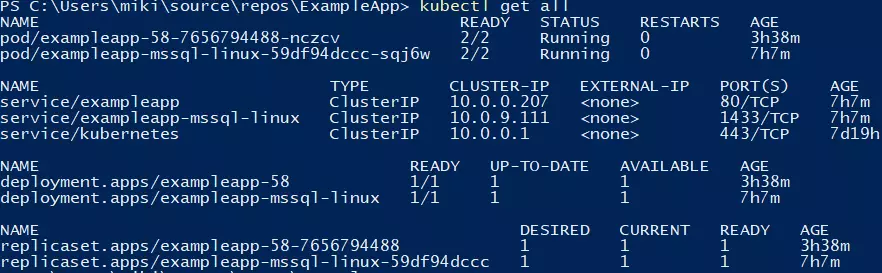

You can verify if the deployment went fine by running a kubectl get all command.

Making use of basic Istio components

Istio is a great tool which simplifies service management. It is responsible for handling things like load balancing, traffic behavior, metrics and logs, and security. Istio leverages Kubernetes sidecar containers, which are added to the pods of our applications. You have to enable this feature by applying an appropriate label to the namespace.

kubectl label namespace default istio-injection=enabled

All pods created from now on will have an additional container, which is called a sidecar container in Kubernetes terms. That’s a useful feature, because we don’t have to modify our application.

The two Istio objects we are using, both part of the Helm chart, are Gateway and VirtualService. For the first one, we will quote the Istio definition, because it’s simple and accurate: “Gateway describes a load balancer operating at the edge of the mesh receiving incoming or outgoing HTTP/TCP connections”. That object is attached to the LoadBalancer object; we will use the one created by Istio by default. After the application is deployed, you will be able to access it using the LoadBalancer external IP, which you can retrieve with this command:

kubectl get service/istio-ingressgateway -n istio-system

You can retrieve the external IP from the output and verify that the http://<EXTERNAL_IP>/api/examples URL works fine.
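If you prefer to script this check, the same service can be requested as JSON by adding `-o json` to the command, and the address read from `status.loadBalancer.ingress`. The helper below is a small sketch; the function name is our own, and it only parses JSON you pass in rather than calling kubectl itself.

```python
import json


def external_ip(service_json: str):
    """Return the first load balancer IP from a Service manifest, or None."""
    service = json.loads(service_json)
    ingress = service.get("status", {}).get("loadBalancer", {}).get("ingress") or []
    for entry in ingress:
        # Some providers report a hostname instead of an IP.
        if "ip" in entry:
            return entry["ip"]
    return None
```

A `None` result usually means the load balancer has not been provisioned yet, so the check is worth retrying after a short wait.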

Summary

In this article, we have created a basic CI/CD pipeline which deploys a single service into a Kubernetes cluster with the help of Helm. Further adjustments can include different types of deployment, publishing test coverage from CI, or adding more services to the mesh and leveraging additional Istio features. We hope you were able to complete the tutorial without any issues. Follow our blog for more in-depth articles on these topics in the future.

The state of Kubernetes - what upcoming months will bring for the container orchestration

Kubernetes has become a must-have container orchestration platform for every company that aims to gain a competitive advantage by delivering high-quality software at a rapid pace. What’s the state of Kubernetes at the beginning of 2020? Is there room for improvement? Here is a list of trends that should shape the first months of the upcoming year.

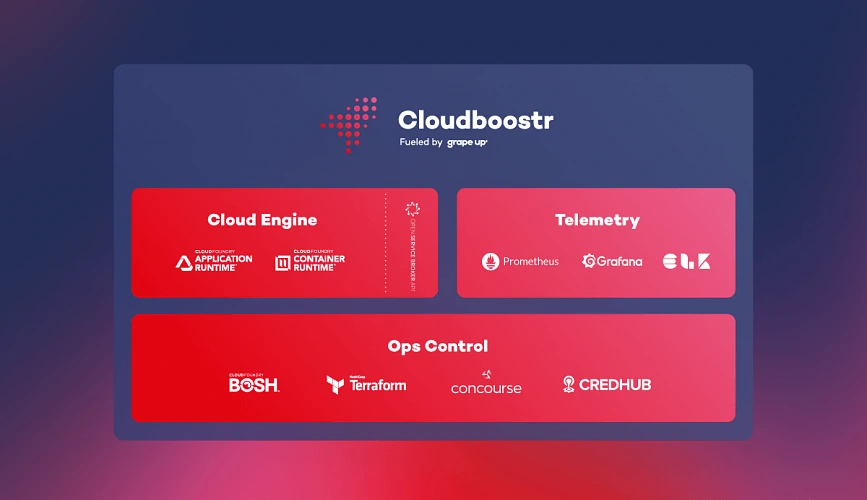

As a team that provides its own Multicloud Enterprise Kubernetes platform and empowers numerous companies in adopting K8s, we follow all the news that helps us prepare for the upcoming trends in using this cloud-native platform. And the best places to learn what’s new and what’s coming are the KubeCon + CloudNativeCon conferences.

A few weeks ago, San Diego hosted KubeCon + CloudNativeCon North America, gathering 12 thousand cloud-native enthusiasts. A 50% increase in the number of attendees compared to the previous edition shows the scale of Kubernetes’ growth in popularity. During the event, we had a chance to hear about new trends and discuss further opportunities with industry experts. Most of the news announced in San Diego will influence the upcoming months in the cloud-native world. Below, we focus on the most important ones.

Kubernetes is a must for the most competitive brands

What makes KubeCon so likable? Access to Kubernetes experts, networking with an amazing community of people gathered around CNCF, a chance to learn the trends before they become mainstream? For sure, but what else makes it so special? The answer lies in the hottest brands that are joining the cloud-native nation these days: Pinterest, Home Depot, Walmart, Tinder, and many more.

It’s obvious when tech companies present how they build their advantage using the latest technologies, but it becomes more intriguing when you have an opportunity to get to know how companies like Adidas, Nike or Tinder (yes, indeed) are using Kubernetes to provide their customers/users with extraordinary value.

As these examples show, we live in a software-driven world, where the quality of delivered apps is crucial to staying relevant, regardless of the industry.

Enterprises need container orchestration to sustain their market share

The conference confirmed that Kubernetes is a standard in container orchestration and one of the key elements contributing to the successful implementation of a cloud-first strategy for enterprises.

But why should the largest companies be interested in adopting the newest technologies? Because their industries are being constantly disrupted by fresh startups utilizing agility and cutting-edge tech solutions. The only way to sustain a position is by evolving, and the way to achieve that is to adopt a cloud-native strategy and implement Kubernetes. As Jonathan Smart once said: “You’re never done with improving and learning.”

Automate what can be automated

As more and more teams move Kubernetes to production, a large number of companies are working on solutions that help streamline and automate certain processes. That drives the growing market of tools that build on Kubernetes and enrich its usage.

For example, Helm, one of the key deployment tools in the cloud-native toolbox used by administrators, simplifies and improves operations in its latest version by getting rid of some dependencies, such as Tiller, a server-side component running in the Kubernetes cluster.

Kubernetes-as-a-service and demand for Kubernetes experts

During this year’s KubeCon, many vendors from a range of domains presented complete solutions for Kubernetes that accelerate container orchestration. At previous events, we met vendors providing storage, networking, and security components for Kubernetes. This evolution reflects the growth of the ecosystem built around the platform. Such an extensive offering makes it easier for teams and organizations migrating to cloud-native to find a compromise between building and buying components and solutions.

Rancher announced a solution that may be an example of an interesting Kubernetes-as-a-service option. The company collaborated with ARM to design a highly optimized version of Kubernetes for the edge, packaged as a single binary with a small footprint to reduce the dependencies and steps needed to install and run K8s in resource-constrained environments (e.g. IoT or edge devices for ITOps and DevOps teams). By making K3s (a lightweight distribution built for small-footprint workloads) available and providing the beta release of Rio, their new application deployment engine for Kubernetes, Rancher delivers an integrated deployment experience from operations to the pipeline.

Kubernetes-as-a-service offerings on the market are gaining strength. The huge number of Kubernetes use cases entails another very important trend. Companies are looking for talent in this field more than ever. Many companies have used conferences to meet with experts. Therefore, the number of Kubernetes jobs has also increased. The demand for experts on the subject is huge.

Multicloud is here to stay

Are hybrid solutions becoming a standard? Many cloud providers have claimed to be the best providers for multicloud, and we observe that the approach is becoming more popular. Despite some doubts (regarding its complexity, security, regulatory compliance, or performance), enterprises are dealing well with implementing a multicloud strategy.

The world’s top companies are moving to multicloud, as this approach empowers them to gain exceptional agility and significant cost savings thanks to the possibility of separating their workloads into different environments and making decisions based on individual goals and specific requirements.

It is also a good strategy for companies working only with a private cloud. Usually, that’s the case because they store sensitive data. As numerous case studies show, these businesses can be re-architected into multicloud solutions in which sensitive data is still stored securely on-premises, while other workloads are moved into the public cloud, which makes them easily scalable and easier to maintain.

Kubernetes is everywhere, even in the car….

During KubeCon, Rafał Kowalski, our colleague from Grape Up, gave a presentation about running Kubernetes clusters in the car: "Kubernetes in Your 4x4 - Continuous Deployment Direct to the Car". Rafał showed how to use Kubernetes, KubeEdge, k3s, Jenkins, and RSocket to build continuous deployment pipelines which ship software directly to the car and deal with rollbacks and connectivity issues. You can watch the entire video here:

https://www.youtube.com/watch?v=zmuOxFp3CAk&feature=youtu.be

…. and can be used in various devices

But these are not all of the possibilities; other devices, such as drones or any IoT devices, can also utilize containers. The need for increased automation of cluster management and the ability to quickly rebuild clusters from scratch were the conclusions that emerged from these use cases.

The growing number of companies using Kubernetes and the continued development of tooling show that there are still open needs in terms of simplicity and scalability of tools for operations, e.g. security, data management, and programming tools, and continued work in this area should be expected.

“Kubernetes has established itself as the de facto standard for container orchestration,”- these are the most frequently repeated words. It’s good to observe the development of the ecosystem around Kubernetes that strives to provide more reliable and cheaper experiences for enterprises that want to extend their strategic initiatives to the limit.

Reactive service to service communication with RSocket – load balancing & resumability

This article is the second in a mini-series that will help you get familiar with RSocket, a new binary protocol which may revolutionize machine-to-machine communication in distributed systems. In the following paragraphs, we discuss the load balancing problem in the cloud and present the resumability feature, which helps to deal with network issues, especially in IoT systems.

- If you are not familiar with RSocket basics, please see the previous article available here

- Please note that the code examples presented in the article are available on GitHub

High availability & load balancing as a crucial part of enterprise-grade systems

Application availability and reliability are crucial in many business areas, like banking and insurance. In these demanding industries, services have to be operational 24/7, even during high traffic, periods of increased network latency, or natural disasters. To ensure that the software is always available to end-users, it is usually deployed redundantly, across multiple availability zones.

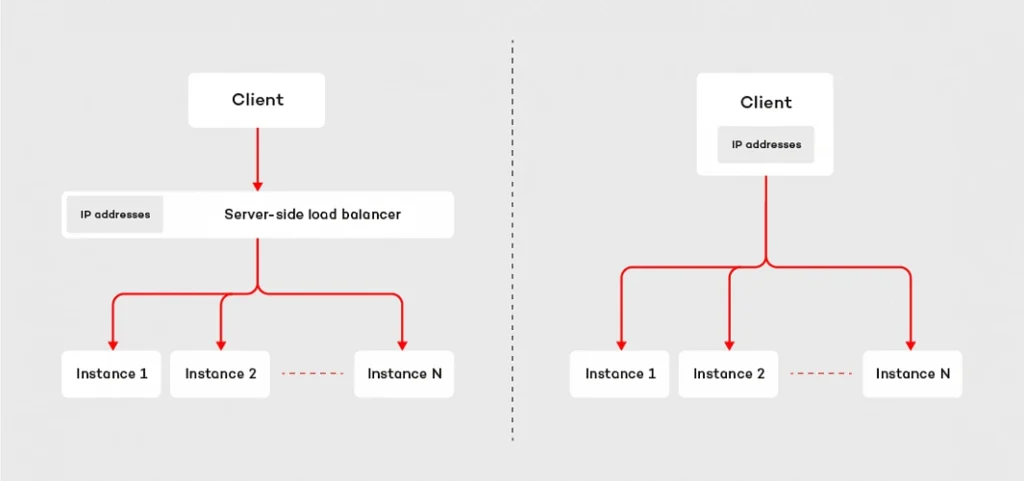

In such a scenario, at least two instances of each microservice are deployed in at least two availability zones. This technique makes our system more resilient and increases its capacity: multiple instances of the microservices are able to handle a significantly higher load. So where is the catch? The redundancy introduces extra complexity. As engineers, we have to ensure that the incoming traffic is spread across all available instances. There are two major techniques which address this problem: server load balancing and client load balancing.

The first approach is based on the assumption that the requester does not know the IP addresses of the responders. Instead, the requester communicates with the load balancer, which is responsible for spreading the requests across the microservices connected to it. This design is fairly easy to adopt in the cloud era. IaaS providers usually have built-in, reliable solutions, like Elastic Load Balancer available in Amazon Web Services. Moreover, such a design makes it possible to develop routing strategies more sophisticated than plain round robin (e.g. adaptive load balancing or chained failover). The major drawback of this technique is that we have to configure and deploy extra resources, which may be painful if our system consists of hundreds of microservices. Furthermore, it may affect the latency – each request makes an extra “network hop” through the load balancer.

The second technique inverts the relation. Instead of a central point used to connect to responders, the requester knows the IP addresses of each and every instance of the given microservice. Having such knowledge, the client can choose the responder instance to which it sends the request or with which it opens the connection. This strategy does not require any extra resources, but we have to ensure that the requester has the IP addresses of all instances of the responder (see how to deal with it using the service discovery pattern). The main benefit of the client load balancing pattern is its performance – by removing the extra “network hop”, we may significantly decrease the latency. This is one of the key reasons why RSocket implements the client load balancing pattern.
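To make the client-side idea concrete, here is a minimal sketch in plain Java. It assumes the requester already holds a fixed list of responder addresses (in practice they would come from service discovery). The class name RoundRobinClientBalancer and the addresses are hypothetical, and plain round robin is used only for illustration; it is not RSocket’s own selection logic.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical helper, not part of RSocket: the requester knows every
// responder address and picks the target itself, one per request.
final class RoundRobinClientBalancer {
    private final List<String> instances;
    private final AtomicInteger counter = new AtomicInteger();

    RoundRobinClientBalancer(List<String> instances) {
        if (instances.isEmpty()) {
            throw new IllegalArgumentException("at least one instance is required");
        }
        // Defensive copy so later changes to the caller's list do not affect us.
        this.instances = Collections.unmodifiableList(new ArrayList<>(instances));
    }

    // Returns the address the next request should be sent to.
    String next() {
        // floorMod keeps the index valid even after the counter overflows.
        int index = Math.floorMod(counter.getAndIncrement(), instances.size());
        return instances.get(index);
    }

    public static void main(String[] args) {
        RoundRobinClientBalancer balancer = new RoundRobinClientBalancer(
                Arrays.asList("10.0.0.1:7000", "10.0.0.2:7000", "10.0.0.3:7000"));
        for (int i = 0; i < 4; i++) {
            System.out.println("request " + i + " -> " + balancer.next());
        }
    }
}
```

No load balancer sits between the peers here: the choice of target is a local lookup, which is where the saved “network hop” comes from.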

Client load balancing in RSocket

At the code level, the implementation of client load balancing in RSocket is pretty straightforward. The mechanism relies on the LoadBalancedRSocketMono object, which works as a bag of available RSocket instances provided by RSocket suppliers. To access the RSockets, we have to subscribe to the LoadBalancedRSocketMono, whose onNext signal emits a fully-fledged RSocket instance. Moreover, it calculates statistics for each RSocket, so that it is able to estimate the load of each instance and, based on that, choose the one with the best performance at the given point in time.

The algorithm takes into account multiple parameters, like latency, the number of maintained connections and the number of pending requests. The health of each RSocket is reflected by the availability parameter, which takes values from 0 to 1, where 0 indicates that the given instance cannot handle any requests and 1 is assigned to a fully operational socket. The code snippet below shows a very basic example of the load-balanced RSocket, which connects to three different instances of the responder and executes 100 requests. Each time, it picks an RSocket from the LoadBalancedRSocketMono object.

@Slf4j

public class LoadBalancedClient {

static final int[] PORTS = new int[]{7000, 7001, 7002};

public static void main(String[] args) {

List<RSocketSupplier> rsocketSuppliers = Arrays.stream(PORTS)

.mapToObj(port -> new RSocketSupplier(() -> RSocketFactory.connect()

.transport(TcpClientTransport.create(HOST, port))

.start()))

.collect(Collectors.toList());

LoadBalancedRSocketMono balancer = LoadBalancedRSocketMono.create((Publisher<List<RSocketSupplier>>) s -> {

s.onNext(rsocketSuppliers);

s.onComplete();

});

Flux.range(0, 100)

.flatMap(i -> balancer)

.doOnNext(rSocket -> rSocket.requestResponse(DefaultPayload.create("test-request")).block())

.blockLast();

}

}

It is worth noting that the client load balancer in RSocket deals with dead connections as well. If any of the RSocket instances registered in the LoadBalancedRSocketMono stops responding, the mechanism will automatically try to reconnect. By default, it will make 5 attempts within 25 seconds. If it does not succeed, the given RSocket will be removed from the pool of available connections. Such a design combines the advantages of server-side load balancing with the low latency and reduced number of “network hops” of client load balancing.
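The selection and eviction behaviour described above can be sketched in plain Java. This is an illustration under simplifying assumptions, not the algorithm implemented by LoadBalancedRSocketMono: the AvailabilityPool and Instance names are hypothetical, only availability and pending requests are considered, and the 25-second time window is left out.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;

// Illustrative sketch only; NOT the algorithm used by LoadBalancedRSocketMono.
final class AvailabilityPool {
    // Default number of reconnect attempts quoted in the article.
    static final int MAX_RECONNECT_ATTEMPTS = 5;

    // Hypothetical per-connection statistics.
    static final class Instance {
        final String name;
        final double availability; // 0.0 = cannot handle requests, 1.0 = fully operational
        final int pendingRequests;
        int failedReconnects;

        Instance(String name, double availability, int pendingRequests) {
            this.name = name;
            this.availability = availability;
            this.pendingRequests = pendingRequests;
        }
    }

    private final List<Instance> instances = new ArrayList<>();

    void register(Instance instance) {
        instances.add(instance);
    }

    // Prefer the highest availability; break ties with the fewest pending requests.
    Optional<Instance> select() {
        Instance best = null;
        for (Instance candidate : instances) {
            if (candidate.availability <= 0.0) {
                continue; // skip instances that cannot handle any request
            }
            if (best == null
                    || candidate.availability > best.availability
                    || (candidate.availability == best.availability
                        && candidate.pendingRequests < best.pendingRequests)) {
                best = candidate;
            }
        }
        return Optional.ofNullable(best);
    }

    // Records a failed reconnect; returns true when the instance was evicted.
    boolean reportFailedReconnect(Instance instance) {
        instance.failedReconnects++;
        if (instance.failedReconnects >= MAX_RECONNECT_ATTEMPTS) {
            return instances.remove(instance);
        }
        return false;
    }

    public static void main(String[] args) {
        AvailabilityPool pool = new AvailabilityPool();
        Instance busy = new Instance("responder-7000", 1.0, 5);
        Instance idle = new Instance("responder-7001", 1.0, 2);
        pool.register(busy);
        pool.register(idle);
        pool.register(new Instance("responder-7002", 0.0, 0));

        System.out.println("selected: " + pool.select().get().name);
        for (int attempt = 1; attempt <= MAX_RECONNECT_ATTEMPTS; attempt++) {
            pool.reportFailedReconnect(idle); // evicted on the fifth failure
        }
        System.out.println("after eviction: " + pool.select().get().name);
    }
}
```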

Dead connections & resumability mechanism

The question which may arise in the context of dead connections is: what will happen if we have only a single instance of the responder and the connection drops due to network issues? Is there anything we can do about it? Fortunately, RSocket has a built-in resumability mechanism.

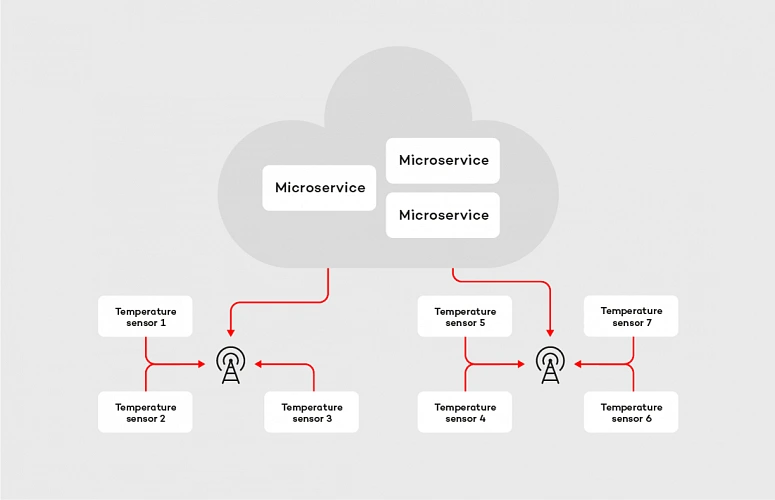

To clarify the concept, let’s consider the following example. We are building an IoT platform which connects to multiple temperature sensors located in different places. Most of them are located far from the nearest buildings and internet connection sources. Therefore, the devices connect to cloud services using GPRS. The business requirement for our system is that we need to collect temperature readings every second, in real time, and we cannot lose any data.

In the case of machine-to-machine communication within the cloud, streaming data in real time is not a big deal, but if we consider IoT devices located in areas without access to a stable, reliable internet connection, the problem becomes more complex. We can easily identify two major issues we may face in such a system: network latency and connection stability. From a software perspective, there is not much we can do about the first one, but we can try to deal with the latter. Let’s tackle the problem with RSocket, starting with picking the proper interaction model. The most suitable in this case is the request stream method, where the microservice deployed in the cloud is the requester and the temperature sensor is the responder. After choosing the interaction model, we apply the resumability mechanism. In RSocket, we do it by invoking the resume() method on the RSocketFactory, as shown in the examples below:

@Slf4j

public class ResumableRequester {

private static final int CLIENT_PORT = 7001;

public static void main(String[] args) {

RSocket socket = RSocketFactory.connect()

.resume()

.resumeSessionDuration(RESUME_SESSION_DURATION)

.transport(TcpClientTransport.create(HOST, CLIENT_PORT))

.start()

.block();

socket.requestStream(DefaultPayload.create("dummy"))

.map(payload -> {

log.info("Received data: [{}]", payload.getDataUtf8());

return payload;

})

.blockLast();

}

}

@Slf4j

public class ResumableResponder {

private static final int SERVER_PORT = 7000;

static final String HOST = "localhost";

static final Duration RESUME_SESSION_DURATION = Duration.ofSeconds(60);

public static void main(String[] args) throws InterruptedException {

RSocketFactory.receive()

.resume()

.resumeSessionDuration(RESUME_SESSION_DURATION)

.acceptor((setup, sendingSocket) -> Mono.just(new AbstractRSocket() {

@Override

public Flux<Payload> requestStream(Payload payload) {

log.info("Received 'requestStream' request with payload: [{}]", payload.getDataUtf8());

return Flux.interval(Duration.ofMillis(1000))

.map(t -> DefaultPayload.create(t.toString()));

}

}))

.transport(TcpServerTransport.create(HOST, SERVER_PORT))

.start()

.subscribe();

log.info("Server running");

Thread.currentThread().join();

}

}

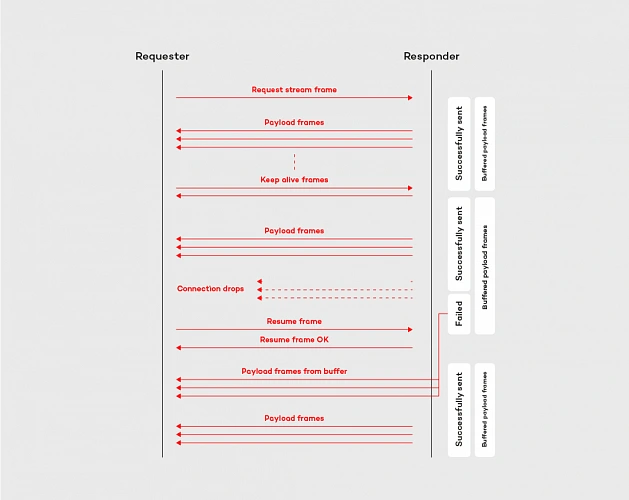

The mechanism works similarly on the requester and the responder side, and it is based on a few components. First of all, there is a ResumableFramesStore, which works as a buffer for the frames. By default, it stores them in memory, but we can easily adjust it to our needs by implementing the ResumableFramesStore interface (e.g. to store the frames in a distributed cache, like Redis). The store saves the data emitted between keep alive frames, which are sent back and forth periodically and indicate whether the connection between the peers is stable. Moreover, the keep alive frame contains a token which determines the last received position for the requester and the responder. When a peer wants to resume the connection, it sends the resume frame with an implied position. The implied position is calculated from the last received position (the same value we have seen in the keep alive frame) plus the length of the frames received from that moment. This algorithm is applied to both parties of the communication; in the resume frame it is reflected by the last received server position and first client available position tokens. The whole flow of the resume operation is shown in the accompanying diagram.

By adopting the resumability mechanism built into the RSocket protocol, we can reduce the impact of network issues with relatively low effort. As shown in the example above, resumability might be extremely useful in data streaming applications, especially in the case of device-to-cloud communication.
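The implied-position arithmetic described earlier can be sketched in a few lines of plain Java. The sketch follows the wording of this article rather than the protocol specification, and the ResumePositionTracker class and its method names are hypothetical; they are not part of the RSocket API.

```java
// Hypothetical bookkeeping class; the names are illustrative only.
final class ResumePositionTracker {
    // Position carried by the most recent keep alive frame.
    private long lastReceivedPosition;
    // Total length of the frames received after that keep alive frame.
    private long receivedSinceKeepAlive;

    // Called for every frame received from the peer.
    void onFrameReceived(int frameLength) {
        if (frameLength < 0) {
            throw new IllegalArgumentException("frame length must not be negative");
        }
        receivedSinceKeepAlive += frameLength;
    }

    // Called when a keep alive frame reports a new last received position.
    void onKeepAlive(long reportedPosition) {
        lastReceivedPosition = reportedPosition;
        receivedSinceKeepAlive = 0;
    }

    // Value a peer would put into the resume frame after a connection drop.
    long impliedPosition() {
        return lastReceivedPosition + receivedSinceKeepAlive;
    }

    public static void main(String[] args) {
        ResumePositionTracker tracker = new ResumePositionTracker();
        tracker.onKeepAlive(100);    // keep alive frame reports position 100
        tracker.onFrameReceived(20); // two more frames arrive before the drop
        tracker.onFrameReceived(5);
        System.out.println("implied position: " + tracker.impliedPosition()); // 100 + 20 + 5 = 125
    }
}
```

With this value, the other side knows which of the buffered frames still have to be sent again once the connection is back.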

Summary

In this article, we discussed more advanced features of the RSocket protocol, which help reduce the impact of the network on the operation of the system. We covered the implementation of the client load balancing pattern and the resumability mechanism. These features, combined with the robust interaction model, constitute the core of the protocol.

In the last article of this mini-series, we will cover the available abstraction layers on top of RSocket.

Painless view controller configuration in Swift

Back in the pre-storyboard era of iOS development, developers had to write hundreds of lines of boilerplate code to manage UIViewController object hierarchies. Back then, some were inventing generic approaches to configuring controllers and the transitions between them. Others were just satisfied with ad-hoc view controller creation and presented the controllers directly from other controllers’ code. But things changed when Apple introduced storyboards in iOS 5. It was a huge step forward in UI design for iOS. Storyboards introduced the ability to visually define app screens and, most importantly, the transitions between them (called segues) in a single file. Storyboard segues make it possible to discard all the boilerplate code related to transitions between view controllers.

Of course, every solution has its advantages and disadvantages. When it comes to storyboards, some may note issues such as hard to resolve merge conflicts, coupling of view controllers, poor reusability etc. Some developers don’t even use storyboards because of such disadvantages. For others the advantages play a more important role. However, the real bottleneck of the storyboards is the initialization of view controllers. In fact, there is no true initialization for the view controllers presented by storyboard segues.

Problems with the view controller configuration

Let’s start with some basics. In Objective-C/Swift, in order to give an object an initial state, the initializer (init()) is called. This call assigns values to the properties of the class. It always happens at the point where the object is created. When subclassing any class, we may provide an initializer, and this is the only proper way. We may also provide such an initializer for a UIViewController subclass. However, when such a controller is created/presented using a storyboard, its creation takes place through a particular initializer – init(coder:). Overriding it in a subclass gives us the ability to initialize properties added by the subclass. However, we don’t have the ability to pass additional arguments to the overridden method. Moreover, even if we had such an ability, it would make no sense. This is because for storyboard-driven view controllers there is no particular point in code which allows us to pass data to the initializer. That is, we cannot catch the moment of creation of such a controller. The creation of view controllers managed by storyboard segues is hidden from the programmer. It happens when the segue to the controller is triggered – either entirely handled by the system (when the triggering action is set up in the storyboard file) or using the performSegue() method.

Apple, however, provides a place where we can pass some data to an already created view controller after the segue is triggered. It’s the prepare(for:sender:) method. From its first parameter (of the UIStoryboardSegue type), we can get the segue’s destination view controller. Because the controller has already been created (initialization is already performed when triggering the segue), the only option for passing the required data is to configure it. This means that after the initialization, but before prepare(for:sender:) is called, the properties of the controller that hold such data should either have no initial value or have fake ones. While the second option is meaningless in most cases, the first one is widely used. Such absence of data means that the corresponding controller’s properties should be of optional types. Let’s take a look at the following sample:

override func prepare(for segue: UIStoryboardSegue, sender: Any?) {

if segue.identifier == "ToSomeViewControllerSegueID",

let controller = segue.destination as? SomeViewController {

controller.someValue = 12345

}

}

This is how the view controller configuring is implemented in most cases when dealing with segues:

- check segue id;

- get the destination view controller from the segue object;

- try to cast it to the type of the expected view controller subclass.

If all conditions are satisfied, we can set values for the properties of the controller that need to be configured. The problem with this approach is that it has too much service code related to verification and data extraction. It may not be visible in simple cases like the one shown above. However, taking into account the fact that each view controller in an application often has transitions to several other view controllers, such service code becomes real boilerplate code we’d like to avoid. Take a look at the following example that generalizes the problem with the prepare(for:sender:) implementation.

override func prepare(for segue: UIStoryboardSegue, sender: Any?) {

if segue.identifier == "ToViewControllerA",

let controller = segue.destination as? ViewControllerA {

// configure View Controller A

} else if segue.identifier == "ToViewControllerB",

let controller = segue.destination as? ViewControllerB {

// configure View Controller B

} else if segue.identifier == "ToViewControllerC",

let controller = segue.destination as? ViewControllerC {

// configure View Controller C

} else ...

...

} else if segue.identifier == "ToViewControllerZ",

let controller = segue.destination as? ViewControllerZ {

// configure View Controller Z

}

}

All those if… else if… blocks make the code hard to read. Moreover, each block is for a different view controller that has to be configured. That is, the more view controllers are presented by this one, the more if… else if… blocks will be added. This, in turn, reveals another problem with such configuration: a single method in a particular controller does all the configuration for every controller we’re going to present.

Solution

Let’s try to find an approach to view controller configuration that may eliminate the outlined problems. We’re limited to the usage of prepare(for:sender:) since it’s the only point where the configuration can be done. So we cannot get rid of the type check of the destination view controller or of the check of the segue identifier. Instead, we’d like to generalize the process of configuration in a way that allows us to have a single type check and a single verification of the identifier. That is, a check against some generalized type of destination view controller and a variable segue identifier rather than enumerating all the possible concrete types/identifiers. For this, we need to somehow pass the information about the type and the segue identifier to the prepare(for:sender:) method. We would like to have something like the following:

override func prepare(for segue: UIStoryboardSegue, sender: Any?) {

if segue.identifier == /* variable segue identifier */,

let viewerController = segue.destination as? /* generalized destination type */ {

// configure viewerController

}

}

In order to have a single configuration code for all the controllers we need two things: unified interface to configure the controller, and a way to get the configuration data for the particular destination controller and segue identifier. Let’s define each part of the solution.

1. Unified interface for view configuration

As defined previously, configuration means setting values to one or more properties of destination view controller. So it’s natural to associate the configuration interface with the destination controller rather than with the one that triggers the segue. Obviously, each destination view controller has a different number of properties of different types to configure.

In order to provide a unified configuration interface, we may implement a method for configuring each controller. We should pass to it the values that will be assigned to the corresponding controller properties. To unify such a method, it should have the same signature in every configured controller. To achieve this, we should wrap the set of passed configuring values into a single object. Then such a method will always have one argument, no matter how many properties should be set. The type of the argument is the type of the wrapping object and is different for each view controller. This means that the view controller should implement a method for configuring and somehow define the type of the argument of the method. This is a perfect task for protocols with associated types. Let’s define the following protocol:

protocol Configurable {

associatedtype ConfigurationType

func configure(with configuration: ConfigurationType)

}

Each view controller that is going to be configured (is configurable) should conform to this protocol by implementing the configure(with:) method and defining a concrete type for ConfigurationType. In the simplest case, where we only have one property that needs to be configured, the ConfigurationType is the type of that property. Otherwise, the ConfigurationType may be defined as a structure or tuple to represent several values. Consider the following examples:

class SomeViewController: UIViewController, Configurable {

var someValue: Int?

var someObject: MyModelType?

…

func configure(with configuration: (value: Int, object: MyModelType)) {

someValue = configuration.value

someObject = configuration.object

}

}

class OtherViewController: UIViewController, Configurable {

var underlyingObject: MyObjectType?

…

func configure(with object: MyObjectType) {

underlyingObject = object

}

}

2. Defining the configuration data for view controller

Now, let’s go back to the controller that is triggering a segue. We’re going to use the configuration protocol we’ve defined. For this, we need to have data to pass to the configure(with:) method. It should look something like the following:

override func prepare(for segue: UIStoryboardSegue, sender: Any?) {

if let segueIdentifier = segue.identifier {

// 1. Get configuration object

// for segue.destination and segueIdentifier

// 2. Pass configuration object to the destination controller

}

}

Let's focus on how we should obtain the configuration object. Each segue is unique within the controller that triggers it. For each segue we have a single destination controller that has its own type of configuration. This means that the segue ID unambiguously defines the configuration type that should be used for configuring the destination view controller.

On the other hand, just returning the configuration of a concrete type for each segue ID is not enough. If we did so, we would need to pass it somehow to a destination controller that has the type UIViewController, which has nothing to do with the configuration. At the same time, we cannot use the Configurable protocol as the type of an object directly because it has an associated type constraint. That is, we cannot cast the destination view controller to the Configurable type as follows:

(segue.destination as? Configurable)?.configure(with: data)

Instead, we need to use some proxy generic type that is constrained to being a Configurable.

Also, creating all the configuration objects for the controllers in a single method makes no sense, since it brings the same issue as the one with prepare(for:sender:). That is, in this case we have a concentration of code intended for configuring different objects in a single method. Instead, the better solution is to group the code for creating a particular configuration and the type of the controller being configured into a separate object. Consider the following example:

class Configurator<ConfigurableType: Configurable> {

let configurationProvider: () -> ConfigurableType.ConfigurationType

init(configuringCode: @escaping () -> ConfigurableType.ConfigurationType) {

self.configurationProvider = configuringCode

}

func performConfiguration(of object: ConfigurableType) {

let configuration = configurationProvider()

object.configure(with: configuration)

}

}

In the code above, a single Configurator<T> instance is responsible for configuring a controller of a particular type. The code that creates the configuration is injected into the configurator in the init() method during creation.

Following the reasoning given above, we should associate a segue ID with a particular configuration and type. Considering the approach with the Configurator<T>, the easiest way to do it is to create a mapping object where the key is a segue ID and the value is the corresponding Configurator<T> instance. We may also create those Configurator<T> objects in place, in the map definition. This will make the code clearer and more readable. The following example demonstrates such a map:

var segueIDToConfigurator: [String : Any] {

return [

"ToSomeViewControllerSegueID": Configurator<SomeViewController> {

return (value: 123, object: MyModelType())

},

"ToOtherViewControllerSegueID": Configurator<OtherViewController> {

return MyObjectType()

}

]

}

Let’s now try to use the configuration from the dictionary above in the prepare(for:sender:) method. Let’s take a look at the following example:

override func prepare(for segue: UIStoryboardSegue, sender: Any?) {

if let segueIdentifier = segue.identifier,

let configuring = segueIDToConfigurator[segueIdentifier] as? Configurator {

configuring.performConfiguration(of: segue.destination)

}

}

The problem is that the value type of the dictionary segueIDToConfigurator is Any. We cannot call any method on it directly. Instead, we need to cast it to the type that contains the performConfiguration(of:) method. On the other hand, the only type in our implementation that contains the performConfiguration(of:) method is the generic type Configurator<T>. And to use it, we should pass the concrete type of the destination view controller in place of the generic type placeholder. At this point, the problem is in the prepare(for:sender:) method: there, we don’t have the information about that view controller type. Let’s try to resolve the problem. We need Configurator<T> only to call the performConfiguration(of:) method. Instead of having the whole interface of the Configurator<T> type inside the prepare(for:sender:) method, we may use some intermediate interface that does not depend on a generic type and allows us to call performConfiguration(of:).

For this, let’s create a protocol Configuring and modify the Configurator<T> type to make it conform to it. The example below demonstrates the refined approach.

protocol Configuring {

func performConfiguration<SomeType>(of object: SomeType) throws

}

class Configurator<ConfigurableType: Configurable>: Configuring {

let configurationProvider: () -> ConfigurableType.ConfigurationType

init(configuringCode: @escaping () -> ConfigurableType.ConfigurationType) {

self.configurationProvider = configuringCode

}

func performConfiguration<SomeType>(of object: SomeType) throws {

if let configurableObject = object as? ConfigurableType {

let configuration = configurationProvider()

configurableObject.configure(with: configuration)

} else {

throw ConfigurationError()

}

}

}

Now, performConfiguration(of:) is a generic method. This allows us to call it without knowing the exact type of the object being configured. The method, however, now throws. This is because the type of its argument is widened so that an arbitrary type can be passed, but the method can still handle only the objects that conform to the Configurable protocol. If the passed object is not Configurable, there is nothing we can do with it, so in this case we throw an error.

We may now use the newly defined Configuring protocol to define the dictionary for segue-to-configurator mapping:

var segueIDToConfigurator: [String : Configuring] {

return [

"ToSomeViewControllerSegueID": Configurator<SomeViewController> {

return (value: 123, object: MyModelType())

},

"ToOtherViewControllerSegueID": Configurator<OtherViewController> {

return MyObjectType()

}

]

}

This allows us to use the Configuring objects inside the prepare(for:sender:) method as shown below:

override func prepare(for segue: UIStoryboardSegue, sender: Any?) {

if let segueIdentifier = segue.identifier,

let configuring = segueIDToConfigurator[segueIdentifier] {

do {

try configuring.performConfiguration(of: segue.destination)

} catch let configurationError {

fatalError("Cannot configure \(segue.destination). " +

"Error: \(configurationError)")

}

}

}

Refining the solution

The above prepare(for:sender:) implementation is the same for any controller that is going to use the described approach. There are several ways to avoid such code duplication, but keep in mind that each has its downsides.

The first and most obvious way is to use some base view controller across the project that implements the prepare(for:sender:) method and the segueIDToConfigurator property for holding configurations:

class BaseViewController: UIViewController {

var segueIDToConfigurator: [String: Configuring] {

return [String: Configuring]()

}

override func prepare(for segue: UIStoryboardSegue, sender: Any?) {

if let segueIdentifier = segue.identifier,

let configuring = segueIDToConfigurator[segueIdentifier] {

do {

try configuring.performConfiguration(of: segue.destination)

} catch let configurationError {

// throw an error or just write to log

// if you just want to silently ignore it

fatalError("Cannot configure \(segue.destination). " +

"Error: \(configurationError)")

}

}

}

}

class MyViewController: BaseViewController {

// Define needed configurators

override var segueIDToConfigurator: [String: Configuring]{

...

}

}

The advantage of the first way is that any controller that subclasses BaseViewController only needs to define the data required for the configuration, that is, override the segueIDToConfigurator property. However, it forces all the view controllers to subclass BaseViewController. This makes it impossible to use the system UIViewController subclasses like UITableViewController, etc.

The second way is to use a special protocol that defines the interface of the controller that can configure other controllers. Consider the following example:

protocol ViewControllerConfiguring {

var segueIDToConfigurator: [String: Configuring] { get }

}

extension ViewControllerConfiguring {

func configure(segue: UIStoryboardSegue) {

if let segueIdentifier = segue.identifier,

let configuring = segueIDToConfigurator[segueIdentifier] {

do {

try configuring.performConfiguration(of: segue.destination)

} catch let configurationError {

fatalError("Cannot configure \(segue.destination). " +

"Error: \(configurationError)")

}

}

}

}

class MyViewController: UIViewController, ViewControllerConfiguring {

// Define needed configurators

var segueIDToConfigurator = ...

// Each view controller still has to implement this method

override func prepare(for segue: UIStoryboardSegue, sender: Any?) {

configure(segue: segue)

}

}

This way is more flexible in comparison to the first one. The protocol can be implemented by any object that is going to configure the segue destination controller. It means that not only UIViewController subclasses can use it. Moreover, it doesn’t limit us to using only BaseViewController as a superclass. On the other hand, each view controller still needs to override prepare(for:sender:) and call the configure(segue:) method in its implementation.

Summary

In this article, I described an approach to configuring destination view controllers with clean and straightforward code when using storyboard segues. The approach is possible thanks to useful Swift concepts, such as generics and protocols with associated types. The code is also safe, as it uses static typing wherever possible and handles errors. Meanwhile, the dynamic types are concentrated in a single place and the possible errors are handled only there. This approach allows us to avoid unnecessary boilerplate code in the prepare(for:sender:) methods. On the other hand, it makes configuring particular view controllers clearer and more robust by using a specific Configurable protocol.

Main challenges while working in multicultural half-remote teams

We know that adjusting to a new working environment may be tough. It’s even more challenging when you have to collaborate with people located in different offices around the world. We both experienced such a demanding situation and want to describe a few problems and suggest some ways to tackle them. We hope to help fellow professionals who are at the beginning of this ambitious career path. In this article, we want to elaborate on working in multicultural, half-remote teams and the main challenges related to this. To dispel doubts, by “half-remote team” we mean a situation in which part of the group works together on-site while the other part or parts of the crew work in other places, individually or in larger groups. We gathered our experiences while working in this kind of team in Europe and the USA.

It’s nothing new that some seemingly harmless things can nearly destroy relations in a team and create internal tension. One of us worked in a team where six people worked together in one office and the rest of the team (three people) in a second office. We didn’t know why, but something wrong started to happen: these two groups of people started calling themselves “we” and “them”. One team, divided into two mutually opposing groups of people.

Those groups started to defy each other, gossip about each other, and disturb each other at work. What's more, there was no one who tried to fix it; conflicts were growing, and teamwork became impossible. The project was closed. One year later, we started to observe the same thing in another project of ours. In that project, the situation was different. There were 3 groups of people: a larger group of 4 people and 2 smaller ones of 2 people each. We delved into the state of things and discovered the reasons for this situation.

Information exchange

One of the reasons was the information exchange. The biggest group was located together, and they often discussed things related to work. Often the discussion turned into planning and decision-making and, as you can guess, the rest of the team did not have the opportunity to take part. The large group made decisions without consulting the others, and it really annoyed the rest…

What was done wrong, and how can you avoid it in your project? The team should be a team, even if its members don’t work in the same location. Everyone should take an active part in making decisions. Although it is difficult to achieve, all team members must be aware of this, and they must treat the rest of the team as if they were sitting next to them.

Firstly, if an idea is developed during a discussion, the group must capture it and present it to the rest of the team for further analysis. You should avoid situations where an idea is known only to a local group of people. This reduces knowledge about the project and increases the anger of the other developers: they do not feel part of the team, and they do not feel that they have an impact on its development. What's more, if the idea does not please the rest, they begin to treat its authors with hostility, which creates conflicts and leads to a situation where people start to say “we” and “them”. Part of the team should not make important decisions on its own; such decisions should be taken by the whole team. If a smaller group has something to talk about, everyone should know about it and have a chance to join (even remotely!).

Secondly, if a group notices that it is discussing things which other people may be interested in, it should postpone the local discussion and create a remote room where the discussion can continue. Anyone can then join it as if they were sitting next to the others.

Thirdly, if it was not possible to include others in the conversation, a summary of the conversation should be written down and made available to all team members.

Team integration

The second reason we found was the integration of the parts of the team. Naturally, people sitting together knew each other better, and so a division into groups formed within the team. Sadly, this cannot be avoided… but we can reduce the impact of this factor.

Firstly, if possible, we should ensure that the parts of the team integrate. They have to meet at least once, and preferably at regular meetings not related to work, so-called integration trips.

Secondly, mutual trust should be built within the team. The team should talk about difficult situations together as a whole, not in local groups over coffee. And if a local conversation has taken place, the problem should be presented to the whole team. Everyone should be able to speak honestly and feel comfortable in the team. It is very important!

Language and insufficient communication

Another obstacle is a difference in culture or language. If there are people who speak different languages in the team, they will usually use English, which will not be a native language for part of the team… Team members may have different levels of English, and the less skilled ones may not understand intricate phrases.

It is very important to make sure everyone understands a given statement. If you know that some people in your team are not so fluent in English, you can ask them and make sure they have understood everything. Trust should be built inside the team: everyone should feel that they can ask for a statement to be explained in the simplest words without being taunted or facing any consequences. We have seen this problem many times, especially in multicultural teams. A lack of understanding leads to misunderstandings and can lead to the collapse of the project. Each team member should learn and improve their skills, and the team should support colleagues with weaker language skills by politely correcting them and telling them when they use some language form incorrectly. We recommend doing it in private unless trust in the team is strong enough that it can be done in a group.

Communication itself can also lead to misunderstandings. At the beginning of our careers, our language skills were not the best, and our statements were very simple and crude. As a result, our messages were sometimes perceived as aggressive… We did not realize it until we started to notice tension between us and some of the team members. This is very difficult to remedy; after all, we do not know what others think. Therefore, a small piece of advice from us: talk to each other seriously, try to build a culture of open feedback in the team, and address even uncomfortable topics. Even if you have a language problem, it is sometimes better to try to describe something in 100 simple sentences than not to speak at all...

Time difference

Let’s focus on one more difficulty that may cause a lot of trouble in half-remote teams. When a team is distributed over a large part of the world, the time difference between team members’ locations can become an issue that is very hard to overcome. We have worked in a team whose members were located in the USA (on both the East and West Coasts), Australia and Poland. In our experience, it is nearly impossible to gather all team members together because of the working hours in those locations. We have observed some common issues that such a situation may cause.

Team members working in different time zones have limited opportunities for teamwork. There is often not enough time for team activities like technical or non-work-related discussions over a cup of coffee, which build team spirit and good relations between members. It is impossible to integrate distributed teams without regular meetings in one place. We have seen how such small things lead to the division into “we” and “them” mentioned before. It is also a blocker when it comes to applying good practices in the project, such as pair programming and knowledge sharing.

Distributed teams are more difficult to manage, and some Agile methodologies are not applicable at all, as they often require the participation of all team members. In the case of our team, Scrum did not work at all, because we could not organize successful planning sessions, sprint reviews, retrospectives and demos, in which everyone’s input matters. It was common that, after planning, team members did not know what they were supposed to do next and first needed to discuss something with absent teammates.

If we take a look at distributed team performance, it will usually seem lower than that of local teams. That is mainly because of the inevitable delays when a team member needs assistance from another. Imagine that you start working and after an hour you encounter a problem that requires your teammate’s help, but they will not wake up for another 7 hours. You have to postpone the task you were working on and focus on another one, which usually slows your work down. Of course, this is the sunny-day scenario, because there might be more serious issues where you cannot do anything else in the meantime (e.g. you have broken all environments including production, backup was a “nice to have” at planning, and your teammate from Australia is the only one who can restore it). It also takes more time to exchange information, process code reviews and share knowledge about a project if we cannot reach other team members immediately when they are needed.

On the other hand, distributed teams have some advantages. Many projects or professions require 24/7 client support, and in that case a distributed team makes such coverage much easier. It can save the team from on-call duty and other inconveniences.

We have learned that there is no cure for all the problems that distributed teams struggle with, but the impact of some of them can be reduced. Companies that are aware of how time differences affect team performance often offer the possibility to work remotely from home with fully flexible hours. In some cases it works and things get done faster, but it does not solve every day-to-day problem, because everyone wants to have a private life as well, to meet friends in the evening or grab a beer and watch a TV series rather than work late at night. Moreover, the team integration and cooperation issues could be solved by frequent travel, but it is expensive and the majority of people cannot leave home for a longer period of time.

Summary

To sum up, multicultural half-remote teams are really challenging to manage. Distributed teams struggle with information exchange, teamwork, communication, and integration, and these problems may be caused by cultural differences, remote communication and the time difference between team members. When those four things do not work, there is just a bunch of individuals who cannot act as a team. Despite the tips above, it is hard to avoid a lack of partnership among team members, which may lead to divisions, misunderstandings and the collapse of the team.

And while the struggles described above are real, we can't forget why we do it. Building a distributed team allows a company to acquire talent that is often not available on the local market. By creating an international environment, the same company can gain a wider perspective and better understand different markets. Diversification of the workforce can be a lifesaver in an emergency that would endanger a company whose entire team works in one location. We at Grape Up share different experiences, and thanks to knowledge exchange, our team members are prepared to work in such a demanding environment.

Server Side Swift with Vapor - end to end application with Heroku cloud

In this tutorial, I want to show you the whole production process of back-end and front-end Swift applications, and how to push the back-end side to the Heroku cloud.

First, please make sure that you have installed at least Xcode 10. Let’s start with the backend side:

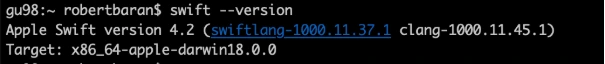

Open a terminal and check that your Swift version is 4.2.

swift --version

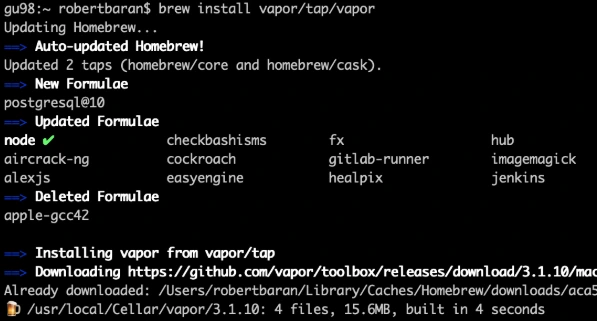

Time to install the Vapor CLI; for this we should use Homebrew.

brew install vapor/tap/vapor

The Vapor CLI is now installed.

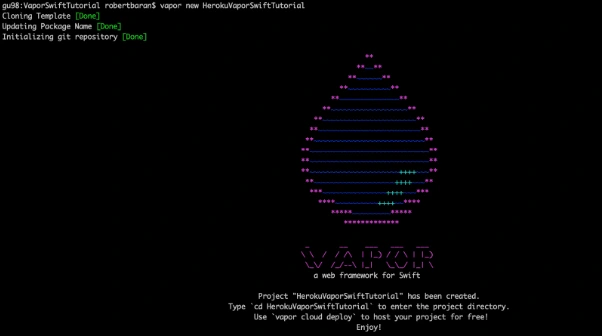

Let’s create a new project. The Vapor CLI has simple commands for lots of things. You will learn most of them later in this tutorial.

vapor new {your-project-name}

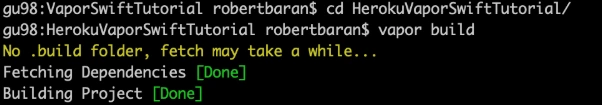

Go into the project directory and build it using the "vapor build" command:

vapor build

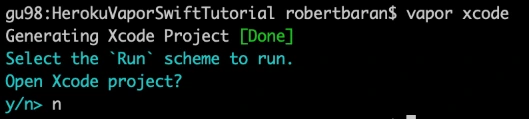

Let’s create an Xcode project using the "vapor xcode" command; it will be needed later.

Then verify that your backend works fine locally:

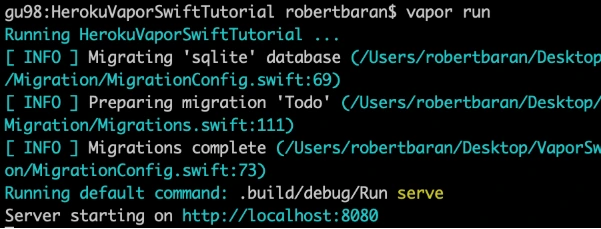

vapor run

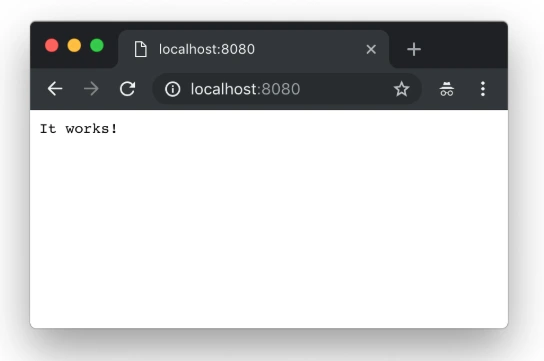

As we can see, the server is starting on our machine. To be sure that it works fine, go to the web browser and check localhost:8080.

Yippee! Here is your first application running with Vapor. Let's try to deploy it to the cloud, but first, we need to install the Heroku CLI.

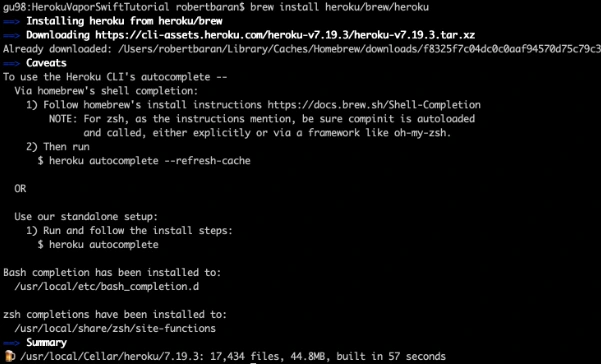

brew install heroku/brew/heroku

Now we can deploy the first application to the cloud, but first you need to create a free Heroku account. I will skip this process in the tutorial. Once the account is created, go back to the terminal, make sure the Heroku CLI is installed, and try to deploy.

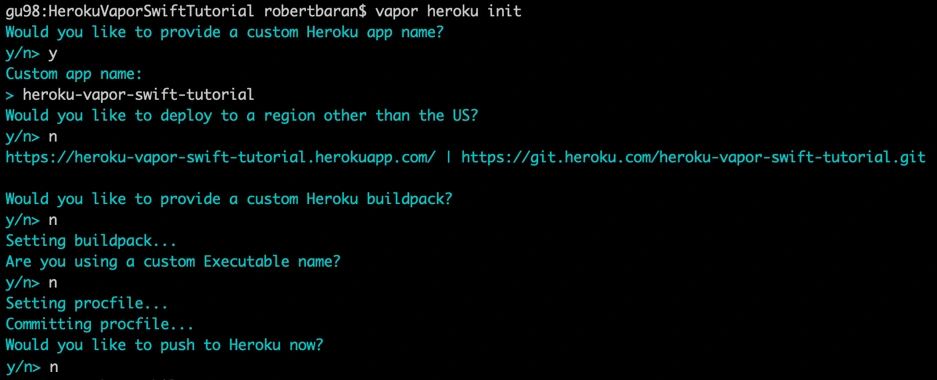

vapor heroku init

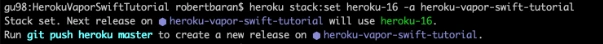

Before we push to Heroku, we have to change the Heroku stack from 18 to 16, as 18 is in the beta stage and doesn’t support Vapor yet.

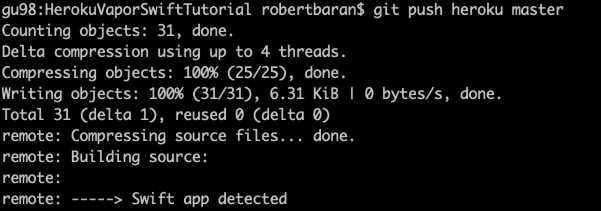

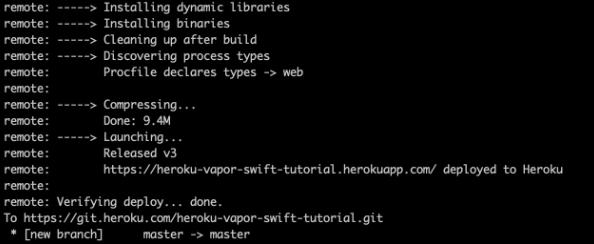

Let’s deploy: git push heroku master.

The app is now deployed. You can log in to your Heroku account via a web browser and see whether it is running and works!

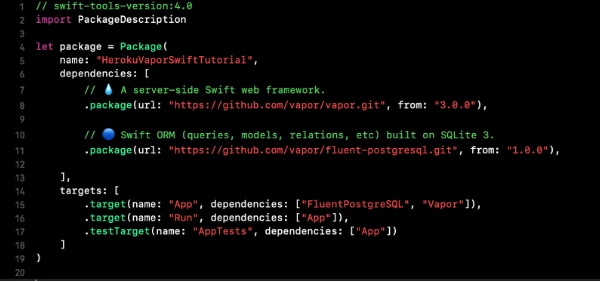

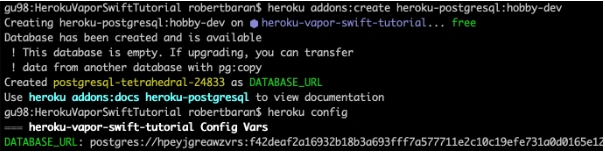

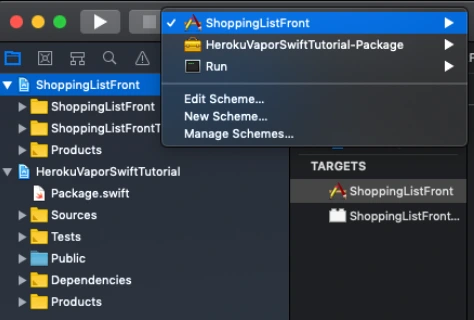

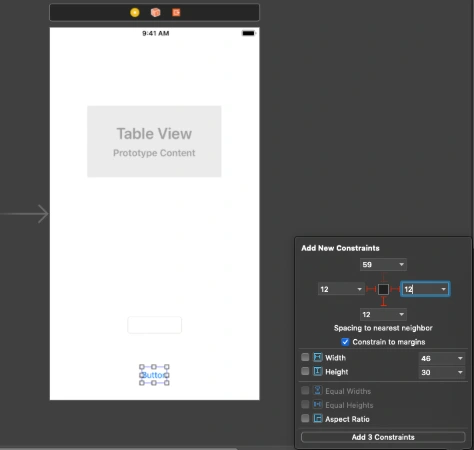

Now we need to configure the project. Go to Xcode or another source editor and add the PostgreSQL package. In Package.swift, we need to add FluentPostgreSQL as a dependency.
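As a rough sketch, the manifest might look like the following once the dependency is added. It assumes the layout of the Vapor 3 API template (App, Run and AppTests targets); the package name ShoppingList and the version requirements are assumptions for illustration, so adjust them to your project.

```swift
// swift-tools-version:4.0
import PackageDescription

let package = Package(
    // Hypothetical package name; use the one generated by "vapor new".
    name: "ShoppingList",
    dependencies: [
        // The Vapor web framework itself.
        .package(url: "https://github.com/vapor/vapor.git", from: "3.0.0"),
        // Fluent driver for PostgreSQL (assumed version requirement).
        .package(url: "https://github.com/vapor/fluent-postgresql.git", from: "1.0.0")
    ],
    targets: [
        // The App target must list FluentPostgreSQL to be able to import it.
        .target(name: "App", dependencies: ["FluentPostgreSQL", "Vapor"]),
        .target(name: "Run", dependencies: ["App"]),
        .testTarget(name: "AppTests", dependencies: ["App"])
    ]
)
```

If the template still lists a SQLite package in the dependencies and in the App target, it would be replaced by the PostgreSQL entries shown here.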

Run vapor clean, vapor build and then vapor xcode.

It's time to configure the database and clean up the project. First of all, go to the configure.swift file and remove FluentSQLite along with the functions related to it. Then we need to configure the PostgreSQL database in our project. A few things need to be done: first import FluentPostgreSQL, then register the provider in the services.

try services.register(FluentPostgreSQLProvider())

Then we need to create a database config and register the database.

    // Configure a database
    var databases = DatabasesConfig()
    let databaseConfig: PostgreSQLDatabaseConfig

    if let url = Environment.get("DATABASE_URL") {
        // A full connection URL is available (Heroku Postgres sets DATABASE_URL)
        guard let urlConfig = PostgreSQLDatabaseConfig(url: url) else {
            fatalError("Failed to create PostgresConfig")
        }
        print(urlConfig)
        databaseConfig = urlConfig
    } else {
        // No URL: build the config from separate variables with local defaults
        let databaseName: String
        let databasePort: Int
        if env == .testing {
            // Tests use their own database and, by default, port 5433
            databaseName = "vapor-test"
            if let testPort = Environment.get("DATABASE_PORT") {
                databasePort = Int(testPort) ?? 5433
            } else {
                databasePort = 5433
            }
        } else {
            databaseName = Environment.get("DATABASE_DB") ?? "vapor"
            databasePort = 5432
        }
        let hostname = Environment.get("DATABASE_HOSTNAME") ?? "localhost"
        let username = Environment.get("DATABASE_USER") ?? "robertbaran"
        databaseConfig = PostgreSQLDatabaseConfig(hostname: hostname, port: databasePort, username: username, database: databaseName, password: nil)
    }

    // Register the database under the .psql identifier
    let database = PostgreSQLDatabase(config: databaseConfig)
    databases.add(database: database, as: .psql)
    services.register(databases)
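To see which values the configuration above would pick on a given machine, the fallback rules can be mirrored in plain Swift without Vapor. This is only a sketch: DatabaseSettings and resolveSettings are hypothetical names introduced for illustration, a dictionary stands in for Environment.get, and the DATABASE_URL branch is left out.

```swift
import Foundation

// Hypothetical value type, not part of Vapor or Fluent.
struct DatabaseSettings: Equatable {
    let hostname: String
    let port: Int
    let username: String
    let database: String
}

// Mirrors the non-URL branch of the configuration: test runs use the
// "vapor-test" database on port 5433 (or DATABASE_PORT if it is a valid
// integer); other runs use DATABASE_DB (default "vapor") on port 5432.
func resolveSettings(from env: [String: String], isTesting: Bool) -> DatabaseSettings {
    let database: String
    let port: Int
    if isTesting {
        database = "vapor-test"
        port = env["DATABASE_PORT"].flatMap { Int($0) } ?? 5433
    } else {
        database = env["DATABASE_DB"] ?? "vapor"
        port = 5432
    }
    return DatabaseSettings(
        hostname: env["DATABASE_HOSTNAME"] ?? "localhost",
        port: port,
        username: env["DATABASE_USER"] ?? "robertbaran",
        database: database
    )
}

// Resolve against the real process environment and print the result.
let local = resolveSettings(from: ProcessInfo.processInfo.environment, isTesting: false)
print(local)
```

Keeping the rules in a pure function like this makes them easy to check in isolation before wiring them into configure.swift.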

Once the database is registered, we need to create our model and controller. The example project contains a Todo model and controller; you can remove them, as we will create new ones. The idea for the app is a shopping list, so we need to think about what that requires. With the database already registered, we have to create a model that contains our productName and id. Create a ShoppingList.swift file, which will hold our PostgreSQL model.

import FluentPostgreSQL

import Vapor

final class ShoppingList: PostgreSQLModel {

var id: Int?

var productName: String