How to develop AI-driven personal assistants tailored to automotive needs. Part 2

Making the chatbot more suitable

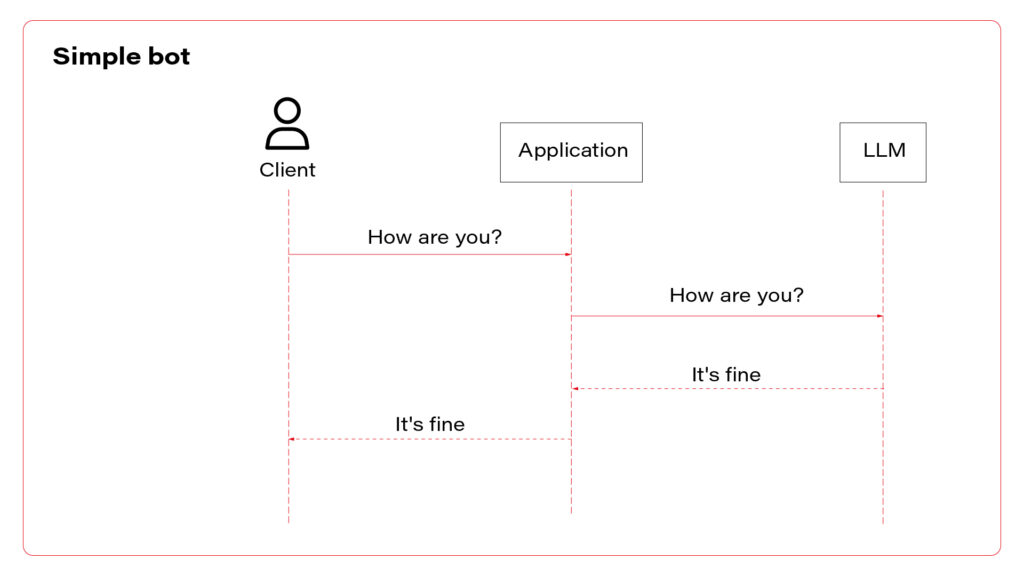

Recently, we built a simple chatbot using an Azure-hosted GPT-4 Turbo instance. It's a great starting point, but it needs much more to meet real business requirements. Let's try talking to our bot.

Prompt:

Hi, I’d like to buy a new car. Can you assist me with a choice? I’m looking for a family car with a hybrid powertrain and all-wheel drive. I’m a fan of modern design and fancy features. My budget is around 70k euro.

Completion:

Absolutely, I’d be happy to help you find a suitable family car that meets your criteria! Given your preferences (…), there are several options across different brands that could be a great fit. (…):

1. Audi Q5 TFSI e

Powertrain: (…)

Features: (…)

Price Range: (…)

2. BMW X5 xDrive45e

Powertrain: (…)

Features: (…)

Price Range: (…)

3. Volvo XC90 Recharge

Powertrain: (…)

Features: (…)

Price Range: (…)

4. Mercedes-Benz GLE 450 4MATIC

Powertrain: (…)

Features: (…)

Price Range: (…)

5. Lexus RX 450h

Powertrain: (…)

Features: (…)

Price Range: (…)

When considering these options, it’s important to test drive the ones you’re most interested in to get a feel for their driving dynamics, comfort, and the user-friendliness of their tech features. Additionally, (…)

As you can see, the response is quite long, versatile, and absolutely useless.

If you're working for one of those brands, you don't want the chatbot to recommend your competitors. Also, the model's knowledge is a bit outdated (it ends at its training data cutoff), so the answer is unreliable. Besides that, the answer is too long to display on a mobile device, or for anyone to read. And you need to be aware that users may misuse the chatbot to write a master's thesis, write a fairy tale, or plan a trip to Rome.

Our job is to limit the bot's capabilities, and the way to achieve that is prompt engineering. Let's try adding some system messages before the user prompt.

```python
messages = [
    {"role": "system", "content": "You are a car seller working for X"},
    {"role": "system", "content": "X offers following vehicles (…)"},
    {"role": "system", "content": "Never recommend X competitors"},
    {"role": "system", "content": "Avoid topics not related to X, e.g. if the user asks about the weather, kindly redirect them to a weather service"},
    {"role": "system", "content": "Be strict and accurate, avoid overly long messages"},
    {"role": "user", "content": "Hi, I’d like to buy a new car. Can you assist me with a choice? I’m looking for a family car with a hybrid powertrain and all-wheel drive. I’m a fan of modern design and fancy features. My budget is around 70k euro."},
]
```
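For reference, here is one way such a message list could reach the Azure-hosted model. The sketch below uses only Python's standard library to build (but not send) a Chat Completions request for the Azure OpenAI REST endpoint, `{endpoint}/openai/deployments/{deployment}/chat/completions?api-version=...`, authenticated with an `api-key` header. The resource URL, deployment name, and key are placeholders, and the `max_tokens` cap of 300 is an assumption added as a hard limit that complements the system message asking for short answers.

```python
import json
import urllib.request


def build_chat_request(
    endpoint: str,
    deployment: str,
    api_key: str,
    messages: list[dict],
    api_version: str = "2023-05-15",
    max_tokens: int = 300,
) -> urllib.request.Request:
    """Build (without sending) an Azure OpenAI Chat Completions request."""
    url = (
        f"{endpoint.rstrip('/')}/openai/deployments/{deployment}"
        f"/chat/completions?api-version={api_version}"
    )
    # max_tokens caps the completion length: a hard limit on top of the "be brief" system prompt.
    body = json.dumps({"messages": messages, "max_tokens": max_tokens}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json", "api-key": api_key},
    )


# Placeholder values: replace with your own resource, deployment, and key.
request = build_chat_request(
    endpoint="https://your-resource.openai.azure.com",
    deployment="your-gpt-4-turbo-deployment",
    api_key="your-api-key",
    messages=[
        {"role": "system", "content": "You are a car seller working for X"},
        {"role": "user", "content": "Which car should I buy?"},
    ],
)
```

Sending it is then a matter of `urllib.request.urlopen(request)` and reading `choices[0].message.content` from the JSON response; in practice, the official `openai` SDK or LangChain would do this plumbing for you.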

Now the chatbot should behave much better, but it can still be tricked. Advanced prompt engineering, together with LLM hacking (such as prompt injection) and ways to prevent it, is out of the scope of this article, but I strongly recommend exploring this topic before exposing your chatbot to real customers. For our purposes, you need to be aware that providing an entire offer in a prompt (“X offers following vehicles (…)”) may far exceed the LLM context window, which brings us to the next point.

Retrieval augmented generation

You often want to provide your chatbot with more information than it can handle. It can be a brand's offer, a user manual, a service manual, all of them put together, and much more. GPT-4 Turbo can work on up to 128 000 tokens (prompt and completion together), which, using OpenAI's rule of thumb of about 0.75 English words per token, is roughly 96 000 English words. However, the model's accuracy drops at around half of that length [source], and processing a longer context takes more time and costs more money. Google has just announced a model with a 1M-token context window, but generally speaking, putting too much into the context is not recommended so far. All in all, you probably don't want to put everything you have into the context.
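To get a feel for that budget before pasting a whole offer into the prompt, you can estimate the prompt size. The sketch below is an approximation only: it assumes OpenAI's rule of thumb of about four characters of English text per token, ignores the few extra tokens each message costs, and uses a hypothetical 4 096-token reserve for the completion. For exact numbers, count tokens with a real tokenizer such as OpenAI's `tiktoken` library.

```python
import math

# Rough heuristic: ~4 characters of English text per token.
# Only an estimate; use a real tokenizer (e.g. tiktoken) for exact counts.
CHARS_PER_TOKEN = 4


def estimate_tokens(messages: list[dict[str, str]]) -> int:
    """Estimate the prompt size of a chat message list (content only, no per-message overhead)."""
    total_chars = sum(len(message["content"]) for message in messages)
    return math.ceil(total_chars / CHARS_PER_TOKEN)


def fits_in_context(
    messages: list[dict[str, str]],
    context_window: int = 128_000,         # GPT-4 Turbo: prompt + completion together
    reserved_for_completion: int = 4_096,  # hypothetical budget kept free for the answer
) -> bool:
    """Return True if the estimated prompt still leaves room for the completion."""
    return estimate_tokens(messages) + reserved_for_completion <= context_window
```

For example, a 600 000-character product catalogue estimates to about 150 000 tokens, so `fits_in_context` returns `False` before the user has even said a word.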

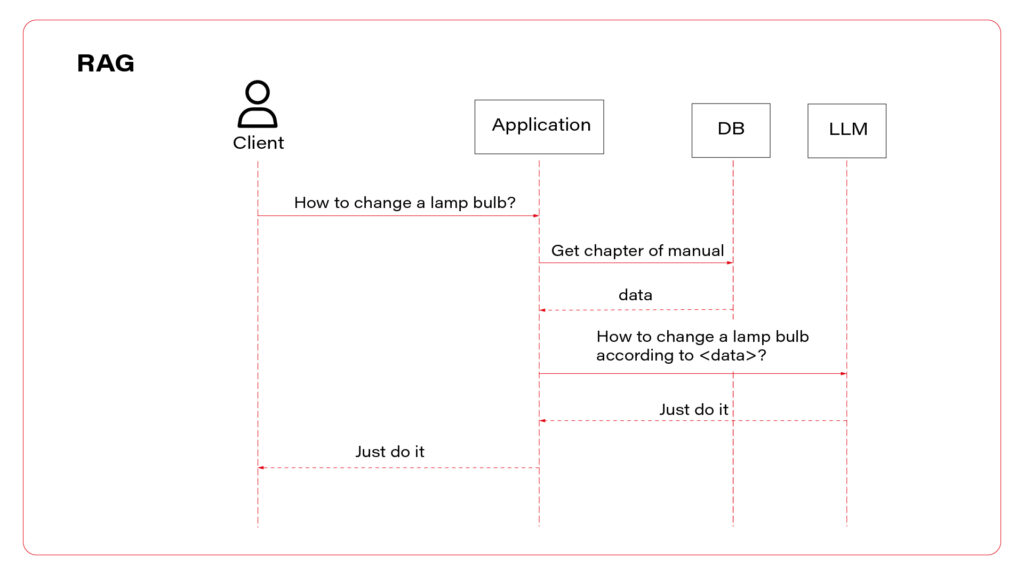

Retrieval augmented generation (RAG) is a technique for collecting relevant input for the LLM, namely the information required to answer the user's question.

Let’s say you have two documents in your company knowledge base. The first one contains the company offer (all vehicles for sale), and the second one contains maintenance manuals. The user approaches your chatbot and asks: “Which car should I buy?”. Of course, the bot needs to identify the user's needs, but it also needs some data to work on. The answer is probably in the first document, but how can we know that?

In more detail, RAG is a process of comparing the question with the available data sources to find the most relevant one or ones. The most common technique is vector search. Your domain knowledge is converted to vectors (this step is called embedding) and stored in a database. Each vector represents a piece of a document: one chapter, one page, or one paragraph, depending on your implementation. When the user asks a question, it is also converted to a vector. Then you find the document fragment represented by the most similar vector; it should contain the answer to the question, so you add it to the context. The last part is the prompt, e.g. “Based on this piece of knowledge, answer the question”.
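The retrieval flow described above can be sketched in plain Python. This is a toy illustration, not a production setup: the `embed` function is a hypothetical stand-in for a real embedding model (it only counts words, so it captures shared vocabulary rather than meaning), the document contents are made up, and an in-memory list replaces a vector database.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: a bag-of-words count vector.

    A real model (e.g. instructor-xl) returns a dense vector that captures meaning;
    this toy version only captures shared words.
    """
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity of two sparse vectors; 0.0 if either vector is empty."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(count * count for count in a.values()))
    norm_b = math.sqrt(sum(count * count for count in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Knowledge base: the offer and the maintenance manual (hypothetical contents).
documents = {
    "offer": "Family SUV with hybrid powertrain and all-wheel drive.\n\n"
             "Compact city car with electric drive.",
    "maintenance": "Replace the cabin air filter every 15 000 km.\n\n"
                   "Check the tire pressure monthly.",
}

# Ingestion: split each document into paragraph-sized chunks and embed every chunk.
index = [
    (name, chunk, embed(chunk))
    for name, text in documents.items()
    for chunk in text.split("\n\n")
]


def search(question: str, top_k: int = 1) -> list[tuple[float, str, str]]:
    """Embed the question and return the top_k chunks as (score, document name, chunk)."""
    query_vector = embed(question)
    scored = [(cosine_similarity(query_vector, vector), name, chunk) for name, chunk, vector in index]
    return sorted(scored, reverse=True)[:top_k]


question = "I need a hybrid family car with all-wheel drive"
score, source, chunk = search(question)[0]

# Final step: put the retrieved chunk into the prompt as context.
prompt = f"Based on this piece of knowledge: {chunk}\nAnswer the question: {question}"
```

Here the question shares the most words with the first paragraph of the offer, so that chunk lands in the prompt; with a real embedding model, a question phrased in completely different words could still match it.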

Of course, the matter is much more complicated. You need to consider your embedding model and maybe improve it with fine-tuning. You need to compare search methods (vector, semantic, keywords, hybrid) and adapt them with parameters. You need to select the best-fitting database, polish your prompt, convert complex documents to text (which may be challenging, especially with PDFs), and maybe process the output to link to sources or extract images.
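As an illustration of the hybrid option: it merges the rankings produced by different search methods. One common merging method, used for example by Azure AI Search for hybrid queries, is Reciprocal Rank Fusion (RRF), where each document scores the sum of 1 / (k + rank) over all rankings it appears in. The sketch below uses k = 60, a commonly used default; the document IDs and both result lists are made up for illustration.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Merge several ranked lists of document IDs into one list using RRF.

    Each document gets sum(1 / (k + rank)) over the rankings it appears in,
    with rank starting at 1. A higher fused score means more relevant.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical results of two search methods for the same question.
keyword_results = ["manual_p12", "offer_suv", "manual_p40"]
vector_results = ["offer_suv", "offer_city", "manual_p12"]

fused = reciprocal_rank_fusion([keyword_results, vector_results])
```

A document ranked well by both methods (`offer_suv`) ends up first even though neither method alone put it at the top of both lists. RRF only needs ranks, not scores, which is handy because keyword and vector scores live on different scales.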

It's challenging but possible. See the result in one of our case studies: Voice-Driven Car Manual.

The good news is that you're not the first one working on this issue, and there are some out-of-the-box solutions available.

The no-code option is Azure AI Search, together with Azure Cognitive Services and Azure Bot Service. The official manual covers all steps: prerequisites, data ingestion, and web application deployment. It works well, including OCR, search parametrization, and exposing links to source documents in chat responses. If you want a more flexible solution, a low-code version is available here.

I understand if you want to keep all the pieces of the application in your hands and prefer to build it from scratch. At this point, we need to return to the choice of programming language. The LangChain library, which was originally available for Python only, may be your best friend for this implementation.

See the example below.

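```python
from langchain.chains.question_answering import load_qa_chain
from langchain.chains.retrieval_qa.base import RetrievalQA
from langchain.chat_models import AzureChatOpenAI
from langchain.embeddings import HuggingFaceInstructEmbeddings
from langchain.prompts import PromptTemplate
from langchain.vectorstores import Qdrant
from qdrant_client import QdrantClient

# Connection to the Qdrant vector database that holds the embedded documents
client = QdrantClient(url="…", api_key="…")

# instructor-xl is an INSTRUCTOR model, so it uses the Instruct embeddings wrapper
embeddings = HuggingFaceInstructEmbeddings(model_name="hkunlp/instructor-xl")
db = Qdrant(client=client, collection_name="…", embeddings=embeddings)

# Step 2: "stuff" the retrieved documents into the prompt and ask GPT
prompt = PromptTemplate(
    template="Using the context {context} answer the question: {question}",
    input_variables=["context", "question"],
)
second_step = load_qa_chain(
    AzureChatOpenAI(
        deployment_name="…",
        openai_api_key="…",
        openai_api_base="…",
        openai_api_version="2023-05-15",
    ),
    chain_type="stuff",
    prompt=prompt,
)

# Step 1: retrieve only documents with a similarity score of at least 0.5
first_step = RetrievalQA(
    combine_documents_chain=second_step,
    retriever=db.as_retriever(
        search_type="similarity_score_threshold",
        search_kwargs={"score_threshold": 0.5},
    ),
)

answer = first_step.run("Which car should I buy?")
```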

This is the entire search application. It creates and executes a “chain” of operations: the first step looks for data in the Qdrant database, using a model called instructor-xl for embedding. The second step puts the output of the first step as a “context” into the GPT prompt. As you can see, the application is based on the LangChain library. There is a Java port of it, or you can execute each step manually in any language you want. However, LangChain in Python is the most convenient way to go and a significant advantage of choosing this language at all.

With this knowledge, you can build a chatbot and feed it with company knowledge. You can aim the application at end users (car owners), internal employees, or potential customers. But an LLM can “do” more.
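If you do execute the steps manually, the core of the “stuff” approach is small: keep the retrieved chunks whose similarity score reaches the threshold (0.5 in the LangChain example) and paste them into the prompt. The sketch below assumes the retriever returns `(score, text)` pairs from whatever vector database you use; the function name and the prompt wording are hypothetical, and the resulting string would then be sent to the chat model.

```python
from typing import Optional


def build_stuffed_prompt(
    question: str,
    results: list[tuple[float, str]],
    score_threshold: float = 0.5,
) -> Optional[str]:
    """Filter retrieved chunks by score and "stuff" the survivors into one prompt.

    results are (similarity score, chunk text) pairs from the retrieval step.
    Returns None when no chunk reaches the threshold.
    """
    # Best matches first, then drop everything below the threshold.
    relevant = [text for score, text in sorted(results, reverse=True) if score >= score_threshold]
    if not relevant:
        return None
    context = "\n\n".join(relevant)
    return f"Using the context:\n{context}\n\nanswer the question: {question}"
```

Returning `None` when nothing passes the threshold lets the application reply that it has no relevant information instead of letting the model improvise; that is one reasonable policy among several.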

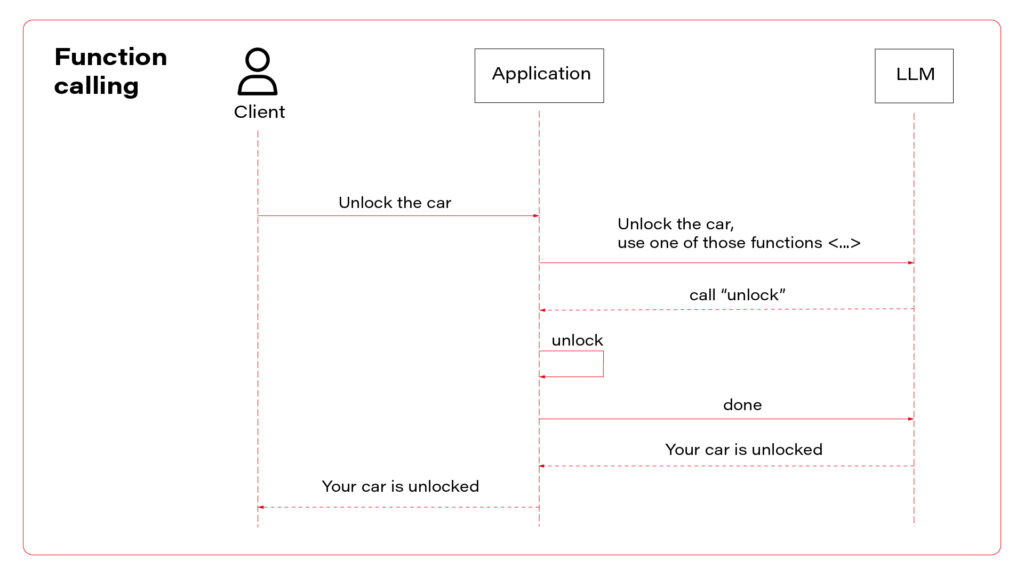

Function calling

To “do” is the keyword. In this section, we’ll teach the LLM to do something for us, not only to provide information or tell jokes. An operational chatbot can fetch more data when needed and decide which data the conversation requires, but it can also execute real operations. Most modern vehicles come with mobile applications that you can use to read data (locate the car, check the mileage, read warnings) or to execute operations (open the doors, turn on the air conditioning, or start the charging process). Let’s do the same with the chatbot.

Function calling is a built-in feature of GPT models. The API request has a field for tools (functions), and the model can respond with a function call in JSON format. You can try to achieve the same with any other LLM using a prompt like this one:

```text
In this environment, you have access to a set of tools you can use to answer the user's question.

You may call them like this:

<function_calls>
  <invoke>
    <tool_name>$TOOL_NAME</tool_name>
    <parameters>
      <$PARAMETER_NAME>$PARAMETER_VALUE</$PARAMETER_NAME>
    </parameters>
  </invoke>
</function_calls>

Here are the tools available:

<tools>
  <tool_description>
    <tool_name>unlock</tool_name>
    <description>
      Unlocks the car.
    </description>
    <parameters>
      <parameter>
        <name>vin</name>
        <type>string</type>
        <description>Car identifier</description>
      </parameter>
    </parameters>
  </tool_description>
</tools>

This is a prompt from the user: ….
```

Unfortunately, LLMs often don’t like to follow a required structure of completions, so you might face some errors when parsing responses.
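A defensive parser helps here. The sketch below, using only Python's standard library, extracts tool calls written in the XML-like format from the prompt above and raises `ValueError` when the model produces malformed markup, so your application can, for example, retry the request or ask the model to fix its output. The function name and the error policy are hypothetical choices, not part of any LLM API.

```python
import re
import xml.etree.ElementTree as ET


def parse_function_calls(completion: str) -> list[tuple[str, dict[str, str]]]:
    """Extract (tool_name, parameters) pairs from the first <function_calls> block.

    Returns an empty list if the completion contains no <function_calls> block
    (a plain answer). Raises ValueError if the block is not well-formed XML
    or an <invoke> lacks a tool name.
    """
    match = re.search(r"<function_calls>.*?</function_calls>", completion, re.DOTALL)
    if match is None:
        return []
    try:
        root = ET.fromstring(match.group(0))
    except ET.ParseError as error:
        raise ValueError(f"Malformed function call: {error}") from error

    calls = []
    for invoke in root.findall("invoke"):
        tool_name = (invoke.findtext("tool_name") or "").strip()
        if not tool_name:
            raise ValueError("Function call without a tool name")
        parameters_element = invoke.find("parameters")
        parameters = {}
        if parameters_element is not None:
            # Each child tag name is a parameter name, its text is the value.
            parameters = {child.tag: (child.text or "").strip() for child in parameters_element}
        calls.append((tool_name, parameters))
    return calls
```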

With GPT models, the official documentation recommends verifying the response format, but I’ve never encountered any issue with this functionality.

Let’s see a sample request with function definitions.
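Validating the arguments is cheap insurance either way: the OpenAI API reference notes that the model may generate invalid JSON or hallucinate parameters. The sketch below checks a tool call's `arguments` string against the simple JSON Schema subset used in this article (an object with typed `properties` and a `required` list); the helper names are hypothetical, and for full JSON Schema support you would use a dedicated validator library instead.

```python
import json

# JSON Schema type names mapped to the Python types produced by json.loads.
JSON_TYPES = {
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
    "object": dict,
    "array": list,
}

# Parameters of the hypothetical "unlock" tool, as in the request example.
UNLOCK_PARAMETERS = {
    "type": "object",
    "properties": {"vin": {"type": "string", "description": "Car identifier"}},
    "required": ["vin"],
}


def validate_arguments(raw_arguments: str, parameters_schema: dict) -> dict:
    """Parse a tool call's JSON arguments and check them against a simple object schema.

    Returns the parsed arguments or raises ValueError describing the first problem found.
    """
    try:
        arguments = json.loads(raw_arguments)
    except json.JSONDecodeError as error:
        raise ValueError(f"Arguments are not valid JSON: {error}") from error
    if not isinstance(arguments, dict):
        raise ValueError("Arguments must be a JSON object")

    properties = parameters_schema.get("properties", {})
    for name in parameters_schema.get("required", []):
        if name not in arguments:
            raise ValueError(f"Missing required argument: {name}")

    for name, value in arguments.items():
        if name not in properties:
            raise ValueError(f"Unexpected argument: {name}")  # possibly hallucinated
        type_name = properties[name].get("type")
        expected = JSON_TYPES.get(type_name)
        if expected is None:
            continue  # type not covered by this simple check
        # bool is a subclass of int in Python, so reject it explicitly for non-boolean types.
        if not isinstance(value, expected) or (isinstance(value, bool) and type_name != "boolean"):
            raise ValueError(f"Argument {name} should be of type {type_name}")
    return arguments
```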

```json
{
  "model": "gpt-4",
  "messages": [
    { "role": "user", "content": "Unlock my car" }
  ],
  "tools": [
    {
      "type": "function",
      "function": {
        "name": "unlock",
        "description": "Unlocks the car",
        "parameters": {
          "type": "object",
          "properties": {
            "vin": {
              "type": "string",
              "description": "Car identifier"
            }
          },
          "required": ["vin"]
        }
      }
    }
  ]
}
```

To avoid making the article even longer, I encourage you to visit the official documentation for reference.

If the LLM decides to call a function instead of answering the user, the response contains the function-calling request.

```json
{
  …
  "choices": [
    {
      "index": 0,
      "message": {
        "role": "assistant",
        "content": null,
        "tool_calls": [
          {
            "id": "call_abc123",
            "type": "function",
            "function": {
              "name": "unlock",
              "arguments": "{\"vin\": \"ABC123\"}"
            }
          }
        ]
      },
      "logprobs": null,
      "finish_reason": "tool_calls"
    }
  ]
}
```

Based on the finish_reason value, your application decides whether to return the content to the user or to execute the operation. The important fact is that there is no magic that automatically calls an API or executes a function in your code. Your application must find the function by its name and parse the arguments from the JSON-formatted string. Then the function's result should be sent to the LLM (not to the user), and the LLM decides on the next step: to call another function (or the same one with different arguments) or to write a response for the user. To send the result to the LLM, just add it to the conversation.
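The loop described above can be sketched as follows. To keep it self-contained and testable, the model is replaced by a hypothetical `fake_llm` function that returns hard-coded choices shaped like the Chat Completions response above; in a real application, this would be a call to the Azure OpenAI endpoint. The `unlock` implementation and the `TOOLS` registry are hypothetical placeholders for your vehicle backend.

```python
import json
from typing import Callable


def unlock(vin: str) -> dict:
    """Hypothetical placeholder for a call to the vehicle backend."""
    return {"success": True}


# Registry: the function name the LLM sees -> the Python function that implements it.
TOOLS: dict[str, Callable[..., dict]] = {"unlock": unlock}


def fake_llm(messages: list[dict]) -> dict:
    """Hypothetical stand-in for the chat completions call; returns one choice."""
    if messages[-1]["role"] == "user":
        return {
            "finish_reason": "tool_calls",
            "message": {
                "role": "assistant",
                "content": None,
                "tool_calls": [{
                    "id": "call_abc123",
                    "type": "function",
                    "function": {"name": "unlock", "arguments": "{\"vin\": \"ABC123\"}"},
                }],
            },
        }
    return {"finish_reason": "stop", "message": {"role": "assistant", "content": "Sure, I've unlocked your car."}}


def run_conversation(messages: list[dict], llm: Callable[[list[dict]], dict] = fake_llm, max_steps: int = 5) -> str:
    """Call the LLM, execute requested tools, feed results back, repeat until it answers the user."""
    for _ in range(max_steps):
        choice = llm(messages)
        message = choice["message"]
        messages.append(message)  # keep the assistant turn (including tool_calls) in the history
        if choice["finish_reason"] != "tool_calls":
            return message["content"]  # a normal answer for the user
        for call in message["tool_calls"]:
            function = TOOLS.get(call["function"]["name"])
            if function is None:
                result = {"error": f"Unknown function {call['function']['name']}"}
            else:
                # In production, validate the arguments before executing anything.
                arguments = json.loads(call["function"]["arguments"])
                result = function(**arguments)
            # The result goes back to the LLM, not to the user.
            messages.append({"role": "tool", "tool_call_id": call["id"], "content": json.dumps(result)})
    raise RuntimeError("Too many tool-calling rounds")
```

The `max_steps` limit is a design choice worth keeping in some form: without it, a model that keeps requesting tools would loop (and spend tokens) indefinitely.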

```json
{
  "model": "gpt-4",
  "messages": [
    { "role": "user", "content": "Unlock my car" },
    {
      "role": "assistant",
      "content": null,
      "tool_calls": [
        {
          "id": "call_abc123",
          "type": "function",
          "function": { "name": "unlock", "arguments": "{\"vin\": \"ABC123\"}" }
        }
      ]
    },
    { "role": "tool", "tool_call_id": "call_abc123", "content": "{\"success\": true}" }
  ],
  "tools": [
    …
  ]
}
```

In the example above, the next response is more or less “Sure, I’ve unlocked your car”.

With this approach, you need to send with each request not only the conversation history and system prompts but also the list of all available functions with all their parameters. Keep this in mind when counting your tokens.

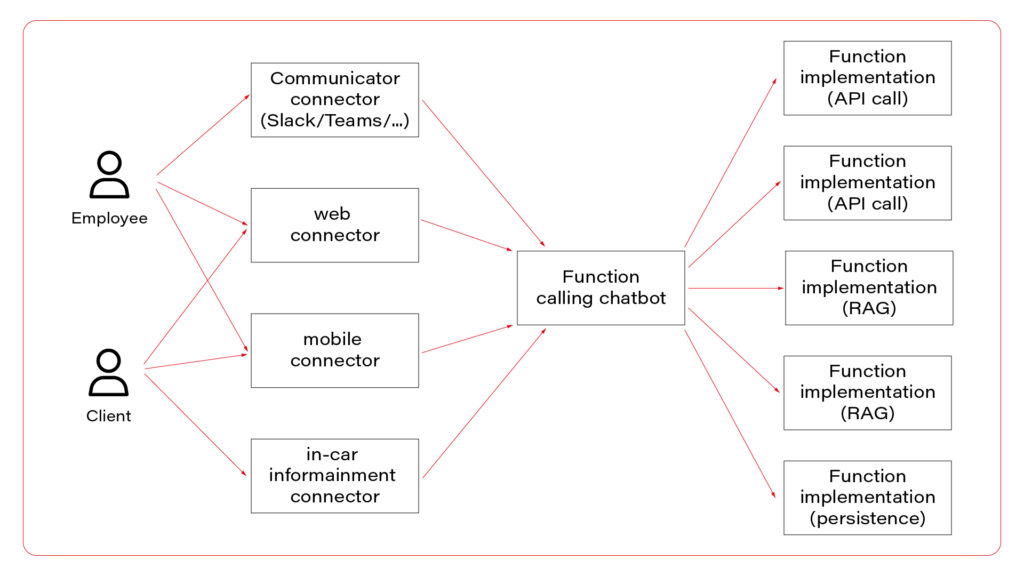

Follow up

As you can see, we can limit the chatbot's versatility with prompt engineering and boost its resourcefulness with RAG or external tools. This brings LLM usability to another level, but now we need to put it all together without throwing the baby out with the bathwater. In the last article, we’ll consider the application architecture, add some optimizations, and avoid common pitfalls. We’ll be right back!
