Fleet management task with AWS IoT – overcoming limitations of Docker Virtual Networks

We’re working for a client that produces fire trucks. The first article covers the list of requirements and the architecture proposal, and the second one walks through a step-by-step implementation of the prototype. This time, we close the topic with a DHCP implementation and UDP broadcast and multicast tests.

DHCP server and client

A major issue with Docker is assigning IP addresses to containers. Relying on Docker's automatic address assignment is impractical, and so is setting addresses manually every time a container starts. An architecture intended for the IoT edge should ensure that the device's state can be easily reproduced, even after a power failure or a reboot.

It may also be necessary to set fixed addresses for containers that serve as reference points for the entire architecture, such as the Router container in our previous article. It is also worth considering a scenario where an external provider wants to connect extra devices to the edge device. As part of such a collaboration, it may be necessary to provide immutable IP addresses, e.g., for an IP discovery service.

Our job is to provide a service that assigns IP addresses from configurable pools to both physical and virtual devices in VLANs. It sounds like DHCP and, indeed, it is DHCP, but with Docker it's not that simple. Unfortunately, Docker uses its own IP address management (IPAM) mechanism, which cannot be linked to a network DHCP server out of the box.

The proposed solution relies on a DHCP server and a DHCP client. At startup, the script responsible for running the Docker container calls the DHCP client and receives the MAC address and IP address the container will use.

Ultimately, we want a permanent configuration of these parameters, stored in a file or a simple database. This gives us an immutable configuration for the basic network parameters of each Docker container. To link the MAC address, the IP address, and the Docker container, we propose adding the name of the intended Docker container to each record. Together, these three elements uniquely identify the Docker container.

When the script starts, it first checks whether a lease already exists for the IP/MAC address pair associated with the Docker container name, and only if there is none does it query the DHCP server for an available IP address.

This achieves a configuration that is resistant to IP conflicts and guarantees the reusability of previously assigned IP addresses.
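A minimal sketch of such a name-based record store, assuming a JSON file as the "simple database" and a MAC address derived from a hash of the container name. Both choices, as well as the helper names `mac_for_container` and `lookup_or_register`, are hypothetical and not part of the prototype. The lookup runs first; the caller-supplied `request_ip` function (for example, a wrapper around the Python DHCP client from the DHCP Client section) is called only when no record exists yet.

```python
import hashlib
import json
from pathlib import Path


def mac_for_container(name: str) -> str:
    """Derive a stable MAC address from a container name (hypothetical scheme)."""
    octets = bytearray(hashlib.sha256(name.encode("utf-8")).digest()[:6])
    # Set the "locally administered" bit and clear the multicast bit of the first octet
    octets[0] = (octets[0] | 0x02) & 0xFE
    return ":".join(f"{b:02x}" for b in octets)


def lookup_or_register(db_path, name, request_ip):
    """Return (mac, ip) for a container, asking for a new IP only if no record exists.

    request_ip is a callable that takes a MAC string and returns an IP string
    (hypothetical interface, e.g. a wrapper around a DHCP client).
    """
    path = Path(db_path)
    records = json.loads(path.read_text()) if path.exists() else {}
    if name in records:
        # A record already exists: reuse it so the container keeps its address
        return records[name]["mac"], records[name]["ip"]
    mac = mac_for_container(name)
    ip = request_ip(mac)
    records[name] = {"mac": mac, "ip": ip}
    path.write_text(json.dumps(records, indent=2))
    return mac, ip
```

Deriving the MAC from the name means that even if the record file is lost, the container asks the DHCP server with the same MAC, so a server that still remembers the lease can hand back the same address.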

DHCP server

For our use case, we’ve decided to rely on the isc-dhcp-server package. Below is a sample configuration you can adjust to your needs.

dhcpd.conf

authoritative;
one-lease-per-client true;

subnet 10.0.1.0 netmask 255.255.255.0 {
    range 10.0.1.2 10.0.1.200;
    option domain-name-servers 8.8.8.8, 8.8.4.4;
    option routers 10.0.1.3;
    option subnet-mask 255.255.255.0;
    default-lease-time 3600;
    max-lease-time 7200;
}

subnet 10.0.2.0 netmask 255.255.255.0 {
    range 10.0.2.2 10.0.2.200;
    option domain-name-servers 8.8.8.8, 8.8.4.4;
    option routers 10.0.2.3;
    option subnet-mask 255.255.255.0;
    default-lease-time 3600;
    max-lease-time 7200;
}

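Here is a breakdown of the configuration above. There are two subnets, each with its own address pool, one for each VLAN in our network.

subnet ... netmask – this declares the network (here 10.0.1.0/24 and 10.0.2.0/24) to which the options inside the braces apply.

range – this defines the pool of addresses the server hands out dynamically in that subnet; both its start and its end must lie inside the subnet. As written, each pool also contains the .3 gateway address. If the Router container holds that address statically, start the pool above it (e.g., at .4) so the server never offers it to another client.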

authoritative - this directive means that the DHCP server is the authoritative source for the network. If a client requests an IP address that another DHCP server gave it, this server replies with a DHCPNAK, telling the client that the address is invalid and effectively forcing it to ask for a new one.

one-lease-per-client - this ensures that each client holds only one lease at a time: when a client requests a lease, the server frees any other leases that client holds. This prevents a single client from consuming multiple IP addresses and shrinking the available pool.

option domain-name-servers – this assigns DNS servers to the DHCP clients. In this case, it's using Google's public DNS servers (8.8.8.8 and 8.8.4.4).

option routers – this assigns a default gateway to the DHCP clients. Devices in the first subnet use 10.0.1.3 (the Router container) as their way out of the local network, e.g., to reach the internet or other networks, while devices in the second subnet use 10.0.2.3.

option subnet-mask – this specifies the subnet mask to be assigned to DHCP clients, which in this case is 255.255.255.0. It determines the network portion of an IP address.

default-lease-time – specifies how long, in seconds, a DHCP lease will be valid if the client doesn't ask for a specific lease time. Here, it's set to 3600 seconds, which is equivalent to 1 hour.

max-lease-time - this sets the maximum amount of time, in seconds, a client can lease an IP address. Here, it's 7200 seconds or 2 hours.
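A typo in a range (for example, an end address taken from the other subnet) or a pool that overlaps a statically assigned gateway is easy to miss when editing dhcpd.conf by hand. The sketch below checks each pool offline with Python's standard `ipaddress` module. The pool tuples are copied by hand from the configuration above (the second pool is assumed to end at 10.0.2.200), and `check_pool` is a hypothetical helper, not part of isc-dhcp-server.

```python
import ipaddress

# (subnet, range start, range end, gateway), copied by hand from dhcpd.conf
POOLS = [
    ("10.0.1.0/24", "10.0.1.2", "10.0.1.200", "10.0.1.3"),
    ("10.0.2.0/24", "10.0.2.2", "10.0.2.200", "10.0.2.3"),
]


def check_pool(subnet, start, end, gateway):
    """Return a list of problems found in one subnet/range/gateway declaration."""
    net = ipaddress.ip_network(subnet)
    first, last, gw = (ipaddress.ip_address(a) for a in (start, end, gateway))
    problems = []
    if first not in net or last not in net:
        problems.append("range is not inside the subnet")
    if first > last:
        problems.append("range start is above range end")
    if gw not in net:
        problems.append("gateway is not inside the subnet")
    elif first <= gw <= last:
        problems.append("gateway address is part of the dynamic pool")
    return problems


if __name__ == "__main__":
    for pool in POOLS:
        print(pool[0], check_pool(*pool) or "OK")
```

For example, `check_pool("10.0.2.0/24", "10.0.2.2", "10.0.1.200", "10.0.2.3")` reports that the range is not inside the subnet and that its start is above its end, while each pool in `POOLS` reports only the gateway overlap.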

DHCP Client

In our scenario, all new application containers are added to the system via bash commands executed on the Host – the fire truck’s main computer, or a Raspberry Pi in our prototype. See the previous article for the commands used to add containers. These commands require an IP address and a gateway for each container.

Our approach is to obtain an address from the DHCP server dynamically and then set up the container with that address configured as a static IP. To achieve this, we need a shell-friendly DHCP client. We’ve decided to go with a Python script that can be called when creating new containers.
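Once the DHCP client has returned an address, the container can be started with that address pinned as a static IP. A sketch of the command-building step, assuming a user-defined Docker network (the name `vlan1-net` below is hypothetical) that was created with the VLAN's subnet and gateway: `docker run` sets `--ip` and `--mac-address` per container but takes the gateway from the network definition. `docker_run_args` is our own helper, not part of the prototype's scripts; the optional sysctls map to `docker run --sysctl`.

```python
import ipaddress
import shlex


def docker_run_args(name, image, network, ip, mac, sysctls=None):
    """Build a `docker run` argument list that pins the container's IP and MAC."""
    ipaddress.ip_address(ip)  # raises ValueError for a malformed address
    args = ["docker", "run", "-d", "--name", name,
            "--network", network, "--ip", ip, "--mac-address", mac]
    for key, value in (sysctls or {}).items():
        args += ["--sysctl", f"{key}={value}"]
    args.append(image)
    return args


if __name__ == "__main__":
    # Hypothetical values; in practice they come from the DHCP client
    args = docker_run_args("container1", "alpine", "vlan1-net",
                           "10.0.1.50", "02:42:0a:00:01:32",
                           {"net.ipv4.icmp_echo_ignore_broadcasts": 0})
    print(shlex.join(args))
```

Returning an argument list instead of a shell string lets the caller pass it straight to `subprocess.run(args, check=True)` without quoting issues.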

DHCP Client Example (Python)

See the comments in the script below for an explanation of each block.

from scapy.layers.dhcp import BOOTP, DHCP
from scapy.layers.inet import UDP, IP, ICMP
from scapy.layers.l2 import Ether
from scapy.sendrecv import sendp, sniff
from scapy.utils import mac2str

IFACE = "enp2s0"  # host interface connected to the network served by the DHCP server


# Return the value of a DHCP option (e.g. "message-type", "server_id"), or None if absent
def dhcp_option(packet, name):
    for opt in packet[DHCP].options:
        if isinstance(opt, tuple) and opt[0] == name:
            return opt[1]
    return None


# True for a BOOTREPLY (op == 2) carrying the given DHCP message type (2 = OFFER, 5 = ACK)
def is_dhcp_reply(packet, msg_type):
    return (BOOTP in packet and DHCP in packet and packet[BOOTP].op == 2
            and dhcp_option(packet, "message-type") == msg_type)


# Sending the DHCPDISCOVER packet (broadcast, since the client has no IP yet)
def locate_dhcp(src_mac_addr):
    packet = Ether(dst="ff:ff:ff:ff:ff:ff", src=src_mac_addr, type=0x0800) / \
        IP(src="0.0.0.0", dst="255.255.255.255") / \
        UDP(sport=68, dport=67) / \
        BOOTP(op=1, chaddr=mac2str(src_mac_addr)) / \
        DHCP(options=[("message-type", "discover"), "end"])
    sendp(packet, iface=IFACE)


# Receiving the offer: sniff DHCP traffic until a DHCPOFFER arrives or 5 seconds pass
def capture_offer():
    return sniff(iface=IFACE, filter="udp and port 67 and port 68",
                 stop_filter=lambda packet: is_dhcp_reply(packet, 2), timeout=5)


# Requesting the offered IP from the server that made the offer
def transmit_request(src_mac_addr, req_ip, srv_ip):
    packet = Ether(dst="ff:ff:ff:ff:ff:ff", src=src_mac_addr, type=0x0800) / \
        IP(src="0.0.0.0", dst="255.255.255.255") / \
        UDP(sport=68, dport=67) / \
        BOOTP(op=1, chaddr=mac2str(src_mac_addr)) / \
        DHCP(options=[("message-type", "request"),
                      ("client_id", b"\x01" + mac2str(src_mac_addr)),  # type 1 = Ethernet
                      ("requested_addr", req_ip),
                      ("server_id", srv_ip), "end"])
    sendp(packet, iface=IFACE)


# Reading the acknowledgement: sniff until a DHCPACK arrives or 5 seconds pass
def capture_acknowledgement():
    return sniff(iface=IFACE, filter="udp and port 67 and port 68",
                 stop_filter=lambda packet: is_dhcp_reply(packet, 5), timeout=5)


# Sending an ICMP echo request from the leased IP to the DHCP server as a connectivity test
def transmit_test_packet(src_mac_addr, src_ip_addr, dst_mac_addr, dst_ip_addr):
    packet = Ether(src=src_mac_addr, dst=dst_mac_addr) / IP(src=src_ip_addr, dst=dst_ip_addr) / ICMP()
    sendp(packet, iface=IFACE)


if __name__ == "__main__":
    # dummy MAC address (in practice, the MAC planned for the new container)
    mac_addr = "aa:bb:cc:11:22:33"
    print("START")

    print("SEND: Discover")
    locate_dhcp(mac_addr)

    print("RECEIVE: Offer")
    # Note: sniffing starts only after sendp() returns, so a very fast reply could be missed
    offers = capture_offer()
    if not offers or not is_dhcp_reply(offers[-1], 2):
        raise SystemExit("No DHCPOFFER received")
    offer = offers[-1]  # the packet that satisfied stop_filter
    server_mac_addr = offer[Ether].src
    # siaddr is the "next server" field, not necessarily the DHCP server; prefer option 54
    server_ip_addr = dhcp_option(offer, "server_id") or offer[IP].src
    offered_ip_addr = offer[BOOTP].yiaddr
    print("OFFER:", offered_ip_addr)

    print("SEND: Request for", offered_ip_addr)
    transmit_request(mac_addr, offered_ip_addr, server_ip_addr)

    print("RECEIVE: Acknowledge")
    acks = capture_acknowledgement()
    if not acks or not is_dhcp_reply(acks[-1], 5):
        raise SystemExit("No DHCPACK received for " + offered_ip_addr)
    print("ACKNOWLEDGE:", acks[-1][BOOTP].yiaddr)

    print("SEND: Test IP Packet")
    transmit_test_packet(mac_addr, offered_ip_addr, server_mac_addr, server_ip_addr)
    print("END")

Let’s talk about our use case.

The business requirement is to add another device to the edge, perhaps a thermal imaging camera. Our goal is to make onboarding the device into our system as automatic as possible. In our case, adding a new device also means connecting it to the customer-provided Docker container.

The expected result is a process that registers the new Docker container with the IP address assigned by the DHCP server. The IP address naturally depends on the VLAN in which the new device is located.

In summary, plugging in a new device at this point just means that its IP address is automatically assigned and bound. The new device learns the Router container's address from the DHCP gateway option, so communication works from the very beginning.
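Choosing a new container's network settings then reduces to finding the VLAN whose subnet contains the assigned address. A sketch using the two subnets and gateways from the dhcpd.conf above; treating 10.0.2.0/24 as VLAN 2 and the helper name `vlan_for_ip` are assumptions for illustration.

```python
import ipaddress

# Subnets and gateways from dhcpd.conf; the VLAN 2 mapping is an assumption
VLANS = {
    1: {"subnet": ipaddress.ip_network("10.0.1.0/24"), "gateway": "10.0.1.3"},
    2: {"subnet": ipaddress.ip_network("10.0.2.0/24"), "gateway": "10.0.2.3"},
}


def vlan_for_ip(ip):
    """Return (vlan_id, gateway) for the VLAN whose subnet contains ip, or None."""
    addr = ipaddress.ip_address(ip)
    for vlan_id, cfg in VLANS.items():
        if addr in cfg["subnet"]:
            return vlan_id, cfg["gateway"]
    return None
```

For an address leased in the second pool, such as 10.0.2.77, the function returns VLAN 2 with gateway 10.0.2.3; an address outside both subnets returns None and should stop the registration.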

UDP broadcast and multicast setup

Broadcast UDP is a method for sending a message to all devices on a network segment, which allows for efficient communication and discovery of other devices on the same network. In an IoT context, this can be used for the discovery of devices and services, such as finding nearby devices for data exchange or sending a command to all devices in a network.

Multicast, on the other hand, allows for the efficient distribution of data to a group of devices on a network. This can be useful in scenarios where the same data needs to be sent to multiple devices at the same time, such as a live video stream or a software update.

One purpose of the architecture was to provide a seamless, isolated, LAN-like environment for each application. Therefore, it was critical to enable applications to use not only direct, IP, or DNS-based communication but also to allow multicasting and broadcasting messages. These protocols enable devices to communicate with each other in a way that is scalable and bandwidth-efficient, which is crucial for IoT systems where there may be limited network resources available.

The presented architecture provides a solution for dockerized applications that use UDP broadcast/multicast. The Router container environment is intended to host applications that distribute data to other containers in this manner.

Let’s check whether those techniques work in our edge networks.

Broadcast

Start the test by running a UDP listener in the Container1 container:

nc -ulp 5000

The command uses the netcat (nc) utility to listen (-l) for incoming UDP (-u) datagrams on port 5000 (-p 5000).
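For containers without netcat, the same listener can be sketched with Python's standard `socket` module. This is an equivalent under our own assumptions; the helper names `open_udp_listener` and `receive_one` are hypothetical and not part of the prototype.

```python
import socket


def open_udp_listener(port=5000, bind_addr="0.0.0.0"):
    """Open a UDP socket on the given port, like `nc -ulp 5000`.

    On Linux, binding to 0.0.0.0 (rather than the interface's unicast address)
    is what lets the socket also receive datagrams sent to the broadcast address.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind((bind_addr, port))
    return sock


def receive_one(sock, timeout=None, bufsize=2048):
    """Block until one datagram arrives; return (payload, (sender_ip, sender_port))."""
    sock.settimeout(timeout)
    return sock.recvfrom(bufsize)
```

In Container1, `receive_one(open_udp_listener(5000))` waits for the next datagram and returns its payload together with the sender's address.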

Then, let’s produce a message on the Router container.

echo -n "foo" | nc -uv -b -s 10.0.1.3 -w1 10.0.1.255 5000

The command above uses echo and netcat to send a UDP datagram containing the string "foo" to all devices on the local network segment.

Breaking down the command:

echo -n "foo" - This command prints the string "foo" to standard output without a trailing newline character.

nc - The nc command is used to create network connections and can be used for many purposes, including sending and receiving data over a network.

-uv - These options specify that nc should use UDP as the transport protocol and that it should be run in verbose mode.

-b - This option sets the SO_BROADCAST socket option, allowing the UDP packet to be sent to all devices on the local network segment.

-s 10.0.1.3 - This option sets the source IP address of the UDP packet to 10.0.1.3.

-w1 - This option sets the timeout for the nc command to 1 second.

10.0.1.255 - This is the destination IP address of the UDP packet, which is the broadcast address for the local network segment.

5000 - This is the destination port number for the UDP packet.

Please note that both source and destination addresses belong to VLAN 1. Therefore, the datagram is sent via the eth0 interface to this VLAN only.

The expected result is that Container1 receives the "foo" message from the Router container via UDP broadcast.
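The sending side can be sketched the same way. `send_udp_broadcast` is a hypothetical helper that mirrors the nc options above: `SO_BROADCAST` corresponds to `-b`, and binding to a source address corresponds to `-s`.

```python
import socket


def send_udp_broadcast(payload, dest_addr="10.0.1.255", port=5000, source_addr=None):
    """Send one UDP datagram like `nc -u -b -s <source> <dest> <port>`.

    Returns the number of bytes sent.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        # Equivalent of nc -b: Linux refuses to send to a broadcast address without it
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        if source_addr is not None:
            # Equivalent of nc -s: pins the source address of the datagram
            sock.bind((source_addr, 0))
        return sock.sendto(payload, (dest_addr, port))
```

On the Router container, `send_udp_broadcast(b"foo", "10.0.1.255", 5000, source_addr="10.0.1.3")` corresponds to the nc command shown earlier.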

Multicast

Let's focus on the Docker container parameters specified when creating containers (the Docker containers [execute on host] sub-chapter in the previous article). In the context of Docker containers, the --sysctl net.ipv4.icmp_echo_ignore_broadcasts=0 option is crucial if the container must answer ICMP echo requests (pings) sent to a broadcast or multicast address. By default, the Linux kernel ignores such requests, so a ping to a multicast group gets no reply from the container even when the container has joined the group. The option does not affect UDP traffic itself, but the ping-based verification below depends on it.

Without setting this parameter to 0, such a test may suggest that the container is unreachable or that the group membership failed, when in fact the kernel is simply ignoring the echo requests. Therefore, the --sysctl net.ipv4.icmp_echo_ignore_broadcasts=0 option is needed in Docker use cases where containers must respond to ICMP echo requests sent to broadcast or multicast addresses.
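To confirm from inside a running container that the option took effect, read the value the kernel exposes under /proc/sys, where the dots of the sysctl key become path separators. A minimal sketch; `answers_broadcast_pings` is a hypothetical helper name.

```python
from pathlib import Path

# /proc/sys path corresponding to the net.ipv4.icmp_echo_ignore_broadcasts key
SYSCTL_PATH = "/proc/sys/net/ipv4/icmp_echo_ignore_broadcasts"


def answers_broadcast_pings(path=SYSCTL_PATH):
    """Return True if the kernel replies to ICMP echo sent to broadcast/multicast addresses."""
    return Path(path).read_text().strip() == "0"
```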

Usage example

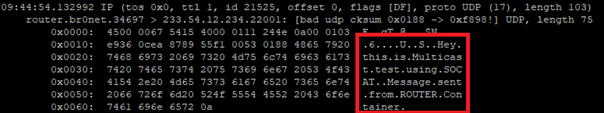

Run the command below in the Container1 container (see the previous article for naming references). We use socat, a command-line utility that establishes two bidirectional byte streams and transfers data between them. Please note that the IP address of the multicast group does not belong to the VLAN 1 address space.

socat -u UDP4-RECV:22001,ip-add-membership=233.54.12.234:eth0 /dev/null &
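The socat listener above joins the group on a named interface. The equivalent socket calls can be sketched in Python as follows. This assumes Linux, where `IP_ADD_MEMBERSHIP` also accepts a `struct ip_mreqn` carrying the interface index (looked up here with `socket.if_nametoindex`). `build_mreqn` and `join_multicast` are hypothetical helper names.

```python
import socket
import struct


def build_mreqn(group, ifindex, local_addr="0.0.0.0"):
    """Pack a Linux struct ip_mreqn: group address, local address, interface index."""
    return struct.pack("=4s4si", socket.inet_aton(group),
                       socket.inet_aton(local_addr), ifindex)


def join_multicast(group="233.54.12.234", port=22001, iface="eth0"):
    """Open a UDP socket and join `group` on `iface`, like socat's ip-add-membership."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", port))
    mreq = build_mreqn(group, socket.if_nametoindex(iface))
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP, mreq)
    return sock
```

Unlike the socat command, which discards the data into /dev/null, `join_multicast().recvfrom(2048)` in Container1 returns each message sent to the group.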

Then, add the route to the multicast group.

ip route add 233.54.12.234/32 dev eth0

You can ping the group address from Device1 to verify that Container1 has joined the group.

ping -I eth0 -t 2 233.54.12.234

As you can see, the ping command needs the interface parameter (-I eth0) to enforce the correct outgoing interface. The -t 2 parameter sets the TTL to 2, so the echo requests can cross at most one router on the way to the group members.

Now, use socat on Device1 to send messages to the group.

ip route add 233.54.12.234/32 dev eth0

socat STDIO UDP-DATAGRAM:233.54.12.234:22001

Please note that you have to set up the route: the multicast group address does not belong to any local subnet, so without it, the packets would follow the default route to the router instead of going out through eth0.

Now, you can type a message on Device1 and use tcpdump on Container1 to see it arrive.
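On Device1, the TTL limit and the outgoing interface can also be set per socket instead of per command. A sketch assuming Linux socket options; `make_multicast_sender` is a hypothetical helper, and `iface_addr` stands for Device1's IPv4 address on eth0, which is not given in this article.

```python
import socket


def make_multicast_sender(ttl=2, iface_addr=None):
    """Create a UDP socket for sending to a multicast group.

    ttl mirrors `ping -t 2`: each router on the path decrements it, so a value
    of 2 lets datagrams cross at most one router. iface_addr, if given, selects
    the outgoing interface for this socket only, instead of relying on the
    routing table.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_MULTICAST_TTL, ttl)
    if iface_addr is not None:
        sock.setsockopt(socket.IPPROTO_IP, socket.IP_MULTICAST_IF,
                        socket.inet_aton(iface_addr))
    return sock
```

Usage on Device1: `make_multicast_sender(ttl=2).sendto(b"hello", ("233.54.12.234", 22001))` sends one message to the group that Container1 joined.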

tcpdump -i eth0 -Xavvv

Summary

Nowadays, a major challenge faced by developers and customers is to guarantee maximum security while ensuring compatibility and openness to change for edge devices. As part of IoT, it is imperative to keep in mind that the delivered solution may be extended in the future with additional hardware modules, and thus, the environment into which this module will be deployed must be ready for changes.

This raises the non-trivial question of how to meet business requirements while following hardware vendors' guidelines and the applicable legal standards.

Translating the presented architecture into a fire trucks context, all the requirements from the introduction regarding isolation and modularity of the environment have been met. Each truck has the ability to expand the connected hardware while maintaining security protocols. In addition, the Docker images that work with the hardware know only their private scope and the router's scope.

The proposed solution shows how to obtain a change-ready environment that meets security requirements. A key element of the architecture is that applications can communicate only within the VLAN in which they are located.

This way, any modification should not affect already existing processes on the edge side. It is also worth detailing the role played by the Router component. With it, we guarantee a way to communicate between Docker containers while maintaining a configuration that allows you to control network traffic.

We have also included a solution for UDP broadcast/multicast communication. Many current hardware solutions transmit data this way. This means that if, for example, we are waiting for emergency data on a device, we must also be ready to handle broadcasts and ensure that packets are consumed only by the components designed for this purpose.

Beyond fire trucks, the presented solution also applies to other industries. The idea of independent Docker images and modular hardware makes it suitable even for automotive and high-reliability areas, where multiple devices, not necessarily from the same supplier, must work together.

We encourage you to think about further potential applications and thank you for taking the time to read.

Grape Up guides enterprises on their data-driven transformation journey
