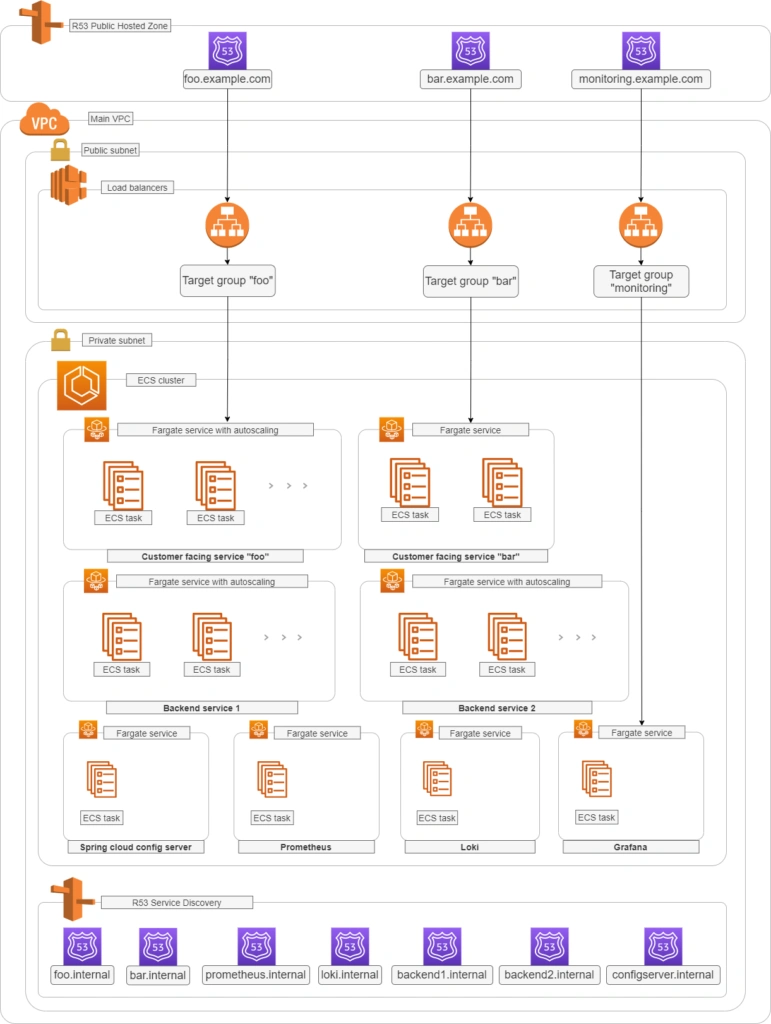

Monitoring your microservices on AWS with Terraform and Grafana - monitoring

Welcome back to the series. We hope you’ve enjoyed the previous part and you’re back to learn the key points. Today we’re going to show you how to monitor the application.

Monitoring

We would like to have logs and metrics in a single place. Imagine you see something strange on your charts, select it with your mouse, and immediately get the log entries from that exact timeframe and that exact machine displayed below. Now, let’s make it real.

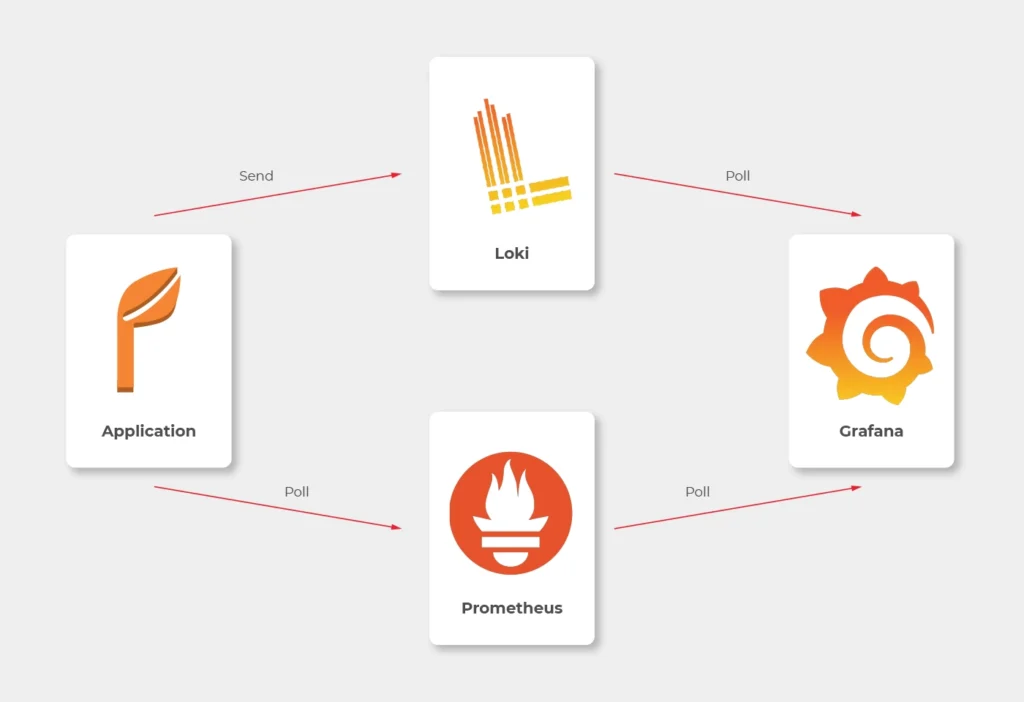

Some basics first. There is a big difference between the way Prometheus and Loki get their data. Grafana queries both of them, but Prometheus also actively calls the application to pull (scrape) metrics. Loki, in contrast, only listens, so it needs an extra mechanism to receive logs from applications.

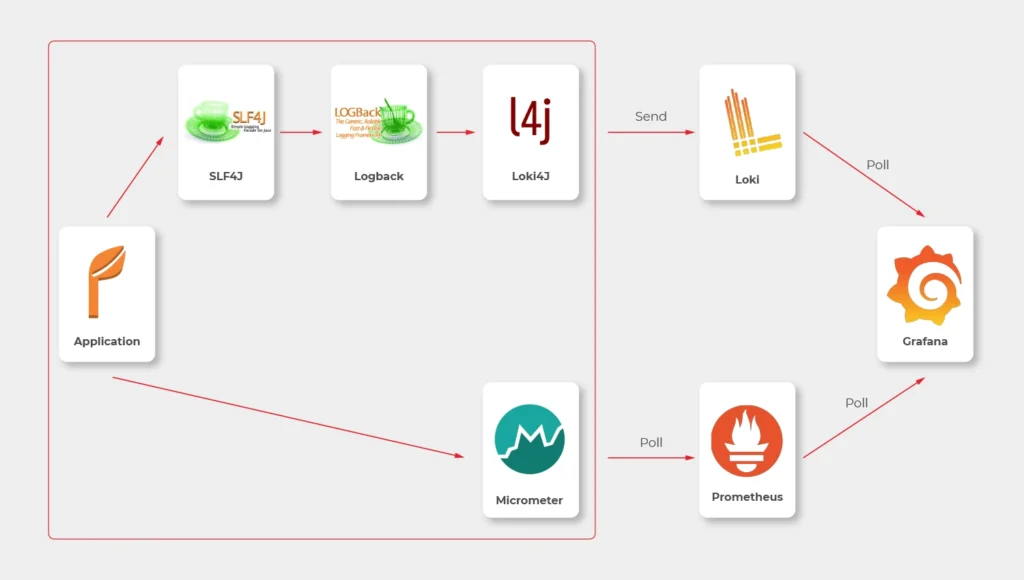

In most sources on the Internet, you’ll find that the best way to send logs to Loki is Promtail. It is a small tool, developed by Loki’s authors, which reads log files and sends them entry by entry to a remote Loki endpoint. But it’s not perfect. Support for multiline logs is still in bad shape (as of February 2021), some of the configuration is designed to work with Kubernetes only, and at the end of the day it is one more application you would need to run inside your Docker image, which can get a little messy. Instead, we propose using the loki4j Logback appender (https://github.com/loki4j). It is a zero-dependency Java library designed to send logs directly from your application.
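To make the push model more concrete, here is a minimal sketch of the JSON body that Loki’s push endpoint (/loki/api/v1/push) accepts: a list of streams, each identified by its labels and carrying timestamped log lines. The class and method names are hypothetical and the escaping is deliberately simplified. In this series the appender builds and sends the request for you (using Protobuf rather than JSON), so treat this only as an illustration of what travels over the wire.

```java
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

// Hypothetical helper, for illustration only: a log appender does this work for you.
class LokiPushSketch {

    // Escapes backslashes, quotes and newlines. A real encoder must handle every control character.
    static String jsonEscape(String s) {
        return s.replace("\\", "\\\\")
                .replace("\"", "\\\"")
                .replace("\n", "\\n");
    }

    // Builds a push body with a single stream and a single log entry.
    // Labels identify the stream; the timestamp is Unix epoch time in nanoseconds, sent as a string.
    static String pushBody(Map<String, String> labels, long epochNanos, String line) {
        String stream = new TreeMap<>(labels).entrySet().stream()
                .map(e -> "\"" + jsonEscape(e.getKey()) + "\":\"" + jsonEscape(e.getValue()) + "\"")
                .collect(Collectors.joining(","));
        return "{\"streams\":[{\"stream\":{" + stream + "},"
                + "\"values\":[[\"" + epochNanos + "\",\"" + jsonEscape(line) + "\"]]}]}";
    }

    public static void main(String[] args) {
        long nowNanos = System.currentTimeMillis() * 1_000_000L;
        System.out.println(pushBody(Map.of("application", "foo", "level", "INFO"), nowNanos, "Started foo"));
    }
}
```

Because the application initiates the request, Loki never needs to know where the applications live, which is the opposite of how Prometheus works.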

There is one more Java library needed: Micrometer. We’re going to use it to collect the application’s metrics.

So, the proper diagram should look like this.

Which means, we need to build or configure the following pieces:

- slf4j (default configuration is enough)

- Logback

- Loki4j

- Loki

- Micrometer

- Prometheus

- Grafana

Micrometer

Let’s start with metrics first.

There are just three things to do on the application side.

The first one is to add a dependency on Micrometer with the Prometheus integration (registry).

<dependency>

<groupId>io.micrometer</groupId>

<artifactId>micrometer-registry-prometheus</artifactId>

</dependency>

Now, we have a new endpoint exposable from Spring Boot Actuator, so we need to enable it.

management:
  endpoints:
    web:
      exposure:
        include: prometheus,health

This is the piece of configuration to add. Make sure you include prometheus in both the config server’s and the config clients’ configuration. If you have some Web Security configured, make sure to allow full access to the /actuator/health and /actuator/prometheus endpoints.

Now we would like to distinguish applications in our metrics, so we have to add a custom tag in all applications. We propose to add this piece of code as a Java library and import it with Maven.

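import io.micrometer.core.instrument.MeterRegistry;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.boot.actuate.autoconfigure.metrics.MeterRegistryCustomizer;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

@Configuration
public class MetricsConfig {

    // Adds an "application" tag to every metric so services can be told apart in Prometheus.
    @Bean
    MeterRegistryCustomizer<MeterRegistry> configurer(@Value("${spring.application.name}") String applicationName) {
        return (registry) -> registry.config().commonTags("application", applicationName);
    }
}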

Make sure you have spring.application.name configured in all bootstrap.yml files in config clients and application.yml in the config server.
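To picture what the common tag changes, here is a small sketch of how a single sample looks in the Prometheus text exposition format once the application label is attached. The class and method names are hypothetical and the value is made up. Micrometer’s Prometheus registry renders the real output for you, so this only illustrates the shape of a line served by /actuator/prometheus.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical illustration: Micrometer's Prometheus registry renders this output for you.
class ExpositionLineSketch {

    // Escapes a label value as the text exposition format requires (backslash, quote, newline).
    static String escapeLabelValue(String v) {
        return v.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n");
    }

    // Renders one sample in the form: metric_name{label="value",...} value
    static String sampleLine(String metric, Map<String, String> labels, double value) {
        String rendered = labels.entrySet().stream()
                .map(e -> e.getKey() + "=\"" + escapeLabelValue(e.getValue()) + "\"")
                .collect(Collectors.joining(","));
        return metric + "{" + rendered + "} " + value;
    }

    public static void main(String[] args) {
        Map<String, String> labels = new LinkedHashMap<>();
        labels.put("application", "foo"); // the common tag added by MetricsConfig
        System.out.println(sampleLine("system_load_average_1m", labels, 0.42));
    }
}
```

With the label in place, a query can select the metrics of one service, for example with application="foo".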

Prometheus

The next step is to use a brand new /actuator/prometheus endpoint to read metrics in Prometheus.

The ECS configuration is similar to the one for backend services. The image you need to push to your ECR should look like this.

FROM prom/prometheus

COPY prometheus.yml .

ENTRYPOINT prometheus --config.file=prometheus.yml

EXPOSE 9090

As Prometheus doesn’t support HTTPS endpoints, it’s just a temporary solution, and we’ll change it later.

The prometheus.yml file contains the following configuration.

scrape_configs:
  - job_name: 'cloud-config-server'
    metrics_path: '/actuator/prometheus'
    scrape_interval: 5s
    dns_sd_configs:
      - names:
          - '$cloud_config_server_url'
        type: 'A'
        port: 8888
  - job_name: 'foo'
    metrics_path: '/actuator/prometheus'
    scrape_interval: 5s
    dns_sd_configs:
      - names:
          - '$foo_url'
        type: 'A'
        port: 8080
  - job_name: 'bar'
    metrics_path: '/actuator/prometheus'
    scrape_interval: 5s
    dns_sd_configs:
      - names:
          - '$bar_url'
        type: 'A'
        port: 8080
  - job_name: 'backend_1'
    metrics_path: '/actuator/prometheus'
    scrape_interval: 5s
    dns_sd_configs:
      - names:
          - '$backend_1_url'
        type: 'A'
        port: 8080
  - job_name: 'backend_2'
    metrics_path: '/actuator/prometheus'
    scrape_interval: 5s
    dns_sd_configs:
      - names:
          - '$backend_2_url'
        type: 'A'
        port: 8080

Let’s analyse the first job as an example.

We would like to call the '$cloud_config_server_url' URL with the '/actuator/prometheus' relative path on port 8888. As we’ve used dns_sd_configs and type: 'A', Prometheus can handle multivalue DNS answers from the Service Discovery and scrape all tasks in each service. Please make sure you replace all '$x' variables in the file with proper URLs from the Service Discovery.
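The idea behind the multivalue DNS answer can be sketched in a few lines of Java: one name resolves to several IPv4 addresses (one per running task), and each address plus the configured port becomes a separate scrape target. The class and method names are hypothetical, and "localhost" is only a stand-in for a Service Discovery name. Prometheus performs this lookup itself, so nothing like this needs to be deployed.

```java
import java.net.Inet4Address;
import java.net.InetAddress;
import java.net.UnknownHostException;
import java.util.ArrayList;
import java.util.List;

// Illustration only: Prometheus does this internally when dns_sd_configs is used.
class DnsTargetsSketch {

    // Resolves every IPv4 address behind a name, mirroring type: 'A'.
    static List<String> resolveIpv4(String name) throws UnknownHostException {
        List<String> ips = new ArrayList<>();
        for (InetAddress address : InetAddress.getAllByName(name)) {
            if (address instanceof Inet4Address) {
                ips.add(address.getHostAddress());
            }
        }
        return ips;
    }

    // Turns each resolved address into an "ip:port" scrape target.
    static List<String> toTargets(List<String> ips, int port) {
        List<String> targets = new ArrayList<>();
        for (String ip : ips) {
            targets.add(ip + ":" + port);
        }
        return targets;
    }

    public static void main(String[] args) throws UnknownHostException {
        // "localhost" is a placeholder; in ECS you would pass a Service Discovery name.
        System.out.println(toTargets(resolveIpv4("localhost"), 8888));
    }
}
```

Each "ip:port" pair is also what Prometheus stores in the instance label, which matters later when logs need to be matched with metrics.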

Prometheus isn’t exposed through the public load balancer, so you cannot verify your success so far. You can expose it temporarily or wait for Grafana.

Logback and Loki4j

If you use Spring Boot, you probably already have the spring-boot-starter-logging library included. Therefore, you use Logback as the default slf4j integration. Our job now is to configure it to send logs to Loki. Let’s start with the dependency:

<dependency>

<groupId>com.github.loki4j</groupId>

<artifactId>loki-logback-appender</artifactId>

<version>1.1.0</version>

</dependency>

Now let’s configure it. The first file is called logback-spring.xml and is located in the config server next to the application.yml (1) file.

<?xml version="1.0" encoding="UTF-8"?>

<configuration>

<property name="LOG_PATTERN" value="%d{yyyy-MM-dd HH:mm:ss.SSS} %-5level [%thread] %logger - %msg%n"/>

<appender name="Console" class="ch.qos.logback.core.ConsoleAppender">

<encoder>

<pattern>${LOG_PATTERN}</pattern>

</encoder>

</appender>

<springProfile name="aws">

<appender name="Loki" class="com.github.loki4j.logback.Loki4jAppender">

<http>

<url>${LOKI_URL}/loki/api/v1/push</url>

</http>

<format class="com.github.loki4j.logback.ProtobufEncoder">

<label>

<pattern>application=spring-cloud-config-server,instance=${INSTANCE},level=%level</pattern>

</label>

<message>

<pattern>${LOG_PATTERN}</pattern>

</message>

<sortByTime>true</sortByTime>

</format>

</appender>

</springProfile>

<root level="INFO">

<appender-ref ref="Console"/>

<springProfile name="aws">

<appender-ref ref="Loki"/>

</springProfile>

</root>

</configuration>

What do we have here? There are two appenders with a common pattern, and one root logger. We start with the pattern configuration <property name="LOG_PATTERN" value="%d{yyyy-MM-dd HH:mm:ss.SSS} %-5level [%thread] %logger - %msg%n"/>. Of course, you can adjust it as you like.

Then, the standard console appender. As you can see, it uses the LOG_PATTERN .

Then you can see the com.github.loki4j.logback.Loki4jAppender appender. This is where the library comes into play. We’ve used the <springProfile name="aws"> profile filter to enable it only in the AWS infrastructure and disable it locally. We use the same filter when attaching the appender with <appender-ref ref="Loki"/>. Please note the label pattern, used here to label each log with custom tags (application, instance, level). Another important part here is Loki’s URL. We need to provide it as an environment variable for the ECS task. To do that, you need to add one more line to your aws_ecs_task_definition configuration in Terraform.

"environment" : [
  ...
  { "name" : "LOKI_URL", "value" : "http://loki.internal:3100" }
],

As you can see, we point to the “loki.internal” host, which we’re going to create in a minute. The value includes the scheme and Loki’s port (3100), because the appender appends /loki/api/v1/push to it and needs a complete URL.

There are a few issues with the Logback configuration for the config clients.

First of all, you need to provide the same LOKI_URL environment variable to each client, because you need Loki before reading config from the config server.

Now, let’s put another logback-spring.xml file in the config server next to the application.yml (2) file.

<?xml version="1.0" encoding="UTF-8"?>

<configuration>

<property name="LOG_PATTERN" value="%d{yyyy-MM-dd HH:mm:ss.SSS} %-5level [%thread] %logger - %msg%n"/>

<springProperty scope="context" name="APPLICATION_NAME" source="spring.application.name"/>

<appender name="Console" class="ch.qos.logback.core.ConsoleAppender">

<encoder>

<pattern>\${LOG_PATTERN}</pattern>

</encoder>

</appender>

<springProfile name="aws">

<appender name="Loki" class="com.github.loki4j.logback.Loki4jAppender">

<http>

<requestTimeoutMs>15000</requestTimeoutMs>

<url>\${LOKI_URL}/loki/api/v1/push</url>

</http>

<format class="com.github.loki4j.logback.ProtobufEncoder">

<label>

<pattern>application=\${APPLICATION_NAME},instance=\${INSTANCE},level=%level</pattern>

</label>

<message>

<pattern>\${LOG_PATTERN}</pattern>

</message>

<sortByTime>true</sortByTime>

</format>

</appender>

</springProfile>

<root level="INFO">

<appender-ref ref="Console"/>

<springProfile name="aws"><appender-ref ref="Loki"/></springProfile>

</root>

</configuration>

The first change to notice is the backslashes before the variables (e.g. \${LOG_PATTERN}). We need them to tell the config server not to resolve the variables on its side (because that’s impossible there). The next difference is a new variable: <springProperty scope="context" name="APPLICATION_NAME" source="spring.application.name"/>. With this line, and spring.application.name set in all your applications, each log will be tagged with the right application name. There is also a trick with the ${INSTANCE} variable. As Prometheus uses IP address + port as an instance identifier and we want to use the same here, we need to provide this data to each instance separately.

So the Dockerfiles for your applications should contain something like this.

FROM openjdk:15.0.1-slim

COPY /target/foo-0.0.1-SNAPSHOT.jar .

ENTRYPOINT INSTANCE=$(hostname -i):8080 java -jar foo-0.0.1-SNAPSHOT.jar

EXPOSE 8080
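The backslash escaping used in the clients’ logback-spring.xml can be pictured with a toy placeholder resolver. This is not Spring Cloud Config’s implementation, and the class and method names are hypothetical. It only illustrates the idea: unescaped ${NAME} placeholders are substituted on the serving side, while escaped \${NAME} placeholders lose their backslash and are passed through, so the client can resolve them later (for example from the INSTANCE variable set in the Dockerfile above).

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Toy model of placeholder resolution with backslash escaping (not Spring's actual code).
class PlaceholderSketch {

    // Matches ${NAME}, optionally preceded by a single backslash.
    private static final Pattern PLACEHOLDER = Pattern.compile("(\\\\)?\\$\\{([^}]+)\\}");

    static String resolve(String text, Map<String, String> values) {
        Matcher m = PLACEHOLDER.matcher(text);
        StringBuffer out = new StringBuffer();
        while (m.find()) {
            String replacement;
            if (m.group(1) != null) {
                // Escaped: drop the backslash and pass the placeholder through untouched.
                replacement = "${" + m.group(2) + "}";
            } else {
                // Unescaped: substitute the value, or keep the placeholder if it is unknown.
                replacement = values.getOrDefault(m.group(2), m.group());
            }
            m.appendReplacement(out, Matcher.quoteReplacement(replacement));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        String served = resolve("url=${SERVER_SIDE} instance=\\${INSTANCE}", Map.of("SERVER_SIDE", "resolved"));
        System.out.println(served);
    }
}
```

In this toy model, the text that reaches the client still contains ${INSTANCE}, which Logback can then fill in from the client’s own environment.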

Also, to make it work, you need to tell your clients to use this configuration. Just add this to the bootstrap.yml files in all your config clients.

logging:
  config: ${SPRING_CLOUD_CONFIG_SERVER:http://localhost:8888}/application/default/main/logback-spring.xml
spring:
  application:
    name: foo

That’s it, let’s move to the next part.

Loki

Creating Loki is very similar to creating Prometheus. Your Dockerfile is as follows.

FROM grafana/loki

COPY loki.yml .

ENTRYPOINT loki --config.file=loki.yml

EXPOSE 3100

The good news is, you don’t need to set any URLs here - Loki doesn’t send any data. It just listens.

As a configuration, you can use a file from https://grafana.com/docs/loki/latest/configuration/examples/ . We’re going to adjust it later, but it’s enough for now.

Grafana

Now, we’re ready to put things together.

In the ECS configuration, you can remove service discovery stuff and add a load balancer, because Grafana will be visible over the internet. Please remember, it’s exposed at port 3000 by default.

Your Grafana Dockerfile should look like this.

FROM grafana/grafana

COPY loki_datasource.yml /etc/grafana/provisioning/datasources/

COPY prometheus_datasource.yml /etc/grafana/provisioning/datasources/

COPY dashboard.yml /etc/grafana/provisioning/dashboards/

COPY *.json /etc/grafana/provisioning/dashboards/

ENTRYPOINT [ "/run.sh" ]

EXPOSE 3000

Let’s check configuration files now.

loki_datasource.yml:

apiVersion: 1

datasources:
  - name: Loki
    type: loki
    access: proxy
    url: http://$loki_url:3100
    jsonData:
      maxLines: 1000

The file content should be self-explanatory (we’ll return to it later).

prometheus_datasource.yml:

apiVersion: 1

datasources:
  - name: prometheus
    type: prometheus
    access: proxy
    orgId: 1
    # Plain HTTP for now: Prometheus is not served over HTTPS at this stage.
    url: http://$prometheus_url:9090
    isDefault: true
    version: 1
    editable: false

dashboard.yml:

apiVersion: 1

providers:
  - name: 'Default'
    folder: 'Services'
    options:
      path: /etc/grafana/provisioning/dashboards

With this file, you tell Grafana to install all json files from /etc/grafana/provisioning/dashboards directory as dashboards.

The last step is to create some dashboards. You can, for example, download a dashboard from https://grafana.com/grafana/dashboards/10280 and replace the ${DS_PROMETHEUS} datasource with your datasource name, “prometheus”.

Our aim was to create a dashboard with metrics and logs on the same screen. You can play with dashboards as you like, but take this one as an example.

{

"annotations": {

"list": [

{

"builtIn": 1,

"datasource": "-- Grafana --",

"enable": true,

"hide": true,

"iconColor": "rgba(0, 211, 255, 1)",

"name": "Annotations & Alerts",

"type": "dashboard"

}

]

},

"editable": true,

"gnetId": null,

"graphTooltip": 0,

"id": 2,

"iteration": 1613558886505,

"links": [],

"panels": [

{

"aliasColors": {},

"bars": false,

"dashLength": 10,

"dashes": false,

"datasource": null,

"fieldConfig": {

"defaults": {

"custom": {}

},

"overrides": []

},

"fill": 1,

"fillGradient": 0,

"gridPos": {

"h": 8,

"w": 24,

"x": 0,

"y": 0

},

"hiddenSeries": false,

"id": 4,

"legend": {

"avg": false,

"current": false,

"max": false,

"min": false,

"show": true,

"total": false,

"values": false

},

"lines": true,

"linewidth": 1,

"nullPointMode": "null",

"options": {

"alertThreshold": true

},

"percentage": false,

"pluginVersion": "7.4.1",

"pointradius": 2,

"points": false,

"renderer": "flot",

"seriesOverrides": [],

"spaceLength": 10,

"stack": false,

"steppedLine": false,

"targets": [

{

"expr": "system_load_average_1m{instance=~\"$instance\", application=\"$application\"}",

"interval": "",

"legendFormat": "",

"refId": "A"

}

],

"thresholds": [],

"timeRegions": [],

"title": "Panel Title",

"tooltip": {

"shared": true,

"sort": 0,

"value_type": "individual"

},

"type": "graph",

"xaxis": {

"buckets": null,

"mode": "time",

"name": null,

"show": true,

"values": []

},

"yaxes": [

{

"format": "short",

"label": null,

"logBase": 1,

"max": null,

"min": null,

"show": true

},

{

"format": "short",

"label": null,

"logBase": 1,

"max": null,

"min": null,

"show": true

}

],

"yaxis": {

"align": false,

"alignLevel": null

}

},

{

"datasource": "Loki",

"fieldConfig": {

"defaults": {

"custom": {}

},

"overrides": []

},

"gridPos": {

"h": 33,

"w": 24,

"x": 0,

"y": 8

},

"id": 2,

"options": {

"showLabels": false,

"showTime": false,

"sortOrder": "Ascending",

"wrapLogMessage": true

},

"pluginVersion": "7.3.7",

"targets": [

{

"expr": "{application=\"$application\", instance=~\"$instance\", level=~\"$level\"}",

"hide": false,

"legendFormat": "",

"refId": "A"

}

],

"timeFrom": null,

"timeShift": null,

"title": "Logs",

"type": "logs"

}

],

"schemaVersion": 27,

"style": "dark",

"tags": [],

"templating": {

"list": [

{

"allValue": null,

"current": {

"selected": false,

"text": "foo",

"value": "foo"

},

"datasource": "prometheus",

"definition": "label_values(application)",

"description": null,

"error": null,

"hide": 0,

"includeAll": false,

"label": "Application",

"multi": false,

"name": "application",

"options": [],

"query": {

"query": "label_values(application)",

"refId": "prometheus-application-Variable-Query"

},

"refresh": 2,

"regex": "",

"skipUrlSync": false,

"sort": 0,

"tagValuesQuery": "",

"tags": [],

"tagsQuery": "",

"type": "query",

"useTags": false

},

{

"allValue": null,

"current": {

"selected": false,

"text": "All",

"value": "$__all"

},

"datasource": "prometheus",

"definition": "label_values(jvm_classes_loaded_classes{application=\"$application\"}, instance)",

"description": null,

"error": null,

"hide": 0,

"includeAll": true,

"label": "Instance",

"multi": false,

"name": "instance",

"options": [],

"query": {

"query": "label_values(jvm_classes_loaded_classes{application=\"$application\"}, instance)",

"refId": "prometheus-instance-Variable-Query"

},

"refresh": 2,

"regex": "",

"skipUrlSync": false,

"sort": 0,

"tagValuesQuery": "",

"tags": [],

"tagsQuery": "",

"type": "query",

"useTags": false

},

{

"allValue": null,

"current": {

"selected": false,

"text": [

"All"

],

"value": [

"$__all"

]

},

"datasource": "Loki",

"definition": "label_values(level)",

"description": null,

"error": null,

"hide": 0,

"includeAll": true,

"label": "Level",

"multi": true,

"name": "level",

"options": [

{

"selected": true,

"text": "All",

"value": "$__all"

},

{

"selected": false,

"text": "ERROR",

"value": "ERROR"

},

{

"selected": false,

"text": "INFO",

"value": "INFO"

},

{

"selected": false,

"text": "WARN",

"value": "WARN"

}

],

"query": "label_values(level)",

"refresh": 0,

"regex": "",

"skipUrlSync": false,

"sort": 0,

"tagValuesQuery": "",

"tags": [],

"tagsQuery": "",

"type": "query",

"useTags": false

}

]

},

"time": {

"from": "now-24h",

"to": "now"

},

"timepicker": {},

"timezone": "",

"title": "Logs",

"uid": "66Yn-8YMz",

"version": 1

}

We don’t recommend playing with such files manually when you can use a very convenient UI and export a json file later on. Anyway, the listing above is a good place to start. Please note the following elements:

In the definitions of the application and instance variables, we use Prometheus only, because Loki doesn’t expose any metrics, so you cannot filter one variable (instance) when another one (application) is selected.

Because we would sometimes like to see all instances or log levels together, we need to query data like this: {application=\"$application\", instance=~\"$instance\", level=~\"$level\"}. The important element is the tilde in instance=~\"$instance\" and level=~\"$level\", which turns the matcher into a regular expression and allows us to use multiple values.
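To see why the tilde matters, here is a small sketch of what the selector roughly looks like once several values are selected: they are joined into a regular-expression alternation. The class and method names are hypothetical, and Grafana’s real interpolation also takes care of escaping, which this sketch omits.

```java
import java.util.List;

// Illustration only: Grafana performs the variable interpolation itself.
class LogQlSelectorSketch {

    // Joins selected values into a regex alternation, e.g. [ERROR, WARN] -> ERROR|WARN.
    static String alternation(List<String> values) {
        return String.join("|", values);
    }

    // Builds a LogQL stream selector with an exact match on application
    // and regex matches on instance and level.
    static String selector(String application, List<String> instances, List<String> levels) {
        return "{application=\"" + application + "\", "
                + "instance=~\"" + alternation(instances) + "\", "
                + "level=~\"" + alternation(levels) + "\"}";
    }

    public static void main(String[] args) {
        System.out.println(selector("foo",
                List.of("10.0.0.1:8080", "10.0.0.2:8080"),
                List.of("ERROR", "WARN")));
    }
}
```

With a plain = matcher, the same selection would be compared literally against the string ERROR|WARN and match no log entries at all.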

Conclusion

Congratulations! You have your application monitored. We hope you like it! But please remember - it’s not production-ready yet! In the last part, we’re going to cover a security issue and add encryption in transit to all components.
