Kubernetes supports Windows workloads - the time to get rid of skeletons in your closet has come

Enterprises know that the future of their software is in the cloud. Despite keeping that in mind, many tech leaders delay the process of transforming their core legacy systems. How will the situation change with Kubernetes supporting Windows workloads? Can we assume that companies will leverage the Kubernetes upgrade to accelerate their journey towards the cloud?

How can this article help you?

- You can see what Kubernetes supporting Windows workloads provides for enterprises.

- We remind you why going to the cloud is crucial for your business excellence.

- You can get to know the main reason stopping enterprises from transforming their legacy systems.

- We describe the main risks that come with delaying the transition towards the cloud.

- You will learn how to leverage Kubernetes supporting Windows workloads.

Technical debt is an unpleasant legacy you often inherit when taking charge of critical systems or enterprise software older than you. Lying under the cache layer and various interfaces, legacy systems encourage you to forget them. And you are fine with that - you have enough tasks to perform and things to manage on a daily basis. Sprint after sprint, your team develops applications and particular features to meet increasing customer demand and sophisticated needs. Initiating a tremendous venture that may turn into opening Pandora's box is not exactly what you want to add to your checklist.

The bad news is that if you want to succeed at your job, the clock is ticking. The problem with legacy systems is that you don't know when they will break down and cause a disaster. You may be able to justify yourself, but the impact on your work will be nightmarish. What you do know for sure is that legacy systems sitting under the applications built by your talented teams hinder further development and make your job harder than it already is.

Whether you are ready to go all in or don't want to throw yourself in at the deep end, Kubernetes supporting Windows workloads is the news you needed. See how it can accelerate your transition towards the cloud.

What's the deal with Kubernetes supporting Windows workloads

Kubernetes was designed to run Linux containers. Such an approach complicated the transition towards the cloud for enterprises with Windows Server legacy systems. And since over 70% of the global server market is Windows-based (according to Statista), it is easy to see why so many legacy apps are still hiding in closets. If you work at a large enterprise, the chances that you have a few of them carefully hidden are very high.

How is Kubernetes support for Windows workloads changing the game? In the not-so-olden days, Windows-based applications were immovable - they had to run on Windows, required Windows Server, and needed access to numerous related databases and libraries. Such a demanding environment encouraged enterprises to wait for better days. And now they have come. Kubernetes, with production support for scheduling Windows containers on Windows nodes in the cluster, allows you to run these Windows applications, enabling enterprises to modernize and move their apps to the cloud.
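To make "scheduling Windows containers on Windows nodes" more concrete, here is a minimal sketch of a Deployment manifest that asks Kubernetes to place a workload only on Windows nodes, using the well-known `kubernetes.io/os` node label. The helper name `windows_deployment`, the app name, and the container image are illustrative assumptions, not something prescribed by Kubernetes or by this article.

```python
import json


def windows_deployment(name: str, image: str, replicas: int = 1) -> dict:
    """Build a Deployment manifest whose pods are pinned to Windows nodes.

    `name` and `image` are placeholders for your own application.
    """
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # Well-known node label: only Windows nodes match it,
                    # so the scheduler never places this pod on a Linux node.
                    "nodeSelector": {"kubernetes.io/os": "windows"},
                    "containers": [{"name": name, "image": image}],
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical legacy app; the image is only an example of a Windows base image.
    manifest = windows_deployment(
        "legacy-iis-app", "mcr.microsoft.com/windows/servercore/iis"
    )
    print(json.dumps(manifest, indent=2))
```

Because `kubectl` accepts JSON as well as YAML, the printed manifest could be piped into `kubectl apply -f -` on a cluster that already has Windows nodes. A real migration would typically also need resource limits, networking, and storage settings that this sketch leaves out.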

It’s believed that with this release, Kubernetes provides enterprises with the opportunity to accelerate their DevOps and cloud transformation. In case you missed the million publications about cloud advantages, we will sum up the main points.

Why do enterprises move their legacy applications to the cloud

As promised above, let’s keep it short:

- Scalability - the cloud allows you to easily scale your IT resources, data storage capacity, computing power, and networking up or down without downtime or other disruptions. Such flexibility supports business growth, product/service development, and better cost management.

- Security - the right set of strategies and policies allows enterprises to build and manage secure cloud environments. Decentralization and support for your cloud stack provide solutions to common challenges in maintaining on-premise infrastructure.

- Maintenance - with cloud services delivered by trusted providers, you don't have to maintain everything on your own; you simply leverage the available services.

- Accessibility - the pandemic showed us how crucial remote access to our IT resources is, and the cloud provides your remote or distributed teams with easy access regardless of your team members' location - that is priceless.

- Reliability - cloud providers offer easier and cheaper data backups, disaster recovery, and business continuity because they benefit from economies of scale.

- Performance - as the cloud service market is booming and service providers compete for revenue, the quality and performance of cloud infrastructure are top-notch.

- Cost-effectiveness - with cloud computing, your enterprise can cut numerous expenses from its books - including infrastructure, electricity, and IT experts responsible for managing resources.

- Agility - forget about long capacity planning, since provisioning computing resources can be done within a few clicks through self-service.

Sounds convincing? If everything is obvious, why are there still so many legacy apps?

Why do enterprises delay moving apps to the cloud

Legacy systems are long-time friends with procrastination. If you have been in this business long enough, you have definitely heard a few of these excuses:

- We cannot do it now. We have too many things on the list. A better day will come.

- It’s risky. It’s critically risky. Why do you even ask? Do you want to see the world burning?

- OK, let’s do it! But wait... who knows how to do it?

- We can cover it with our UI or cache layer, and nobody will ever notice.

- It’s our core system. You touch it, everything will go bad.

- Why change it if it works well?

- It’s too huge a project for me to decide on, and I don't want to take responsibility for a never-ending process.

These examples are just the tip of the iceberg. Diving into the process of moving legacy apps to the cloud, you can stumble upon numerous arguments convincing you to stay away from it. But can it last forever? What if the “zero hour” strikes?

Playing a risky game: what can happen if you don’t migrate to the cloud

Many of our business challenges wouldn’t exist if we had, at some point, tackled the issues we underestimated. The excuses highlighted above can convince you to leave things as they are. But what if your real problems are just ahead of you? Let’s name some threats facing enterprises that delay the transition towards the cloud.

- Maintaining legacy systems becomes more expensive with time as your company has to pay for computing power supporting these solutions.

- Your enterprise may struggle to find experts who understand your legacy systems. The longer you postpone the process, the harder it will be to find people who work with outdated frameworks and tools.

- By letting your technical debt grow, your enterprise works against its own drive for innovation. Your legacy systems suppress the development of new products and services, undermining your competitive advantage.

- You may struggle to provide services to your customers because of downtime and disruptions caused by inefficient systems.

- Technology develops fast. Legacy systems stop you from keeping up and may generate new issues in the future, especially at the moment you need to be flexible.

- Most established enterprises operate in highly regulated markets and have to meet challenging conditions. One of our business partners had to rebuild one of its core systems because of new regulations regarding data management. Such a situation can lead to enormous costs.

- Security becomes a serious threat, as legacy systems are prone to attacks, and without upgrades, your system may become insecure.

The list above could be extended with many additional issues. But instead of describing challenges, let’s discuss how they can be addressed using Kubernetes.

How to leverage Kubernetes supporting Windows workloads

There is a ton of code written for Windows. With the Kubernetes update, you don’t have to think about rebuilding your applications from scratch, so the countless working hours your team has already invested are not wasted. Most of the code can be moved into Windows containers running on Kubernetes and developed further there. It’s safer and cheaper.

Kubernetes supporting Windows workloads gives you time to navigate your journey to the cloud properly. First of all, it puts an end to all the excuses mentioned above. The moment is now. Secondly, you can now take an evolutionary approach by developing and upgrading your systems instead of rebuilding them from the ground up. Furthermore, with your key legacy systems moved to the cloud, you can accelerate the overall transformation of your enterprise towards an agile, DevOps-oriented organization open to innovation and developing highly competitive software.

What should be your next move?

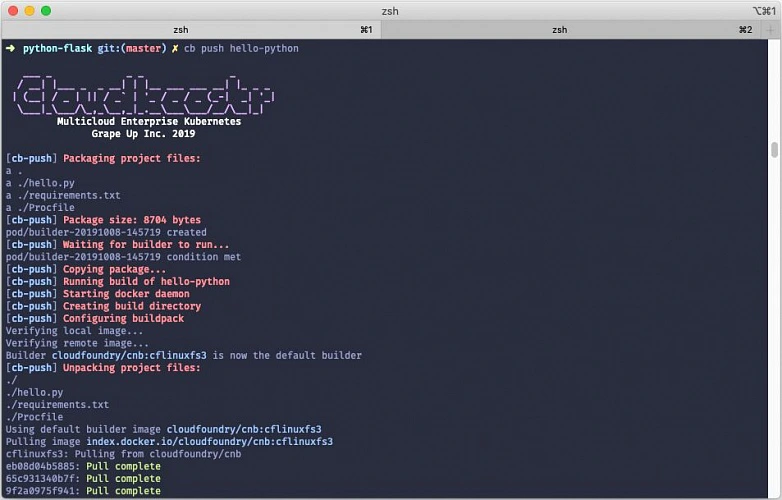

By supporting Windows workloads, Kubernetes makes the life of many tech teams easier. But it would be too easy if everything worked by itself. Configuring a Kubernetes cluster to run Windows workloads is demanding and time-consuming. Instead of doing it on your own, you can leverage the ready-to-use solution provided by Grape Up. Cloudboostr, our Kubernetes stack, enables you to move your Windows-based apps to the cloud. Consult our expert on how to do it properly!
