Thinking out loud

Where we share the insights, questions, and observations that shape our approach.

Reactive service to service communication with RSocket – introduction

This article is the first in a mini-series that will help you get familiar with RSocket, a new binary protocol which may revolutionize machine-to-machine communication. In the following paragraphs, we discuss the problems of distributed systems and explain how they may be solved with RSocket. We focus on the communication between microservices and the interaction model of RSocket.

Communication problem in distributed systems

Microservices are everywhere, literally everywhere. We went through a long journey from monolithic applications, which were terrible to deploy and maintain, to fully distributed, tiny, scalable microservices. Such an architecture has many benefits; however, it also has drawbacks worth mentioning. To deliver value to the end customers, services have to exchange tons of data. In a monolithic application that was not an issue, as all communication occurred within a single JVM. In a microservice architecture, where services are deployed in separate containers and communicate via an internal or external network, networking is a first-class citizen. Things get more complicated if you decide to run your applications in the cloud, where network issues and periods of increased latency are something you cannot fully avoid. Rather than trying to fix network issues, it is better to make your architecture resilient and fully operational even during turbulent times.

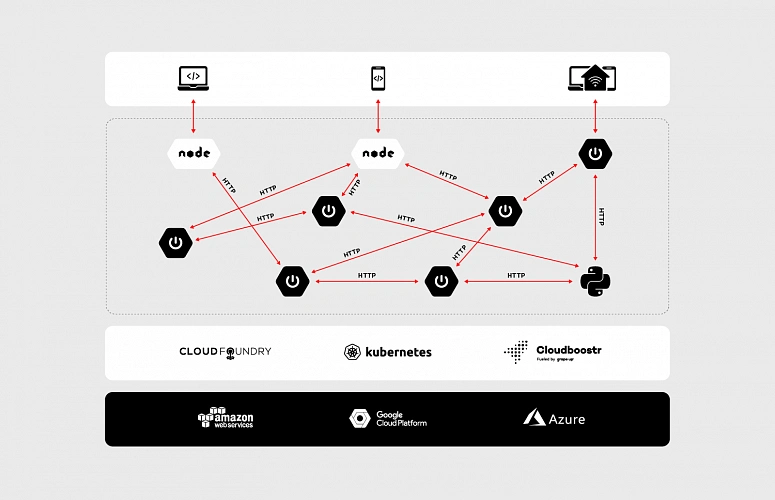

Let’s dive a bit deeper into the concepts of microservices, data, communication and the cloud. As an example, we will discuss an enterprise-grade system which is accessible through a website and a mobile app and which also communicates with small external devices (e.g. a home heater controller). The system consists of multiple microservices, mostly written in Java, with a few Python and Node.js components. All of them are replicated across multiple availability zones to ensure that the whole system is highly available.

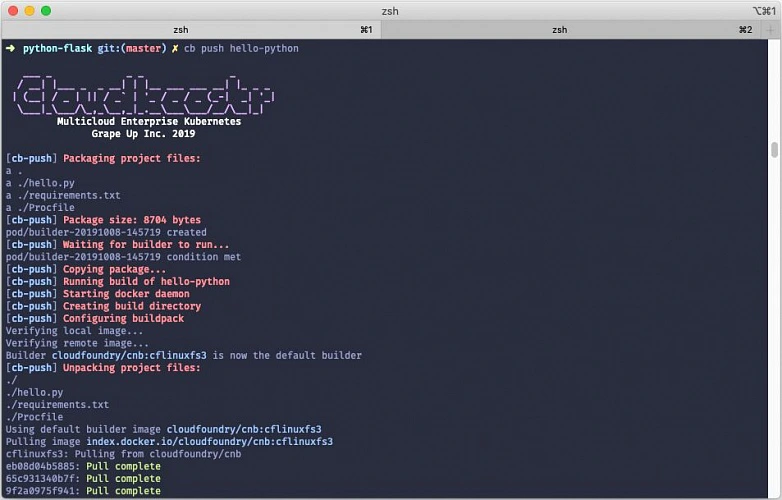

To be IaaS-provider agnostic and to improve the developer experience, the applications run on top of a PaaS. We have a wide range of possibilities here: Cloud Foundry, Kubernetes, or both combined in Cloudboostr are suitable. In terms of communication between services, the design is simple. Each component exposes plain REST APIs, as shown in the diagram below.

At first glance, such an architecture does not look bad. Components are separated and run in the cloud – what could go wrong? Actually, there are two major issues – both of them related to communication.

The first problem is the request/response interaction model of HTTP. While it has a lot of use cases, it was not designed for machine-to-machine communication. It is not uncommon for a microservice to send some data to another component without caring about the result of the operation (fire and forget), or to stream data automatically when it becomes available (data streaming). These communication patterns are hard to achieve in an elegant, efficient way using a request/response interaction model. Even a simple fire-and-forget operation has side effects: the server has to send a response back to the client, even if the client is not interested in processing it.

The second problem is performance. Let’s assume that our system is heavily used, the traffic increases, and we notice that we are struggling to handle more than a few hundred requests per second. Thanks to containers and the cloud, we can scale our services with ease. However, if we track resource consumption more closely, we will notice that while we are running out of memory, the CPUs of our VMs are almost idle. The issue comes from the thread-per-request model usually used with HTTP 1.x, where every single request has its own stack memory. In such a scenario, we can leverage the reactive programming model and non-blocking IO. That will significantly cut down memory usage; nevertheless, it will not reduce the latency. HTTP 1.x is a text-based protocol, so the amount of data that needs to be transferred is significantly higher than with binary protocols.

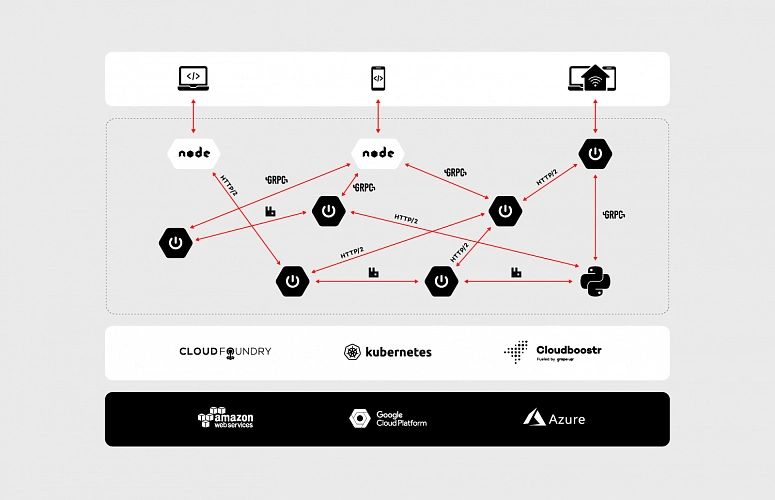

In machine-to-machine communication we should not limit ourselves to HTTP (especially 1.x), with its request/response interaction model and poor performance. There are many more suitable and robust solutions available. RabbitMQ-based messaging, gRPC, or even HTTP/2, with its support for multiplexing and binary framing, will do far better in terms of performance and efficiency than plain HTTP 1.x.

Using multiple protocols allows us to link the microservices in the most efficient and suitable way for a given scenario. However, adopting multiple protocols forces us to reinvent the wheel again and again. We have to enrich our data with extra security-related information and create multiple adapters which handle translation between protocols. In some cases, the transport requires external resources (brokers, services, etc.) which need to be highly available. Extra resources entail extra costs, even though all we need is a simple, message-based fire-and-forget operation. Besides, a multitude of different protocols may introduce serious problems related to application management, especially if our system consists of hundreds of microservices.

The issues mentioned above are the core reasons why RSocket was invented and why it may revolutionize communication in the cloud. Thanks to its reactive nature and robust built-in interaction model, RSocket may be applied in various business scenarios and eventually unify the communication patterns that we use in distributed systems.

RSocket to the rescue

RSocket is a new, message-driven, binary protocol which standardizes the approach to communication in the cloud. It helps to resolve common application concerns in a consistent manner, and it supports multiple languages (e.g. Java, JavaScript, Python) and transport layers (TCP, WebSocket, Aeron).

In the following sections, we will dive deeper into protocol internals and discuss the interaction model.

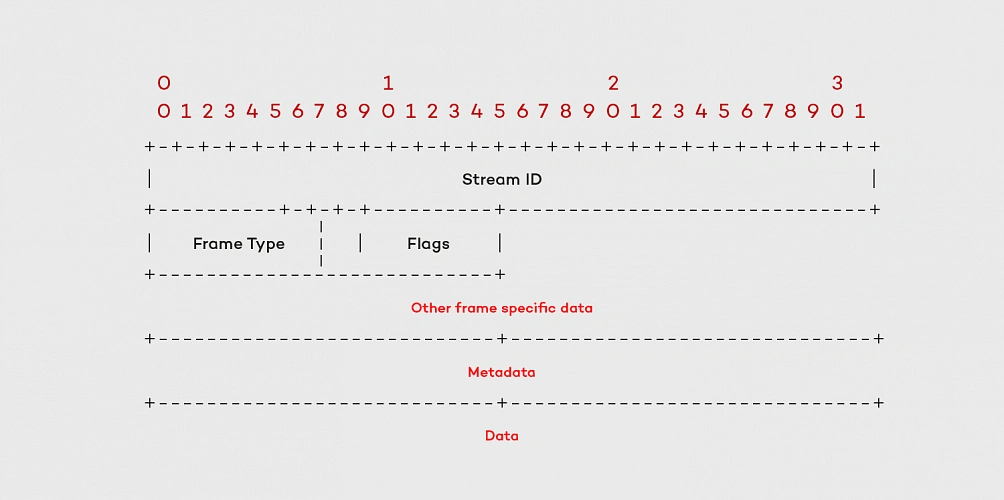

Framed and message-driven

Interaction in RSocket is broken down into frames. Each frame consists of a frame header which contains the stream id, the frame type definition and other data specific to the frame type. The frame header is followed by metadata and payload; these parts carry data specified by the user.
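To make the layout more concrete, here is a minimal sketch of how such a header could be packed and unpacked in Java. It assumes the layout as we read it in the protocol specification: a 32-bit word carrying a 31-bit stream id, followed by 16 bits split into a 6-bit frame type and 10 bits of flags. The class FrameHeaderSketch and its methods are hypothetical names made up for this illustration, not part of any RSocket library, and the two constants should be checked against the specification.

```java
import java.nio.ByteBuffer;

// Hypothetical helper, not an RSocket library class.
final class FrameHeaderSketch {
    // Assumed values, taken from our reading of the protocol specification.
    static final int REQUEST_FNF = 0x05;     // fire-and-forget request frame type
    static final int FLAG_METADATA = 0x100;  // "metadata present" flag

    /** Packs stream id, frame type and flags into a 6-byte, big-endian header. */
    static ByteBuffer encode(int streamId, int frameType, int flags) {
        if (streamId < 0) {
            throw new IllegalArgumentException("stream id must fit in 31 bits");
        }
        if (frameType < 0 || frameType > 0x3F) {
            throw new IllegalArgumentException("frame type must fit in 6 bits");
        }
        if (flags < 0 || flags > 0x3FF) {
            throw new IllegalArgumentException("flags must fit in 10 bits");
        }
        ByteBuffer header = ByteBuffer.allocate(6); // ByteBuffer is big-endian by default
        header.putInt(streamId);
        header.putShort((short) ((frameType << 10) | flags));
        header.flip();
        return header;
    }

    static int streamId(ByteBuffer header) {
        return header.getInt(0) & 0x7FFFFFFF; // drop the reserved top bit
    }

    static int frameType(ByteBuffer header) {
        return (header.getShort(4) & 0xFFFF) >>> 10; // upper 6 bits
    }

    static int flags(ByteBuffer header) {
        return header.getShort(4) & 0x3FF; // lower 10 bits
    }
}
```

Calling encode(7, REQUEST_FNF, FLAG_METADATA) produces six bytes from which streamId, frameType and flags recover the original values.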

There are multiple types of frames which represent different actions and available methods of the interaction model. We’re not going to cover all of them, as they are extensively described in the official documentation (http://rsocket.io/docs/Protocol). Nevertheless, there are a few which are worth noting. One of them is the Setup Frame, which the client sends to the server at the very beginning of the communication. This frame can be customized so that you can add your own security rules or other information required during connection initialization. It should be noted that RSocket does not distinguish between the client and the server after the connection setup phase. Each side can start sending data to the other one, which makes the protocol almost entirely symmetrical.

Performance

The frames are sent as a stream of bytes. This makes RSocket far more efficient than typical text-based protocols. From a developer perspective, it is easier to debug a system while JSON documents are flying back and forth through the network, but the impact on performance makes such convenience questionable. The protocol does not impose any specific serialization/deserialization mechanism; it treats the frame as a bag of bits which could be converted to anything. That makes it possible to use JSON serialization or more efficient solutions like Protobuf or Avro.
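The size difference between a text and a binary encoding is easy to see on a tiny example. The sketch below encodes one hypothetical temperature reading twice, once as a JSON string and once as a raw 4-byte integer. PayloadEncodingSketch, the reading and the field name are made up for illustration; a real system would more likely use a schema-based format such as Protobuf or Avro.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Hypothetical helper comparing two encodings of the same value.
final class PayloadEncodingSketch {

    /** Text encoding: a small JSON document, UTF-8 encoded. */
    static ByteBuffer asJson(int temperature) {
        String json = "{\"temperature\":" + temperature + "}";
        return ByteBuffer.wrap(json.getBytes(StandardCharsets.UTF_8));
    }

    /** Binary encoding: the bare 32-bit integer, big-endian. */
    static ByteBuffer asBinary(int temperature) {
        ByteBuffer data = ByteBuffer.allocate(Integer.BYTES);
        data.putInt(temperature);
        data.flip();
        return data;
    }

    /** Reads the value back from the binary form without moving the buffer position. */
    static int fromBinary(ByteBuffer data) {
        return data.getInt(data.position());
    }
}
```

For a reading of 21, the JSON form takes 18 bytes while the binary form takes 4. Either buffer can travel as the data part of a frame, since the protocol does not inspect it.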

The second factor which has a huge impact on RSocket performance is multiplexing. The protocol creates logical streams (channels) on top of a single physical connection. Each stream has its unique ID which, to some extent, can be interpreted as a queue we know from messaging systems. Such a design deals with major issues known from HTTP 1.x: the connection-per-request model and the weak performance of “pipelining”. Moreover, RSocket natively supports transferring large payloads. In such a scenario the payload is split into several frames, each carrying an extra flag which, as far as the specification describes it, marks that more fragments of the same payload follow.
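A rough sketch of the fragmentation idea is shown below. It assumes, based on our reading of the protocol specification, that every fragment except the last one is marked with a "follows" flag. FragmentationSketch and Fragment are hypothetical names; real implementations work on frames and buffers rather than plain byte arrays.

```java
import java.io.ByteArrayOutputStream;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical illustration of splitting and reassembling a large payload.
final class FragmentationSketch {

    /** One fragment: a slice of the payload plus the "follows" marker. */
    static final class Fragment {
        final byte[] data;
        final boolean follows; // true when more fragments of the same payload come next

        Fragment(byte[] data, boolean follows) {
            this.data = data;
            this.follows = follows;
        }
    }

    /** Splits the payload into fragments of at most maxFragmentSize bytes. */
    static List<Fragment> split(byte[] payload, int maxFragmentSize) {
        if (maxFragmentSize <= 0) {
            throw new IllegalArgumentException("maxFragmentSize must be positive");
        }
        List<Fragment> fragments = new ArrayList<>();
        int offset = 0;
        do {
            int end = Math.min(offset + maxFragmentSize, payload.length);
            byte[] slice = Arrays.copyOfRange(payload, offset, end);
            fragments.add(new Fragment(slice, end < payload.length));
            offset = end;
        } while (offset < payload.length);
        return fragments;
    }

    /** Concatenates fragments until one without the "follows" marker is seen. */
    static byte[] reassemble(List<Fragment> fragments) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        for (Fragment fragment : fragments) {
            out.write(fragment.data, 0, fragment.data.length);
            if (!fragment.follows) {
                break;
            }
        }
        return out.toByteArray();
    }
}
```

Splitting a 10-byte payload with a 4-byte limit gives three fragments of 4, 4 and 2 bytes; only the last one has the marker cleared, which tells the receiver that the payload is complete.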

Reactiveness & Flow Control

The RSocket protocol fully embraces the principles stated in the Reactive Manifesto. Its asynchronous character and thrifty use of resources help decrease the latency experienced by the end users and the cost of the infrastructure. Thanks to streaming, we don’t need to pull data from one service to another; instead, the data is pushed when it becomes available. It is an extremely powerful mechanism, but it might be risky as well. Let’s consider a simple scenario: in our system, we are streaming events from service A to service B. The action performed on the receiver side is non-trivial and requires some computation time. If service A pushes events faster than B is able to process them, B will eventually run out of resources: the sender will kill the receiver. Since RSocket follows Reactive Streams semantics (its Java implementation uses Reactor), it has built-in support for flow control, which helps to avoid such situations.

We can easily provide a backpressure mechanism implementation adjusted to our needs. The receiver can specify how much data it would like to consume and will not get more than that until it notifies the sender that it is ready to process more. On the other hand, to limit the number of incoming frames from the requester, RSocket implements a lease mechanism. The responder can specify how many requests the requester may send within a defined time frame.
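The lease idea can be sketched as simple bookkeeping on the requester side: a lease grants a number of requests and a time to live, and every outgoing request is checked against both. LeaseSketch below is a hypothetical illustration under those assumptions, not the lease API of any RSocket library; time is passed in explicitly to keep the example deterministic.

```java
// Hypothetical requester-side bookkeeping for a lease granted by the responder.
final class LeaseSketch {
    private final int allowedRequests; // how many requests one lease covers
    private final long ttlMillis;      // how long one lease stays valid
    private long grantedAtMillis;      // when the current lease was received
    private int used;                  // requests already sent under the current lease

    LeaseSketch(int allowedRequests, long ttlMillis, long nowMillis) {
        this.allowedRequests = allowedRequests;
        this.ttlMillis = ttlMillis;
        this.grantedAtMillis = nowMillis;
    }

    /** Returns true if one more request may be sent at the given time. */
    boolean tryAcquire(long nowMillis) {
        if (nowMillis - grantedAtMillis >= ttlMillis) {
            return false; // lease expired, wait for the responder to grant a new one
        }
        if (used >= allowedRequests) {
            return false; // request budget exhausted
        }
        used++;
        return true;
    }

    /** Called when the responder grants a fresh lease. */
    void renew(long nowMillis) {
        grantedAtMillis = nowMillis;
        used = 0;
    }
}
```

With a lease of two requests per second, the third request inside the same window is rejected until renew is called, and a request made after the time to live has elapsed is rejected as well.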

The API

As mentioned in the previous section, RSocket uses Reactor, so at the API level we mainly operate on Mono and Flux objects. It has full support for reactive signals as well, so we can easily react to different events: onNext, onError, onComplete, etc.

The following paragraphs cover the API and every interaction option available in RSocket. The discussion is backed by code snippets and a description of each example. Before we jump into the interaction model, it is worth describing the API basics, as they come up in multiple code examples.

Setting up the connection with RSocketFactory

Setting up the RSocket connection between the peers is fairly easy. The API provides a factory (RSocketFactory) with the factory methods connect and receive, which create RSocket and CloseableChannel instances on the client and the server side respectively. The second property common to both parties of the communication (the requester and the responder) is a transport. RSocket can use multiple solutions as a transport layer (TCP, WebSocket, Aeron). Whichever you choose, the API provides factory methods which allow you to tweak and tune the connection.

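    // Responder (server) side: bind and wait for incoming connections.
    RSocketFactory.receive()
        .acceptor(new HelloWorldSocketAcceptor())
        .transport(TcpServerTransport.create(HOST, PORT))
        .start()
        .subscribe();

    // Requester (client) side: connect to the responder.
    RSocketFactory.connect()
        .transport(TcpClientTransport.create(HOST, PORT))
        .start()
        .subscribe();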

Moreover, in the case of the responder, we have to create a socket acceptor instance. The SocketAcceptor is an interface which provides the contract between the peers. It has a single method, accept, which takes the RSocket used for sending requests and returns an instance of RSocket that will be used for handling the requests from the peer. Besides providing the contract, the SocketAcceptor enables us to access the setup frame content. At the API level, it is reflected by the ConnectionSetupPayload object.

    public interface SocketAcceptor {
        Mono<RSocket> accept(ConnectionSetupPayload setup, RSocket sendingSocket);
    }

As shown above, setting up the connection between the peers is relatively easy, especially for those of you who worked with WebSockets previously – in terms of the API both solutions are quite similar.

Interaction model

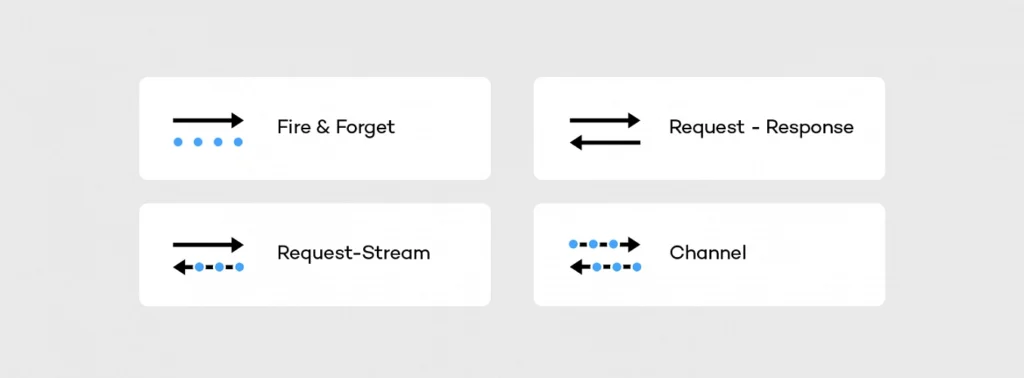

After setting up the connection, we are able to move on to the interaction model. RSocket supports the following operations:

- fire and forget
- metadata push
- request-response
- request stream
- request channel

Fire and forget, as well as metadata push, were designed to push data from the sender to the receiver. In both scenarios the sender does not care about the result of the operation, which is reflected at the API level in the return type (Mono<Void>). The difference between these actions sits in the frame. In the case of fire and forget, a fully-fledged frame is sent to the receiver, while for the metadata push action the frame does not have a payload; it consists only of the header and the metadata. Such a lightweight message can be useful for sending notifications to mobile devices or for peer-to-peer communication of IoT devices.

RSocket is also able to mimic HTTP behavior. It has support for request-response semantics, and that will probably be the main type of interaction you are going to use with RSocket. In the context of streams, such an operation can be represented as a stream which consists of a single object. In this scenario, the client waits for the response frame, but it does so in a fully non-blocking manner.

More interesting in cloud applications are the request stream and the request channel interactions, which operate on streams of data, usually infinite. In the case of the request stream operation, the requester sends a single frame to the responder and gets back a stream of data. Such an interaction method enables services to switch from a pull strategy to a push strategy. Instead of sending periodic requests to the responder, the requester can subscribe to the stream and react to the incoming data, which arrives automatically when it becomes available.

Thanks to the multiplexing and the bi-directional data transfer support, we can go a step further using the request channel method. RSocket is able to stream the data from the requester to the responder and the other way around using a single physical connection. Such interaction may be useful when the requester updates the subscription – for example, to change the subscription criteria. Without the bi-directional channel, the client would have to cancel the stream and re-request it with the new parameters.

In the API, all operations of the interaction model are represented by methods of the RSocket interface shown below.

    public interface RSocket extends Availability, Closeable {
        Mono<Void> fireAndForget(Payload payload);
        Mono<Payload> requestResponse(Payload payload);
        Flux<Payload> requestStream(Payload payload);
        Flux<Payload> requestChannel(Publisher<Payload> payloads);
        Mono<Void> metadataPush(Payload payload);
    }

To improve the developer experience and avoid the necessity of implementing every single method of the RSocket interface, the API provides the abstract class AbstractRSocket, which we can extend. By putting the SocketAcceptor and the AbstractRSocket together, we get the server-side implementation, which in the basic scenario may look like this:

    import io.rsocket.AbstractRSocket;
    import io.rsocket.ConnectionSetupPayload;
    import io.rsocket.Payload;
    import io.rsocket.RSocket;
    import io.rsocket.SocketAcceptor;
    import io.rsocket.util.DefaultPayload;
    import java.time.Duration;
    import java.time.Instant;
    import lombok.extern.slf4j.Slf4j;
    import org.reactivestreams.Publisher;
    import reactor.core.publisher.Flux;
    import reactor.core.publisher.Mono;
    import reactor.core.scheduler.Schedulers;

    @Slf4j
    public class HelloWorldSocketAcceptor implements SocketAcceptor {

        @Override
        public Mono<RSocket> accept(ConnectionSetupPayload setup, RSocket sendingSocket) {
            log.info("Received connection with setup payload: [{}] and meta-data: [{}]", setup.getDataUtf8(), setup.getMetadataUtf8());

            // The returned RSocket handles every request coming from the peer.
            return Mono.just(new AbstractRSocket() {

                @Override
                public Mono<Void> fireAndForget(Payload payload) {
                    log.info("Received 'fire-and-forget' request with payload: [{}]", payload.getDataUtf8());
                    return Mono.empty();
                }

                @Override
                public Mono<Payload> requestResponse(Payload payload) {
                    log.info("Received 'request response' request with payload: [{}] ", payload.getDataUtf8());
                    return Mono.just(DefaultPayload.create("Hello " + payload.getDataUtf8()));
                }

                @Override
                public Flux<Payload> requestStream(Payload payload) {
                    log.info("Received 'request stream' request with payload: [{}] ", payload.getDataUtf8());
                    // Emit one greeting per second until the requester cancels.
                    return Flux.interval(Duration.ofMillis(1000))
                        .map(time -> DefaultPayload.create("Hello " + payload.getDataUtf8() + " @ " + Instant.now()));
                }

                @Override
                public Flux<Payload> requestChannel(Publisher<Payload> payloads) {
                    // Answer every incoming payload with a greeting.
                    return Flux.from(payloads)
                        .doOnNext(payload -> log.info("Received payload: [{}]", payload.getDataUtf8()))
                        .map(payload -> DefaultPayload.create("Hello " + payload.getDataUtf8() + " @ " + Instant.now()))
                        .subscribeOn(Schedulers.parallel());
                }

                @Override
                public Mono<Void> metadataPush(Payload payload) {
                    log.info("Received 'metadata push' request with metadata: [{}]", payload.getMetadataUtf8());
                    return Mono.empty();
                }
            });
        }
    }

On the sender side, using the interaction model is pretty simple. All we need to do is invoke the appropriate method on the RSocket instance we have created using RSocketFactory and subscribe to the result, since nothing is sent until the returned Mono or Flux is subscribed to, e.g.

    socket.fireAndForget(DefaultPayload.create("Hello world!"))
        .subscribe();

More interesting on the sender side is the implementation of the backpressure mechanism. Let’s consider the following example of the requester side implementation:

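    import io.rsocket.Payload;
    import io.rsocket.RSocket;
    import io.rsocket.RSocketFactory;
    import io.rsocket.transport.netty.client.TcpClientTransport;
    import io.rsocket.util.DefaultPayload;
    import lombok.extern.slf4j.Slf4j;
    import org.reactivestreams.Subscriber;
    import org.reactivestreams.Subscription;

    public class RequestStream {

        // Assumed example values; point them at your responder.
        private static final String HOST = "localhost";
        private static final int PORT = 7000;

        public static void main(String[] args) {
            RSocket socket = RSocketFactory.connect()
                .transport(TcpClientTransport.create(HOST, PORT))
                .start()
                .block();

            socket.requestStream(DefaultPayload.create("Jenny", "example-metadata"))
                .subscribe(new BackPressureSubscriber());

            // Block until the connection closes. Disposing the socket right after
            // subscribe() would close the connection before any item arrives.
            socket.onClose().block();
        }

        @Slf4j
        private static class BackPressureSubscriber implements Subscriber<Payload> {

            private static final int NUMBER_OF_REQUESTED_ITEMS = 5;

            private Subscription subscription;
            private int receivedItems;

            @Override
            public void onSubscribe(Subscription s) {
                this.subscription = s;
                // Ask for the first batch only.
                subscription.request(NUMBER_OF_REQUESTED_ITEMS);
            }

            @Override
            public void onNext(Payload payload) {
                receivedItems++;
                // Once the whole batch has arrived, ask for the next one.
                if (receivedItems % NUMBER_OF_REQUESTED_ITEMS == 0) {
                    log.info("Requesting next [{}] elements", NUMBER_OF_REQUESTED_ITEMS);
                    subscription.request(NUMBER_OF_REQUESTED_ITEMS);
                }
            }

            @Override
            public void onError(Throwable t) {
                // Pass the throwable as the last argument so the stack trace is logged.
                log.error("Stream subscription error", t);
            }

            @Override
            public void onComplete() {
                log.info("Completing subscription");
            }
        }
    }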

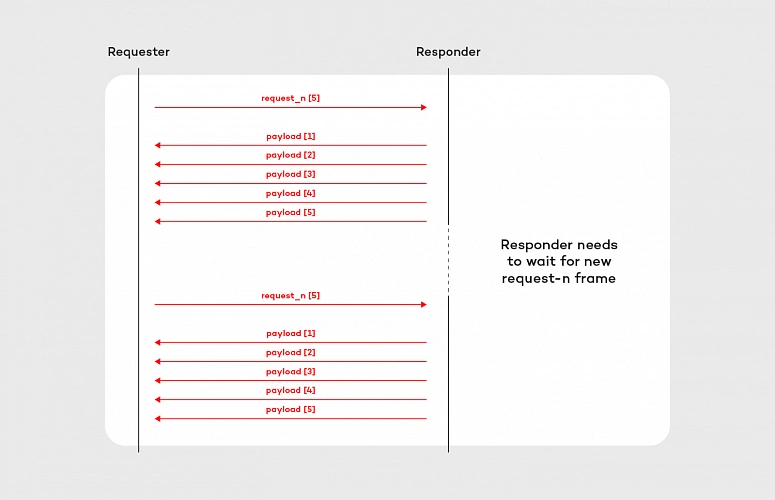

In this example, we are requesting a stream of data, but to ensure that the incoming frames will not kill the requester, we have a backpressure mechanism in place. To implement this mechanism we use the REQUEST_N frame, which at the API level is reflected by the subscription.request(n) method. At the beginning of the subscription [ onSubscribe(Subscription s) ], we request 5 objects, then we count the received items in onNext(Payload payload). When all expected frames have arrived at the requester, we request the next 5 objects, again using the subscription.request(n) method. The flow of this subscriber is shown in the diagram above.

The implementation of the backpressure mechanism presented in this section is very basic. In production, we should provide a more sophisticated solution based on more accurate metrics, e.g. the predicted or average computation time. After all, the backpressure mechanism does not make the problem of an overproducing responder disappear. It shifts the issue to the responder side, where it can be handled better. Further reading about backpressure is available here on Medium and here on GitHub.
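The same request(n) bookkeeping can be tried without an RSocket connection. The sketch below swaps the Reactive Streams types for the JDK's java.util.concurrent.Flow interfaces (Java 9 or later). RangePublisher and BatchingSubscriber are hypothetical names made up for this illustration, and the publisher is a deliberately simple, synchronous one that emits only as many items as the subscriber has asked for.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Flow;

// Hypothetical publisher that emits 0..count-1, but never more than requested.
class RangePublisher implements Flow.Publisher<Integer> {
    private final int count;

    RangePublisher(int count) {
        this.count = count;
    }

    @Override
    public void subscribe(Flow.Subscriber<? super Integer> subscriber) {
        subscriber.onSubscribe(new Flow.Subscription() {
            private long demand;      // credit granted by the subscriber and not yet used
            private int next;         // next value to emit
            private boolean draining; // guards against re-entrant request() calls
            private boolean done;

            @Override
            public void request(long n) {
                if (done || n <= 0) {
                    return;
                }
                demand += n;
                if (draining) {
                    return; // the loop further up the stack picks up the new demand
                }
                draining = true;
                while (demand > 0 && next < count && !done) {
                    demand--;
                    subscriber.onNext(next++);
                }
                draining = false;
                if (next == count && !done) {
                    done = true;
                    subscriber.onComplete();
                }
            }

            @Override
            public void cancel() {
                done = true;
            }
        });
    }
}

// Hypothetical subscriber that asks for items in fixed-size batches.
class BatchingSubscriber implements Flow.Subscriber<Integer> {
    final List<Integer> received = new ArrayList<>();
    int requestCalls;
    boolean completed;
    private final int batchSize;
    private Flow.Subscription subscription;

    BatchingSubscriber(int batchSize) {
        this.batchSize = batchSize;
    }

    @Override
    public void onSubscribe(Flow.Subscription s) {
        subscription = s;
        requestCalls++;
        s.request(batchSize); // first batch
    }

    @Override
    public void onNext(Integer item) {
        received.add(item);
        if (received.size() % batchSize == 0) {
            requestCalls++;
            subscription.request(batchSize); // batch consumed, ask for the next one
        }
    }

    @Override
    public void onError(Throwable t) {
        completed = true;
    }

    @Override
    public void onComplete() {
        completed = true;
    }
}
```

Subscribing a BatchingSubscriber with a batch size of 5 to a RangePublisher of 12 items delivers all 12 items using three request calls: the publisher never runs ahead of the demand signalled by the subscriber.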

Summary

In this article, we discussed the communication issues in the microservice architecture and how these problems can be solved using RSocket. We covered its API and the interaction model, backed by a simple “hello world” example and a basic backpressure mechanism implementation.

In the next articles of this series, we will cover more advanced features of RSocket, including Load Balancing and Resumability, and we will discuss abstractions over RSocket: RPC and Spring Reactor.

How to run a successful sprint review meeting

A Sprint Review is a meeting that closes and approves a Sprint in Scrum. Its value comes from inspecting the increment and adapting the Product Backlog to current business conditions. This is a session that the Scrum Team and all interested stakeholders can attend to exchange information about the progress made during the Sprint. They discuss problems and update the work plan to meet current market needs and approach the previously defined product vision in a new way.

This meeting is supposed to enable a feedback loop and foster collaboration, especially between the Scrum Team and all stakeholders. A Sprint Review will be valuable for product development if there is an overall understanding of its purpose and plan. To maximize its value, it’s good to be aware of the impediments a Scrum Team could meet. We would like to share our experience with Sprint Reviews, along with the most important problems and facts discovered during numerous projects run by our team at Grape Up. As one of our company values is Drive Change, we are continuously working on enhancing our Scrum process by investigating problems, resolving them, and implementing proper solutions in our daily work.

“Meetings – coding work balance.”

It’s a hard reality for managers leading projects. A lot of teams have a problem with too many meetings, which disorganize daily work and may be an obstacle to delivering the increment. Every group consists of both people who hate meetings and prefer focusing on coding, and meeting lovers who always have thousands of questions and value teamwork over working independently. Internal team meetings or chats with stakeholders are an important part of daily work only when they bring some benefit to the team or product. Scrum prescribes four meetings during a Sprint: Sprint Planning, the Daily Scrum, a Sprint Review, and a Sprint Retrospective. All of this makes collaboration critical to running a successful Scrum Team.

The stereotypical developer, an introvert who sits in a basement and never sees daylight, doesn’t fit this vision. Communication skills are very important for every Scrum developer. Beyond technical skills and the ability to write good-quality code, they need to develop their soft skills. Meetings are time-consuming, and here comes a real threat that makes many people angry: after a few quarters of an hour of discussion, you may see no progress in coding. You need to confront your colleagues’ opinions, and often that’s not easy. Moreover, you need to describe to the managers what you are doing in a language other than JavaScript or Python.

For many professionals, the ideal world would be to sit comfortably in front of a screen and just write good-quality code. But wait… Do people really want to do work that no one wants? Create functionalities no one needs? Feedback exchange may not speed up the production process, but it can certainly boost the quality and usability of an application. The main idea of Scrum is to inspect and adapt continuously, and there is no way to do it without discussion and collaboration. In agile, the team’s information flow and early transformation are the main things. It saves money and “lives”!

A Sprint Review gives a unique chance to stop for a moment and look at all the work done. It’s an important meeting that helps keep the roadmap and backlog healthy and aligned with market expectations. This is not a one-sided demo but a discussion among all participants. Each presented story should be talked through. Each attendee can ask questions, and the Product Owner or a team member should be able to answer them. It’s good to be as neutral as possible: neither praise nor criticize. Focus on evaluating features, not people.

Our team works for external clients from the USA, and our contact with them is constrained by time zones. A Sprint Review helps us better understand client needs and step into their shoes. The development team can share their ideas and obstacles and, most importantly, enrich their understanding of the business value of their input. Each team member presents their own part of the project, describes how it works, and asks the client for feedback. Sometimes we even share work that's not 100% complete, to be sure we are heading in the right direction and can adapt at an early phase of implementation.

"Keep calm and work": No timeboxing

A Sprint Review should be open to everyone interested in joining the discussion. The main guests are the Product Owner, the Development Team, and business representatives, but other stakeholders are also welcome. Is there a Scrum Master on board? There certainly should be! This may lead to quite a big meeting, where many points of view emerge and clash with each other. Uncontrolled discussion lasting hours is a real threat.

The Scrum Master’s role is to keep all meetings on track and allow everyone to talk. Parkinson’s Law is an old adage that says "work expands so as to fill the time available for its completion." Every Scrum Master’s golden rule for conducting meetings should be to schedule session blocks for the minimum amount of time needed. Remember that an overly long gathering may be a waste of time. Even if you could finish a meeting in 30 minutes, once you have planned it for an hour, it will end up taking the entire hour. Don’t forget that long and worthless meetings are boring.

An efficient Sprint Review needs to keep all guests focused and engaged. For a 1-week sprint, it should last about one hour; for a 2-week sprint, two hours. The Scrum Master’s role is to enforce these time boxes, but also to facilitate the meeting and point out when the discussion goes off track. There should be time to present the summary of the last sprint (what was done and what wasn’t), to demonstrate progress, and to answer all questions. Finally, leave additional time for gathering the new ideas that will surely appear. The second part of the meeting should be focused on discussing and prioritizing current backlog elements to adjust to customer and market needs. It’s a good moment for all team members to listen to the customer, get to know the management perspective, and plan the next release content and date. A Sprint Review is an ideal moment for stakeholders to bring new ideas and priorities to the dev team and reorder the backlog with the Product Owner. During this session, all three Scrum pillars meet: transparency, inspection, and adaptation.

YOLO!: Preparation is for the weak

A good plan is an attribute of a well-conducted meeting! No one likes mysterious meetings. An invitation should state not only the time, place, and date of the meeting but also the high-level program. An agenda is the first step towards a good Sprint Review, but not the only one. To be sure that the Sprint Review will be satisfying for all participants, everyone needs to be well prepared. First, the Product Owner should decide what work can be presented and create a clear plan for the demo and the entire meeting. The second step is to determine the people responsible for each presented feature. Their job is to prepare the environment, interesting scenarios, and all needed materials. But before asking the team for it, make sure they know how to do it. Not everyone is a born presenter, but they can become one with your help.

In our team, we help each other prepare for the Sprint Review. We work in Poland, but our customers are mostly from the USA. Not only are the distance and time difference a challenge, but so are language barriers. If there is a need, anyone can ask for a rehearsal demo where we can present our work, discuss potential problems, and ask each other for more details. We’re constantly improving our English to make sure everything is clear to our clients. This boosts everyone’s self-confidence and the final reception. Team members know what to do, are ready for potential questions, and focus on input from stakeholders. This is how we keep improving not only our Sprint Reviews but also our communication skills.

"Work on your own terms": No DoD

“How ready is your work?” Imagine a world where each developer decides on their own terms when their work is finished. For one, it’s when the code merely compiles; for others, it includes unit tests and compliance with coding standards, or even integration and usability tests. Everything works on a private branch, but when it comes to integrating… boom! The team is trying to prepare a version to demonstrate, but the final product crashes. The Product Owner and stakeholders are confused. A sprint without potentially releasable work is a wasted sprint. This dark scenario shouldn’t happen to any Scrum Team.

To save time and avoid misunderstandings, it’s good to speak the same language. All team members should be aware of the meaning of the word “done”. In our team, this keeps us on track with a transparent view of what work can really be considered delivered in a sprint. The Definition of Done is like a consistency check for a team: clear, generally achievable, and easy-to-understand steps for deciding how much work has to be done to create a potentially releasable feature. This provides all stakeholders with clear information and allows the planning of the next business steps.

It is a guide that dictates when teams can legitimately claim that a given user story or task is "done" and can be moved to the next level: approval by the Product Owner and release. The basic elements of our DoD are: the code is reviewed by other developers, merged to the develop branch, deployed on the DEV/QA environment, and finally properly tested by QA and developers with manual and automated tests. The last step comes down to fixing all defects, verifying that all the Acceptance Criteria are met, and a review by the Product Owner. Only when all DoD elements are done can we confidently say that this is something we can potentially release when needed.

"Curiosity killed a cat": don't report progress

Functionalities that meet the requirements of the Definition of Done are the first candidates to be shared at the Sprint Review. In the ideal Agile World, it’s not recommended to demonstrate unfinished work. Everything we want to present should be potentially releasable… but wait! Would you agree that an ideal Agile World doesn’t exist? How many times do external clients really not know how they want something implemented until they see the prototype?

Our company provides not only product development services but also consulting support. We don’t limit this collaboration to performing tasks; in most cases we co-create applications with our clients, advise on the best solutions, and tackle their problems. Many times, there is no help from a Graphic Designer or a UX team. That happened to us. That’s why we have improved our Sprint Review. Since then, we present not only finished work but also work in progress. Advanced features should be divided into smaller stories which can be delivered during one sprint. The final feature will be ready after a few sprints, but its completed parts should be demonstrated. It helps us discuss the vision and potential obstacles. Each meeting starts with work “done” and goes on to work "in progress". What is the value? Clients trust us and believe in our ideas, but at the same time still have the final word and control. Very quickly we can find out if we’re moving in the right direction or not. It’s better to hear “I imagined it in a different way, let’s change something” after one sprint than to live with a falsified view of reality until the feature release.

"As you make your bed, so you must lie in it": don't inform about obstacle

Finally, even the best team can turn into the worst bunch of co-workers if they are not honest. We all know that customer satisfaction is a priority, but not at all costs. It is no shame to talk about problems the team met during the Sprint or obstacles we face with advanced features. Stakeholders need to know the clear state of product development to confront it with the business teams involved in a project: marketing, sales, or colleagues responsible for the project budget. When tasks take much more time than predicted, it’s better to show the delay in production and explain its reasons. Putting lipstick on a pig does not work. Transparency, which is so important in Scrum, allows all the people involved in the project to make good decisions for further product development. A Product Owner, as someone who defines the product vision, evaluates product progress, and anticipates client needs, is obligated to look at the entire project from a general perspective and monitor its health.

Once again, the stay-cool rule applies. Don’t panic, and share a clear message based on facts.

We all want to implement the best practices and visions that make our life and work more productive and fruitful. Scrum helps with its values, pillars, and rules. But there is a long way from unconscious incompetence to conscious competence. It’s good to be aware of the problems we can meet and how to deal with them. “Rome wasn't built in a day”. If your team doesn’t use a Sprint Review but only a demo at the end of the sprint, just try to change it. As a team member, Scrum Master or Product Owner, observe, analyze and adapt continuously, not only your product but also your team and processes.

Pro Tips:

- Adjust the length of a Sprint Review to your actual needs. For a 1-week Sprint, it’s one hour; for a 2-week Sprint, give it two hours. This recommendation is based on our experience.

- Have a plan. Create a stable agenda and a brief summary of the Sprint, and share them before the meeting with everyone invited to the Sprint Review.

- Prepare yourself and a team. Coach your team members and discuss potential questions that can be asked by stakeholders.

- Facilitate the meeting to keep all stakeholders interested and give everyone the possibility to share feedback.

- Create a clear Definition of Done that is understandable by all team members and stakeholders.

- Be honest. Talk about problems and obstacles, show work in progress if you see there is value in it. Engage and co-create the product with your client.

- Try to be as neutral as possible: neither praise nor criticize. Focus on facts and substantive information.

Cloud Foundry Summit 2019 – continuously building the future

Cloud Foundry Summit 2019 in Philadelphia was the first event on our list of conferences we're heading to this year. It was an excellent season opening and another example that the Cloud Foundry community is developing well. "Building the future", this year’s motto, is a good metaphor for the current situation. Cloud Foundry is evolving, and it's perfectly fine.

A quote from Abby Kearns, Executive Director of the Cloud Foundry Foundation, can summarize what's happening these days:

“We’re all kayakers now, navigating the rapids of change. The quickest learners will be the biggest winners.”

Abby Kearns started the summit with this powerful statement, addressing a fact that influences the way we develop our companies – technology is evolving at a rapid pace. The cloud-native landscape is continuously growing as new solutions and ideas are introduced on a regular basis. They are often easy to use, but not mature enough to run in production without taking some risk. How to choose the right technologies? When to adopt them? Should the whole application or component be rewritten? As Abby said, the quickest learners will be the biggest winners.

During the event, we had a chance to see how many of those tools have evolved into more mature and more customer-friendly apps. From the end-user perspective, it was promising to see them working together, as it gives a glimpse of how it may look in the future.

Cloud Foundry loves Kubernetes

One of the hottest topics in the corridors was certainly the Eirini project. This is a perfect example of how software should evolve – and yes, the Cloud Foundry Foundation isn’t too proud to admit that Kubernetes is better than Diego/Garden in particular cases. This is how one open source project should work with another.

A year ago one could ask whether Kubernetes would push Cloud Foundry into the shadows. The question was wrong – how could we compare apples and oranges? To simplify things, we can call Cloud Foundry an Application PaaS, while Kubernetes can be described as a Container PaaS. This year we’re discussing how they can work together. As this seems to be the right question, the Eirini project may be the right answer.

We can't wait to hear more about the collaboration between Cloud Foundry and Kubernetes, especially given that the project was announced to pass core functional tests and to be ready for early adopters. Learn more about Project Eirini.

Interoperability

One of the keywords announcing the conference was interoperability. Eirini is a good example of that. The Cloud Foundry project continues to integrate with other open source projects to provide more capabilities for users. The platform's maturity makes it easy to incorporate new tools and keeps the whole process user-friendly.

It is worth noting that all of these tools are already used (or will be used soon) in ongoing projects, so it's not just state of the art. This is surely the result of hard work by the community experts who help Cloud Foundry stay ahead of the curve.

The Comcast story

Philadelphia is home to a large Cloud Foundry member – Comcast. The company's story of successful digital transformation is a practical guideline on what it should look like. There was no case study about it in this year's agenda, but it is still worth mentioning and congratulating the Comcast crew. This technology voyage and company restructuring – to be explicit, breaking silos – should be an inspiration to all of us.

Cloudboostr

It wouldn't be an honest review without a personal summary of how we feel about our participation in the summit. As an IT consulting company, we were more than excited to be a Silver Sponsor of a conference which gathers hundreds, if not thousands, of cloud experts in one place.

Besides an engaging business case study of helping an established company go through a digital transformation, we've also seen huge interest in our cloud platform, Cloudboostr. A lot of companies want to automate their deployment & delivery tools. Becoming a cloud expert is a long and demanding process for firms that need to stay focused on running their business operations. The majority of the world's top enterprises collaborate with external teams. Some of them use proven solutions that help them adjust to the fast-changing environment. A complete cloud platform (not only PaaS) meets market needs perfectly, and we were happy to tell our new friends about it.

As "kayakers", we know that there's a long way to go, and we'll be happy to share our knowledge and experience in order to help our customers move forward.

Bringing visibility to cloud-native applications

Working with cloud-native applications entails continuously tackling and implementing solutions to cross-cutting concerns. One concern that every project is bound to run into is deploying highly scalable, highly available logging and monitoring solutions.

You might ask, “How do we do that? Is it possible to find a "one size fits all" solution for such a complex and volatile problem?” You need look no further!

Drawing on our experience with production-grade environments, we propose a generic architecture, built entirely from open source components, that provides you with a highly performant and maintainable workload. To put this into concrete terms, this platform is characterized by its:

- High availability - every component is available 24/7 providing users with constant service even in the case of a system failure.

- Resiliency - crucial data are safe thanks to redundancy and/or backups.

- Scalability - every component can be replicated on demand according to the current load.

- Performance - ability to be used in any and all environments.

- Compatibility - easily integrated into any workflows.

- Open source - every component is accessible to anyone with no restrictions.

To build an environment that enables users to achieve the outcomes described above, we decided to look at Elastic Stack, a fully open source logging solution structured in a modular way.

Elastic stack

Each component has a specific function, allowing it to be scaled in and out as needed. Elastic stack is composed of:

- Elasticsearch - a RESTful, distributed search and analytics engine built on Apache Lucene, able to index copious amounts of data.

- Logstash - server-side data processing pipeline, able to transform, filter and enrich events on the fly.

- Kibana – a feature-rich visualization tool, able to perform advanced analysis on your data.

While all this looks perfect, you still need to be cautious while deploying your Elastic Stack cluster. Any downtime or data loss caused by incorrect capacity planning can be detrimental to your business value. This is extremely important, especially when it comes to production environments. Everything has to be carefully planned, including worst-case scenarios. Concerns that may weigh on the successful Elastic stack configuration and deployment are described below.

High availability

When planning any reliable, fault-tolerant system, we have to distribute its critical parts across multiple, physically separated network infrastructures. This provides redundancy and eliminates single points of failure.

Scalability

The ELK architecture allows you to scale out quickly. Having good monitoring tools set up makes it easy to predict and react to any changes in the system's performance. This makes it resilient and helps you optimize the cost of maintaining the solution.

Monitoring and alerts

A monitoring tool along with a detailed set of alerting rules will save you a lot of time. It lets you easily maintain the cluster, plan many different activities in advance, and react immediately if anything bad happens to your software.

Resource optimization

In order to maximize the stack's performance, you need to plan the hardware (or virtualized hardware) allocation carefully. While data nodes need efficient storage, ingesting nodes need more computing power and memory. While planning this, take into consideration the number of events you want to process and the amount of data that has to be stored, to avoid many problems in the future.
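As a rough illustration of this sizing exercise, the sketch below estimates the disk space the data nodes would need from the event volume. The function name, the parameters, and the sample numbers (event rate, average event size, retention period, replica count, overhead factor) are all hypothetical inputs chosen for the example, not measurements from a real deployment.

```python
def estimate_storage_gb(events_per_day, avg_event_bytes, retention_days,
                        replicas=1, overhead=1.2):
    """Rough disk estimate for the data nodes, in gigabytes (10**9 bytes).

    `overhead` is an assumed multiplier for indexing structures and free
    headroom; tune it to what you observe in your own cluster.
    """
    raw_bytes = events_per_day * avg_event_bytes * retention_days
    # Each replica is a full extra copy of the primary data.
    total_bytes = raw_bytes * (1 + replicas) * overhead
    return total_bytes / 1e9


if __name__ == "__main__":
    # Hypothetical load: 50 million events/day, 500 bytes each,
    # kept for 30 days with one replica.
    print(round(estimate_storage_gb(50_000_000, 500, 30), 1))
```

An estimate like this is only a starting point; actual usage depends on mappings, compression, and how the data is indexed, so it is worth validating against a sample of real events.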

Proper component distribution

Make sure the components are properly distributed across the VMs. An improper setup may cause high CPU and memory usage, can introduce bottlenecks in the system, and will definitely result in lower performance. Let's take Kibana and an ingesting node as an example. Placing them on one VM will cause a poor user experience, since UI performance will be affected when more ingesting power is needed, and vice versa.
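A placement rule like this can be checked automatically before a deployment. The following is a minimal sketch, assuming a hypothetical inventory that maps each VM name to the set of roles running on it; the role names and the single conflict rule come from the Kibana and ingestor example above and are not an exhaustive list.

```python
# Pairs of roles that should not share a VM (illustrative, not exhaustive).
CONFLICTING_ROLES = [{"kibana", "ingestor"}]


def find_colocation_conflicts(placement):
    """Return (vm, roles) tuples for every VM hosting a conflicting pair.

    `placement` maps a VM name to an iterable of role names.
    """
    conflicts = []
    for vm, roles in sorted(placement.items()):
        for pair in CONFLICTING_ROLES:
            # The pair conflicts when both of its roles are on this VM.
            if pair <= set(roles):
                conflicts.append((vm, tuple(sorted(pair))))
    return conflicts


if __name__ == "__main__":
    inventory = {
        "vm-1": {"kibana", "ingestor"},
        "vm-2": {"elasticsearch_data"},
    }
    print(find_colocation_conflicts(inventory))
```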

Data replication

Storing crucial data requires easy access to your data nodes. Ideally, your data should be replicated across multiple availability zones which will guarantee redundancy in case of any issues.
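To make the replication requirement concrete, here is a small sketch that flags shards whose copies do not span enough availability zones. The input format (a mapping from a shard identifier to the zones holding its copies) and the zone names are assumptions made for the example; in a real cluster this information would come from the cluster's own allocation data.

```python
def shards_lacking_zone_redundancy(shard_copies, min_zones=2):
    """Return the shards whose copies sit in fewer than `min_zones` zones.

    `shard_copies` maps a shard id to the list of zones that hold a copy
    (primary or replica) of that shard.
    """
    return sorted(
        shard
        for shard, zones in shard_copies.items()
        if len(set(zones)) < min_zones
    )


if __name__ == "__main__":
    copies = {
        "logs-0": ["az-a", "az-b"],  # spread across two zones
        "logs-1": ["az-a", "az-a"],  # both copies in the same zone
    }
    print(shards_lacking_zone_redundancy(copies))
```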

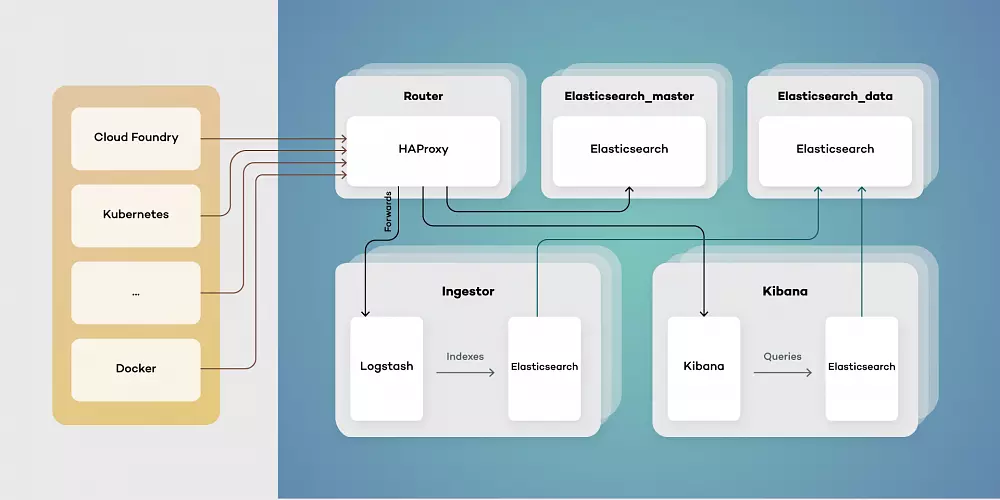

Architecture

Our proposed architecture consists of five types of virtual machines: routers, Elasticsearch masters, Elasticsearch data nodes, ingestors, and Kibana instances. This toolset simplifies scaling of components while separating their responsibilities. Each of them has a different function:

- Elasticsearch_master - controls indexes and acts as the Elasticsearch master. Responsible for creating new indexes, rolling updates, and monitoring cluster health.

- Elasticsearch_data - stores data and retrieves it as needed. Can be run as both hot and warm storage, and provides data redundancy.

- Ingestor - exposes input endpoints for events while both transforming and enriching the data stored in Elasticsearch.

- Kibana - provides users with visualizations by querying Elasticsearch data.

- Router - serves as a single point of entry, both for users and services producing data events.

Architecting your Elastic Stack deployment in this way allows for a simple upgrade procedure. Thanks to the single point of entry, switching to a new version of Elastic Stack is as simple as pointing HAProxy to an upgraded cluster.
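The idea behind this upgrade procedure can be sketched in a few lines. The `Router` class below is a toy stand-in for the single entry point, not HAProxy configuration; the cluster names and addresses are hypothetical. It only illustrates that clients keep talking to one entry point while the target behind it is swapped.

```python
class Router:
    """Toy model of a single entry point in front of several clusters."""

    def __init__(self, clusters, active):
        if active not in clusters:
            raise ValueError(f"unknown cluster: {active}")
        self._clusters = dict(clusters)
        self._active = active

    def backend(self):
        """Return the address that clients are currently routed to."""
        return self._clusters[self._active]

    def switch_to(self, name):
        """Point all traffic at another, already running cluster."""
        if name not in self._clusters:
            raise ValueError(f"unknown cluster: {name}")
        self._active = name


if __name__ == "__main__":
    router = Router(
        {"current": "10.0.0.10:9200", "upgraded": "10.0.1.10:9200"},
        active="current",
    )
    print(router.backend())
    router.switch_to("upgraded")  # the upgrade is a single pointer change
    print(router.backend())
```

Because the old cluster stays in the mapping, rolling back in this model is the same operation in the other direction.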

Using a clustered structure also allows for freely adding data nodes as needed when your traffic inevitably grows.

How to successfully adopt Kubernetes in an enterprise?

Kubernetes has practically become the standard for container orchestration. Enterprises see it as one of the crucial elements contributing to the success of a cloud-first strategy. Of course, Kubernetes is not the most important success factor in going cloud-native. But the right tooling is the enabler for achieving DevOps maturity in an enterprise, which builds primarily on cultural change and a shift in design thinking. This article highlights the most common challenges an enterprise encounters while adopting Kubernetes and offers recommendations on how to make Kubernetes adoption smooth and effective in order to drive productivity and business value.

Challenges in Kubernetes adoption

Kubernetes is still complex to set up. Correct infrastructure and network setup, installation, and configuration of all Kubernetes components are not that straightforward even though there are tools created with the goal to streamline that part.

Kubernetes alone is not enough. Kubernetes is not a cloud-native platform by itself, but rather one of the tools needed to build a platform. A lot of additional tooling is needed to create a manageable platform that improves developers’ experience and drives productivity. Therefore, it requires a lot of knowledge and expertise to choose the right pieces of the puzzle and connect them in the right way.

Day 2 operations are not easy. When the initial problems with setup and installation are solved, there comes another challenge: how to productionize the platform, onboard users, and manage Kubernetes clusters at scale. Monitoring, upgrading & patching, securing, maintaining high availability, handling backups – these are just a few operational aspects to consider. And again, it requires a lot of knowledge to operate and manage Kubernetes in production.

Another aspect is the platform’s complexity from the developer’s perspective. Kubernetes requires developers to understand its internals in order to use it effectively for deploying applications, securing them and integrating them with external services.

Recommendations for a successful Kubernetes adoption

Choose a turnkey solution – do not build the platform by yourself as the very first step, considering the aforementioned complexity. It is better to pick a production-ready distribution that you can set up quickly, so you can focus on managing the cultural and organizational shift rather than struggling with the technology. Such a solution should offer the right balance between how much is pre-configured and available out of the box and the flexibility to customize it further down the road. Of course, it is good when the distribution is compatible with upstream Kubernetes, as it allows your engineers and operators to interact with native tools and APIs.

Start small and grow bigger in time – do not roll out Kubernetes for the whole organization immediately. New processes and tools should be introduced in a small, single team and incrementally spread throughout the organization. Adopting Kubernetes is just one of the steps on the path to cloud-native, and you need to be cautious not to slip. Start with a single team or product, learn, gain knowledge, and then share it with other teams. These groups, being the early adopters, should eventually become facilitators and evangelists of Kubernetes and the DevOps approach, and help spread these practices throughout the organization. This is the best way to experience the value of Kubernetes and understand the operational integration required to deliver software to production in a continuous manner.

Leverage others’ experiences – usually, it is good to start with the default, pre-defined or templated settings and leverage proven patterns and best practices in the beginning. As you get more mature and knowledgeable about the technology, you can adjust, modify and reconfigure iteratively to make it better suit your needs. At this point, it is good to have a solution which can be customized and gives the operator full control over the configuration of the cluster. Managed and hosted solutions, even though easy to use at the early stage of Kubernetes adoption, usually leave little to no room for custom modifications and cluster fine-tuning.

When in need, call for backup – it is good to have cavalry in reserve which can come to the rescue when bad things happen or simply when something is not clear. Secure yourself for the hard times and find a partner who can help you learn and understand the complexities of Kubernetes and other building blocks of the cloud-native toolset, even when your long-term strategy is to build Kubernetes skills in-house (from both the development and the operations perspective).

Do not forget about mindset change – adopting the technology is not enough. Starting to deploy applications to Kubernetes will not instantly transform your organization and speed up software delivery. Kubernetes can become the cornerstone of the new DevOps way the company builds and delivers software, but it needs to be supported by organizational changes touching many more areas of the company than just tools and technology: the way people think, act and work, the way they communicate and collaborate. It is also essential to educate all stakeholders at all levels throughout the adoption process, to have a common understanding of what DevOps is, what changes it brings, and what the benefits are.

Adopting Kubernetes in an Enterprise - conclusion

Even though Kubernetes is not easy, it is definitely worth the attention. It offers great value in the platform you can build with it and can help transition your organization to a new level. With Kubernetes as the core technology and a DevOps approach to software delivery, the company can accelerate application development, manage its workflows more efficiently and get to the market faster.

Why nearshoring may be the best choice for your software development

Adopting the latest technologies obliges executives to decide if they should build an in-house team or outsource demanding processes and hire an external team. And while outsourced projects become more sophisticated, leaders responsible for making decisions are taking into account more factors than just cost savings. Here comes nearshoring.

A disruptive economy, the fast-changing landscape of cutting-edge technologies, and extremely demanding customers – regardless of their established position on the market, today’s most powerful enterprises need help to retain a competitive advantage once gained. Most of the world’s largest companies are undergoing radical changes that focus on adopting the latest technology and game-changing approaches to company culture.

To embrace digital transformation and get the most out of it, leaders in their fields – automotive, telco, insurance, banking, etc. – utilize knowledge and experience of external teams. As this collaboration is getting more recognition it also takes more sophisticated forms. For decades, the biggest enterprises have been opening their branches in different countries or delegating parts of their processes to specialized teams outside the organization – this is the way outsourcing and offshoring were born.

And while it works perfectly for many business fields, companies that implement the most comprehensive technologies, rebuild their core businesses, and try to adopt promising approaches to software delivery need a more sophisticated solution.

What is nearshoring?

Delegating tasks to external teams is full of benefits, but also carries some real threats – like cultural differences in communication between teams, challenges in managing processes remotely, disruption in transferring knowledge, and many more. After years of good and bad experiences, some enterprises have mastered the most effective way to delegate work – nearshoring.

Nearshoring is often described as the outsourcing of services, especially regarding the newest technologies, to companies in nearby countries. In practice, it means that an enterprise from Germany hires a company from Poland to develop a particular project like building an app or implementing software. Nearshoring is often used by brands expanding their services into new and advanced business fields, where building an in-house team responsible for a given area would be too expensive and challenging.

Why do enterprises prefer nearshoring over offshoring?

While nearshoring and offshoring share some common benefits – cost savings, tax benefits, and access to skilled professionals in given niches – the former is supposed to be an answer to the challenges described in the previous part. Companies that complain about their experiences with offshoring too often make the same mistakes. They decide to move their processes to far-distant countries regardless of differences in culture and working style. They focus on things that are easier to measure, like financial results, and don’t take less obvious factors into consideration.

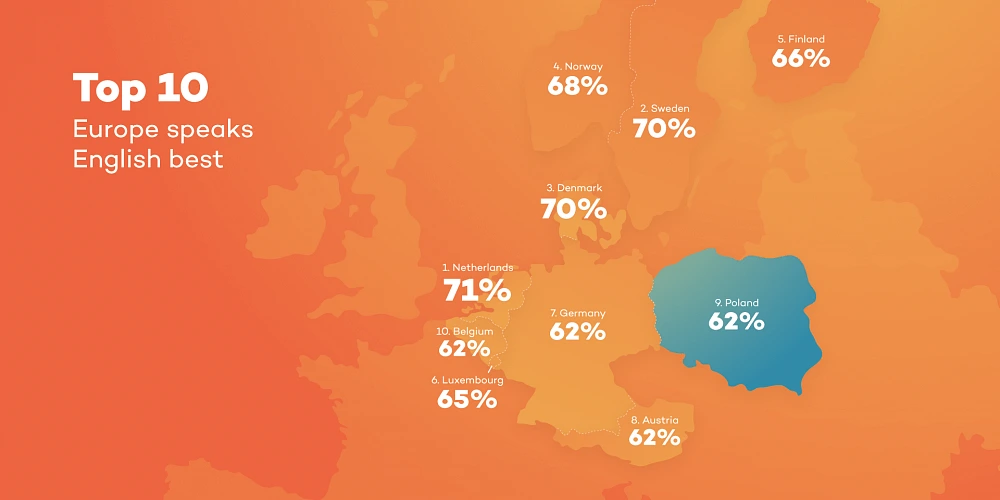

Backed by those experiences, enterprises from various industries have started to collaborate with teams of professionals that have more in common with their employees: geographically, by living in the same part of the world, often sharing a border, and culturally, by belonging to a similar cultural circle, sharing a common working culture, and having a comparable command of the language used in the project (English first!).

According to many leaders responsible for workforce management, when deciding to hire a company that is to help you improve your business, especially if you're trying to implement complex technology, you should pay at least as much attention to communication and soft skills as to know-how and experience in working on similar projects.

Where do enterprises from Western and Northern Europe look for partners?

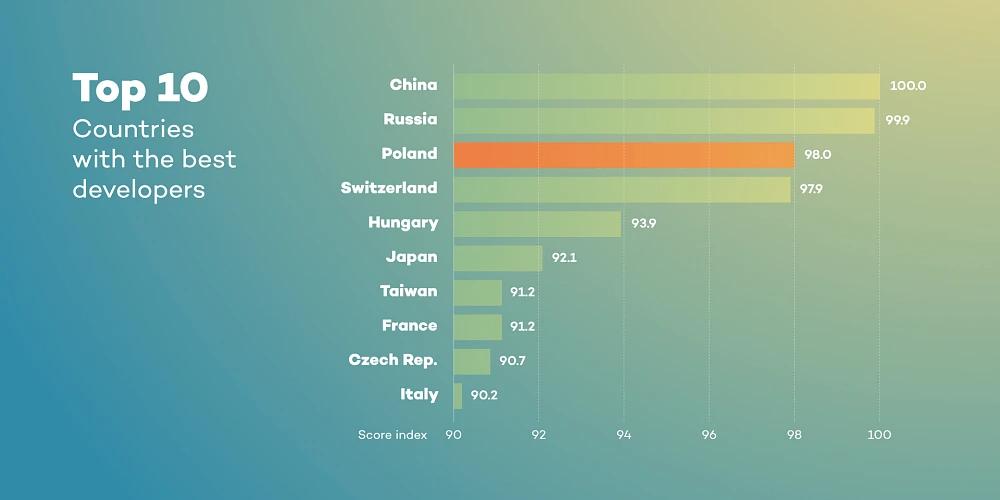

Agile teams from Central Europe that consist of experts in transforming businesses, implementing DevOps culture, and enabling cloud-native technologies are gaining strong recognition among established companies from Germany, France, the UK, Norway, and Italy. Professionals in Poland, Czechia or Hungary are known for their skills, language proficiency, and working culture. And it has never been more difficult to find any real differences in the ways people from these parts of Europe live.

All these things make collaboration smoother and easier to manage. Partnering with companies in the same time zone can also reduce the cost of communication and travel, and makes the partner available during similar working hours.

Taking into account flexibility, language skills, technical knowledge, experience in various international projects, lower operational costs and cultural similarities to the leading European countries, companies from countries like Poland have become natural nearshoring hubs.

Collaboration with top enterprises from a nearshoring partner’s perspective

For the last couple of years, Grape Up has been working as a nearshoring partner with the most recognized brands in various industries like leading automakers from Germany and large telcos from Italy and Switzerland. These experiences have helped our team develop soft skills needed to get the most out of the cooperation, both for hiring enterprises and our employees who can now master their expertise working on demanding projects for the most competitive businesses.

We have to admit that our competitive advantage may seem unfair. We’ve gathered a huge team of experienced engineers familiar with the latest cloud-native technologies, open source tools, and DevOps and Agile approaches. They feel at home in an international environment, speak fluent English, and are good at adapting to a new working style. We have two R&D centers in Poland and a few offices in Europe. We use experience amassed through years of working with companies that are willing to be early adopters of cutting-edge technologies and innovative methodologies. We’re active contributors to cloud-native and open source communities, and we attend top conferences and industry events, which gives us direct access to knowledge and innovative ideas. Being ahead of the competitive crowd allows us to focus on the most promising projects.

If your enterprise is working on digital transformation, trying to implement DevOps or adopt cloud-native technologies, and you need some support or consultancy, don’t hesitate to reach out to us. We are responsible for numerous successful migrations to the cloud, enabling cloud platforms, transforming legacy software into cutting-edge applications, and tackling business challenges that at first sight might seem unsolvable.

Highlights from KubeCon + CloudNativeCon Europe 2019 – Kubernetes, Service Mesh Interface, and Cloudboostr

This year’s KubeCon was a great occasion to sum up 5 years of Kubernetes and outline its future. It was also a great place to make some important announcements, and we got them. Our team spent those days not only learning about new features and networking with this amazing community but also talking about how Cloudboostr can impact the adoption of cloud-native technology.

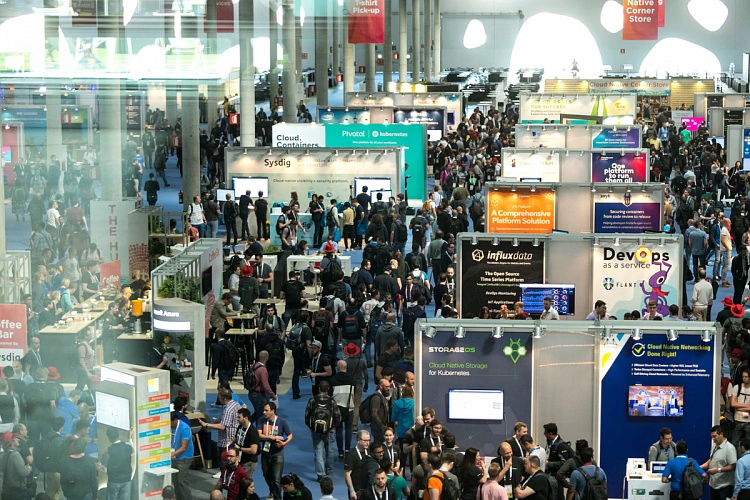

The Grape Up team joined 7,700 attendees, top enterprises, media and analysts in Barcelona, Spain, at KubeCon + CloudNativeCon Europe 2019 to discuss what’s new in Kubernetes, open source and cloud-native, learn from the most inspiring people in the industry, network with peers from different projects, and work together on developing the community gathered around the Cloud Native Computing Foundation.

KubeCon + CloudNativeCon Europe 2019: Kubernetes is thriving

This year’s KubeCon provided us with much promising news and many announcements. From the very first keynote to the last workshop, a strong focus was on leading technologies, mainly Kubernetes, and its improvements. Dan Kohn, Executive Director at CNCF, in his opening keynote, presented Kubernetes’ place in the cloud-native environment and explained why Kubernetes, like many technologies and applications before it, is winning the race called simultaneous invention.

Dan Kohn used engaging storytelling to show that for ages people have been working on similar ideas at the same time, taking advantage of work done before them. He stressed the fact that there wouldn’t be Kubernetes without the technologies that had built the foundations. He gave us a glimpse of what, in his opinion, makes Kubernetes stand out from the crowd:

- It works really well!

- Vendor-Neutral Open Source and Certified Partners.

- The People that develop and promote it.

The end of the opening keynote was strong – all the members of the community gathered around Kubernetes and CNCF have an impact on the technology improvements, as Kubernetes is a foundation on which many new technologies arise.

Hot news: Service Mesh Interface

Microsoft stole the show announcing the launch of Service Mesh Interface, an open project that defines a set of common APIs that provide developers with interoperability across different service mesh technologies.

The Service Mesh Interface includes:

- A standard interface for meshes on Kubernetes.

- A basic feature set for the most common mesh use cases.

- Flexibility to support new mesh capabilities over time.

- Space for the ecosystem to innovate with mesh technology.

This announcement from Microsoft led to many discussions during the conference. As the service mesh is a technology at an early stage of its development, an environment designed for growth should impact its further evolution. SMI is supposed to provide interoperability that will help the emerging ecosystem of tools integrate with existing mesh providers and, instead of doing it individually, gain cross-mesh capabilities through SMI. We’re looking forward to seeing how it will affect the developers' job and help to solve customer problems in the near future.

Cloudboostr – an enterprise-grade Kubernetes

What makes KubeCon + CloudNativeCon a special event is the incredible capability of CNCF to engage so many people representing multiple tech and business areas to collaborate on developing a brighter future for the cloud-native ecosystem. As Dan Kohn said at the beginning of the conference, Kubernetes and parts of the cloud-native landscape build the foundation for the technologies of the future. We at Grape Up also want to participate in this process of developing cloud-native technology and helping various companies implement Kubernetes.

This is why KubeCon + CloudNativeCon was a great occasion to talk with end users and developers about their adoption of cloud-native tools. They had a chance to learn more about Cloudboostr, our cloud-native software stack, built with Kubernetes at its core and allowing companies to stand up a complete cloud-native environment in a couple of hours. Our experts spent those days in Barcelona discussing the evolution of Kubernetes, its business use cases, and ways to get the most out of its capabilities. Our team values this kind of event the most – it's crucial for our product development and a better understanding of people’s challenges and business needs.

Seeing companies highly interested in Cloudboostr capabilities, we are encouraged to work even harder on new improvements. And this is the key to Kubernetes’ success – by building a community that inspires other members to grow and connecting them to accelerate the development of associated services, CNCF has created a self-engaging mechanism that helps Kubernetes thrive and acquire new users. This strategy makes Kubernetes an important part of technology development as a whole, which becomes even more impressive with every year.

What is it like to work on an on-site project?

Have you ever considered working abroad on an on-site project? Or maybe you have already tried it? Whether it's one of your goals for the upcoming year or you are just curious what the pros and cons are, it's always worth exploring other people's perspectives and the stories of their episodes abroad. So, based on a recent episode of mine, let me share a few thoughts on the topic myself.

Different kinds of professional life abroad

There are a variety of ways to get a feeling of what it's like to work overseas. The first one is that you can get hired and work in a foreign country for a few years. Another option is to be employed by a company in your country, but work on a project abroad for, let’s say, half a year. Last but not least, you can travel to different places for just a few days on a weekly basis. My own experience below is based on a short-term contract with one of Grape Up’s US-based customers but can apply to any kind of professional experience in a foreign country in general.

Professional benefits of working on-site

While being involved in any type of project abroad, you get to experience the global marketplace and have a chance to learn new ways of doing business. Interacting with people born and raised in a different country lets you understand their work culture, ethics and point of view. Failing to understand cultural norms is often a source of conflicts within geographically dispersed teams. For example, it takes time to become aware of the different ways an email or conversation could be interpreted, both as a sender and a receiver. And although being on-site is not the only way to gain that experience, it is usually the most efficient and authentic one. Since you are able to meet face-to-face, you get to see the direct perspective of your international customers and peers.

Working on-site comes with all the benefits of a collaborative workspace. It goes without saying that it's easier to explain something face-to-face than on the phone, let alone via email, especially when dealing with complex or urgent topics. There are no internet connection issues, there is just one time zone, and there is space for body language, which is quite an underestimated type of communication these days. In fact, social and teamwork-style settings are a perfect way to boost all kinds of interpersonal communication skills. Also, there is probably no better way to learn a foreign language than to be around people who use it every day, especially when those people happen to be native speakers.

Potential challenges of working on-site

Even though most of us prefer to work in a team, there are also those who like to work alone. Actually, there is a very good chance that even the most active and social teammates will need a moment alone every once in a while. Just a moment to zone out, avoid the potential distractions, focus and get their creative juices flowing. And that’s not always possible when you work on-site. If you travel to another office for a relatively short period of time to meet your customer or coworker, you want to make the most out of your visit. So usually you end up spending most of the time actively collaborating with others and there will be little time for individual work. Not only is it more intense but it also takes a lot of discipline and flexibility, which some may find quite challenging, especially at the beginning.

Another thing is that travelling to one office means missing out on events and meetings happening in the other office. You are solving a problem of your absence in one place but at the same time you are creating a similar problem somewhere else. So, it's always a matter of choosing which place is more beneficial to you and your company at a given moment.

Personal pros and cons of living abroad

What does living abroad really come with? Well, this part can vary in as many ways as there are people who have ever lived in a foreign country for a while. Some point out the ability to explore new places, cultures and cuisines. Others are happy to learn or practice their language skills. There are also those who do the exact same things as they would do in their hometowns, with the exception of leveraging the presence of local people and resources. Whether you choose to immerse yourself in a country or not, living abroad always gives you new perspectives and new ways of looking at things. And that's gold.

Of course, there are also the downsides of being away from your home country. Depending on what your current situation is, it very often means that you have to leave your home, family and friends for some period of time. You need to learn how to live without some people and things you got used to having around, or you can find a way to take them with you. For some people it can also be overwhelming to deal with all the cultural differences, local habits and the number of new things in general. It all depends on how open and flexible you are.

Summary

Getting a taste of living and working abroad has become very popular these days - and not without reason. For one, it is easier than ever before, and it also gives you countless benefits and the kinds of experiences you wouldn't gain in any other way. So, if it has ever crossed your mind and if you ever come across such an opportunity, don't hesitate to take it.

The state of DevOps – main takeaways after DOES London

DevOps is moving forward and influencing various industries, changing the way companies of all sizes deliver software. A few times a year, the community of DevOps experts and practitioners gathers at a conference to discuss the latest trends, share insights, and exchange best practices. This year’s DevOps Enterprise Summit in London was one of these unique chances to participate in this uplifting movement.

When our team got back from the DevOps Enterprise Summit in London, we held an engaging internal discussion. It’s probably common practice at every company that values knowledge exchange: after attending an interesting conference, your representatives share insights, thoughts, and news regarding the topics covered during the event. The discussion started when members of our team began sharing their takeaways regarding the keynotes, speeches, and ideas presented at the conference.

That opened the stream of news and opinions shared by those of our teammates who also follow the latest trends in the industry by attending various meetups, listening to podcasts, etc. Here is the list of the main topics.

DevOps 2nd day – introduction

DevOps is no longer one of those innovative ideas for early adopters that everyone has heard about but nobody knows how to start adopting. Now, it’s a must-have for every organization that intends to stay relevant in competitive markets. When you ask enterprises about using DevOps in their organizations, their representatives will tell you that they have already implemented this culture or are in the process of doing that. On the other hand, if you ask them if they are already satisfied with the adoption, the answer would be no – there are so many practices and principles, which makes the process demanding and time-consuming.

Nowadays, discussions have evolved from “How to implement DevOps in our organization” into “How can we improve our DevOps practices.” The truth is out: technologically advanced companies need this agile culture to build a successful business. But once they introduce DevOps to their teams, new challenges occur. It’s a natural part of adopting a technology or a culture. As a person responsible for the cultural shift, you have to communicate it clearly – DevOps won’t solve all your issues. In some cases, it may even seem to be the cause of new struggles. The answer to these concerns is simple: your organization is growing, evolution is never done, and change is a constant part of managing things.

Facing DevOps 2nd Day issues is a rich man’s problem – it is a good place to be, but you still have to tackle them. These new challenges only appear after you have taken a significant step forward.

Scaling Up - from a core tech team to the entire organization working globally

Core tech teams are the first to adopt the newest solutions, but they cannot work properly without supporting teams (HR, Sales, Marketing, Accounting, etc.). After a successful implementation, the next step is to encourage cooperating teams to adopt this mindset and these ways of running projects.

As enterprises that consist of thousands of employees and hundreds of teams cannot give every team the flexibility to design its very own working culture, there is a need to encourage all teams to follow the practices once they are implemented.

For tech leaders responsible for introducing DevOps in their teams, it means that their job evolves into being a DevOps advocate who presents its value to the whole organization and makes it a commonly known and used approach. The larger the company is, the more complex the entire change becomes, but it's unavoidable when you intend to get the most out of it.

Along with advocating for expanding DevOps across the entire organization, another very challenging job is to determine the right tech stack. New tools come and go, and being responsible for selecting the most useful toolset, one that will be in use for a significant period, is tough and requires broad knowledge, strategy, and a deep understanding of tech processes. Once determined, the toolset should be recommended to cooperating teams; that may provoke new issues, but it is unavoidable. Should you leverage the same tech stack for all teams? When is the right time to adopt new tools? Should you leave it all to the team? Well, there is no right answer to any of these questions, and it highly depends on the situation.

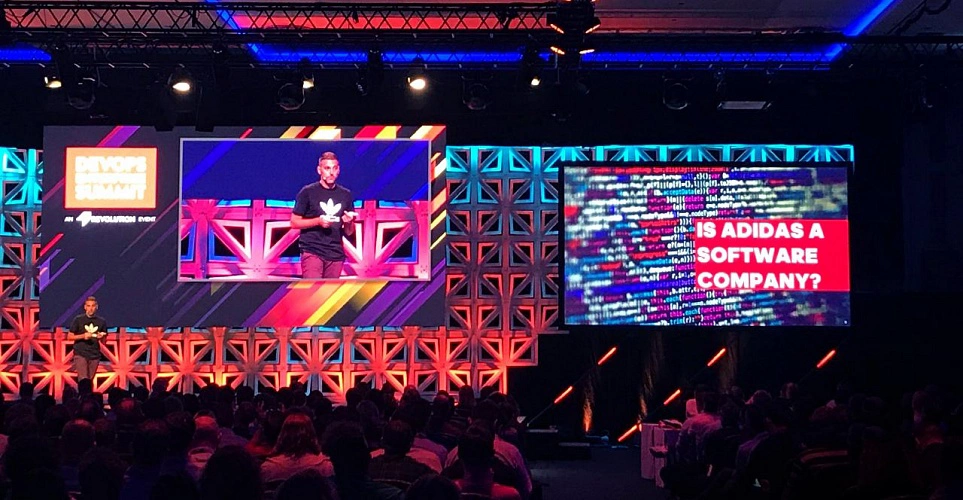

DevOps is changing various industries and does not limit itself to tech companies

Attending DOES in London was a great opportunity to learn more about how DevOps influences the world’s coolest companies, not often associated with technology. Let’s look at two of the most recognized sportswear retailers - Adidas and Nike. Both brands are synonymous with heroism, activity, and sports achievements. But, as their representatives showed, both companies can overshadow many tech brands with their DevOps maturity and advanced approach to using technology to grow their businesses.

Following these business cases, we can agree that the time when cutting-edge technologies, and the methodologies often paired with them, were limited to IT companies is officially over. Nowadays, industry after industry is embracing the latest solutions, as developing software for internal processes is a natural competitive advantage.

Continuous adaptation and life-long learning

The best thing about working in a DevOps culture is that you just cannot say that the process has finished, that a company has transformed, and that a team has mastered the way of delivering software. Taking into account how creative the community gathered around DevOps is, how fast new ideas arise, how often its fundamentals are improved, you have to keep learning about new things.

It would be extremely comfortable if a company could undergo digital transformation once and treat the process as completed. But if we take a look at the evolution of technology and the methodologies designed to take full advantage of its capabilities, it’s obvious that it cannot be finished. Adoption of a DevOps mindset is the beginning of a change and should be conducted as a never-ending evolution.

You can’t be good at everything, which is fine, but you have to know your pain points

Excluding enterprises with enormous budgets, all organizations have limitations that oblige them to focus on only some aspects of their business processes. As an expert, a professional who works in a highly competitive market, you have to follow the latest trends and be aware of upcoming solutions and cutting-edge technologies that are reshaping the business.

Being up to date is extremely important, but almost equally essential is the ability to decide which things you will not engage in, as your time and resources are limited. Being responsible for your company means being aware of pain points and focusing only on the things that matter. Technology is developing extremely fast, and you cannot afford to be an early adopter of every promising solution. Your job is to make responsible decisions, based on your deep understanding of the current state of technology development.

If you don’t know what to choose, think about what’s better for your business

DevOps came into being as an efficient solution to a common challenge - how to sync software development and IT operations processes to help companies thrive. Built with business effectiveness in mind, this culture has the right foundations. Choosing approaches that were designed not only to resolve internal issues but also to enable revenue growth is good for your overall success.

Anytime you face a situation in which you have to decide between different solutions, always consider your company's long-term perspective. When you are focused only on your own goals, you may contribute to building silos. The key to determining which ideas are the right ones to choose is their overall usability. We all, as professionals in our niches, may tend to prefer idealistic solutions. It’s important to remember that we don’t work in an ideal world and that our work is verified by the market.

Adopting new tools and technologies is challenging, but the real quest appears when it comes to changing people's habits and company culture

If you want to make your colleagues angry, implement a new toolset and new technologies in your team. Apart from tech freaks and beta testers, people are rather skeptical when it comes to learning new features and a new UI. Things change when you provide them with solutions that make their work easier and more efficient.

But the real trouble occurs when you are trying to change your company's culture. It’s nothing new that we protect what we know, don’t want to change our habits, or even feel in danger when someone is trying to reshape the way we have been doing our job for ages. Your colleagues defend themselves, which is natural, and you cannot change it. You have to take this into account and make sure that the process will be smooth enough to help everyone adjust to the new reality. Start with small steps, be the example, discuss the issues, and explain potential opportunities. Shock therapy as a path to the cultural shift is not the way to go.

As a team developing our product - Cloudboostr - multicloud, enterprise-ready Kubernetes, we help companies adopt a complete cloud-native stack, built with proven patterns and best practices, so they can focus their resources on improving their working culture. The feedback we’re receiving is that our customers’ teams are more open to using a new toolset than to changing their approach to software delivery.

Being a DevOps professional is hot now

DevOps practitioners are much in demand. It’s a great time to master the skills required to be a specialist in DevOps, as companies of all sizes are looking for help in modernizing their businesses. There are various ways of approaching it - by building an in-house team, outsourcing processes, or collaborating with external consultants. Companies choose their preferred approach according to their needs and budget.

No matter if you work in a dedicated team at a huge enterprise, are developing a startup with your colleagues, or provide consulting services for global brands, being a DevOps expert is a strong competitive advantage on the talent market.

DOES London - sum up

DevOps is moving forward, and it is great to be among the teams that contribute to its evolution. We are willing to share our expertise, exchange knowledge, and learn from the best in the business, and conferences like DevOps Enterprise Summit are the best platforms to do it.

Serverless - why, when and how?