Thinking out loud

Where we share the insights, questions, and observations that shape our approach.

STAMP - Satisfying Things About Managing Projects

Project Managers really are in the driver’s seat when it comes to creating new products. Compare it to a bumpy road - there are deadlines, a solid dose of uncertainty, and plenty of planning along the way. On the flip side, there are also truly satisfying moments associated with the position, which I tend to label as Satisfying Things About Managing Projects. Here they are:

Making a difference

As the stakes get higher, the vision of taking the lead and being in charge can be quite paralyzing for some. Others, on the other hand, will find it really satisfying. This is why the role of an effective Project Manager is so important. A person in such a position will lead with an understanding that if they drive an idea or IT project towards success, a real difference can be made. No one will ever know the world we would live in if all those ideas and projects had been executed perfectly. What we can imagine, though, is the impact and value that can be added not only to the business, but to the world at large.

Building amazing things

This one is self-explanatory. Regardless of market competition and the constraints imposed by stakeholders, it feels good to know that you are creating something that will meet or exceed expectations. Whether your task is to solve a complex problem, improve an existing solution or create something new yet desired, it is crucial to believe that you can do great. If you are able to pass that feeling on to your team, it will motivate them to work hard and accomplish amazing things together.

Being always able to perform better

Think about the spreadsheets tracking the status of a project. How do they make you feel? Motivated and excited? Most likely not. At first thought, we do not think of spreadsheets as the beginning of achieving a goal. According to the SMART principle, goals should be measurable. I realized that during my time as a software developer, I found it difficult to set measurable goals. I would always think to myself: How do I measure whether the architecture I created is already good? How can I quantify whether my code was easy to maintain and extend, so I know how much better it gets over time?

As a Project Manager this is no longer the case - you are surrounded by precise numbers, relentless dates, and unswerving percentages. You know what the costs are, you know the ROI (return on investment) and plenty of other metrics. You can compare this to sports, where you know your scores and all the statistics. The environment is perfect for setting and reaching measurable goals, with endless opportunities to adapt and perform better.

Quick throwback to the spreadsheets. Can you see all the available opportunities now?

Watching people grow

Being a Project Manager, just like any other manager, gives you the opportunity to work with many people. Some may see this as one of the most difficult aspects of the Project Manager role. But it is important to keep in mind that in this role you have the chance to create open communication and bring your team together in order to be more efficient. A manager also has the opportunity to help individuals grow and develop from the very beginning of a project to its very end. Knowing you had a hand in someone's development is very gratifying.

Overcoming challenges

There are plenty of them. During the execution of every project, regardless of internal or external factors, a lot of unexpected things can happen.

You need to adapt and react quickly to be effective at change and risk management. Not only that, but today’s solutions are also the beginning of tomorrow’s problems. This may be a pessimistic way to see things, but there is a grain of truth in it. There will always be challenges to overcome. The beauty is that after achieving any milestone, you can take a look back from the top of that mountain. From that peak, you will be able to see the steep and bumpy road and all the past iterations, and realize that these were the things that you and your team have overcome to get to the top. This is truly a great feeling of pride, reward, satisfaction and accomplishment.

Server Side Swift – quick start

Building Swift web applications allows you to reuse the skills you already have. However, you can also start from scratch if you are not an "iPhone/Mac" developer. This article shows you the most popular Server-Side Swift frameworks and lets you dive into the world of faster and safer APIs using Swift.

Swift was released in 2014. Ever since then, it has become a popular solution for iOS and macOS development. Once Swift was open-sourced, people started playing with it, and there were a lot of attempts to find other uses for it. It turned out to be a great fit for the server side, being faster and safer than many other languages, so the next step was to apply Swift to server-side development. Here, it is important to mention that Swift can be compiled on macOS as well as Linux and even Windows. Moreover, some frameworks allow developers to create cloud applications.

Just after Server-Side Swift was released, I felt the urge to take a quick look at it. At that point, it was really hard to tell if it was ready for commercial use. Now, as I look at it, I would say it is stable enough. So let’s try to compare the most popular frameworks. Personally, I would recommend trying each framework before you pick the one that works best for you – there are slight differences between them.

Currently, the most popular Server-Side Swift frameworks are Vapor, Perfect and Kitura. There are also a few others, such as Zewo and Noze.io. They are not as popular, but that doesn’t take away from their value. As a matter of fact, I looked into each framework and my verdict is: Perfect and Vapor.

Why Server-Side Swift?

A few years ago, when Server-Side Swift was still new to me, I wasn’t convinced that it would be the optimal language for the backend. Why? Because it worked just like every other backend solution and there was nothing in it that made it special. Still, for me as an iOS developer, it was a way to become full-stack. I know Java and JS and, in my opinion, the newest technologies are good to get hold of mainly because they let us become better developers. I haven’t found too many tutorials or articles about Server-Side Swift, certainly not as many as there are about Java or JS. Therefore, you have to create lots of things on your own. In my opinion, Swift is also faster than other languages. If you want to compare it with others, see the benchmark of Server-Side Swift frameworks vs Node.js.

Tools

SPM - Swift Package Manager - manages the distribution of Swift code and is integrated with the build system to automate the process of downloading, compiling and linking dependencies. It runs on Mac and Linux.

Xcode – Apple IDE to compile and debug Obj-C and Swift code.

AppCode – JetBrains IDE to compile and debug Obj-C and Swift code.

Other text editors like Brackets, VS Code, Sublime etc. + SPM

There are also a bunch of very specific tools for the frameworks and I will tell a bit more about that in a quick overview below.

Overview

Vapor

It has a very active community and a simple syntax as well. This framework is mostly focused on being written purely in Swift. Like Swift itself, Vapor offers a very readable and understandable API, because it keeps Swift's naming and conventions.

Data formats:

JSON, XML

Databases:

MySQL, PostgreSQL, SQLite, MongoDB

Cloud:

Heroku, Amazon Web Services and Vapor Cloud

Tools:

Vapor toolbox

Perfect:

The most popular framework for Server-Side Swift development. As the author claims, it is production ready and can be used in commercial products. This one is highly recommended, as it’s big, powerful and fast. The framework is updated on a monthly basis.

Data formats:

JSON, XML, native support for direct operations on files, directories and .zip files

Databases:

MySQL, PostgreSQL, SQLite, CouchDB, MongoDB, MariaDB, FileMaker

Cloud:

Heroku, Amazon Web Services

Tools:

Perfect Assistant

Kitura:

Kitura is a web framework that is unique in its approach to databases. For SQL databases, for example, Kitura uses an abstraction layer called "Kuery" instead of "Query". Kuery supports MySQL, SQLite and PostgreSQL. Kitura also supports Redis, CouchDB, Apache Cassandra and ScyllaDB, but through native packages that are not related to Kuery.

Supported Data formats:

JSON, Aphid MQTT (IoT/IoT Messaging)

Databases:

MySQL, PostgreSQL, SQLite, CouchDB, Apache Cassandra, ScyllaDB

Cloud:

Heroku, Amazon Web Services, Pivotal Web Services, IBM Bluemix

Tools:

Kitura CLI

Summary

Server-Side Swift is a fast-growing solution and can be used commercially with the newest technologies like the cloud. After a few years, we have acquired a mature language and amazing frameworks which constantly make Server-Side Swift better. When it comes to Vapor and Perfect, both of them seem to be in pretty good shape by now. Vapor, for example, has been growing quite fast since its first release in September 2016, and version 3.1 is currently available. Perfect has similar qualities and was first released in November 2015. As I mentioned before, I would personally recommend playing a bit with each framework before choosing the right one.

Tutorials and code examples

Perfect

Vapor

Kitura

Swift NIO:

Released by Apple on 1st March 2018 – low-level, cross platform, event driven network application framework.

Other frameworks

There are also Zewo and Noze.io, which are likewise based on Swift: Zewo is a Go-style framework, and Noze.io is a Node.js-style framework.

Swift for specific OS

Key takeaways from XaaS Evolution Europe: IaaS, PaaS, SaaS

At Grape Up we attend multiple events all over the globe, particularly those that focus on cloud-native technologies, gather thousands of attendees and have hundreds of stunning keynote presentations.

But keeping in mind the fact that beauty comes in all sizes, we also exhibit at smaller, but just as important events such as the XaaS Evolution Europe which took place on November 26-27 at the nHow hotel Berlin.

With only 100 exclusive attendees and a few exhibiting vendors, XaaS stresses the importance of delivering a great and professional networking experience through its unique “Challenge your Peers”, “Icebreaker” and “Round Table” sessions.

Summary

It was great to see that we are in the right place at the right time with our business model: digital transformation, moving workloads to the cloud, and market disruption are still hot topics among decision makers across Europe. We had great conversations which we hope will convert to business opportunities and long-lasting partnerships!

About the event

XaaS Evolution Europe 2018 is the only all-European event devoted to XaaS use cases and business cases. IT decision-makers from large and medium-sized companies from all major industries meet to present their case studies and discuss major challenges, strategies, integration approaches and technological solutions related to the practical implementation of cloud services. Among those great presentations, our very own Roman Swoszowski presented Cloudboostr as a go-to platform for all those companies that want to avoid vendor lock-in and use open source, but at the same time have enterprise support and a professional enablement partner.

Secure Docker images

Containers are great. They allow you to move faster with your development team, deploy in a reliable and consistent way and scale up to the sky. With an orchestrator it gets even better: it helps you grow faster, use more containers, accelerate growth even more and use even more containers. Then, at some point, you may wonder: how can I be sure that the container I have just started or pulled is the correct one? What if someone injected some malicious code into it and I did not notice? How can I be sure this image is secure? There has to be some tool that gives us such confidence… and there is one!

The update framework

The key element that helps with, and in fact solves, many of those concerns is The Update Framework (TUF), which describes an update system as “secure” if:

- “it knows about the latest available updates in a timely manner

- any files it downloads are the correct files, and,

- no harm results from checking or downloading files.”

(source: https://theupdateframework.github.io/security.html)

There are four principles defined by the framework that make it almost impossible to carry out a successful attack on such an update system.

The first principle is responsibility separation. In other words, there are a few different roles defined (used by e.g. the user or the server) that are able to perform different actions and use different keys for that purpose.

The next one is multi-signature trust, which simply says that a fixed number of signatures has to come together to perform certain actions, e.g. two developers using their keys to agree that a specific package is valid.

The third principle is explicit and implicit revocation. Explicit means that some parties come together and revoke another key, whereas implicit means that, for example, the repository automatically revokes signing keys after some time.

The last principle is to minimize individual key and role risk. As the name says, the goal is to minimize the expected damage, which can be defined by the probability of an event happening and its impact. So if there is a root role with a high impact on the system, the key it uses is kept offline. The idea of TUF is to create and manage a set of metadata, signed by the corresponding roles, which provides general information about the valid state of the repository at a specified time.
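The multi-signature principle can be illustrated with a minimal Python sketch. It is not how TUF or Notary implement it: real implementations use asymmetric signatures, while here HMAC with shared secrets is only a stand-in so the example runs with the standard library. The key names, secrets and the threshold of two are hypothetical.

```python
import hashlib
import hmac

# Hypothetical key store: key id -> secret. Real TUF uses asymmetric keys;
# HMAC is only a stand-in so the sketch runs with the standard library.
TRUSTED_KEYS = {
    "alice": b"alice-secret",
    "bob": b"bob-secret",
    "carol": b"carol-secret",
}
THRESHOLD = 2  # assumed number of distinct valid signatures required


def sign(key_id: str, payload: bytes) -> str:
    """Produce a signature for the payload with the named key."""
    return hmac.new(TRUSTED_KEYS[key_id], payload, hashlib.sha256).hexdigest()


def meets_threshold(payload: bytes, signatures: dict[str, str]) -> bool:
    """Return True if enough distinct trusted keys signed the payload."""
    valid = 0
    for key_id, signature in signatures.items():
        secret = TRUSTED_KEYS.get(key_id)
        if secret is None:
            continue  # unknown or revoked key: its signature does not count
        expected = hmac.new(secret, payload, hashlib.sha256).hexdigest()
        if hmac.compare_digest(expected, signature):
            valid += 1
    return valid >= THRESHOLD
```

Removing a key from the hypothetical `TRUSTED_KEYS` mapping plays the part of an explicit revocation: signatures made with that key stop counting towards the threshold.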

Notary

The next question is: how can Docker use this update framework and what does it mean to you and me? First of all, Docker already uses it in Content Trust, whose definition seems to answer our first question about image correctness. As per the documentation:

“Content trust provides the ability to use digital signatures for data sent to and received from remote Docker registries. These signatures allow client-side verification of the integrity and publisher of specific image tags.”

(source: https://docs.docker.com/engine/security/trust/content_trust)

To be more precise, Content Trust does not use TUF directly. Instead, it uses Notary, a tool created by Docker which is an opinionated implementation of TUF. It keeps the TUF principles, so there are five roles with corresponding keys, the same as in TUF:

- root role – it uses the most important key, which is used to sign the root metadata that specifies the other roles, so it is strongly advised to keep it securely offline,

- snapshot role – this role signs the snapshot metadata, which contains the filenames, sizes and hashes of the other (root, target and delegation) metadata files, so it assures the user of their integrity. It can be held by the owner or admin, or by the Notary service itself,

- timestamp role – using the timestamp key, Notary signs a metadata file which guarantees the freshness of the trusted collection thanks to its short expiration time. For that reason the key is kept by the Notary service, so the metadata can be regenerated automatically when it becomes outdated,

- target role – it uses the targets key to sign the targets metadata file, which holds information about the files in the collection (filenames, sizes and corresponding hashes) and should be used to verify the integrity of the files inside the collection. The other use of the targets key is to delegate trust to other peers using delegation roles,

- delegation roles – these are pretty similar to the target role, but instead of the whole content of the repository, their keys ensure the integrity of some (or sometimes all) of the actual content. They can also be used to delegate trust to other collaborators via lower-level delegation roles.
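To make the role descriptions above more concrete, here is a minimal Python sketch of two checks a client could perform: comparing a downloaded file against the size and hash recorded in the target metadata, and rejecting timestamp metadata that has expired. The field names `length` and `sha256` and the ISO date format are simplified assumptions, not the actual Notary metadata layout.

```python
import hashlib
from datetime import datetime, timezone


def matches_target(content: bytes, entry: dict) -> bool:
    """Check downloaded bytes against the size and hash from target metadata."""
    if len(content) != entry["length"]:
        return False
    return hashlib.sha256(content).hexdigest() == entry["sha256"]


def is_fresh(expires_iso: str, now: datetime) -> bool:
    """Timestamp metadata is only trusted until its (short) expiry time."""
    return now < datetime.fromisoformat(expires_iso)
```

A client would run both checks only after verifying the signatures on the metadata itself, which this sketch leaves out.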

All this metadata can be pulled from or pushed to the Notary service. There are two components in the Notary service – server and signer. The server is responsible for storing the metadata (the files generated by the TUF framework underneath) for trusted collections in an associated database, generating the timestamp metadata and - most importantly - validating any uploaded metadata. The Notary signer stores the private keys, so that they are not kept in the Notary server, and signs metadata when the Notary server requests it. In addition, there is a Notary CLI that helps you manage trusted collections and supports Content Trust with additional functionality. The basic interaction between client, server and signer can be described using the case where the client wants to upload new metadata. After authentication (if required), the server validates the metadata, generates timestamp metadata (and sometimes snapshot metadata, depending on what has changed) and sends it to the Notary signer for signing. After that, the server stores the client metadata together with the timestamp and snapshot metadata, which ensure that the client files are valid and in their most recent versions.

What's next?

It sounds like a complete solution and it surely is one, but just for one particular area of threats. There are a lot of other threats that just sit there and wait to impact your container images. Along with those problems there are solutions:

- Static vulnerability analyzers, which scan container images for any known vulnerabilities (mostly based on CVE databases),

- Container firewalls or activity monitors designed to detect some inappropriate behavior when your application is running,

- There are plenty of “Best practices for secure Docker images” – rules that you should follow when creating a Docker image,

- and many more…

How to avoid cloud-washed software?

The term "cloud-washed" was coined in exactly the same way as the phrase "green-washed", which was used to describe products that don’t have anything to do with being "eco", but were sold in "green" packaging. It is the same with cloud. There is a trend in the software development community where many companies take full advantage of the cloud computing hype and rebrand their products as "cloud", while in reality their solutions don’t have much in common with cloud. But first, let’s define what exactly "cloud washing" is:

Companies stick a "cloud" label on their products in order to stay in this competitive market. The only thing that they don’t do is explain whether the product is cloud-native, cloud-based or something else.

Don’t get lost in the clouds

To help you easily expose a fake cloud solution, I have prepared a list of questions to ask cloud solution vendors to make sure you are buying a product with all the benefits of cloud-native software.

1. Is the platform hosted in the cloud or does it rely on an on-site server?

If the platform is hosted purely in the cloud with a proper backup strategy, availability zones and replication in place, you will not be affected if a server fails and should not have to worry much about data loss. But if it’s an on-site server, expect an outage when the server fails, and make sure there are backups – preferably in a different data center.

2. Is the service multi-tenant or single-tenant?

In the case of single-tenant services, support may not be as dedicated as you would expect it to be. Some issues might take weeks to resolve. Multi-tenant and cloud-based services are a much better option, but only when you go cloud-native can you expect the best support, scalability and quick fixes. Also, cloud-native platforms provide a better level of system-level security for all deployed services.

3. How often are updates and new features released? Do they require downtime?

The answer is simple: if updates require downtime, it’s not cloud-native at all. If the features are available immediately, the software is cloud-native. Also, critical updates are deployed to all customers. On top of that, there is no disruption in service once they are deployed, because rolling update strategies are already in place. In addition, when a failure happens, a rollback to the previous version can be done immediately, or even automatically.

4. Who is responsible for security? Do you include security certifications or do I need to pay extra?

Remember one rule: cloud-native software is secure by definition. Cloud platforms are more secure "by design" because the whole system layer is abstracted away from the developers and is therefore easier to update with the latest security patches.

Continuous deployment of iOS apps with Fastlane

Automation helps with all the mundane and error-prone tasks that developers need to do on a daily basis. It also saves a lot of time. For automating iOS beta deployment, a very popular tool is Fastlane. The tool automates entire iOS CI/CD pipelines and allows us to keep iOS infrastructure as code. Today I will share my observations and tips regarding setting up fastlane for an iOS project.

Setting up Jenkins

Jenkins is an open-source automation server, but you can use fastlane on your local machine too. However, a separate build server can certainly help you create a more streamlined process.

The Jenkins installation process is pretty straightforward, so I will not be going into details here. But there are some quirks that we should take into consideration.

To alleviate the problem with a detached HEAD, we should provide the Branch Specifier with the "origin" prefix, e.g. "origin/master", and choose the option Check out to specific local branch with a branch name including ** . For more details, see the Jenkins help.

All the tool commands should be put in the Execute shell phase.

Setting up Fastlane

Fastlane is used to simplify deployment of iOS and Android apps. It is well documented and its community always tries to help in case of any bugs etc. We can install it using Homebrew or RubyGems and I personally recommend using the latter. I stumbled upon some problems during installation of fastlane plugins after using `brew` to install fastlane.

To integrate the tool with our project, we should open its directory in terminal and type:

fastlane init

It will start a very intuitive wizard that guides us through the process of setting up fastlane for our project. Once this process is finished, fastlane is configured. Now we can customize and create new lanes.

Fastlane lanes are written in Ruby. Even if you are unfamiliar with this programming language, learning the basics that are needed to configure it shouldn't take too long. There is also a version of fastlane that supports Swift. However, it is still in beta.

Fastlane tips

The tool provides a few ways of handling code signing, e.g. "cert" and "sigh", or "match". However, if you plan to use fastlane mainly on a CI/CD server, you can rely on automatic code signing in Xcode and install the certificates manually on that machine. In that case you don't need to use any of those actions in your lanes.

If you prefer to move your Fastfile (the fastlane configuration file) to a directory other than the project's directory, you will have to provide paths to the actions manually. In that case it is better to use absolute paths, since with just a ../ prefix you may end up in a different directory than you would expect.

The error block, `error do |lane, exception|`, is very useful for error notifications. For example, you can post a message to Slack when a lane executed by the CI server fails.

DevOps Enterprise Summit 2018: Driving the future of DevOps

What happens when you gather 1500 attendees, more than 50 speakers and a few hundred media representatives under one roof? The answer is DevOps Enterprise Summit – one of the largest global events devoted to DevOps enabling top technology and business leaders from around the world to learn and network for 3 days in a row.

DevOps Enterprise Summit describes itself as an "unfolding documentary of ongoing transformations which leaders are helping drive in their large, complex organizations". At the Cosmopolitan Hotel in Las Vegas, the event venue, we could literally feel the excitement of all the attendees the whole time, thanks to their openness to learning, connecting and new experiences with peers from the industry. Throughout the discussions, we were also inspired by key thought leaders and industry experts and found answers to constantly debated questions.

At the Grape Up booth, we had over 180 visitors in just 3 days. All of them were genuinely interested in our services, both those who knew our company from previous events and those who hadn’t heard of us yet (well, now they have). On top of that, we saw one of the biggest domestic airlines in the US delegate over 70 of its employees to DOES to learn. The DevOps Enterprise Summit was definitely the event of the year for this company and served the purpose of helping further accelerate its digital transformation – from our perspective this is a company that is going all-in on DevOps. There were also several other companies who came to Las Vegas all the way from New Zealand – this is also a great example of the global nature of digital transformation.

Our new product, Cloudboostr, has really caught the eye of many visitors. The ability to run any workload anywhere, using enterprise-grade open source, supported by an experienced company like Grape Up, which has been around for over a decade, has proved to be a winning combination. Apart from booth visitors, we have also collected many inquiries from large companies who are already interested in the product. The future for Cloudboostr as Grape Up’s first product looks bright.

Overall, the DevOps Enterprise Summit has been a successful event for Grape Up. Besides having met many DevOps enthusiasts, we had the opportunity to get to know plenty of sponsors who are also delivering amazing value through their products and services. The event definitely feels like a one-stop shop for all DevOps needs.

Yet another look at cloud-native apps architecture

Cloud is getting extremely popular and ubiquitous. It’s everywhere. Imagine the famous “everywhere” meme with Buzz and Woody from Toy Story in which Buzz shows Woody an abundance of...whatever the caption says. It’s the same with the cloud.

The original concepts of what exactly cloud is, and how it should or could be used and adopted, have changed over time. Monolithic applications were found to be difficult to maintain and scale in modern environments. Figuring out the correct solutions has turned into an urgent problem and blocked the development of the new approach.

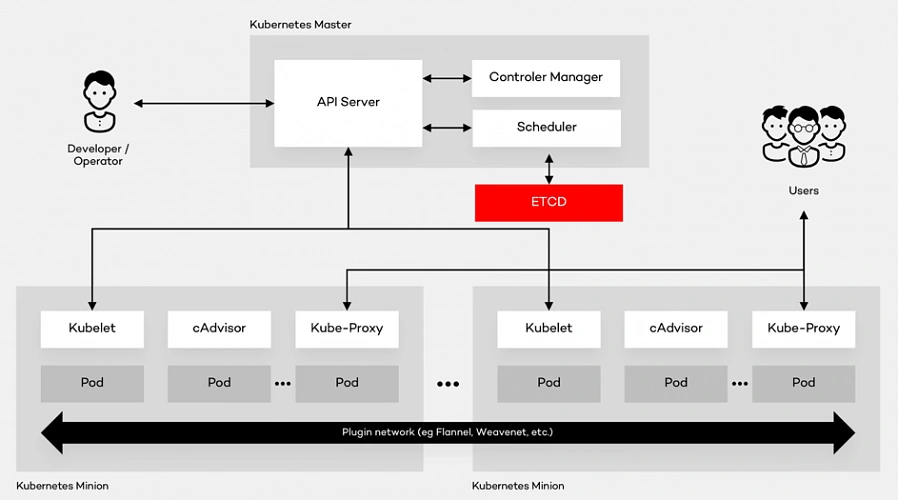

Once a cloud platform like Pivotal Cloud Foundry or Kubernetes is deployed and properly maintained, developers, managers and leaders step in. They start looking for best practices and guidelines for how cloud applications should actually be developed and how these apps should operate. Is it too little too late? No, not really.

If cloud platforms were living creatures, we could compare them to physical infrastructure. Why? Because neither really cares whether developers deploy a huge monolith or well-balanced microservices. You can really do anything you want, but before you know it, it will be clearly visible that the original design does not scale very well. It may fail very often, and it is virtually impossible to configure and deploy it to different environments without making specific changes to the platform or writing extremely complex and volatile configuration scripts.

Does a universal architecture exist?

The problem with the not-really-defined cloud architecture led to the idea of an “application architecture” which performs well in the cloud. It should not be limited to a specific platform or infrastructure, but should be a set of universal rules that help developers bootstrap their applications. The answer to that problem was formulated at Heroku, one of the first commonly used PaaS platforms.

It’s the concept of twelve-factor applications, which is still applicable nowadays, but is also endlessly extended as the environment of cloud platforms and architectures mutates. That concept was extended to 15 factors in a book by Kevin Hoffman titled "Beyond the Twelve-Factor App". Despite the fact that the list is not the one and only solution to the problem, it has been successfully applied by many companies. The order of the factors is not important, but in this article I have tried to preserve the order from the original webpage to make navigation easier. Shall we?

I. Codebase

There is one codebase per application, which is tracked in revision control (Git, SVN - doesn’t matter which one). However, sharing code between applications does not mean that the code should be duplicated - it can be yet another codebase which is provided to the application as a component or, even better, as a versioned dependency. The same codebase may be deployed to multiple different environments and has to produce the same release. The idea of one application is tightly coupled with the single responsibility pattern. A single repository should not contain multiple applications or multiple entry points - it has to have a single responsibility and a single execution point, and consist of a single microservice.

II. Dependencies

Dependency management and isolation consist of two problems that should be solved. Firstly, it is very important to explicitly declare the dependencies to avoid a situation in which there is an API change in the dependency which renders the library useless from the point of the existing code and fails the build or release process.

The next thing is repeatable deployments. In an ideal world all dependencies should be isolated and bundled with the release artifact of the application. It is not always entirely achievable, but it should be done wherever possible. On some platforms, such as Pivotal Cloud Foundry, this is managed by a buildpack, which clearly isolates the application from, for example, the server that runs it.

III. Config

This guideline is about storing configuration in the environment. To be explicit, it also applies to credentials, which should be securely stored in the environment using, for example, solutions like CredHub or Vault. Please mind that at no time can the credentials or configuration be a part of the source code! The configuration can be passed to the container via environment variables or by files mounted as a volume. It is recommended to think about your application as if it were open source. If you feel confident about pushing all the code to a publicly accessible repository, you have probably already separated the configuration and credentials from the code. An even better way to provide the configuration is to use a configuration server such as Consul or Spring Cloud Config.
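As a rough illustration of reading configuration from the environment, the Python sketch below loads settings at startup and fails fast when a required value is missing. The variable names `DATABASE_URL` and `LOG_LEVEL` and the `Settings` class are hypothetical, chosen only for this example.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    database_url: str
    log_level: str


def load_settings(env=None) -> Settings:
    """Build settings from environment variables instead of values in the code."""
    env = os.environ if env is None else env
    try:
        database_url = env["DATABASE_URL"]  # required, no default in source code
    except KeyError:
        raise RuntimeError("DATABASE_URL must be set in the environment") from None
    # Optional value with a harmless default.
    return Settings(database_url=database_url, log_level=env.get("LOG_LEVEL", "INFO"))
```

Passing the mapping as an argument keeps the function easy to test, while a plain `load_settings()` call reads the real process environment.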

IV. Backing services

Treat backing services like attached resources. Bound services are, for example, databases and storage, but also configuration, credentials, caches or queues. When a specific service or resource is bound, it can be easily attached, detached or replaced if required. This adds flexibility to using external services and, as a result, may allow you to easily switch to a different service provider.

V. Build, release, run

All parts of the deployment process should be strictly separated. First, the artifact is created in the build process. The build artifact should be immutable. This means the artifact can be deployed to any environment, as the configuration is separated and applied in the release process. The release artifact is unique per environment, as opposed to the build artifact, which is the same for all environments. This means that there is one build artifact for the dev, test and prod environments, but three release artifacts (one for each environment) with specific configuration included. Then the release artifact is run in the cloud. Kevin Hoffman adds yet another discrete part of the process to this list - the design, which happens before the build process and includes (but is not limited to) selecting dependencies for the component or the user story.
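The relation between one build and several releases can be sketched in a few lines of Python. The `Build` and `Release` types, the version string and the example URLs are hypothetical; the point is only that a release is an immutable build combined with environment-specific configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Build:
    version: str
    artifact_sha256: str  # identifies the immutable build artifact


@dataclass(frozen=True)
class Release:
    build: Build
    environment: str
    config: tuple  # sorted (key, value) pairs, kept immutable


def make_release(build: Build, environment: str, config: dict) -> Release:
    """Combine the shared build artifact with per-environment configuration."""
    return Release(build, environment, tuple(sorted(config.items())))


build = Build("1.4.0", "abc123")
releases = [
    make_release(build, env, {"API_URL": f"https://{env}.example.com"})
    for env in ("dev", "test", "prod")
]
```

All three releases point to the same `Build` object, so promoting a version from dev to prod never requires rebuilding the artifact.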

VI. Stateless processes

Execute the application as one or more stateless processes. One of the self-explanatory factors, but somehow also the one that creates a lot of confusion among developers. How can my service be stateless if I need to preserve user data, identities or sessions? In fact, all of this stateful data should be saved to the backing services like databases or file systems (for example Amazon S3, Azure Blob Storage or managed by services like Ceph). The filesystem provided by the container to the service is ephemeral and should be treated as volatile. One of the easy ways to maintain microservices is to always deploy two load balanced copies. This way you can easily spot an inconsistency in responses if the response depends on locally cached or stateful data.

VII. Port binding

Expose your services via port binding and avoid specifying ports in the container. Port selection should be left to the container runtime and assigned at runtime. This is not necessarily required on platforms such as Kubernetes. Nevertheless, ports should not be micromanaged by the developers, but automatically assigned by the underlying platform, which largely reduces the risk of having a port conflict. Ports should not be managed by other services, but automatically bound by the platform to all services that communicate with each other.

VIII. Concurrency

Services should scale out via the process model as opposed to vertical scaling. When the application load reaches its limits, it should manually or automatically scale horizontally. This means creating more replicas of the same stateless service.

IX. Disposability

Applications should start and stop rapidly to avoid problems such as an application that does not respond to health checks because it is still starting (which may even result in an infinite loop of restarts), or one that cancels requests when the deployment is scaled down.

X. Development, test and production environments parity

Keeping all environments the same, or at least very similar, may be a complex task. The difficulties vary from the cost of VMs and licenses to the complexity of deployment. The second problem may be avoided with a properly configured and managed underlying platform. The advantage of this approach is avoiding the "works for me" problem, which gets really serious when it happens in an automatically deployed production environment: the tests passed in all environments except production because a totally different database engine was used there.

XI. Logs

Logs should be treated as event streams and be entirely independent of the application. The only responsibility of the application is to output the logs to the stdout and stderr streams. Everything else should be handled by the platform. That means passing the logs to a centralized or decentralized store like ELK, Kafka or Splunk.
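A minimal sketch of this idea with Python's standard `logging` module is shown below. The application writes one JSON object per line to stdout and does nothing else. The `JsonFormatter` class, the `build_logger` helper and the chosen fields are assumptions made for this example.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record):
        return json.dumps({
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        })


def build_logger(name, stream=sys.stdout):
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    # Only a stream handler: no log files and no shipping logic in the app.
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]
    return logger


log = build_logger("orders")
log.info("order accepted")
```

Collecting, routing and storing the stream is then left to the platform.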

XII. Admin processes

The administrative and management processes should be run as "one-off" processes. This is actually self-explanatory and can be achieved by creating Concourse pipelines for the processes or by writing Azure Functions/AWS Lambdas for that purpose. This concludes the list of factors provided by the Heroku team. Additional factors added to the list in "Beyond the Twelve-Factor App" are:

XIII. Telemetry

Applications can be deployed into multiple instances which means it is not viable anymore to connect and debug the application to find out if it works or what is wrong. The application performance should be automatically monitored, and it has to be possible to check the application health using automatic health checks. Also, for specific business domains telemetry is useful and should be included to monitor the current and past state of the application and all resources.

XIV. Authentication and authorization

Authentication, authorization, or the general aspect of security, should be an important part of the application design and development, but also of the configuration and management of the platform. RBAC or ABAC should be used on each endpoint of the application to make sure the user is authorized to make that specific request to that specific endpoint.

XV. API First

An API should be designed and discussed before the implementation. It enables rapid prototyping, allows the use of mock servers and moves the team's focus to the way services integrate. Just as a product is consumed by clients, services are consumed by other services through their public APIs, so collaboration between the provider and the consumer is necessary to create a great and useful product. Even excellent code can be useless when hidden behind a poorly written and badly documented interface. For more details about the tools and the concept, visit the API Blueprint website.

Is that all?

This is a very extensive list and, in my opinion, from a technical perspective it is just enough to navigate the cloud-native world. On the other hand, there is one more point that I would like to add, which is not exactly technical but still extremely important when thinking about creating a successful product.

XVI. Agile company and project processes

The way to succeed in the rapidly changing and evolving cloud world is not only to create great code and beautiful, stateless services. The key is to adapt to changes and market needs to create a product that is appreciated by the business. The best fit for that is to adopt an agile or lean process, extreme programming and pair programming. This allows rapid growth in short development cycles, which also means quick market response. When team members treat each of their commits as a candidate for a production release and work in pairs, the quality of the product improves. The trick is to apply the processes as widely as possible because very often, as Conway's law says, the organization of your system is only as good as the organizational structure of your company.

Summary of cloud-native apps

That’s the end of our journey towards the perfect cloud-native apps. Of course, this is not the best solution to every problem. In the real world we don’t really have handy silver bullets. We must work with what we’ve got, but there is always room for improvement. Next time you design your, hopefully, cloud-native apps, bear these guidelines in mind.

Key takeaways from Cloud Foundry Summit Europe 2018 in Basel

This year's Cloud Foundry Summit Europe was held in Basel, Switzerland. As a company which strongly embraces the cloud, we simply could not miss it! Throughout the event, all visitors to our Silver Sponsor booth no. 15 could not only discuss all things cloud-native, but also drink a cup of our delicious, freshly brewed vanilla coffee. First, let's take a look at some numbers from the event. There were:

- 144 keynotes, break notes and lightning talks

- 65 member companies

- 8 certified platforms

- 961 registered attendees

Why Cloud Foundry Summit?

However, coffee wasn’t the only reason we traveled over 1000 kilometers. What mattered to us was the impressive conference line-up. We were hungry for the upcoming tech news, to see what’s trending and, of course, to learn new things. By the very looks of the schedule we could tell that the European edition in Basel was slightly different from the Boston edition earlier this year.

Everyone’s talking about Kubernetes with Cloud Foundry

Let’s focus first on serverless. This topic has either been exhausted or has only been a temporary fling in international circles. Why? Because this year’s edition devoted only two talks in the Containers and Serverless track to FaaS, whereas 6 months ago in Boston the number of talks about it was twice as big. One more reason for that might be the growing popularity of Kubernetes. There were 6 tracks and, apart from Containers and Serverless, each had at least one talk about K8S/containers.

Currently, the interest in Kubernetes is so palpable and obvious that even Cloud Foundry themselves confirmed it by announcing a CF Containerization project:

But there’s more…

Finally, it's worth mentioning the second project approved by Cloud Foundry - Eirini - which brings the CF closer to K8S and enables using Kubernetes as a container scheduler in the Cloud Foundry Application Runtime. It means nothing else but the fact that we are getting closer to KubeFoundry or CloudFubernetes - platforms from platforms.

Our announcement

During the Summit, one of the most important moments for us was the announcement of our new product, Cloudboostr, an Open Cloud Platform which is a complete cloud-native software stack that integrates open-source technologies like Cloud Foundry and Kubernetes. Thanks to Cloudboostr, companies can build, test, deploy and operate any workload on public, private or hybrid cloud.

Co-author: Julia Haleniuk

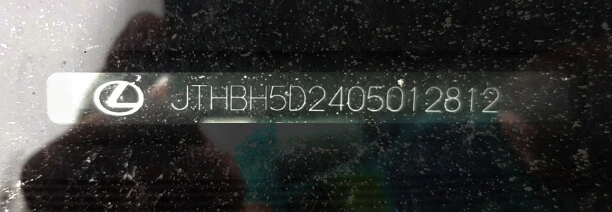

Leveraging AI to improve VIN recognition - how to accelerate and automate operations in the insurance industry

Here we share our approach to automatic Vehicle Identification Number (VIN) detection and recognition using Deep Neural Networks. Our solution is robust in many aspects such as accuracy, generalization, and speed, and can be integrated into many areas in the insurance and automotive sectors.

Our goal is to provide a solution allowing us to take a picture using a mobile app and read the VIN that is present in the image. With all the similarities to any other OCR application and common features, the differences are colossal.

Our objective is to create a reliable solution and to do so we jumped directly into analysis of the real domain images.

VINs are located in many places on a car and its parts. The most readable are those printed on side doors and windshields. Here we focus on VINs from windshields.

OCR doesn’t seem to be rocket science now, does it? Well, after some initial attempts, we realized we’re not able to use any available commercial tools with success, and the problem was much harder than we had thought.

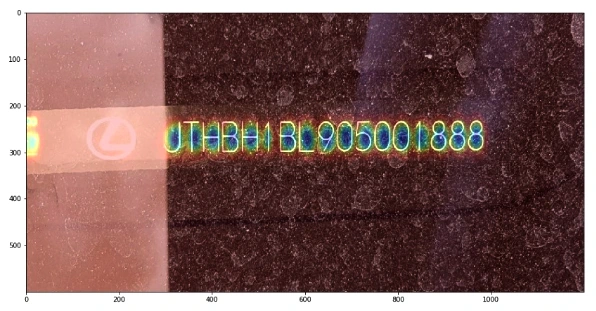

How do you like this example of KerasOCR?

Apart from many details, like the fact that VINs don’t contain the characters ‘I’, ‘O’, ‘Q’, we have to deal with very specific distortions, proportions, and fonts.
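Such domain knowledge is cheap to use. As a small illustration, the Python sketch below checks whether a recognized string has the shape of a VIN: 17 characters, digits and uppercase letters without I, O and Q. The helper name `is_plausible_vin` is hypothetical, and this is a format check only, with no check-digit or manufacturer validation.

```python
import re

# 17 characters: digits and uppercase letters except I, O and Q.
VIN_PATTERN = re.compile(r"[A-HJ-NPR-Z0-9]{17}")


def is_plausible_vin(text: str) -> bool:
    """Return True when the text has the shape of a VIN (format check only)."""
    return VIN_PATTERN.fullmatch(text.strip().upper()) is not None
```

A check like this can reject obviously wrong OCR output, for example a result containing the letter O where only the digit 0 is possible.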

Initial approach

How can we approach the problem? The most straightforward answer is to divide the system into two components:

- VIN detection - cropping the characters from the big image

- VIN recognition - recognizing cropped characters

In the ideal world images like that:

Will be processed this way:

Once we have an intuition of what the problem looks like, we can start solving it. Needless to say, there is no “VIN reading” task available on the internet, therefore we need to design every component of our solution from scratch. Let’s introduce the most important stages we’ve created, namely:

- VIN detection

- VIN recognition

- Training data generation

- Pipeline

VIN detection

Our VIN detection solution is based on two ideas:

- Encouraging users to take a photo with VIN in the center of the picture - we make that easier by showing the bounding box.

- Using Character Region Awareness for Text Detection (CRAFT) - a neural network to mark VIN precisely and be less error-prone.

CRAFT

The CRAFT architecture tries to predict a text area in the image by simultaneously predicting the probability that a given pixel is the center of some character and the probability that a given pixel is the center of the space between adjacent characters. For the details, we refer to the original paper.

The image below illustrates the operation of the network:

Before the actual recognition, it sounded like a good idea to simplify the input image vector so that it contains all the needed information and no redundant pixels. Therefore, we wanted to crop the characters’ area from the rest of the background.

We intended to encourage a user to take a photo with a good VIN size, angle, and perspective.

Our goal was to be prepared to read VINs from any source, e.g. side doors. After many tests, we think the best idea is to send the area from the bounding box seen by users and then try to cut it more precisely using VIN detection. Therefore, our VIN detector can be interpreted more like a VIN refiner.

It would be remiss if we didn’t note that CRAFT is exceptionally unusually excellent. Some say every precious minute communing with it is pure joy.

Once the text is cropped, we need to map it to a parallel rectangle. There are dozens of design decisions here, such as the affine transform, the target rectangle, resampling for text recognition, etc.

Having ideally cropped characters makes recognition easier. But it doesn’t mean that our task is completed.

VIN recognition

Accurate recognition is a winning condition for this project. First, we want to focus on the images that are easy to recognize – without too much noise, blur, or distortions.

Sequential models

The SOTA models tend to be sequential models with the ability to recognize the entire sequences of characters (words, in popular benchmarks) without individual character annotations. It is indeed a very efficient approach but it ignores the fact that collecting character bounding boxes for synthetic images isn’t that expensive.

As a result, we devalued what is supposedly the most important advantage of the sequential models. There are more advantages, but are they worth watching out for all the traps that come with them?

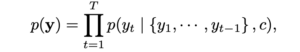

First of all, training attention-based model is very hard in this case because of

As you can see, the target characters we want to recognize are dependent on history. It could be possible only with a massive training dataset or careful tuning, but we omitted it.

As an alternative, we can use Connectionist Temporal Classification (CTC) models that, by contrast, predict labels independently of each other.
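To make the independence concrete, here is a sketch of standard greedy (best-path) CTC decoding in Python: take the best class in every frame, collapse repeated labels, and drop the blank symbol. It is a generic textbook procedure, not necessarily the decoder used in our system. The alphabet layout with the blank at index 0 is an assumption of this example.

```python
BLANK = 0  # index reserved for the CTC blank symbol (an assumption of this sketch)
# Position 0 stands for the blank; letters I, O and Q are left out on purpose.
ALPHABET = "-0123456789ABCDEFGHJKLMNPRSTUVWXYZ"


def ctc_greedy_decode(frame_scores):
    """Decode per-frame class scores: argmax, collapse repeats, drop blanks."""
    # Each frame is decided on its own, without looking at earlier predictions.
    best_path = [max(range(len(scores)), key=scores.__getitem__) for scores in frame_scores]
    decoded = []
    previous = BLANK
    for index in best_path:
        if index != BLANK and index != previous:
            decoded.append(ALPHABET[index])
        previous = index
    return "".join(decoded)
```

The blank symbol is what allows genuinely doubled characters: two identical labels separated by a blank are kept as two characters, while two identical labels in adjacent frames are merged into one.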

More importantly, we didn’t stop at this approach. We utilized one more algorithm with different characteristics and behavior.

YOLO

You Only Look Once is a very efficient architecture commonly used for fast and accurate object detection and recognition. Treating a character as an object and recognizing it after detection is an approach definitely worth trying in this project. We don’t have the dependence-on-history problem described above, and there are some interesting tweaks that allow even more precise recognition in our case. Last but not least, we gain more control over the system, as much of the responsibility is moved away from the neural network.

However, the VIN recognition requires some specific design of YOLO. We used YOLO v2 because the latest architecture patterns are more complex in areas that do not fully address our problem.

- We use 960 x 32 px input (so images cropped by CRAFT are usually resized to meet this condition). Then we divide the input into 30 grid cells (each of size 32 x 32 px),

- For each grid cell, we run predictions in predefined anchor boxes,

- We use anchor boxes of 8 different widths but height always remains the same and is equal to 100% of the image height.
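The geometry described in the list above can be sketched in a few lines of Python. The anchor widths are hypothetical, since only their number is given here, and centering each anchor in its grid cell is a simplification: in a real YOLO model the network predicts offsets relative to the cell and the anchor.

```python
IMAGE_WIDTH, IMAGE_HEIGHT = 960, 32
CELL_SIZE = 32
# Hypothetical anchor widths in pixels; only their count (8) comes from the text.
ANCHOR_WIDTHS = (8, 12, 16, 20, 24, 28, 32, 40)


def anchor_boxes():
    """Yield (cell_index, x_min, y_min, x_max, y_max) for every anchor in every cell."""
    n_cells = IMAGE_WIDTH // CELL_SIZE  # 960 / 32 = 30 grid cells
    for cell in range(n_cells):
        center_x = cell * CELL_SIZE + CELL_SIZE / 2
        for width in ANCHOR_WIDTHS:
            # Full-height boxes: only the horizontal extent varies.
            yield (cell, center_x - width / 2, 0, center_x + width / 2, IMAGE_HEIGHT)


boxes = list(anchor_boxes())
```

With 30 cells and 8 anchors per cell, the recognizer scores 240 candidate boxes per image, each spanning the full image height.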

As the results came, our approach proved to be effective in recognizing individual characters from VIN.

Metrics

Appropriate metrics become crucial in machine learning-based solutions as they drive your decisions and project dynamics. Fortunately, we think simple accuracy fulfills the demands of a precise system and we can omit the research in this area.

We just need to remember one fact: a typical VIN contains 17 characters, and it’s enough to miss one of them to classify the prediction as wrong. At any point of work, we measure the Character Error Rate (CER) to understand the development better. A CER of 5% (5% of wrong characters) may result in accuracy lower than 75%.
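The link between CER and whole-VIN accuracy can be estimated with simple arithmetic. The sketch below assumes that character errors are independent, which is a simplification: in practice errors tend to cluster in a few bad images, so the real accuracy is usually higher than this estimate. The function name is made up for the example.

```python
VIN_LENGTH = 17


def expected_vin_accuracy(cer: float, length: int = VIN_LENGTH) -> float:
    """Probability that all characters are right, assuming independent errors."""
    return (1.0 - cer) ** length


for cer in (0.01, 0.02, 0.05):
    print(f"CER {cer:.0%} -> whole-VIN accuracy {expected_vin_accuracy(cer):.1%}")
```

Under this assumption, a CER of 5% leaves only about 42% of VINs fully correct (0.95 to the power of 17), which shows how quickly small character-level errors add up over 17 positions.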

About the models tuning

It's easy to notice that all OCR benchmark solutions have a much bigger effective capacity than the complexity of our task requires, while at the same time being too general. That itself emphasizes the danger of overfitting and directs our focus to generalization ability.

It is important to distinguish hyperparameter tuning from architectural design. Apart from ensuring that the information flow through the network extracts correct features, we do not dive into extended hyperparameter tuning.

Training data generation

We skipped one important topic: the training data.

Often, we support our models with artificial data with reasonable success, but this time the profit is huge. Cropped synthesized texts are so similar to the real images that we suppose we can base our models on them, and only fine-tune them carefully with real data.

Data generation is a laborious, tricky job. Some say your model is only as good as your data. It feels like carving: any mistake can break your material. Worse, you may spot it only after the training.

We have some pretty handy tools in our arsenal but they are, again, too general. Therefore we had to introduce some modifications.

Actually, we were forced to generate more than 2M images. Obviously, there is no point nor possibility of using all of them. Training datasets are often crafted to resemble the real VINs in a very iterative process, day after day, font after font. Modeling a single General Motors font took us at least a few attempts.

But finally, we got there. No more T’s as 1’s, V’s as U’s, and Z’s as 2’s!

We utilized many tools. All have advantages and weaknesses and we are very demanding. We need to satisfy a few conditions:

- We need a good variance in backgrounds. It’s rather hard to have a satisfying amount of windshields background, so we’d like to be able to reuse those that we have, and at the same time we don’t want to overfit to them, so we want to have some different sources. Artificial backgrounds may not be realistic enough, so we want to use some real images from outside our domain,

- Fonts, perhaps the most important ingredients in our combination, have to resemble the creative VIN fonts (who made them!?) and cannot interfere with each other. At the same time, the number of car manufacturers is much higher than our collector’s impulses, so we have to be open to unknown shapes.

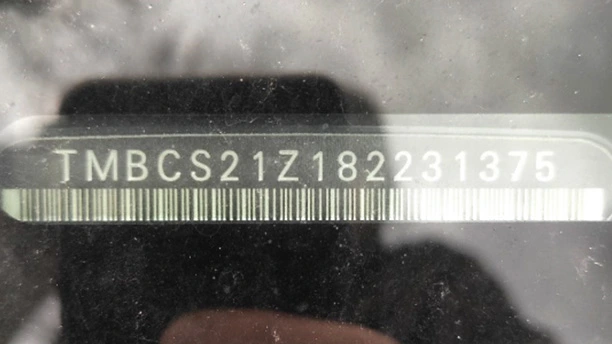

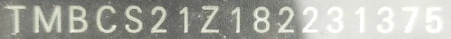

The images below are examples of VIN data generation for recognizers:

Putting everything together

It’s the art of AI to connect so many components into a working pipeline and not mess it up.

Moreover, we have a lot of traps here. Mind these images:

VIN labels often consist of separated strings, two rows, logos and bar codes present near the caption.

90% of end-to-end accuracy provided by our VIN reader

Running in under one second on a mid-range CPU alone, our solution achieves over 90% end-to-end accuracy.

This result depends on the problem definition and test dataset. For example, we have to decide what to do with the images that are impossible for a human to read. Nevertheless, regardless of the dataset, we approached human-level performance, which is a typical reference level in Deep Learning projects.

We also managed to develop a mobile offline version of our system with similar inference accuracy but a bit slower processing time.

App intelligence

While working on tools designed for business, we can’t forget about the real use-case flow. With the above pipeline, we have no resistance at all to photos that are impossible to read, even though we want to have it. Such situations often happen due to:

- incorrect camera focus,

- light flashes,

- dirty surfaces,

- damaged VIN plate.

Usually, we can prevent these situations by asking users to change the angle or retake a photo, before we send it to the further processing engines.

However, the classification of these distortions is a pretty complex task! Nevertheless, we implemented a bunch of heuristics and classifiers that allow us to ensure that VIN, if recognized, is correct. For the details, you have to wait for the next post.

Last but not least, we’d like to mention that, as usual, there are a lot of additional components built around our VIN Reader. Apart from a mobile application and offline on-device recognition, we’ve implemented a remote backend, pipelines, tools for tagging, semi-supervised labeling, synthesizers, and more.

https://youtu.be/oACNXmlUgtY

A day in the life of a Business Development Manager

Have you ever wondered what it's like to be a Business Development Manager? Marcin Wiśniewski of Grape Up shares his experience and tips for successful business development management.

1. Hi Marcin, what is your background?

It’s absolutely non-technical. I have a degree in Management and Marketing, which can be quite unusual for someone who works in IT. But I just can’t help the fact that I like all these outlandish tech gimmicks and news. And I like learning new things – which is the crucial part of my job.

2. Why did you decide on a career in business development?

I don’t recall making any big decision of a lifetime to work in sales. What I do know is that I have always wanted to have a career which would allow me to meet new people, discover new views of the world, new beliefs and opinions. I like to talk, travel, I like to be around. But what gives me a rush of adrenaline is the intensity which comes with the sales process. So maybe not intentionally, but I have found myself in the right place.

3. What does your typical day at the office look like? Is there a typical day at all?

I don’t really have a typical day at work. Of course, there are tasks or processes which are repetitive and we do them according to a pattern, but it’s always about a different company, different people, new conferences, new challenges. Therefore, each and every case requires an individual approach.

4. What do you love most about the job and what is its most challenging aspect?

I like it that we work in a niche part of the IT market mainly abroad. We cooperate with Fortune 500 companies which is the most exciting and challenging part of the job at once. Selling to Fortune 500 companies which are basically dream clients isn’t easy-peasy so once you do it, that’s something to be proud of!

5. What competencies do you think make a good Business Development Manager?

I’m not sure how my colleagues from the industry would answer this question, but I like people, I like talking to them and, of course, learning new things. And my job incorporates all of it, which is exactly why I love what I do.

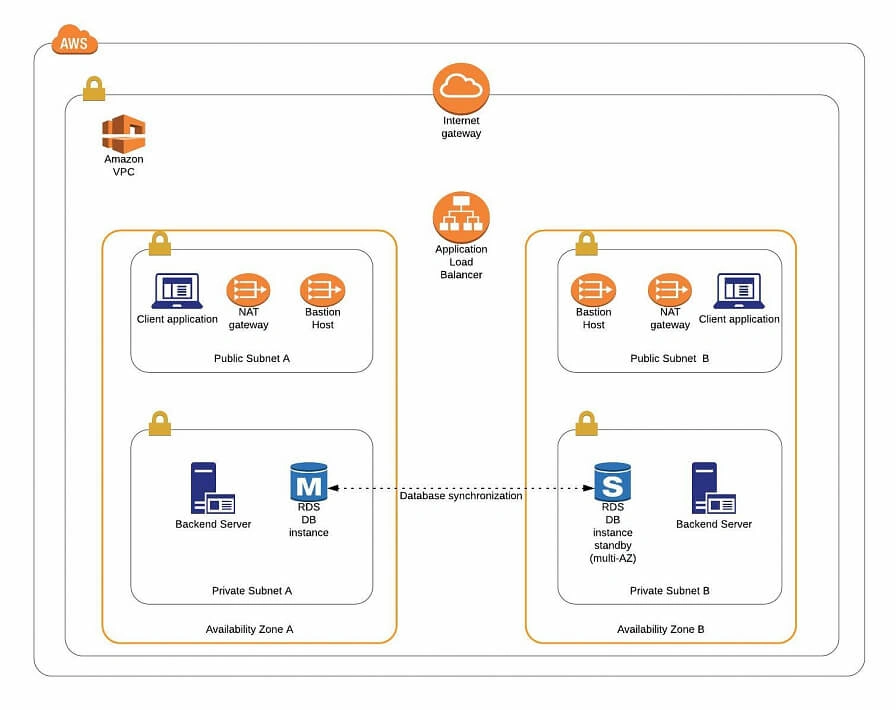

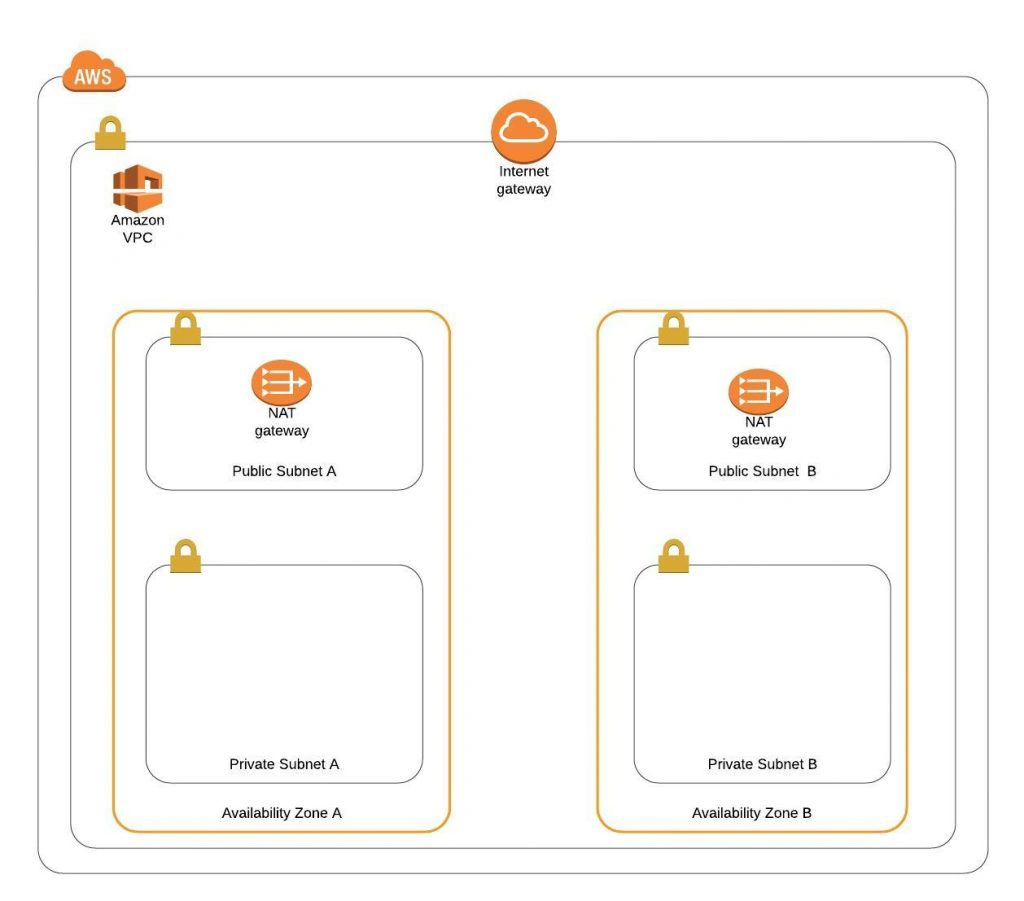

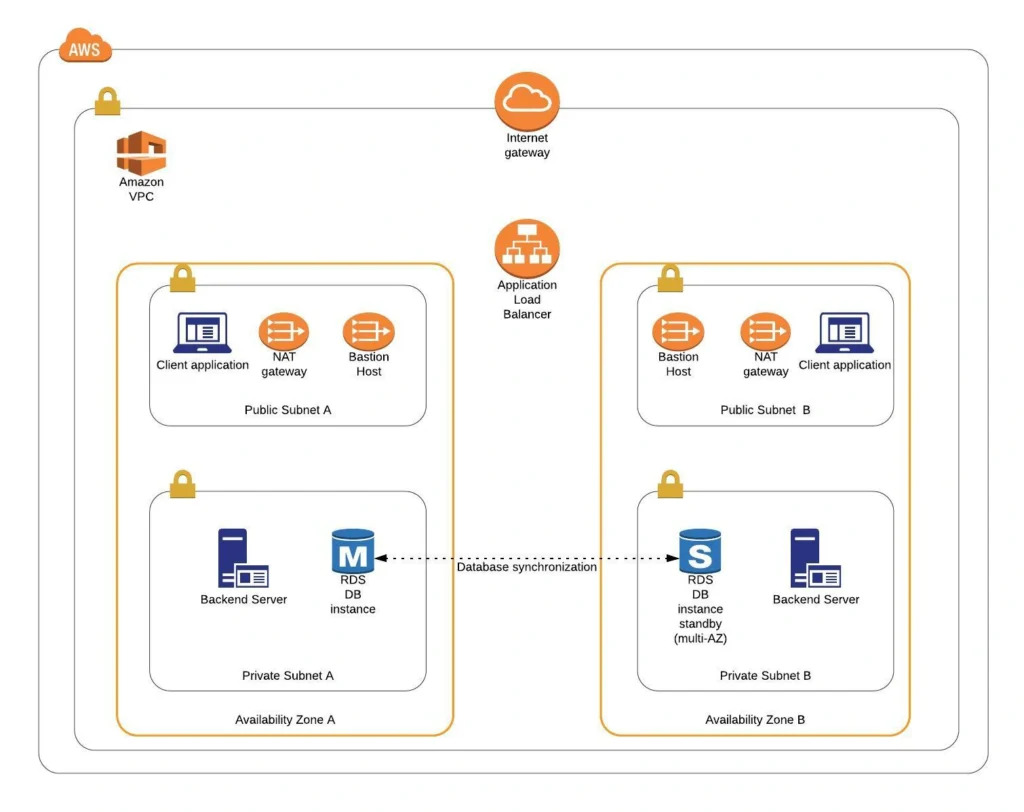

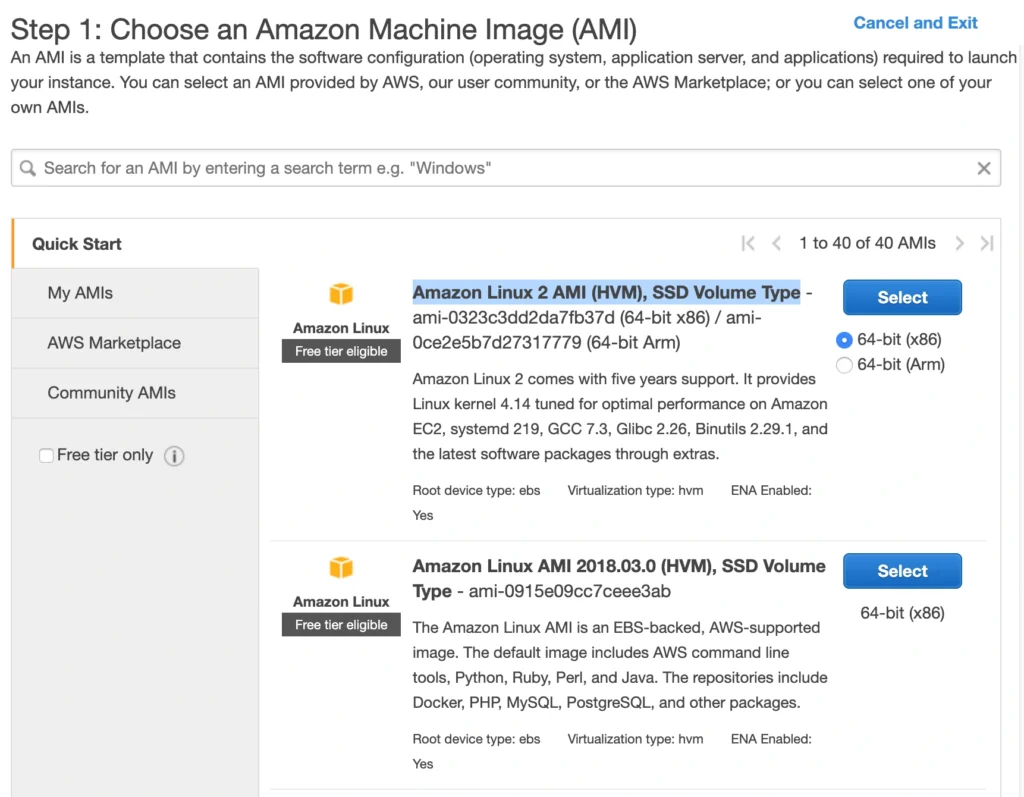

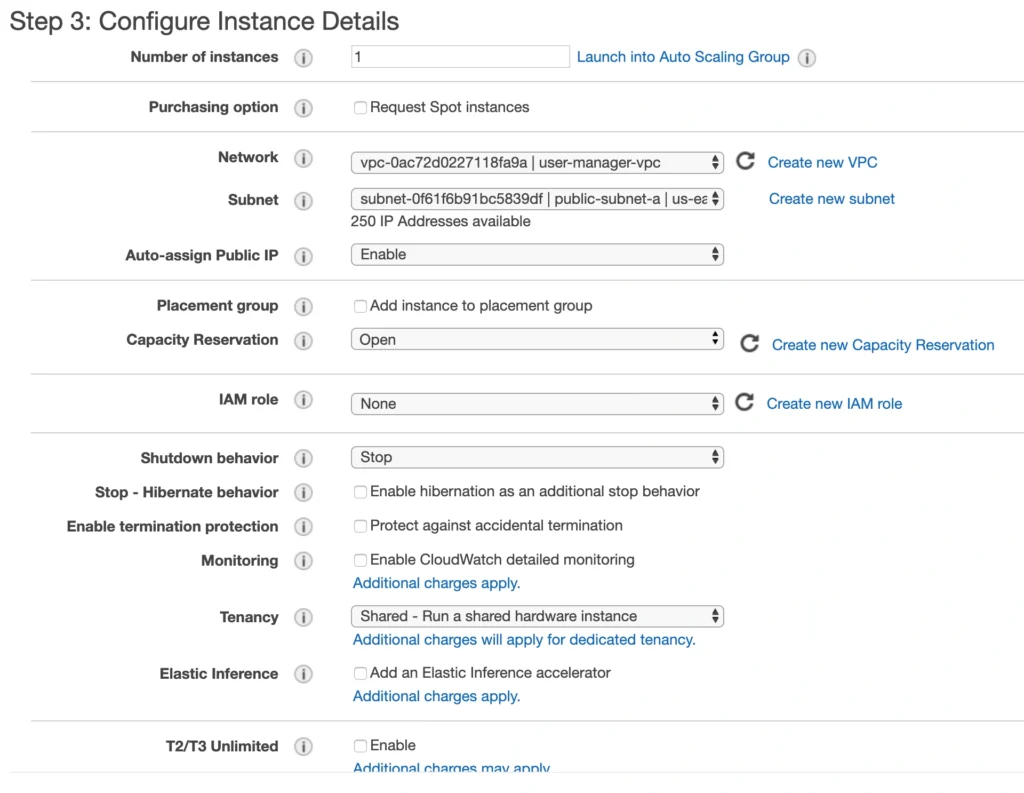

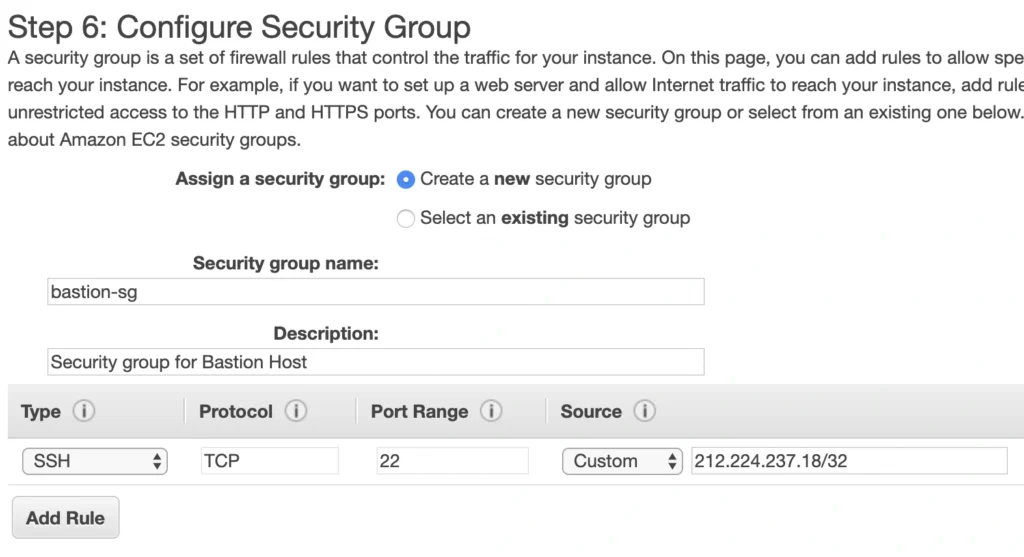

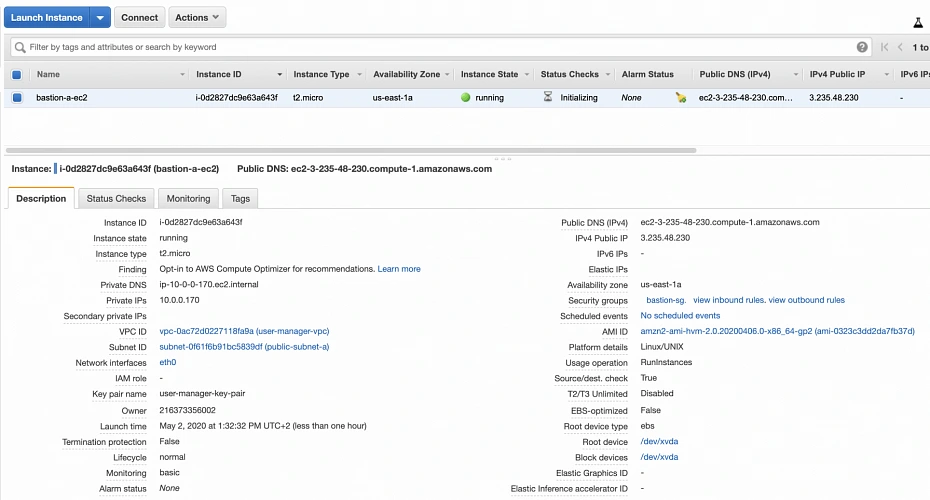

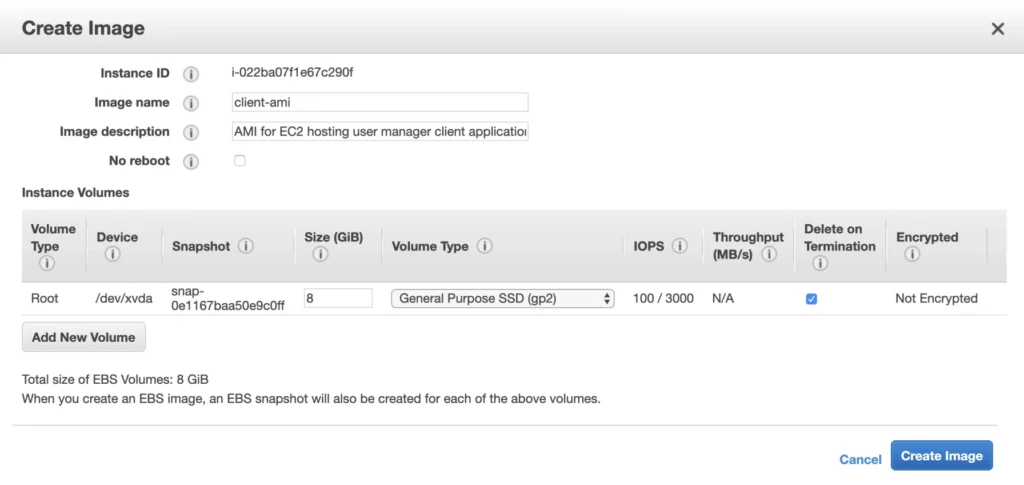

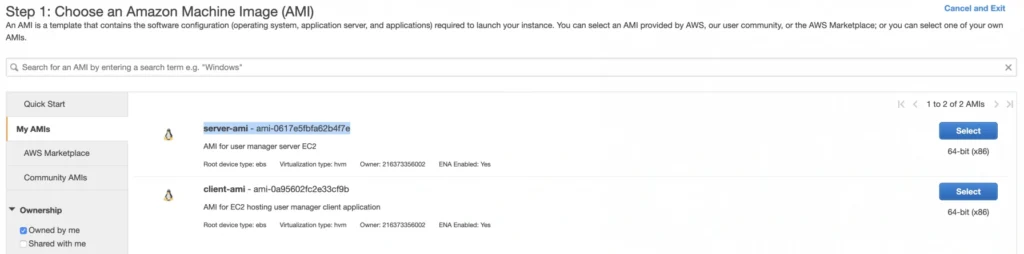

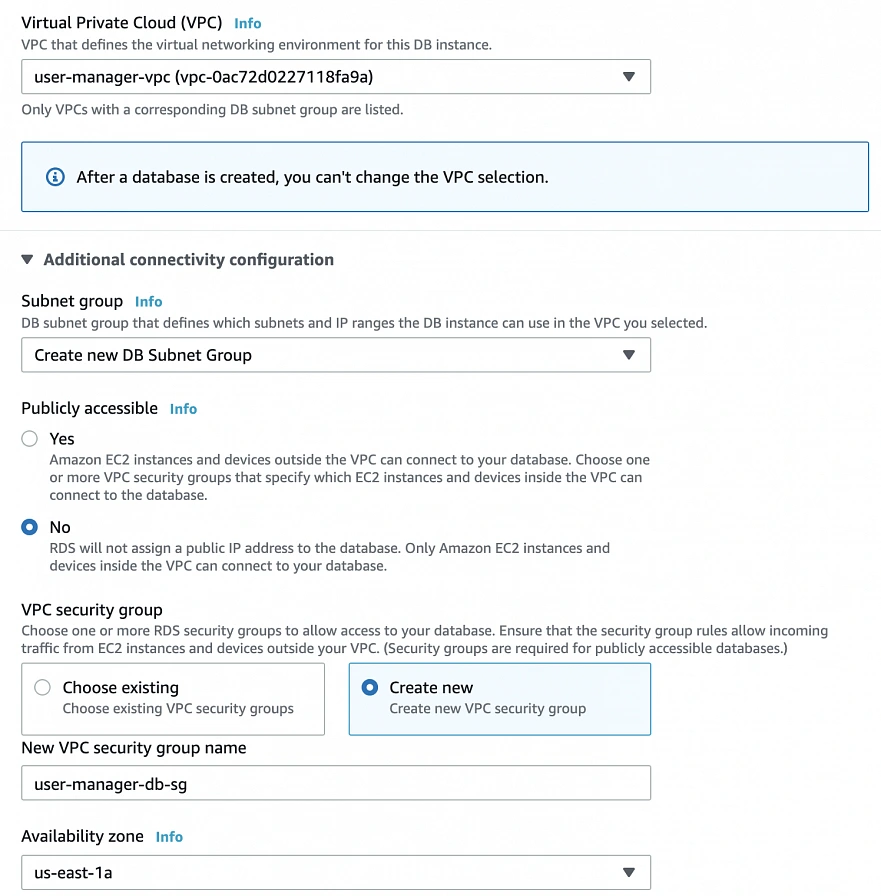

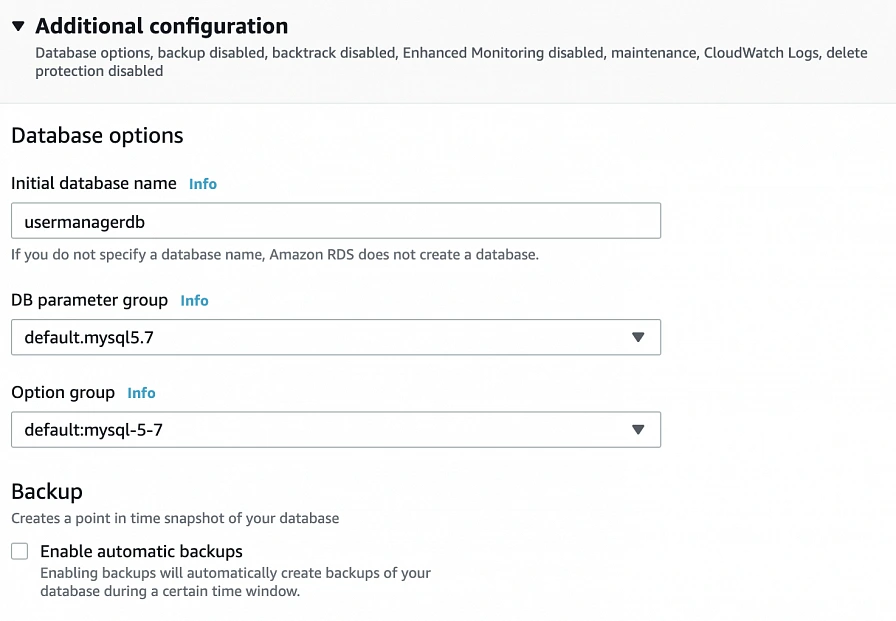

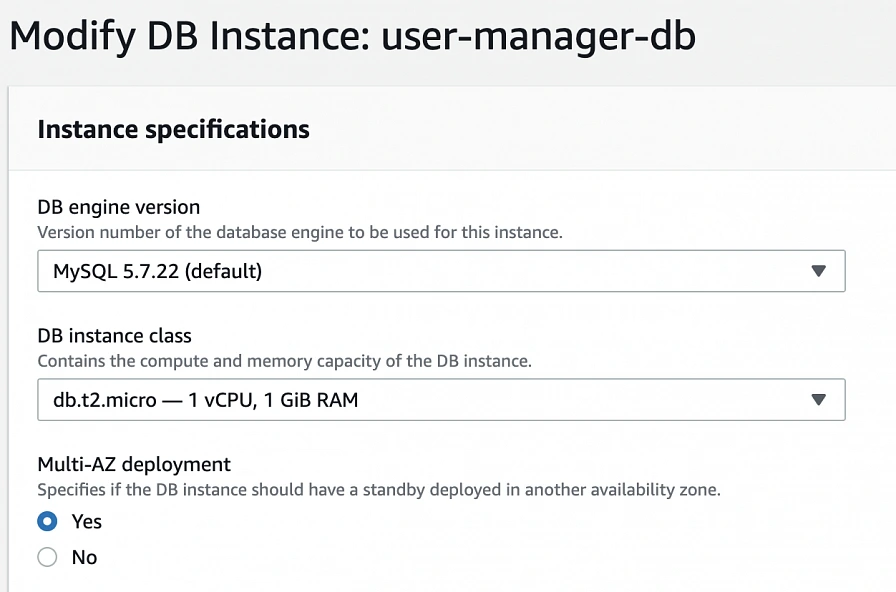

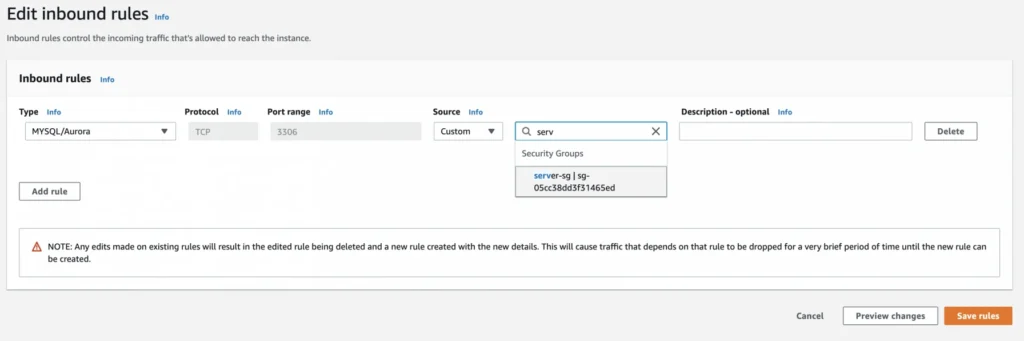

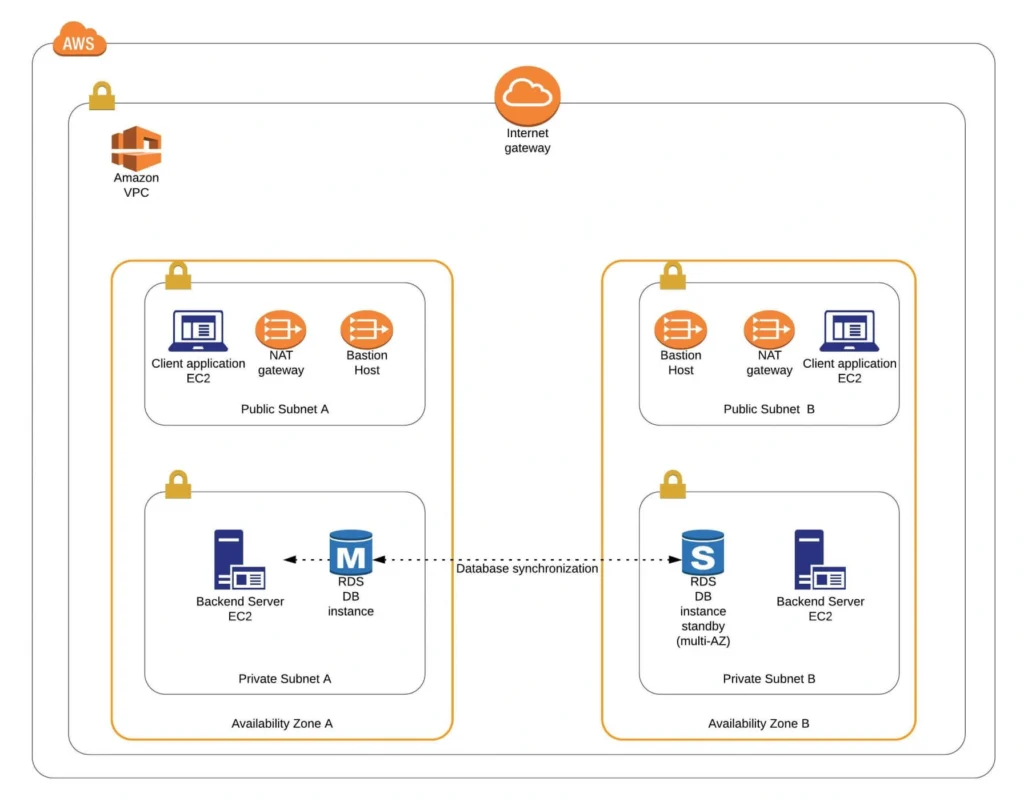

The path towards enterprise level AWS infrastructure – architecture scaffolding

This article is the first one of the mini-series which will walk you through the process of creating an enterprise-level AWS infrastructure. By the end of this series, we will have created an infrastructure comprising a VPC with four subnets in two different availability zones with a client application, backend server, and a database deployed inside. Our architecture will be able to provide the scalability and availability required by modern cloud systems. Along the way, we will explain the basic concepts and components of the Amazon Web Services platform. In this article, we will talk about the scaffolding of our architecture, to be specific: a Virtual Private Cloud (VPC), Subnets, Elastic IP Addresses, NAT gateways, and route tables. The whole series comprises:

- Part 1 - Architecture Scaffolding (VPC, Subnets, Elastic IP, NAT)

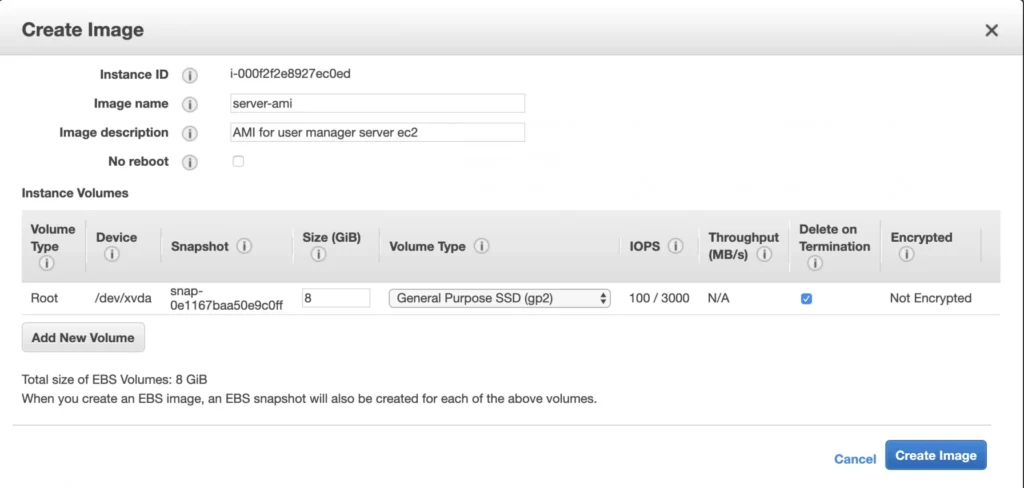

- Part 2 - The Path Towards Enterprise Level AWS Infrastructure – EC2, AMI, Bastion Host, RDS

- Part 3 - Load Balancing and Application Deployment (Elastic Load Balancer)

The cloud, as once explained in the Silicon Valley tv-series, is “this tiny little area which is becoming super important and in many ways is the future of computing.” This would be accurate, except for the fact that it is not so tiny and the future is now. So let’s delve into the universe of cloud computing and learn how to build highly available, secure and fault-tolerant cloud systems, how to utilize the AWS platform for that, what are its key components and how to deploy your applications on AWS.

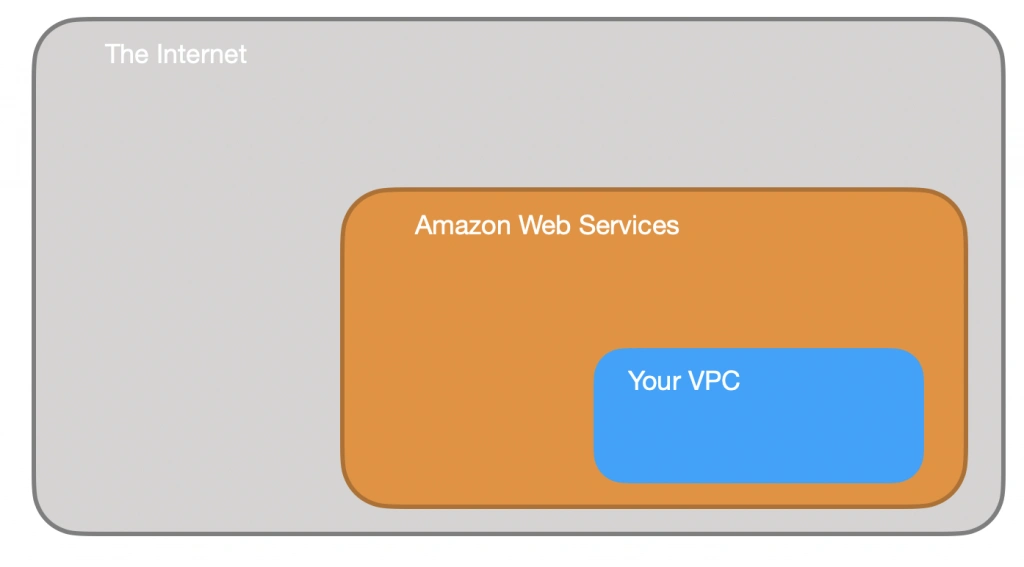

Cloud computing

Over the last few years, the IT industry has undergone a major transformation in which most global enterprises moved away from their traditional IT infrastructures towards the cloud. The main reason behind that is the flexibility and scalability which come with cloud computing, understood as the provisioning of computing services such as servers, storage, databases, networking, analytic services, etc. over the Internet (the cloud). In this model, organizations only pay for the cloud resources they are actually using and do not need to manage the physical infrastructure behind it. There are many cloud platform providers on the market, with the major players being Amazon Web Services (AWS), Microsoft Azure and Google Cloud. This article focuses on services available on AWS, but bear in mind that most of the concepts explained here will have their equivalents on the other platforms.

Infrastructure overview

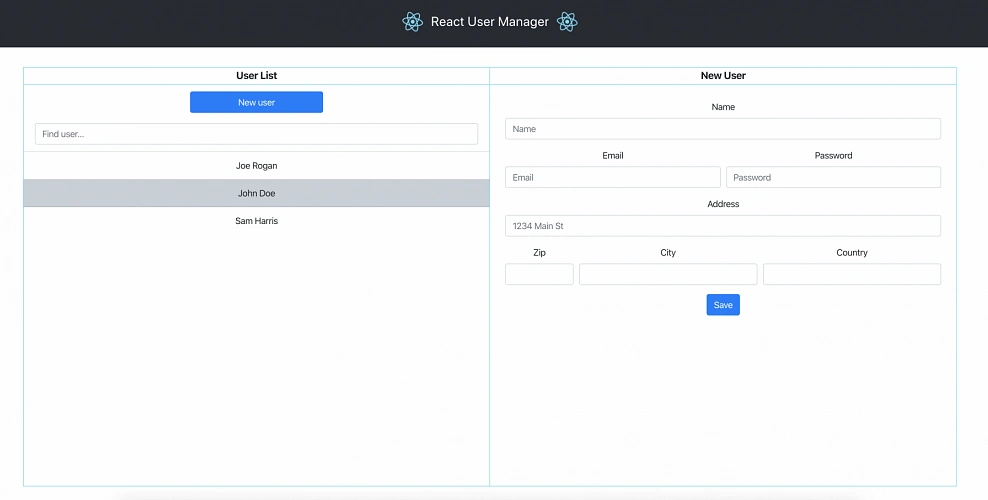

Let’s start with what we will build throughout this series. The goal is to create a real-life, enterprise-level AWS infrastructure that will be able to host a user management system consisting of a React.js web application, Java Spring Boot server and a relational database.

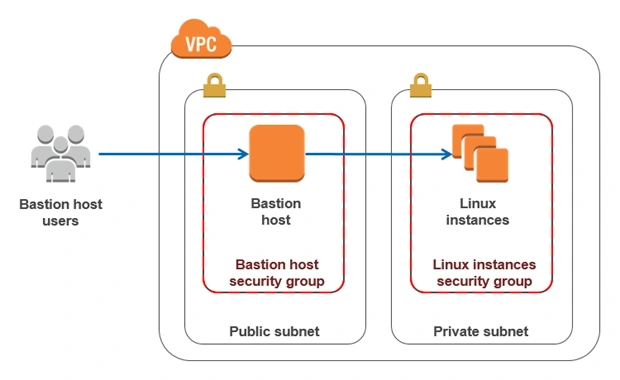

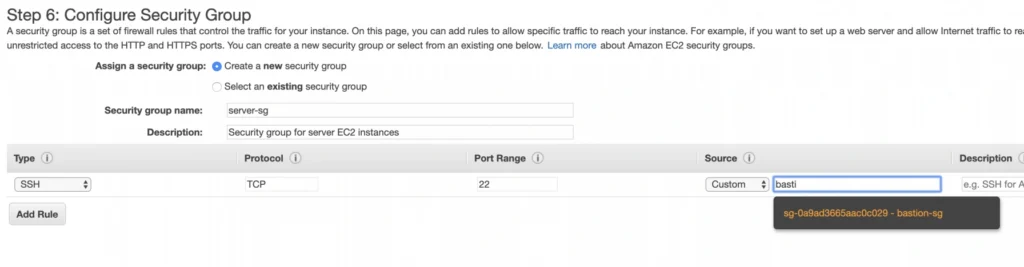

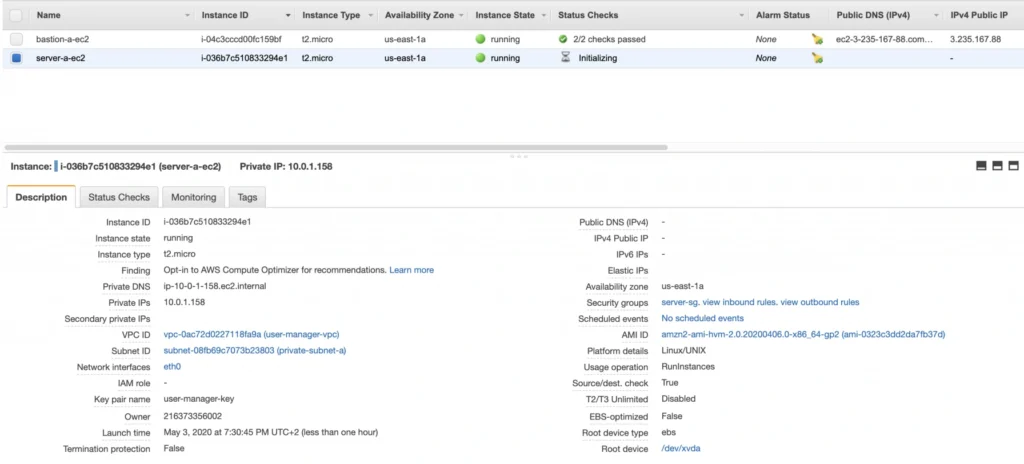

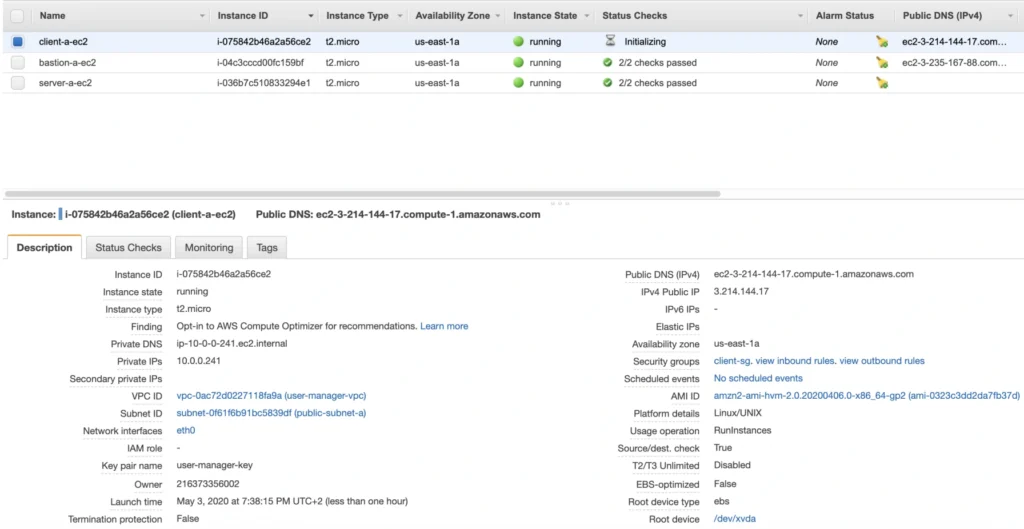

The architecture diagram is shown in figure 1. It comprises a VPC with four subnets (2 public and 2 private) distributed across two different availability zones. The public subnets host a client application, a NAT gateway and a Bastion Host (more on that later), while our private subnets contain backend server and database instances. The infrastructure also includes an Internet Gateway to enable access to the Internet from our VPC and a Load Balancer. The reasoning behind placing the backend server and database in private subnets is to protect those instances from being directly exposed to the Internet as they may contain sensitive data. Instead, they will only have private IP addresses and be behind a NAT gateway and a public-facing Elastic Load Balancer. The presented infrastructure provides a high level of scalability and availability through the introduction of redundancy, with instances deployed in two different availability zones, and the use of auto-scaling groups which provide automatic scaling and health management of the system.

Figure 2 presents the view of the user management web application system we will host on AWS:

The applications can be found on GitHub.

In this part of the article series, we will focus on the scaffolding of the infrastructure, namely allocating elastic IP addresses, setting up the VPC, creating the subnets, configuring NAT gateways and route tables.

AWS Free Tier Note

AWS provides its new users with a 12-month free tier, which gives customers the ability to use their services up to specified limits free of charge. Those limits include 750 hours per month of t2.micro size EC2 instances, 5GB of Amazon S3 storage, 750 hours of Amazon RDS per month, and much more. In the AWS Management Console, Amazon usually indicates which resource choices are part of the free tier, and throughout this series, we will stick to those. If you want to be sure you will not exceed the free tier limits, remember to stop your EC2 and RDS instances whenever you finish working on AWS. You can also set up a billing alert that will notify you if you exceed the specified limit.

AWS theory

1. VPC

The first step of our journey into the wide world of the AWS infrastructure is getting to know Amazon Virtual Private Cloud (VPC). VPC allows developers to create a virtual network in which they can launch resources and have them logically isolated from other VPCs and the outside world. Within the VPC your resources have private IP addresses with which they can communicate with one another. You can control the access to all those resources inside the VPC and route outgoing traffic as you like.

Access to the VPC is configured with the use of several key structures:

Security groups - They basically work like mini firewalls defining allowed incoming and outgoing IP addresses and ports. They can be attached at the instance level, be shared among many instances and provide the possibility to allow access from other security groups instead of IPs.

Routing tables - Routing tables are responsible for determining where the network traffic from a subnet or gateway should be directed. There is a main route table associated with your VPC, and you can define custom routing tables for your subnets and gateways.

Network Access Control List (Network ACL) - It acts as an IP filtering table for incoming and outgoing traffic and can be used as an additional security layer on top of security groups. Network ACLs act similarly to security groups, but instead of applying rules at the instance level, they apply them at the subnet level.
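To illustrate how a routing table decides where traffic goes, the Python sketch below picks the most specific route (longest prefix) that matches a destination address. The CIDR block and the target names in `PRIVATE_ROUTES` are made-up examples of what a private subnet's table might contain, and `resolve_target` is a hypothetical helper, not an AWS API.

```python
import ipaddress

# Hypothetical route table of a private subnet: local traffic stays in the VPC,
# everything else leaves through a NAT gateway (target names are made up).
PRIVATE_ROUTES = {
    "10.0.0.0/22": "local",
    "0.0.0.0/0": "nat-gateway",
}


def resolve_target(routes, destination):
    """Return the target of the most specific route matching the destination."""
    address = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in routes.items()
        if address in ipaddress.ip_network(cidr)
    ]
    if not matches:
        return None  # no route: the traffic cannot be delivered
    # The longest prefix is the most specific match.
    return max(matches, key=lambda item: item[0].prefixlen)[1]
```

With this table, an address inside the VPC range resolves to `local`, while any other address falls through to the catch-all `0.0.0.0/0` route.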

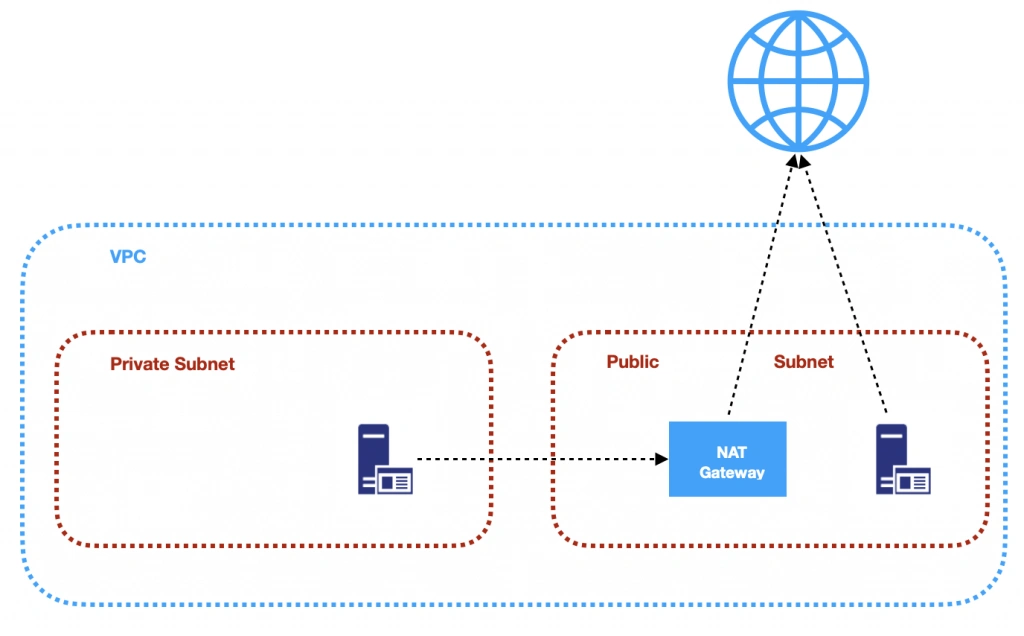

2. Subnets

Instances cannot be launched directly into a VPC. They need to live inside subnets. A Subnet is an additional isolated area that has its own CIDR block, routing table, and Network Access Control List. Subnets allow you to create different behaviors in the same VPC. For instance, you can create a public subnet that can be accessed from and has access to the public internet, and a private subnet that is not accessible from the Internet and must go through a NAT (Network Address Translation) gateway in order to access the outside world.

3. NAT (Network Address Translation) gateway

NAT Gateways are used in order to enable instances located in private subnets to connect to the Internet or other AWS services, while still preventing direct connections from the Internet to those instances. NAT may be useful for example when you need to install or upgrade software or OS on EC2 instances running in private subnets. AWS provides a NAT gateway managed service which requires very little administrative effort. We will use it while setting up our infrastructure.

4. Elastic IP

AWS provides a concept of Elastic IP Address which is used to facilitate the management of dynamic cloud computing. An Elastic IP Address is a public, static IP address that is associated with your AWS account and can be easily allocated to one of your EC2 instances. The idea behind it is that the address is not tied to a particular instance; instead, in the case of a failure in the system, the elasticity of the address allows you to swiftly remap it to another healthy instance in your account.

5. AWS Region

AWS Regions are geographical areas in which AWS has data centers. Regions are divided into Availability Zones (AZ) which are independent data centers placed relatively close to each other. Availability Zones are used to provide redundancy and data replication. The choice of an AWS region for your infrastructure should take into account factors such as:

- Proximity - you would usually want your application to be deployed close to your region of operation for latency or regulatory reasons.

- Cost - different regions come with different pricing.

- Feature selection - not all services are available in all regions, this is especially the case for newly introduced features.

- Number of availability zones - all regions have at least 2 AZs, but some of them have more. Depending on your needs, this may be a key factor.

Practice

AWS Region

Let’s commence with a selection of the AWS region to operate in. In the top right corner of the AWS Management Console, you can choose a region. At this point, it does not really matter which region you choose (as discussed earlier, it may matter for your organization). However, it is important to note that you will always only view resources launched in the currently selected region.

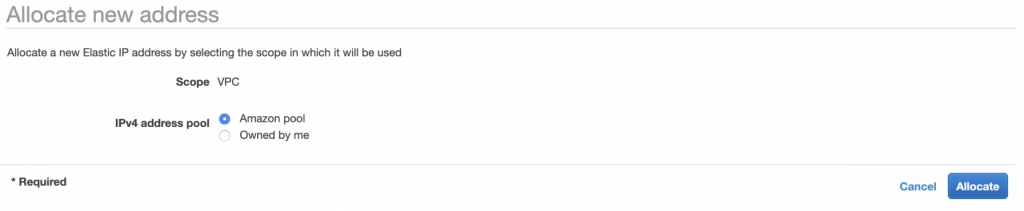

Elastic IP

The next step is to allocate an Elastic IP address. Go to the AWS Management Console and find the VPC service. In the left menu bar, under the Virtual Private Cloud section, you should see the Elastic IPs link. There you can allocate a new address, either one you own or one from Amazon’s pool of available addresses.

Availability Zone A configuration

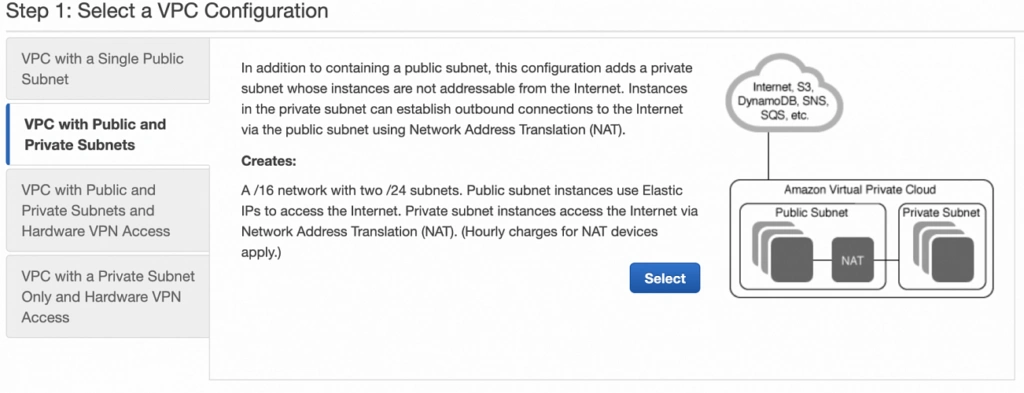

Next, let’s create our VPC and subnets. For now, we are going to set up only Availability Zone A; we will work on High Availability once the VPC has been created. Go to the VPC service dashboard again and click the Launch VPC Wizard button. You will be taken to a screen where you can choose what kind of VPC configuration you want Amazon to set up for you. To match our target architecture as closely as possible, we are going to choose VPC with Public and Private Subnets.

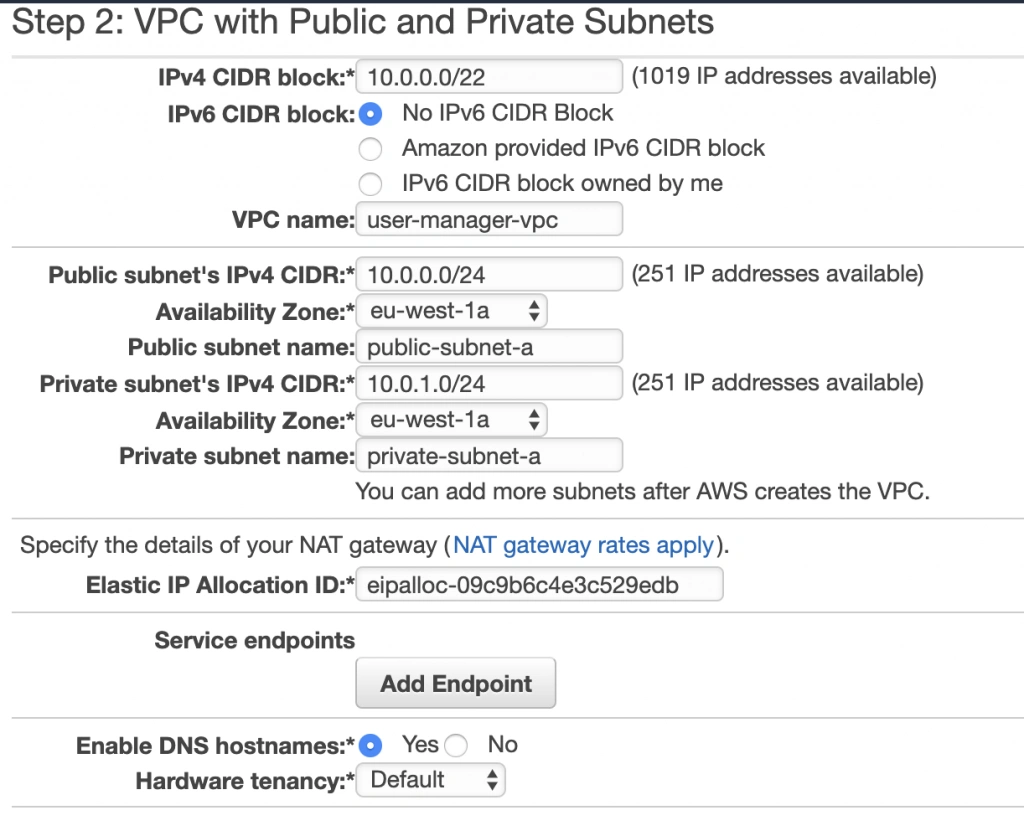

The next screen allows you to set up your VPC configuration details such as:

- name,

- CIDR block,

- details of the subnets:

  - name,

  - IP address range - a subset of the VPC CIDR range,

  - availability zone.

As shown in the architecture diagram (fig. 1), we need 4 subnets in 2 different availability zones. So let’s set our VPC CIDR to 10.0.0.0/22, and have our subnets as follows:

- public-subnet-a: 10.0.0.0/24 (zone A)

- private-subnet-a: 10.0.1.0/24 (zone A)

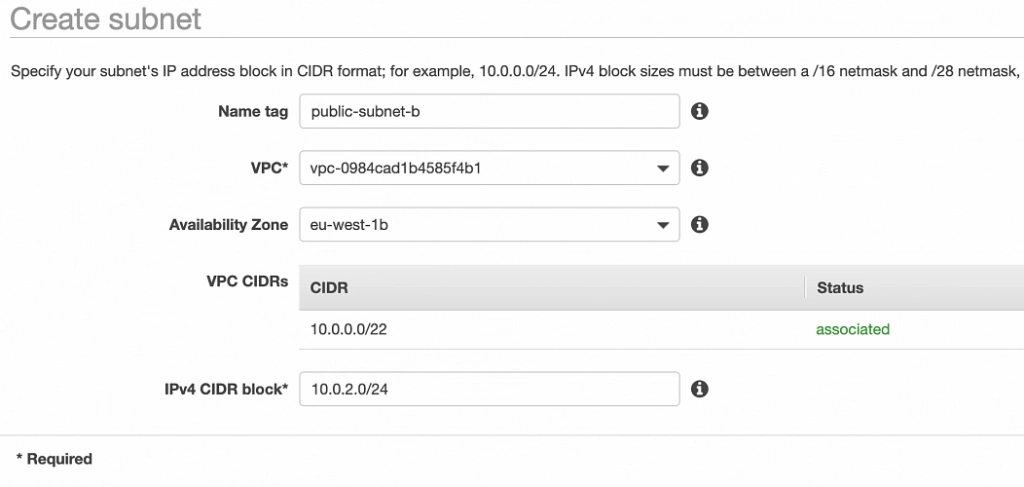

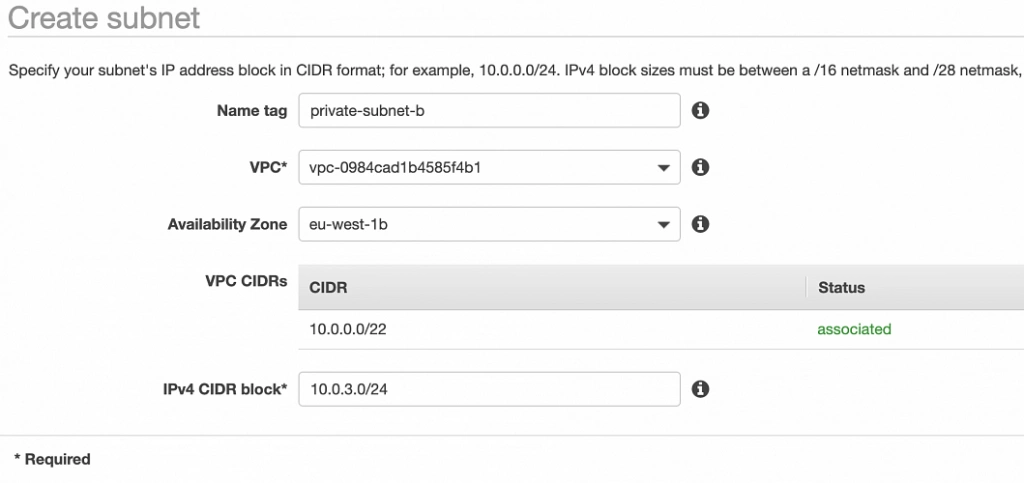

- public-subnet-b: 10.0.2.0/24 (zone B)

- private-subnet-b: 10.0.3.0/24 (zone B)
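To double-check an address plan like the one above before typing it into the wizard, a few lines of arithmetic are enough. The following is a minimal sketch, not part of the original setup: the class name CidrPlan and its helper methods are made up for illustration, and it only handles IPv4 blocks in the usual a.b.c.d/n notation.

```java
import java.util.Arrays;
import java.util.List;

public class CidrPlan {

    // Parses dotted-quad IPv4 text such as "10.0.1.0" into an unsigned 32-bit value stored in a long.
    public static long toLong(String ip) {
        long value = 0;
        for (String part : ip.split("\\.")) {
            value = (value << 8) | Integer.parseInt(part);
        }
        return value;
    }

    // First address covered by a CIDR block such as "10.0.0.0/22".
    public static long firstAddress(String cidr) {
        String[] parts = cidr.split("/");
        int prefix = Integer.parseInt(parts[1]);
        long mask = (0xFFFFFFFFL << (32 - prefix)) & 0xFFFFFFFFL;
        return toLong(parts[0]) & mask;
    }

    // Last address covered by a CIDR block.
    public static long lastAddress(String cidr) {
        int prefix = Integer.parseInt(cidr.split("/")[1]);
        long size = 1L << (32 - prefix);
        return firstAddress(cidr) + size - 1;
    }

    // True when every address of 'inner' also belongs to 'outer'.
    public static boolean contains(String outer, String inner) {
        return firstAddress(outer) <= firstAddress(inner)
                && lastAddress(inner) <= lastAddress(outer);
    }

    // True when the two blocks share at least one address.
    public static boolean overlaps(String a, String b) {
        return firstAddress(a) <= lastAddress(b) && firstAddress(b) <= lastAddress(a);
    }

    public static void main(String[] args) {
        String vpc = "10.0.0.0/22";
        List<String> subnets = Arrays.asList(
                "10.0.0.0/24", "10.0.1.0/24", "10.0.2.0/24", "10.0.3.0/24");

        for (String subnet : subnets) {
            System.out.println(subnet + " inside " + vpc + ": " + contains(vpc, subnet));
        }
        // Every pair of subnets must be disjoint.
        for (int i = 0; i < subnets.size(); i++) {
            for (int j = i + 1; j < subnets.size(); j++) {
                System.out.println(subnets.get(i) + " overlaps " + subnets.get(j) + ": "
                        + overlaps(subnets.get(i), subnets.get(j)));
            }
        }
    }
}
```

A /22 block spans 1024 addresses (10.0.0.0 to 10.0.3.255), so the four /24 subnets of 256 addresses each fill it exactly, with no room left for a fifth subnet.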

Set everything up as shown in figure 7. The important aspects to note here are that the public and private subnets are placed in the same availability zone, and that Amazon will automatically set up a NAT gateway for us, for which we just need to specify our previously allocated Elastic IP address. Now click the Create VPC button, and Amazon will configure your VPC.

NAT gateway

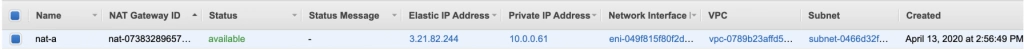

When the creation of the VPC is over, go to the NAT Gateways section, where you should see the gateway created for you by AWS. To make it more recognizable, let us edit its Name tag to nat-a.

Route tables

Amazon also configured Route Tables for your VPC. Go to the Route Tables section, where you should find two route tables associated with your VPC. One of them is the main route table of your VPC, and the second one is currently associated with your public-subnet-a. We will modify that setup a bit.

First, select the main route table, go to the Routes tab and click Edit routes. There are currently two entries. The first one means that any IP address within the local VPC CIDR should resolve locally, and we shouldn’t modify it. The second one points to the NAT gateway; we will change its target to the Internet Gateway of our VPC so that outgoing traffic can reach the outside world.

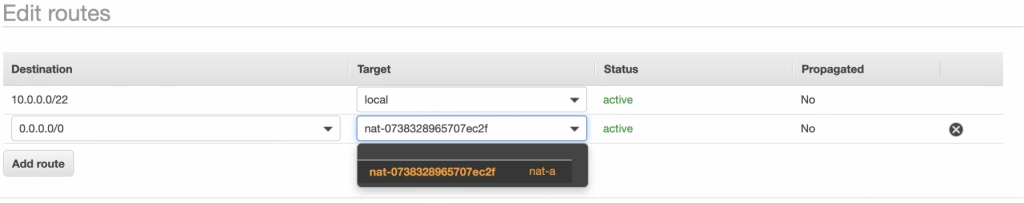

Next, go to the Subnet Associations tab and associate the main route table with public-subnet-a. You can also edit its Name tag to main-rt. Then select the second route table associated with your VPC and edit its routes to route every outgoing Internet request to the nat-a gateway, as shown in figure 10. Associate this route table with private-subnet-a and edit its Name tag to private-a-rt.
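To see why the local entry and the default entry can live side by side, it helps to remember that a route table picks the most specific matching route (the longest prefix). The sketch below models main-rt and private-a-rt as plain maps and resolves a destination against them. It is only an illustration: the class RouteLookup, the target label "igw" and the sample addresses are assumptions, not values taken from the AWS console.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class RouteLookup {

    // Parses dotted-quad IPv4 text into an unsigned 32-bit value stored in a long.
    public static long toLong(String ip) {
        long value = 0;
        for (String part : ip.split("\\.")) {
            value = (value << 8) | Integer.parseInt(part);
        }
        return value;
    }

    // True when the address falls inside the CIDR block.
    public static boolean matches(String cidr, String ip) {
        String[] parts = cidr.split("/");
        int prefix = Integer.parseInt(parts[1]);
        long mask = (0xFFFFFFFFL << (32 - prefix)) & 0xFFFFFFFFL;
        return (toLong(parts[0]) & mask) == (toLong(ip) & mask);
    }

    // Returns the target of the most specific matching route, or null if nothing matches.
    public static String resolve(Map<String, String> routeTable, String destinationIp) {
        String bestTarget = null;
        int bestPrefix = -1;
        for (Map.Entry<String, String> route : routeTable.entrySet()) {
            int prefix = Integer.parseInt(route.getKey().split("/")[1]);
            if (prefix > bestPrefix && matches(route.getKey(), destinationIp)) {
                bestPrefix = prefix;
                bestTarget = route.getValue();
            }
        }
        return bestTarget;
    }

    // main-rt: local traffic stays in the VPC, everything else goes to the Internet Gateway.
    public static Map<String, String> mainRt() {
        Map<String, String> routes = new LinkedHashMap<>();
        routes.put("10.0.0.0/22", "local");
        routes.put("0.0.0.0/0", "igw"); // placeholder label for the Internet Gateway
        return routes;
    }

    // private-a-rt: local traffic stays in the VPC, everything else goes through nat-a.
    public static Map<String, String> privateARt() {
        Map<String, String> routes = new LinkedHashMap<>();
        routes.put("10.0.0.0/22", "local");
        routes.put("0.0.0.0/0", "nat-a");
        return routes;
    }

    public static void main(String[] args) {
        System.out.println(resolve(mainRt(), "10.0.1.15"));        // address inside the VPC
        System.out.println(resolve(mainRt(), "203.0.113.10"));     // address outside the VPC
        System.out.println(resolve(privateARt(), "203.0.113.10")); // same address, private subnet
    }
}
```

Both tables match 0.0.0.0/0 for every destination, but the /22 entry wins for addresses inside the VPC because its prefix is longer.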

Availability Zone B Configuration

Well done, availability zone A is configured. To provide High Availability, we need to set everything up in the second availability zone as well. The first step is the creation of the subnets. Go to the VPC dashboard in the AWS Management Console again and find the Subnets section in the left menu bar. Now click the Create subnet button and configure everything as shown in figures 11 and 12.

public-subnet-b

private-subnet-b

NAT gateway

For availability zone B we need to create the NAT gateway manually. Find the NAT Gateways section in the left menu bar of the VPC dashboard and click Create NAT Gateway. Select public-subnet-b, allocate an Elastic IP address and add a Name tag with the value nat-b.

Route tables

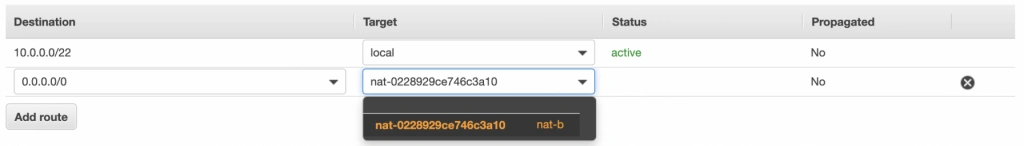

The last step is the configuration of the route tables for the subnets in availability zone B. Go to the Route Tables section again. Our public-subnet-b is going to have the same routing rules as public-subnet-a, so let’s add a new association to our main-rt table for public-subnet-b. Then click the Create route table button, name it private-b-rt, choose our VPC and click Create. Next, select the newly created table, go to the Routes tab and edit the routes by analogy with the private-a-rt table, but instead of directing every outgoing request to the nat-a gateway, route it to nat-b (fig. 13). Finally, associate private-b-rt with private-subnet-b, just as private-a-rt is associated with private-subnet-a.

In the end, you should have three route tables associated with your VPC as shown in figure 14.
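The resulting layout can be written down as a handful of lookups, which makes it easy to check the property we care about for High Availability: each private subnet leaves the VPC through a NAT gateway in its own availability zone. This is a minimal sketch under stated assumptions: the class VpcLayoutCheck and the label "igw" are invented for illustration, and the placement of nat-a in public-subnet-a is assumed from the wizard setup.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class VpcLayoutCheck {

    // Subnet -> availability zone, as configured in the walkthrough.
    public static Map<String, String> subnetZones() {
        Map<String, String> zones = new LinkedHashMap<>();
        zones.put("public-subnet-a", "A");
        zones.put("private-subnet-a", "A");
        zones.put("public-subnet-b", "B");
        zones.put("private-subnet-b", "B");
        return zones;
    }

    // Subnet -> route table it is associated with.
    public static Map<String, String> routeTableOf() {
        Map<String, String> tables = new LinkedHashMap<>();
        tables.put("public-subnet-a", "main-rt");
        tables.put("public-subnet-b", "main-rt");
        tables.put("private-subnet-a", "private-a-rt");
        tables.put("private-subnet-b", "private-b-rt");
        return tables;
    }

    // Route table -> target of its 0.0.0.0/0 route ("igw" is a placeholder label).
    public static Map<String, String> defaultTargets() {
        Map<String, String> targets = new LinkedHashMap<>();
        targets.put("main-rt", "igw");
        targets.put("private-a-rt", "nat-a");
        targets.put("private-b-rt", "nat-b");
        return targets;
    }

    // NAT gateway -> public subnet hosting it (the nat-a placement is an assumption).
    public static Map<String, String> natHosts() {
        Map<String, String> hosts = new LinkedHashMap<>();
        hosts.put("nat-a", "public-subnet-a");
        hosts.put("nat-b", "public-subnet-b");
        return hosts;
    }

    // Zone of the NAT gateway a subnet uses, or null when its default route is not a NAT gateway.
    public static String natZoneFor(String subnet) {
        String target = defaultTargets().get(routeTableOf().get(subnet));
        String hostSubnet = natHosts().get(target);
        return hostSubnet == null ? null : subnetZones().get(hostSubnet);
    }

    // True when the subnet's outgoing traffic uses a NAT gateway in the subnet's own zone.
    public static boolean usesNatInOwnZone(String subnet) {
        String natZone = natZoneFor(subnet);
        return natZone != null && natZone.equals(subnetZones().get(subnet));
    }

    public static void main(String[] args) {
        for (String subnet : new String[] {"private-subnet-a", "private-subnet-b"}) {
            System.out.println(subnet + " uses a NAT gateway in its own zone: "
                    + usesNatInOwnZone(subnet));
        }
    }
}
```

If private-b-rt pointed at nat-a instead, the check would fail for private-subnet-b, and an outage of zone A would also cut off outgoing traffic from zone B.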

Summary

That’s it, the scaffolding of our VPC is ready. The diagram in fig. 15 presents a view of the created infrastructure. It is now ready for the creation of the required EC2 instances and Bastion Hosts, the configuration of an RDS database and the deployment of our applications, which we will do in the next part of the series.

Reactive service to service communication with RSocket – abstraction over RSocket

If you are familiar with the previous articles of this series (Introduction, Load balancing & Resumability), you have probably noticed that RSocket provides a low-level API. We can operate directly on the methods from the interaction model and send frames back and forth without any constraints. This gives us a lot of freedom and control, but it may introduce extra issues, especially related to the contract between microservices. To solve these problems, we can use RSocket through a generic abstraction layer. There are two solutions available: the RSocket RPC module and the integration with Spring Framework. In the following sections, we will discuss them briefly.

RPC over RSocket

Keeping the contract between microservices clean and well-defined is one of the crucial concerns in distributed systems. To ensure that applications can exchange data, we can leverage Remote Procedure Calls. Fortunately, RSocket has a dedicated RPC module which uses Protobuf as a serialization mechanism, so we can benefit from RSocket performance and keep the contract in check at the same time. By combining generated services and objects with RSocket acceptors, we can spin up a fully operational RPC server and just as easily consume it using an RPC client.

First, we need the definition of the service and the objects. In the example below, we create a simple CustomerService with four endpoints, each of which represents a different method from the interaction model.

    syntax = "proto3";

    option java_multiple_files = true;
    option java_outer_classname = "ServiceProto";

    package com.rsocket.rpc;

    import "google/protobuf/empty.proto";

    message SingleCustomerRequest {
        string id = 1;
    }

    message MultipleCustomersRequest {
        repeated string ids = 1;
    }

    message CustomerResponse {
        string id = 1;
        string name = 2;
    }

    service CustomerService {
        rpc getCustomer(SingleCustomerRequest) returns (CustomerResponse) {} //request-response
        rpc getCustomers(MultipleCustomersRequest) returns (stream CustomerResponse) {} //request-stream
        rpc deleteCustomer(SingleCustomerRequest) returns (google.protobuf.Empty) {} //fire'n'forget
        rpc customerChannel(stream MultipleCustomersRequest) returns (stream CustomerResponse) {} //request-channel
    }

In the second step, we have to generate classes from the proto file presented above. To do that, we can configure the Protobuf Gradle plugin as follows:

    protobuf {
        protoc {
            artifact = 'com.google.protobuf:protoc:3.6.1'
        }
        generatedFilesBaseDir = "${projectDir}/build/generated-sources/"
        plugins {
            rsocketRpc {
                artifact = 'io.rsocket.rpc:rsocket-rpc-protobuf:0.2.17'
            }
        }
        generateProtoTasks {
            all()*.plugins {
                rsocketRpc {}
            }
        }
    }

As a result of the generateProto task, we should obtain the service interface, service client and service server classes, in this case CustomerService, CustomerServiceClient and CustomerServiceServer respectively. In the next step, we have to implement the business logic of the generated service (CustomerService):

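    import com.google.protobuf.Empty;
    import io.netty.buffer.ByteBuf;
    import lombok.extern.slf4j.Slf4j;
    import org.reactivestreams.Publisher;
    import reactor.core.publisher.Flux;
    import reactor.core.publisher.Mono;

    import java.time.Duration;
    import java.util.Arrays;
    import java.util.List;
    import java.util.Random;
    import java.util.UUID;

    // The generated classes (CustomerService, CustomerResponse, ...) are assumed to be importable from here.
    @Slf4j
    public class DefaultCustomerService implements CustomerService {

        private static final List<String> RANDOM_NAMES = Arrays.asList("Andrew", "Joe", "Matt", "Rachel", "Robin", "Jack");

        // request-response: a single customer for a single request
        @Override
        public Mono<CustomerResponse> getCustomer(SingleCustomerRequest message, ByteBuf metadata) {
            log.info("Received 'getCustomer' request [{}]", message);
            return Mono.just(CustomerResponse.newBuilder()
                    .setId(message.getId())
                    .setName(getRandomName())
                    .build());
        }

        // request-stream: emits a new random customer every second
        @Override
        public Flux<CustomerResponse> getCustomers(MultipleCustomersRequest message, ByteBuf metadata) {
            return Flux.interval(Duration.ofMillis(1000))
                    .map(time -> CustomerResponse.newBuilder()
                            .setId(UUID.randomUUID().toString())
                            .setName(getRandomName())
                            .build());
        }

        @Override
        public Mono<Empty> deleteCustomer(SingleCustomerRequest message, ByteBuf metadata) {
            log.info("Received 'deleteCustomer' request [{}]", message);
            return Mono.just(Empty.newBuilder().build());
        }

        // request-channel: answers every incoming message with a random customer
        @Override
        public Flux<CustomerResponse> customerChannel(Publisher<MultipleCustomersRequest> messages, ByteBuf metadata) {
            return Flux.from(messages)
                    .doOnNext(message -> log.info("Received 'customerChannel' request [{}]", message))
                    .map(message -> CustomerResponse.newBuilder()
                            .setId(UUID.randomUUID().toString())
                            .setName(getRandomName())
                            .build());
        }

        private String getRandomName() {
            // nextInt(bound) excludes the bound, so the list size covers every index
            return RANDOM_NAMES.get(new Random().nextInt(RANDOM_NAMES.size()));
        }
    }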
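A detail worth checking in helpers such as getRandomName is the bound passed to Random.nextInt: the bound is exclusive, so a bound of size() - 1 can never select the last element of the list. The sketch below makes this visible with the same list of names. The class RandomNamePicker, its methods and the fixed seed are hypothetical and serve only as an illustration.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Random;
import java.util.Set;

public class RandomNamePicker {

    public static final List<String> NAMES =
            Arrays.asList("Andrew", "Joe", "Matt", "Rachel", "Robin", "Jack");

    // Picks a name uniformly: nextInt(n) returns a value from 0 (inclusive) to n (exclusive).
    public static String pick(Random random) {
        return NAMES.get(random.nextInt(NAMES.size()));
    }

    // Collects the distinct names produced over many draws with the given exclusive bound.
    public static Set<String> namesSeen(int bound, int draws, long seed) {
        Random random = new Random(seed);
        Set<String> seen = new HashSet<>();
        for (int i = 0; i < draws; i++) {
            seen.add(NAMES.get(random.nextInt(bound)));
        }
        return seen;
    }

    public static void main(String[] args) {
        // With bound size() - 1 the last name ("Jack") is never produced.
        System.out.println(namesSeen(NAMES.size() - 1, 10_000, 42L));
        // With bound size() every name can appear.
        System.out.println(namesSeen(NAMES.size(), 10_000, 42L));
    }
}
```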

Finally, we can expose the service via RSocket. To achieve that, we have to create an instance of the service server (CustomerServiceServer) and inject an implementation of our service (DefaultCustomerService). Then we are ready to create an RSocket acceptor instance. The API provides RequestHandlingRSocket, which wraps the service server instance and translates the endpoints defined in the contract to the methods available in the RSocket interaction model.

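    import io.rsocket.RSocketFactory;
    import io.rsocket.rpc.rsocket.RequestHandlingRSocket;
    import io.rsocket.transport.netty.server.TcpServerTransport;
    import reactor.core.publisher.Mono;

    import java.util.Optional;

    public class Server {

        public static void main(String[] args) throws InterruptedException {
            CustomerServiceServer serviceServer = new CustomerServiceServer(new DefaultCustomerService(), Optional.empty(), Optional.empty());

            RSocketFactory
                    .receive()
                    // every incoming connection is handled by the generated service server
                    .acceptor((setup, sendingSocket) -> Mono.just(
                            new RequestHandlingRSocket(serviceServer)
                    ))
                    .transport(TcpServerTransport.create(7000))
                    .start()
                    .block();

            // keep the JVM alive so that the server can accept requests
            Thread.currentThread().join();
        }
    }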
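One way to picture the translation performed by the RPC layer is a lookup from a service name and a method name to the handler that implements it. The sketch below is a conceptual toy, not the library's implementation: ToyRpcRouter, its string payloads and the "service/method" key format are all invented for illustration, and it ignores streaming, metadata encoding and back-pressure entirely.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

public class ToyRpcRouter {

    // Handlers keyed by "service/method"; real payloads would be serialized messages, not strings.
    private final Map<String, Function<String, String>> handlers = new HashMap<>();

    // Registers the handler that implements one endpoint of the contract.
    public void register(String service, String method, Function<String, String> handler) {
        handlers.put(service + "/" + method, handler);
    }

    // Routes an incoming request to the matching handler and returns its response.
    public String dispatch(String service, String method, String payload) {
        Function<String, String> handler = handlers.get(service + "/" + method);
        if (handler == null) {
            throw new IllegalArgumentException("No handler for " + service + "/" + method);
        }
        return handler.apply(payload);
    }

    public static void main(String[] args) {
        ToyRpcRouter router = new ToyRpcRouter();
        router.register("CustomerService", "getCustomer", id -> "customer-" + id);
        System.out.println(router.dispatch("CustomerService", "getCustomer", "42"));
    }
}
```

The benefit of such a layer is that callers and handlers agree on names defined in one contract, instead of each side interpreting raw frames on its own.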

On the client side, the implementation is pretty straightforward. All we need to do is create the RSocket instance and inject it into the service client via the constructor; then we are ready to go.

    import io.rsocket.RSocket;
    import io.rsocket.RSocketFactory;
    import io.rsocket.transport.netty.client.TcpClientTransport;
    import lombok.extern.slf4j.Slf4j;

    import java.util.UUID;

    @Slf4j
    public class Client {

        public static void main(String[] args) {
            RSocket rSocket = RSocketFactory
                    .connect()
                    .transport(TcpClientTransport.create(7000))
                    .start()
                    .block();

            CustomerServiceClient customerServiceClient = new CustomerServiceClient(rSocket);

            customerServiceClient.deleteCustomer(SingleCustomerRequest.newBuilder()
                    .setId(UUID.randomUUID().toString()).build())
                    .block();

            customerServiceClient.getCustomer(SingleCustomerRequest.newBuilder()
                    .setId(UUID.randomUUID().toString()).build())
                    .doOnNext(response -> log.info("Received response for 'getCustomer': [{}]", response))
                    .block();

            // non-blocking subscription: responses keep arriving while the channel call below blocks
            customerServiceClient.getCustomers(MultipleCustomersRequest.newBuilder()
                    .addIds(UUID.randomUUID().toString()).build())
                    .doOnNext(response -> log.info("Received response for 'getCustomers': [{}]", response))
                    .subscribe();

            customerServiceClient.customerChannel(s -> s.onNext(MultipleCustomersRequest.newBuilder()
                    .addIds(UUID.randomUUID().toString())
                    .build()))
                    .doOnNext(customerResponse -> log.info("Received response for 'customerChannel' [{}]", customerResponse))
                    .blockLast();
        }
    }

Combining RSocket with the RPC approach helps to maintain the contract between microservices and improves the day-to-day developer experience. It is suitable for typical scenarios where we do not need full control over the frames, yet it does not limit the flexibility of the protocol. We can still expose RPC endpoints as well as plain RSocket acceptors in the same application, so we can easily choose the best communication pattern for the given use case.