Is the rise of data and AI regulations a challenge or an opportunity?

Right to Repair and the EU Data Act as a step towards data monetization.

Legislators try to shape the future

In recent years, the automotive market has seen a growing number of laws and regulations protecting customers across various markets. The European Union is at the forefront of this legislation, and the most significant disruption for modern software-defined vehicles comes from the EU Data Act and the EU AI Act. The legislation aims to control the use of AI and to make sure that the owner of the equipment or vehicle is also the owner of the data generated by using it. The vehicle owner can decide to share the data with any third party they choose, effectively opening the data market for repair shops, custom applications, usage-based insurance, and fleet management.

Across the Atlantic, in the United States, there is a strong movement called “Right to Repair”, which aims to open the market for third-party repair of all consumer devices and appliances. This also includes access to the data generated by the vehicle. While there is no federal legislation yet, two states stand out in their approach to Right to Repair in the automotive industry: Massachusetts and Maine.

The two states take very different approaches: Maine leans towards an independent entity and platform for sharing information (which does not exist yet), while Massachusetts leans towards OEMs creating their own platforms. With numerous active lawsuits, including OEM versus state cases, it is hard to judge what the final enforceable version of the legislation will be.

The current situation

Both pieces of legislation impose penalties for non-compliance. They are severe in the case of the EU Data Act (EDA), where the fines are not final but are expected to be substantial, potentially reaching up to €20 million or 4% of total worldwide annual turnover. They are somewhat lower for state Right to Repair laws (in civil lawsuits it may be around $1,000 per VIN per day, or in Massachusetts $10,000 per violation).

The approach OEMs take to this varies greatly. In the EU, most OEMs either reused existing software or built or procured new systems to comply with the new regulation. In the USA, because of the smaller impact, there are two approaches. Subaru and Kia decided in 2022 to simply disable their connected services (Starlink and Kia Connect respectively) in states with strict legislation. Others decided either to take part in litigation or to ignore the law and wait. Recently, federal judges ruled in favor of the state, making the situation of OEMs even harder.

Data is a crucial asset in today’s world

Digital services, telematics, and data in general are extremely important assets. This has been true for years in e-commerce, where tracking, cookies, and other techniques have long been used to identify customer behavior. The same applies to telemetry data from the vehicle. It is used to repair vehicles, design better features and service offerings for existing and new models, identify market trends, support upselling, lay out and optimize charging networks, train AI models, and more. The list goes on.

Data is collected everywhere and, in many cases, stored everywhere. The sales department has its own CRM, telemetry data is stored in a data lake, the mobile app has its own database. Data is siloed and dispersed, making it difficult to locate and use effectively.

Data platform importance

To address both pieces of legislation, you need a data sharing platform. The platform is required to manage data owner consent, collect data in a single place, and share it with either the data owner or a third party. Besides enabling compliance with upcoming legislation, it also helps to locate different data points, describe them, and make them available in a single place, which allows better use of existing datasets.

A data platform like Grape Up Databoostr helps you quickly become compliant, while our experienced team can help you find, analyze, prepare and integrate various data sources into the systems, and at the same time navigate the legal and business requirements of the system.

Cost of becoming compliant

Building a data streaming platform comes at a cost. Although it is not terribly expensive, the platform requires an investment that does not immediately seem useful from a business perspective. Let’s explore the possibilities for recouping that investment.

- You can use the same data sharing platform to sell the data, even reusing the mechanism used to get user consent for sharing the data. For B2B use cases, the mechanism is not required.

- Legislation mainly mandates sharing data “as is”, which means raw, unprocessed data. Derived data, such as predictive maintenance calculations from AI algorithms, the output of proprietary incident detection systems, or any other data processed by the OEM, is not covered by that obligation. This allows you not just to put a price tag on a data point, but also to charge more for the additional work required to build the analytics models.

- You can share anonymized datasets, which can then be used to train AI models, identify EV charging patterns, or plan marketing campaigns.

- Lastly, the EU Data Act allows charging a fair amount for sharing the data, to recoup the cost of building and maintaining the platform. The allowed price depends on the requestor: enterprises can be charged with a margin, while the data owner should be able to get the data for free.

We can see that there are numerous ways to recoup the cost of building the platform. This is especially important as the platform might be required to fulfill certain regulations, and procuring the system is required, not optional.

The power of scale in data monetization

As we now know, building a data streaming platform is more of a necessity than an option, but there is a way to turn the problem into an opportunity. Let’s see if the opportunity is worth the effort.

We can begin by dividing the data into two types: raw and derived. Let’s put a price tag on both to make the calculation easier. To keep the case easy to calculate and visualize, I checked the current pricing for various brands on high-mobility and took the average of the lower prices.

The raw data in our example will be $3 per VIN per month, and derived data will be $5 per VIN per month. In reality the prices can be higher and depend on the selected data package (powertrain data will be priced differently from chassis data).

Now let’s assume we start the first year with a very small fleet, such as the vehicles purchased for sales representatives by two or three enterprises: 30k vehicles. The next year we add a leasing company, which increases the number to 80k vehicles, and in five years we have 200k VINs per month with a subscription.

Of course, this is a conservative projection: it assumes rather low usage of the system, slow growth, and a single subscription per VIN (in reality the same VIN data can be shared with an insurance company, a leasing company, and a rental company).

This is a constant additional revenue stream, which can be created on the way to fulfilling the data privacy and sharing regulations.
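To make the scale effect tangible, the numbers above can be turned into a quick back-of-the-envelope calculation. The sketch below is only an illustration: the class and method names are ours, and it assumes that every subscribed VIN is billed for a full 12 months at the example prices.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Back-of-the-envelope projection using the example prices and fleet sizes from the text. */
final class RevenueProjection {

    static final int RAW_PRICE_USD = 3;      // raw data, per VIN per month
    static final int DERIVED_PRICE_USD = 5;  // derived data, per VIN per month

    /** Annual revenue, assuming every subscribed VIN is billed for 12 months at the given price. */
    static long annualRevenueUsd(long subscribedVins, int pricePerVinPerMonthUsd) {
        return subscribedVins * pricePerVinPerMonthUsd * 12L;
    }

    public static void main(String[] args) {
        // Fleet sizes taken from the scenario described above.
        Map<String, Long> fleetByYear = new LinkedHashMap<>();
        fleetByYear.put("Year 1", 30_000L);
        fleetByYear.put("Year 2", 80_000L);
        fleetByYear.put("Year 5", 200_000L);

        fleetByYear.forEach((year, vins) -> System.out.printf(
                "%s: %,d VINs -> raw $%,d / derived $%,d per year%n",
                year, vins,
                annualRevenueUsd(vins, RAW_PRICE_USD),
                annualRevenueUsd(vins, DERIVED_PRICE_USD)));
    }
}
```

Under these assumptions, raw data alone brings in about $1.08M in the first year, $2.88M in the second, and $7.2M per year at 200k VINs. At the $5 derived-data price the same fleets give $1.8M, $4.8M, and $12M.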

Factors influencing the value

$3 per VIN per month may initially appear modest. Of course with the effect of scale we have seen before, it becomes significant, but what are the factors which influence the price tag you can put on your data?

- Data quality and veracity: the higher the quality of your data, the less data engineering is required on the customer side to integrate it into their systems.

- Data availability (real-time versus historical datasets): in most cases real-time data is more valuable, especially when the location of the vehicle is important.

- Data variety: a wider variety of data can increase the value, but it is more important to have the core data (like location and lock state). Missing core data reduces the value greatly.

- Legality and ethics: the data can only be made available with the owner’s consent. That’s why consent management systems like the ones required by the EDA are important.

What is required

To monetize the data you need a platform, like Grape Up’s Databoostr. This platform should be integrated with the various data sources in the company, making sure that data is streamed in close to real time. This is important, as many modern use cases (like fleet management systems) require fresh data.

The next step is to create a pricing strategy and identify customers who are willing to pay for the data. A good start is to ask the business development department whether any customers have already asked for data access, or even required this feature before investing in a bigger fleet.

The final step would be to identify opportunities to further increase revenue by adding data points for which customers are willing to pay extra.

Summary

Ultimately, data is no longer a byproduct of connected vehicles – it is a strategic asset. By adopting platforms like Grape Up’s Databoostr, OEMs can not only meet regulatory requirements but also position themselves to capitalize on the growing market for automotive data. With the right strategy, what begins as a compliance necessity can evolve into a long-term competitive advantage.

Spring AI Alternatives for Java Applications

As AI-driven applications grow in popularity and the demand for AI-related frameworks increases, Java software engineers have multiple options for integrating AI functionality into their applications.

This article is the second part of our series exploring Java-based AI frameworks. In the previous article we described the main features of the Spring AI framework. Now we'll focus on its alternatives and analyze their advantages and limitations compared to Spring AI.

Supported Features

Let's compare two popular open-source alternatives to Spring AI. Both offer general-purpose integration with AI models and with AI-related services and technologies.

LangChain4j - a Java framework that natively implements the concepts of LangChain, a Python library widely used in AI-driven applications.

Semantic Kernel - a framework by Microsoft that enables integration of AI models into applications written in various languages, including Java.

LangChain4j

LangChain4j has two levels of abstraction.

High-level API, such as AI Services, prompt templates, tools, etc. This API lets developers reduce boilerplate code and focus on business logic.

Low-level primitives: ChatModel, AiMessage, EmbeddingStore, etc. This level gives developers more fine-grained control over component behavior and LLM interaction, although it requires writing more glue code.

Models

LangChain4j supports text, audio, and image processing using LLMs, similarly to Spring AI. It defines separate model classes for different types of content:

- ChatModel for chat and multimodal LLMs

- ImageModel for image generation.

The framework integrates with over 20 major LLM providers, such as OpenAI, Google Gemini, and Anthropic Claude. Developers can also integrate custom models from the HuggingFace platform using the dedicated HuggingFaceInferenceApiChatModel interface. The full list of supported model providers and model features can be found here: https://docs.langchain4j.dev/integrations/language-models

Embeddings and Vector Databases

When it comes to embeddings, LangChain4j is very similar to Spring AI. EmbeddingModel creates vectorized data, which is then stored in a vector store represented by EmbeddingStore.

ETL Pipelines

Building ETL pipelines in LangChain4j requires more manual code. Unlike Spring AI, it does not have a dedicated set of classes or class hierarchies for ETL pipelines. Available components that may be used in ETL:

- TokenTextSegmenter, which provides functionality similar to TokenTextSplitter in Spring AI.

- Document class, which represents text content and its metadata.

- EmbeddingStore to store the data.

There are no built-in equivalents to Spring AI's KeywordMetadataEnricher or SummaryMetadataEnricher. To get similar functionality, developers need to implement custom classes.
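As an illustration of such a custom class, the sketch below adds a keywords entry to a segment's metadata. It is a rough stand-in under stated assumptions: KeywordEnricherSketch, its methods, and the stop-word list are hypothetical names of ours, not LangChain4j or Spring AI API, and it uses a simple word-frequency heuristic instead of asking a model to extract keywords.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.stream.Collectors;

/** Naive keyword enricher (hypothetical): picks the most frequent non-trivial words of a text. */
final class KeywordEnricherSketch {

    // Tiny illustrative stop-word list; a real one would be much longer.
    private static final Set<String> STOP_WORDS =
            Set.of("the", "and", "for", "with", "that", "this", "from", "are", "was");

    /** Returns up to {@code limit} keywords, most frequent first, ties in alphabetical order. */
    static List<String> topKeywords(String text, int limit) {
        Map<String, Integer> counts = new HashMap<>();
        for (String word : text.toLowerCase().split("[^a-z0-9]+")) {
            if (word.length() > 2 && !STOP_WORDS.contains(word)) {
                counts.merge(word, 1, Integer::sum);
            }
        }
        return counts.entrySet().stream()
                .sorted((a, b) -> {
                    int byCount = Integer.compare(b.getValue(), a.getValue());
                    return byCount != 0 ? byCount : a.getKey().compareTo(b.getKey());
                })
                .limit(limit)
                .map(Map.Entry::getKey)
                .collect(Collectors.toList());
    }

    /** Returns a copy of the metadata with a comma-separated "keywords" entry added. */
    static Map<String, String> enrich(String text, Map<String, String> metadata, int limit) {
        Map<String, String> enriched = new HashMap<>(metadata);
        enriched.put("keywords", String.join(",", topKeywords(text, limit)));
        return enriched;
    }
}
```

In a real pipeline this step would sit between splitting the document and storing the segments, and the heuristic could be swapped for a call to a chat model.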

Function Calling

LangChain4j supports calling application code from the LLM by using the @Tool annotation. The annotation should be applied to a method that is intended to be called by the AI model. The annotated method might also capture the original prompt from the user.

Semantic Kernel for Java

Semantic Kernel for Java uses a different conceptual model for building AI-related code compared to Spring AI or LangChain4j. The central component is the Kernel, which acts as an orchestrator for all the models, plugins, tools, and memory stores.

Below is an example of code that uses an AI model combined with plugins for function calling and a memory store as a vector database. All the components are integrated into a kernel:
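To show the general mechanism behind annotation-based function calling, here is a minimal sketch in plain Java. It does not use LangChain4j classes: the @SketchTool annotation, Calculator, and ToolDispatcherSketch are hypothetical names, and the tool name and arguments, which a framework would take from the model's tool-call request, are passed in by hand.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;

/** Hypothetical marker annotation for this sketch; LangChain4j ships its own @Tool. */
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface SketchTool {
    String value(); // human-readable description that would be sent to the model
}

/** Example application class exposing one callable method. */
class Calculator {
    @SketchTool("Adds two numbers")
    public int add(int a, int b) {
        return a + b;
    }
}

final class ToolDispatcherSketch {

    /** Finds a public method annotated with @SketchTool by name and arity, then invokes it. */
    static Object invokeTool(Object target, String toolName, Object... args) {
        for (Method method : target.getClass().getMethods()) {
            if (method.isAnnotationPresent(SketchTool.class)
                    && method.getName().equals(toolName)
                    && method.getParameterCount() == args.length) {
                try {
                    return method.invoke(target, args);
                } catch (ReflectiveOperationException e) {
                    throw new IllegalStateException("Tool call failed: " + toolName, e);
                }
            }
        }
        throw new IllegalArgumentException("No tool named " + toolName);
    }
}
```

For example, ToolDispatcherSketch.invokeTool(new Calculator(), "add", 2, 3) calls the annotated method through reflection, while a method without the annotation is never exposed.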

    // Plugin exposing a function that the model is allowed to call
    public class MathPlugin implements SKPlugin {

        @DefineSKFunction(description = "Adds two numbers")
        public int add(int a, int b) {
            return a + b;
        }
    }

    ...

    // Chat completion service backed by OpenAI
    OpenAIChatCompletion chatService = OpenAIChatCompletion.builder()
        .withModelId("gpt-4.1")
        .withApiKey(System.getenv("OPENAI_API_KEY"))
        .build();

    // Wrap the plugin class defined above
    KernelPlugin plugin = KernelPluginFactory.createFromObject(new MathPlugin(), "MathPlugin");

    // Memory store used as the vector database
    Store memoryStore = new AzureAISearchMemoryStore(...);

    // Creating kernel object
    Kernel kernel = Kernel.builder()
        .withAIService(OpenAIChatCompletion.class, chatService)
        .withPlugin(plugin)
        .withMemoryStorage(memoryStore)
        .build();

    KernelFunction<String> prompt = KernelFunction.fromPrompt("Some prompt...").build();

    // Run the prompt with function calling and memory search enabled
    FunctionResult<String> result = prompt.invokeAsync(kernel)
        .withToolCallBehavior(ToolCallBehavior.allowAllKernelFunctions(true))
        .withMemorySearch("search tokens", 1, 0.8) // Use memory collection
        .block();

Models

When it comes to available models, Semantic Kernel is more focused on chat-related functions such as text completion and text generation. It contains a set of classes implementing the AIService interface to communicate with different LLM providers, e.g. OpenAIChatCompletion, GeminiTextGenerationService, etc. It does not have Java implementations of Text Embeddings, Text to Image/Image to Text, or Text to Audio/Audio to Text services, although there are experimental implementations of them in C# and Python.

Embeddings and Vector Databases

For vector stores, Semantic Kernel offers the following components: VolatileVectorStore for in-memory storage, AzureAISearchVectorStore, which integrates with Azure Cognitive Search, and SQLVectorStore/JDBCVectorStore as an abstraction over SQL database vector stores.

ETL Pipelines

Semantic Kernel for Java does not provide an abstraction for building ETL pipelines. Unlike Spring AI, it has no dedicated classes for extracting or transforming data. Developers therefore need to write custom code or use third-party libraries for the extraction and transformation parts of the pipeline. After these phases, the transformed data can be stored in one of the available vector stores.

Azure-centric Specifics

The framework is focused on Azure-related services and offers smooth integration, requiring minimal configuration, with:

- Azure Cognitive Search

- Azure OpenAI

- Azure Active Directory (authentication and authorization)

Because of these integrations, developers need to write little or no glue code when using the Azure ecosystem.

Ease of Integration in a Spring Application

LangChain4j

LangChain4j is framework-agnostic and designed to work with plain Java, so it requires a little more effort to integrate into a Spring Boot app. For basic LLM interaction, the framework provides a set of libraries for popular LLMs, for example langchain4j-open-ai-spring-boot-starter, which allows smooth integration with Spring Boot. Integrating components that do not have a dedicated starter package requires a little effort, which often comes down to creating bean objects in configuration or building objects manually inside the Spring service classes.

Semantic Kernel for Java

Semantic Kernel, on the other hand, doesn't have dedicated starter packages for Spring Boot auto-configuration, so the integration involves more manual steps. Developers need to create Spring beans, write a Spring Boot configuration, and define kernel objects and plugin methods so that they integrate properly with the Spring ecosystem. Such integration therefore needs more boilerplate code compared to LangChain4j or Spring AI.

It's worth mentioning that Semantic Kernel uses publishers from Project Reactor, such as the Mono<T> type, to asynchronously execute Kernel code, including LLM prompts, tools, etc. This introduces additional complexity into the application code, especially if the application is not written in a reactive style and does not use the publisher/subscriber pattern.

Performance and Overhead

LangChain4j

LangChain4j is distributed as a single library. This means that even if we use only certain functionality, the whole library still needs to be included in the application build. This slightly increases the size of the application build, though it's not a big downside for most enterprise-level Spring Boot applications.

When it comes to memory consumption, both LangChain4j and Spring AI have a layer of abstraction, which adds some insignificant performance and memory overhead, as is quite standard for high-level Java frameworks.

Semantic Kernel for Java

Semantic Kernel for Java is distributed as a set of libraries. It consists of a core API and various connectors, each designed for a specific AI service such as OpenAI or Azure OpenAI. This approach is similar to Spring AI (and Spring-related libraries in general), as we only pull in the libraries that are needed in the application. This makes dependency management more flexible and reduces application size.

Similarly to LangChain4j and Spring AI, Semantic Kernel brings some overhead with its abstractions like Kernel, Plugin, and SemanticFunction. In addition, because its implementation relies on Project Reactor, the framework adds some CPU overhead related to the publisher/subscriber pattern implementation. This might be noticeable for applications that require fast response times while performing a large number of LLM calls and callable function interactions.

Stability and Production Readiness

LangChain4j

The first preview of LangChain4j 1.0.0 was released in December 2024. This is similar to Spring AI, whose 1.0.0-M1 preview was published in December of the same year. The framework's contributor community is large (around 300 contributors) and is comparable to that of Spring AI.

However, the observability feature in LangChain4j is still experimental and in development, and it requires manual adjustments. Spring AI, on the other hand, offers integrated observability with Micrometer and Spring Boot Actuator, which is consistent with other Spring projects.

Semantic Kernel for Java

Semantic Kernel for Java is a newer framework than LangChain4j or Spring AI. The project started in early 2024, and its first stable version was published in 2024 too. Its contributor community is significantly smaller (around 30 contributors) compared to Spring AI or LangChain4j, so some features and fixes might be developed and delivered more slowly.

When it comes to functionality, Semantic Kernel for Java offers fewer capabilities than Spring AI or LangChain4j, especially those related to LLM integration or ETL. Some of the features are experimental. Other features, like Image to Text, are available only in .NET or Python.

On the other hand, it allows smooth and feature-rich integration with Azure AI services, benefiting from being a product developed by Microsoft.

Choosing Framework

For developers already familiar with the LangChain framework and its concepts who want to use Java in their application, LangChain4j is the easiest and most natural option. Its concepts are the same as, or very similar to, those well known from LangChain.

Since LangChain4j provides both low-level and high-level APIs, it is a good option when we need to fine-tune the application functionality, plug in custom code, customize model behavior, or have more control over serialization, streaming, etc.

It's worth mentioning that LangChain4j is an official framework for AI interaction in the Quarkus framework. So, if the application is going to be written in Quarkus instead of Spring, LangChain4j is the go-to technology.

On the other hand, Semantic Kernel for Java is a better fit for applications that rely on Microsoft Azure AI services, integrate with Microsoft-provided infrastructure or primarily focus on chat-based functionality.

If the application relies on structured orchestration and needs to combine multiple AI models in a centralized consistent manner, the kernel concept of Semantic Kernel becomes especially valuable. It helps to simplify management of complex AI workflows. Applications written in reactive style will also benefit from Semantic Kernel's design.

Challenges of EU Data Act in Home Appliance business

As of the start of 2026, the EU Data Act (Regulation (EU) 2023/2854) is in force across the whole European Union and is mandatory for all manufacturers of “connected” home appliances. It has been applicable since 12 September 2025.

Compared to other industries, like automotive or agriculture, the situation is far more complicated. The implementation of connected services varies between manufacturers, and a lack of connectivity is often not considered an important factor, especially for lower-segment devices.

The core approaches to connectivity in home appliances are:

- Devices connected to a Wi-Fi network and constantly sharing data with the cloud.

- Devices which can be connected through Bluetooth and a mobile app (those devices technically expose a local API, which should be accessible to the owner).

- Devices with no connectivity available to the customer (no mobile app), but still collecting data for diagnostic and repair purposes, accessible through an undocumented service interface.

- Devices with no data collection at all (not even diagnostic data).

Apart from the last bullet point, all of the mentioned approaches to building smart home appliances require EU Data Act implementation, and those devices are considered “connected products”, even without actual internet connectivity.

The rule of thumb would be: if data is collected by the home appliance or by a mobile app associated with its functions, it falls under the EU Data Act.

Short overview of EU Data Act

To make the discussion more concrete, it helps to name the key roles and the types of data upfront. In EU Data Act language, the user is the person or entity entitled to access and share the data; the data holder is typically the manufacturer and/or provider of the related service (mobile app, cloud platform); and a data recipient is the third party selected by the user to receive the data. In home appliances, “data” usually means both product data (device signals, status, events) and related-service data (app/cloud configuration, diagnostics, alerts, usage history, metadata), and access often needs to cover both historical and near-real-time datasets.

Another important dimension is balancing data access with trade secrets, security, and abuse prevention. Home appliances are not read-only devices. Many can be controlled remotely, and exposing interfaces too broadly can create safety and cybersecurity risks, so strong authentication and fine-grained authorization are essential. On top of that, direct access must be resilient: rate limiting, anti-scraping protections, and audit logs help prevent major misuse. Direct access should be self-service, but not unrestricted.
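One possible shape for these protections is a token-bucket rate limiter that also records every decision in an audit log. The sketch below is illustrative only: DataAccessGuard, its parameters, and the log format are our assumptions, one guard instance per user is assumed, and the clock is passed in explicitly to keep the example deterministic.

```java
import java.util.ArrayList;
import java.util.List;

/** Minimal token-bucket limiter with an audit trail; all names are hypothetical. */
final class DataAccessGuard {

    private final int capacity;            // maximum burst size
    private final double refillPerSecond;  // sustained request rate
    private double tokens;
    private long lastRefillMillis;
    private final List<String> auditLog = new ArrayList<>();

    DataAccessGuard(int capacity, double refillPerSecond, long nowMillis) {
        this.capacity = capacity;
        this.refillPerSecond = refillPerSecond;
        this.tokens = capacity;            // start with a full bucket
        this.lastRefillMillis = nowMillis;
    }

    /** Returns true if the request is allowed; every decision is written to the audit log. */
    synchronized boolean tryAccess(String userId, String dataset, long nowMillis) {
        // Refill proportionally to the elapsed time, never above capacity.
        double elapsedSeconds = (nowMillis - lastRefillMillis) / 1000.0;
        tokens = Math.min(capacity, tokens + elapsedSeconds * refillPerSecond);
        lastRefillMillis = nowMillis;

        boolean allowed = tokens >= 1.0;
        if (allowed) {
            tokens -= 1.0;
        }
        auditLog.add(nowMillis + " user=" + userId + " dataset=" + dataset
                + (allowed ? " ALLOWED" : " REJECTED"));
        return allowed;
    }

    /** Returns a copy so callers cannot tamper with the recorded entries. */
    synchronized List<String> auditLog() {
        return new ArrayList<>(auditLog);
    }
}
```

With a capacity of 2 and a refill rate of one token per second, two immediate requests pass, a third is rejected, and a request one second later passes again. Rejections stay in the log, which is what makes scraping attempts visible afterwards.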

Current market situation

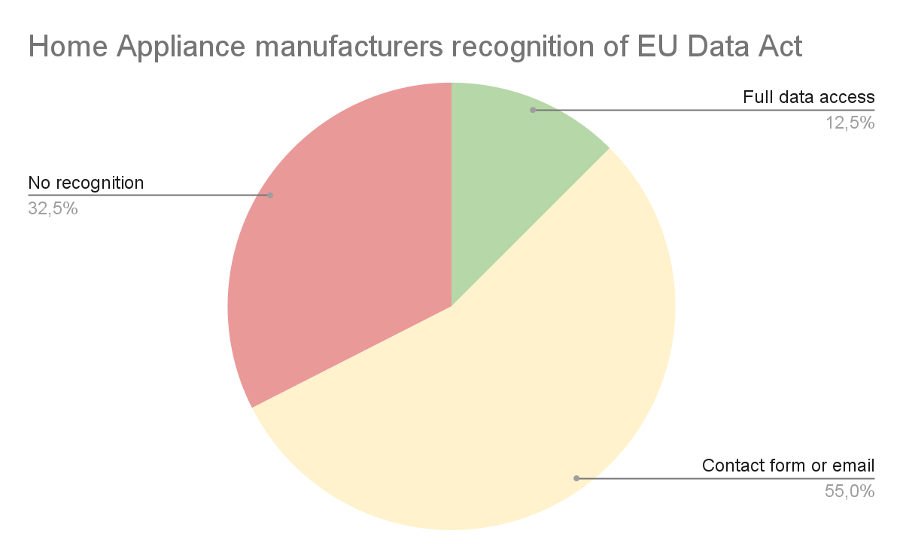

As of January 2026, most home appliance manufacturers (>85% of the 40 manufacturers researched, responsible for 165 home appliance brands currently present on the European market) either provide data access through a manual process (ticket, contact form, email, chatbot) or do not recognize the need to share data with the owner at all.

If we look at the market from the perspective of how manufacturers treat the requirements the EU Data Act imposes on them, we can see that only 12.5% of the 40 companies researched (5 manufacturers) provide full data access through a portal that lets you access your data easily, in a self-service manner (green on the chart). 55% of the companies researched (yellow on the chart) recognize the need to share data with their customers, but only through a manual service request or email, not in an automated or direct way.

The red group (32.5%) consists of manufacturers which, according to our researchers:

- do not provide an easy way to access your data,

- do not recognize the EU Data Act legislation at all, or

- recognize the EDA, but interpret it as not requiring them to share data with device owners.

A contact form or email can be treated as a temporary solution, but it fails to fulfill the next set of requirements around direct data access. Although direct access can be understood differently and can be fulfilled in different ways, a manual request requiring manufacturer permission and interaction is generally not considered “direct”. (Notably, “access by design” expectations intensify for products placed on the market from September 2026.)

API access

We can’t talk about EU Data Act implementation without understanding the current technical landscape. For the home appliance industry, especially high-end devices, the competitive edge is smart features and Smart Home integration support. That’s why many manufacturers already offer cloud API access to their devices.

Major manufacturers, like Samsung, LG, and Bosch, allow access to appliance data (for electric ovens, air conditioning systems, humidifiers, or dishwashers) and control of appliance functions. This API is then used by the mobile app (which is a related service in terms of the EU Data Act), or by owners building popular Smart Home systems.

There are two approaches: either the device itself provides a local API through a server running on it (very rare), or the API is provided in the manufacturer’s cloud (most common). The latter makes access from the outside world easier and secures it through the manufacturer’s authentication mechanism, but requires data storage in the cloud.

In light of the EDA, both approaches can be treated as direct access. The access does not require specific permission from the manufacturer, anyone can configure it, and if all functions and data are available, this might be considered a sufficient solution.

Is API access enough?

The unfortunate part is that it rarely is, and for more than one reason. Let’s go through all of them to understand why Samsung, which has the great SmartThings ecosystem, still developed a separate EU Data Act portal for data access.

1. The API does not make all data accessible

The APIs are mostly developed for Smart Home and integration purposes, not with the goal of sharing all the data collected by the appliance or by the related service (mobile app).

Adding endpoints for every single data point, especially for metadata, would be costly and not really useful for either customers or the manufacturer. It’s easier and better to provide all supplementary data as a single package.

2. The APIs were developed with device owner in mind

EU Data Act streamlines the data access for all data market participants - not only device owners, but also other businesses in B2B scenarios. Sharing data to other business entities under fair, reasonable and non-discriminatory terms is the core of EDA.

This means that there must be a way to share data with the company selected by the data owner in a simple and secure way. In practice, the sharing must be coordinated by the manufacturer, or at least the device should be designed in a way that allows data to be shared securely, which in most cases requires a separate account or access API for B2B.

3. The API does not include consent management

The B2B data access scenarios require a carefully designed consent management system to make sure the owner has full control over the scope of data sharing, the way it’s shared, and with whom. The owner can also revoke the data sharing permission at any time.

This system belongs in a partner portal, not in a Smart Home API. Some global manufacturers already have partner portals which can be used for this purpose, but an API alone is not enough.
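To make the consent requirements more concrete, here is a minimal in-memory sketch of scoped, revocable consent. It is not a production design: ConsentRegistry, the identifiers, and the scope names are hypothetical, and a real system would add persistence, authentication, and an audit trail.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Set;

/** In-memory consent registry sketch; names and scope strings are hypothetical. */
final class ConsentRegistry {

    // Key: "ownerId|recipientId", value: data scopes the owner has granted to that recipient.
    private final Map<String, Set<String>> grants = new HashMap<>();

    private static String key(String ownerId, String recipientId) {
        return ownerId + "|" + recipientId;
    }

    /** The owner grants a recipient access to an explicit set of scopes. */
    void grant(String ownerId, String recipientId, Set<String> scopes) {
        grants.put(key(ownerId, recipientId), Set.copyOf(scopes));
    }

    /** The owner can withdraw consent at any time; later checks fail immediately. */
    void revoke(String ownerId, String recipientId) {
        grants.remove(key(ownerId, recipientId));
    }

    /** Sharing is allowed only if the requested scope was explicitly granted. */
    boolean isAllowed(String ownerId, String recipientId, String scope) {
        return grants.getOrDefault(key(ownerId, recipientId), Set.of()).contains(scope);
    }
}
```

The check is deny-by-default: a recipient with no grant, or one asking for a scope outside the grant, gets nothing, and revoking removes access for all scopes at once.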

If API is not enough - what is enough?

The EU Data Act problem is not really a problem of expanding the API with new endpoints. The recommended approach, as taken by the previously mentioned Samsung, is to create a separate portal that solves the compliance problems. Let’s also briefly look at the potential solutions for direct access to data:

- Self-service export (a downloadable package, both machine-readable and human-readable), as long as the export is fast and automatic and gives access to the data as quickly as the manufacturer itself can access it.

- Delegated access to a third party (OAuth-style authorization, scoped consent, logs).

- Continuous data feed (webhook/stream for authorized recipients).

These are the approaches OEMs currently take to solve the problem.

Other challenges bound to home appliance market

Home appliance connectivity is different from the automotive market. Because devices are bound to Wi-Fi or Bluetooth networks, or in rare cases to smart home protocols (ZigBee, Z-Wave, Matter), they do not move or change owners that often.

Device ownership changes only when the whole residence changes owners, which is either the specific situation of businesses like Airbnb, or the current owners moving out, which very often means the Wi-Fi and/or ISP (Internet Service Provider) changes anyway.

On the other hand, it is hard to point at the specific “device owner”. If there is more than one resident, so effectively any scenario outside “single-person household”, there is no way to effectively separate the data applicable to specific individuals. Of course, every reasonable system would create a check box or notification, that the data can only be requested when there is legal basis under GDPR, but selecting correct user or admin to authorize data sharing is challenging.

From the business perspective, the challenge also arises from the fact that there are white-label OEMs manufacturing for global brands in specific market segments. A good example is the TV market: system data may be available through a Google/Android access point, while diagnostic data is separate and should be provided by the manufacturer (which can be the brand selling the device, but not always). If you purchase a TV branded by Toshiba, Sharp, or Hitachi, it may in each case be manufactured by Vestel. At the same time, other home appliances of the same brand can be manufactured elsewhere. Gathering all the data and helping users understand where their data is can be tricky, to say the least.

Another important challenge is the broad spectrum of devices with different functions, collecting different signals. This requires complex data catalogs, potentially also integrating different data sources and different data formats. It’s not uncommon for users to purchase multiple different devices from the same brand and request access to all data at once. The user shouldn’t have to guess whether the brand, OEM, or platform provider holds specific datasets - the compliance experience must reconcile identities and data sources to make it easy to use.

Conclusion

Navigating the EU Data Act is complicated, no matter which industry we focus on. While researching the home appliance market we saw very different approaches, from the state-of-the-art system created by Samsung, compliant with all EDA requirements, to manufacturers who explain in the user manual that to “access the data” you need to open system settings and reset the device to factory settings, effectively removing the data instead of sharing it. The market as a whole is clearly not ready.

Making your company compliant with the EU Data Act is not that hard. The overall idea and approach are similar regardless of the industry you represent, but building or procuring a new system to fulfill all requirements is a must for most manufacturers.

That’s why Grape Up designed and developed Databoostr, the EU Data Act compliance platform which can be either installed on customer infrastructure, or integrated as a SaaS system. This is the quickest and most cost-effective way to become compliant, especially considering the shrinking timeline, while also enabling data monetization.