Native bindings for JavaScript - why should I care?


At the very beginning, let’s make one important statement: I am not a JavaScript developer. I am a C++ developer, and with my skill set I feel more like a guest in the world of JavaScript. If you’re also a developer, you probably already know why companies today are constantly on the lookout for web developers: the JavaScript language is flexible and has a gentle learning curve. It is also present everywhere, from computers and mobile devices to embedded devices, cars, ATMs and washing machines, you name it. Writing portable code that can run everywhere is tempting, and that's why the language gets more and more attention.

Desktop applications in JavaScript

In general, JavaScript is not the first or best fit for desktop applications. It was not created for that purpose, and it lacks the GUI libraries available for C++, C#, Java or even Python. Even so, it has found its niche.

Just take a look at the Atom text editor. It is based on Electron [1], a framework for running JS code in a desktop application. The tool internally uses the Chromium engine, so it is more or less a browser in a window, but it is still quite an impressive achievement to have the same codebase for Windows, macOS and Linux. This matters to teams that want to use agile processes, because it is easy to start with an MVP and ship frequent incremental updates with new features from the same code.

Native code and JavaScript? Are you kidding?

Since JavaScript works for desktop applications, one may think: why bother with native bindings then? Before you dismiss them, consider that there are a few reasons to use them, most of them related to performance:

- JavaScript is a scripting language that runs in a virtual machine. In some scenarios, it may be an order of magnitude slower than its native code equivalent [2].

- JavaScript is garbage collected and has a hard time with memory-intensive algorithms and tasks.

- Access to hardware or native frameworks is sometimes only possible from native, compiled code.

Of course, this is not always necessary. In fact, you may happily live your developer life writing JS code on a daily basis and never find yourself in a situation where creating or using native code in your application is unavoidable. Hardware performance keeps improving, and often there is no need to even profile an application. On the other hand, once it happens, every developer should know what options are available.

The strange and scary world of static typing

If a JavaScript Developer who uses Electron finds out that his great video encoding algorithm does not keep up with the framerate, is that the end of his application? Should he rewrite everything in assembler?

Obviously not. He might try to use a native library to do the hard work and leverage an environment without a garbage collector and with fast access to the hardware, or maybe even SIMD extensions such as SSE. The question that many may ask is: but isn’t it difficult and pointless?

Surprisingly not. Let’s dig deeper into the world of native bindings, where, in contrast to JavaScript, you often have to specify the types of variables and function return values in your code.

First of all, if you want to use Electron or any framework based on Node.js, you are not alone: you have a small friend called “nan” (if you have seen “wat” [3], you are probably already smiling). Nan stands for “Native Abstractions for Node.js” [4], and thanks to its very descriptive name, it is enough to say that it makes creating Node.js add-ons easier. Like nearly everything today, you can install it using npm:

$ npm install --save nan

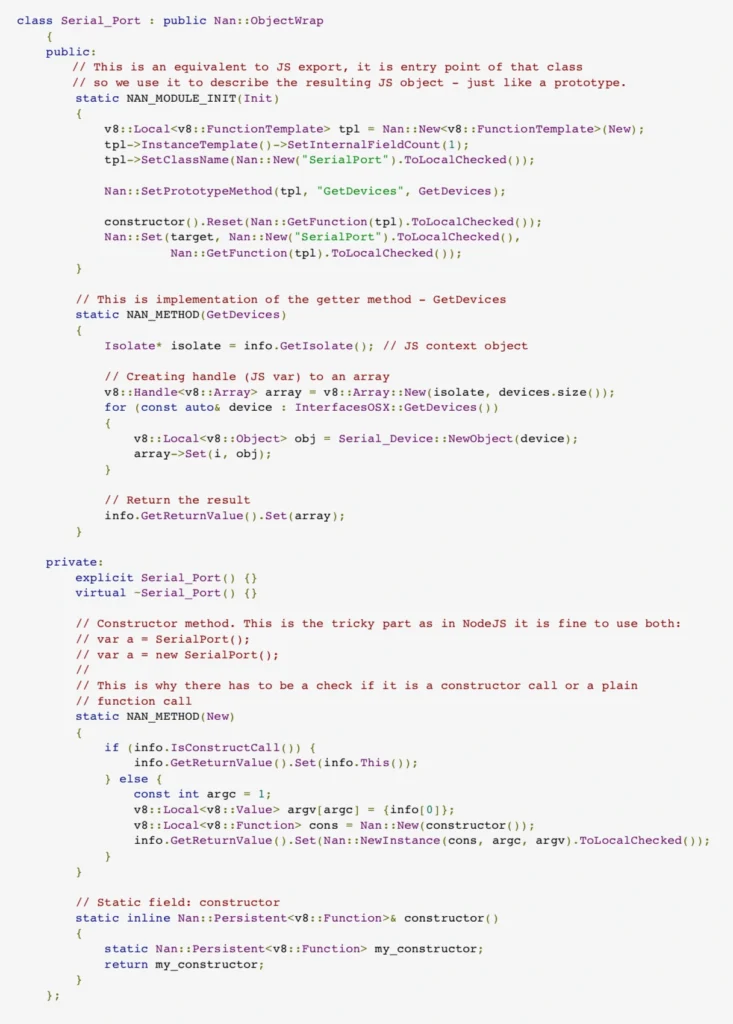

Without getting into boring technical details, here is an example of wrapping a C++ class in a JavaScript object:

Nothing really fancy, and the code isn’t too complex either: just a thin wrapping layer over the C++ class. Obviously, for bigger frameworks there will be much more code and more problems to solve, but in general we have a native framework bound to the JavaScript application.

Summary

The bad part is that we, unfortunately, need to compile the code for each platform we want to support, and by each platform we usually mean Windows, Linux, macOS, Android and iOS, depending on our targets. Also, although it is not rocket science, it may be too difficult for JavaScript developers who have never had a chance to compile code.

On the other hand, we have a not-so-perfect but working solution for writing applications that run on multiple platforms and handle even complex business logic. Programming has never been a perfect world; it has always been a world of trade-offs, beautiful solutions that never work and refactoring that never happens. In the end, when you look for yet another compromise between your team’s skills and the client’s requirements for the next desktop or mobile application framework, you might consider using JavaScript.


Spring AI framework overview – introduction to AI world for Java developers

In this article, we explain the fundamentals of integrating various AI models and employing different AI-related techniques within the Spring framework. We provide an overview of the capabilities of Spring AI and discuss how to utilize the various supported AI models and tools effectively.

Understanding Spring AI - Basic concepts

Traditionally, libraries for AI integration have primarily been written in Python, making knowledge of this language essential for their use. Additionally, integrating them into applications written in other languages requires writing boilerplate code to communicate with the libraries. Today, Spring AI makes it easier for Java developers to enable AI in Java-based applications.

Spring AI aims to provide a unified abstraction layer for integrating various types of AI models and related techniques (e.g., ETL, embeddings, vector databases) into Spring applications. It supports multiple AI providers and services, such as OpenAI, Google Vertex AI, and Azure Vector Store, through standardized interfaces that simplify their integration by abstracting away low-level details. This is achieved by offering concrete implementations tailored to each specific provider.

Generating data: Integration with AI models

Spring AI API supports all main types of AI models, such as chat, image, audio, and embeddings. The API for the model is consistent across all model types. It consists of the following main components:

1) Model interfaces that provide similar methods for all AI model providers. Each model type has its own specific interface, such as ChatModel for chat AI models and ImageModel for image AI models. Spring AI provides its own implementation of each interface for every supported AI model provider.

2) An input prompt/request class that is passed to the AI model (via the model interface) and carries the user's input instructions (usually text), along with options for tuning the model’s behavior.

3) A response class for the output data produced by the model. Depending on the model type, it contains generated text, image, or audio (for chat, image, and audio models respectively) or more specific data such as floating-point arrays in the case of embedding models.

All AI model interfaces are standard Spring beans that can be injected using auto-configuration or defined in Spring Boot configuration classes.

Chat models

Chat LLMs generate text in response to the user’s prompts. Spring AI has the following main API for interaction with this type of model.

- The ChatModel interface allows sending a String prompt to a specific chat AI model service. For each supported AI chat model provider in Spring AI, there is a dedicated implementation of this interface.

- The Prompt class contains a list of text messages (queries, typically user input) and a ChatOptions object. The ChatOptions interface is common to all the supported AI models. Additionally, every model implementation has its own options class with model-specific settings.

- The ChatResponse class encapsulates the output of the AI chat model, including a list of generated data and relevant metadata.

- Furthermore, the chat model API has a ChatClient class, which is responsible for the entire interaction with the AI model. It encapsulates the ChatModel, enabling users to build and send prompts to the model and retrieve responses from it. ChatClient has multiple options for transforming the output of the AI model, which include converting the raw text response into a custom Java object or fetching it as a Flux-based stream.

Putting all these components together, here is an example of a Spring service class interacting with the OpenAI chat API:

    // The OpenAI model implementation is available via auto-configuration
    // when 'org.springframework.ai:spring-ai-openai-spring-boot-starter'
    // is added as a dependency
    @Configuration
    public class ChatConfig {

        // Defining the chat client bean with the OpenAI model
        @Bean
        ChatClient chatClient(ChatModel chatModel) {
            return ChatClient.builder(chatModel)
                    .defaultSystem("Default system text")
                    .defaultOptions(
                            OpenAiChatOptions.builder()
                                    .withMaxTokens(123)
                                    .withModel("gpt-4o")
                                    .build()
                    ).build();
        }
    }

    @Service
    public class ChatService {

        private final ChatClient chatClient;
        ...

        public List<String> getResponses(String userInput) {
            var prompt = new Prompt(
                    userInput,
                    // Specifying options of the concrete AI model
                    OpenAiChatOptions.builder()
                            .withTemperature(0.4)
                            .build()
            );

            var results = chatClient.prompt(prompt)
                    .call()
                    .chatResponse()
                    .getResults();

            return results.stream()
                    .map(chatResult -> chatResult.getOutput().getContent())
                    .toList();
        }
    }

Image and Audio models

Image and Audio AI model APIs are similar to the chat model API; however, the framework does not provide a ChatClient equivalent for them.

For image models the main classes are represented by:

- ImagePrompt that contains text query and ImageOptions

- ImageModel for abstraction of the concrete AI model

- ImageResponse containing a list of the ImageGeneration objects as a result of ImageModel invocation.

Below is an example Spring service class for generating images:

    @Service
    public class ImageGenerationService {

        // The OpenAI model implementation is used for ImageModel via auto-configuration
        // when 'org.springframework.ai:spring-ai-openai-spring-boot-starter' is
        // added as a dependency
        private final ImageModel imageModel;
        ...

        public List<Image> generateImages(String request) {
            var imagePrompt = new ImagePrompt(
                    // Image description and prompt weight
                    new ImageMessage(request, 0.8f),
                    // Specifying options of a concrete AI model
                    OpenAiImageOptions.builder()
                            .withQuality("hd")
                            .withStyle("natural")
                            .withHeight(2048)
                            .withWidth(2048)
                            .withN(4)
                            .build()
            );

            var results = imageModel
                    .call(imagePrompt)
                    .getResults();

            return results.stream()
                    .map(ImageGeneration::getOutput)
                    .toList();
        }
    }

When it comes to audio models, Spring AI supports two types: transcription and text-to-speech.

The text-to-speech model is represented by the SpeechModel interface. It uses text query input to generate audio byte data with attached metadata.

For transcription models, there isn't a specific general abstract interface. Instead, each model is represented by a set of concrete implementations (one per AI model provider). These implementations adhere to the generic "Model" interface, which serves as the root interface for all types of AI models.

Embedding models

1. The concept of embeddings

Let’s outline the theoretical concept of embeddings for a better understanding of how the embeddings API in Spring AI functions and what its purpose is.

Embeddings are numeric vectors created through deep learning by AI models. Each component of the vector corresponds to a certain property or feature of the data. This makes it possible to measure the similarity between pieces of data (such as text, images or video) using mathematical operations on those vectors.

Just like 2D or 3D vectors represent a point on a plane or in a 3D space, the embedding vector represents a point in an N-dimensional space. The closer points (vectors) are to each other or, in other words, the shorter the distance between them is, the more similar the data they represent is. Mathematically, the distance between vectors v1 and v2 may be defined as the Euclidean distance: sqrt((v1[1] - v2[1])^2 + ... + (v1[N] - v2[N])^2), where v1[i] and v2[i] are the i-th components of the vectors.

Consider the following simple example with living beings (e.g., their text description) as data and their features:

| | Is Animal (boolean) | Size (range 0…1) | Is Domestic (boolean) |
|---|---|---|---|
| Cat | 1 | 0.1 | 1 |
| Horse | 1 | 0.7 | 1 |
| Tree | 0 | 1.0 | 0 |

In terms of the features above, the objects might be represented as the following vectors: “cat” -> [1, 0.1, 1], “horse” -> [1, 0.7, 1], “tree” -> [0, 1.0, 0].

For the most similar objects in our example, the cat and the horse, the distance between the corresponding vectors is sqrt((1 - 1)^2 + (0.1 - 0.7)^2 + (1 - 1)^2) = 0.6, while comparing the most distinct objects, the cat and the tree, gives us sqrt((1 - 0)^2 + (0.1 - 1.0)^2 + (1 - 0)^2) ≈ 1.68.
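The arithmetic above can be checked with a few lines of plain Java. This is only a sketch: the EmbeddingDistance class is a hypothetical helper written for this example, it is not part of Spring AI, and it assumes the Euclidean distance as the measure.

```java
import java.util.Locale;

// Illustrative helper for this article's example; not part of Spring AI.
final class EmbeddingDistance {

    // Euclidean distance: square root of the sum of squared component differences.
    static double euclidean(double[] a, double[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("Vectors must have the same dimension");
        }
        double sum = 0.0;
        for (int i = 0; i < a.length; i++) {
            double diff = a[i] - b[i];
            sum += diff * diff;
        }
        return Math.sqrt(sum);
    }

    public static void main(String[] args) {
        // Feature order: isAnimal, size, isDomestic
        double[] cat = {1, 0.1, 1};
        double[] horse = {1, 0.7, 1};
        double[] tree = {0, 1.0, 0};

        System.out.printf(Locale.ROOT, "cat-horse: %.2f%n", euclidean(cat, horse)); // 0.60
        System.out.printf(Locale.ROOT, "cat-tree:  %.2f%n", euclidean(cat, tree));  // 1.68
    }
}
```

Real embedding vectors have hundreds or thousands of components, but the computation stays the same.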

2. Embedding model API

The Embeddings API is similar to the previously described AI models such as ChatModel or ImageModel.

- The Document class is used for input data. The class represents an abstraction that contains a document identifier, content (e.g., image, sound, text, etc.), metadata, and the embedding vector associated with the content.

- The EmbeddingModel interface is used for communication with the AI model to generate embeddings. For each AI embedding model provider, there is a concrete implementation of that interface. This ensures smooth switching between various models or embedding techniques.

- The EmbeddingResponse class contains a list of generated embedding vectors.

Storing data: Vector databases

Vector databases are specifically designed to efficiently handle data in vector format. Vectors are commonly used for AI processing. Examples include vector representations of words or text segments used in chat models, as well as image pixel information or embeddings.

Spring AI has a set of interfaces and classes that allow it to interact with vector databases from various vendors. The primary interface of this API is VectorStore, which is designed to search for similar documents using a similarity query described by a SearchRequest.

It also has methods for adding and removing Document objects. When documents are added to the VectorStore, their embeddings are typically created by the VectorStore implementation using an EmbeddingModel. The resulting embedding vector is assigned to each document before it is stored in the underlying vector database.

Below is an example of how we can store documents with their embeddings and retrieve similar documents using the Azure AI Vector Store.

    @Configuration
    public class VectorStoreConfig {
        ...

        @Bean
        public VectorStore vectorStore(EmbeddingModel embeddingModel) {
            var searchIndexClient = ... // get the Azure search index client
            return new AzureVectorStore(
                    searchIndexClient,
                    embeddingModel,
                    true,
                    // Metadata fields to be used for the similarity search,
                    // assuming the documents stored in the vector store
                    // represent books/book descriptions
                    List.of(MetadataField.date("yearPublished"),
                            MetadataField.text("genre"),
                            MetadataField.text("author"),
                            MetadataField.int32("readerRating"),
                            MetadataField.int32("numberOfMainCharacters")));
        }
    }

    @Service
    public class EmbeddingService {

        private final VectorStore vectorStore;
        ...

        public void save(List<Document> documents) {
            // The implementation of VectorStore uses EmbeddingModel to get the embedding vector
            // for each document, sets it on the document object and then stores it
            vectorStore.add(documents);
        }

        public List<Document> findSimilar(String query,
                                          double similarityLimit,
                                          Filter.Expression filter) {
            return vectorStore.similaritySearch(
                    SearchRequest.query(query) // used for embedding similarity search
                            // only results with equal or higher similarity
                            .withSimilarityThreshold(similarityLimit)
                            // search only documents matching the filter criteria
                            .withFilterExpression(filter)
                            .withTopK(10) // max number of results
            );
        }

        public List<Document> findSimilarGoodFantasyBook(String query) {
            var goodFantasyFilterBuilder = new FilterExpressionBuilder();
            var goodFantasyCriteria = goodFantasyFilterBuilder.and(
                    goodFantasyFilterBuilder.eq("genre", "fantasy"),
                    goodFantasyFilterBuilder.gte("readerRating", 9)
            ).build();

            return findSimilar(query, 0.9, goodFantasyCriteria);
        }
    }
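To make the threshold and top-K semantics of such a search more concrete, here is a minimal in-memory sketch in plain Java. It is not how any real vector database works internally: InMemorySimilaritySearch and Scored are hypothetical names, the "store" is just a map, and cosine similarity is assumed as the score, whereas a real store may use a different metric.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory stand-in for a vector store; not a Spring AI class.
final class InMemorySimilaritySearch {

    record Scored(String id, double similarity) { }

    // Cosine similarity: 1.0 for vectors pointing in the same direction, 0.0 for orthogonal ones.
    static double cosine(double[] a, double[] b) {
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    // Keep entries at or above the threshold, best match first, at most topK results.
    static List<Scored> search(Map<String, double[]> store, double[] query,
                               double threshold, int topK) {
        return store.entrySet().stream()
                .map(e -> new Scored(e.getKey(), cosine(query, e.getValue())))
                .filter(s -> s.similarity() >= threshold)
                .sorted(Comparator.comparingDouble(Scored::similarity).reversed())
                .limit(topK)
                .toList();
    }

    public static void main(String[] args) {
        Map<String, double[]> store = Map.of(
                "cat", new double[]{1, 0.1, 1},
                "horse", new double[]{1, 0.7, 1},
                "tree", new double[]{0, 1.0, 0});
        // Query with the "cat" vector: "cat" and "horse" pass a 0.9 threshold, "tree" does not.
        System.out.println(search(store, new double[]{1, 0.1, 1}, 0.9, 10));
    }
}
```

A production vector database replaces the linear scan with an index, but the contract visible to the caller (score, threshold, limit) is the same idea.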

Preparing data: ETL pipelines

ETL, which stands for “Extract, Transform, Load”, is a process of transforming raw input data (or documents) to make it applicable or more efficient for further processing by AI models. As the name suggests, ETL consists of three main stages: extracting the raw data from various data sources, transforming the data into a structured format, and storing the structured data in the database.

In Spring AI, the data used at every ETL stage is represented by the Document class mentioned earlier. Here are the Spring AI components representing each stage of the ETL pipeline:

- DocumentReader – used for data extraction, implements Supplier<List<Document>>

- DocumentTransformer – used for transformation, implements Function<List<Document>, List<Document>>

- DocumentWriter – used for data storage, implements Consumer<List<Document>>
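Because the three stages are ordinary functional interfaces, they compose like any other Supplier, Function and Consumer. The sketch below shows only that shape using the JDK: Doc is a hypothetical stand-in for the Document class, and the lambdas are toy stages rather than real Spring AI readers, transformers or writers.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;
import java.util.function.Function;
import java.util.function.Supplier;

// Plain-Java sketch of the reader -> transformer -> writer shape.
// Doc is a hypothetical stand-in for Spring AI's Document class.
final class EtlShapeSketch {

    record Doc(String content) { }

    // Runs the three ETL stages in order.
    static void run(Supplier<List<Doc>> reader,
                    Function<List<Doc>, List<Doc>> transformer,
                    Consumer<List<Doc>> writer) {
        writer.accept(transformer.apply(reader.get()));
    }

    public static void main(String[] args) {
        List<Doc> stored = new ArrayList<>();

        // Extract: produce raw documents
        Supplier<List<Doc>> reader = () -> List.of(new Doc("  Hello "), new Doc("World  "));
        // Transform: normalize the content of every document
        Function<List<Doc>, List<Doc>> trimmer =
                docs -> docs.stream().map(d -> new Doc(d.content().trim())).toList();
        // Load: store the transformed documents
        Consumer<List<Doc>> writer = stored::addAll;

        run(reader, trimmer, writer);
        System.out.println(stored); // [Doc[content=Hello], Doc[content=World]]
    }
}
```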

The DocumentReader interface has a separate implementation for each particular document type, e.g., JsonReader, TextReader, PagePdfDocumentReader, etc. Readers are temporary objects and are usually created in a place where we need to retrieve the input data, just like, e.g., InputStream objects. It is also worth mentioning that all the classes are designed to get their input data as a Resource object in their constructor parameter. And, while Resource is abstract and flexible enough to support various data sources, such an approach limits the reader class capabilities as it implies conversion of any other data sources like Stream to the Resource object.

The DocumentTransformer has the following implementations:

- TokenTextSplitter – splits document into chunks using CL100K_BASE encoding, used for preparing input context data of AI model to fit the text into the model’s context window.

- KeywordMetadataEnricher – uses a generative AI model for getting the keywords from the document and embeds them into the document’s metadata.

- SummaryMetadataEnricher – enriches the Document object with its summary generated by a generative AI model.

- ContentFormatTransformer – applies a specified ContentFormatter to each document to unify the format of the documents.

These transformers cover some of the most popular use cases of data transformation. However, if some specific behavior is required, we’ll have to provide a custom DocumentTransformer.

When it comes to the DocumentWriter, there are two main implementations: VectorStore, mentioned earlier, and FileDocumentWriter, which writes the documents into a single file. For real-world development scenarios the VectorStore seems the most suitable option. FileDocumentWriter is more suitable for simple or demo software where we don't want or need a vector database.

With all the information provided above, here is a clear example of what a simple ETL pipeline looks like when written using Spring AI:

    public void saveTransformedData() {
        // Get the resource, e.g. using InputStreamResource
        Resource textFileResource = ...
        TextReader textReader = new TextReader(textFileResource);

        // Assume the tokenTextSplitter instance is created as a bean in configuration
        // Note that the read() and split() methods return List<Document> objects
        vectorStore.write(tokenTextSplitter.split(textReader.read()));
    }

It is worth mentioning that the ETL API uses List<Document> only to transfer data between readers, transformers, and writers. This may limit their usage when the input document set is large, as it requires the loading of all the documents in memory at once.
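One possible way to soften this limitation, sketched here with plain Java only, is to feed the pipeline in fixed-size batches so that only one batch is in memory at a time. BatchedPipeline and processInBatches are hypothetical names introduced for this illustration; nothing like this is claimed to exist in Spring AI, and the sink would be whatever transform-and-write step the application uses.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical helper, not part of Spring AI: drains an iterator in fixed-size batches
// so that only one batch of items is held in memory at a time.
final class BatchedPipeline {

    // Returns the number of batches handed to the sink.
    static <T> int processInBatches(Iterator<T> source, int batchSize, Consumer<List<T>> sink) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        int batches = 0;
        List<T> batch = new ArrayList<>(batchSize);
        while (source.hasNext()) {
            batch.add(source.next());
            if (batch.size() == batchSize) {
                sink.accept(List.copyOf(batch)); // hand over an immutable snapshot
                batch.clear();
                batches++;
            }
        }
        if (!batch.isEmpty()) {                  // flush the final, partially filled batch
            sink.accept(List.copyOf(batch));
            batches++;
        }
        return batches;
    }

    public static void main(String[] args) {
        Iterator<String> docs = List.of("a", "b", "c", "d", "e").iterator();
        int batches = processInBatches(docs, 2, batch -> System.out.println("writing " + batch));
        System.out.println(batches + " batches"); // 3 batches
    }
}
```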

Converting data: Structured output

While the output of AI models is usually raw data like text, image, or sound, in some cases we may benefit from structuring that data, particularly when the response includes a description of an object with features or properties that suggest an implicit structure within the output.

Spring AI offers a Structured Output API designed for chat models to transform raw text output into structured objects or collections. This API operates in two main steps: first, it provides the AI model with formatting instructions for its output as part of the prompt, and second, it converts the model's output (which is expected to follow these instructions) into a specific object type. Both the formatting instructions and the output conversion are handled by implementations of the StructuredOutputConverter interface.

There are three converters available in Spring AI:

- BeanOutputConverter<T> – instructs the AI model to produce JSON output using the JSON Schema of a specified class and converts it into an instance of that class.

- MapOutputConverter – instructs the AI model to produce JSON output and parses it into a Map<String, Object> object.

- ListOutputConverter – retrieves comma-separated items from the AI model, creating a List<String> output.
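The two-step idea (format instructions first, conversion second) can be illustrated without any AI model at all. The class below is a simplified, hypothetical converter written for this article: it is not Spring AI's ListOutputConverter, its instruction text is made up, and the "model reply" is a hard-coded string.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical, simplified converter that mimics the two-step idea.
// It is not Spring AI's ListOutputConverter and does not call any model.
final class CommaSeparatedListConverter {

    // Step 1: text appended to the prompt so the model knows the expected output format.
    String formatInstructions() {
        return "Respond with a comma-separated list of values only, without numbering or extra text.";
    }

    // Step 2: parse the model's raw text reply into a typed Java object.
    List<String> convert(String modelOutput) {
        return Arrays.stream(modelOutput.split(","))
                .map(String::trim)
                .filter(item -> !item.isEmpty())
                .toList();
    }

    public static void main(String[] args) {
        var converter = new CommaSeparatedListConverter();
        String prompt = "List three fantasy authors. " + converter.formatInstructions();
        System.out.println(prompt);

        // Stand-in for the text a chat model might return for the prompt above.
        String fakeReply = "Tolkien, Le Guin , Pratchett";
        System.out.println(converter.convert(fakeReply)); // [Tolkien, Le Guin, Pratchett]
    }
}
```

The conversion step only works if the model actually follows the instructions, so real code should be prepared for replies that do not parse cleanly.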

Below is example code for generating a book info object using BeanOutputConverter:

    public record BookInfo(String title,
                           String author,
                           int yearWritten,
                           int readersRating) { }

    @Service
    public class BookService {

        private final ChatClient chatClient;

        // Created in configuration as a BeanOutputConverter<BookInfo>
        private final StructuredOutputConverter<BookInfo> bookInfoConverter;
        ...

        public final BookInfo findBook() {
            return chatClient.prompt()
                    .user(promptSpec ->
                            promptSpec
                                    .text("Generate description of the best " +
                                            "fantasy book written by {author}.")
                                    .param("author", "John R. R. Tolkien"))
                    .call()
                    .entity(bookInfoConverter);
        }
    }

Production Readiness

To evaluate the production readiness of the Spring AI framework, let’s focus on the aspects that have an impact on its stability and maintainability.

Spring AI is a new framework. The project was started in 2023. The first publicly available version, 0.8.0, was released in February 2024. Six versions (including pre-release ones) have been released in total since then.

It’s an official Spring project, so the community developing it should be comparable to those of other Spring frameworks, like Spring Data JPA. If development of the framework continues, the community can be expected to provide support on the same level as for other Spring-related frameworks.

The latest version, 1.0.0-M4, published in November, is still a milestone (pre-release) version. The development velocity, however, is quite good. The framework is being actively developed: according to the GitHub statistics, the commit rate is 5.2 commits per day, and the PR rate is 3.5 PRs per day. For comparison, an older, well-established framework such as Spring Data JPA has 1 commit per day and 0.3 PRs per day.

When it comes to bug fixing, there are about 80 bugs in total on the project's official GitHub page, with 85% of them closed. Since the project is quite new, these numbers may not be as representative as those of older Spring projects. For example, Spring Data JPA has almost 800 bugs with about 90% fixed.

Conclusion

Overall, the Spring AI framework looks very promising. It might become a game changer for AI-powered Java applications because of its integration with Spring Boot framework and the fact that it covers the vast majority of modern AI model providers and AI-related tools, wrapping them into abstract, generic, easy-to-use interfaces.

Native app vs. hybrid app: How to choose a solution that meets your business needs

Every time we start a new project, we organize brainstorming sessions about architecture and how to manage the project. Regarding mobile apps, we have one additional question – should we choose a hybrid or native app?

Let's start from the absolute beginning. What is a native app?

A native app is a software program developed to carry out a specific task within a particular platform or environment. Native apps are built using software development kits (SDKs) for particular software frameworks, hardware platforms, or operating systems. They are built for use on a particular device, such as an Apple iPhone or a Google Android phone. To create an iOS application, we use Swift, which replaced Objective-C a few years ago. If we want our app to be available on Android phones, we can choose Kotlin (more popular) or Java (largely replaced by Kotlin a few years ago, although we can still use it). Let's start by discussing the pros and cons of native apps.

PROS

First – native apps are stable and reliable. That's especially worthwhile because if you work on large projects, you want to focus on implementing new features, not on fighting platform limitations. While working on a native app, you can be sure you will find well-prepared documentation for each part of the SDK – it does not matter if it's a camera, Bluetooth, or design principles.

If you want to use a part of the SDK or hardware that is specific to a platform, such as Bluetooth or a camera, a native app would also be a better choice. Even if you decide to go hybrid, these components need to be handled by native code, so you would need a library or plugins. It doesn't make sense to use native frameworks in hybrid apps.

If we are planning a big battery driller app with a beautiful, complicated, animated UI, we should choose a native app. Performance and responsiveness are much better than hybrid can offer. Same thing if we're talking about UX; better UX equals digital customer engagement.

All these points are essential, but what matters the most is security. You should know that native apps are much safer, more stable, and less vulnerable to security risks.

CONS

Of course, native apps are not perfect, and we can easily find examples of ideas when a hybrid app would be a better choice.

Native apps, of course, have a separate codebase for Android and iOS. This means we have (at least) two teams to manage one project. This also reflects bug fixing – it's much easier and takes less time to find and fix bugs if we have one code base.

You should also consider the time you have to create an app. Developing on multiple platforms means a more extended development schedule and, as a result, higher development costs. Therefore, native app development quickly eats up resources.

Go hybrid?

You always have a choice 😊 A native app is a clever idea, but is it always the best one? And what exactly is a hybrid app? A hybrid app is a software application that combines elements of both native apps and web applications. Simply put, it is a technology where we share the codebase between platforms – Android and iOS. The most popular frameworks are:

- React Native

- Flutter

- Xamarin

- KMM (soon 😉)

Let's discuss the pros and cons of hybrid apps.

PROS

Of course, the most significant advantage of hybrid apps is that they can be used across platforms and devices – they share one code base. Cool, isn't it? This means easier updates, bug fixes, and maintenance. Also, development is much simpler and quicker because we do not have to build from the ground up for each platform.

A crucial thing is the deployment process. Hybrid apps can be deployed much faster than native apps, which can be extremely helpful, especially in big, complicated projects.

Regarding performance, reduced time frames equate to reduced resource drain. We should keep that in mind.

Finally - Hybrid apps can take advantage of dynamic web content. If you plan to create a big social platform – mobile app, web page, and so on – you should consider a hybrid app.

CONS

Looks interesting, right? Well, it is not an ideal solution. Like everything else – it has some limitations.

As we mentioned, some features rely 100% on device-specific components or hardware, like cameras, Bluetooth, etc. To use such a feature in a hybrid application, we need to use plugins or libraries, or create them ourselves. That can be time-consuming and can also present security risks.

The second important thing is user experience - it can suffer as hybrid apps cannot take full advantage of the platform's UI.

The last thing - being unable to take full advantage of the hardware sometimes hurts the performance and security of our applications.

There is one proposition that eliminates most of our cons – KMM, Kotlin Multiplatform Mobile. Its significant advantage is that we share the whole business logic between platforms – Android and iOS using Kotlin language. We create separate native layouts and UI layers for each platform. But of course – there is a problem 😊 KMM is still in the alpha phase, and, in my opinion, it is not production ready yet. But we should keep an eye on that as it might be a revolution.

Summary

It is not an easy task to choose the best technology. We should consider many varied factors that can impact our app. Here are key takeaways for both solutions – native and hybrid.

Native apps: Key takeaways

Native apps provide the best stability and security. They will tend to perform faster and be able to handle the most demanding tasks. This kind of application is best placed to use specific devices' hardware functionality. The user experience is smooth and featureful.

Hybrid apps: Key takeaways

Hybrid apps are easy to get onto iOS and Android. By utilizing a single codebase, you can reduce budget and time costs.

Cloud-native applications: 5 things you should know before you start

Introduction

Building cloud-native applications is a direction in IT that companies like Facebook, Netflix or Amazon have been following for years. The trend allows enterprises to develop and deploy apps more efficiently by means of cloud services and provides all sorts of run-time platform capabilities like scalability, performance and security. Thanks to this, developers can spend more time delivering functionality and speed up innovation. What leaves the competition further behind than introducing new features at a global scale according to customer needs? You either keep up with the pace of the changing world or you don’t. The aim of this article is to present and explain the top 5 elements of cloud-native applications.

Why do you need cloud-native applications in the first place?

It is safe to say that the world we live in has gone digital. We communicate on Facebook, watch movies on Netflix, store our documents on Google Drive and at least a certain percentage of us shop on Amazon. It shouldn’t be a surprise that business demands are on a constant rise when it comes to customer expectations. Enterprises need a high-performance IT organization to be on top of this crowded marketplace.

Throughout the last 20 years the world has witnessed an array of developments in technology as well as people & culture. All these improvements took place in order to start delivering software faster. Automation, continuous integration & delivery to DevOps and microservice architecture patterns also serve that purpose. But still, quite frequently teams have to wait for infrastructure to become available, which significantly slows down the delivery line.

Some try to automate infrastructure provisioning or make an attempt towards DevOps. However, if the delivery of the infrastructure relies on a team that works remotely and can’t keep up with your speed, automated infrastructure provisioning will not be of much help. The recent rise of cloud computing has shown that infrastructure can be made available at a nearly infinite scale. IT departments are able to deliver their own infrastructure just as fast as if they were doing their regular online shopping on Amazon. On top of that, cloud infrastructure is simply cost efficient, as it doesn’t need tons of capital investment in advance. It represents a transparent pay-as-you-go model. Which is exactly why this kind of infrastructure has won its popularity among startups or innovation departments where a solution that tries out new products quickly is a golden ticket. So, what IS there to know before you dive in?

The ingredients of cloud-native apps, and what makes them native?

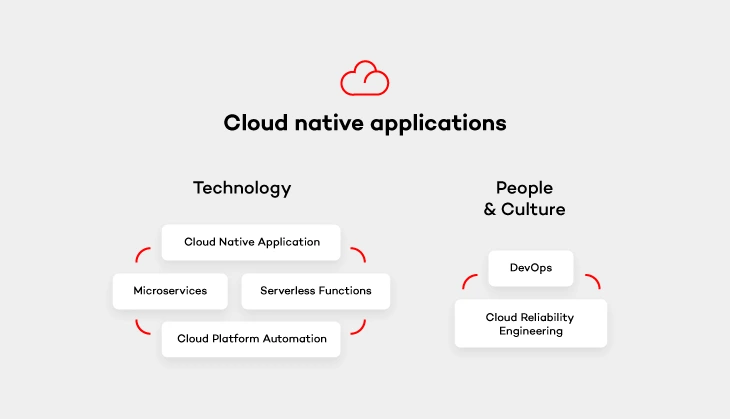

Now that we have explained the need for cloud-native apps, it is worth shedding some light on the definition, especially because the term is more popular than it is well understood. Cloud-native apps are applications that have been built to live and run in the cloud. If you want to get a better understanding of what that means, I recommend reading on. There are 5 elements divided into 2 categories (excluding the application itself) that are essential for creating cloud-native environments.

Cloud platform automation

This is the natural habitat in which cloud-native applications live. It provides services that keep the application running and manage security and networking. As stated above, the flexibility of such a cloud platform and its cost efficiency, thanks to the pay-as-you-go model, are perfect for enterprises that don’t want to pay through the nose for infrastructure from the very beginning.

Serverless functions

These are small, single-purpose functions that allow you to build asynchronous, event-driven architectures by means of microservices. Don’t let the "serverless" part of their name mislead you, though. Your code WILL run on servers. The only difference is that it is the cloud provider’s job to spin up instances to run your code and to scale out under load. Cloud providers offer plenty of serverless services that cloud-native apps can use. Services like IoT, Big Data or data storage are built from open-source solutions. This means that you don’t have to take care of a complex platform or deal with the pains of installation and configuration, and can focus on the functionality itself instead.
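
To make the idea of a small, single-purpose function more concrete, here is a minimal sketch in Python. It assumes the `(event, context)` handler convention that is common on function-as-a-service platforms. The function name, the shape of the event and the order fields are hypothetical and chosen only for illustration, not taken from any particular provider.

```python
import json


def handle_order_created(event, context=None):
    """Single-purpose function: compute the total of one incoming order.

    `event` is a hypothetical payload with a JSON string under "body".
    `context` stands in for the runtime metadata a platform would pass in;
    it is unused here.
    """
    order = json.loads(event["body"])

    # The function keeps no state between invocations, so the platform
    # is free to run as many copies in parallel as the load requires.
    total = sum(item["price"] * item["quantity"] for item in order["items"])

    return {
        "statusCode": 200,
        "body": json.dumps({"order_id": order["id"], "total": total}),
    }
```

The function does one thing and holds no state of its own. That is what lets the provider start and stop instances of it on demand.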

Microservices architecture pattern

The microservices architecture pattern aims to provide self-contained software products, each implementing a single-purpose function, that can be developed, tested and deployed separately to speed up the product’s time to market. This is in line with what cloud-native apps offer.

Once you get down to designing microservices, remember to design them so that they can run cloud native. One of the biggest challenges you will most likely come across is running your app in a distributed environment, across many application instances. Luckily, there are the 12-factor app design principles, which you can follow with peace of mind. They will help you design microservices that can run cloud native.
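
One of the 12-factor principles is to keep configuration in the environment rather than in the code, so that the same build can run unchanged on every instance. A minimal sketch of that idea in Python could look as follows. The variable names `DATABASE_URL`, `PORT` and `LOG_LEVEL`, as well as the default values, are assumptions made for this example.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    database_url: str
    port: int
    log_level: str


def load_settings(env=None):
    """Build the service configuration from environment variables.

    `env` defaults to os.environ; passing a plain dict makes the
    function easy to test. All variable names are hypothetical.
    """
    env = os.environ if env is None else env
    return Settings(
        database_url=env["DATABASE_URL"],         # required: KeyError if missing
        port=int(env.get("PORT", "8080")),        # optional, with a default
        log_level=env.get("LOG_LEVEL", "INFO"),   # optional, with a default
    )
```

Because nothing environment-specific is hard-coded, every instance of the service can be started from the same artifact and differ only in the variables the platform injects.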

DevOps culture

The journey with cloud-native applications comes not only with a change in technology, but also with a change in culture. Following DevOps principles is essential for cloud-native apps: getting the whole software delivery pipeline to work automatically is only possible if development and operations teams cooperate closely. Development engineers are in charge of getting the application to run on the cloud platform, while operations engineers handle the development, operation and automation of that platform.

Cloud reliability engineering

Last but not least, there is cloud reliability engineering, which comes from Google’s Site Reliability Engineering (SRE) concept. It is based on approaching platform administration in the same way as software engineering. As stated by Ben Treynor, VP of Engineering at Google: "Fundamentally, it’s what happens when you ask a software engineer to design an operations function (…). So SRE is fundamentally doing work that has historically been done by an operations team, but using engineers with software expertise, and banking on the fact that these engineers are inherently both predisposed to, and have the ability to, substitute automation for human labor."

Treynor also notes that the role of an SRE team resembles that of many operations teams today, yet the way SRE teams work is significantly different for several reasons. SRE people are software engineers with software development skills and a proclivity for automation. They know enough about programming languages, data structures, algorithms and performance. The result? They can create software that is more effective.

Summary

Cloud-native applications are one of the reasons why big players such as Facebook or Amazon stay ahead of the competition. This article sums up the most important elements that comprise them. Of course, the process of building cloud-native apps goes far beyond choosing the tools. The importance of your team (People & Culture) can never be stressed enough. So what are the final thoughts? Advocate DevOps and follow Google’s Site Reliability Engineering principles to make your company efficient and your platform more reliable. You will be surprised at how fast cloud-native will help your organization become successful.
