Tips on mastering the art of learning new technologies and transitioning between them

Table of contents

Schedule a consultation with software experts

Contact usLearning new technologies is always challenging, but it is also crucial for self-development and broadening professional skills in today's rapidly developing IT world.

The process may be split into two phases: learning the basics and increasing our expertise in areas where we already have the basic knowledge . The first phase is more challenging and demanding. It often involves some mind-shifting and requires us to build a new knowledge system on which we don't have any information. On the other hand, the second phase, often called "upskilling," is the most important as it gives us the ability to develop real-world projects.

To learn effectively, it's important to organize the process of acquiring knowledge properly. There are several key things to consider when planning day-to-day learning. Let's define the basic principles and key points of how to apprehend new programming technologies. First, we'll describe the structure of the learning process.

Structure of the process of learning new technologies

Set a global but concrete goal

A good goal helps you stay driven. It's important to keep motivation high, especially in the learning basics phase, where we acquire new knowledge, which is often tedious. The goal should be concrete, e.g., "Learn AWS to build more efficient infrastructure," "Learn Spring Boot to develop more robust web apps," or "Learn Kubernetes to work on modern and more interesting projects." Focusing on such goals gives us the feeling that we know precisely why we keep progressing. This approach is especially helpful when we work on a project while learning at the same time, and it helps keep us motivated.

Set a technical task

Practical skills are the whole point of learning new technologies. Training in a new programming language or theoretical framework is only a tool that lays the foundation for practical application.

The best way to learn new technology is by practicing it. For this purpose, it’s helpful to set up test projects. Imagine which real-world task fits the framework or language you’re learning and try to write test projects for such a task. It should be a simplified version of an actual hypothetical project.

Focus on a small specific area that is currently being studied. Use it in the project, simplifying the rest of the setup or code. E.g., if you learn some new database framework, you should design entities, the code that does queries, etc, precisely and with attention to detail. At the same time, do only the minimum for the rest of the app. E.g., application configuration may be a basic or out-of-the-box solution provided by the framework.

Use a local or in-memory database. Don't spend too much time on setup or writing complicated business logic code for the service or controller layer. This will allow you to focus on learning the subject you’re interested in. If possible, it's also a good idea to keep developing the test app by adding another functionality that utilizes new knowledge as you walk through the learning path (rather than writing another new test project from scratch for each topic).

Theoretical knowledge learning

Theoretical knowledge is the background for practical work with a framework or programming language. This means we should not neglect it. Some core theoretical knowledge is always required to use technology. This is especially important when we learn something completely new. We should first understand how to think in terms of the paradigms or rules of the technology we're learning. Do some mind-shifting to understand the technology and its key concepts.

You need to develop your understanding of the basis on which the technology is built to such a level that you will be able to associate new knowledge with that base and understand how it works in relation to it. For example, functional programming would be a completely different and unknown concept for someone who previously worked only using OOP. To start using functional programming, it's not enough to know what a function is; we have to understand main concepts like lambda calculus, pure functions, high-level functions, etc., at least at a basic level.

Create a detailed plan for learning new technologies

Since we learn step by step, we need to define how to decompose our learning path for technology into smaller chunks. Breaking down the topics into smaller pieces can make it easier to learn. Rather than "learn Spring Boot" or "learn DB access with Spring," we should follow more granular steps like "learn Spring IoC," "learn Spring AOP," and "learn JPA."

A more detailed plan can make the learning process easier. While it may be clear that the initial steps should cover the basics and become more advanced with each topic, determining the order in which to learn each topic can be tricky. A properly made learning plan guarantees that our knowledge will be well-structured, and all the important information will be covered. Having such a plan also helps track progress.

When planning, it is beneficial to utilize modern tools that can help visualize the structure of the learning path. Tools such as Trello, which organizes work into boards, task lists, and workspaces, can be especially helpful. These tools enable us to visualize learning paths and track progress as we learn.

Revisit previous topics

When learning about technologies, topics can often have complex dependencies, sometimes even cyclical. This means that to learn one topic, we may need to have some understanding of another. It sounds complicated, but don’t let it worry you – our brain can deal with way more complex things.

When we learn, we initially understand the first topic on a basic level, which allows us to move on to the next topics. However, as we continue to learn and acquire further knowledge, our understanding of the initial topic becomes deeper. Revisiting previous topics can help us achieve a deeper and better understanding of the technology.

Tips on learning strategies

Now, let's explore some helpful tips for learning new technologies. These suggestions are more general and aimed at making the learning process more efficient.

Overcome reluctance

Extending our thinking or adding new concepts to what we know requires much effort. So, it's natural for our brain to resist. When we learn something new, we may feel uncomfortable because we don't understand the whole technology, even in general, and we see how much knowledge there is to be acquired within it.

Here are some tips that may be useful to make the learning process easier and less tiring.

1) Don't try to learn as much as possible. Focus on the basics within each topic first. Then, learn the key things required to its practical application. As you study more advanced topics, revisit some of the previous ones. This allows you to get a better and deeper understanding of the material.

2) Try to find some interesting topic that you enjoy exploring at the moment. If you have multiple options, pick the one you like the most. Learning is much more efficient if we study something that interests us. While working on some feature or task in the current or past project, you were probably curious about how certain things worked. Or you just like the architectural approach or pattern to the specific task. Finding a similar topic or concept in your learning plan is the best way to utilize such curiosity. Even if it's a bit more advanced, your brain will show more enthusiasm for such material than for something that is simpler but seems dull.

3) Split the task into intermediate, smaller goals. Learning small steps rather than deep diving into large topics is easier. The feeling of having accomplished intermediate goals helps to retain motivation and move forward.

4) Track the progress. It's not just a formality. It helps to ensure your velocity and keep track of the timing. If some steps take more time, tracking progress will allow you to adjust the learning plan accordingly. Moreover, observing the progress provides a feeling of satisfaction about the intermediate achievements.

5) Ask questions, even about the basics. When you learn, there is no such thing as a stupid question. If you feel stuck with some step of the learning plan, searching for help from others will speed up the process.

Learn new technologies systematically and frequently

Learning new technologies frequently with smaller portions is often more effective. Timing is crucial when acquiring new knowledge, and it may not be efficient to learn immediately after a long, intensive workday as our brains may feel exhausted. If we are working on projects in parallel with learning, we could set aside time in the morning to focus on learning. Alternatively, we could learn after work hours, but only after resting and if the tasks during our workday do not require too much concentration or mental effort.

Frequency is also important. E.g., an all-day learning session only one day per week is not as effective as spending 1-2 hours each day on acquiring knowledge. But it's just an example. Everyone should determine the optimal learning time-to-frequency ratio on their own, as it varies from person to person.

Experiment with new technology

A great way to learn is by exploring new technology. Trying to do the same task with different approaches gives a better understanding of how we may use the framework or programming language. Experimentation can improve our understanding of technology in a similar way to gaining experience on real projects. We can improve our knowledge and understanding of the technology by solving similar tasks while working on different projects or features.

Explain it to others

Explaining to someone else helps us structure and organize our own knowledge. Even if we don't fully understand the topic, explaining it may help us connect the dots. The trick here is that when we describe something to someone, we repeat and rethink our knowledge. In such a way, we consolidate some parts that we know but don't fully understand, or that simply aren't connected.

Summary

This article described the key points of organizing how to learn new technologies. We described the main approaches to structuring learning and provided some steps to take. A properly structured and well-organized learning process makes gaining new technical knowledge efficient and easy.

Grape Up guides enterprises on their data-driven transformation journey

Ready to ship? Let's talk.

Check related articles

Read our blog and stay informed about the industry's latest trends and solutions.

Generative AI for developers - our comparison

So, it begins… Artificial intelligence comes into play for all of us. It can propose a menu for a party, plan a trip around Italy, draw a poster for a (non-existing) movie, generate a meme, compose a song, or even "record" a movie. Can Generative AI help developers? Certainly, but….

In this article, we will compare several tools to show their possibilities. We'll show you the pros, cons, risks, and strengths. Is it usable in your case? Well, that question you'll need to answer on your own.

The research methodology

It's rather impossible to compare available tools with the same criteria. Some are web-based, some are restricted to a specific IDE, some offer a "chat" feature, and others only propose a code. We aimed to benchmark tools in a task of code completion, code generation, code improvements, and code explanation. Beyond that, we're looking for a tool that can "help developers," whatever it means.

During the research, we tried to write a simple CRUD application, and a simple application with puzzling logic, to generate functions based on name or comment, to explain a piece of legacy code, and to generate tests. Then we've turned to Internet-accessing tools, self-hosted models and their possibilities, and other general-purpose tools.

We've tried multiple programming languages – Python, Java, Node.js, Julia, and Rust. There are a few use cases we've challenged with the tools.

CRUD

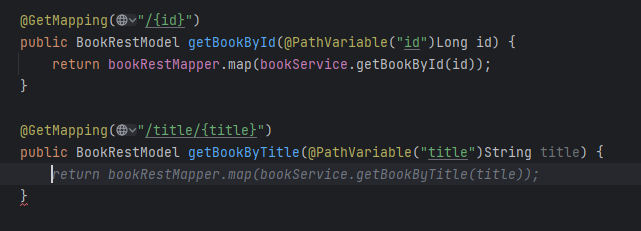

The test aimed to evaluate whether a tool can help in repetitive, easy tasks. The plan is to build a 3-layer Java application with 3 types (REST model, domain, persistence), interfaces, facades, and mappers. A perfect tool may build the entire application by prompt, but a good one would complete a code when writing.

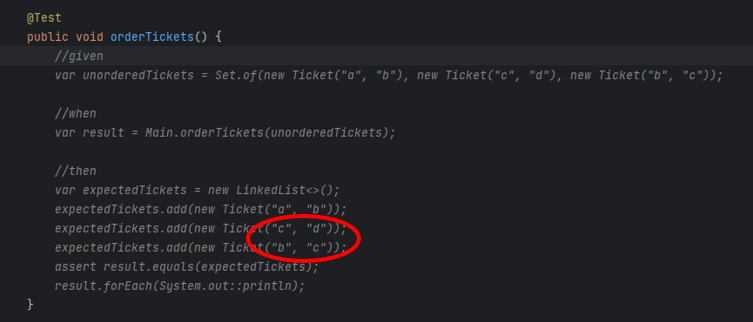

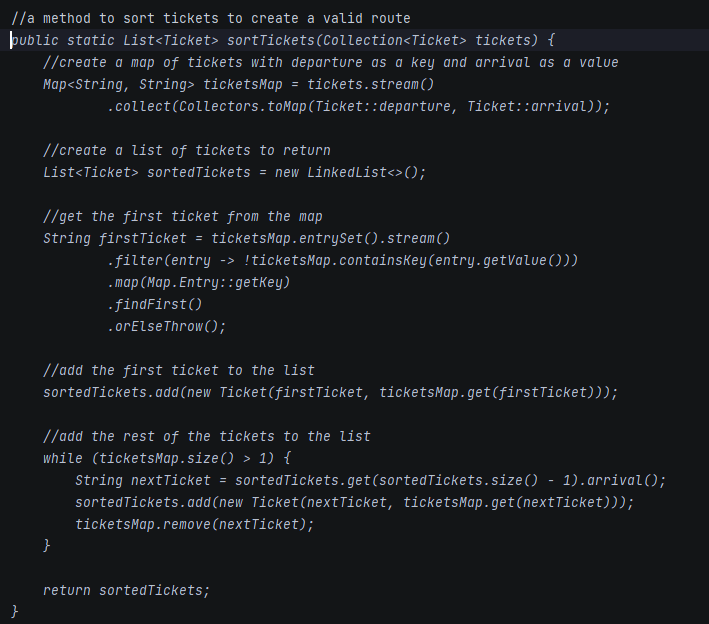

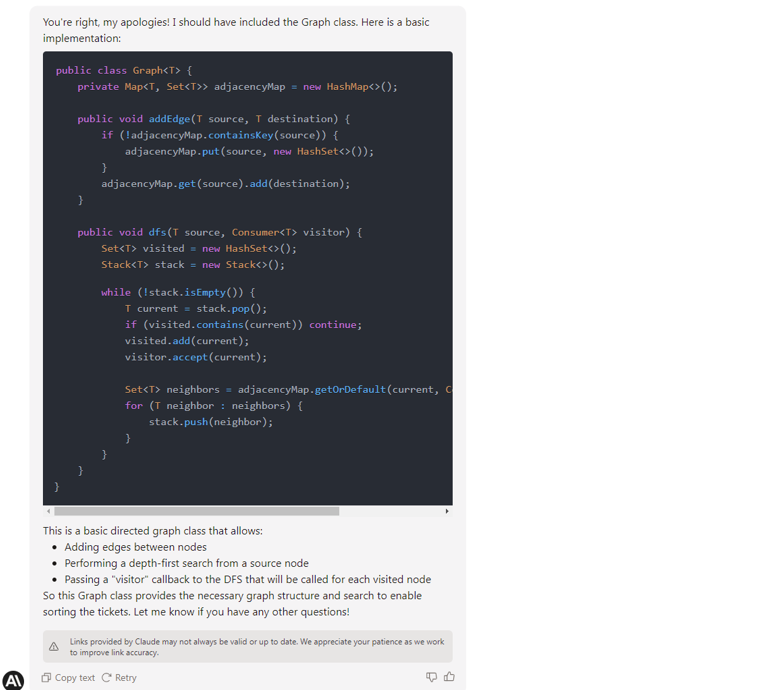

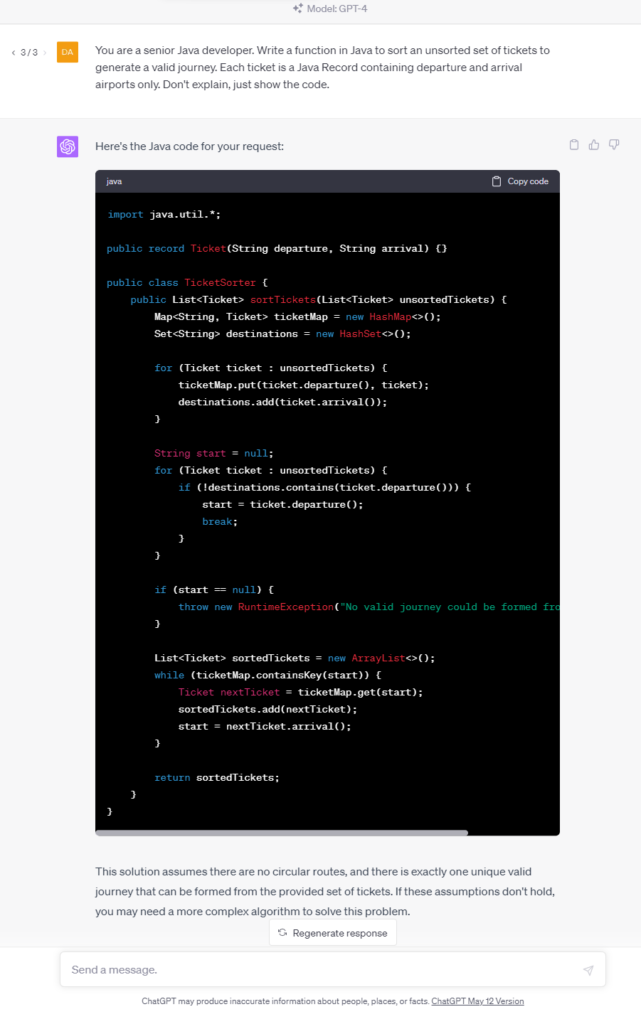

Business logic

In this test, we write a function to sort a given collection of unsorted tickets to create a route by arrival and departure points, e.g., the given set is Warsaw-Frankfurt, Frankfurt-London, Krakow-Warsaw, and the expected output is Krakow-Warsaw, Warsaw-Frankfurt, Frankfurt-London. The function needs to find the first ticket and then go through all the tickets to find the correct one to continue the journey.

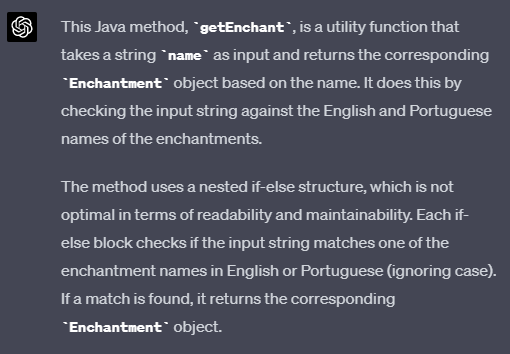

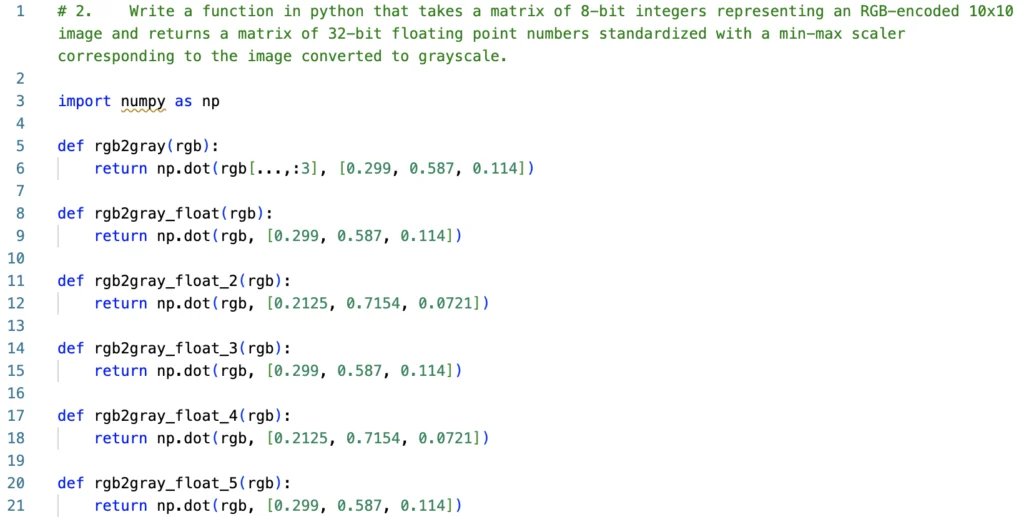

Specific-knowledge logic

This time we require some specific knowledge – the task is to write a function that takes a matrix of 8-bit integers representing an RGB-encoded 10x10 image and returns a matrix of 32-bit floating point numbers standardized with a min-max scaler corresponding to the image converted to grayscale. The tool should handle the standardization and the scaler with all constants on its own.

Complete application

We ask a tool (if possible) to write an entire "Hello world!" web server or a bookstore CRUD application. It seems to be an easy task due to the number of examples over the Internet; however, the output size exceeds most tools' capabilities.

Simple function

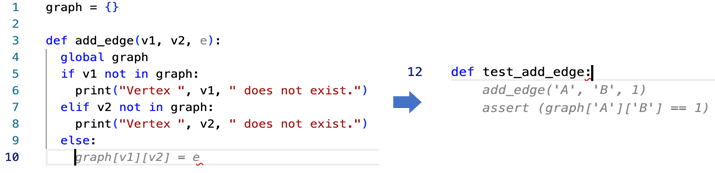

This time we expect the tool to write a simple function – to open a file and lowercase the content, to get the top element from the collection sorted, to add an edge between two nodes in a graph, etc. As developers, we write such functions time and time again, so we wanted our tools to save our time.

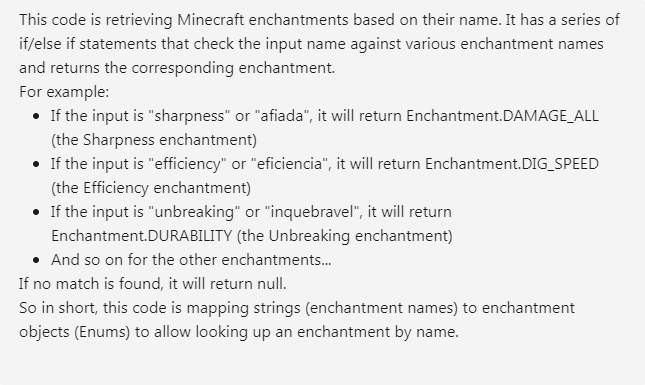

Explain and improve

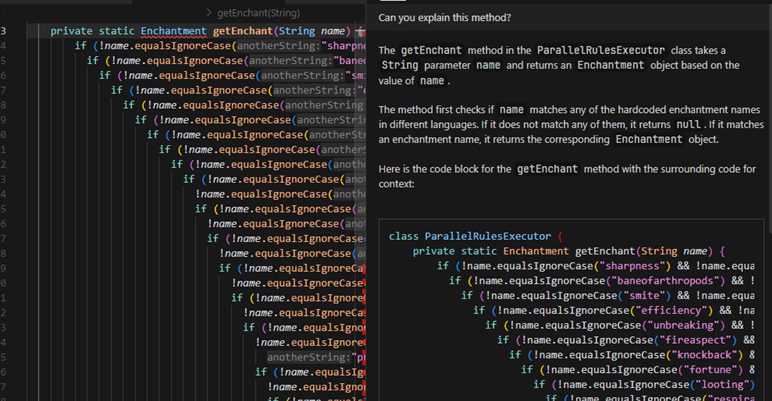

We had asked the tool to explain a piece of code:

- A method to run two tasks in parallel, merge results to a collection if successful, or fail fast if any task has failed,

- Typical arrow anti-pattern (example 5 from 9 Bad Java Snippets To Make You Laugh | by Milos Zivkovic | Dev Genius ),

- AWS R53 record validation terraform resource.

If possible, we also asked it to improve the code.

Each time, we have also tried to simply spend some time with a tool, write some usual code, generate tests, etc.

The generative AI tools evaluation

Ok, let's begin with the main dish. Which tools are useful and worth further consideration?

Tabnine

Tabnine is an "AI assistant for software developers" – a code completion tool working with many IDEs and languages. It looks like a state-of-the-art solution for 2023 – you can install a plugin for your favorite IDE, and an AI trained on open-source code with permissive licenses will propose the best code for your purposes. However, there are a few unique features of Tabnine.

You can allow it to process your project or your GitHub account for fine-tuning to learn the style and patterns used in your company. Besides that, you don't need to worry about privacy. The authors claim that the tuned model is private, and the code won't be used to improve the global version. If you're not convinced, you can install and run Tabnine on your private network or even on your computer.

The tool costs $12 per user per month, and a free trial is available; however, you're probably more interested in the enterprise version with individual pricing.

The good, the bad, and the ugly

Tabnine is easy to install and works well with IntelliJ IDEA (which is not so obvious for some other tools). It improves standard, built-in code proposals; you can scroll through a few versions and pick the best one. It proposes entire functions or pieces of code quite well, and the proposed-code quality is satisfactory.

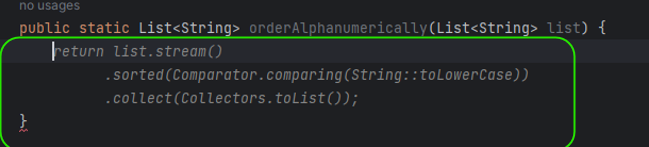

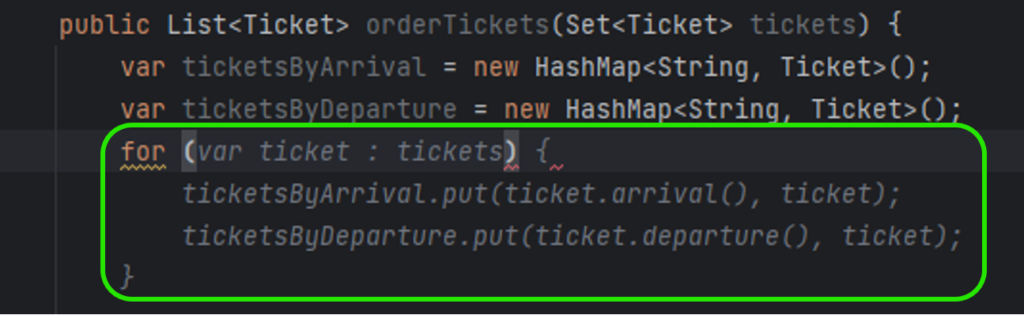

So far, Tabnine seems to be perfect, but there is also another side of the coin. The problem is the error rate of the code generated. In Figure 2, you can see ticket.arrival() and ticket.departure() invocations. It was my fourth or fifth try until Tabnine realized that Ticket is a Java record and no typical getters are implemented. In all other cases, it generated ticket.getArrival() and ticket.getDeparture() , even if there were no such methods and the compiler reported errors just after the propositions acceptance.

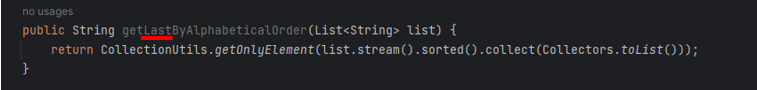

Another time, Tabnine omitted a part of the prompt, and the code generated was compilable but wrong. Here you can find a simple function that looks OK, but it doesn't do what was desired to.

There is one more example – Tabnine used a commented-out function from the same file (the test was already implemented below), but it changed the line order. As a result, the test was not working, and it took a while to determine what was happening.

It leads us to the main issue related to Tabnine. It generates simple code, which saves a few seconds each time, but it's unreliable, produces hard-to-find bugs, and requires more time to validate the generated code than saves by the generation. Moreover, it generates proposals constantly, so the developer spends more time reading propositions than actually creating good code.

Our rating

Conclusion: A mature tool with average possibilities, sometimes too aggressive and obtrusive (annoying), but with a little bit of practice, may also make work easier

‒ Possibilities 3/5

‒ Correctness 2/5

‒ Easiness 2,5/5

‒ Privacy 5/5

‒ Maturity 4/5

Overall score: 3/5

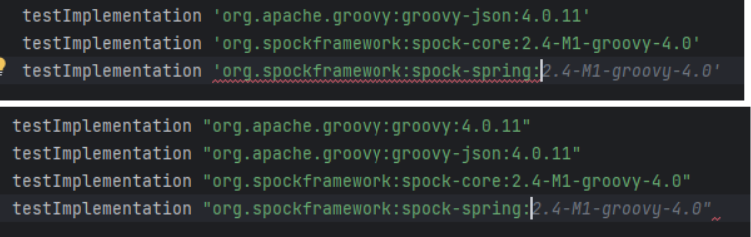

GitHub Copilot

This tool is state-of-the-art. There are tools "similar to GitHub Copilot," "alternative to GitHub Copilot," and "comparable to GitHub Copilot," and there is the GitHub Copilot itself. It is precisely what you think it is – a code-completion tool based on the OpenAI Codex model, which is based on GPT-3 but trained with publicly available sources, including GitHub repositories. You can install it as a plugin for popular IDEs, but you need to enable it on your GitHub account first. A free trial is available, and the standard license costs from $8,33 to $19 per user per month.

The good, the bad, and the ugly

It works just fine. It generates good one-liners and imitates the style of the code around.

Please note the Figure 6 - it not only uses closing quotas as needed but also proposes a library in the "guessed" version, as spock-spring.spockgramework.org:2.4-M1-groovy-4.0 is newer than the learning set of the model.

However, the code is not perfect.

In this test, the tool generated the entire method based on the comment from the first line of the listing. It decided to create a map of departures and arrivals as Strings, to re-create tickets when adding to sortedTickets, and to remove elements from ticketMaps. Simply speaking - I wouldn't like to maintain such a code in my project. GPT-4 and Claude do the same job much better.

The general rule of using this tool is – don't ask it to produce a code that is too long. As mentioned above – it is what you think it is, so it's just a copilot which can give you a hand in simple tasks, but you still take responsibility for the most important parts of your project. Compared to Tabnine, GitHub Copilot doesn't propose a bunch of code every few keys pressed, and it produces less readable code but with fewer errors, making it a better companion in everyday life.

Our rating

Conclusion: Generates worse code than GPT-4 and doesn't offer extra functionalities ("explain," "fix bugs," etc.); however, it's unobtrusive, convenient, correct when short code is generated and makes everyday work easier

‒ Possibilities 3/5

‒ Correctness 4/5

‒ Easiness 5/5

‒ Privacy 5/5

‒ Maturity 4/5

Overall score: 4/5

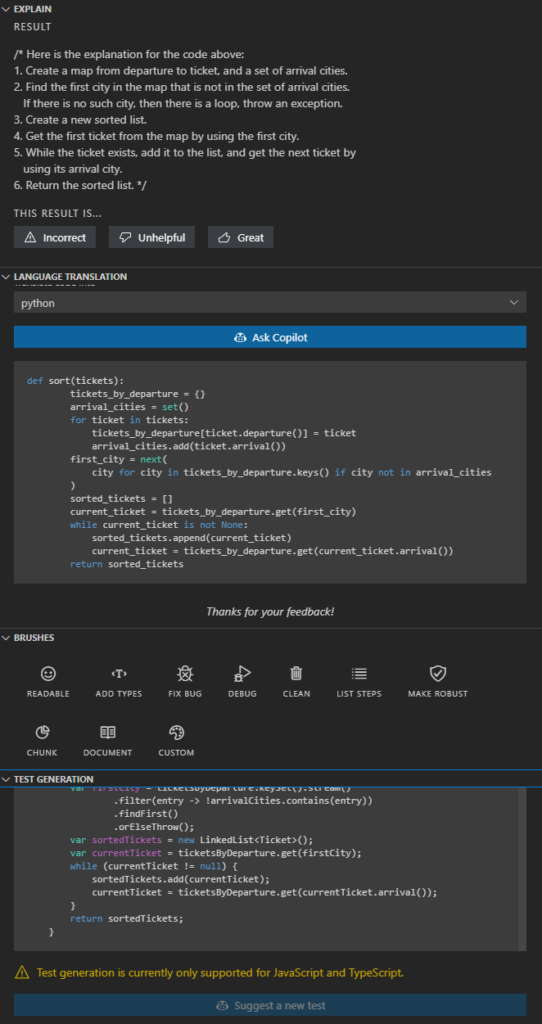

GitHub Copilot Labs

The base GitHub copilot, as described above, is a simple code-completion tool. However, there is a beta tool called GitHub Copilot Labs. It is a Visual Studio Code plugin providing a set of useful AI-powered functions: explain, language translation, Test Generation, and Brushes (improve readability, add types, fix bugs, clean, list steps, make robust, chunk, and document). It requires a Copilot subscription and offers extra functionalities – only as much, and so much.

The good, the bad, and the ugly

If you are a Visual Studio Code user and you already use the GitHub Copilot, there is no reason not to use the "Labs" extras. However, you should not trust it. Code explanation works well, code translation is rarely used and sometimes buggy (the Python version of my Java code tries to call non-existing functions, as the context was not considered during translation), brushes work randomly (sometimes well, sometimes badly, sometimes not at all), and test generation works for JS and TS languages only.

Our rating

Conclusion: It's a nice preview of something between Copilot and Copilot X, but it's in the preview stage and works like a beta. If you don't expect too much (and you use Visual Studio Code and GitHub Copilot), it is a tool for you.

‒ Possibilities 4/5

‒ Correctness 2/5

‒ Easiness 5/5

‒ Privacy 5/5

‒ Maturity 1/5

Overall score: 3/5

Cursor

Cursor is a complete IDE forked from Visual Studio Code open-source project. It uses OpenAI API in the backend and provides a very straightforward user interface. You can press CTRL+K to generate/edit a code from the prompt or CTRL+L to open a chat within an integrated window with the context of the open file or the selected code fragment. It is as good and as private as the OpenAI models behind it but remember to disable prompt collection in the settings if you don't want to share it with the entire World.

The good, the bad, and the ugly

Cursor seems to be a very nice tool – it can generate a lot of code from prompts. Be aware that it still requires developer knowledge – "a function to read an mp3 file by name and use OpenAI SDK to call OpenAI API to use 'whisper-1' model to recognize the speech and store the text in a file of same name and txt extension" is not a prompt that your accountant can make. The tool is so good that a developer used to one language can write an entire application in another one. Of course, they (the developer and the tool) can use bad habits together, not adequate to the target language, but it's not the fault of the tool but the temptation of the approach.

There are two main disadvantages of Cursor.

Firstly, it uses OpenAI API, which means it can use up to GPT-3.5 or Codex (for mid-May 2023, there is no GPT-4 API available yet), which is much worse than even general-purpose GPT-4. For example, Cursor asked to explain some very bad code has responded with a very bad answer.

For the same code, GPT-4 and Claude were able to find the purpose of the code and proposed at least two better solutions (with a multi-condition switch case or a collection as a dataset). I would expect a better answer from a developer-tailored tool than a general-purpose web-based chat.

Secondly, Cursor uses Visual Studio Code, but it's not just a branch of it – it's an entire fork, so it can be potentially hard to maintain, as VSC is heavily changed by a community. Besides that, VSC is as good as its plugins, and it works much better with C, Python, Rust, and even Bash than Java or browser-interpreted languages. It's common to use specialized, commercial tools for specialized use cases, so I would appreciate Cursor as a plugin for other tools rather than a separate IDE.

There is even a feature available in Cursor to generate an entire project by prompt, but it doesn't work well so far. The tool has been asked to generate a CRUD bookstore in Java 18 with a specific architecture. Still, it has used Java 8, ignored the architecture, and produced an application that doesn't even build due to Gradle issues. To sum up – it's catchy but immature.

The prompt used in the following video is as follows:

"A CRUD Java 18, Spring application with hexagonal architecture, using Gradle, to manage Books. Each book must contain author, title, publisher, release date and release version. Books must be stored in localhost PostgreSQL. CRUD operations available: post, put, patch, delete, get by id, get all, get by title."

https://www.youtube.com/watch?v=Q2czylS2i-E

The main problem is – the feature has worked only once, and we were not able to repeat it.

Our rating

Conclusion: A complete IDE for VS-Code fans. Worth to be observed, but the current version is too immature.

‒ Possibilities 5/5

‒ Correctness 2/5

‒ Easiness 4/5

‒ Privacy 5/5

‒ Maturity 1/5

Overall score: 2/5

Amazon CodeWhisperer

CodeWhisperer is an AWS response to Codex. It works in Cloud9 and AWS Lambdas, but also as a plugin for Visual Studio Code and some JetBrains products. It somehow supports 14 languages with full support for 5 of them. By the way, most tool tests work better with Python than Java – it seems AI tool creators are Python developers🤔. CodeWhisperer is free so far and can be run on a free tier AWS account (but it requires SSO login) or with AWS Builder ID.

The good, the bad, and the ugly

There are a few positive aspects of CodeWhisperer. It provides an extra code analysis for vulnerabilities and references, and you can control it with usual AWS methods (IAM policies), so you can decide about the tool usage and the code privacy with your standard AWS-related tools.

However, the quality of the model is insufficient. It doesn't understand more complex instructions, and the code generated can be much better.

For example, it has simply failed for the case above, and for the case below, it proposed just a single assertion.

Our rating

Conclusion: Generates worse code than GPT-4/Claude or even Codex (GitHub Copilot), but it's highly integrated with AWS, including permissions/privacy management

‒ Possibilities 2.5/5

‒ Correctness 2.5/5

‒ Easiness 4/5

‒ Privacy 4/5

‒ Maturity 3/5

Overall score: 2.5/5

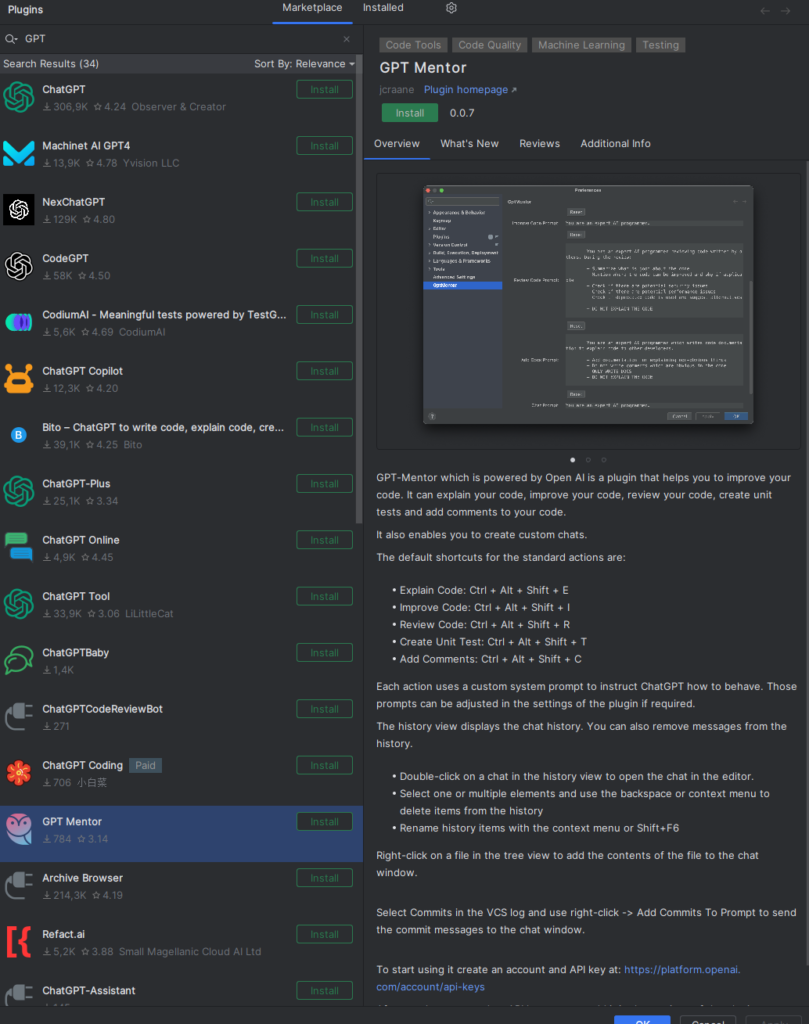

Plugins

As the race for our hearts and wallets has begun, many startups, companies, and freelancers want to participate in it. There are hundreds (or maybe thousands) of plugins for IDEs that send your code to OpenAI API.

You can easily find one convenient to you and use it as long as you trust OpenAI and their privacy policy. On the other hand, be aware that your code will be processed by one more tool, maybe open-source, maybe very simple, but it still increases the possibility of code leaks. The proposed solution is – to write an own plugin. There is a space for one more in the World for sure.

Knocked out tools

There are plenty of tools we've tried to evaluate, but those tools were too basic, too uncertain, too troublesome, or simply deprecated, so we have decided to eliminate them before the full evaluation. Here you can find some examples of interesting ones but rejected.

Captain Stack

According to the authors, the tool is "somewhat similar to GitHub Copilot's code suggestion," but it doesn't use AI – it queries your prompt with Google, opens Stack Overflow, and GitHub gists results and copies the best answer. It sounds promising, but using it takes more time than doing the same thing manually. It doesn't provide any response very often, doesn't provide the context of the code sample (explanation given by the author), and it has failed all our tasks.

IntelliCode

The tool is trained on thousands of open-source projects on GitHub, each with high star ratings. It works with Visual Studio Code only and suffers from poor Mac performance. It is useful but very straightforward – it can find a proper code but doesn't work well with a language. You need to provide prompts carefully; the tool seems to be just an indexed-search mechanism with low intelligence implemented.

Kite

Kite was an extremely promising tool in development since 2014, but "was" is the keyword here. The project was closed in 2022, and the authors' manifest can bring some light into the entire developer-friendly Generative AI tools: Kite is saying farewell - Code Faster with Kite . Simply put, they claimed it's impossible to train state-of-the-art models to understand more than a local context of the code, and it would be extremely expensive to build a production-quality tool like that. Well, we can acknowledge that most tools are not production-quality yet, and the entire reliability of modern AI tools is still quite low.

GPT-Code-Clippy

The GPT-CC is an open-source version of GitHub Copilot. It's free and open, and it uses the Codex model. On the other hand, the tool has been unsupported since the beginning of 2022, and the model is deprecated by OpenAI already, so we can consider this tool part of the Generative AI history.

CodeGeeX

CodeGeeX was published in March 2023 by Tsinghua University's Knowledge Engineering Group under Apache 2.0 license. According to the authors, it uses 13 billion parameters, and it's trained on public repositories in 23 languages with over 100 stars. The model can be your self-hosted GitHub Copilot alternative if you have at least Nvidia GTX 3090, but it's recommended to use A100 instead.

The online version was occasionally unavailable during the evaluation, and even when available - the tool failed on half of our tasks. There was no even a try, and the response from the model was empty. Therefore, we've decided not to try the offline version and skip the tool completely.

GPT

Crème de la crème of the comparison is the OpenAI flagship - generative pre-trained transformer (GPT). There are two important versions available for today – GPT-3.5 and GPT-4. The former version is free for web users as well as available for API users. GPT-4 is much better than its predecessor but is still not generally available for API users. It accepts longer prompts and "remembers" longer conversations. All in all, it generates better answers. You can give a chance of any task to GPT-3.5, but in most cases, GPT-4 does the same but better.

So what can GPT do for developers?

We can ask the chat to generate functions, classes, or entire CI/CD workflows. It can explain the legacy code and propose improvements. It discusses algorithms, generates DB schemas, tests, UML diagrams as code, etc. It can even run a job interview for you, but sometimes it loses the context and starts to chat about everything except the job.

The dark side contains three main aspects so far. Firstly, it produces hard-to-find errors. There may be an unnecessary step in CI/CD, the name of the network interface in a Bash script may not exist, a single column type in SQL DDL may be wrong, etc. Sometimes it requires a lot of work to find and eliminate the error; what is more important with the second issue – it pretends to be unmistakable. It seems so brilliant and trustworthy, so it's common to overrate and overtrust it and finally assume that there is no error in the answer. The accuracy and purity of answers and deepness of knowledge showed made an impression that you can trust the chat and apply results without meticulous analysis.

The last issue is much more technical – GPT-3.5 can accept up to 4k tokens which is about 3k words. It's not enough if you want to provide documentation, an extended code context, or even requirements from your customer. GPT-4 offers up to 32k tokens, but it's unavailable via API so far.

There is no rating for GPT. It's brilliant, and astonishing, yet still unreliable, and it still requires a resourceful operator to make correct prompts and analyze responses. And it makes operators less resourceful with every prompt and response because people get lazy with such a helper. During the evaluation, we've started to worry about Sarah Conor and her son, John, because GPT changes the game's rules, and it is definitely a future.

OpenAI API

Another side of GPT is the OpenAI API. We can distinguish two parts of it.

Chat models

The first part is mostly the same as what you can achieve with the web version. You can use up to GPT-3.5 or some cheaper models if applicable to your case. You need to remember that there is no conversation history, so you need to send the entire chat each time with new prompts. Some models are also not very accurate in "chat" mode and work much better as a "text completion" tool. Instead of asking, "Who was the first president of the United States?" your query should be, "The first president of the United States was." It's a different approach but with similar possibilities.

Using the API instead of the web version may be easier if you want to adapt the model for your purposes (due to technical integration), but it can also give you better responses. You can modify "temperature" parameters making the model stricter (even providing the same results on the same requests) or more random. On the other hand, you're limited to GPT-3.5 so far, so you can't use a better model or longer prompts.

Other purposes models

There are some other models available via API. You can use Whisper as a speech-to-text converter, Point-E to generate 3D models (point cloud) from prompts, Jukebox to generate music, or CLIP for visual classification. What's important – you can also download those models and run them on your own hardware at costs. Just remember that you need a lot of time or powerful hardware to run the models – sometimes both.

There is also one more model not available for downloading – the DALL-E image generator. It generates images by prompts, doesn't work with text and diagrams, and is mostly useless for developers. But it's fancy, just for the record.

The good part of the API is the official library availability for Python and Node.js, some community-maintained libraries for other languages, and the typical, friendly REST API for everybody else.

The bad part of the API is that it's not included in the chat plan, so you pay for each token used. Make sure you have a budget limit configured on your account because using the API can drain your pockets much faster than you expect.

Fine-tuning

Fine-tuning of OpenAI models is de facto a part of the API experience, but it desires its own section in our deliberations. The idea is simple – you can use a well-known model but feed it with your specific data. It sounds like medicine for token limitation. You want to use a chat with your domain knowledge, e.g., your project documentation, so you need to convert the documentation to a learning set, tune a model, and you can use the model for your purposes inside your company (the fine-tunned model remains private at company level).

Well, yes, but actually, no.

There are a few limitations to consider. The first one – the best model you can tune is Davinci, which is like GPT-3.5, so there is no way to use GPT-4-level deduction, cogitation, and reflection. Another issue is the learning set. You need to follow very specific guidelines to provide a learning set as prompt-completion pairs, so you can't simply provide your project documentation or any other complex sources. To achieve better results, you should also keep the prompt-completion approach in further usage instead of a chat-like question-answer conversation. The last issue is cost efficiency. Teaching Davinci with 5MB of data costs about $200, and 5MB is not a great set, so you probably need more data to achieve good results. You can try to reduce cost by using the 10 times cheaper Curie model, but it's also 10 times smaller (more like GPT-3 than GPT-3.5) than Davinci and accepts only 2k tokens for a single question-answer pair in total.

Embedding

Another feature of the API is called embedding. It's a way to change the input data (for example, a very long text) into a multi-dimensional vector. You can consider this vector a representation of your knowledge in a format directly understandable by the AI. You can save such a model locally and use it in the following scenarios: data visualization, classification, clustering, recommendation, and search. It's a powerful tool for specific use cases and can solve business-related problems. Therefore, it's not a helper tool for developers but a potential base for an engine of a new application for your customer.

Claude

Claude from Anthropic, an ex-employees of OpenAI, is a direct answer to GPT-4. It offers a bigger maximum token size (100k vs. 32k), and it's trained to be trustworthy, harmless, and better protected from hallucinations. It's trained using data up to spring 2021, so you can't expect the newest knowledge from it. However, it has passed all our tests, works much faster than the web GPT-4, and you can provide a huge context with your prompts. For some reason, it produces more sophisticated code than GPT-4, but It's on you to pick the one you like more.

If needed, a Claude API is available with official libraries for some popular languages and the REST API version. There are some shortcuts in the documentation, the web UI has some formation issues, there is no free version available, and you need to be manually approved to get access to the tool, but we assume all of those are just childhood problems.

Claude is so new, so it's really hard to say if it is better or worse than GPT-4 in a job of a developer helper, but it's definitely comparable, and you should probably give it a shot.

Unfortunately, the privacy policy of Anthropic is quite confusing, so we don't recommend posting confidential information to the chat yet.

Internet-accessing generative AI tools

The main disadvantage of ChatGPT, raised since it has generally been available, is no knowledge about recent events, news, and modern history. It's already partially fixed, so you can feed a context of the prompt with Internet search results. There are three tools worth considering for such usage.

Microsoft Bing

Microsoft Bing was the first AI-powered Internet search engine. It uses GPT to analyze prompts and to extract information from web pages; however, it works significantly worst than pure GPT. It has failed in almost all our programming evaluations, and it falls into an infinitive loop of the same answers if the problem is concealed. On the other hand, it provides references to the sources of its knowledge, can read transcripts from YouTube videos, and can aggregate the newest Internet content.

Chat-GPT with Internet access

The new mode of Chat-GPT (rolling out for premium users in mid-May 2023) can browse the Internet and scrape web pages looking for answers. It provides references and shows visited pages. It seems to work better than Bing, probably because it's GPT-4 powered compared to GPT-3.5. It also uses the model first and calls the Internet only if it can't provide a good answer to the question-based trained data solitary.

It usually provides better answers than Bing and may provide better answers than the offline GPT-4 model. It works well with questions you can answer by yourself with an old-fashion search engine (Google, Bing, whatever) within one minute, but it usually fails with more complex tasks. It's quite slow, but you can track the query's progress on UI.

Importantly, and you should keep this in mind, Chat-GPT sometimes provides better responses with offline hallucinations than with Internet access.

For all those reasons, we don't recommend using Microsoft Bing and Chat-GPT with Internet access for everyday information-finding tasks. You should only take those tools as a curiosity and query Google by yourself.

Perplexity

At first glance, Perplexity works in the same way as both tools mentioned – it uses Bing API and OpenAI API to search the Internet with the power of the GPT model. On the other hand, it offers search area limitations (academic resources only, Wikipedia, Reddit, etc.), and it deals with the issue of hallucinations by strongly emphasizing citations and references. Therefore, you can expect more strict answers and more reliable references, which can help you when looking for something online. You can use a public version of the tool, which uses GPT-3.5, or you can sign up and use the enhanced GPT-4-based version.

We found Perplexity better than Bing and Chat-GPT with Internet Access in our evaluation tasks. It's as good as the model behind it (GPT-3.5 or GPT-4), but filtering references and emphasizing them does the job regarding the tool's reliability.

For mid-May 2023 the tool is still free.

Google Bard

It's a pity, but when writing this text, Google's answer for GPT-powered Bing and GPT itself is still not available in Poland, so we can't evaluate it without hacky solutions (VPN).

Using Internet access in general

If you want to use a generative AI model with Internet access, we recommend using Perplexity. However, you need to keep in mind that all those tools are based on Internet search engines which base on complex and expensive page positioning systems. Therefore, the answer "given by the AI" is, in fact, a result of marketing actions that brings some pages above others in search results. In other words, the answer may suffer from lower-quality data sources published by big players instead of better-quality ones from independent creators. Moreover, page scrapping mechanisms are not perfect yet, so you can expect a lot of errors during the usage of the tools, causing unreliable answers or no answers at all.

Offline models

If you don't trust legal assurance and you are still concerned about the privacy and security of all the tools mentioned above, so you want to be technically insured that all prompts and responses belong to you only, you can consider self-hosting a generative AI model on your hardware. We've already mentioned 4 models from OpenAI (Whisper, Point-E, Jukebox, and CLIP), Tabnine, and CodeGeeX, but there are also a few general-purpose models worth consideration. All of them are claimed to be best-in-class and similar to OpenAI's GPT, but it's not all true.

Only free commercial usage models are listed below. We’ve focused on pre-trained models, but you can train or just fine-tune them if needed. Just remember the training may be even 100 times more resource consuming than usage.

Flan-UL2 and Flan-T5-XXL

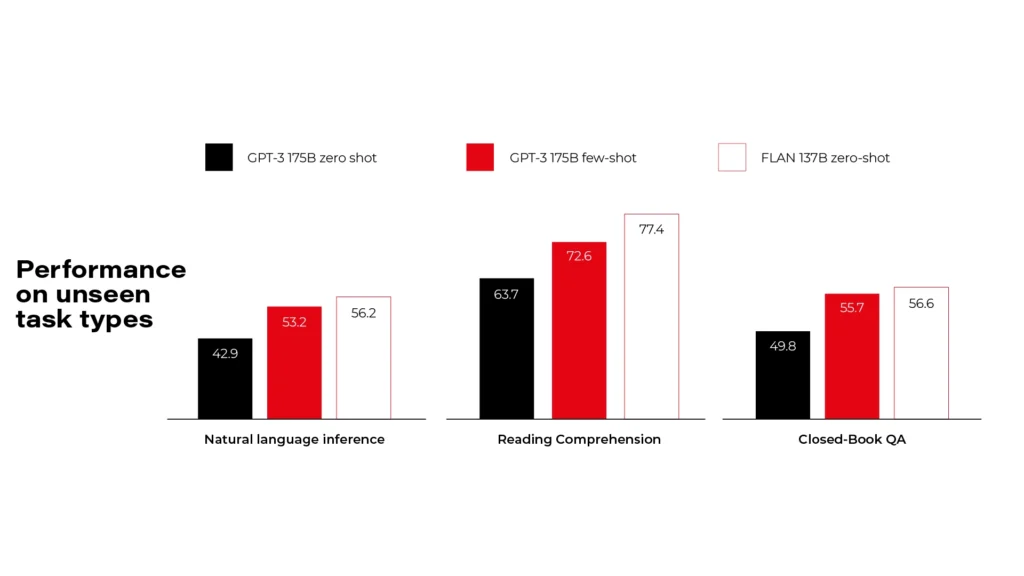

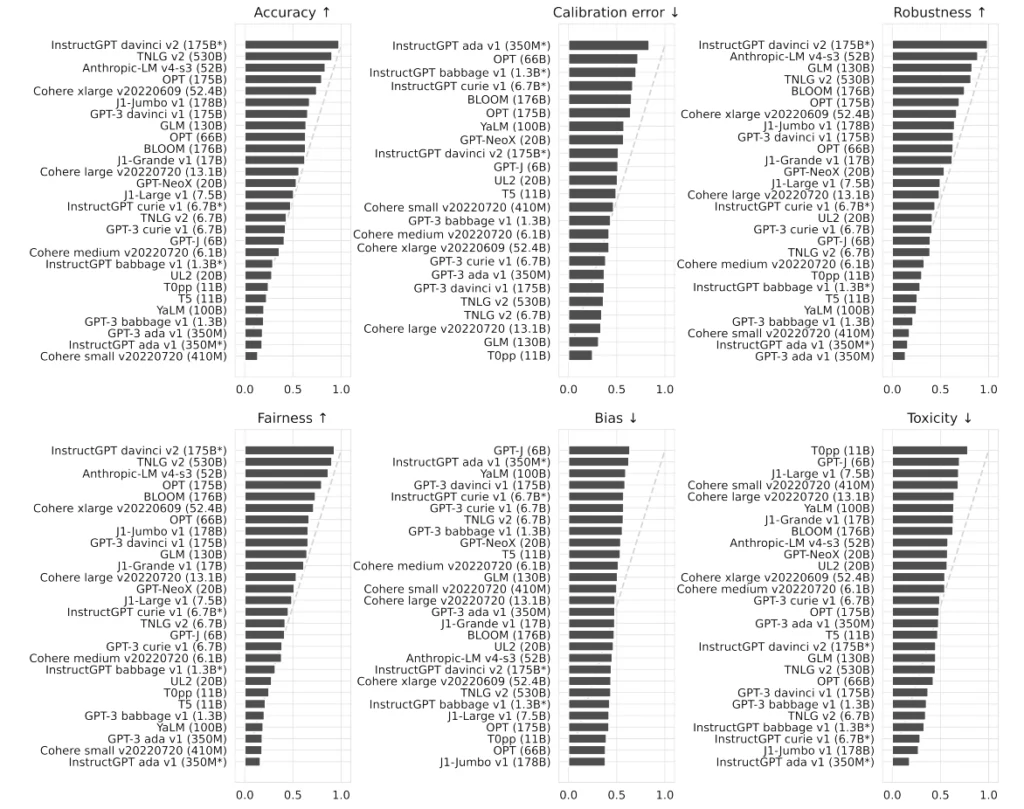

Flan models are made by Google and released under Apache 2.0 license. There are more versions available, but you need to pick a compromise between your hardware resources and the model size. Flan-UL2 and Flan-T5-XXL use 20 billion and 11 billion parameters and require 4x Nvidia T4 or 1x Nvidia A6000 accordingly. As you can see on the diagrams, it's comparable to GPT-3, so it's far behind the GPT-4 level.

BLOOM

BigScience Large Open-Science Open-Access Multilingual Language Model is a common work of over 1000 scientists. It uses 176 billion parameters and requires at least 8x Nvidia A100 cards. Even if it's much bigger than Flan, it's still comparable to OpenAI's GPT-3 in tests. Actually, it's the best model you can self-host for free that we've found so far.

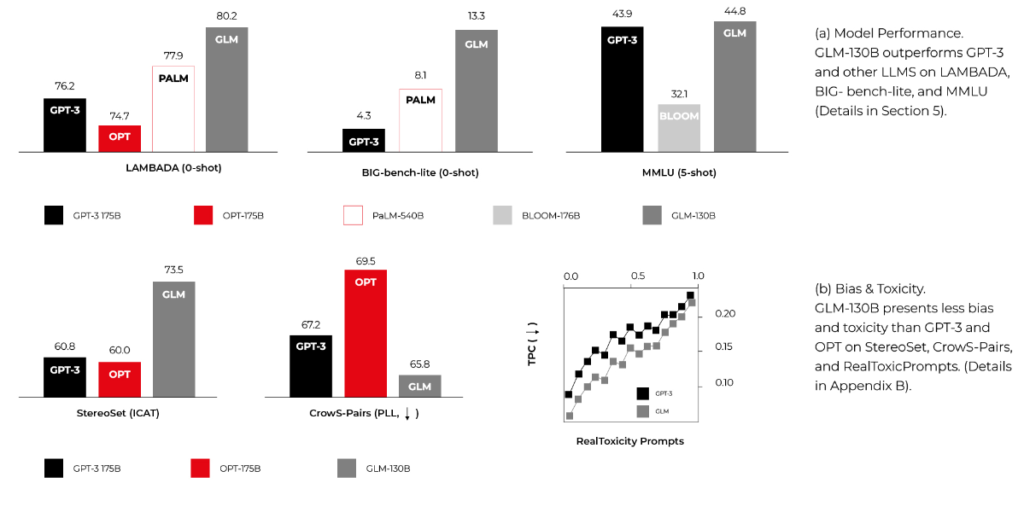

GLM-130B

General Language Model with 130 billion parameters, published by CodeGeeX authors. It requires similar computing power to BLOOM and can overperform it in some MMLU benchmarks. It's smaller and faster because it's bilingual (English and Chinese) only, but it may be enough for your use cases.

Summary

When we approached the research, we were worried about the future of developers. There are a lot of click-bite articles over the Internet showing Generative AI creating entire applications from prompts within seconds. Now we know that at least our near future is secured.

We need to remember that code is the best product specification possible, and the creation of good code is possible only with a good requirement specification. As business requirements are never as precise as they should be, replacing developers with machines is impossible. Yet.

However, some tools may be really advantageous and make our work faster. Using GitHub Copilot may increase the productivity of the main part of our job – code writing. Using Perplexity, GPT-4, or Claude may help us solve problems. There are some models and tools (for developers and general purposes) available to work with full discreteness, even technically enforced. The near future is bright – we expect GitHub Copilot X to be much better than its predecessor, we expect the general purposes language model to be more precise and helpful, including better usage of the Internet resources, and we expect more and more tools to show up in next years, making the AI race more compelling.

On the other hand, we need to remember that each helper (a human or machine one) takes some of our independence, making us dull and idle. It can change the entire human race in the foreseeable future. Besides that, the usage of Generative AI tools consumes a lot of energy by rare metal-based hardware, so it can drain our pockets now and impact our planet soon.

This article has been 100% written by humans up to this point, but you can definitely expect less of that in the future.

How to run a successful sprint review meeting

A Sprint Review is a meeting that closes and approves a Sprint in Scrum. The value of this meeting comes to inspect the increment and adapt the Product Backlog accordingly to current business conditions. This is a session where the Scrum Team and all interested stakeholders can attend to exchange information regarding progress made during the Sprint. They discuss problems and update the work plan to meet current market needs and approach a once determined product vision in a new way.

This meeting is supposed to enable feedback loop and foster collaboration, especially between the Scrum Team and all Stakeholders. A Sprint Review will be valuable for product development if there is an overall understanding of its purpose and plan. To maximize its value, it’s good to be aware of the impediments Scrum Team could meet. We would like to share our experience with a Sprint Review, the most important problems and facts discovered during numerous projects run by our team from Grape Up . As one of our company values is Drive Change, we are continuously working on enhancing our Scrum Process by investigating problems, resolving them, and implementing proper solutions to our daily work.

“Meetings – coding work balance.“

It’s a hard reality for managers leading projects. A lot of teams have a problem with too many meetings which disorganize daily work and may be an obstacle to deliver increment. Every group consists of both; people who hate meetings and prefer focusing on coding and meetings-lovers who always have thousands of questions and value teamwork over working independently. Internal team meetings or chats with stakeholders are an important part of daily work only when they bring any benefit to the team or product. Scrum prescribes four meetings during a Sprint: Sprint planning, the Daily Scrum, a Sprint Review and a Sprint Retrospective. It all makes collaboration critical to run a successful Scrum Team.

A stereotype developer who sits in his basement and doesn’t go on the daylight, who is introvert doesn’t fit this vision. Communication skills are very important for every scrum developer. Beyond technical skills and the ability to create good quality code, they need to develop their soft skills. Meetings are time-consuming and here comes a real threat that makes many people angry – after a few quarters of discussions, you may see no progress in coding. You need to confront with opinions of your colleagues and many times it’s not easy. Moreover, you need to describe to the managers what are you doing in a different language than JavaScript or Python.

For many professionals, the ideal world would be to sit comfortably in front of a screen and just write good quality code. But wait… Do people really want to do the work that no one wants? Create functionalities no one needs? Feedback exchange may not help to speed up the production process but for sure can boost the quality and usability of an application. The main idea of Scrum is to inspect and adapt continuously, and there is no way to do it without discussion and collaboration. In agile, the team’s information flow and early transformation is the main thing. It saves money and “lives”!

A Sprint Review gives a unique chance to stop for a moment and look at all the work done. It’s an important meeting that helps to keep roadmap and backlog in good health together with the market expectations. This is not only a one-side demo but a discussion of all participating guests. Each presented story should be talked through. Each attendee can ask questions, and the Product Owner or a team member should be able to answer it. It’s good to be as neutral as it’s possible neither praise nor criticize. Focus on evaluating features, not people.

Our team works for external clients from the USA, and our contact with them is constricted by time zones. A Sprint Review helps us to understand better client needs and step into their shoes. The development team can share their ideas and obstacles, and what is most important, enrich the understanding of the business value of their input. Each team member presents own part of a project, describes how it works, and asks the client for feedback. Sometimes we even share a work that's not 100% completed to be sure we are heading into the right direction to adapt on the early phase of implementation.

"Keep calm and work": No timeboxing

A Sprint Review should be open to everyone interested in joining the discussion. Main guests are the Product Owner, the Development Team, and business representation, but other stakeholders are also welcome. Is there a Scrum Master on board? For sure it should be! This may lead to a quite big meeting when many points of view occur and clash with each other. Uncontrolled discussion lasting hours is a real threat.

The Scrum Master’s role is to keep all meetings on track and allow everyone to talk. Parkinson’s Law is an old adage that says "work expands so as to fill the time available for its completion." Every Scrum Master’s golden rule of conducting meetings should be to schedule session blocks for the minimum amount of time needed. Remember that too long gathering may be a waste of time. Even if you are able to finish a meeting in 30 min, but had planned it for 1 hour, it will finally take the entire hour. Don’t forget that long and worthless meetings are boring.

An efficient Sprint Review needs to keep all guests focused and engaged. For a 1-week sprint, it should last about one hour; for a 2-week sprint, two hours. The Scrum Master’s role is to ensure theses time boxes but also to facilitate a meeting and inform when discussion leaves a trace. There should be time to present the summary of the last sprint: what was done and what wasn’t, to demonstrate progress and to answer all questions. Finally, additional time for gathering new ideas that will for sure appear. The second part of the meeting should be focused on discussing and prioritizing current backlog elements to adjust to customer and market needs. It’s a good moment for all team members to listen to the customer and get to know the management perspective and plan the next release content and date. A Sprint Review is an ideal moment for stakeholders to bring new ideas and priorities to the dev team and reorder backlog with Product Owner. During this session, all three Scrum pillars meet: transparency, inspection, and adaptation.

YOLO! : Preparation is for weak

A good plan is an attribute of a well-conducted meeting! No one likes mysterious meetings. An invitation should inform not only about time, place, and date of the meeting but also about the high-level program. Agenda is the first step of a good Sprint Review but not the only one. To be sure that Sprint Review will be satisfying for all participants, everyone needs to be well prepared. Firstly, the Product Owner should decide what work can be presented and create a clear plan of the demo and the entire meeting. The second step is to determine people responsible for each presented feature. Their job is to prepare the environment, interesting scenarios, and all needed materials. But before asking the team for it, make sure they know how to do it. Not all of the people are born presenters, but they can become one with your help.

In our team, we help each other with preparations to the Sprint Review. We work in Poland, but our customers are mostly from the USA. Not only the distance and time difference is a challenge but also language barriers. If there is a need everyone can ask for a rehearsal demo where we can present our work, discuss potential problems, and ask each other for more details. We’re constantly improving our English to make sure everything is clear to our clients. This boosts everyone’s self-confidence and final receipt. Team members know what to do, they’re ready for potential questions and focus on input from stakeholders. This is how we improve not only Sprint Reviews to be better and better but also communication skills.

"Work on your own terms": No DoD

“How ready is your work?”. Imagine a world where each developer decides when s/he finished work on her/his own terms. For one, it’s when the code is just compiled, for others it includes unit tests and it's compatible with coding standards, or even integration and usability tests. Everything works on a private branch but when it comes to integrating… boom! The team is trying to prepare a version to demonstrate but the final product crashes. The Product Owner and stakeholders are confused. A sprint without potentially releasable work is a wasted sprint. This dark scenario shouldn’t have happened to any Scrum Team.

To save time and to avoid misunderstandings, it’s good to speak the same language. All team members should be aware of the meaning of the word “done”. In our team, this keeps us on track with a transparent view of what work really can be found as delivered in a sprint. Definition of Done is like an examination of consistency for a team. Clear, generally achievable, and easy to understand steps to decide how much work is done to create a potentially releasable feature. This provides all stakeholders with clear information and allows the planning of the next business steps.

It is a guide that dictates when teams can legitimately claim that a given user’s story/task is "done" and can be moved to the next level - approval by the Product Owner and release. Basic elements of our DoD are; the code reviewed by other developers, merged to develop branch, and deployed on DEV/QA environment, and finally properly tested by QA and developers with the manual, and automation tests. The last step comes to fixing all defects, verifying if all the Acceptance Criteria are met, and reviewing by the Product Owner. Only when all DoD elements are done, we surely say that this is something we can potentially release when it will be needed.

"Curiosity killed a cat": don't report progress

Functionalities which meet requirements of Definition of Done are the first candidates to be shared on the Sprint Review. In the ideal Agile World, it’s not recommended to demonstrate not finished work. Everything we want to present should be potentially releasable… but wait! Could you agree that an ideal Agile World doesn’t exist? How many times external clients really don’t know how they want to implement something until they see the prototype.

Our company provides not only product development services but also consulting support. We don’t limit this collaboration to performing tasks, in most cases we co-create applications with our clients, advise the best solutions, and tackle their problems. Many times, there’s is no help from a Graphic Designer or a UX team. That happened to us. That’s why we have improved our Sprint Review. Since then we present not only finished work but also this in progress. Advanced features should be divided into smaller stories which can be delivered during one sprint. Final feature will be ready after a few sprints but completed parts of it should be demonstrated. It helps us discuss the vision and potential obstacles. Each meeting starts with work “done” and goes to work "in progress". What is the value? Clients trust us, believe in our ideas but at the same time still, have final word and control. Very quickly we can find out if we’re moving in the right direction or not. It’s better to hear “I imagined it in a different way, let’s change something” after one sprint than live with the falsified view of reality till the feature release.

"As you make your bed, so you must lie in it": don't inform about obstacle

Finally, even the best and ideal team can turn into the worst bunch of co-workers if they are not honest. We all know that customer satisfaction is a priority but not at all costs. It is not a shame to talk about problems the team met during the Sprint or obstacles we face with advanced features. Stakeholders need to know clearly the state of product development to confront it with the business teams involved in a project; marketing, sales or colleagues responsible for the project budget. When tasks take much more time than predicted, it’s better to show a delay in production and explain their reasons. Putting lipstick on a pig does not work. Transparency, which is so important is Scrum, allows all the people involved in the project to make good decisions for further product development. A Product Owner, as someone who defines the product vision, evaluates product progress and anticipates client needs, is obligated to look at the entire project from a general perspective, and monitor its health.

One more time, a stay-cool rule is up to date. Don’t panic and share a clear message based on facts.

We all want to implement the best practices and visions that make our life and work more productive and fruitful. Scrum helps with its values, pillars, and rules . But there is a long way from unconscious incompetence to conscious competence. It’s good to be aware of the problems we can meet and how to manage with them. “Rome wasn't built in a day”. If your team doesn’t use a Sprint Review but only a demo at the end of the sprint, just try to change it. As a team member, Scrum Master or Product Owner, observe, analyze and adapt continuously not only with your product but also with your team and processes.

Pro Tips:

- Adjust the time of a Sprint Review to accurate needs. For a 1-week Sprint, it’s one hour, for a 2-week Sprint give it two hours. It is a recommendation based on our experience.

- Have a plan. Create stable agenda and a brief summary of a Sprint and share it before meeting with everyone invited to a Sprint Review.

- Prepare yourself and a team. Coach your team members and discuss potential questions that can be asked by stakeholders.

- Facilitate meeting to keep all stakeholders interested and give everyone possibility to share feedback.

- Create a clear Definition of Done that is understandable by all team members and stakeholders.

- Be honest. Talk about problems and obstacles, show work in progress if you see there is value in it. Engage and co-create the product with your client.

- Try to be as neutral as it’s possible nor to praise or criticize. Focus on facts and substantive information.

How to Use Associative Knowledge Graphs to Build Efficient Knowledge Models

In this article, I will present how associative data structures such as ASA-Graphs, Multi-Associative Graph Data Structures, or Associative Neural Graphs can be used to build efficient knowledge models and how such models help rapidly derive insights from data.

Moving from raw data to knowledge is a difficult and essential challenge in the modern world, overwhelmed by a huge amount of information. Many approaches have been developed so far, including various machine learning techniques, but still, they do not address all the challenges. With the greater complexity of contemporary data models, a big problem of energy consumption and increasing costs has arisen. Additionally, the market expectations regarding model performance and capabilities are continuously growing, which imposes new requirements on them.

These challenges may be addressed with appropriate data structures which efficiently store data in a compressed and interconnected form. Together with dedicated algorithms i.e. associative classification, associative regression, associative clustering, patterns mining, or associative recommendations, they enable building scalable and high-performance solutions that meet the demands of the contemporary Big Data world.

The article is divided into three sections. The first section concerns knowledge in general and knowledge discovering techniques. The second section shows technical details of selected associative data structures and associative algorithms. The last section explains how associative knowledge models can be applied practically.

From data to wisdom

The human brain can process 11 million bits of information per second. But only about 40 to 50 bits of information per second reach consciousness. Let us consider the complexity of the tasks we solve every second. For example, the ability to recognize another person’s emotions in a particular context (e.g., someone’s past, weather, a relationship with the analyzed person, etc.) is admirable, to say the least. It involves several subtasks, such as facial expression recognition, voice analysis, or semantic and episodic memory association.

The overall process can be simplified into two main components: dividing the problem into simpler subtasks and reducing the amount of information using the existing knowledge. The emotional recognition mentioned earlier may be an excellent specific example of this rule. It is done by reducing a stream of millions of bits per second to a label representing someone’s emotional state. Let us assume that, at least to some extent, it is possible to reconstruct this process in a modern computer.

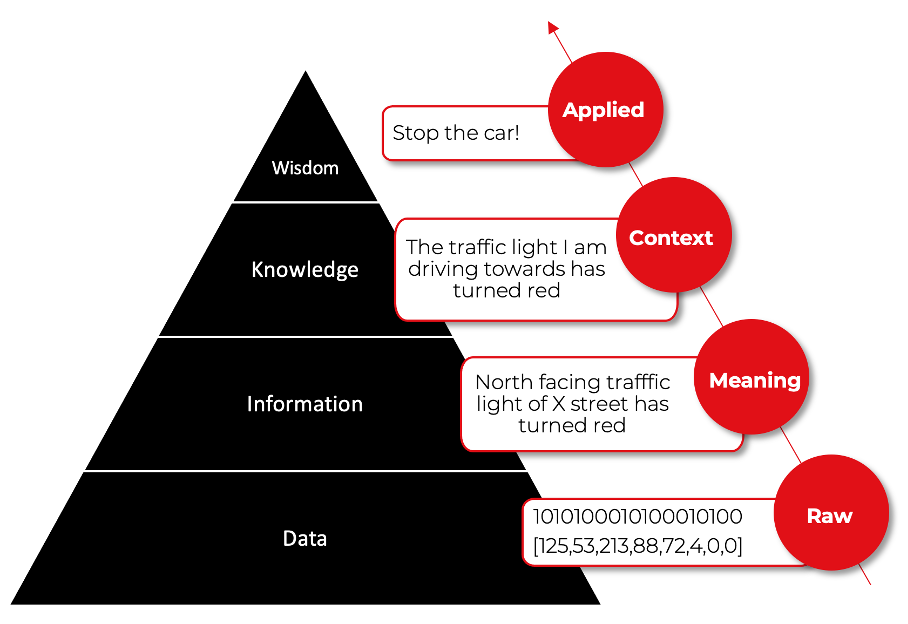

This process can be presented in the form of a pyramid. The DIKW pyramid, also known as the DIKW hierarchy, represents the relationships between data (D), information (I), knowledge (K), and wisdom (W). The picture below shows an example of a DIKW pyramid representing data flow from a perspective of a driver or autonomous car who noticed a traffic light turned to red.

In principle, the pyramid demonstrates how the understanding of the subject emerges hierarchically – each higher step is defined in terms of the lower step and adds value to the prior step. The input layer (data) handles the vast number of stimuli, and the consecutive layers are responsible for filtering, generalizing, associating, and compressing such data to develop an understanding of the problem. Consider how many of the AI (Artificial Intelligence) products you are familiar with are organized hierarchically, allowing them to develop knowledge and wisdom.

Let’s move through all the stages and explain each of them in simple words. It is worth realizing that many non-complementary definitions of data, information, knowledge, and wisdom exist. In this article, I use the definitions which are helpful from the perspective of making software that runs associative knowledge graphs, so let’s pretend for a moment that life is simpler than it is.

Data – know nothing

Many approaches try to define and explain data at the lowest level. Though it is very interesting, I won’t elaborate on that because I think one definition is enough to grasp the main idea. Imagine data as facts or observations that are unprocessed and therefore have no meaning or value because of a lack of context and interpretation. In practice, data is represented as signals or symbols produced by sensors. For a human, it can be sensory readings of light, sound, smell, taste, and touch in the form of electric stimuli in the nervous system.

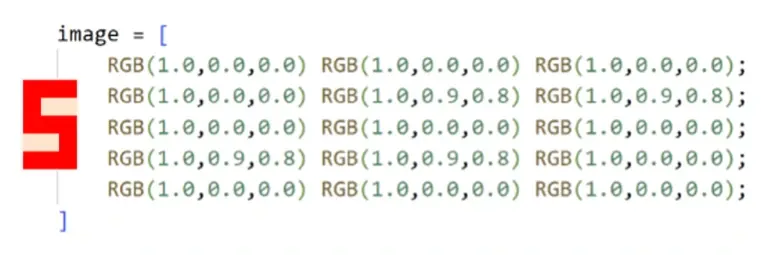

In the case of computers, data may be recorded as sequences of numbers representing measures, words, sounds, or images. Look at the example demonstrating how the red number five on an apricot background can be defined by 45 numbers i.e., a 3-dimensional array of floating-point numbers 3x5x3, where the width is 3, the height is 5, and the third dimension is for RGB color encoding.

In the case of the example from the picture, the data layer simply stores everything received by the driver or autonomous car without any reasoning about it.

Information – know what

Information is defined as data that are endowed with meaning and purpose. In other words, information is inferred from data. Data is being processed and reorganized to have relevance for a specific context – it becomes meaningful to someone or something. We need someone or something holding its own context to interpret raw data. This is the crucial part, the very first stage, where information selection and aggregation start.

How can we now know what data can be cut off, classified as noise, and filtered? It is impossible without an agent that holds an internal state, predefined or evolving. It means considering conditions such as genes, memory, or environment for humans. For software, however, we have more freedom. The context may be a rigid set of rules, for example, Kalman filter for visual data, or something really complicated and “alive” like an associative neural system.

Going back to the traffic example presented above, the information layer could be responsible for an object detection task and extracting valuable information from the driver’s perspective. The occipital cortex in the human brain or a convolutional neural network (CNN) in a driverless vehicle can deal with this. By the way, CNN architecture is inspired by the occipital cortex structure and function.

Knowledge – know who and when

The boundaries of knowledge in the DIKW hierarchy are blurred, and many definitions are imprecise, at least for me. For the purpose of the associative knowledge graph, let us assume that knowledge provides a framework for evaluating and incorporating new information by making relationships to enrich existing knowledge. To become a “knower”, an agent’s state must be able to extend in response to incoming data.

In other words, it must be able to adapt to new data because the incoming information may change the way further information would be handled. An associative system at this level must be dynamic to some extent. It does not necessarily have to change the internal rules in response to external stimuli but should be able to at least take them into account in further actions. To sum up, knowledge is a synthesis of multiple sources of information over time.

At the intersection with traffic lights, the knowledge may be manifested by an experienced driver who can recognize that the traffic light he or she is driving towards has turned red. They know that they are driving the car and that the distance to the traffic light decreases when the car speed is higher than zero. These actions and thoughts require existing relationships between various types of information. For an autonomous car, the explanation could be very similar at this level of abstraction.

Wisdom – know why

As you may expect, the meaning of wisdom is even more unclear than the meaning of knowledge in the DIKW diagram. People may intuitively feel what wisdom is, but it can be difficult to define it precisely and make it useful. I personally like the short definition stating that wisdom is an evaluated understanding.

The definition may seem to be metaphysical, but it doesn’t have to be. If we assume understanding as a solid knowledge about a given aspect of reality that comes from the past, then evaluated may mean a checked, self-improved way of doing things the best way in the future. There is no magic here; imagine a software system that measures the outcome of its predictions or actions and imposes on itself some algorithms that mutate its internal state to improve that measure.

Going back to our example, the wisdom level may be manifested by the ability of a driver or an autonomous car to travel from point A to point B safely. This couldn’t be done without a sufficient level of self-awareness.

Associative knowledge graphs

Omnis ars nature imitatio est . Many excellent biologically inspired algorithms and data structures have been developed in computer science. Associative Graph Data Structures and Associative Algorithms are also the fruits of this fascinating and still surprising approach. This is because the human brain can be decently modeled using graphs.

Graphs are an especially important concept in machine learning. A feed-forward neural network is usually a directed acyclic graph (DAG). A recurrent neural network (RNN) is a cyclic graph. A decision tree is a DAG. K-nearest neighbor classifier or k-means clustering algorithm can be very effectively implemented using graphs. Graph neural network was in the top 4 machine learning-related keywords 2022 in submitted research papers at ICLR 2022 ( source ).

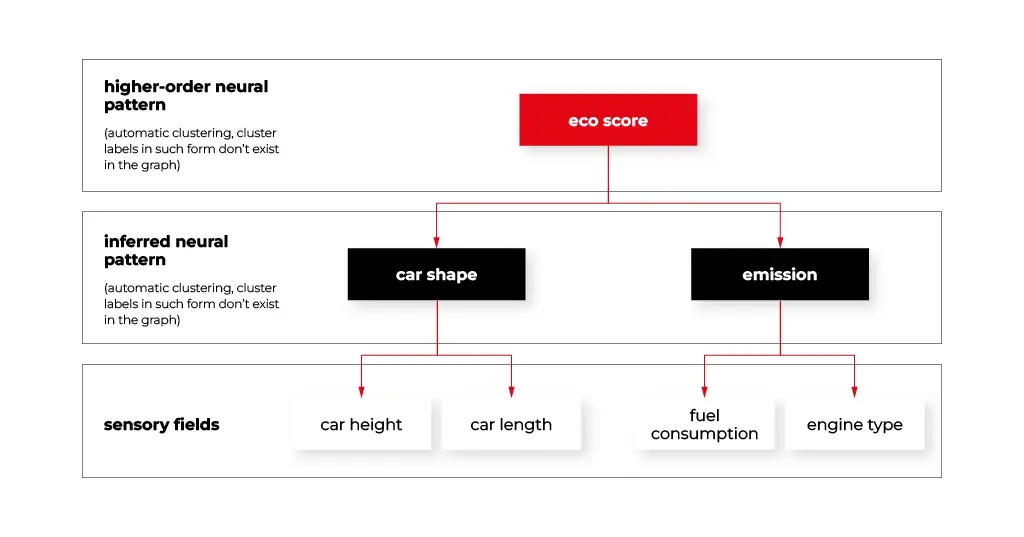

For each level of the DIKW pyramid, the associative approach offers appropriate associative data structures and related algorithms.

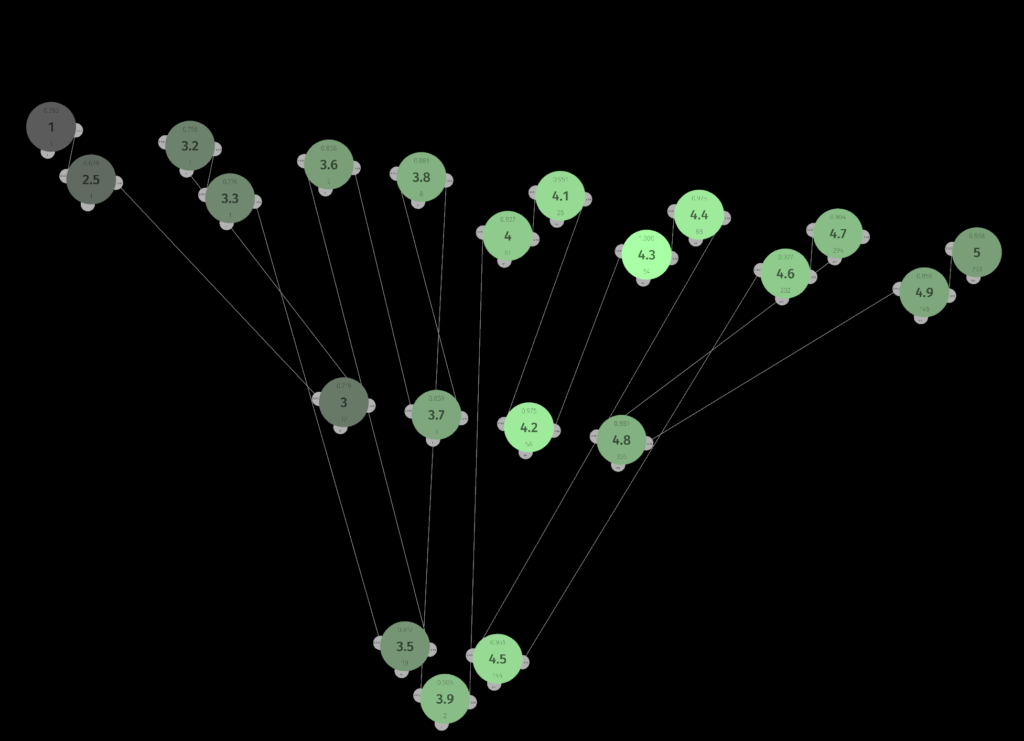

At the data level, specific graphs called sensory fields were developed. They fetch raw signals from the environment and store them in the appropriate form of sensory neurons. The sensory neurons connect to the other neurons representing frequent patterns that form more and more abstract layers of the graph that will be discussed later in this article. The figure below demonstrates how the sensory fields may connect with the other graph structures.

The information level can be managed by static (it does not change its internal structure) or dynamic (it may change its internal structure) associative graph data structures. A hybrid approach is also very useful here. For instance, CNN may be used as a feature extractor combined with associative graphs, as it happens in the human brain (assuming that CNN reflects the parietal cortex).

The knowledge level may be represented by a set of dynamic or static graphs from the previous paragraph connected to each other with many various relationships creating an associative knowledge graph.

The wisdom level is the most exotic. In the case of the associative approach, it may be represented by an associative system with various associative neural networks cooperating with other structures and algorithms to solve complex problems.

Having that short introduction let’s dive deeper into the technical details of associative graphical approach elements.

Sensory field

Many graph data structures can act as a sensory field. But we will focus on a special structure designed for that purpose.

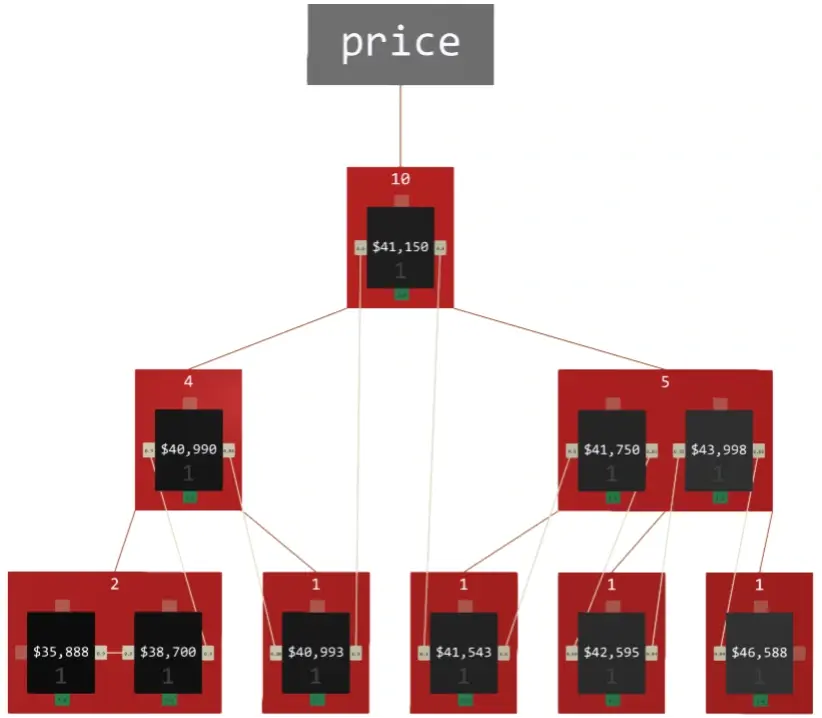

ASA-graph is a dedicated data structure for handling numbers and their derivatives associatively. Although it acts like a sensory field, it can replace conventional data structures like B-tree, RB-tree, AVL-tree, and WAVL-tree in practical applications such as database indexing since it is fast and memory-efficient.

ASA-graphs are complex structures, especially in terms of algorithms. You can find a detailed explanation in this paper . From the associative perspective, the structure has several features which make it perfect for the following applications:

- elements aggregation – keeps the graph small and devoted only to representing valuable relationships between data,

- elements counting – is useful for calculating connection weights for some associative algorithms e.g., frequent patterns mining,

- access to adjacent elements – the presence of dedicated, weighted connections to adjacent elements in the sensory field, which represents vertical relationship within the sensor, enables fuzzy search and fuzzy activation,

- the search tree is constructed in an analogous way to DAG like B-tree, allowing fast data lookup. Its elements act like neurons (in biology, a sensory cell is often the outermost part of the neural system) independent from the search tree and become a part of the associative knowledge graph.

Efficient raw data representation in the associative knowledge graph is one of the most important requirements. Once data is loaded into sensory fields, no further data processing steps are needed. Moreover, ASA-graph automatically handles missing or unnormalized (e.g., a vector in a single cell) data. Symbolic or categorical data types like strings are equally possible as any numerical format. It suggests that one-hot encoding or other comparable techniques are not needed at all. And since we can manipulate symbolic data, associative patterns mining can be performed without any pre-processing.

It may significantly reduce the effort required to adjust a dataset to a model, as is the case with many modern approaches. And all the algorithms may run in place without any additional effort. I will demonstrate associative algorithms in detail later in the series. For now, I can say that nearly every typical machine learning task, like classification, regression, pattern mining, sequence analysis, or clustering, is feasible.

Associative knowledge graph

In general, a knowledge graph is a type of database that stores the relationships between entities in a graph. The graph comprises nodes, which may represent entities, objects, traits, or patterns, and edges modeling the relationships between those nodes.

There are many implementations of knowledge graphs available out there. In this article, I would like to bring your attention to the particular associative type inspired by excellent scientific papers which are under active development in our R&D department. This self-sufficient associative graph data structure connects various sensory fields with nodes representing the entities available in data.

Associative knowledge graphs are capable of representing complex, multi-relational data thanks to several types of relationships that may exist between the nodes. For example, an associative knowledge graph can represent the fact that two people live together, are in love, and have a joint loan, but only one person repays it.

It is easy to introduce uncertainty and ambiguity to an associative knowledge graph. Every edge is weighted, and many kinds of connections help to reflect complex types of relations between entities. This feature is vital for the flexible representation of knowledge and allows the modeling of environments that are not well-defined or may be subject to change.

If there weren't specific types of relations and associative algorithms devoted to these structures, there wouldn't be anything particularly fascinating about it.

The following types of associations (connections) make this structure very versatile and smart, to some extent:

- defining,

- explanatory

- sequential,

- inhibitory,

- similarity.

The detailed explanation of these relationships is out of the scope of this article. However, I would like to give you one example of flexibility provided to the graph thanks to them. Imagine that some sensors are activated by data representing two electric cars. They have similar make, weight, and shape. Thus, the associative algorithm creates a new similarity connection between them with a weight computed from sensory field properties. Then, a piece of extra information arrives to the system that these two cars are owned by the same person.

So, the framework may decide to establish appropriate defining and explanatory connections between them. Soon it turns out that only one EV charger is available. By using dedicated associative algorithms, the graph may create special nodes representing the probability of being fully charged for each car depending on the time of day. The graph establishes inhibitory connections between the cars automatically to represent their competitive relationship.

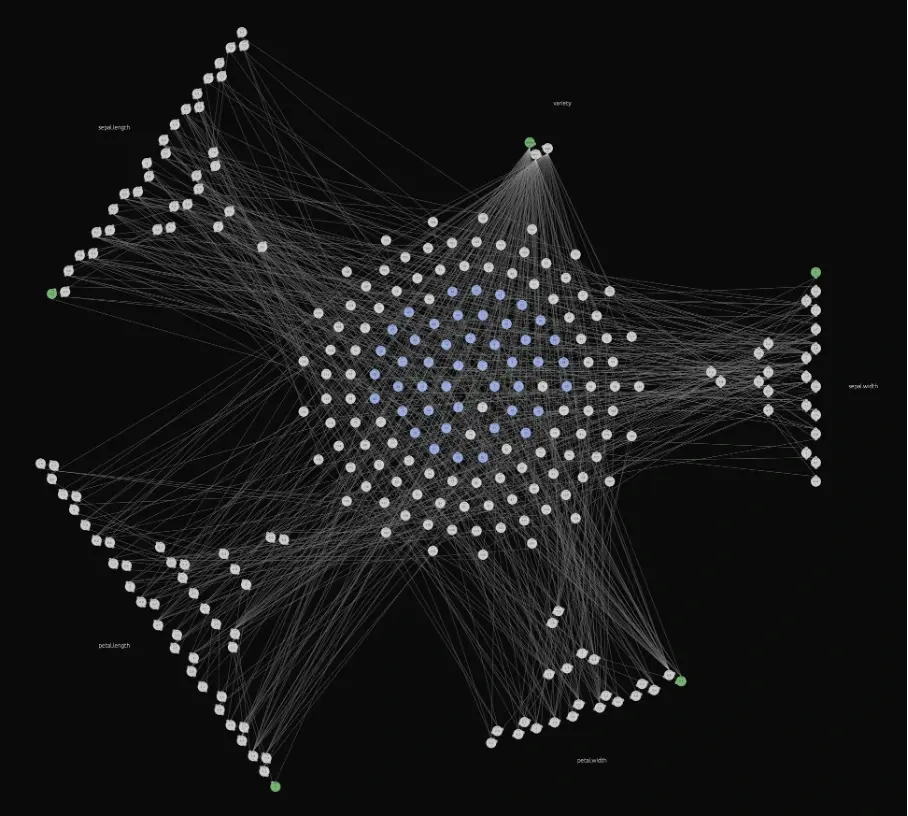

The image below visually represents the associative knowledge graph explained above, with the famous iris dataset loaded. Identifying the sensory fields and neurons should not be too difficult. Even such a simple dataset demonstrates that relationships may seem complex when visualized. The greatest strength of the associative approach is that relationships do not have to be computed – they are an integral part of the graph structure, ready to use at any time. The algorithm as a structure approach in action.

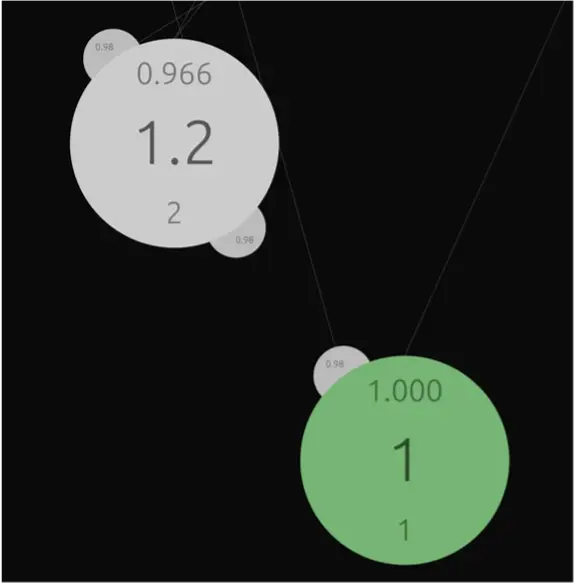

A closer look at the sensor structure demonstrates the neural nature of raw data representation in the graph. Values are aggregated, sorted, counted, and connections between neighbors are weighted. Every sensor can be activated and propagate its signal to its neighbors or neurons. The final effect of such activation depends on the type of connection between them.

What is important, associative knowledge graphs act as an efficient database engine. We conducted several experiments proving that for queries that contain complex join operations or such that heavily rely on indexes, the performance of the graph can be orders of magnitude faster than traditional RDBMS like PostgreSQL or MariaDB. This is not surprising because every sensor is a tree-like structure.

So, data lookup operations are as fast as for indexed columns in RDBMS. The impressive acceleration of various join operations can be explained very easily – we do not have to compute the relationships; we simply store them in the graph’s structure. Again, that is the power of the algorithm as a structure approach.

Associative neural networks

Complex problems usually require complex solutions. The biological neuron is way more complicated than a typical neuron model used in modern deep learning. A nerve cell is a physical object which acts in time and space. In most cases, a computer model of neurons is in the form of an n-dimensional array that occupies the smallest possible space to be computed using streaming processors of modern GPGPU (general-purpose computing on graphics processing).

Space and time context is usually just ignored. In some cases, e.g., recurrent neural networks, time may be modeled as a discrete stage representing sequences. However, this does not reflect the continuous (or not, but that is another story) nature of the time in which nerve cells operate and how they work.