Thinking out loud

Where we share the insights, questions, and observations that shape our approach.

Building intelligent document processing systems – entity finders

Our journey towards building Intelligent Document Processing systems will be completed with entity finders, components responsible for extracting key information.

This is the third part of the series about Intelligent Document Processing (IDP). The series consists of 3 parts:

- Problem definition and data

- Classification and validation

- Entity finders

Entity finders

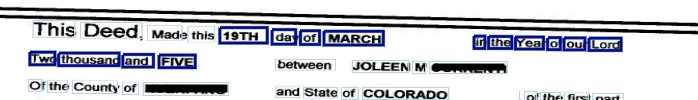

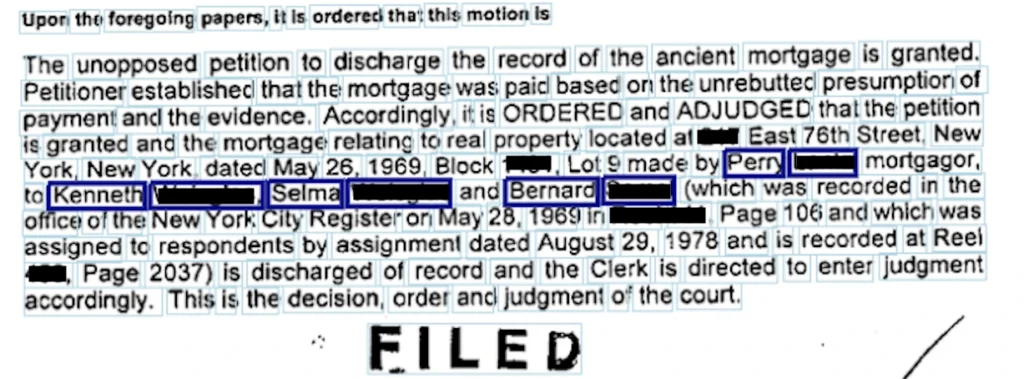

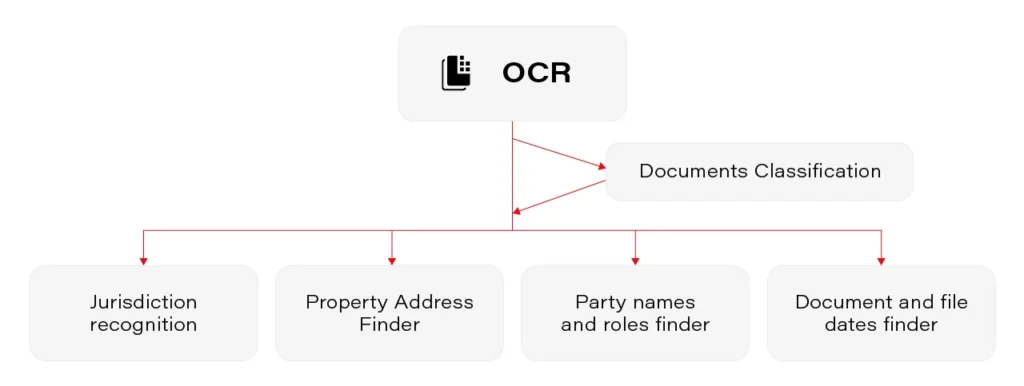

After classifying the documents, we focus on extracting some class-specific information. We pose the main interests in the jurisdiction, property address, and party names. We called the components responsible for their extraction simply “finders”.

Jurisdictions showed they could be identified based on dictionaries and simple rules. The same applies to file dates.

Context finders

The next 3 entities – addresses, parties, and document dates, provide us with a challenge.

Let us note the fact that:

- Considering addresses. There may be as many as 6 addresses on a first page on its own. Some belong to document parties, some to the law office, others to other entities engaged in a given process. Somewhere in this maze of addresses, there is this one that we are interested in – property address. Or there isn’t - not every document has to have the address at all. Some have, often, only the pointers to the page or another document (which we need to extract as well).

- The case with document dates is a little bit simpler. Obviously, there are often a few dates in the document not mentioning any numbers, dates are in every format possible, but generally, the document date occurs and is possible to distinguish.

- Party names – arguably the hardest entities to find. Depending on the document, there may be one or more parties engaged or none. The difficulty is that virtually any name that represents a person, company, or institution in the document is a potential candidate for the party. The variability of contexts indicating that a given name represents a party is huge, including layout and textual contexts.

Generally, our solutions are based on three mechanisms.

- Context finders: We search for the contexts in which the searched entities may occur.

- Entity finders: We are estimating the probability that a given string is the search value.

- Managers: we merge the information about the context with the information About the values and decide whether the value is accepted

Address finder

Addresses are sometimes multi-line objects such as:

“LOT 123 OF THIS AND THIS ESTATES, A SUBDIVISION OF PART OF THE SOUTH HALF OF THE NORTHEAST QUARTER AND THE NORTH HALF OF THE SOUTHEAST QUARTER OF SECTION 123 (...)”.

It is possible that the address is written over more than one or a few lines. When such expression occurs, we are looking for something simpler like :

“The Institution, P.O. Box 123 Cheyenne, CO 123123”

But we are prepared for each type of address.

In the case of addresses, our system is classifying every line in a document as a possible address line. The classification is based on n-grams and other features such as the number of capital letters, the proportion of digits, proportion of special signs in a line. We estimate the probability of the address occurring in the line. Then we merge lines into possible address blocks.

The resulting blocks may be found in many places. Some blocks are continuous, but some pose gaps when a single line in the address is not regarded as probable enough. Similarly, there may occur a single outlier line. That’s why we smooth the probabilities with rules.

After we construct possible address blocks, we filter them with contexts.

We manually collected contexts in which addresses may occur. We can find them in the text later in a dictionary-like manner. Because contexts may be very similar but not identical, we can use Dynamic Time Warping.

An example of similar but not identical context may be:

“real property described as follows:”

“real property described as follow:”

Document date finder

Document dates are the easiest entities to find thanks to a limited number of well-defined contexts, such as “dated this” or “this document is made on”. We used frequent pattern mining algorithms to reveal the most frequent document date context patterns among training documents. After that, we marked every date occurrence in a given document using a modified open-source library from the python ecosystem. Then we applied context-based rules for each of them to select the most likely date as document date. This solution has an accuracy of 82-98% depending on the test set and labels quality.

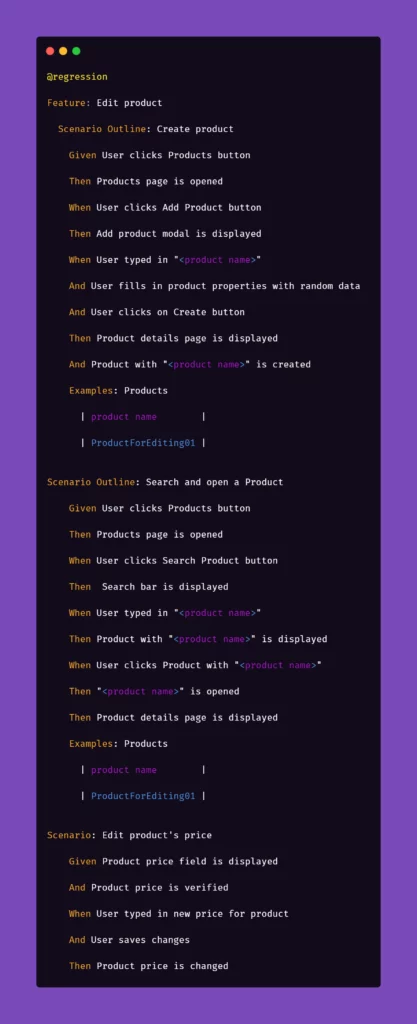

Parties finder

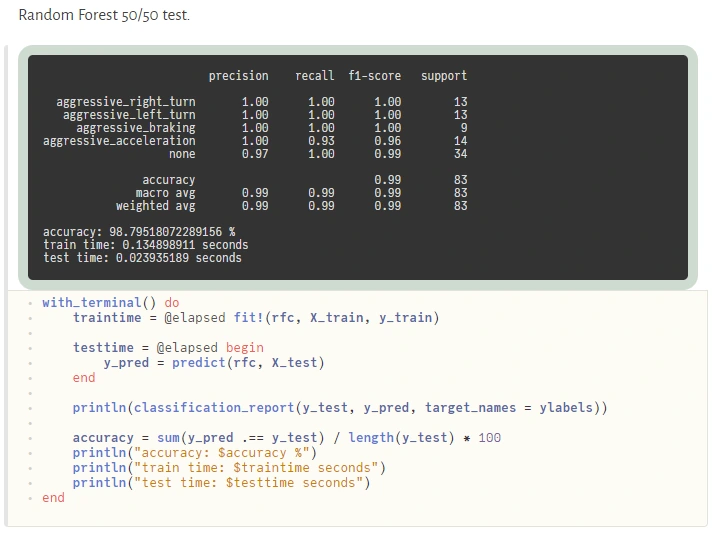

It’s worth mentioning that this part of our solution together with the document dates finder is implemented and developed in the Julia language . Julia is a great tool for development on the edge of science and you can read about views on it in another blog post here.

The solution on its own is somehow similar to the previously described, especially to the document date finder. We omit the line classifier and emphasize the impact of the context. Here we used a very generic name finder based on regular expression and many groups of hierarchical contexts to mark potential parties and pick the most promising one.

Summary

This part concludes our project focused on delivering an Intelligent Document Processing system. As we also, AI enables us to automate and improve operations in various areas.

The processes in banks are often labor bound, meaning they can only take on as much work as the labor force can handle as most processes are manual and labor-intensive. Using ML to identify, classify, sort, file, and distribute documents would be huge cost savings and add scalability to lucrative value streams where none exists today.

Train your computer with the Julia programming language – Machine Learning in Julia

Once we know the basics of Julia , we focus on its utilization in building machine learning software. We go through the most helpful tools and moving from prototyping to production.

How to do Machine Learning in Julia

Machine Learning tools dedicated to Julia have evolved very fast in the last few years. In fact, quite recently, we can say that Julia is production-ready! - as it was announced on JuliaCon2020.

Now, let's talk about the native tools available in Julia's ecosystem for Data Scientists. Many libraries and frameworks that serve machine learning models are available in Julia. In this article, we focus on a few the most promising libraries.

Da t aFrames.jl is a response to the great popularity of pandas – a library for data analysis and manipulation, especially useful for tabular data. DataFrames module plays a central role in the Julia data ecosystem and has tight integrations with a range of different libraries. DataFrames are essentially collections of aligned Julia vectors so they can be easily converted to other types of data like Matrix. Pand as.jl package provides binding to the pandas' library if someone can’t live without it, but we recommend using a native DataFrames library for tabular data manipulation and visualization.

In Julia, usually, we don’t need to use external libraries as we do with numpy in Python to achieve a satisfying performance of linear algebra operations. Native Arrays and Matrices may perform satisfactorily in many cases. Still, if someone needs more power here there is a great library StaticArrays.jl implementing statically sized arrays in Julia. Potential speedup falls in a range from 1.8x to 112.9x if the array isn’t big (based on tests provided by the authors of the library).

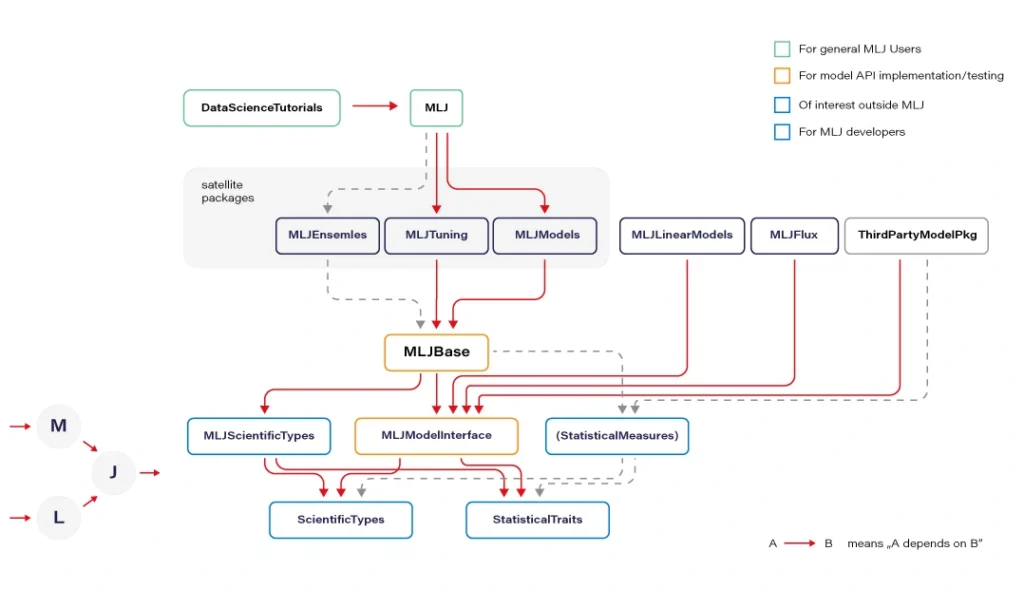

MLJ.jl created by Alan Turing Institute provides a common interface and meta-algorithms for selecting, tuning, evaluating, composing, and comparing over 150 machine learning models written in Julia and other languages. The library offers an API that lets you manage ML workflows in many aspects. Some parts of the API syntax may seem unfamiliar to the audience but remains clear and easy to use.

Flux.jl defines models just like mathematical notation. Provides lightweight abstractions on top of Julia's native GPU and TPU - GPU kernels can be written directly in Julia via CU DA.jl. Flux has its own Model Zoo and great integration with Julia’s ecosystem.

MXNet.jl is a part of a big Apache MXNet project. MXNet brings flexible and efficient GPU computing and state-of-art deep learning to Julia. The library offers very efficient tensor and matrix computation across multiple CPUs, GPUs, and disturbed server nodes.

Knet.jl (pronounced "kay-net") is the Koç University deep learning framework. The library supports GPU operations and automates differentiation using dynamic computational graphs for models defined in plain Julia.

AutoMLPipeline is a package that makes it trivial to create complex ML pipeline structures using simple expressions. AMLP leverages on the built-in macro programming features of Julia to symbolically process, manipulate pipeline expressions, and makes it easy to discover optimal structures for machine learning prediction and classification.

There are many more specific libraries like DecisionTree.jl , Transformers.jl, or YOLO.jl which are often immature but still can be utilized. Obviously, bindings to other popular ML frameworks exists, where many people may find TensorFlow.jl , Torch.jl, or ScikitLearn.jl as useful. We recommend using Flux or MLJ as the default choice for a new ML project.

Now let’s discuss the situation when Julia is not ready. And here, PyCall.jl comes to the rescue. The Python ecosystem is far greater than Julia’s. Someone could argue here that using such a connector loses all of the speed gained from using Julia and can even be slower than using Python standalone. Well, that’s true. But it’s worth to realize that we ask PyCall for help not so often because the number of native Julia ML libraries is quite good and still growing. And even if we ask, the scope is usually limited to narrow parts of our algorithms.

Sometimes sacrificing a part of application performance can be a better choice than sacrificing too much of our time, especially during prototyping. In a production environment, the better idea may be (but it's not a rule) to call to a C or C++ API of some of the mature ML frameworks (there are many of them) if a Julia equivalent is not available. Here is an example of how easily one can use the famous python scikit-learn library during prototyping:

@sk_import ensemble: RandomForestClassifier; fit!(RandomForestClassifier(), X, y)

The powerful metaprogramming features ( @sk_import macro via PyCall) take care of everything, exposing clean and functional style API of the selected package. On the other hand, because the Python ecosystem is very easily accessible from Julia (thanks to PyCall), many packages depend on it, and in turn, depend on Python, but that’s another story.

From prototype to Pproduction

In this section, we present a set of basic tools used in a typical ML workflow, such as writing a notebook, drawing a plot, deploying an ML model to a webserver or more sophisticated computing environments. I want to emphasize that we can use the same language and the same basic toolset for every stage of the machine learning software development process: from prototyping to production at full speed.

For writing notebooks, there are two main libraries available. IJulia.jl is a Jupyter language kernel and works with a variety of notebook user interfaces. In addition to the classic Jupyter Notebook, IJulia also works with JupyterLab, a Jupyter-based integrated development environment for notebooks and code. This option is more conservative.

For anyone who’s looking for something fresh and better, there is a great project called Pluto.jl - a reactive, lightweight and simple notebook with beautiful UI. Unlike Jupyter or Matlab, there is no mutable workspace, but an important guarantee: At any instant, the program state is completely described by the code you see. No hidden state, no hidden bugs. Changing one cell instantly shows effects on all other cells thanks to the reactive technologies used. And the most important feature: your notebooks are saved as pure Julia files! You can also export your notebook as HTML and PDF documents.

Visualization and plotting are essential parts of a typical machine learning workflow. We have several options here. For tabular data visualization there we can just simply use the DataFrame variable in a printable context. In Pluto, it looks really nice (and is interactive):

The primary option for plotting is Plots.jl , a plotting meta package that brings many different plotting packages under a single API, making it easy to swap between plotting "backends". This is a mature package with a large number of features (including 3D plots). The downside is that it uses Python behind the scenes (but that’s not a severe issue here) and can cause problems with configuration.

Gadfly.jl is based largely on ggplot2 for R and renders high-quality graphics to SVG, PNG, Postscript, and PDF. The interface is simple and cooperates well with DataFrames.

There is an interesting package called StatsPlots.jl which is a replacement for Plots.jl that contains many statistical recipes for concepts and types introduced in the JuliaStats organization, including correlation plot, Andrew's plot, MDS plot, and many more.

To expose the ML model as a service, we can establish a custom model server. To do so, we can use Genie.jl - a full-stack MVC web framework that provides a streamlined and efficient workflow for developing modern web applications and much more. Genie manages all of the virtual environments, database connectivity, or automatic deployment into docker containers (you just run one function, and everything works). It’s pure Julia and that’s important here because this framework manages the entire project for you. And it’s really very easy to use.

Apache Spark is a distributed data and computation engine that becomes more and more popular, especially among large companies and corporations. Hosted Spark instances offered by cloud service providers make it easy to get started and to run large, on-demand clusters for dynamic workloads.

While Scala, as the primary language of Spark, is not the best choice for some numerical computing tasks, being built for numerical computing, Julia is however perfectly suited to create fast and accurate numerical applications. Spark.jl is a library for that purpose. It allows you to connect to a Spark cluster from the Julia REPL and load data and submit jobs. It uses JavaCall.jl behind the scenes. This package is still in the initial development phase. Someone said that Julia is a bridge between Python and Spark - being simple like Python but having the big-data manipulation capabilities of Spark.

In Julia, we can do distributed computing effortlessly. We can do it with a useful JuliaDB.jl package, but straight Julia with distributed processes work well. We use it in production, distributed across multiple servers at scale. Implementation of distributed parallel computing is provided by module Distributed as part of the standard library shipped with Julia.

Machine Learning in Julia - conclusions

We covered a lot of topics, but in fact, we only scratched the surface. Presented examples show that, under certain conditions, Julia can be considered as a serious option for your next machine learning project in an enterprise environment or scientific work. Some Rustaceans (Rust language users call themselves that) ask themselves in terms of machine learning capabilities in their loved language: Are we learning yet? Julia's users can certainly answer yes! Are we production ready? Yes, but it doesn't mean Julia is the best option for your machine learning projects. More often, the mature Python ecosystem will be the better choice. Is Julia the future of machine learning? We believe so, and we’re looking forward to see some interesting apps written with Julia.

Building intelligent document processing systems - classification and validation

We continue our journey towards building Intelligent Document Processing Systems. In this article, we focus on document classification and validation.

This is the second part of the series about Intelligent Document Processing ( IDP ). The series consists of 3 parts:

- Problem definition and data

- Classification and validation

- Entities finders

If you are interested in data preparation, read the previous article. We describe there what we have done to get the data transformed into the form.

Classes

The detailed classification of document types shows that documents fall into around 80 types. Not every type is well-represented, and some of them have a minor impact or neglectable specifics that would force us to treat them as a distinct class.

After understanding the specifics, we ended up with 20 classes of documents. Some classes are more general, such as Assignment, some are as specific as Bankruptcy. The types we classify are: Assignment, Bill, Deed, Deed Of Separation, Deed Of Subordination, Deed Of Trust, Foreclosure, Deed In Lieu Foreclosure, Lien, Mortgage, Trustees Deed, Bankruptcy, Correction Deed, Lease, Modification, Quit Claim Deed, Release, Renunciation, Termination.

We chose these document types after summarizing the information present in each type. When the following services and routing are the same for similar documents, we do not distinguish them in target classes. We abandoned a few other types that do not occur in the real world often.

Classification

Our objective was to classify them for the correct next routing and for the application of the consecutive services. For example, when we are looking for party names, dealing with the Bankruptcy type of document, we are not looking for more than one legal entity.

The documents are long and various. We can now start to think about the mathematical representation of them. Neural networks can be viewed as a complex encoders with classifier on top. These encoders are usually, in fact, powerful systems that can comprehend a lot of content and dependencies in text. However, the longer the text, the harder for a network to focus on a single word or single paragraph. There was a lot of research that confirms our intuition, which shows that the responsibility of classification of long documents on huge encoders is on the final layer and embeddings could be random to give similar results.

Recent GPT-3 (2020) is obviously magnificent, and who knows, maybe such encoders have the future for long texts. Even if it comes with a huge cost – computational power, processing time. Because we do not have a good opinion on representing long paragraphs of text in a low dimensional embedding made up by a neural network, we made ourselves a favor leaning towards simpler methods.

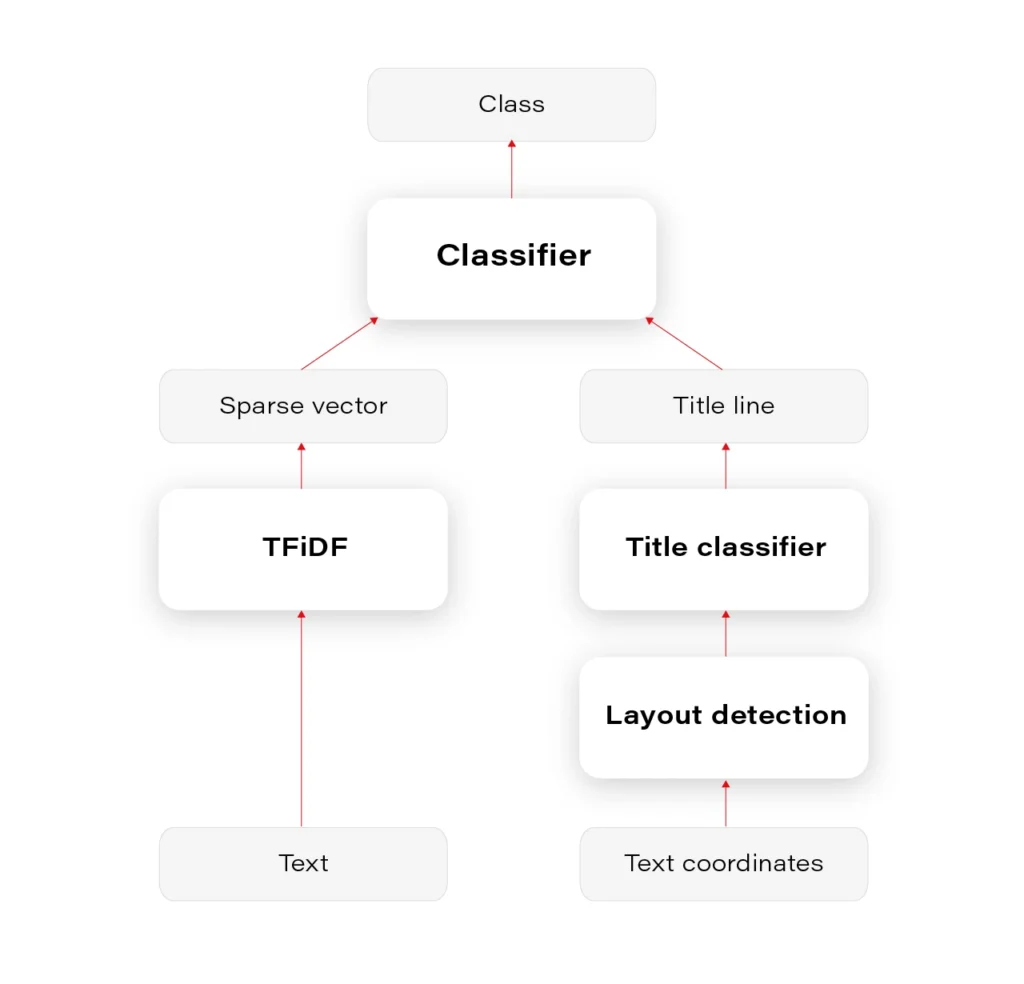

We had to prepare a multiclass-multilabel classifier that doesn’t smooth the probability distribution in any way on the layer of output classes, to be able to interpret and tune classes' thresholds correctly. This is often a necessary operation to unsmooth the output probability distribution. Our main classifier was Logistic Regression on TFiDF (Term Frequency - Inverse Document Frequency). We tuned mainly TFiDF but spent some time on documents themselves – number of pages, stopwords, etc.

Our results were satisfying. In our experiments, we are above 95% accuracy, which we find quite good, considering ambiguity in the documents and some label noise.

It is, however, natural to estimate whether it wouldn’t be enough to classify the documents based on the heading – document title, the first paragraph, or something like this. Whether it’s useful for a classifier to emphasize the title phrase or it’s enough to classify only based on titles – it can be settled after the title detection.

Layout detection

Document Layout Analysis is the next topic we decided to apply in our solution.

First of all, again, the variety of layouts in our documents is tremendous. The available models are not useful for our tasks.

The simple yet effective method we developed is based on the DBSCAN algorithm. We derived a specialized custom distance function to calculate the distances between words and lines in a way that blocks in the layout are usefully separated. The custom distance function is based on Euclidean distance but smartly uses the fact that text is recognized by OCR in lines. The function is dynamic in terms of proportion between the width and height of a line.

You can see the results in Figure 1. We can later use this layout information for many purposes.

Based on the content, we can decide whether any block in a given layout contains the title. For document classification based on title, it seems that predicting document class based only on the detected title would be as good as based on the document content. The only problem occurs when there are no document titles, which unfortunately happens often.

Overall, mixing layout information with the text content is definitely a way to go, because layout seems to be an integral part of a document, fulfilling not only the cosmetic needs but also storing substantive information. Imagine you are reading these documents as plain text in notepad - some signs, dates, addresses, are impossible to distinguish without localizations and correctly interpreted order of text lines.

The entire pipeline of classification is visualized in Figure 2.

Validation

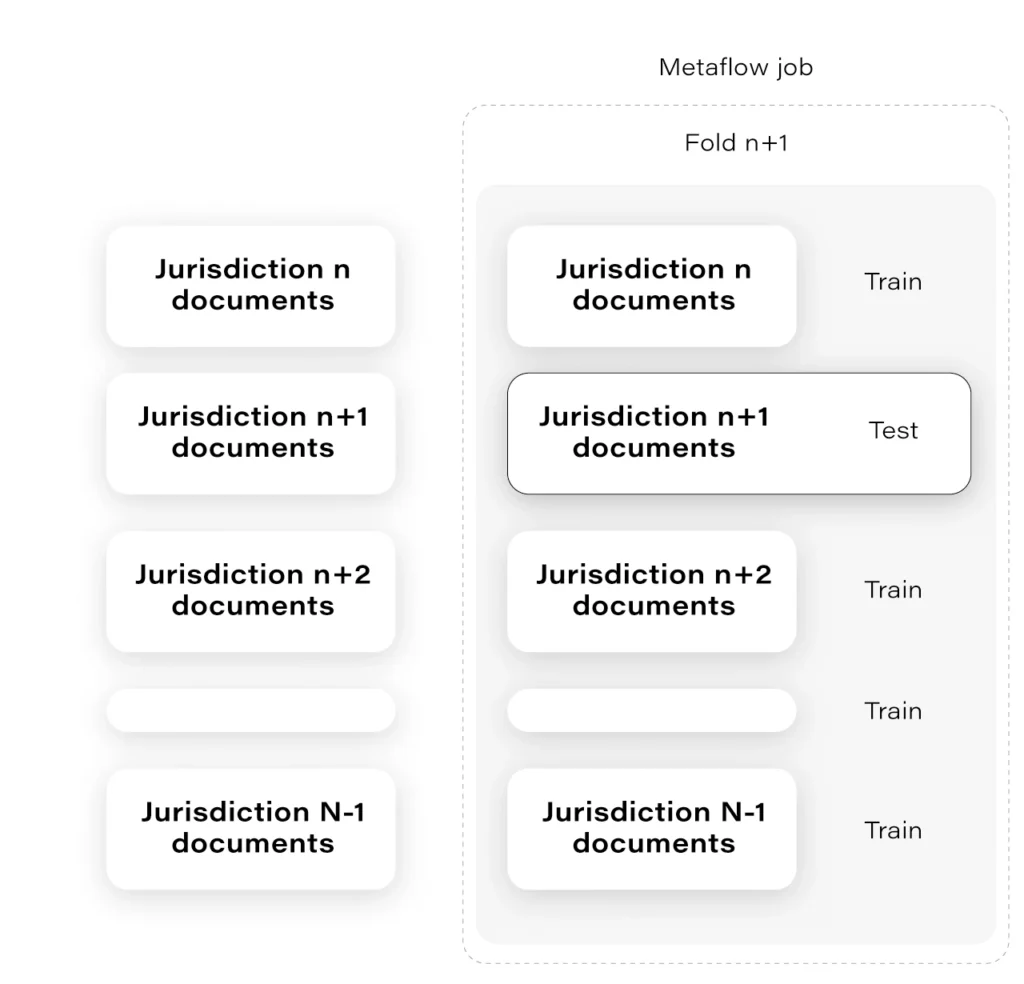

We incorporated the Metaflow python package for this project. It is a complicated technology that does not always work fluently but overall we think it gave us useful horizontal scalability (some time-consuming processes) and facilitated the cooperation between team members.

The interesting example of Metaflow usage is as follows: at some time, we had to assure that the number of jurisdictions that we had in our trainset is enough for the model to generalize over all jurisdictions.

Are we sure the mortgage from some small jurisdiction in Alaska will work even though most of our documents come from, let’s say, West Side?

The solution to that was to prepare the “leave-one-out" cross-validation in a way that we hold documents from one jurisdiction as a validation set. Having a lot of jurisdictions, we had to choose N of them. Each fold was tested on a remote machine independently and in parallel, which was largely facilitated thanks to Metaflow. Check the Figure 3.

Next

Classification is a crucial component of our system and allows us to take further steps. Having solid fundamentals, after the classifier routing, we can run the next services – the finders .

Key insights from Insurance Innovators USA 2021

Insurance Innovators USA 2021, organized by Market Force Live, provided us with engaging sessions and insights into trends shaping the industry. We sum up key points and share our thoughts regarding commonly discussed predictions.

External sources of innovation

In a traditional approach, innovation was supposed to be driven by the internal capacity of an organization. So, achieved goals in innovation and R&D used to be an internal cost/benefit relation. That paradigm has started to shift in recent years and was clearly accelerated by the unexpected pressure from COVID pandemic.

Insurance companies understand the importance of collaborating with external experts to drive innovation. Both InsurTechs and technology vendors they choose to collaborate with, provide them with a fresh take on your organization and processes, and that leads to unlocking innovation. Staying open-minded, monitoring what the market has to offer, and evaluating new products and services enable organizations to be agile and competitive.

Amazon is the new customer experience baseline

The insurance industry drags behind many other industry sectors in the digital transformation. But the rise of customer-centric technology providers encourages the industry to reconsider its stance. Until recently, customers tended to remain with their providers for long periods because it was easier to do a renewal than to shop for a policy and face all the hassle.

Now that the InsurTechs are here, insurance companies face the same dilemma the retail industry has a couple of years back. They either adopt the customer-centric, digital approach to servicing their customers, or they will lose market share. The days when customers went to see their insurance agents are mostly gone now. In the age of Amazon, insurance companies must offer a buying experience and a personalized relationship that will make finding, buying and renewing insurance simple and pleasant.

Covid-19 accelerated virtual inspections

Prior to Covid-19, only the largest companies advanced in their digital transformation journey leveraged virtual inspections. As no one expected that a global pandemic would hit and force everyone to retreat to virtual channels, they mainly used virtual inspections to enhance productivity and to speed up claims processing. Companies like Allstate that have implemented virtual inspections back in 2016 ( you can read here how Grape Up helped Allstate build their solution ) have simply scaled up the teams and made it their no.1 channel of communication.

For companies that have not considered VI before, it took some time to launch the virtual inspection capability, but fortunately, with SaaS solutions available, the adoption was relatively easy. What seems to be certain at this point, according to the conference panelists, is that the virtual inspections are here to stay. Even with the pandemic gone and social interactions resumed, it is unlikely the insurance companies will want to go back to in-person inspections, and more importantly, that the customers will.

Embedded insurance is growing

Embedded insurance is one of the hot topics of 2021 that may well change forever the adage saying insurance is something a person needs rather than wants. With the development of connectivity, IT architectures, APIs, and digital ecosystems, embedded insurance has the potential to provide a one-stop-shop experience for customers buying new products. The embedded insurance model installs the coverage within the purchase of a product. That means the insurance is not sold to the customer at some point in the future but is instead provided as a native feature of the product.

That way a new smartphone is covered for theft and damage right from the get-go. For insurance companies, this new way of doing business promises a variety of new opportunities to develop, enter, and target insurance segments they have not operated in before.

From the technology standpoint, it seems clear that all these trends will drive insurance companies to further develop their cloud and data capabilities . The ability to perform operations on data in near real-time will be the key to sustaining and growing the customer-centric approach that is necessary if carriers want to remain competitive.

Train your computer with the Julia programming language - introduction

As the Julia programming language is becoming very popular in building machine learning applications, we explain its advantages and suggest how to leverage them.

Python and its ecosystem have dominated the machine learning world – that’s an undeniable fact. And it happened for a reason. Ease of use and simple syntax undoubtedly contributed to still growing popularity. The code is understandable by humans, and developers can focus on solving an ML problem instead of focusing on the technical nuances of the language. But certainly, the most significant source of technology success comes from community effort and the availability of useful libraries.

In that context, the Python environment really shines. We can google in five seconds a possible solution for a great majority of issues related to the language, libraries, and useful examples, including theoretical and practical aspects of our intelligent application or scientific work. Most of the machine learning related tutorials and online courses are embedded in the Python ecosystem. If some ML or AI algorithm is worth of community’s attention, there is a huge probability that somebody implemented it as a Python open-source library.

Python is also the "Programming Language of the 2020" award winner. The award is given to the programming language that has the highest rise in ratings in a year based on the TIOBE programming community index (a measure of the popularity of programming languages). It is worth noting that the rise of Python language popularity is strongly correlated with the rise of machine learning popularity.

Equipped with such great technology, why still are we eager to waste a lot of our time looking for something better? Except for such reasons as being bored or the fact that many people don’t like snakes (although the name comes from „Monty Python’s Flying Circus”, a python still remains a snake). We think that the answer is quite simple: because we can do it better.

From Python to Julia

To understand that there is a potential to improve, we can go back to the early nineties when Python was created. It was before 3rd wave of artificial intelligence popularity and before the exponential increase in interest in deep learning. Some hard-to-change design decisions that don’t fit modern machine learning approaches were unavoidable. Python is old, it’s a fact, a great advantage, but also a disadvantage. A lot of great and groundbreaking things happened from the times when Python was born.

While Python has dominated the ML world, a great alternative has emerged for anyone who expects more. The Julia Language was created in 2009 by a four-person team from MIT and released in 2012. The authors wanted to address the shortcomings in Python and other languages. Also, as they were scientists, they focused on scientific and numerical computation, hitting a niche occupied by MATLAB, which is very good for that application but is not free and not open source. The Julia programming language combines the speed of C with the ease of use of Python to satisfy both scientists and software developers. And it integrates with all of them seamlessly.

In the following sections, we will show you how the Julia Language can be adapted to every Machine Learning problem . We will cover the core features of the language shown in the context of their usefulness in machine learning and comparison with other languages. A short overview of machine learning tools and frameworks available in Julia is also included. Tools for data preparation, visualization of results, and creating production pipelines also are covered. You will see how easily you can use ML libraries written in other languages like Python, MATLAB, or C/C++ using powerful metaprogramming features of the Julia language. The last part presents how to use Julia in practice, both for rapid prototyping and building cloud-based production pipelines.

The Julia language

Someone said if Python is a premium BMW sedan (petrol only, I guess, eventual hybrid) then Julia is a flagship Tesla. BMW has everything you need, but more and more people are buying Tesla. I can somehow agree with that, and let me explain the core features of the language which makes Julia so special and let her compete for a place in the TIOBE ranking with such great players as LISP, Scala, or Kotlin (31st place in March 2021).

Unusual JIT/AOT complier

Julia uses the LLVM compiler framework behind the scenes to translate very simple and dynamic syntax into machine code. This happens in two main steps. The first step is precompilation, before final code execution, and what may be surprising this it actually runs the code and stores some precompilation effects in the cache. It makes runtime faster but slower building – usually this is an acceptable cost.

The second step occurs in runtime. The compiler generates code just before execution based on runtime types and static code analysis. This is not how traditional just-in-time compilers work e.g., in Java. In “pure” JIT the compiler is not invoked until after a significant number of executions of the code to be compiled. In that context, we can say that Julia works in much the same way as C or C++. That’s why some people call Julia compiler a just-ahead-of-time compiler, and that’s why Julia can run near as fast as C in many cases while remaining a dynamic language like Python. And this is just awesome.

Read-eval-print loop

Read-eval-print loop (REPL) is an interactive command line that can be found in many modern programming languages. But in the case of Julia, the REPL can be used as the real heart of the entire development process. It lets you manage virtual environments, offers a special syntax for the package manager, documentation, and system shell interactions, allows you to test any part of your code, the language, libraries, and many more.

Friendly syntax

The syntax is similar to MATLAB and Python but also takes the best of other languages like LISP. Scientists will appreciate that Unicode characters can be used directly in source code, for example, this equation: f(X,u,σᵀ∇u,p,t) = -λ * sum(σᵀ∇u.^2)

is a perfectly valid Julia code. You may notice how cool it can be in terms of machine learning. We use these symbols in machine learning related books and articles, why not use them in source code?

Optional typing

We can think of Julia as dynamically typed but using type annotation syntax, we can treat variables as being statically typed, and improve performance in cases where the compiler could not automatically infer the type. This approach is called optional typing and can be found in many programming languages. In Julia however, if used properly, can result in a great boost of performance as this approach fits very well with the way Julia compiler works.

A ‘Glue’ language

Julia can interface directly with external libraries written in C, C++, and Fortran without glue code. Interface with Python code using PyCall library works so well that you can seamlessly use almost all the benefits of great machine learning Python ecosystem in Julia project as if it were native code! For example, you can write:

np = pyimport(numpy)

and use numpy in the same way you do with Python using Julia syntax. And you can configure a separate miniconda Python interpreter for each project and set up everything with one command as with Docker or similar tools. There are bindings to other languages as well e.g., Java, MATLAB, or R.

Julia supports metaprogramming

One of Julia's biggest advantages is Lisp-inspired metaprogramming. A very powerful characteristic called homoiconicity explained by a famous sentence: “code is data and data is code” allows Julia programs to generate other Julia programs, and even modify their own code. This approach to metaprogramming gives us so much flexibility, and that’s how developers do magic in Julia.

Functional style

Julia is not an object-oriented language. Something like a model.fit() function call is possible (Julia is very flexible) but not common in Julia. Instead, we write fit(model) , and it's not about the syntax, but it is about the organization of all code in our program (modules, multiple dispatches, functions as a first-class citizen, and many more).

Parallelization and distributed computing

Designed with ML in mind, Julia focusses on the scientific computing domain and its needs like parallel, distributed intensive computation tasks. And the syntax is very easy for local or remote parallelism.

Disadvantages

Well, it might be good if the compiler wasn't that slow, but it keeps getting better. Sometimes REPL could be faster, but again it’s getting better, and it depends on the host operating system.

Conclusion

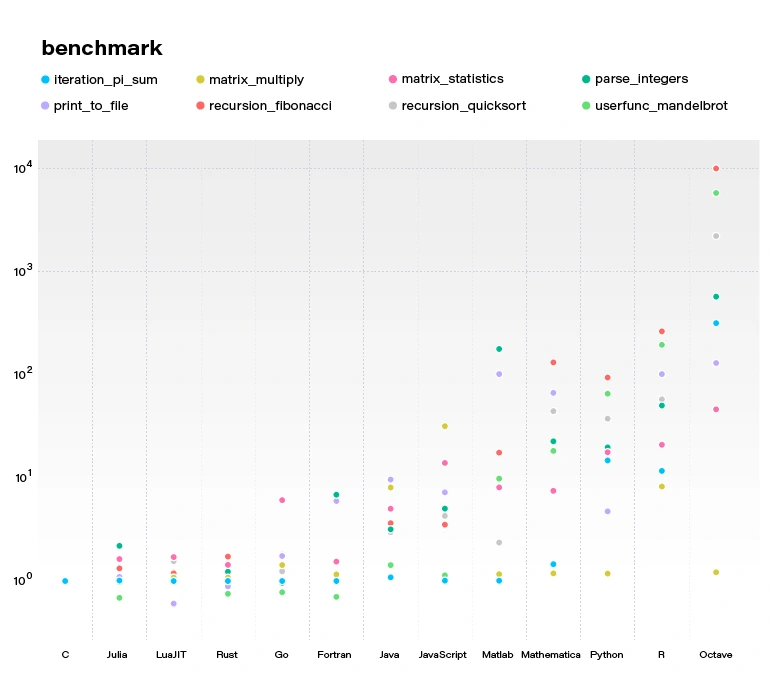

By concluding this section, we would like to demonstrate a benchmark comparing several popular languages and Julia. All language benchmarks should be treated not too seriously, but they still give an approximate view of the situation.

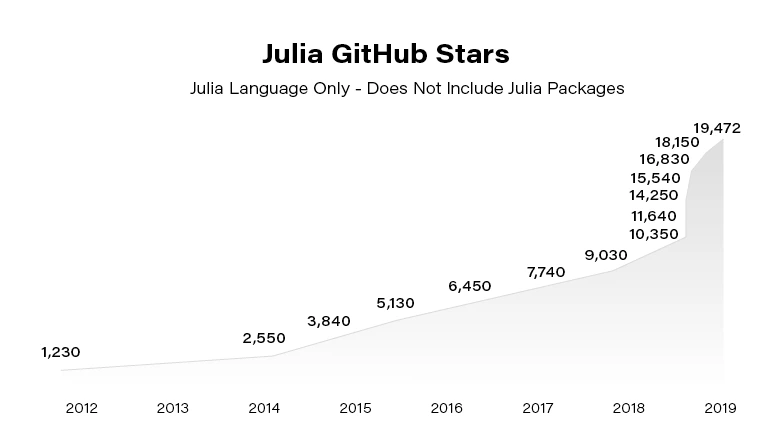

Julia becomes more and more popular. Since the 2012 launch, Julia has been downloaded over 25,000,000 times as of February 2021, up by 87% in a year.

In the next article, we focus on using Julia in building Machine Learning models. You can also check our guide to getting started with the language .

Introduction to building intelligent document processing systems

Building Intelligent Document Processing systems for financial institutions is challenging. In this article, we share our approach to developing an IDP solution that goes far beyond a simple NLP task.

The series about Intelligent Document Processing (IDP) consists of 3 parts:

- Problem definition and data

- Classification and validation

- Entities finders

Building intelligent document processing systems - problem introduction

The selected domain that could be improved with AI was mortgage filings . These filings are required for mortgages to be serviced or transferred and are jurisdiction-specific. When a loan is serviced, many forms are filed with jurisdictions, banks, servicing companies, etc. These forms must be filed promptly, correctly, and accurately. Many of these forms are actual paper as only a relatively small number of jurisdictions allow for e-sign.

The number of types of documents is immense. For example, we are looking at MSR Transfers, lien release, lien perfection, servicing transfer, lien enforcement, lien placement, foreclosure, forbearance, short sell, etc. All of these procedures have more than one form and require specific timeframes for not only filing but also follow-up. Most jurisdictions are extremely specific on the documents and their layout. Ranging from margins to where the seals are placed to a font to sizing to wording. It can change between geographically close jurisdictions.

What may be surprising, these documents, usually paper, are sent to the centers to be sorted and scanned. The documents are visually inspected by a human. They decide not only further processing of the documents but sometimes need to extract or tag some knowledge at the stage of routing. This process seems incredibly laborious considering the fact that a large organization can process up to tens of thousands of documents per day!

AI technology, as its understanding and trust grows, naturally finds a place in similar applications, automating subsequent tasks, one by one. There are many places waiting for technological advancement, and here are some ideas on how it can be done.

Overview

There are a few crucial components in the prepared solution

- OCR

- Documents classification

- Jurisdiction recognition

- Property addresses

- Party names and roles

- Document and file date

Each of them has some specific aspects that have to be handled, but all of them (except OCR) fall into one of 2 classical Natural Language Processing tasks: classification and Named Entity Recognition (NER).

OCR

There are a lot of OCRs that can transcribe the text from a document. Contrary to what we know after working on VIN Recognition System , the available OCRs are probably designed and are doing well on random documents of various kinds.

On the other hand, having some possibilities – Microsoft Computer Vision, AWS Textract, Google Cloud Vision, open-source Tesseract, naming a few, how to choose the best one? Determining the solution that fits best in our needs is a tough decision on its own. It requires well-structured experiments.

- We needed to prepare test sets to benchmark overall accuracy

- We needed to analyze the performance on handwriting

The results showed huge differences between the services, both in terms of accuracy on regular and hand-written text.

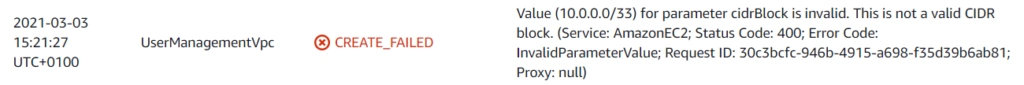

The best services we found were Microsoft Computer Vision, AWS Textract, and Google Cloud Vision. On 3 sets, they achieved the following results:

AWS Textract Microsoft CV Google CV Set 1 66.4 95.8 93.1 Set 2 87.2 96.5 91.8 Set 3 78.0 92.6 93.8 % of OCR results on different benchmarks

Hand-written text works on its own terms. As often in the real world, any tool has weaknesses, and the performance on printed text is somehow opposite to the performance on the hand-written text. In summary, OCRs have different characteristics in terms of output information, recognition time, detection, and recognition accuracy. There are at best 8% errors, but some services work as badly as recognizing 25% of words wrongly.

After selecting OCR, we had to generate data for classifiers. Recognizing tons of documents is a time-consuming process (the project team spent an extra month on the character recognition itself.) After that step, we could collect the first statistics and describe the data.

Data diversity

We collected over 80000 documents. The average file had 4.3 pages. Some of them longer than 10 pages, with a record holder of 96 pages.

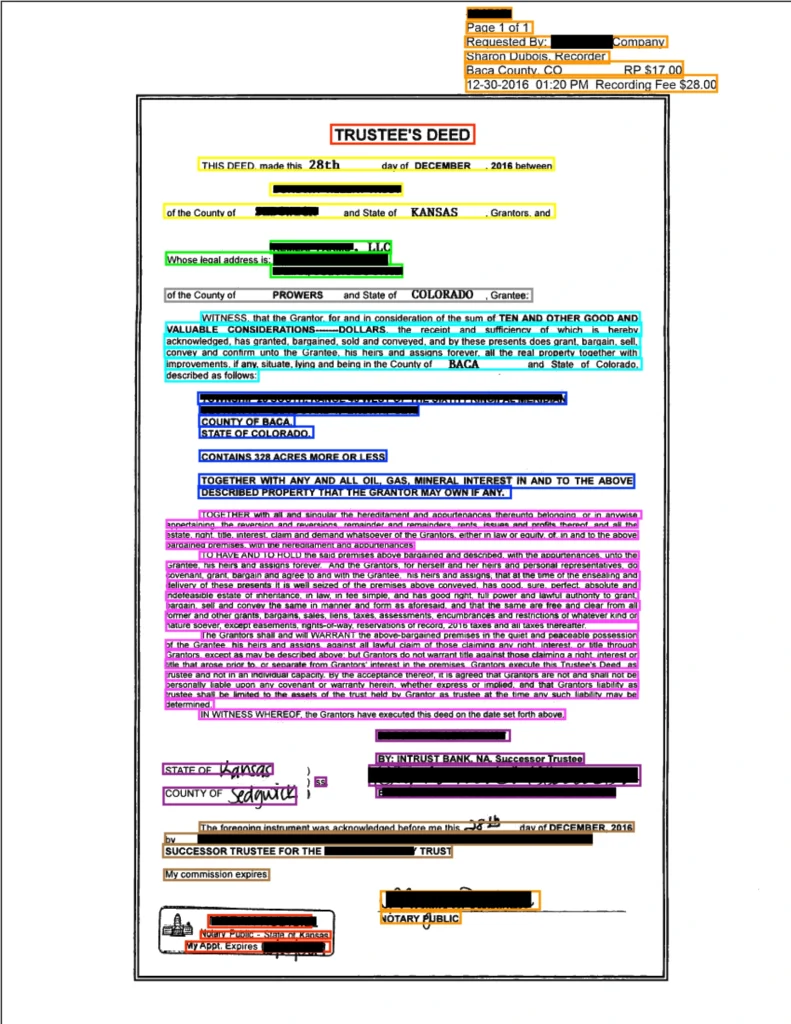

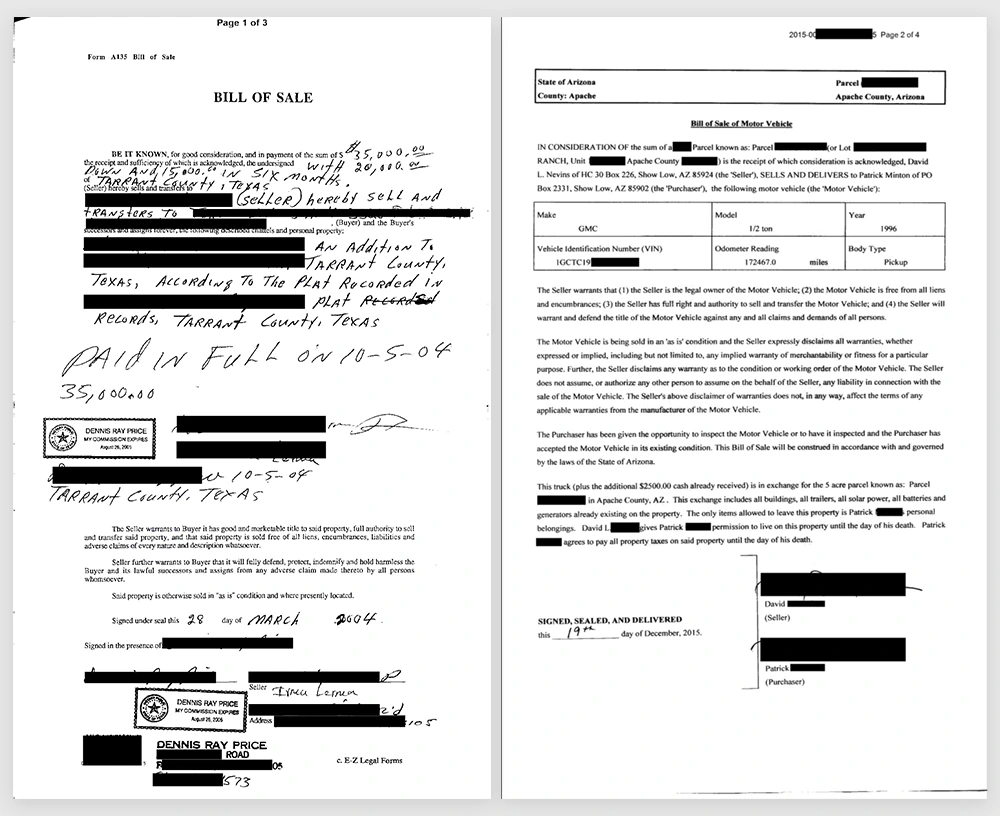

Take a look at the following documents – Document A and Document B. They are both of the same type – Bill of Sale!

- Half of document A is hand-written, while the other has only signatures

- There is just a brief detail about the selling process on Doc A, whereas on the other there are a lot of details about the truck inspection

- The sold vehicle in document B is described in the table

- Only the day in the document date of document B is hand-written

- There is a barcode on the Doc A

- The B document has 300% more words than the A

Also, we find a visual impression of these documents much different.

How can the documents be so different? The types of documents are extremely numerous and varied, but also are constantly being changed and added by the various jurisdictions. Sometimes they are sent together with the attachments, so we have to distinguish the attachment from the original document.

There are more than 3000 jurisdictions in the USA. Only a few administrative jurisdictions share mortgage fillings. Fortunately, we could focus on present-day documents, but it happens that some of the documents have to be processed that are more than 30 years old.

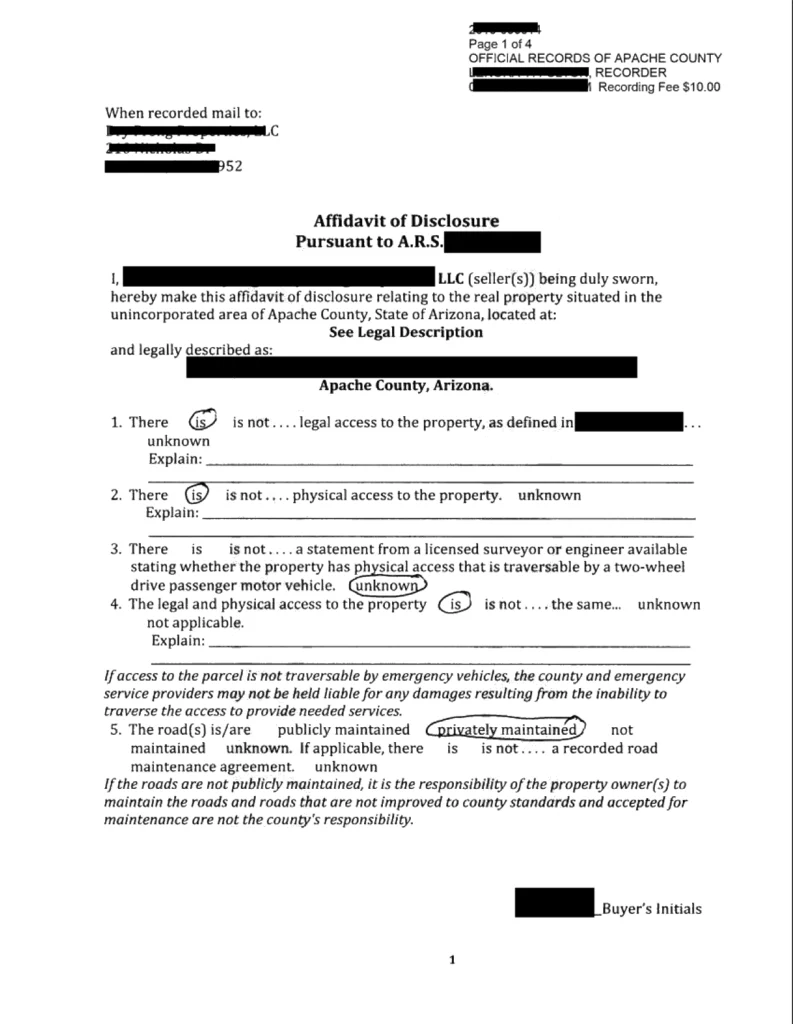

Some documents were well structured: each interesting value was annotated with a key, everything in tables. It happened, however, that a document was entirely hand-written. You can see some documents in the figures. Take a note that some information on the first is just a work marked with a circle!

Next steps

The obtained documents were just the fundamentals for the next research. Such a rich collection enabled us to take the next steps , even though the variety of documents was slightly frightening. Did we manage to use the gathered documents for a working system?

Getting started with the Julia language

Are you looking for a programming language that is fast, easy to start with, and trending? The Julia language meets the requirements. In this article, we show you how to take your first steps.

What is the Julia language?

Julia is an open-source programming language for general purposes. However, it is mainly used for data science, machine learning , or numerical and statistical computing. It is gaining more and more popularity. According to the TIOBE index, the Julia language jumped from position 47 to position 23 in 2020 and is highly expected to head towards the top 20 next year.

Despite the fact Julia is a flexible dynamic language, it is extremely fast. Well-written code is usually as fast as C, even though it is not a low-level language. It means it is much faster than languages like Python or R, which are used for similar purposes. High performance is achieved by using type interference and JIT (just-in-time) compilation with some AOT (ahead-of-time) optimizations. You can directly call functions from other languages such as C, C++, MATLAB, Python, R, FORTRAN… On the other hand, it provides poor support for static compilation, since it is compiled at runtime.

Julia makes it easy to express many object-oriented and functional programming patterns. It uses multiple dispatches, which is helpful, especially when writing a mathematical code. It feels like a scripting language and has good support for interactive use. All those attributes make Julia very easy to get started with and experiment with.

First steps with the Julina language

- Download and install Julia from Download Julia .

- (Optional - not required to follow the article) Choose your IDE for the Julia language. VS Code is probably the most advanced option available at the moment of writing this paragraph. We encourage you to do your research and choose one according to your preferences. To install VSCode, please follow Installing VS Code and VS Code Julia extension .

Playground

Let’s start with some experimenting in an interactive session. Just run the Julia command in a terminal. You might need to add Julia's binary path to your PATH variable first. This is the fastest way to learn and play around with Julia.

C:\Users\prso\Desktop>julia

_

_ _ _(_)_ | Documentation: https://docs.julialang.org

(_) | (_) (_) |

_ _ _| |_ __ _ | Type "?" for help, "]?" for Pkg help.

| | | | | | |/ _` | |

| | |_| | | | (_| | | Version 1.5.3 (2020-11-09)

_/ |\__'_|_|_|\__'_| | Official https://julialang.org/ release

|__/ |

julia> println("hello world")

hello world

julia> 2^10

1024

julia> ans*2

2048

julia> exit()

C:\Users\prso\Desktop>

To get a recently returned value, we can use the ans variable. To close REPL, use exit() function or Ctrl+D shortcut.

Running the scripts

You can create and run your scripts within an IDE. But, of course, there are more ways to do so. Let’s create our first script in any text editor and name it: example.jl.

x = 2

println(10x)

You can run it from REPL:

julia> include("example.jl")

20

Or, directly from your system terminal:

C:\Users\prso\Desktop>julia example.jl

20

Please be aware that REPL preserves the current state and includes statement works like a copy-paste. It means that running included is the equivalent of typing this code directly in REPL. It may affect your subsequent commands.

Basic types

Julia provides a broad range of primitive types along with standard mathematical functions and operators. Here’s the list of all primitive numeric types:

- Int8, UInt8

- Int16, UInt16

- Int32, UInt32

- Int64, UInt64

- Int128, UInt128

- Float16

- Float32

- Float64

A digit suffix implies several bits and a U prefix that is unsigned. It means that UInt64 is unsigned and has 64 bits. Besides, it provides full support for complex and rational numbers.

It comes with Bool , Char , and String types along with non-standard string literals such as Regex as well. There is support for non-ASCII characters. Both variable names and values can contain such characters. It can make mathematical expressions very intuitive.

julia> x = 'a'

'a': ASCII/Unicode U+0061 (category Ll: Letter, lowercase)

julia> typeof(ans)

Char

julia> x = 'β'

'β': Unicode U+03B2 (category Ll: Letter, lowercase)

julia> typeof(ans)

Char

julia> x = "tgα * ctgα = 1"

"tgα * ctgα = 1"

julia> typeof(ans)

String

julia> x = r"^[a-zA-z]{8}$"

r"^[a-zA-z]{8}$"

julia> typeof(ans)

Regex

Storage: Arrays, Tuples, and Dictionaries

The most commonly used storage types in the Julia language are: arrays, tuples, dictionaries, or sets. Let’s take a look at each of them.

Arrays

An array is an ordered collection of related elements. A one-dimensional array is used as a vector or list. A two-dimensional array acts as a matrix or table. More dimensional arrays express multi-dimensional matrices.

Let’s create a simple non-empty array:

julia> a = [1, 2, 3]

3-element Array{Int64,1}:

1

2

3

julia> a = ["1", 2, 3.0]

3-element Array{Any,1}:

"1"

2

3.0

Above, we can see that arrays in Julia might store Any objects. However, this is considered an anti-pattern. We should store specific types in arrays for reasons of performance.

Another way to make an array is to use a Range object or comprehensions (a simple way of generating and collecting items by evaluating an expression).

julia> typeof(1:10)

UnitRange{Int64}

julia> collect(1:3)

3-element Array{Int64,1}:

1

2

3

julia> [x for x in 1:10 if x % 2 == 0]

5-element Array{Int64,1}:

2

4

6

8

10

We’ll stop here. However, there are many more ways of creating both one and multi-dimensional arrays in Julia.

There are a lot of built-in functions that operate on arrays. Julia uses a functional style unlike dot-notation in Python. Let’s see how to add or remove elements.

julia> a = [1,2]

2-element Array{Int64,1}:

1

2

julia> push!(a, 3)

3-element Array{Int64,1}:

1

2

3

julia> pushfirst!(a, 0)

4-element Array{Int64,1}:

0

1

2

3

julia> pop!(a)

3

julia> a

3-element Array{Int64,1}:

0

1

2

Tuples

Tuples work the same way as arrays. A tuple is an ordered sequence of elements. However, there is one important difference. Tuples are immutable. Trying to call methods like push!() will result in an error.

julia> t = (1,2,3)

(1, 2, 3)

julia> t[1]

1

Dictionaries

The next commonly used collections in Julia are dictionaries. A dictionary is called Dict for short. It is, as you probably expect, a key-value pair collection.

Here is how to create a simple dictionary:

julia> d = Dict(1 => "a", 2 => "b")

Dict{Int64,String} with 2 entries:

2 => "b"

1 => "a"

julia> d = Dict(x => 2^x for x = 0:5)

Dict{Int64,Int64} with 6 entries:

0 => 1

4 => 16

2 => 4

3 => 8

5 => 32

1 => 2

julia> sort(d)

OrderedCollections.OrderedDict{Int64,Int64} with 6 entries:

0 => 1

1 => 2

2 => 4

3 => 8

4 => 16

5 => 32

We can see, that dictionaries are not sorted. They don’t preserve any particular order. If you need that feature, you can use SortedDict .

julia> import DataStructures

julia> d = DataStructures.SortedDict(x => 2^x for x = 0:5)

DataStructures.SortedDict{Any,Any,Base.Order.ForwardOrdering} with 6 entries:

0 => 1

1 => 2

2 => 4

3 => 8

4 => 16

5 => 32

DataStructures is not an out-of-the-box package. To use it for the first time, we need to download it. We can do it with a Pkg package manager.

julia> import Pkg; Pkg.add("DataStructures")

Sets

Sets are another type of collection in Julia. Just like in many other languages, Set doesn’t preserve the order of elements and doesn’t store duplicated items. The following example creates a Set with a specified type and checks if it contains a given element.

julia> s = Set{String}(["one", "two", "three"])

Set{String} with 3 elements:

"two"

"one"

"three"

julia> in("two", s)

true

This time we specified a type of collection explicitly. You can do the same for all the other collections as well.

Functions

Let’s recall what we learned about quadratic equations at school. Below is an example script that calculates the roots of a given equation: ax2+bx+c .

discriminant(a, b, c) = b^2 - 4a*c

function rootsOfQuadraticEquation(a, b, c)

Δ = discriminant(a, b, c)

if Δ > 0

x1 = (-b - √Δ)/2a

x2 = (-b + √Δ)/2a

return x1, x2

elseif Δ == 0

return -b/2a

else

x1 = (-b - √complex(Δ))/2a

x2 = (-b + √complex(Δ))/2a

return x1, x2

end

end

println("Two roots: ", rootsOfQuadraticEquation(1, -2, -8))

println("One root: ", rootsOfQuadraticEquation(2, -4, 2))

println("No real roots: ", rootsOfQuadraticEquation(1, -4, 5))

There are two functions. The first one is just a one-liner and calculates a discriminant of the equation. The second one computes the roots of the function. It returns either one value or multiple values using tuples.

We don’t need to specify argument types. The compiler checks those types dynamically. Please take note that the same happens when we call sqrt() function using a √ symbol. In that case, when the discriminant is negative, we need to wrap it with a complex()function to be sure that the sqrt() function was called with a complex argument.

Here is the console output of the above script:

C:\Users\prso\Documents\Julia>julia quadraticEquations.jl

Two roots: (-2.0, 4.0)

One root: 1.0

No real roots: (2.0 - 1.0im, 2.0 + 1.0im)

Plotting

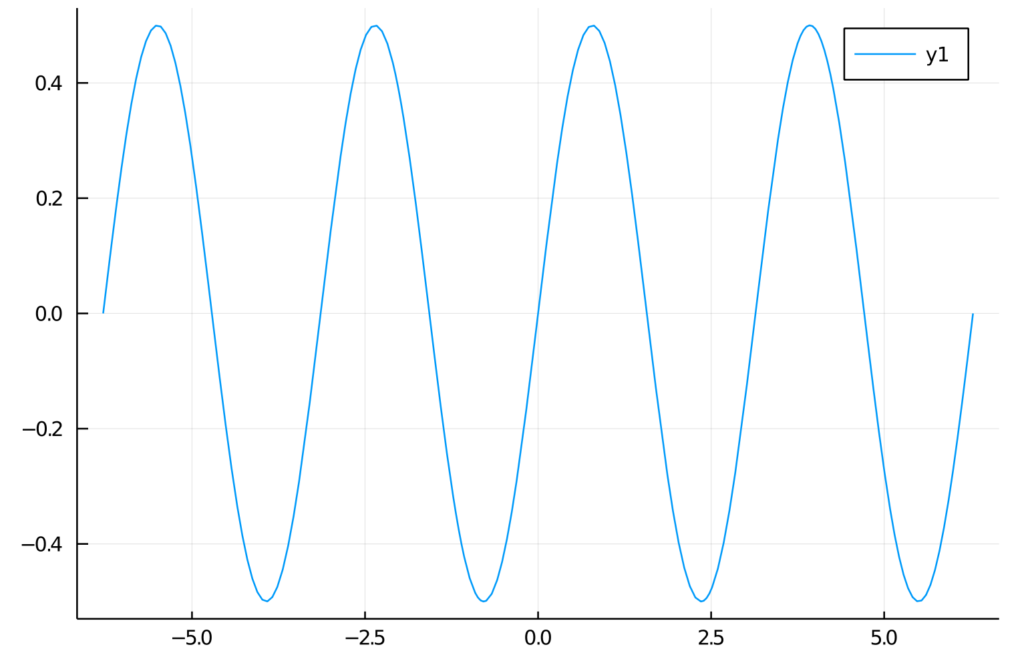

Plotting with the Julia language is straightforward. There are several packages for plotting. We use one of them, Plots.jl.

To use it for the first, we need to install it:

julia> using Pkg; Pkg.add("Plots")

After the package was downloaded, let’s jump straight to the example:

julia> f(x) = sin(x)cos(x)

f (generic function with 1 method)

julia> plot(f, -2pi, 2pi)

We’re expecting a graph of a function in a range from -2π to 2π. Here is the output:

Summary and further reading

In this article, we learned how to get started with Julia. We installed all the required components. Then we wrote our first “hello world” and got acquainted with basic Julia elements.

Of course, there is no way to learn a new language from reading one article. Therefore, we encourage you to play around with the Julia language on your own.

To dive deeper, we recommend reading the following sources:

Automotive Tech Week Megatrends: Key takeaways

For the last couple of months, the ones during which we have to cope with COVID-19, we could have heard people all over the world saying that virtual events are pointless, and it’s not the same as it used to be. Presentations are pre-recorded, and speakers are reading the text instead of presenting. If you ask the speaker a question, you may not get the answer because s/he is not there, and the audience is very limited.

Luckily, not all the events go like this. A perfect example of a great virtual event is Automotive Tech Week Megatrends that took place 2 weeks ago. Great speakers, insightful topics even for the most demanding automotive industry enthusiasts. A lot of attendees and most importantly - everything was happening live!

If you are a member of the automotive community, or at least you follow the industry trends, you have probably noticed how impactful changes have happened there during the last few years. From a very conservative industry, it turned into a forefront of digital disruption, adopting the newest technologies and trends faster than ever. How does it work? High-speed internet availability, LTE and 5G network coverage increased largely. Extreme miniaturization of fast, low-energy silicon chips made modern technologies like augmented reality or autonomous driving available for the users, but it also completely changed the way OEM’s build cars. We can also observe new channels of monetization in the industry.

Results are spectacular. Not so long ago, introducing a new model or solutions used to take a few years, now automotive enterprises work at a rapid pace having a new competition on the market like Tesla or Rimac. Subscription-based monetization models are here to stay, as well as huge infotainment screens and very sophisticated, intelligent driver assistants.

But technology is not the only aspect of the fast-changing world. The other, maybe even more important trend is the ecology in mobility, with the main focus on electric and hybrid drivetrains. Reducing the CO 2 footprint of the automotive industry by decreasing fossil fuel share in favor of electric or even fuel cell motors have become important and limited only by charging infrastructure and, still awaiting for game-changing invention, batteries capacity.

However, what are the next fundamental trends for the industry? Which of the trends, we can notice now, are here to stay and which are just fads? Speakers of Automotive Tech Week Megatrends tried to answer that specific question in the last week of January 2021.

Presentations covered a wide spectrum of topics: electric cars and charging infrastructures, urban mobility, and mobility as a service, user experience based vehicle and infotainment designs, V2X, and modern vehicle system infrastructure. Let’s quickly walk through the most interesting ones.

Evolving Mobility in an everchanging digital world

In the opening presentation, Stephen Zeh from Silvercar, Audi, explained the challenges of shared mobility, the evolution of their rental services and value of digital offerings, especially subscription-based. The shared mobility experiences dramatic growth caused by a shift in customer behavior and approach - owning a car is no longer a goal, but people still want to travel by car. Different means of travel require different vehicles - small cars for short trips, bigger for family tours, micromobility like electric bikes or scooters for urban travels or shared mobility like Uber replacing taxis.

The automotive industry had to quickly adapt to the pandemic situation, especially when, in April 2020, the ride-halting customer count plummeted as the fear of infection emerged in society and demand for contactless, safe car rental surged.

All of that caused major changes in Audi Dealerships offering, not just limited to vehicle sales anymore, but providing a much wider set of services for their clients.

How user experience drove design for the all-new F-150

The next day, Ehab Kaod, Chief Designer for F-150 at Ford, explained the challenges of designing a modern car by starting from user experience, affecting interior, exterior, and infotainment design and architecture.

Starting from on-site and remote customer research, finding the important features, and their pain points in previous truck models and how the new F-150 can solve their everyday issues. All of that resulted in new UX inventions like stow away, foldable shifter, bigger work surface inside, tailgate workbench or improved front seat design allowing a customer to take a nap in the vehicle.

5G & V2X – shaping the future of automotive

Another important presentation delivered by Claes Herlitz, VP and Head of Global Automotive Services at Ericsson, went through a relevant topic - always-connected vehicle infrastructure.

The challenge of a connected vehicle is the network bandwidth, stability of the connection, but also prioritization of services and a cost factor for the customer.

With overcoming this challenge, organic growth can come because of new services being available: fast, over-the-air updates improving the car and adding new features, data used for analytics to find the ways to improve cost efficiency and user experience.

Finding a balance between customer consent, data & new products

Next, Magnus Gunnarsson from Ericson, Sebastian Lasek from Skoda Auto, Manfred Wiedemann from Porsche AG, and Diego Villuendas Pellicero from Seat jointly participated in a panel covering the customer data handling from the perspective of different regions: Europe, USA, and China, using the data for designing and evaluating new products and strategies increasing customer acceptance for the handling of data securely.

With cars becoming always connected, IoT devices, the amounts of data about customer behavior or the vehicles itself grows exponentially. This may raise questions about data security, user consent for data storage and usage for e-commerce, marketing and improving user experience.

In Europe, the common framework for handling this situation is GDPR (General Data Protection Regulation), which helps to establish a common baseline for all manufacturers regarding data security and customer consent. It also helps OEMs feel safe when operating under this kind of strict regulations.

On the other hand, the big question arises if other regions, like America or China, should use the same processes, as both legal regulations and cultural differences may be an important aspect to consider when, for example, the vehicle infotainment asks customers for license acceptance or data usage consent when this is not really necessary.

All things considered, the big challenge for data monetization in Europe now is getting higher user acceptance.

The future of in-car payments – converting the car to a marketplace

Will Judge, Vice-President for Mobility Payments in Mastercard, explained in his presentation how car infotainment can be converted into a digital marketplace. For most customers, the convenience of digital commerce is not as important as its security. They want to feel they control the payment process with full knowledge about all parties that can access their sensitive data. Modern credit card providers enable digital transactions using secure tokens of the payment card that can be transferred through the internet, as well as heuristic and AI for fraud detection making the whole process safer.

With this level of security, the digital purchases can be safely provided in-car directly from infotainment systems with a rather easy-to-use manner for the user.

Automotive Tech Week Megatrends covered most of the hot topics that the industry is currently talking about. Great speakers representing world-recognized brands like Skoda and Audi showed some great results of their approach to innovation but most importantly how they are changing the way their organizations think of monetizing cars. Having advertisements appearing on your infotainment and a marketplace in Skoda cars seems to be the way that others will follow in the coming months/years. It is clearly visible how the Internet and Internet of Things, ecology, and data-driven approaches are guiding the industry to expand the market presence through sharing economy and subscription-based mobility. It seems that C.A.S.E strategy (Connected-Autonomous-Shared-Electric) is still the way to go!

Personalized finance as a key driver leading Financial Services industry into the future

Personalization in finance is a process that has been steadily developing for the last decade. It’s the most crucial trend you can pay attention to as it captures the essence of what modern consumers want. Individual service and attention.

Personalized finance is a journey with the customer in focus. Getting closer to customers means meeting them where they are, understanding their individual goals, and providing advice they actually want need.

Technology has introduced a tremendous change in personal finance. While it hasn’t yet democratized traditional banking and finance the way it’s promised, it’s well on its way – transforming the way we budget, invest, save or borrow money today. As companies enter the post-pandemic world, they face a consumer landscape undergoing sudden and radical change. Customer expectations have been transformed to make “anywhere, anytime” the norm. Achieving personalization at scale will be definitely one of the biggest challenges for traditional institutions in the race to differentiate through a digital channel in the coming years.

Why personalization matters in Financial Services?

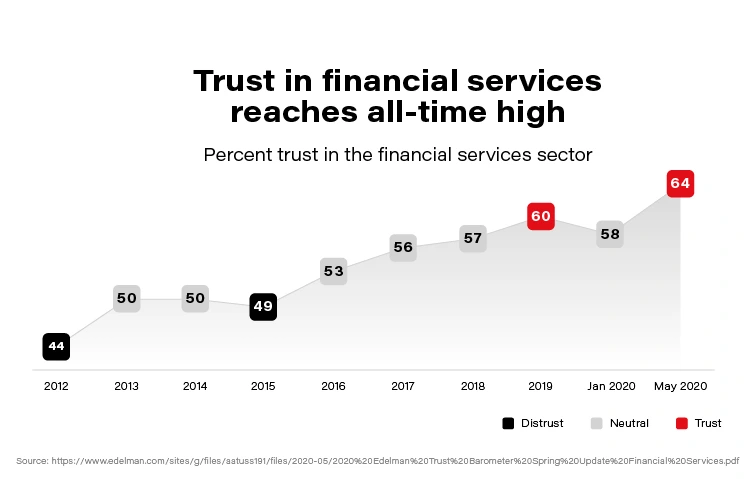

Personalized services shorten significantly the distance between a financial organization and its users, and are based on trust. The more we trust the product the more we are eager to share our personal data in order to receive a tailored, highly individualized service that maximizes value for customers. Trust is still the strongest word in banking. The 2020 Edelman Trust Barometer Spring Update: Special Report on Financial Services and the Covid-19 Pandemic reveals that the public’s trust in financial services has reached an all-time high of 65 percent amid the pandemic.

Now, when we associate the trust clients put i.e., into banks with the scale of daily operations (counted in millions), we easily conclude that there is an enormous opportunity in front of the financial sector to build highly personalized products and enable customers to realize their financial wellbeing potential.

With an increasing demand for more personalized experiences and focus on sustainability, Banks, Insurers, or Asset Management organizations are reaching the limits of where their current technology can take them with their business transformation initiatives. Therefore, it’s more critical than ever for Financial Institutions to turn towards Data and AI and get the most out of it - to meet these demands.

While reading different collaterals for the purpose of this article, we noticed an interesting paradox related to the financial domain. According to the variety of global innovation studies (i.e., Forbes Most Innovative Companies or The Global Innovation 1000 study), the Financial Services domain lacks innovation for nearly two decades. On the contrary, it has consistently been the most profitable sector in FORTUNE GLOBAL 500!

Data and AI for personalized finance

To make sure an organization stands out of the competition in an ever-changing financial environment and understands its customers better, it should radically change its approach to Data & AI utilization. Although traditional banks and financial enterprises differ by size, market dynamic, or type of services offering - there is a common set of requirements (attached below) worth taking into consideration when pivoting towards better personalization which can be achieved thanks to the advanced use of Data & AI within an enterprise:

- The Executive Board must believe that Data & AI will make a difference, and this commitment requires long-term investment/research.

- Organizations need to find a Champion that can lead a data/AI team, but at the same time be able to talk to the business stakeholders and articulate the benefits of the models *tools* being implemented.

- It’s necessary to spend time to create Data Strategy, determine a toolset, and figure out when to use Data vs. Analytics vs. AI. Without a strategy, an organization is flying blind and wastes precious time/resources *money*.

- Focus on what brings real value to the organization. Build a use case backlog that balances return on investment, time to market, innovation, and data availability. All of these are important and can help an organization determine where to start and why.

- Data Ethics, Privacy, and Governance are critical to not just the organization but its customers. Use their data inappropriately or violate their privacy even once, and you risk forever damaging the relationship (see recent case of Robinhood).

- Don't assume you can do it by yourself. Consider hiring, engage with a consulting partner, outsource - all of the above are likely required.

How does technology help achieve personalization at a large scale?

Commonwealth Bank of Australia and Royal Bank of Scotland are early adopters in the traditional banking ecosystem when it comes to personalized CX. These companies use advanced data analytics coupled with artificial intelligence to offer personalized experiences – technology that allowed them to determine and deliver ‘the next best conversation’ at scale saw a 30 to 40 percent increase in sales, back in 2017!

Personetics , a data-driven platform that uses AI to help banks issue personalized advice and insights to customers, has raised $75 million in funding from private equity firm Warburg Pincus. Founded out of Israel in 2010, Personetics offers technology that works inside financial institutions’ software. It aims to analyze customers’ financial transactions and behavior and deliver real-time tips and suggestions to improve their longer-term financial health.

In the latest report related to digitalization at Handelsbanken (one of the largest European banks with HQ in Sweden), we see practical examples of how technology played a vital role in building highly personal advisory services, which increased the customer value and realize the potential in their 35 million digital meetings per month by utilizing data and treating each customer as an individual.

Thinking about the scale and impact of the financial business, there was no better time in human history to maintain simultaneously hundreds of millions of interactions to deliver value per individual need. Advancements in automation, cloud computing, or machine intelligence technologies create new space for maximizing the power of data and give an enormous opportunity for traditional businesses to exponentially grow the quality of relationships and keeping up with customers.

It’s very hard to compete with an organization that is capable to save its clients precious time and deliver value which is a sum of trust + context + momentum in an engaging and simple to consume form.

Head of Digital at Bank of America, David Tyrie, shared an interesting viewpoint that refers to personalization challenges for the traditional banking ecosystem during discussion at the Digital Banking 2020 Conference:

Tailoring client experiences 1:1 to feel timely, relevant, and credible requires real-time decisioning of transactional, contextual, and behavioral data and an adoption of open platforms so that information can flow to trusted partners. “Closed-loop, data ecosystems” can help realize hyper-personalization at scale because they enable continuous learning to serve customized and contextual experiences, content, offers, recommendations, and insights.

This continuous learning component is vital as it helps big organizations like banks or insurers build more individualized interaction with their customers and learn their behavior along the way. We already see a growing post-pandemic trend to emulate everything that’s physical and build a digital representation of physical relationships to make sure the customer is at the heart of financial transformation.

Obstacles and opportunities for personalized finance

Personalization requires financial organizations to jump multiple levels when it comes to data maturity. In order to win the race for customer attention in 2021, companies should become not only a data organization from the ground up but to be flexible and fast with implementing new structures (incepting necessary cultural shift), systems and competencies (digital skills) that distinguish them from the old legacy times.

Even though increased focus on customers brings obvious benefits for both the organization and its clients, there are several significant challenges before financial enterprises can reap the great benefits from personalization. It’s worth making sure the organization is prepared and can handle some of the existing challenges:

- Data consists of a lot of unstructured content, which makes it difficult to interpret.

- Instead of considering data as an IT asset, the ownership of data should be moved to the business users, making data a key asset for decision making (it’s necessary to strengthen cooperation between business & tech, to tear down the product-silos).

- Restrictions of regulatory requirements and privacy concerns.

- Integrating customer data from multiple sources to create comprehensive profiles that are then used by predictive analytics tools to generate the most relevant recommendations and products might cause data quality issue due to third-party, publicly available data sources for which financial services companies can’t manage the reliability.

It seems unreal that the successful banks and financial industry organizations of tomorrow will be that far removed from those of today. Rather how customers interact with them will be different (digital customer interface). Financial services have become anything but personal. The relationship we have with our money is being completely reimagined for the digital world, same as how we go about building financial services is changing. We are sure this trend will not only grow but will be one of the key drivers in the financial services transformation .

A path to a successful AI adoption

Artificial Intelligence seems to be a quite overused term in recent years, yet it is hard to argue that it is definitely the greatest technological promise of current times. Every industry strongly believes that AI will empower them to introduce innovations and improvements to drive their businesses, increase sales, and reduce costs.

But even though AI is no longer a new thing, companies struggle with adopting and implementing AI-driven applications and systems . That applies not only to large scale implementations (which are still very rare) but often to the very first projects and initiatives within an organization. In this article, we will shed some light on how to successfully adopt AI and benefit from it.

AI adoption - how to start?

How to start then? The answer might sound trivial, but it goes like this: start small and grow incrementally. Just like any other innovation, AI cannot be rolled out throughout the organization at once and then harvested across various business units and departments. The very first step is to start with a pilot AI adoption project in one area, prove its value and then incrementally scale up AI applications to other areas of the organization.

But how to pick the right starting point? A good AI pilot project candidate should have certain characteristics:

- It should create value in one of 3 ways:

- By reducing costs

- By increasing revenue

- By enabling new business opportunities

- It should give a quick win (6-12 months)

- It should be meaningful enough to convince others to follow

- It should be specific to your industry and core business

At Grape Up, we help our customers choose the initial AI project candidate by following a proven process. The process consists of several steps and eventually leads to implementing a single pilot AI project in production.

Step 1: Ideation

We start with identifying possible areas in the organization that might be enhanced with AI, e.g., parts of processes to improve, problems to solve, or tasks to automate. This part of the process is the most essential as it becomes the baseline for all subsequent phases. Therefore it is crucial to execute it together with the customer but also ensure the customer understands what AI can do for their organization. To enable that, we explain the AI landscape, including basic technology, data, and what AI can and cannot do. We also show exemplary AI applications to a customer-specific industry or similar industries.

Having that as a baseline, we move on to the more interactive part of that phase. Together with customer executives and business leaders, we identify major business value drivers as well as current pain points & bottlenecks through collaborative discussion and brainstorming. We try to answer questions such as:

- What in current processes impedes your business development?

- What tasks in current processes are repeatable, manual, and time-consuming?

- What are your pain points, bottlenecks, and inefficiencies in your current processes?

This step results in a list of several (usually 5 to 10) ideas ready for further investigation on where to potentially start applying AI in the organization.

Step 2: Business value evaluation

The next step aims at detailing the previously selected ideas. Again, together with the customer, we define detailed business cases describing how problems identified in step 1 could be solved and how these solutions can create business value.

Every idea is broken down into a more detailed description using the Opportunity Canvas approach - a simple model that helps define the idea better and consider its business value. Using filled canvas as the baseline, we analyze each concept and evaluate against the business impact it might deliver, focusing on business benefits and user value but also expected effort and cost.

Eventually, we choose 4-8 ideas with the highest impact and the lowest effort and describe detailed use cases (from business and high-level functional perspective).

Step 3: Technical evaluation

In this phase, we evaluate the technical feasibility of previously identified business cases – in particular, whether AI can address the problem, what data is needed, whether the data is available, what is the expected cost and timeframe, etc.

This step usually requires technical research to identify AI tools, methods, and algorithms that could best address the given computational problem, data analysis – to verify what data is needed vs. what data is available and often small-scale experiments to better validate the feasibility of concepts.

We finalize this phase with a list of 1-3 PoC candidates that are technically feasible to implement but more importantly – are verified to have a business impact and to create business value.

Step 4: Proof of Concept

Implementation of the PoC project is the goal of this phase and involves data preparation (to create data sets whose relationship to the model targets is understood), modeling (to design, train, and evaluate machine learning models), and eventual deployment of the PoC model that best addresses the defined problem.

It results in a working PoC that creates business value and is the foundation for the production-ready implementation.

How to move AI adoption forward?

Once the customer is satisfied with PoC results, they want to productionize the solution to fully benefit from the AI-driven tool. Moving pilots to production is also a crucial part of scaling up AI adoption. If the successful projects remain still just experiments and PoCs, then it is demanding for a company to move forward and apply AI to other processes within the organization .

To summarize the most important aspects of a successful AI adoption:

- Start small – do not try to roll out innovation globally at once.

- Begin with a pilot project – pick the meaningful starting point that provides business value but also is feasible.