Be lazy – do not reinvent the cloud wheel from scratch

Keeping up with the newest technologies is demanding. A lot of companies tend to do that once in a while, and it is totally understandable. It is hard to follow and discover the perfect momentum to choose the cloud technology that will be valuable and cost-effective for years to come.

Cloud is not only a technology, but it also determines how to design, build and maintain applications and systems. For someone who is currently engaged in the process of digital transformation that is a huge deal – it's like upgrading your rickshaw to the newest Mustang car. All of the development tasks seems to be so easy after adopting to all cloud and DevOps conditions.

The common mistake in the situation where your process and software tools are outdated is to reinvent the new environment by yourself. Especially, when sophisticated platforms or infrastructures come into the picture. Becoming an expert in cloud technologies is a perfect example. It requires a lot of time and resources to master the best tools and to make them work together in the way we want them to. That time should be spent instead on what drives the company’s business and what the customer cares about the most: developing the product that will be running in the cloud.

At Grape Up, we follow a technology agnostic approach. We choose tools and technologies that are tailored to every specific customer and project. While working with the various companies on digital transformation, we helped several teams leverage cloud-native technologies and adapt a DevOps approach to deliver software faster, better, and safer. And despite the fact that every case was different, we have identified a visible pattern. Our team discovered a strong market demand on a cloud-native platform based on open source solutions. We also noticed that in most cases platform maintenance operations were outsourced. Again, building know-how is a hard process, so why not let someone with the right experience lead the way?

This is how Cloudboostr was born. A complete solution to run any workload in any cloud . All based on available open source tools that are well-known by the community and most importantly widely used and maintained. In the beginning, we’ve created a type of reference architecture to accelerate the digital transformation process. It was used as our internal boilerplate, not to reinvent to the whole thing with every customer, but rather use something based on the experiences from previous projects. Along the way, what used to be our template, got mature, and the time has come to make it an external platform, available for other companies.

How does the Cloudboostr platform work?

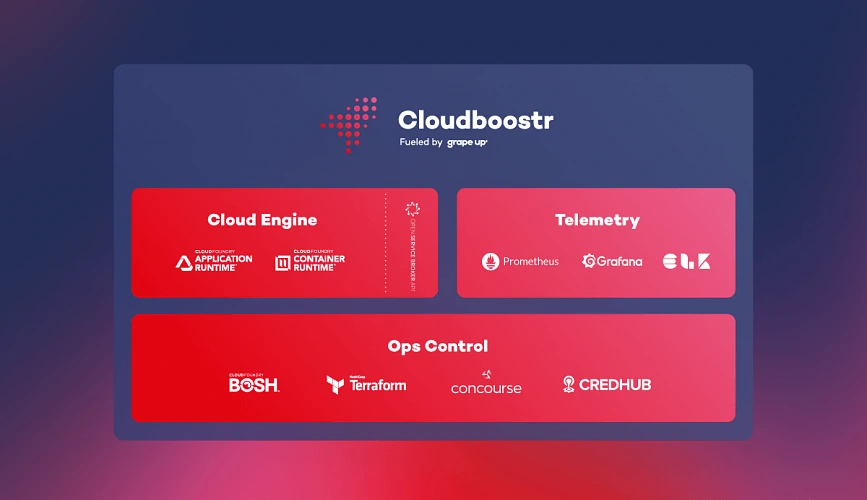

The Cloudboostr’s technology stack incorporates a number of leading open source technologies proven to work well together. Cloudboostr’s Cloud Engine is built with Kubernetes and Cloud Foundry, making applications deployment easy and both solutions cover a wide spectrum of application use cases. Terraform, BOSH & Concourse are used to automate the platform’s management and operations, and also track who, when & why applied a particular change. Built-in Telemetry toolkit consisting of Prometheus, Grafana and ELK stack allows to monitor the whole platform, investigate any issues and also recognize areas which should be improved.

The main abstraction layer of the platform is called Ops Control. It is a control panel for the whole platform. Here, all of the environments and also all shared resources like managing, monitoring or alerting services, are created and configured. Every environment can be created with either Cloud Foundry or Kubernetes or both of them. Shared resources help to monitor and maintain all of the environments and ensure proper workflows. From the operator’s perspective, the main point of interaction is Concourse with all of the pipelines and CLI for more advanced commands. Plus all of the Telemetry dashboards, with all sorts of graphical charts and metrics, where all concerns are clearly visible.

Cloudboostr as a platform includes all of the third party components under its wings. This means that any new platform releases will incorporate not only internal features or improvements but also upgrades of its elements if available. From a perspective of platform user, this is convenient - there is no need to worry about any upgrades, but one - the platform.

From a production perspective, it is crucial to have your platform always up and running. Cloudboostr uses HA-mode by default and leverages the cloud’s multi-AZ capabilities. It also automatically backs up and restores individual components of the platform and their state and data, allowing you to easily recreate and restore a corrupted or failed environment.

It is also important that Cloudboostr comes pre-configured, so if you're starting a journey with the cloud, you can install the platform very fast and be ready to deploy your applications very quickly. The initial cost of getting platform working is fairly low when it comes to getting the know-how, and that's a huge benefit.

How can Cloudboostr be tested?

It is obvious that platform is a big deal and no one would like to buy a pig in a poke. Especially when long term services are in the picture. Grape Up enables customers to try out the platform for a while before making a final decision. It is also understandable that most of the companies need more time to get to know the technology behind the platform. That's why the trial time is done in the dojo style. For a few weeks, the Grape Up crew settles in a customer location, installs the out of the box product, teaches how to use it and also navigates migration of the first application to the platform. Support provided by experienced platform engineers helps to understand the full capabilities of the product and how to use it in particular scenarios.

After the first period, there is another time frame for unassisted tests, where the client’s operations team can play with the platform, apply various tests or migrate other applications to see how they will run on the new environment. During that time, Grape Up platform engineers are still available to help and guide.

What about costs?

All the mentioned technologies are open source and if one would like to build this platform on their own they can do that. So why should anyone pay for that? The answer is, no one needs to pay for Cloudboostr license – it's totally free.

In order to use Cloudboostr platform though, you need to have a support service subscription plan. It's obvious that complex solutions, such as cloud platforms, need to be maintained by someone. Majority of the market outsource the services, which is especially convenient if you use software organized as a product. Again, it's not worth it to gain experience by yourself and spend weeks or months on that, while your competitors are moving forward. Also knowing that there is already a fair share of the market, who knows the technical details that you're looking for. However if one feels that they already have all of the knowledge needed to maintain the platform themselves, that's fine too - they can simply choose a minimal support plan.

Conclusion

The wisdom coming out from this article is – if you want to go cloud, do not reinvent the cloud wheel from scratch. Do the things that you were meant to – focus on building your applications and delivering true value to your customers and let the experts help you run your applications in the cloud and maintain the cloud environment. Boost your cloud journey with Cloudboostr !

Grape Up guides enterprises on their data-driven transformation journey

Ready to ship? Let's talk.

Check related articles

Read our blog and stay informed about the industry's latest trends and solutions.

Interested in our services?

Reach out for tailored solutions and expert guidance.