Thinking out loud

Where we share the insights, questions, and observations that shape our approach.

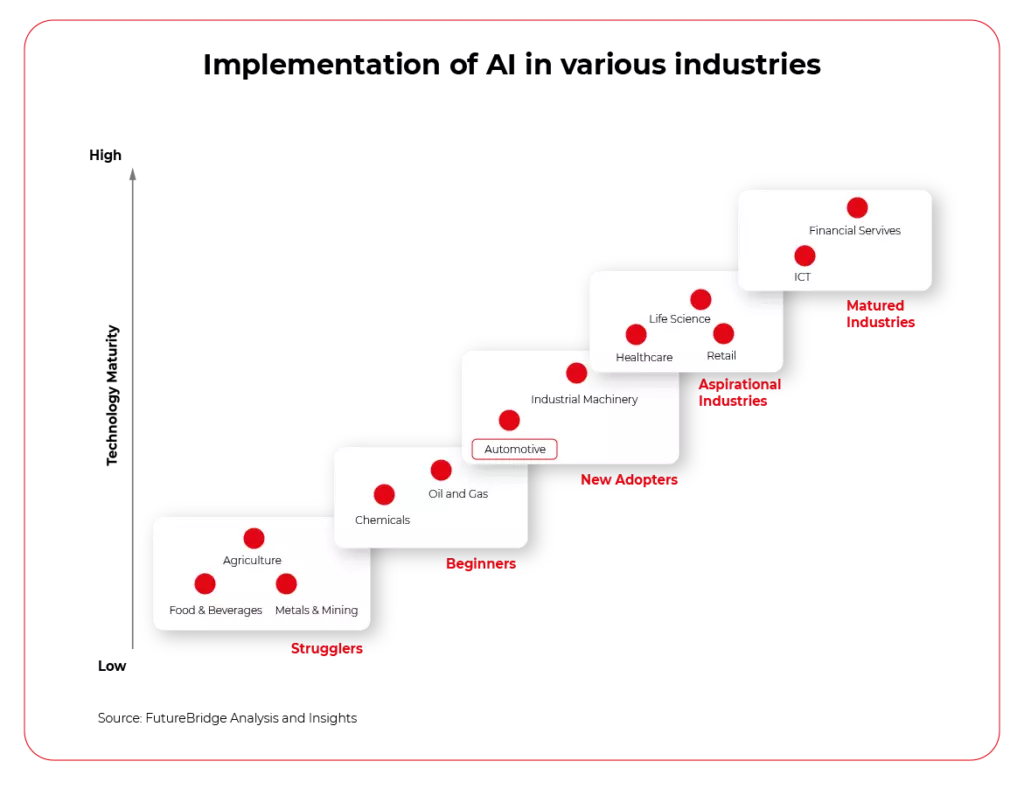

IoT SaaS - why the automotive industry should care, and whether AWS IoT or Azure IoT is the better base platform for connected vehicle development

We're connected. There’s no doubt about it. At work, at home, in town, on holidays. Our life is no longer divided into offline and online, digital and analog. Our life is somewhere in between, and it happens in both worlds at once. Also in our car, where we expect access to data, instant updates, entertainment, and understanding of our needs. The proven IoT SaaS platform makes this much easier. Today choosing this option is crucial for every company in the automotive industry. Without it, the connected vehicle wouldn’t exist.

What you will learn from this article:

- Why an automotive company needs cloud services and how to build new business value on them

- What features an IoT platform for the automotive industry should have

- What cloud solutions are chosen by the largest producers

Before our very eyes, the car is becoming part of the Internet of Things ecosystem. We want safer driving and 'being led by the hand', ease of integration with external digital services like music streaming, automatic parking payments, or real-time traffic alerts, and the transfer of virtual experiences from one tool to another (including the car).

The vehicles we drive have become more service-oriented, which not only creates new options and business opportunities for companies from the automotive sector but also poses potential threats.

A hacking attack on a phone may result in money loss or compromising the user, whereas an attack on a car can have much more serious consequences. This is why choosing the platform for a connected vehicle is crucial.

Let's have a look at the basic assumptions that such a platform should meet. Let's get to know the main service providers and market use cases influencing the choice of the largest brands in the automotive industry.

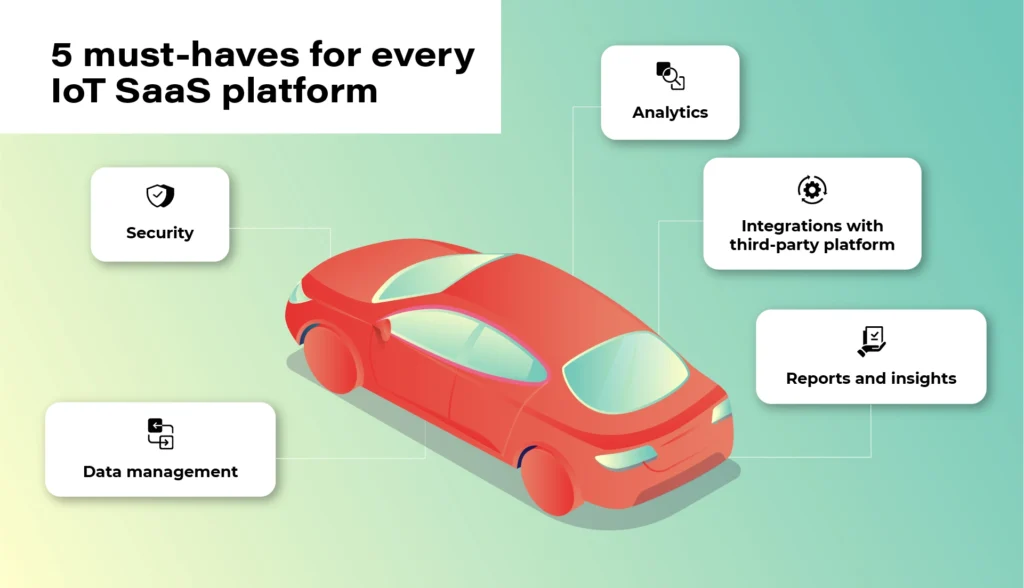

5 must-haves for every IoT SaaS platform

1. Security

At the heart of the Internet of Things is data. However, no one will share it unless the system guarantees an appropriate level of security and privacy. Access authorization is meant for selected users and platforms only. Authentication is geared to prevent unwanted third-party devices from connecting to the vehicle. Finally, there is also an option of blocking devices reaching their limits of usage or ones that have become unsafe. These types of elements that make up the security of the platform are a necessary condition to consider the implementation of the platform in your own vehicle fleet.
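The three mechanisms described above (authorizing selected devices only, rejecting unknown ones, and blocking devices that hit their usage limit or become unsafe) can be sketched in a few lines. This is a minimal illustration, not the API of any real IoT platform: `DeviceRegistry`, its method names, and the `usage_limit` quota are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DeviceRegistry:
    """Toy registry deciding which devices may talk to the vehicle platform."""

    allowed: set[str] = field(default_factory=set)
    blocked: set[str] = field(default_factory=set)
    usage: dict[str, int] = field(default_factory=dict)
    usage_limit: int = 1000  # hypothetical per-device message quota

    def register(self, device_id: str) -> None:
        """Add a device to the allow-list."""
        self.allowed.add(device_id)
        self.usage.setdefault(device_id, 0)

    def block(self, device_id: str) -> None:
        """Block a device, e.g. because it has been reported as unsafe."""
        self.blocked.add(device_id)

    def authorize(self, device_id: str) -> bool:
        """Deny unknown or blocked devices; block a device once it exceeds its quota."""
        if device_id not in self.allowed or device_id in self.blocked:
            return False
        self.usage[device_id] += 1
        if self.usage[device_id] > self.usage_limit:
            self.block(device_id)
            return False
        return True
```

The check is deny-by-default: a device that was never registered is rejected without any extra rule, which mirrors the idea that access is meant for selected users and platforms only.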

2. Data

The connected vehicle continuously receives and sends data. The vehicle communicates not only with other moving vehicles but also with the city and road infrastructure and third-party platforms. Data management, storage, and analysis are the gist of the entire IoT ecosystem. For everything to run smoothly and in line with security protocols, devices need to get data directly from your IoT platform, not from other devices. Only in this way will you get a bigger picture of the whole, plus the option of comprehensive analysis, and hence the possibility of monetization and obtaining additional business value.

3. Analytics

Once we have the guarantee that the data is safe and obtained from the right sources, we can start analyzing it. A good IoT platform allows it to be analyzed in real-time, but also in relation to past events. It also allows you to predict events before they happen - for example, it will warn the user about replacing a specific component before it breaks down. It is important that the platform collects and analyses data from the entire spectrum of events. Only in this way can it create a comprehensive picture of the real situation.

4. Integrations

The number of third-party platforms that the driver can connect to their car will continue to increase. You have to be prepared for this and choose a solution that will be able to evolve along with market changes. The openness of the system (combined with its security) will keep you going and expand your potential monetization possibilities.

If the system is closed, you may have to replace some devices or make constant programming changes to communication protocols in the near future.

5. Reports

With this amount of data - thousands or even hundreds of thousands of vehicles can be connected to the platform - transparent data reporting becomes necessary. Some of the information may be irrelevant, some will gain significance only in combination with others, and some will be more or less important for your business (a company operating in shared mobility will focus on different aspects than a company managing a lorry fleet).

Your IoT platform must enable you to easily access, select and present key information in a way that will be clear to each employee, not business intelligence experts only.

We need data to draw constructive business conclusions, not to be bombarded with useless information.

Top market solutions - use cases of the biggest automotive brands

All right. So what solution should you opt for? There is no one, obvious answer to this question. It all depends on your individual needs, the scale of the business, and the cooperation model that is key for you.

You can focus on larger market players and scalable solutions, e.g. the Microsoft Azure platform or AWS by Amazon, or on services in the SaaS model provided by players such as Otonomo, Octo, Bosch, or Ericsson.

Microsoft Azure x Volkswagen

The Azure platform, created by the technological giant from Redmond, has been known to developers and cloud architects for a long time. No wonder that it is often used by the most famous brands in the automotive industry. Microsoft is supported by the scale of its projects, excellent understanding of cloud technologies, and experience in creating solutions dedicated to the world's largest brands.

In 2020, based on these solutions, Volkswagen implemented its own Automotive Cloud platform (through its subsidiary CARIAD, previously called CarSoftware.org).

Powered by Microsoft Azure cloud and IoT Edge solutions, the platform will support the operation of over 5 million new Volkswagens every year. The company also plans to transfer the technology to other vehicles from the group in all regions of the world, thereby laying the foundations for customer-centric services.

As the brand writes in its press release, the platform is focused on “providing new services and solutions, such as in-car consumer experiences, telematics, and the ability to securely connect data between the car and the cloud.”

For this purpose, Volkswagen has also created a dedicated consumer platform - Volkswagen We, where car users will find smart mobility services and connectivity apps for their vehicles.

AWS x Ford and Lyft

AWS, Amazon's cloud platform, is backed by over 13 years on the market and “165 fully featured services for computing, storage, databases, networking, analytics, robotics, machine learning and artificial intelligence (AI), Internet of Things (IoT), mobile, security, hybrid, virtual and augmented reality (VR and AR), media…”.

For people from the automotive industry, a great advantage is a huge brand community and an extensive ecosystem of other services such as movie streaming (Prime Video), voice control (Alexa), or shopping in Amazon Go stores, which can create new business opportunities for companies providing automotive solutions.

The Amazon platform was selected, among others, by the Ford Motor Company (in cooperation with Transportation Mobility Cloud), and by Lyft in the shared mobility sector.

Ford and Autonomic, the creators of the Transportation Mobility Cloud (TMC), justified the choice of that solution as follows: [we chose] “AWS for its global availability, and the breadth and depth of AWS’ portfolio of services, including Internet of Things (IoT), machine learning, analytics, and compute services”. The collaboration with Amazon is intended to help the brands “expand the availability of cloud connectivity services and connected car application development services for the transportation industry”.

Lyft chose Amazon services, building on Amazon DynamoDB (a NoSQL database), to easily track users’ journeys, precisely calculate routes, and manage the scale of the process during communication peaks, holidays, and days off.

Chris Lambert, CTO at Lyft, commented on the brand's choice: “By operating on AWS, we are able to scale and innovate quickly to provide new features and improvements to our services and deliver exceptional transportation experiences to our growing community of Lyft riders. […] we don’t have to focus on the undifferentiated heavy lifting of managing our infrastructure, and can concentrate instead on developing and improving services with the goal of providing the best transportation experiences for riders and drivers, and take advantage of the opportunity for Lyft to develop best-in-class self-driving technology.”

BMW & MINI x Otonomo

“Transforming data to revolutionize driving and transportation.” With this slogan, Otonomo, an IoT platform operating in the SaaS model, is trying to convince the automotive industry to use its services.

Among its customers, BMW and MINI, which belongs to the same group, are particularly noteworthy. The vehicles have been connected to the platform in 44 countries and are intended to provide additional information for road traffic and smart cities, and to improve the overall driving experience.

Among the data to be collected by the vehicles, the manufacturer mentions information on the availability of parking lots, traffic congestion, and traffic itself in terms of city planning, real-time traffic intelligence, local hazard warning services, mapping services, and municipal maintenance and road optimization.

Volvo x Ericsson Connected Vehicle Cloud

Partnerships with telecommunications companies are also a common business model in creating cloud services for vehicles. Volvo, for example, chose this kind of cooperation when working with Ericsson. The partnership dates back to 2012 and is constantly being expanded.

The Connected Vehicle Cloud (CVC) platform, as its producer named it, allows Volvo to “deliver scalable, secure, high-quality digital capabilities, including a full suite of automation, telematics, infotainment, navigation, and fleet management services to its vehicles. All software is able to be supported and seamlessly updated over-the-air (OTA) through the Ericsson CVC”.

Mazda x KDDI & Orange IoT

In 2020, connected car services also made their debut at Mazda, specifically in the MX-30 model. As with the Swedish manufacturer, a local technology partner was selected: KDDI, the Japanese telecommunications giant (Orange became the partner for the European market).

With Mazda's connection to the IoT cloud, the MyMazda App has also been developed. The manufacturer boasts that in this way they introduced a package of integrated services, "which will remove barriers between the car and the driver and provide a unique experience in using the vehicle". The IoT platform itself is geared to offer drivers a higher level of safety and comfort.

What counts is the specifics of your industry and flexibility of the platform

Regardless of which solution you choose, remember that security and data management are an absolute priority of any IoT platform. There is no one proven model because the automotive industry also has completely different vehicles, goals, and fleet scales.

Identify your key needs and make your final choice based on them. The IoT platform should be adjusted to your business, not the other way round. Otherwise, you will be in for constant software updates and potential problems with data management and its smooth monetization.

The next step for digital twin – virtual world

Digital Twin is a widespread concept of creating a virtual representation of an object's state. The object may be as small as a raindrop or as huge as a factory. The goal is to simplify the operations on the object by creating a set of plain interfaces and limiting the amount of stored information. With a simple interface, the object can be easily manipulated and observed, while the state of its physical counterpart is adjusted accordingly.

In the automotive and aerospace industries, it is common to use virtual object representations to design, develop, test, manufacture, and operate both parts of a vehicle, like an engine, drivetrain, or chassis/fuselage, and full vehicles: a whole car, motorcycle, truck, or aircraft. Virtual representations are easier to experiment with, especially on a bigger scale, and to operate. In particular, when connectivity between a vehicle and the cloud is not stable, the ability to query the state anyway is vital to providing a smooth user experience.

It’s not always critical to replicate the object with all details. For some use cases, like airflow modeling for calculating drag force or computer vision AI simulation, mainly exterior parts are important. A user checking if the doors and windows are locked, on the other hand, only requires a boolean true/false state. And to simulate the combustion process in the engine, even the vehicle type is not important.
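A minimal sketch of such a reduced twin, assuming a "reported versus desired state" model: the twin keeps only the few values the use case needs, can be queried even when the car is offline, and tracks which requested changes the vehicle has not confirmed yet. The class and field names (`VehicleTwin`, `doors_locked`, `windows_closed`) are illustrative assumptions, not part of any specific platform.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any


@dataclass
class VehicleTwin:
    """Reduced digital twin: stores only the state a given use case needs."""

    vin: str
    reported: dict[str, Any] = field(default_factory=dict)  # last state sent by the car
    desired: dict[str, Any] = field(default_factory=dict)   # state requested by the user

    def report(self, **state: Any) -> None:
        """Called when the vehicle uploads its current state."""
        self.reported.update(state)

    def request(self, **state: Any) -> None:
        """Called when a user or service asks for a state change."""
        self.desired.update(state)

    def pending(self) -> dict[str, Any]:
        """Desired values the vehicle has not confirmed yet."""
        return {k: v for k, v in self.desired.items() if self.reported.get(k) != v}

    def is_locked(self) -> bool:
        """Answer the 'is my car locked?' question from the last known state."""
        return bool(self.reported.get("doors_locked")) and bool(
            self.reported.get("windows_closed")
        )
```

Usage: after `twin.request(doors_locked=True)`, `twin.pending()` returns `{"doors_locked": True}` until the car reports the same value, so the app can show "locking..." without a live connection to the vehicle.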

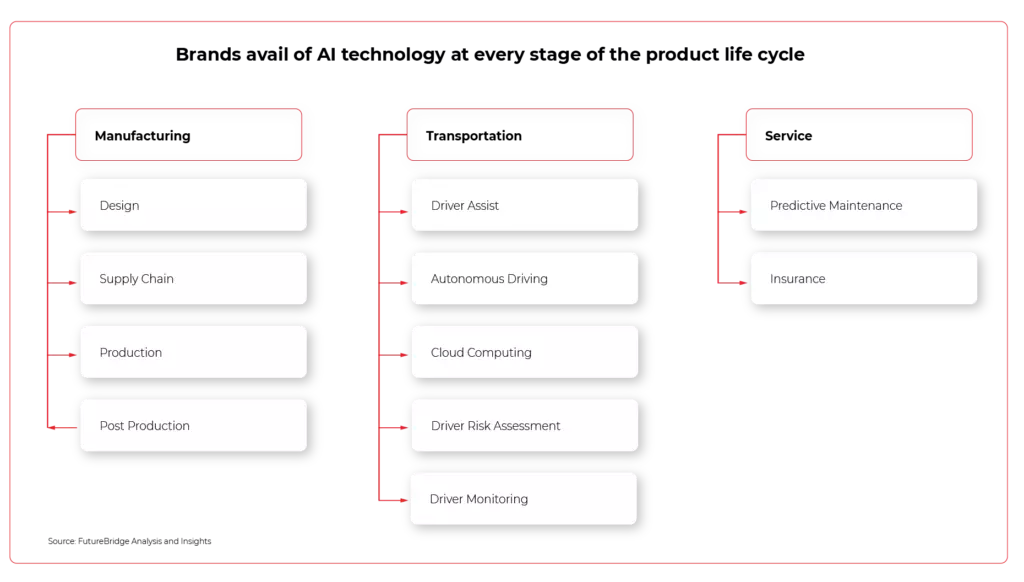

Today, artificial intelligence takes a significant role in a lot of car systems, to name a few: driver assistance, fatigue check, predictive maintenance, emergency braking and collision avoidance, and speed limit recognition and prediction. Most of those systems do not live in a void - to operate correctly they require information about the surrounding world gathered through V2X connections, cameras, radars, lidars, GPS position, thermometers, or ABS/ESP sensors.

Let’s take Adaptive Cruise Control (ACC). The vehicle is kept in lane using computer vision and a front-facing camera. The distance to surrounding vehicles and obstacles is calculated using both a camera and a radar/lidar. Position on the map is gathered using GPS, and the speed limit is jointly calculated using the navigation system, road sign recognition, and distance to the vehicle ahead. This is an example of a complex system, which is hard to test - all parts of it have to be simulated separately, for example, by injecting a fake GPS path. Visualizing this kind of test system is complicated, and it’s hard to use data gathered from the car to reproduce the failure scenarios.

Here the Virtual World comes to help. The virtual world is an extension of the vehicle shadow concept where the multiple types of digital twins coexist in the same environment knowing their presence and interfaces. The system is composed of digital representation of physical assets whenever possible – including elements recognized via computer vision. Vehicles, road infrastructure, positioning systems, or even pedestrians are part of the virtual world. All vehicles are part of the same environment meaning they can share the data regarding the position of other traffic participants.

Such a system provides multiple benefits:

- Improved accuracy of assistance systems, as the recognized infrastructure and traffic participants can come from other vehicles, and their position can be estimated even when they are still outside the range of sensors.

- Easier, more robust communication between infrastructure, vehicles, pedestrians, and cloud APIs as everything remains in the same digital system.

- Possibility to fully reproduce the conditions of a system failure, as the state history of not just the vehicle but all of its surroundings remains in the cloud and can be used to recreate and visualize the area.

- Ability to enhance existing systems by leveraging data from the greater area - for example, immediately notifying the driver about an obstacle on the road 500 meters ahead and suggesting that they reduce speed.

- The extensive information set can be used to build new AI/ML applications; for example, real-time weather information (from rain sensors) can be used to close the sunroofs of vehicles parked in the area.

- The same system can be used to better simulate its behavior, even using data from real vehicles.

- Common interfaces allow for quicker implementation.
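The shared-environment idea behind these benefits can be sketched as a registry to which every vehicle publishes what it recognizes and which any other vehicle can query by distance. This is a simplified illustration under stated assumptions: positions are in a local flat coordinate frame in meters, and the names `Observation`, `VirtualWorld`, `publish`, and `nearby` are hypothetical.

```python
from __future__ import annotations

import math
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Observation:
    object_id: str
    kind: str         # e.g. "obstacle", "pedestrian", "vehicle"
    x_m: float        # position in a local flat frame, meters (assumption)
    y_m: float
    reported_by: str  # vehicle that recognized the object


class VirtualWorld:
    """Shared store of digital twins that all connected vehicles can query."""

    def __init__(self) -> None:
        self._objects: dict[str, Observation] = {}

    def publish(self, obs: Observation) -> None:
        """Add or update an object recognized by some vehicle."""
        self._objects[obs.object_id] = obs

    def nearby(
        self, x_m: float, y_m: float, radius_m: float, kind: Optional[str] = None
    ) -> list[Observation]:
        """Return objects within radius_m of the given point, closest first."""

        def dist(o: Observation) -> float:
            return math.hypot(o.x_m - x_m, o.y_m - y_m)

        hits = [
            o
            for o in self._objects.values()
            if dist(o) <= radius_m and (kind is None or o.kind == kind)
        ]
        return sorted(hits, key=dist)
```

With this in place, a vehicle approaching an obstacle reported by another car could call `world.nearby(x, y, 500.0, kind="obstacle")` and warn its driver before its own sensors see anything.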

Obviously, there are also challenges - the amount of data to be stored is huge, so it should be heavily optimized, and storage has to be highly scalable. There is also the impact of the connection between the car and the cloud to consider. Overall, the advantages outweigh the disadvantages, and the Virtual World will be a common pattern in the coming years, with the growing implementation of software-defined vehicles and machine learning applications requiring more and more data to improve their operations.

Cybersecurity meets automotive business

The automotive industry is well known for its standards regarding the road safety of vehicles. All processes regarding vehicle development - from drawing board to sales - were standardized and refined over the years. Both internal test teams and globally renowned organizations like NHTSA or EuroNCAP work hard on making vehicles safe in all road conditions - for both passengers and other road users.

ISO/SAE 21434 - new automotive cybersecurity standard

Safety engineering is currently an important part of automotive engineering and safety standards, for example, ISO 26262 and IEC 61508. Techniques regarding safety assessment, like FTA (Fault Tree Analysis), or FMEA (Failure Mode and Effects Analysis) are also standardized and integrated into the vehicle development lifecycle.

With advanced driver assistance systems becoming a commodity, the set of tests started to quickly expand, adapting to the market situation. Currently, EuroNCAP takes into account automatic emergency braking systems, lane assist, speed assistance, and adaptive cruise control. The overall safety rating of the car highly depends on these modern systems.

But the security is not limited to crash tests and driver safety. In parallel to the new ADAS systems, the connected car concept, remote access, and in general, vehicle connectivity moved forward. Secure access to the car does not only mean car keys but also network access and defense against cybersecurity threats.

And the threat is real. In 2015, two security researchers hacked a Jeep Cherokee driving 70 mph on a highway, effectively disabling its brakes and changing the climate control and the infotainment screen display. The zero-day exploit that allowed this is now fixed, but the incident immediately caught the public eye and changed the OEMs' mindset from “minor, unrealistic possibility” to “very important topic”.

There was no common standard though. OEMs, Tier1s, and automotive software development companies worked hard to make sure this kind of situation never happens again.

A few years later, other hackers proved that the first generation of Tesla Autopilot could be tricked into accelerating over the speed limit by only slightly changing a speed limit road sign. As a result, discussion about the cybersecurity of software-defined vehicles was sparked again.

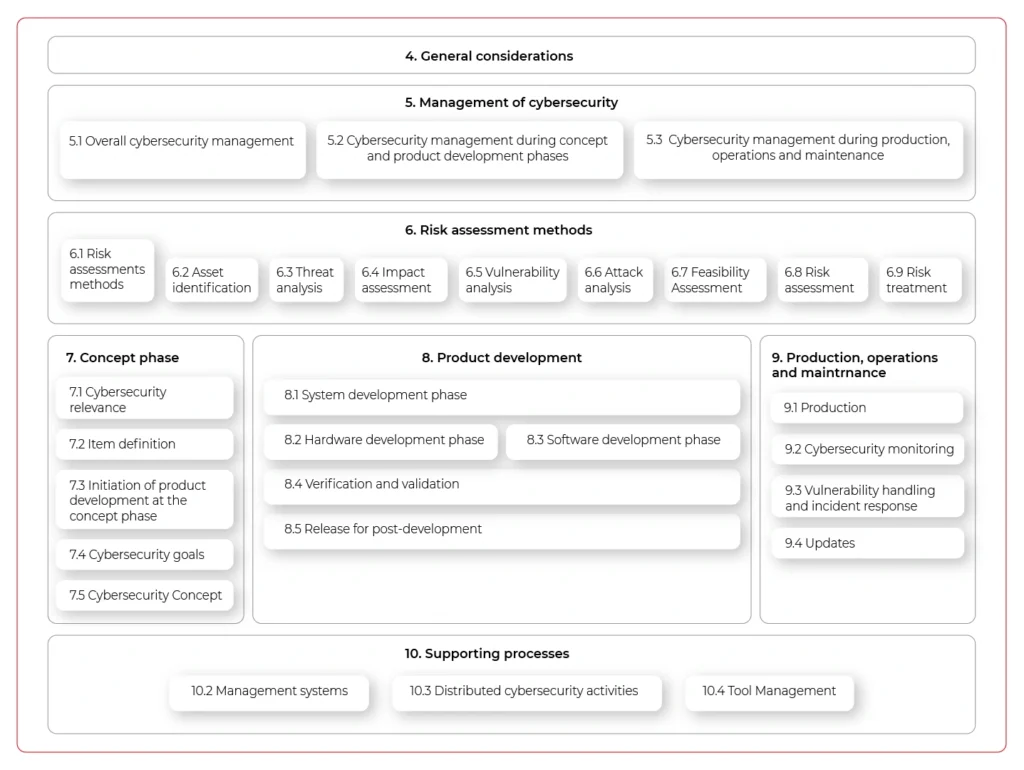

All of this resulted in the definition of a new standard called ISO 21434 “Road vehicles – cybersecurity engineering”. The work started last year, and the standard is currently in the “Approval” phase, so we can quickly go through the most important topics it tackles.

In general, the new norm provides guidelines for including cybersecurity activities into processes through the whole vehicle lifecycle. The entire document structure is visualized below:

The important aspect of the new standard is that it does not only handle vehicle production but all activities until the vehicle is decommissioned - including incident response or software updates. It does not just focus on singular activities but highly encourages the continuous improvement of internal processes and standards.

The document also lists the best practices regarding cybersecurity design:

- Principle of least privilege

- Authentication and authorization

- Audit

- E2E security

- Architectural Trust Levels

- Segregation of interfaces

- Protection of Maintainability during service

- Testability during development (test interface) and operations

- Security by default

The requirements do not end on the architectural and design level. They can go as low as the hardware (identification of security-related elements, documentation, and verification for being safe, as they are potential entry points for hackers), and source code, where specific principles are also listed:

- The correct order of execution for subprograms and functions

- Interfaces consistency

- Data flow and control flow correctness

- Simplicity, readability, comprehensibility

- Robustness, verifiability, and suitability for modifications

The standard documentation is comprehensive, although, as is clearly visible in the provided examples, rather abstract and not specific to any programming language, framework, or tool. There are recommendations, but the standard is not intended to answer all questions; rather, it gives a basis for further development. While it is not a panacea for all cybersecurity problems of the industry, we are now at the point where we need standardization and common ground for handling security threats in vehicle software and connectivity, and the new ISO 21434 is a great start.

Building telematics-based insurance products of the future

Thanks to advancements in connected car technologies and the accessibility of personal mobile devices, insurers can roll out telematics-based services at scale. Utilizing telematics data opens the door to improving customer experience, unlocking new revenue streams, and increasing market competitiveness.

After reading this article, you will know:

- What is telematics

- How insurers build PAYD & PHYD products

- Why real-time crash detection is important

- How to identify stolen vehicles

- If it’s possible to streamline roadside assistance

- What role telematics plays in predictive maintenance

Telemetry - the early days

Obtaining vehicle data isn’t a new concept that has materialized with the evolution of the cloud and connectivity technologies. It is called telemetry and was possible for a long time but accessible only to the manufacturers or specialized parties because establishing the connection with the car was not an easy feat. As an example, it first started to be used by Formula 1 racing teams in the late 1980s, and all they could manage was very short bursts of data when the car was passing close to the pits. Also, the diversity and complexity of data were significantly different compared to what is available today because cars were less complex and had fewer sensors onboard that could gather and communicate data.

What is telematics?

At the very basic level, it’s a way of connecting to the vehicle data remotely. More specifically, telematics is a connection mechanism between machines (M2M) enabled by telecommunication advances. Telematics understood in the insurance context is even more specific and means connecting to the data generated by both the vehicle itself and the driver.

At first, when telematics-based products started gaining popularity, they required drivers to use additional devices like black boxes that needed to be installed in the car, sometimes by a qualified technician. These devices were installed on the dashboard, under the bonnet, or plugged into the OBD-II connector. The black boxes were fairly simple devices that consisted of a GPS receiver, a motion sensor, and a SIM card plus some basic software. They gathered rudimentary information about:

- the time-of-day customers drive

- the speed on different sorts of roads

- sharp braking and acceleration

- total mileage

- the total number of journeys
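To make the list above concrete, here is a rough sketch of how a few of these figures could be derived from timestamped speed samples. The sample format, the function name `trip_summary`, and the 10 km/h per second harsh-braking threshold are illustrative assumptions, not values taken from any real black box.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Sample:
    t_s: float        # seconds since trip start
    speed_kmh: float  # speed reported by GPS


def trip_summary(samples: list[Sample], harsh_brake_kmh_per_s: float = 10.0) -> dict:
    """Summarize one journey: distance, top speed, and number of sharp braking events."""
    distance_km = 0.0
    harsh_brakes = 0
    in_harsh_brake = False
    for prev, cur in zip(samples, samples[1:]):
        dt = cur.t_s - prev.t_s
        if dt <= 0:
            continue  # skip duplicated or out-of-order samples
        # Trapezoidal integration of speed over time (km/h * s / 3600 = km).
        distance_km += (prev.speed_kmh + cur.speed_kmh) / 2 * dt / 3600
        deceleration = (prev.speed_kmh - cur.speed_kmh) / dt
        if deceleration >= harsh_brake_kmh_per_s:
            if not in_harsh_brake:  # count one event per continuous braking phase
                harsh_brakes += 1
            in_harsh_brake = True
        else:
            in_harsh_brake = False
    return {
        "distance_km": distance_km,
        "max_speed_kmh": max((s.speed_kmh for s in samples), default=0.0),
        "harsh_brakes": harsh_brakes,
    }
```

Total mileage and the number of journeys then follow by summing `distance_km` over trips and counting the summaries.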

Over time, mobile apps mostly replaced black boxes, as it didn’t take long for smartphones to get sophisticated enough to render them largely obsolete. Of course, black boxes are still offered by insurers as an alternative for customers who refuse to install apps that access their location, or who need one because they don’t have a sufficiently advanced mobile device. However, these days most of the cars that roll off the assembly line have built-in connectivity capabilities, so the telematics function is already embedded in the vehicle from the very beginning. As an example, 90% of Ford passenger cars starting from 2020 are connected. This means that there is no more need for additional devices. The car can now share all the data that black boxes or apps gathered, plus a lot of detailed data about the vehicle state from the large number of sensors it has on board. More technologically advanced cars like Tesla can send up to 5 gigabytes of data every day.

Telematics-based insurance products and services

By employing new technologies, insurers can be closer to their customers, understand them better, and take a more proactive approach to maintaining the relationship. Telematics is the key technology that allows for this type of stance in the auto insurance area. Insurers can leverage telematics to build numerous products and services, but it is important to remember that the regulations can differ from state to state and from country to country.

So, the solutions depicted in this article should serve only as an example of how the technology can be used.

Usage-based products

Usage-based products are probably the most widespread in this category as they have been around for some time and offer the most tangible benefit to customers - cost savings.

The market value for these products is currently estimated at 20 billion dollars, and it is projected to reach 67 billion USD in the next 5 years. This is a good indicator that there is a growing demand in the market, especially from millennials and gen Zs who expect the services & products they buy to be tailored to them and not based on a generic quote.

Currently, the two main categories of usage-based insurance are Pay-how-you-drive (PHYD) and Pay-as-you-drive (PAYD) products. The first one is based on the assumption that drivers should be rewarded for how they drive. So, when building a PHYD offering, insurers need data on when and where their customers drive, the speed on different roads, how they accelerate and brake, and how they enter corners. Feeding that data to Machine Learning algorithms makes it possible to assess whether the customers are safe drivers who obey the law and to reward them with a discount on their premium. The customer benefits are clear, but the insurer benefits as well. By enabling their customers to use PHYD products, the insurers can:

- correct risk miscalculations,

- enhance price accuracy,

- attract favorable risks,

- retain profitable accounts,

- reduce claim costs

- enable lower premiums
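As a simplified stand-in for the Machine Learning scoring mentioned above, the sketch below turns three driving-behavior features into a premium discount using fixed weights. Everything here is an assumption made for illustration: the feature names, the weights (0.5, 0.3, 0.2), the normalization of 10 harsh events per 100 km, and the 30% maximum discount. A real PHYD product would learn such a mapping from claims data.

```python
def phyd_discount(
    harsh_events_per_100km: float,
    speeding_share: float,
    night_share: float,
    max_discount: float = 0.30,
) -> float:
    """Return a premium discount between 0 and max_discount (rule-based, not ML)."""

    def clamp01(value: float) -> float:
        return max(0.0, min(1.0, value))

    # Weighted penalty in [0, 1]; the weights are hypothetical.
    penalty = (
        0.5 * clamp01(harsh_events_per_100km / 10.0)  # sharp braking and acceleration
        + 0.3 * clamp01(speeding_share)               # share of distance above the limit
        + 0.2 * clamp01(night_share)                  # share of distance driven at night
    )
    return round(max_discount * (1.0 - penalty), 4)
```

A driver with no harsh events, no speeding, and no night driving gets the full discount, while one who maxes out every feature gets none.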

The second category is the PAYD model in which the customers pay only for what they actually drive plus a low monthly rate. In this scenario, the insurers only need to monitor the miles driven and then multiply the amount by a fixed mile fee (a few cents usually). This type of solution is perfect for irregular drivers, and it was also a choice for many during COVID. It can increase insurance affordability, reduce uninsured driving, and provide consumer savings. It makes premiums more accurately reflect the claim costs of each individual motorist and rewards motorists who reduce their accident risk. Additionally, it can be a great alternative to PHYD products for customers who are not comfortable with gathering multiple data points about their driving behavior.
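The PAYD calculation described above is simple arithmetic: a low monthly base rate plus miles driven multiplied by a fixed per-mile fee. A minimal sketch, with the function name and the example amounts chosen purely for illustration:

```python
from decimal import Decimal, ROUND_HALF_UP


def payd_monthly_premium(
    miles_driven: int, base_rate: Decimal, per_mile_fee: Decimal
) -> Decimal:
    """Monthly PAYD premium: base rate plus miles driven times the per-mile fee."""
    if miles_driven < 0:
        raise ValueError("miles_driven must not be negative")
    total = base_rate + Decimal(miles_driven) * per_mile_fee
    # Round to whole cents, as a billed amount would be.
    return total.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
```

For example, with a hypothetical base rate of 29.00 and a fee of 0.06 per mile, 250 miles in a month gives 29.00 + 250 × 0.06 = 44.00. `Decimal` is used instead of floats so that money amounts do not pick up binary rounding errors.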

Real-time crash detection

This solution allows insurers to be closer to their customers and to react to events in real-time. It is a part of a larger trend in which the evolution of technology enables the shift from a mode of operations where the insurer is largely invisible to their customers (unless something happens) to a new model where the company is there to support and help the customers and, if possible, even go as far as to predict and prevent losses before they occur.

By analyzing the vehicle data and driver behavior, it is possible to detect accidents as they happen. Through monitoring the vehicle location, speed, and sensor data (in this case, the motion sensor) and setting up alerts, insurers can be the first to know that there has been an accident. However, detecting an actual accident requires filtering out random shocks and vibrations caused by speed bumps, rough roads, potholes, parking on the kerb, or doors and the boot lid being slammed.

This allows them to take a proactive approach: contact the driver and coordinate emergency services and roadside assistance. Using the data from the crash, they can also start the first notice of loss process and reconstruct the accident’s timeline. If there are more parties involved in the incident, the crash data can be used to determine who is responsible in ambiguous situations.
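One way to picture the filtering step is to combine the motion sensor spike with speed context: a slammed door produces a spike while the car is standing still, and a pothole produces a spike after which the car keeps driving. The sketch below encodes exactly these two rules. The thresholds (4 g, 15 km/h, 5 seconds, 3 km/h) and all names are hypothetical, and production systems use far richer signals and models.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Reading:
    t_s: float        # timestamp in seconds
    accel_g: float    # acceleration magnitude from the motion sensor, in g
    speed_kmh: float  # vehicle speed at that moment


def detect_crash(
    readings: list[Reading],
    impact_g: float = 4.0,
    min_speed_kmh: float = 15.0,
    stop_window_s: float = 5.0,
    stop_speed_kmh: float = 3.0,
) -> Optional[float]:
    """Return the timestamp of the first likely crash, or None if there is none."""
    for i, reading in enumerate(readings):
        if reading.accel_g < impact_g:
            continue
        # Door or boot lid slam: the vehicle was not moving before the spike.
        speed_before = readings[i - 1].speed_kmh if i > 0 else reading.speed_kmh
        if speed_before < min_speed_kmh:
            continue
        # Pothole or speed bump: the vehicle keeps driving after the spike.
        after = [r for r in readings[i + 1:] if r.t_s - reading.t_s <= stop_window_s]
        if after and min(r.speed_kmh for r in after) <= stop_speed_kmh:
            return reading.t_s
    return None
```

The returned timestamp is the natural starting point for reconstructing the accident's timeline from the surrounding location and speed data.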

Stolen Vehicle Alerts

The big advantage of telematics-based products and services is that they are beneficial to both sides, and it’s easy to present. One of the examples can be enabling stolen vehicle alerts. By gathering data about customer behavior, insurers can build driver profiles that allow them to set up alerts that are triggered by unusual or suspicious behavior.

For instance, let’s assume a customer typically drives their car between 7am and 5pm on weekdays and then goes on various medium distance trips during the weekend. So an unexpected, high-speed journey at 3am on Wednesday can seem suspicious and trigger an alert. Of course, there can be unforeseen events that force customer behavior like that, but then the policyholder can be contacted to verify whether that’s them using the car and if there’s been an emergency. However, if the verification fails, then authorities can be notified and informed of the vehicle’s position in real-time to help recover the vehicle once it’s been confirmed as stolen.

For fleet owners, geo-fencing rules can be established to enhance fleet security. Many of the businesses with fleets operate during specific working hours. At night the company vehicles are parked in designated lots. So, if there is a situation when a vehicle leaves the specific area during the hours it shouldn’t, an automated alert can be triggered. The fleet manager can be then contacted to verify whether the car is being used by the company or if it’s leaving the property unauthorized. If necessary, authorities can be notified about the theft, and the vehicle location can be tracked to enable swift recovery.
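A geo-fencing rule of this kind can be sketched as two checks: is the vehicle outside a circle around the depot, and is the current time outside working hours? The depot coordinates, the 0.5 km radius, and the 07:00 to 18:00 working hours below are placeholder assumptions; the distance uses the standard haversine formula with a mean Earth radius of 6371 km.

```python
import math
from datetime import datetime, time


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points given in degrees."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def geofence_alert(
    lat: float,
    lon: float,
    when: datetime,
    depot_lat: float,
    depot_lon: float,
    radius_km: float = 0.5,
    work_start: time = time(7, 0),
    work_end: time = time(18, 0),
) -> bool:
    """True when the vehicle is outside the fence outside of working hours."""
    outside_hours = not (work_start <= when.time() < work_end)
    outside_fence = haversine_km(lat, lon, depot_lat, depot_lon) > radius_km
    return outside_hours and outside_fence
```

An alert from this function would only start the verification step: the fleet manager is contacted first, and authorities are involved only if the use turns out to be unauthorized.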

Roadside assistance

Vehicle roadside assistance is a service that assists the driver of a vehicle in case of a breakdown. It is an effort by auto service professionals to sort out minor mechanical and electrical repairs and adjustments in an attempt to make a vehicle drivable again. According to just a single roadside assistance company in the US, they receive 1.76 million calls for help a year, which translates to roughly 5,000 calls every day. Clearly, any automation and expediting of the processes can have a significant impact on the effectiveness of operations and the customer experience.

By employing modern technologies like telematics, insurers can streamline the process from the moment the driver notifies the insurer of a breakdown. The company can start a full process aimed at resolving the issue as fast as possible in the least stressful way. Using vehicle location, a tow truck can be dispatched without the need for the customer to try and pinpoint their location. And the insurer can then proceed to locate and book the nearest available replacement vehicle. Furthermore, using the telematics data, an initial assessment of damage can be performed in order to expedite the repair. As an example, the data may indicate that the vehicle has been overheating for several miles before it stopped and that can be useful information for the garage that will try to fix the car.

Predictive maintenance

There are two types of servicing: reactive and proactive. While reactive requires managing a failure after it occurs, the various proactive maintenance approaches allow for some level of planning to address that failure ahead of time. Proactive maintenance enables better vehicle uptime and higher utilization, especially for fleet owners. Telematics is helping to further improve maintenance practices and optimize uptime on the path to predictive maintenance models.

This type of service is best suited for more modern vehicles where the telematics feature is embedded and there is a multitude of different sensors monitoring the vehicle’s health. However, a more basic level of predictive maintenance is achievable with plug-in telematics dongles and devices able to read fault codes.

Using that data, insurers can remind policyholders about things like oil and brake pad changes, which will have an impact on both road safety and vehicle longevity. They can also send alerts about issues like low tire pressure to encourage drivers to refill the tires with air on their own rather than wait for a puncture and require roadside assistance.
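A basic version of such reminders can be expressed as simple threshold checks on telematics readings. The sketch below is only an illustration: the class name `MaintenanceAdvisor`, the reminder codes, and all threshold values are hypothetical, and real limits depend on the vehicle and the manufacturer's service schedule.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical rule set turning telematics readings into maintenance reminders. */
final class MaintenanceAdvisor {
    // Illustrative thresholds only; real values depend on the vehicle and manufacturer.
    static final int OIL_CHANGE_INTERVAL_KM = 15_000;
    static final double MIN_BRAKE_PAD_MM = 3.0;
    static final double MIN_TIRE_PRESSURE_KPA = 200.0;

    /** Returns the reminders that apply to the given readings, in a fixed order. */
    static List<String> reminders(int kmSinceOilChange, double brakePadMm, double tirePressureKpa) {
        List<String> result = new ArrayList<>();
        if (kmSinceOilChange >= OIL_CHANGE_INTERVAL_KM) {
            result.add("OIL_CHANGE_DUE");
        }
        if (brakePadMm <= MIN_BRAKE_PAD_MM) {
            result.add("BRAKE_PADS_WORN");
        }
        if (tirePressureKpa < MIN_TIRE_PRESSURE_KPA) {
            result.add("LOW_TIRE_PRESSURE");
        }
        return result;
    }
}
```

For example, `MaintenanceAdvisor.reminders(1000, 8.0, 180.0)` yields only the low tire pressure reminder, which the insurer could forward to the policyholder before the issue turns into a roadside call.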

The simple preventive maintenance can ultimately save a lot of stress for the driver as it will prevent more severe issues with the car as well as money and time spent on the repairs. For fleet owners, it means increased uptime and better utilization of the vehicles that in turn lead to an increase in profit and lower costs.

Building Telematics-based Insurance Products - Summing Up

Aside from offering policyholders benefits like fairer, lower rates, streamlined claims resolution, and better roadside assistance, telematics technology is a goldmine of data for the insurers. They get a better understanding of driver behavior and associated risk and can adjust the premiums accordingly. In the event of an accident, an adjuster can find out which part of the car was damaged, how severe the impact was, and what is the probability of passengers suffering injuries. Finally, insurance companies can benefit from reduced administration costs by being able to resolve the claim faster and more efficiently.

How to monetize car data - 3 strategies for companies and 28 practical use cases

Data is the currency of the 21st century. Those who have access to it can manage it wisely and draw constructive conclusions to get ahead and outperform the competition. The business model based on data monetization is no longer the domain strictly reserved for the Silicon Valley giants. Companies whose products and services are not directly related to data trading are also trying their hand in this field. The automotive industry is one of the market sectors where data monetization will soon bring the greatest benefits. It is estimated that by 2030 it will be worth as much as $450-750 billion on a global scale.

In this article, you will learn:

* What are the 4 megatrends to increase the amount of data from cars.

* Which technologies will enable better data downloading.

* Who can earn money from vehicle data monetization.

* What are the three main data monetization strategies.

* 28 practical use cases of how you can generate revenue.

The increase in revenues on this account is not only due to the electronics and sensors that are installed inside the vehicles. Social and cultural changes will also contribute to the increase in the amount of generated data - for example, the need to reduce city traffic and the search for ways of traveling alternative to vehicles with combustion engines.

Among the megatrends that will contribute to a greater inflow of data for monetization, the following are usually mentioned:

- electrification;

- connectivity;

- diverse mobility / shared mobility;

- and autonomous driving.

The trends that will transform the way we travel and use vehicles today are opportunities not only for OEMs (original equipment manufacturers), but also for insurance companies, fleet managers, toll providers, fuel retailers, and companies dealing with parking or traffic.

All these industries are increasingly joined by technologies that not only help to collect data but also to process it. The flow of information between these market sectors will enable the development of effective methods of obtaining data and creating new services that can be monetized.

In particular, this will be enabled by 8 developing technologies:

1. Big data analytics

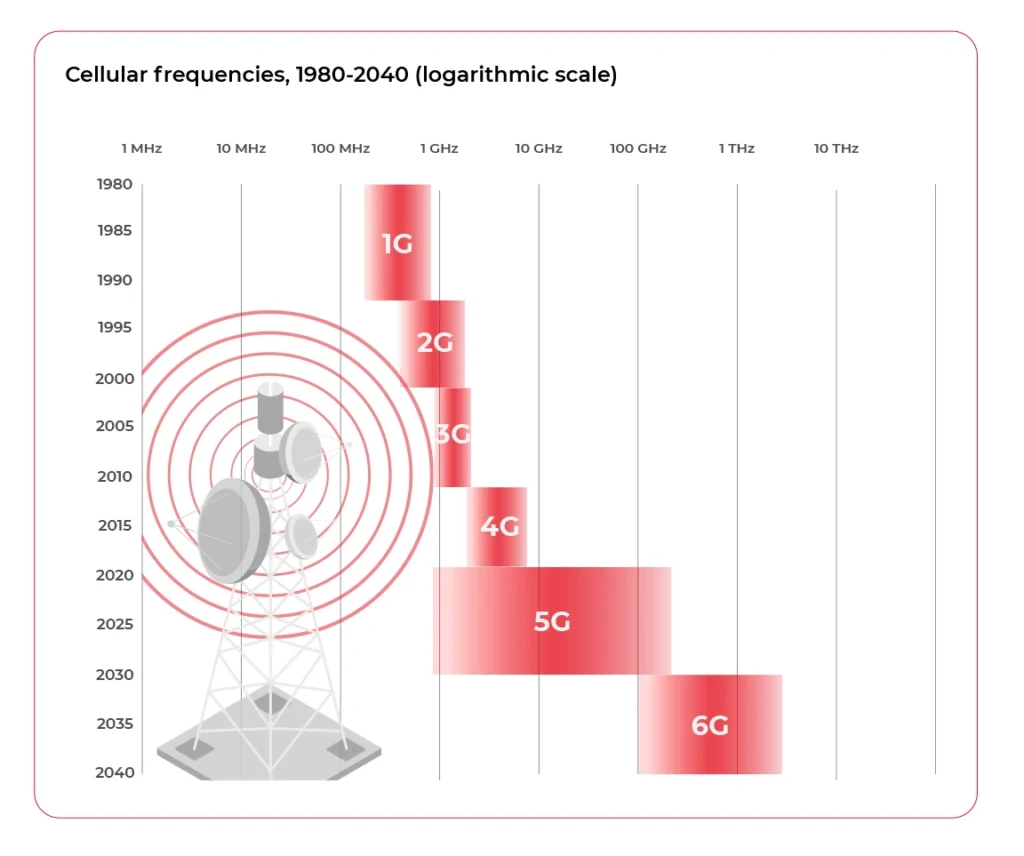

2. High-speed data towers (5G)

3. Software platforms

4. Data cloud

5. High definition maps

6. High-resolution positioning (GPS)

7. Smart road infrastructure

8. V2X communication

Due to the extensive technological infrastructure, the amount of data that can be obtained from the vehicle will increase immeasurably compared with today's possibilities. It is estimated that in the near future, more than 10,000 points from which data can be collected will be accessible in the car.

Understand the drivers and their needs

„The challenge for industry players is that data will not be car-centric, but customer-centric.” – European premium OEM

While technology plays a key role in converting data into real currency, we should bear in mind one thing. In fact, the data is generated not by the vehicle, but by its user. It is the user’s attitude towards technology, privacy, and convenience that determines the success of obtaining information. Without their consent and willingness, there is no effective data monetization strategy.

As the examples of Google or Facebook show, the use of data without users' knowledge sooner or later ends in lawsuits, reluctance, and consumers turning their backs on the brand.

So how can you get users' consent to share data?

The answer is simple - although putting it into practice may be a real challenge - offer something in return. If you give something to the driver, they will share the data you care about the most.

Among the universal benefits on which you can build a strategy for obtaining data from drivers, the following are especially noteworthy:

- time savings,

- greater overall comfort and driving comfort,

- increased level of safety,

- reduction of vehicle operating costs,

- entertainment or increasing driving experience.

Research shows that drivers are much more willing to share data about the external environment of the vehicle, e.g., driving conditions, the technical condition of the vehicle, or even its location. They are not so eager to share data from the vehicle interior, e.g., recordings of conversations. However, the percentage of such approvals increases dramatically, up to 60%, when drivers are offered more safety in return.

Younger customers and frequent travelers (who spend over 20 hours in their cars weekly) are also more open to this type of service - which results from their attitude to life, as well as personal needs. Differences in attitudes to privacy can also be shown in different markets (e.g., in Asia, Germany, or the USA). This is due to cultural differences, data regulations, and the technological advancement of a given region.

Regardless of where the company operates, in order to consider effective data monetization, you need to answer three key questions:

- WHO drives a given car?

- HOW do they behave behind the wheel?

- WHERE do they drive?

Understanding the consumer's needs and the way they travel is the starting point for developing an effective data monetization strategy. Only then can we choose the right tools and technologies enabling us to turn data into profits.

Monetizing car data - 28 practical use cases

Each case of data collection in a vehicle can potentially be turned into a benefit. It may concern one of the three areas:

- Generating revenue

- Reducing costs

- Increasing safety and security

Data monetization strategies can be based on only one of these assumptions or be a mix of activities from different areas. Let's have a look at the specific methods that are currently developing in the automotive market.

1. Generating revenue

Generating revenue from data in the automotive industry is frequently done by selling new functionalities and services. Usually (but not always), they are directly linked to the vehicle and are aimed at the driver (direct monetization).

Due to a large number of sensors and the fact that the car of the future will perfectly know and read the driver's needs, this type of vehicle is also perfect for being fitted with tailored advertising.

The third way of generating income can also be selling data to advertising companies that will use it to promote third-party brands. Obviously, this model causes the most distrust and reservations among the majority of consumers.

Direct monetization

1. Over-the-air-software add-ons / OTA

Do you want your car's operating system to be faster and more efficient? Or maybe you need to have it repaired, but you are too busy to visit your car dealer? Updating your software in the cloud will let you avoid stress and save you time. Analysts estimate that by 2022 automotive companies will have made about 100 million such vehicle updates annually.

2. Networked parking service

Being able to enter the car park without paying the traditional fee, and a suggestion where you can find a free space. Who wouldn't take advantage of such a convenience in congested cities, for a small surcharge or by providing the registration number of their own vehicle? The system of smart car parks connected to the network offers such possibilities.

3. Tracking/theft protection service

A car is often a valuable and indispensable resource for company activities (but also for private individuals). Vehicle theft does not only involve a financial loss but often logistics-related issues, too. Users increasingly agree to share their location with modern tracking systems that can easily locate the vehicle in the event of theft.

4. Vehicle usage monitoring and scoring

Who wouldn't want to pay less for vehicle insurance or its rental? Systems monitoring drivers’ behavior while driving and evaluating them in line with the regulations may soon become the standard of services offered by insurers and rental companies.

5. Connected navigation service

Real-time road traffic updates, current fuel prices at nearby filling stations, possible parking options, access to repair stations or car wash - all that by means of voice commands and questions we ask our GPS while driving. For such convenience, most drivers will be delighted to share their data.

6. Onboard delivery of mobility-related content/services

7. Onboard platform to purchase non-driving related goods

Just as the phone is no longer used merely for telephone calls, the car increasingly often plays additional roles. Listening to music from the Internet or streaming videos by passengers (or by the driver, when the car is parked) is completely normal today. Soon we can expect that shopping from the driver's seat will also become the order of the day. And not necessarily only shopping for goods related to mobility and the car.

8. Usage-based tolling and taxation

Each road user and road infrastructure is subject to the same tax obligations and fees. Meanwhile, modern technology allows us to monitor how we use the infrastructure and how often we do it. There is an extensive group of drivers who could save a lot by sharing this type of data with road management.

9. „Gamified” / social-like driving experience

“Tell me and I will forget, show me and I may remember; involve me and I will understand.” - Confucius said over 2,500 years ago, and nothing has changed since. Having fun, competing with friends, and having personal experience are still the strongest incentives for us to take new action. It also relates to our purchases.

10. Fleet management solution

Managing a fleet of vehicles, each in different locations, driven by a different driver, and carrying a different load is a real challenge. Unless the entire fleet is managed using one central platform that collects data from individual vehicles. Then everything is close at hand.

11. In-car hot-spot

Mobile internet onboard? Not only the driver who can update necessary data and stay in touch with the base (in the case of a fleet vehicle) will benefit, but also the passengers. In-car hot-spot is an ideal product for companies from the telecom industry, travel companies, insurers, and fleets.

Tailored advertising

12. Predictive maintenance

Advertising is not scared of any medium and, like a chameleon, it adjusts to the environment in which it appears. A car that, just like a smartphone, gets new functions every now and then, becomes an ideal place for such activities. Especially those messages that help drivers predict possible breakdowns and remind them about the upcoming service or oil change are highly appreciated.

13. Targeted advertisements and promotions

Apart from targeting advertisements in terms of the needs related to the vehicle operation, advertisers can also select ads based on who and where is driving the car, the driver's age, gender, or interests. Of course, the accuracy of targeting depends on the amount of data that can be obtained from the vehicle user. Drivers can therefore see displayed ads based on their current and past behavior and linked to the businesses and places featured on their route.

Selling data

Gathering vehicle data and selling it to third parties? We only mention this point because, being experts, we feel it is our duty. As the previous and subsequent use cases show, there are many more creative ways, approved by drivers, that will allow them to benefit from car data.

2. Reducing costs

Data is a mine of information. Companies from the automotive industry can earn money not only by selling new products but also by enhancing existing solutions, reducing R&D costs, or offering cheaper services to users. Potentially, not only producers but also end users can benefit from data acquisition.

R&D and material costs reduction

14. Warranty costs reduction

Every year, companies from the automotive industry spend huge amounts of money on user warranty services. Data on how the vehicle is used, or what breaks down the most often can not only improve the service process itself and increase consumer satisfaction but also help make real savings in companies. Based on the analyzed information, it is possible to more precisely select the scope of warranty and its duration and even adjust it to specific users.

15. Traffic data based retail footprint and stock level optimization

By using advanced geospatial analysis, traditional stores and malls are capable of locating heavy-traffic areas. Wherever the number of vehicles and the frequency of trips increases, there is a potential for greater sales. It is also easier to plan and adjust the stock, expecting potential consumer interest. Companies from the automotive industry, which have data from vehicles, are a natural business partner for this submarket.

16. Data feedback based R&D optimization

Regardless of the sector in which we operate, the R&D department cannot exist without market feedback, looking for new trends and insights. In the automotive industry, continuous product optimization is the key to success. Data provided by managers is a constant source of inspiration and optimization that can contribute to a company's market position. Of course, provided that it is properly analyzed and used for new products.

Customers cost reduction

17. Usage-based insurance PAYD / PHYD

Switching from an insurance based on accident history to insurance based on date, time, and actual driving style? The advantages for the insurer do not need any explanation. For drivers who travel safely on the road and have nothing to be ashamed of, pay as you drive (PAYD) or pay how you drive (PHYD) insurance certainly has unquestionable benefits and is worth sacrificing a bit of privacy.
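To make the difference between the two models more tangible, here is a simplified sketch. PAYD charges for distance driven, while PHYD additionally scales the price with a driving score. The class `UsageBasedPremium`, the event weights, and the 0.8x-1.2x scaling range are purely our assumptions; real insurers use their own actuarial models.

```java
/** Hypothetical pricing helpers for pay-as-you-drive (PAYD) and pay-how-you-drive (PHYD). */
final class UsageBasedPremium {

    /** PAYD: a fixed base fee plus a per-kilometer rate. */
    static double payAsYouDrive(double baseFee, double ratePerKm, double kmDriven) {
        if (kmDriven < 0) {
            throw new IllegalArgumentException("kmDriven must not be negative");
        }
        return baseFee + ratePerKm * kmDriven;
    }

    /**
     * Driving score between 0 (risky) and 100 (safe), based on weighted events per 100 km.
     * The weights (2 for harsh braking, 3 for speeding) and the penalty factor are illustrative.
     */
    static double drivingScore(double kmDriven, int harshBrakingEvents, int speedingEvents) {
        if (kmDriven <= 0) {
            return 100.0; // no driving recorded, so nothing to penalize
        }
        double weightedPer100Km = (2.0 * harshBrakingEvents + 3.0 * speedingEvents) / kmDriven * 100.0;
        return Math.max(0.0, 100.0 - 5.0 * weightedPer100Km);
    }

    /** PHYD: scales the PAYD premium from 0.8x (score 100) up to 1.2x (score 0). */
    static double payHowYouDrive(double paydPremium, double score) {
        double clamped = Math.max(0.0, Math.min(100.0, score));
        return paydPremium * (1.2 - 0.4 * clamped / 100.0);
    }
}
```

In this sketch, a driver with a base fee of 100, a rate of 0.05 per km, and 1,000 km driven pays 150 under PAYD; with a perfect score, PHYD lowers that to about 120.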

18. Driving style suggestions

Do you want to know how to drive more economically? How to adjust the speed to the road conditions or shorten the travel time? Systems installed in connected cars will be happy to help you with this. All you have to do is share information about how you are getting on behind the wheel.

19. E-hailing

24/7 availability, the possibility to order a ride from a location where there are no traditional taxis, the ease of paying via a mobile application. There are many advantages to using the services of brands such as Uber or Lyft. Although no one heard about these companies a few years ago, today they set trends related to our mobility. All due to the skillful use of data and the creation of a business model based on the driver and passenger benefit.

20. Carpooling

Fuel economy and pro-ecological trends increasingly contribute to our conscious use of vehicles. Instead of driving alone, we share travel costs increasingly often and invite other people to travel with us. The creation of applications and infrastructure based on consumer data, which will facilitate driver and passengers recognition, is an ideal model for companies from the automotive industry.

21. P2P car sharing

Your car is parked in the garage because you cycle daily or use public transport? Rent it to other drivers via the peer-to-peer platform and earn money. Of course, the company behind the mentioned application that connects both parties will also earn a few bobs on it, as that's what its business model is all about.

22. Truck platooning

Connecting vehicles into convoys has existed as long as traffic. However, today's technology and data flow offer additional benefits. Truck platooning is the creation of a convoy using communication technology and automated driving assistance systems. In such a convoy, one of the vehicles is the "leader", and the rest adapt to its actions, requiring little or no action from the drivers. Advantages for companies organizing a convoy? Lower CO2 emissions (by up to 16% from the trailing vehicles and by up to 8% from the lead vehicle), better road safety, saving drivers' time, and getting tasks done faster.

Improved customer satisfaction

23. Early recall detection and software updates

The data received from the vehicle enables early detection of faults and prevents unnecessary problems on the road. Even more, it allows faults to be repaired remotely in the OTA (over-the-air) model. Thanks to such amenities, the driver does not have to download the required software or visit their authorized dealer in person to repair the vehicle.

3. Increasing safety and security

24. Driver’s condition monitoring service

Drowsiness and fatigue are some of the most common factors contributing to road accidents. Thanks to driver monitoring systems in the form of infrared sensors and a camera integrated into the steering wheel, the vehicle can warn the driver in advance and recognize symptoms that could lead to an accident or falling asleep at the wheel. This is one of the amenities that drivers most often agree on when it comes to sharing vehicle data.

25. Improved road/infrastructure maintenance and design

Analyzing data from vehicles can help both the drivers themselves and the road service. For instance, when cars regularly skid at some point - which is detected by ESP / ABS systems, road workers can introduce certain speed limits or improve the road profile. This type of data is also useful in planning road repairs when the renovation needs to be planned during less traffic volume.
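One simple way to find such spots is to snap every reported ESP / ABS intervention to a coarse grid cell and count the events per cell. The sketch below assumes a hypothetical input format (pairs of latitude and longitude) and a grid of 0.001 degrees, which is roughly 111 m in the north-south direction; both are our choices for illustration.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical aggregation of skid events (ESP / ABS interventions) into road hotspots. */
final class SkidHotspots {

    /** Snaps a coordinate to a grid cell of 0.001 degrees and returns the cell key. */
    static String cellKey(double lat, double lon) {
        long latCell = Math.round(lat * 1000);
        long lonCell = Math.round(lon * 1000);
        return latCell + ":" + lonCell;
    }

    /**
     * Counts events per grid cell and returns the sorted keys of the cells
     * with at least minEvents events. Each event is a {lat, lon} pair.
     */
    static List<String> find(double[][] events, int minEvents) {
        Map<String, Integer> counts = new HashMap<>();
        for (double[] event : events) {
            counts.merge(cellKey(event[0], event[1]), 1, Integer::sum);
        }
        List<String> hotspots = new ArrayList<>();
        for (Map.Entry<String, Integer> entry : counts.entrySet()) {
            if (entry.getValue() >= minEvents) {
                hotspots.add(entry.getKey());
            }
        }
        Collections.sort(hotspots);
        return hotspots;
    }
}
```

Cells that collect many events from different vehicles are candidates for a speed limit or a road profile review, while isolated events are ignored as noise.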

26. Breakdown call service

Tyre pressure monitoring, battery and engine condition, fuel level, and electricity drops in the vehicle. Monitoring such data can prevent more than one accident, and should it happen, it helps the driver overcome the obstacles much faster. When roadside assistance knows where the driver is and what exactly happened to the car, it can react much faster or instruct the driver how to fix the problem.

27. Emergency call service

Data from connected cars can save not only our holidays but also our lives. When every second counts and the driver or other road users cannot call an ambulance or fire brigade, the connected car will do it for them. Thanks to the emergency call service option, the vehicle sends information about the location of the vehicle and its status to the appropriate services.

28. Road laws monitoring and enforcement

Data collected from vehicles - especially on a large scale - can tell a lot about the way a given group drives or about the compliance with the rules of the road. Providing data from your own vehicle to the traffic law monitoring services can improve our habits, reduce the number of road hogs and drunk drivers, and help adjust the law to new conditions.

Crucial factors in data monetization

The data stream generated by vehicles will increase year by year. In order to be well prepared for the monetization of this information and not to miss the opportunities for the automotive industry for additional sources of income, it is crucial to take care of several key issues.

- First of all: find a steadfast IT partner with experience in the field, who will supplement the competencies of the OEM with cloud solutions, AI, and building platforms based on data monitoring and analysis.

- Secondly: constantly create and test car products and services based on real needs and amenities for customers - which is inherently related to the next point.

- Thirdly: create an open policy for the management of customers’ data that rules out trading in confidential information or unclear or misleading regulations of data use.

Only the development of a business strategy based on all these assumptions can bring real benefits and help stand out from the competitors.

As you can see, this is not a simple and quick process to implement, as many entities are involved in it, and various interest groups may clash. So, is the game worth the candle? The answer is in the stories of telephone companies that used to believe that the telephone should only be used for making calls, and it did not have to be smart.

Whether we like it or not, vehicles are changing right before our very eyes and are increasingly often used not only for getting from A to B. People who do not understand it and do not see the opportunities facing the automotive industry may soon share the fate of the mobile giants from over a dozen years ago.

The future of autonomous driving connectivity – Quantum entanglement or 6G?

The title of the article is quite deceiving - both mentioned technologies are currently just distant concepts based on widely divergent connectivity mediums. It’s still a distant future, but let’s think for a while about where we are now, what awaits us in the very near future and where we are heading in the long term.

Autonomous driving and the whole Connected Car concept benefit greatly from internet connectivity. Traffic information, being able to request information about nearby cars, navigation, infrastructure like traffic lights, parking, or charging stations - all of that affects the decision about the actual path to be taken by the vehicle or driver.

Some of the systems are rather insensitive to the network bandwidth, for example, the layout of the roads does not require updates every second. On the other hand, information about a red light or nearby vehicles losing traction is critical, and lowering latency directly affects safety.

What technologies provide connectivity for autonomous driving?

These days cars mainly use the common mobile technology for connectivity: GPRS/EDGE, 3G/HSDPA, LTE, and 4G switching dynamically depending on network coverage. As the availability of 5G increases, the obvious next step is implementing it in the vehicle modems.

Can connected cars rely on 5G?

Obviously, 5G will never be available everywhere. The technology itself is a limitation here: the millimeter-wave bands that deliver its highest speeds offer only a few percent of the range of 4G (300-600 m compared to 10-15 km). Additionally, the latest Ericsson report predicts that by the end of 2026, 5G coverage will reach 60 percent of the global population, and this still means mainly densely populated areas like cities and suburbs.

5G solves the latency and bandwidth problem but does not give full coverage, especially for rural areas and highways. Is there nothing more we can use to improve the situation? Not at all, multiple alternatives are being developed right now in parallel.

What are the alternatives to 5G?

There is IEEE 802.11p (WAVE - Wireless Access for the Vehicular Environment), based on the Wi-Fi WLAN standard and focused on improving the stability of the connection between high-speed vehicles. This is short-range, Vehicle2Vehicle and Vehicle2Infrastructure communication.

While 5G is not yet fully there, 6G is starting to form. The successor of the 5th generation of the wireless cellular network is planned to greatly increase the bandwidth, allowing extremely data-consuming, real-time services to be built - like dynamic Virtual Reality streaming. Groups like the Next G Alliance are working on defining technical aspects and testing multiple possibilities, like THz wave frequencies as a physical medium for communication.

The other promising development is the LEO (Low Earth Orbit) satellite network, with Starlink, created by Elon Musk's SpaceX, being the most popular one currently available. It is no match for 5G or 6G in terms of latency, but its unprecedented coverage and worldwide availability make it a great solution for situations where bandwidth is critical while moderate latency is still sufficient.

The most futuristic medium, the quantum entanglement from the title of this article, seemed like the Holy Grail of communication - faster than light, meaning no latency at all. When the scientists announced that quantum entanglement works and was observed by comparing distant, entangled particles, the world held its breath. But in the end, there is currently no way to transmit anything this way - quantum entanglement breaks if one of the particles in the pair is forced to a particular quantum state. It’s disappointing but shows us that there may be a totally new way for communication still to be discovered.

Sum up: what connection type will be fueling Connected and Autonomous Cars

So what is the future of communication for Connected Cars and Autonomous Driving? 5G, 6G, satellite, or Wi-Fi? The answer is all of them. Just as cars can now dynamically switch between different kinds of mobile networks, in the future they should also be able to pick the lowest-latency connection available from a mobile network, satellite, Wi-Fi, or whatever the future brings, or even use multiple connections simultaneously depending on the system requirements. There is no one best solution for all geographical regions, in-car systems, and conflicting requirements, so hybrid connectivity is the future of automotive connectivity.
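The selection logic itself can be quite simple. The sketch below picks the lowest-latency link that is currently available and offers enough bandwidth for a given in-car system. The `LinkSelector` and `Link` types, as well as any latency and bandwidth figures you feed into them, are hypothetical; this is not how any specific vehicle modem works.

```java
import java.util.List;

/** Hypothetical selector choosing the best connection for a given in-car system. */
final class LinkSelector {

    /** A candidate connection with its currently measured characteristics. */
    static final class Link {
        final String name;
        final double latencyMs;
        final double bandwidthMbps;
        final boolean available;

        Link(String name, double latencyMs, double bandwidthMbps, boolean available) {
            this.name = name;
            this.latencyMs = latencyMs;
            this.bandwidthMbps = bandwidthMbps;
            this.available = available;
        }
    }

    /**
     * Returns the available link with the lowest latency that offers at least
     * minBandwidthMbps, or null when no link qualifies.
     */
    static Link pick(List<Link> links, double minBandwidthMbps) {
        Link best = null;
        for (Link link : links) {
            if (!link.available || link.bandwidthMbps < minBandwidthMbps) {
                continue;
            }
            if (best == null || link.latencyMs < best.latencyMs) {
                best = link;
            }
        }
        return best;
    }
}
```

A safety-critical system could call `pick` with a low bandwidth requirement to get the fastest link, while a map download could ask for high bandwidth and accept a satellite connection with higher latency.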

Monitoring your microservices on AWS with Terraform and Grafana - monitoring

Welcome back to the series. We hope you’ve enjoyed the previous part and you’re back to learn the key points. Today we’re going to show you how to monitor the application.

Monitoring

We would like to have logs and metrics in a single place. Let’s imagine you see something strange on your diagrams, mark it with your mouse, and immediately have proper log entries from this particular timeframe and this particular machine displayed below. Now, let’s make it real.

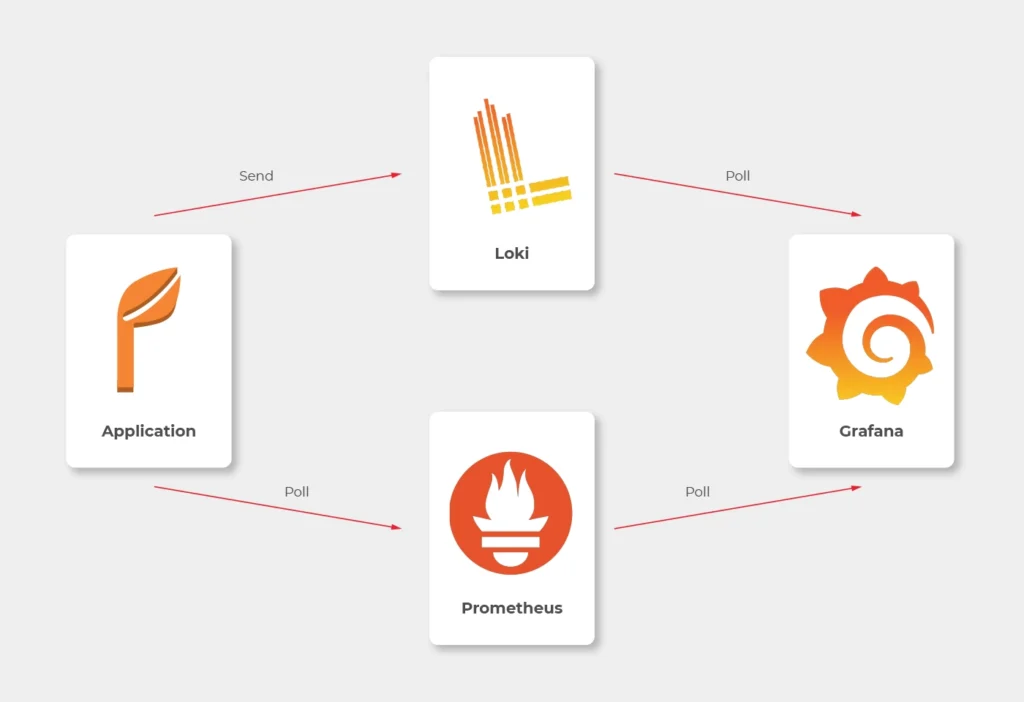

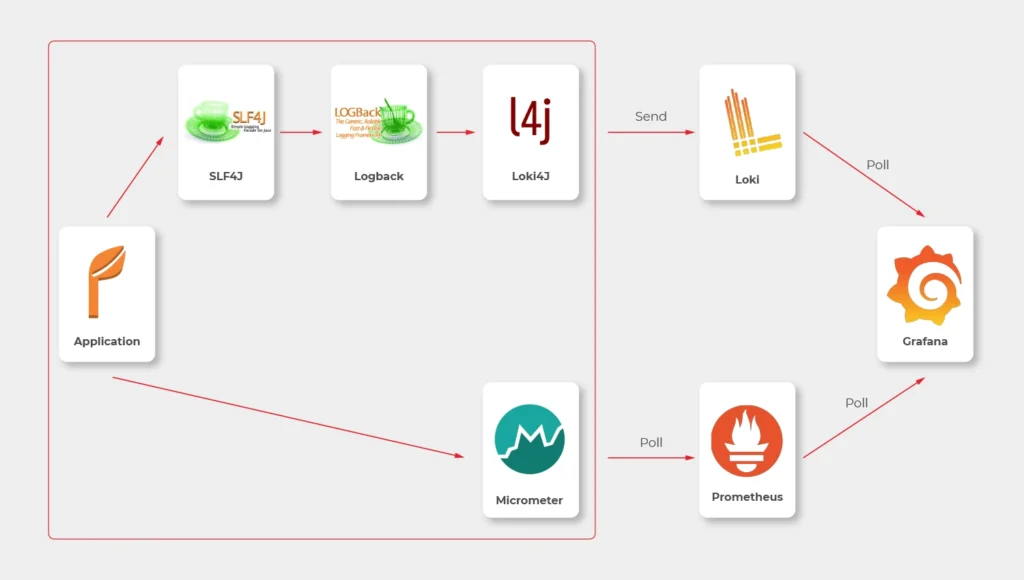

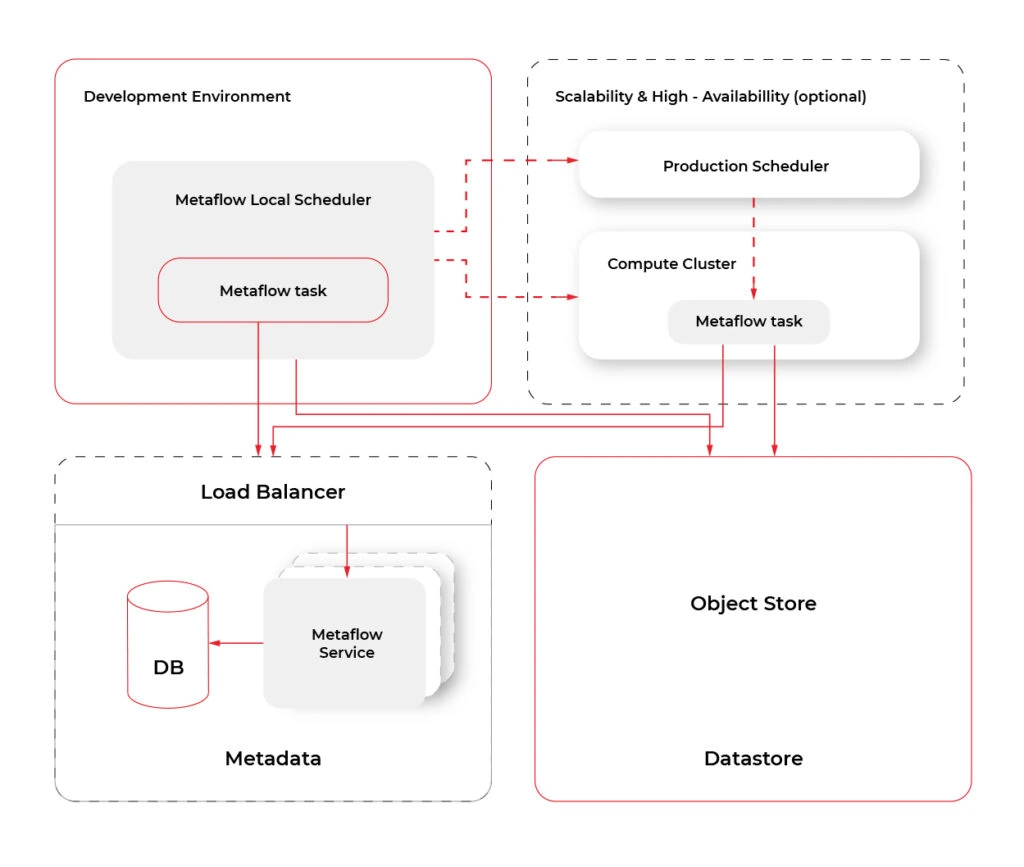

Some basics first. There is a huge difference between the way Prometheus and Loki get the data. Both of them are being called by Grafana to poll data, but Prometheus also actively calls the application to poll metrics. Loki, instead, just listens, so it needs some extra mechanism to receive logs from applications.

In most sources on the Internet, you’ll find that the best way to send logs to Loki is to use Promtail. This is a small tool, developed by Loki’s authors, which reads log files and sends them entry by entry to a remote Loki endpoint. But it’s not perfect. Sending multiline logs is still in bad shape (as of February 2021), some of the configuration is really designed to work with Kubernetes only, and at the end of the day, this is one more application you would need to run inside your Docker image, which can get a little bit dirty. Instead, we propose to use the loki4j logback appender (https://github.com/loki4j). This is a zero-dependency Java library designed to send logs directly from your application.

There is one more Java library needed - Micrometer. We’re going to use it to collect metrics of the application.

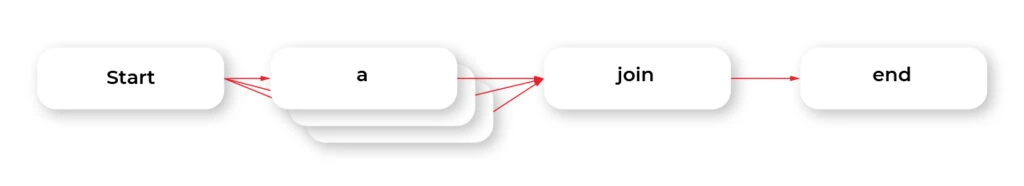

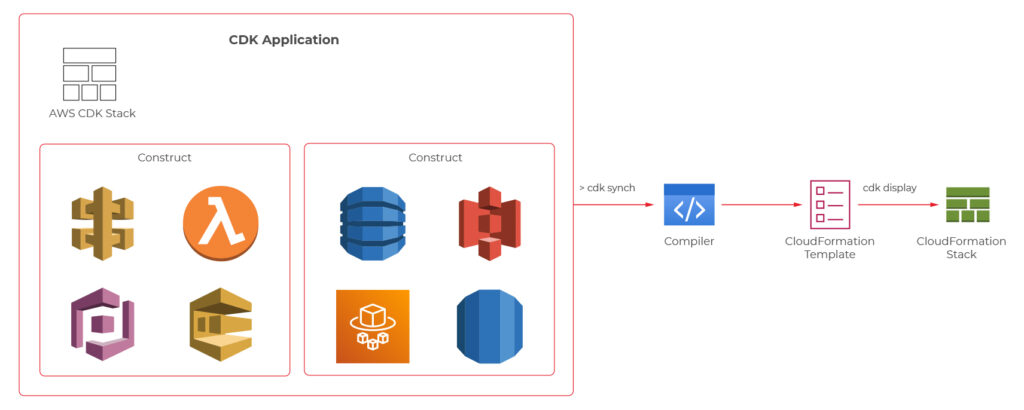

So, the proper diagram should look like this.

Which means, we need to build or configure the following pieces:

- slf4j (default configuration is enough)

- Logback

- Loki4j

- Loki

- Micrometer

- Prometheus

- Grafana

Micrometer

Let’s start with metrics first.

There are just three things to do on the application side.

The first one is to add a dependency to the Micrometer with Prometheus integration (registry).

    <dependency>
        <groupId>io.micrometer</groupId>
        <artifactId>micrometer-registry-prometheus</artifactId>
    </dependency>

Now, we have a new endpoint exposable from Spring Boot Actuator, so we need to enable it.

    management:
      endpoints:
        web:
          exposure:
            include: prometheus,health

This is a piece of configuration to add. Make sure you include prometheus in both config server and config clients’ configuration. If you have some Web Security configured, make sure to enable full access to /actuator/health and /actuator/prometheus endpoint.

Now we would like to distinguish applications in our metrics, so we have to add a custom tag in all applications. We propose to add this piece of code as a Java library and import it with Maven.

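    import io.micrometer.core.instrument.MeterRegistry;
    import org.springframework.beans.factory.annotation.Value;
    import org.springframework.boot.actuate.autoconfigure.metrics.MeterRegistryCustomizer;
    import org.springframework.context.annotation.Bean;
    import org.springframework.context.annotation.Configuration;

    @Configuration
    public class MetricsConfig {

        // Adds an "application" tag to every metric so services can be told apart in Prometheus.
        @Bean
        MeterRegistryCustomizer<MeterRegistry> configurer(@Value("${spring.application.name}") String applicationName) {
            return (registry) -> registry.config().commonTags("application", applicationName);
        }
    }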

Make sure you have spring.application.name configured in all bootstrap.yml files in config clients and application.yml in the config server.

Prometheus

The next step is to use a brand new /actuator/prometheus endpoint to read metrics in Prometheus.

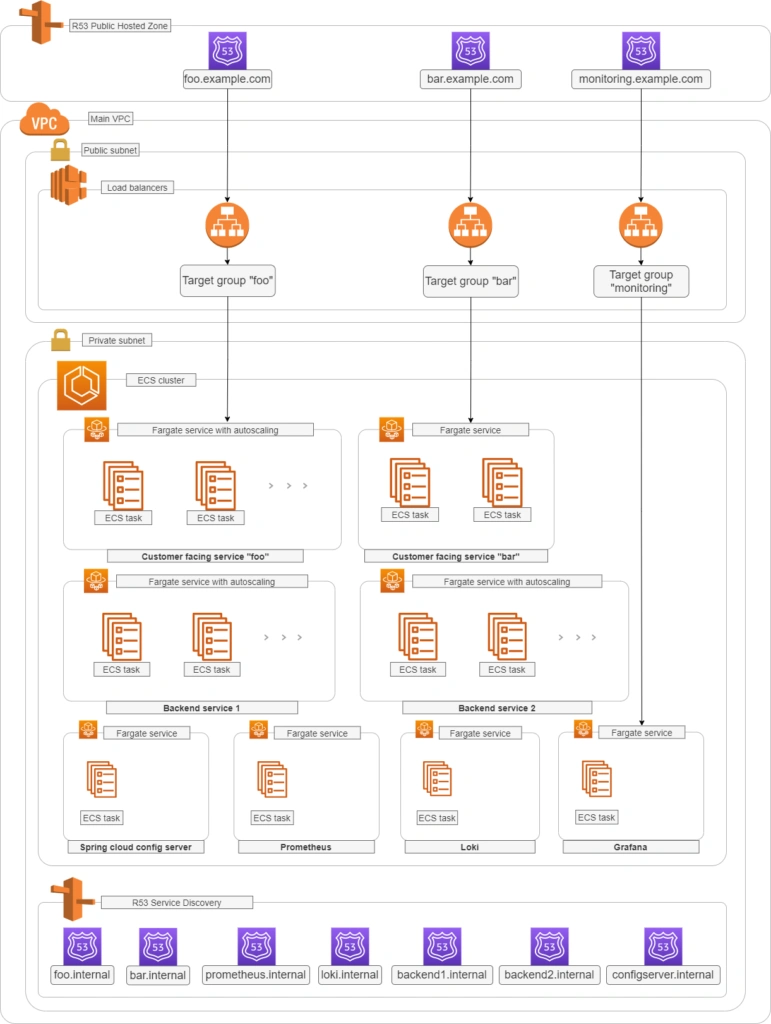

The ECS configuration is similar to the one for backend services. The image you need to push to your ECR should look like this.

    FROM prom/prometheus
    COPY prometheus.yml .
    ENTRYPOINT prometheus --config.file=prometheus.yml
    EXPOSE 9090

As Prometheus doesn’t support HTTPS endpoints, it’s just a temporary solution, and we’ll change it later.

The prometheus.yml file contains such a configuration.

    scrape_configs:
      - job_name: 'cloud-config-server'
        metrics_path: '/actuator/prometheus'
        scrape_interval: 5s
        dns_sd_configs:
          - names:
              - '$cloud_config_server_url'
            type: 'A'
            port: 8888
      - job_name: 'foo'
        metrics_path: '/actuator/prometheus'
        scrape_interval: 5s
        dns_sd_configs:
          - names:
              - '$foo_url'
            type: 'A'
            port: 8080
      - job_name: 'bar'
        metrics_path: '/actuator/prometheus'
        scrape_interval: 5s
        dns_sd_configs:
          - names:
              - '$bar_url'
            type: 'A'
            port: 8080
      - job_name: 'backend_1'
        metrics_path: '/actuator/prometheus'
        scrape_interval: 5s
        dns_sd_configs:
          - names:
              - '$backend_1_url'
            type: 'A'
            port: 8080
      - job_name: 'backend_2'
        metrics_path: '/actuator/prometheus'
        scrape_interval: 5s
        dns_sd_configs:
          - names:
              - '$backend_2_url'
            type: 'A'
            port: 8080

Let’s analyse the first job as an example.

We would like to call the '$cloud_config_server_url' URL with the '/actuator/prometheus' relative path on port 8888. As we’ve used dns_sd_configs and type: 'A', Prometheus can handle multi-value DNS answers from the Service Discovery and scrape all tasks in each service. Please make sure you replace all '$x' variables in the file with proper URLs from the Service Discovery.
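To illustrate what a multi-value DNS answer means in practice, here is a rough Java equivalent of what happens with A-record discovery: one name is resolved to several IPv4 addresses, and each address becomes a separate scrape target on the configured port. The `DnsTargets` class is our own illustration and not part of Prometheus.

```java
import java.net.Inet4Address;
import java.net.InetAddress;
import java.net.UnknownHostException;
import java.util.ArrayList;
import java.util.List;

/** Illustration of A-record based discovery: one DNS name, many scrape targets. */
final class DnsTargets {

    /** Turns resolved addresses into host:port targets, keeping only IPv4 (A record) entries. */
    static List<String> toTargets(InetAddress[] addresses, int port) {
        List<String> targets = new ArrayList<>();
        for (InetAddress address : addresses) {
            if (address instanceof Inet4Address) {
                targets.add(address.getHostAddress() + ":" + port);
            }
        }
        return targets;
    }

    /** Resolves the name and returns one target per address in the DNS answer. */
    static List<String> discover(String name, int port) throws UnknownHostException {
        return toTargets(InetAddress.getAllByName(name), port);
    }
}
```

If the Service Discovery name of the config server resolves to two task IPs, `discover(name, 8888)` returns two targets, and each of them would be scraped under '/actuator/prometheus'.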

Prometheus isn’t exposed through the public load balancer, so you cannot verify your success yet. You can expose it temporarily or wait for Grafana.

Logback and Loki4j

If you use Spring Boot, you probably already have the spring-boot-starter-logging library included. Therefore, you use logback as the default slf4j integration. Our job now is to configure it to send logs to Loki. Let’s start with the dependency:

<dependency>

<groupId>com.github.loki4j</groupId>

<artifactId>loki-logback-appender</artifactId>

<version>1.1.0</version>

</dependency>

Now let’s configure it. The first file is called logback-spring.xml and is located in the config server next to the application.yml (1) file.

<?xml version="1.0" encoding="UTF-8"?>

<configuration>

<property name="LOG_PATTERN" value="%d{yyyy-MM-dd HH:mm:ss.SSS} %-5level [%thread] %logger - %msg%n"/>

<appender name="Console" class="ch.qos.logback.core.ConsoleAppender">

<encoder>

<pattern>${LOG_PATTERN}</pattern>

</encoder>

</appender>

<springProfile name="aws">

<appender name="Loki" class="com.github.loki4j.logback.Loki4jAppender">

<http>

<url>${LOKI_URL}/loki/api/v1/push</url>

</http>

<format class="com.github.loki4j.logback.ProtobufEncoder">

<label>

<pattern>application=spring-cloud-config-server,instance=${INSTANCE},level=%level</pattern>

</label>

<message>

<pattern>${LOG_PATTERN}</pattern>

</message>

<sortByTime>true</sortByTime>

</format>

</appender>

</springProfile>

<root level="INFO">

<appender-ref ref="Console"/>

<springProfile name="aws">

<appender-ref ref="Loki"/>

</springProfile>

</root>

</configuration>

What do we have here? There are two appenders with a common pattern and one root logger. We start with the pattern configuration <property name="LOG_PATTERN" value="%d{yyyy-MM-dd HH:mm:ss.SSS} %-5level [%thread] %logger - %msg%n"/>. Of course, you can configure it as you want.

Then, the standard console appender. As you can see, it uses the LOG_PATTERN .

Then you can see the com.github.loki4j.logback.Loki4jAppender appender. This is where the library comes into play. We’ve used the <springProfile name="aws"> profile filter to enable it only in the AWS infrastructure and to disable it locally. We apply the same filter when attaching the appender with appender-ref ref="Loki". Please note the label pattern, used here to label each log with custom tags (application, instance, level). Another important part here is Loki’s URL. We need to provide it as an environment variable for the ECS task. To do that, you need to add one more line to your aws_ecs_task_definition configuration in Terraform.

"environment" : [

...

{ "name" : "LOKI_URL", "value" : "loki.internal" }

],

As you can see, we’ve defined the “loki.internal” URL, and we’re going to create it in a minute.
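The label pattern from the appender configuration deserves a closer look, because the same labels are what you filter by in Grafana later. The sketch below is not Loki4j code. It is a hypothetical LabelPattern helper that assumes pairs are separated by "," and keys from values by "=", and it uses a made-up instance value. It splits a rendered pattern into labels and prints the matching LogQL stream selector.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

/** Hypothetical helper: turns a rendered label pattern into a LogQL selector. */
class LabelPattern {

    /** Splits "k1=v1,k2=v2" into an ordered map of label names to values. */
    static Map<String, String> parse(String rendered) {
        Map<String, String> labels = new LinkedHashMap<>();
        for (String pair : rendered.split(",")) {
            String[] kv = pair.split("=", 2);
            if (kv.length != 2 || kv[0].isBlank()) {
                throw new IllegalArgumentException("Bad label pair: " + pair);
            }
            labels.put(kv[0].trim(), kv[1].trim());
        }
        return labels;
    }

    /** Builds a LogQL stream selector that matches exactly these labels. */
    static String toSelector(Map<String, String> labels) {
        return labels.entrySet().stream()
                .map(e -> e.getKey() + "=\"" + e.getValue() + "\"")
                .collect(Collectors.joining(", ", "{", "}"));
    }

    public static void main(String[] args) {
        // The instance value is invented for the example.
        String rendered = "application=spring-cloud-config-server,instance=10.0.1.15:8888,level=INFO";
        System.out.println(toSelector(parse(rendered)));
        // prints {application="spring-cloud-config-server", instance="10.0.1.15:8888", level="INFO"}
    }
}
```

Keeping the label set small (application, instance, level) is deliberate: every distinct combination of label values becomes a separate stream in Loki.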

There are a few issues with the logback configuration for the config clients.

First of all, you need to provide the same LOKI_URL environment variable to each client, because you need Loki before reading config from the config server.

Now, let’s put another logback-spring.xml file in the config server next to the application.yml (2) file.

<?xml version="1.0" encoding="UTF-8"?>

<configuration>

<property name="LOG_PATTERN" value="%d{yyyy-MM-dd HH:mm:ss.SSS} %-5level [%thread] %logger - %msg%n"/>

<springProperty scope="context" name="APPLICATION_NAME" source="spring.application.name"/>

<appender name="Console" class="ch.qos.logback.core.ConsoleAppender">

<encoder>

<pattern>\${LOG_PATTERN}</pattern>

</encoder>

</appender>

<springProfile name="aws">

<appender name="Loki" class="com.github.loki4j.logback.Loki4jAppender">

<http>

<requestTimeoutMs>15000</requestTimeoutMs>

<url>\${LOKI_URL}/loki/api/v1/push</url>

</http>

<format class="com.github.loki4j.logback.ProtobufEncoder">

<label>

<pattern>application=\${APPLICATION_NAME},instance=\${INSTANCE},level=%level</pattern>

</label>

<message>

<pattern>\${LOG_PATTERN}</pattern>

</message>

<sortByTime>true</sortByTime>

</format>

</appender>

</springProfile>

<root level="INFO">

<appender-ref ref="Console"/>

<springProfile name="aws"><appender-ref ref="Loki"/></springProfile>

</root>

</configuration>

The first change to notice is the backslash before each variable (e.g. \${LOG_PATTERN}). We need it to tell the config server not to resolve the variables on its side (because that’s impossible there). The next difference is a new variable, <springProperty scope="context" name="APPLICATION_NAME" source="spring.application.name"/>. With this line and spring.application.name set in all your applications, each log will be tagged with a different name. There is also a trick with the ${INSTANCE} variable. As Prometheus uses IP address + port as an instance identifier and we want to use the same here, we need to provide this data to each instance separately.

So the Dockerfile for each of your applications should look something like this.

FROM openjdk:15.0.1-slim

COPY /target/foo-0.0.1-SNAPSHOT.jar .

ENTRYPOINT INSTANCE=$(hostname -i):8080 java -jar foo-0.0.1-SNAPSHOT.jar

EXPOSE 8080
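If you want to see what value ends up in INSTANCE, or need a sensible value when running outside the container, the logic of the ENTRYPOINT line can be sketched in Java. This is only an illustration under assumptions: the InstanceId class is hypothetical, and InetAddress.getLocalHost() is a rough stand-in for hostname -i, so it may pick a different address on multi-homed hosts.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;
import java.util.Map;

/** Hypothetical helper: derives the "<ip>:<port>" identifier used as the instance label. */
class InstanceId {

    /** Prefers an explicit INSTANCE value; otherwise falls back to "<ip>:<port>". */
    static String resolve(Map<String, String> env, String ipAddress, int port) {
        String fromEnv = env.get("INSTANCE");
        if (fromEnv != null && !fromEnv.isBlank()) {
            return fromEnv;
        }
        return ipAddress + ":" + port;
    }

    public static void main(String[] args) {
        String ip;
        try {
            ip = InetAddress.getLocalHost().getHostAddress();
        } catch (UnknownHostException e) {
            ip = "127.0.0.1"; // fallback for hosts without a resolvable name
        }
        System.out.println(resolve(System.getenv(), ip, 8080));
    }
}
```

Whatever produces the value, the important part is that it has the same "ip:port" shape as the instance label in Prometheus, so one dashboard variable can filter both metrics and logs.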

Also, to make it work, you need to tell your clients to use this configuration. Just add this to the bootstrap.yml files in all your config clients.

logging:

config: ${SPRING_CLOUD_CONFIG_SERVER:http://localhost:8888}/application/default/main/logback-spring.xml

spring:

application:

name: foo
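The logging.config value above is easier to read once you split it into its parts: a base URL taken from SPRING_CLOUD_CONFIG_SERVER (with a local default) followed by the /{application}/{profile}/{label}/{path} segments the config server uses for serving plain-text files. The sketch below only mimics that composition. The LoggingConfigUrl class is hypothetical and is not how Spring resolves placeholders internally.

```java
import java.util.Map;

/** Hypothetical helper: rebuilds the logging.config URL from its parts. */
class LoggingConfigUrl {

    /** Mimics ${NAME:default}: use the variable when it is present, else the default. */
    static String valueOrDefault(Map<String, String> env, String name, String defaultValue) {
        String value = env.get(name);
        return value != null ? value : defaultValue;
    }

    /** Joins the base URL with the /{application}/{profile}/{label}/{path} segments. */
    static String plainTextUrl(String baseUrl, String application, String profile,
                               String label, String path) {
        String base = baseUrl.endsWith("/") ? baseUrl.substring(0, baseUrl.length() - 1) : baseUrl;
        return String.join("/", base, application, profile, label, path);
    }

    public static void main(String[] args) {
        // An empty map simulates a machine where the variable is not set.
        String base = valueOrDefault(Map.of(), "SPRING_CLOUD_CONFIG_SERVER", "http://localhost:8888");
        System.out.println(plainTextUrl(base, "application", "default", "main", "logback-spring.xml"));
        // prints http://localhost:8888/application/default/main/logback-spring.xml
    }
}
```

Here "main" is the label (the Git branch in a Git-backed config server), so adjust it if your repository uses a different branch name.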

That’s it, let’s move to the next part.

Loki

Creating Loki is very similar to Prometheus. Your Dockerfile is as follows.

FROM grafana/loki

COPY loki.yml .

ENTRYPOINT loki --config.file=loki.yml

EXPOSE 3100

The good news is, you don’t need to set any URLs here - Loki doesn’t send any data. It just listens.

As a configuration, you can use a file from https://grafana.com/docs/loki/latest/configuration/examples/ . We’re going to adjust it later, but it’s enough for now.
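Since Loki only listens, a manual push is a handy way to check that it is reachable before the applications start logging. The appender in this article sends protobuf, but Loki's /loki/api/v1/push endpoint also accepts a JSON body. The sketch below builds such a body with one stream and one line. The LokiPushBody class is hypothetical, and its escaping covers only quotes and backslashes, so treat it as a test aid rather than a client.

```java
import java.util.Map;
import java.util.stream.Collectors;

/** Hypothetical helper: builds a minimal JSON body for Loki's push endpoint. */
class LokiPushBody {

    /** Escapes backslashes and double quotes; control characters are not handled. */
    static String escape(String value) {
        return value.replace("\\", "\\\\").replace("\"", "\\\"");
    }

    /** Builds a push body with one stream (label set) and one log line. */
    static String build(Map<String, String> labels, long epochNanos, String line) {
        String stream = labels.entrySet().stream()
                .map(e -> "\"" + escape(e.getKey()) + "\":\"" + escape(e.getValue()) + "\"")
                .collect(Collectors.joining(","));
        // Timestamps are strings holding nanoseconds since the Unix epoch.
        return "{\"streams\":[{\"stream\":{" + stream + "},"
                + "\"values\":[[\"" + epochNanos + "\",\"" + escape(line) + "\"]]}]}";
    }

    public static void main(String[] args) {
        long nowNanos = System.currentTimeMillis() * 1_000_000L;
        System.out.println(build(Map.of("application", "foo"), nowNanos, "manual test line"));
    }
}
```

You could POST the printed body with Content-Type: application/json to the push URL from inside the VPC and then look for the line in Grafana once the datasource is set up.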

Grafana

Now, we’re ready to put things together.

In the ECS configuration, you can remove the service discovery part and add a load balancer, because Grafana will be visible over the internet. Please remember, it’s exposed on port 3000 by default.

Your Grafana Dockerfile should look like this.

FROM grafana/grafana

COPY loki_datasource.yml /etc/grafana/provisioning/datasources/

COPY prometheus_datasource.yml /etc/grafana/provisioning/datasources/

COPY dashboard.yml /etc/grafana/provisioning/dashboards/

COPY *.json /etc/grafana/provisioning/dashboards/

ENTRYPOINT [ "/run.sh" ]

EXPOSE 3000

Let’s check configuration files now.

loki_datasource.yml:

apiVersion: 1

datasources:

- name: Loki

type: loki

access: proxy

url: http://$loki_url:3100

jsonData:

maxLines: 1000

The file content should be self-explanatory (we'll return to it later).

prometheus_datasource.yml:

apiVersion: 1

datasources:

- name: prometheus

type: prometheus

access: proxy

orgId: 1

url: http://$prometheus_url:9090

isDefault: true

version: 1

editable: false

dashboard.yml:

apiVersion: 1

providers:

- name: 'Default'

folder: 'Services'

options:

path: /etc/grafana/provisioning/dashboards

With this file, you tell Grafana to load all JSON files from the /etc/grafana/provisioning/dashboards directory as dashboards.

The last step is to create some dashboards. You can, for example, download a dashboard from https://grafana.com/grafana/dashboards/10280 and replace the ${DS_PROMETHEUS} datasource with your datasource name, “prometheus”.
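Replacing the placeholder by hand works, but if you download several dashboards, a tiny helper saves time. The sketch below is one possible way to do it. The DatasourcePlaceholder class and the optional input and output paths are hypothetical, and it does a plain text replacement without parsing the JSON.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;

/** Hypothetical helper: binds a ${...} datasource placeholder to a real datasource name. */
class DatasourcePlaceholder {

    /** Replaces every occurrence of ${placeholder} with the datasource name. */
    static String bind(String dashboardJson, String placeholder, String datasourceName) {
        return dashboardJson.replace("${" + placeholder + "}", datasourceName);
    }

    public static void main(String[] args) {
        if (args.length == 2) {
            // Optional file mode: java DatasourcePlaceholder.java <input.json> <output.json>
            try {
                String json = Files.readString(Path.of(args[0]));
                Files.writeString(Path.of(args[1]), bind(json, "DS_PROMETHEUS", "prometheus"));
            } catch (IOException e) {
                throw new UncheckedIOException(e);
            }
            return;
        }
        String snippet = "{\"datasource\": \"${DS_PROMETHEUS}\"}";
        System.out.println(bind(snippet, "DS_PROMETHEUS", "prometheus"));
        // prints {"datasource": "prometheus"}
    }
}
```

The name passed in must match the name field in prometheus_datasource.yml exactly, because Grafana looks provisioned datasources up by name.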

Our aim was to create a dashboard with metrics and logs on the same screen. You can play with dashboards as you want, but take this as an example.

{

"annotations": {

"list": [

{

"builtIn": 1,

"datasource": "-- Grafana --",

"enable": true,

"hide": true,

"iconColor": "rgba(0, 211, 255, 1)",

"name": "Annotations & Alerts",

"type": "dashboard"

}

]

},

"editable": true,

"gnetId": null,

"graphTooltip": 0,

"id": 2,

"iteration": 1613558886505,

"links": [],

"panels": [

{

"aliasColors": {},

"bars": false,

"dashLength": 10,

"dashes": false,

"datasource": null,

"fieldConfig": {

"defaults": {

"custom": {}

},

"overrides": []

},

"fill": 1,

"fillGradient": 0,

"gridPos": {

"h": 8,

"w": 24,

"x": 0,

"y": 0

},

"hiddenSeries": false,

"id": 4,

"legend": {

"avg": false,

"current": false,

"max": false,

"min": false,

"show": true,

"total": false,

"values": false

},

"lines": true,

"linewidth": 1,

"nullPointMode": "null",

"options": {

"alertThreshold": true

},

"percentage": false,

"pluginVersion": "7.4.1",

"pointradius": 2,

"points": false,

"renderer": "flot",

"seriesOverrides": [],

"spaceLength": 10,

"stack": false,

"steppedLine": false,

"targets": [

{

"expr": "system_load_average_1m{instance=~\"$instance\", application=\"$application\"}",

"interval": "",

"legendFormat": "",

"refId": "A"

}

],

"thresholds": [],

"timeRegions": [],

"title": "Panel Title",

"tooltip": {

"shared": true,

"sort": 0,

"value_type": "individual"

},

"type": "graph",

"xaxis": {

"buckets": null,

"mode": "time",

"name": null,

"show": true,

"values": []

},

"yaxes": [

{

"format": "short",

"label": null,

"logBase": 1,

"max": null,

"min": null,

"show": true

},

{

"format": "short",

"label": null,

"logBase": 1,

"max": null,

"min": null,

"show": true

}

],

"yaxis": {

"align": false,

"alignLevel": null

}

},

{

"datasource": "Loki",

"fieldConfig": {

"defaults": {

"custom": {}

},

"overrides": []

},

"gridPos": {

"h": 33,

"w": 24,

"x": 0,

"y": 8

},

"id": 2,

"options": {

"showLabels": false,

"showTime": false,

"sortOrder": "Ascending",

"wrapLogMessage": true

},

"pluginVersion": "7.3.7",

"targets": [

{

"expr": "{application=\"$application\", instance=~\"$instance\", level=~\"$level\"}",

"hide": false,

"legendFormat": "",

"refId": "A"

}

],

"timeFrom": null,

"timeShift": null,

"title": "Logs",

"type": "logs"

}

],

"schemaVersion": 27,

"style": "dark",

"tags": [],

"templating": {

"list": [

{

"allValue": null,

"current": {

"selected": false,

"text": "foo",

"value": "foo"

},

"datasource": "prometheus",

"definition": "label_values(application)",

"description": null,

"error": null,

"hide": 0,

"includeAll": false,

"label": "Application",

"multi": false,

"name": "application",

"options": [],

"query": {

"query": "label_values(application)",

"refId": "prometheus-application-Variable-Query"

},

"refresh": 2,

"regex": "",

"skipUrlSync": false,

"sort": 0,

"tagValuesQuery": "",

"tags": [],

"tagsQuery": "",

"type": "query",

"useTags": false

},

{

"allValue": null,

"current": {

"selected": false,

"text": "All",

"value": "$__all"

},

"datasource": "prometheus",

"definition": "label_values(jvm_classes_loaded_classes{application=\"$application\"}, instance)",

"description": null,

"error": null,

"hide": 0,

"includeAll": true,

"label": "Instance",

"multi": false,

"name": "instance",

"options": [],

"query": {

"query": "label_values(jvm_classes_loaded_classes{application=\"$application\"}, instance)",

"refId": "prometheus-instance-Variable-Query"

},

"refresh": 2,

"regex": "",

"skipUrlSync": false,

"sort": 0,

"tagValuesQuery": "",

"tags": [],

"tagsQuery": "",

"type": "query",

"useTags": false

},

{

"allValue": null,

"current": {

"selected": false,

"text": [

"All"

],

"value": [

"$__all"

]

},

"datasource": "Loki",

"definition": "label_values(level)",

"description": null,

"error": null,

"hide": 0,

"includeAll": true,

"label": "Level",

"multi": true,

"name": "level",

"options": [

{

"selected": true,

"text": "All",

"value": "$__all"

},

{

"selected": false,

"text": "ERROR",

"value": "ERROR"

},

{

"selected": false,

"text": "INFO",

"value": "INFO"

},

{

"selected": false,

"text": "WARN",

"value": "WARN"

}

],

"query": "label_values(level)",

"refresh": 0,

"regex": "",

"skipUrlSync": false,

"sort": 0,

"tagValuesQuery": "",

"tags": [],

"tagsQuery": "",

"type": "query",

"useTags": false

}

]

},

"time": {

"from": "now-24h",

"to": "now"

},