What is an exit strategy and why do you need one?

The cloud is everywhere these days; there is no doubt about that. Everyone either has made or is about to make an important decision: which cloud provider to choose?

For enterprise solutions, the first thought goes to the big players: Amazon, Microsoft, Google. They provide both private and public cloud solutions using their own set of APIs, command-line tools and of course pricing.

It is very easy to get in, but is getting out just as easy? All your services may already benefit from great tools and services shared by your provider. They are awesome tools, but specific to that company. Does the AWS CLI work with Azure? Not really.

What matters is the scale of the infrastructure you have to manage. The software can be optimized up to a point, and staying with the provider may still be a viable choice. Resources can be served through a CDN to better manage bandwidth and storage costs. But what can be done if the provider increases its prices? How can this be mitigated?

The exit strategy

This is why you need an exit strategy: a plan to move all your resources safely, and ideally without any interruption, from one data center to another, or even to migrate from one provider to another. The reasons for the change may vary widely: a pricing change, network problems, high latency, or even a change in the law. Any of these may push your company against the wall. It is fine when you are prepared, but how do you prepare?

It may seem paranoid or like catastrophic thinking, but in reality it is not so uncommon. Even the worst case, a provider shutting down, has happened a lot lately. It is especially visible among cloud storage startups. CloudMine, a $15 million startup, filed for bankruptcy last year. A few years ago, the same thing happened to Nirvanix and Megacloud. Nobody expected that, and a lot of companies have faced the same problem: how can we safely move all the data if everything can disappear in 2 weeks?

Does it mean AWS will go down tomorrow? Probably not, but would you bet that it will still be there in 10 years? A few years ago nobody had even heard about Alibaba Cloud, and now they have 19 datacenters worldwide. The world is moving so fast that nobody can say what tomorrow brings.

How to mitigate the problem?

So we have established what the problem is. Now let’s move to the solutions. In the following paragraphs, I will briefly paint a picture of what an exit strategy consists of and what may help you move towards one.

One of them is to use a platform like Cloud Foundry or Kubernetes, which can enable you to run your services on any cloud. All the big names offer some kind of hosted Kubernetes solution: Amazon EKS, Microsoft AKS and Google GKE. Moving workloads from one Kubernetes deployment to another, even a privately hosted one, is easy.

This may not be enough though. Sometimes you have more than containers deployed. The crucial part then is to have your infrastructure defined as configuration. Consider whether the way you deploy your platform to IaaS is really transferable. Do you use provider-specific databases, storage or services? Maybe you should replace some solutions with more universal ones?

The next part is to make sure the services you write, deploy and configure are truly cloud-native. Are they really portable, so that you can take the same source code or Docker image and run it on different infrastructure? Are all external services loosely coupled and bound to your application so you can easily exchange them? Are all your microservice platforms independent?
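To make the idea of loosely coupled external services more concrete, here is a minimal Python sketch. It assumes a hypothetical `BlobStore` interface and an in-memory backend invented for illustration; none of these names come from a real SDK. In practice, each provider would get its own small adapter behind the same interface.

```python
from abc import ABC, abstractmethod


class BlobStore(ABC):
    """Hypothetical provider-neutral storage interface (not a real library API)."""

    @abstractmethod
    def put(self, key: str, data: bytes) -> None:
        ...

    @abstractmethod
    def get(self, key: str) -> bytes:
        ...


class InMemoryBlobStore(BlobStore):
    """Stand-in backend; a real one would wrap a provider's SDK."""

    def __init__(self) -> None:
        self._items = {}

    def put(self, key: str, data: bytes) -> None:
        self._items[key] = data

    def get(self, key: str) -> bytes:
        return self._items[key]


def save_report(store: BlobStore, name: str, body: str) -> str:
    """Application code depends only on the interface, never on a provider."""
    key = f"reports/{name}.txt"
    store.put(key, body.encode("utf-8"))
    return key
```

With this shape, switching providers means writing one new adapter class, while `save_report` and the rest of the application stay untouched.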

Last but not least, back up everything. Really, EVERYTHING. Not just all your data, but also the configuration of services, infrastructure, and platforms. If you can restore everything from the ground up to a working platform in 24 hours, you are doing better than most of your competitors.

So why do I need one?

Avoiding provider lock-in may not be easy, especially when your company has just started to use AWS or Azure. You may not feel very comfortable creating an exit strategy for different providers, or you may simply not know where to start. There are solutions, like Grape Up’s Cloudboostr, that manage backups and multi-cloud interoperability out of the box. Using this kind of platform may save you a lot of pain.

An exit strategy gives you a lot of freedom. A new, small cloud provider enters the market and offers a very competitive price? You can move all your services to their infrastructure. This is especially attractive given that small cloud providers can be more flexible and more willing to adapt to their clients’ needs.

An exit plan gives safety, freedom, and security. Do not think of it as optional. The whole world is moving toward multi-cloud and hybrid cloud. According to Forbes, this is what will matter most in the cloud ecosystem in 2019. Do you want to stay behind? Or are you already prepared for the worst?

Automating your enterprise infrastructure. Part 1: Introduction to cloud infrastructure as code (AWS Cloud Formation example)

This is the first article of the series that presents the path towards automated infrastructure deployment. In the first part, we focus on what Infrastructure as Code actually means and its main concepts, and gently fill you in on AWS Cloud Formation. In the next part, we get some hands-on experience building and spinning up Enterprise Level Infrastructure as Code.

With a DevOps culture becoming a standard, we face automation everywhere. It is an essential part of our daily work to automate as much as possible. It simplifies and shortens our daily duties, which de facto leads to cost optimization. Moreover, respected developers, administrators, and enterprises rely on automation because it reduces the likelihood of human error (which, by the way, takes 2nd place when it comes to security breach causes).

Additionally, our infrastructure gets more and more complicated as we evolve towards cloud-native and microservice architectures. That is why Infrastructure as Code (IaC) emerged. It’s an answer to the growing complexity of our systems.

What you’ll find in this article:

- We introduce you to the IaC concept - why do we need it?

- You’ll get familiar with the AWS tool for IaC: Cloud Formation

Why do we need to automate our enterprise infrastructure?

Let’s start with two short stories. Close your eyes and imagine this:

Sunny morning, your brand new startup service is booming. A surge of dollars flows into your bank account. The developers have built a nice microservice-oriented architecture and configured the AWS infrastructure, all pretty and shiny. Suddenly, you receive a phone call from someone who says that Amazon's cleaning lady slipped in one of the AWS data centers and fell on a computing rack, so the whole Availability Zone went down. Your service is down, users are unhappy.

You tell your developers to recreate the infrastructure in a different data center as fast as they can. Well, it turns out that it’s not possible as fast as you would wish. Last time, it took them a week to spin up the infrastructure, which consists of many parts… you’re doomed.

The story is an example of Disaster Recovery, or rather a lack of it. No one thought that anything might go wrong. But as Murphy’s law says: anything that can go wrong will go wrong.

The other story:

As a progressive developer, you’re learning bleeding-edge cloud technologies to keep up with your employer’s changing requirements. You decided to use AWS. Following Michal's tutorial, you happily created your enterprise-level infrastructure. After a long day, you cheerfully lie down in bed. The horror begins when you check your bank account at the end of the month. It seems that Amazon charged you for the resources you didn’t delete.

You think these scenarios are unreal? Get to know these stories:

How do you avoid these scenarios? The simple answer to that is IaC.

Infrastructure as Code

Infrastructure as Code is a way to create a recipe for your infrastructure. Normally, a recipe consists of two parts: ingredients and directions/method on how to turn ingredients into the actual dish. IaC is similar, except the narration is a little bit different.

In practice, IaC says:

Keep your IaC scripts (infrastructure components definition) right next to your application code in the Git repository. Think about those definitions as simple text files containing descriptions of your infrastructure. In comparison to the metaphor above, IaC scripts (infrastructure components definitions) are ingredients.

IaC also tells you this:

Use or build tools that will seamlessly turn your IaC scripts into actual cloud resources. So translating that: use or build tools that will seamlessly turn your ingredients (IaC scripts) into a dish (cloud resources).

Nowadays, most IaC tools do the infrastructure provisioning for you and keep it idempotent. So, you just have to prepare the ingredients. Sounds cool, right?
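If "idempotent" sounds abstract, the following Python sketch may help. It is only an illustration under stated assumptions: the `cloud` dictionary is a hypothetical stand-in for a provider's real state, and `ensure_bucket` is an invented helper, not part of any IaC tool.

```python
def ensure_bucket(cloud: dict, name: str, region: str) -> bool:
    """Create the bucket only if it is missing; return True if anything changed."""
    desired = {"region": region}
    if cloud.get(name) == desired:
        return False       # already in the desired state: do nothing
    cloud[name] = desired  # create (or correct) the resource
    return True


cloud = {}  # hypothetical stand-in for the provider's real state
first = ensure_bucket(cloud, "assets", "eu-west-1")   # changes the state
second = ensure_bucket(cloud, "assets", "eu-west-1")  # changes nothing
```

Running the same definition twice leaves the infrastructure exactly as it was after the first run, which is the property that makes re-applying IaC scripts safe.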

Technically speaking, IaC states that, similarly to the automated application build & deployment processes and tools, we should have processes and tools targeted at automated infrastructure deployment.

An important thing to note here is that the approach described above nudges you towards GitOps and trunk-based CI/CD. It is not a coincidence that these concepts are often listed next to one another. Ultimately, this is a big part of what DevOps is all about.

Still not sure how IaC is beneficial to you? See this:

During the HacktOberFest conference, Michal set up the infrastructure manually, live during his lecture. It took him around 30 minutes, even though Michal is an experienced player.

Using Cloud Formation scripts, the same infrastructure is up and running in ~5 minutes, and we don’t have to watch over the script while it is being processed. We can just fire and forget, then go have a coffee for the remaining 4 minutes and 50 seconds.

To sum up:

30/5 = 6

Your infrastructure boots up 6 times faster and you have some extra free time. Ultimately, it boils down to the question of whether you can afford to waste that time.

With that being said, we can clearly see that IaC is the foundation on top of which enterprises may implement:

- Highly available systems

- Disaster recovery

- Predictable deployments

- Faster time to production

- CI/CD

- Cost optimization

Note that IaC is just a guideline, and IaC tools are just tools that enable you to achieve the aforementioned goals faster and better. No tool does the actual work for you.

Regardless of your specific needs, whether you are building enterprise infrastructure and want HA and DR or just deploying your first application to the cloud and want to reduce its cost, IaC is beneficial for you.

Which IaC tool to use?

There are many IaC tools on the market, and each claims to be the best one. Just for AWS deployment automation, we could go with Terraform, AWS Cloud Formation, Ansible, and many more. Which one to use? There is no straight answer; as always in IT, it depends. We recommend doing a few PoCs, trying out various tools, and then deciding which one fits you best.

How do we do it? Cloud Formation

As mentioned above, we need to transcribe our infrastructure into code. So, how do we do it?

First, we need a tool for that. So there it is, the missing piece of Enterprise Level AWS Infrastructure: Cloud Formation. It’s an AWS-native IaC tool commonly used to automate infrastructure deployment.

Simply put, AWS Cloud Formation scripts are simple text files containing definitions of AWS resources that your infrastructure utilizes (EC2, S3, VPC, etc.). In Cloud Formation these text files are called Templates.

Well… OK, Cloud Formation is actually a little bit more than that. It’s also an AWS service that accepts CF scripts and orchestrates AWS to spin up all of the resources you requested in the right order (put simply, it automates the clicking in the console). Besides, it gives you live insight into the status of the requested resources.
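What "in the right order" means can be sketched in a few lines of Python. This is only an illustration of dependency ordering, not a description of how the AWS service is implemented internally; the resource names and the `depends_on` mapping are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical resources mapped to the resources they depend on.
depends_on = {
    "vpc": set(),
    "subnet": {"vpc"},
    "security_group": {"vpc"},
    "database": {"subnet", "security_group"},
    "load_balancer": {"subnet"},
}

# Dependencies come first when creating resources...
creation_order = list(TopologicalSorter(depends_on).static_order())
# ...and last when tearing them down.
deletion_order = list(reversed(creation_order))
```

The VPC always ends up first in `creation_order`, and the database is only created once both its subnet and its security group exist.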

Cloud Formation follows the notion of declarative infrastructure definitions. In contrast to an imperative approach, in which you say how to provision the infrastructure, with a declarative approach you just specify the expected result. The knowledge of how to spin up the requested resources lies on the AWS side.
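The declarative idea can be illustrated with a small Python sketch: you describe the desired state, and a tool works out the actions needed to get there. The `plan` function and the resource dictionaries are hypothetical and heavily simplified; a real tool tracks far more detail.

```python
def plan(desired: dict, actual: dict) -> list:
    """Compute the actions that move `actual` towards `desired`."""
    actions = []
    for name, spec in sorted(desired.items()):
        if name not in actual:
            actions.append(("create", name))
        elif actual[name] != spec:
            actions.append(("update", name))
    # Anything that exists but is no longer declared gets removed.
    for name in sorted(actual.keys() - desired.keys()):
        actions.append(("delete", name))
    return actions


desired = {"web": {"size": "small"}, "db": {"size": "large"}}
actual = {"web": {"size": "tiny"}, "old": {"size": "small"}}
actions = plan(desired, actual)
```

Here the user never writes "create db, resize web, delete old"; those steps are derived from the difference between the two states.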

If you followed Michal Kapiczynski’s tutorials, the Cloud Formation scripts presented below are simply all of his hard work written down as a ~500-line YAML file that you can keep in the repository right next to your application.

Note: Further reading assumes that you have either read Michal’s articles or have basic knowledge of AWS.

Enterprise Level Infrastructure Overview

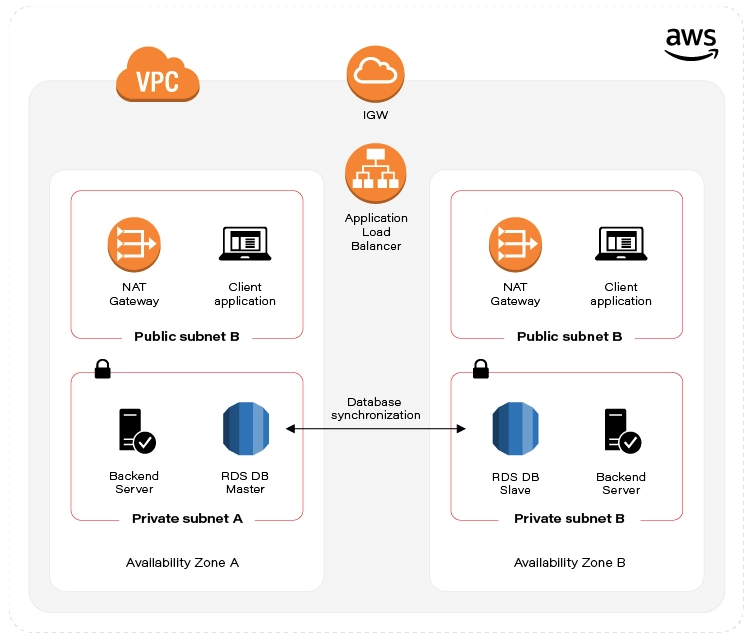

Enterprise Level infrastructure comes with many expectations. For our use case, we’ll provide High Availability by deploying our infrastructure in two separate AWS Data Centers (Availability Zones), and data redundancy through database replication. The picture presented above visualizes the target state of our Enterprise Level Infrastructure.

TL;DR: If you’re here just to see the finished Cloud Formation script, please go ahead and visit this GitHub repository.

We've decided to split our infrastructure setup into two parts (scripts) called Templates. The first part includes the AWS resources necessary to construct a network stack. The second collects application-specific resources: virtual machines, a database, and a load balancer. In Cloud Formation nomenclature, each individual set of tightly related resources is called a Stack.

A Stack usually contains all the resources necessary to implement the planned functionality. It can consist of a VPC, Subnets, EC2 instances, Load Balancers, etc. This way, we can spin up and tear down all of the resources at once with just one click (or one CLI command).

Each Template can be parametrized. To achieve easy scaling capabilities and disaster recovery, we’ll introduce the Availability Zone parameter. It will allow us to deploy the infrastructure in any AWS data center all around the world just by changing the parameter value.
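To give a feel for what a parametrized Template looks like, here is a minimal sketch that builds one as a Python dictionary and serializes it to JSON, which Cloud Formation accepts alongside YAML. The logical names (`Vpc`, `PublicSubnet`), the parameter name, and the CIDR ranges are illustrative assumptions and are not taken from the scripts discussed in this series.

```python
import json


def network_template() -> dict:
    """Build a tiny Cloud Formation-style template with one parameter."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Parameters": {
            # Illustrative parameter name; the series' scripts may differ.
            "AvailabilityZone": {"Type": "AWS::EC2::AvailabilityZone::Name"},
        },
        "Resources": {
            "Vpc": {
                "Type": "AWS::EC2::VPC",
                "Properties": {"CidrBlock": "10.0.0.0/16"},
            },
            "PublicSubnet": {
                "Type": "AWS::EC2::Subnet",
                "Properties": {
                    "VpcId": {"Ref": "Vpc"},
                    "CidrBlock": "10.0.1.0/24",
                    # The zone is not hard-coded; it comes from the parameter.
                    "AvailabilityZone": {"Ref": "AvailabilityZone"},
                },
            },
        },
    }


template_json = json.dumps(network_template(), indent=2)
```

Because the subnet refers to the parameter rather than to a fixed zone, the same file can be reused for a different zone by supplying a different value at deployment time.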

As you will see in the second part of the guide, the Cloud Formation scripts include a few extra resources in comparison to what was originally shown in Michal’s articles. That’s because AWS creates these resources automatically for you under the hood when you create the infrastructure manually. But since we’re doing the automation, we have to define these resources explicitly.

The hidden cost of overengineering microservices: How we cut cloud costs by 82%

When microservices are overused, complexity and costs skyrocket. Here’s how we consolidated 25 services into 5 - simplifying architecture and slashing cloud spend without sacrificing stability.

It’s hard to predict exactly how microservice architecture will evolve, what pros and cons will surface, and what long-term impact it will have. Microservices can offer significant benefits — like scalability, independent deployments, and improved fault isolation — but they also introduce hidden challenges, such as increased complexity, communication overhead, and maintenance costs.

While this architectural approach brings flexibility in managing systems, prioritizing critical components, and streamlining release and testing processes, it won’t magically fix everything — architecture still needs to make sense. Applying the wrong architecture can create more problems than it solves. Poorly designed microservices may lead to inefficiencies, tight coupling in unexpected places, and operational overhead that outweighs their advantages.

Entry point: reclaiming architectural simplicity

The project we took on was an example of microservice architecture applied without tailoring it to the actual shape and needs of the system. Relatively small and simple applications were over-decoupled. Not only were different modules and domains split into separate services, but even individual layers — such as REST API, services containing business logic, and database repositories — were extracted into separate microservices. This is a classic case of solving a simple problem with a complex tool, without adapting to the context.

Our mission was to refactor the system — not just at the code level, but at the architectural level — with a primary focus on reducing the long-term maintenance costs. To achieve this, we’ve decided to retain the microservice approach, but with a more pragmatic level of granularity. Instead of 25 microservices, we consolidated the system into just 5 thoughtfully grouped services, reduced cache instances from 3 to 1 and migrated 10 databases into 5.

Consulting the system

Before making any decisions, we conducted a thorough audit of the system’s architecture, application performance, efficiency, and overall cost. Looking at the raw architectural diagram alone is rarely enough — we wanted to observe the system in action and pay close attention to key metrics. This live analysis provided critical insights into configuring the new applications to better meet the system's original requirements while reducing operational costs.

Cloud Provider access

To truly understand a system’s architecture, it’s essential to have access to the cloud provider's environment — with a wide set of permissions. This level of visibility pays off significantly. The more detailed your understanding at this stage, the more opportunities you uncover for optimization and cost savings during consolidation.

Monitoring tools access

Most systems include monitoring tools to track their health and performance. These insights help identify which metrics are most critical for the system. Depending on the use case, the key factor might be computing power, memory usage, instance count, or concurrency. In our case, we discovered that some microservices were being unnecessarily autoscaled. CPU usage was rising not because of a lack of resources, but because requests were accumulating in the next microservices in the chain, which performed heavy calculations and interacted with external APIs. Understanding these patterns enabled us to make informed decisions about application container configurations and autoscaling strategies.

Refactoring, consolidating, and optimizing cloud architecture

We successfully consolidated 25 microservices into 5 independent, self-sufficient applications, each backed by one of 5 standardized databases (down from a previously fragmented set of 10), and moved to a single cache instance instead of 3. Throughout this transformation, we stuck to a core refactoring principle: system inputs and outputs must remain unchanged. Internally, however, architecture and data flow were redesigned to improve efficiency and maintainability.

We carefully defined domain boundaries to determine which services could be merged. In most cases, previously separated layers (REST proxies, service logic, and repositories) were brought together in a unified application within a single domain. Some applications required database migrations, resulting in consolidated databases structured into multiple schemas to preserve legacy boundaries.

Although we estimated resource requirements for the new services, production behavior can be unpredictable — especially when pre-launch workload testing isn't possible. To stay safe, we provisioned a performance buffer to handle unexpected spikes.

While cost reduction was our main goal, we knew we were dealing with customer-facing apps where stability and user experience come first. That’s why we took a safe and thoughtful approach — focusing on smart consolidation and optimization without risking reliability. Our goal wasn’t just to cut costs, but to do it in a way that also improved the system without impacting end-users.

Challenges and risks of architecture refactoring

Limited business domain knowledge

It’s a tough challenge when you're working with applications and domains without deep insight into the business logic. On one hand, it wasn’t strictly required since we were operating on a higher architectural level. But every time we needed to test and fix issues after consolidation, we had to investigate from scratch — often without clear guidance or domain expertise.

Lack of testing opportunities

In maintenance-phase projects, it's common that dedicated QA support or testers with deep system knowledge aren’t available — which is totally understandable. At this point, we often rely on the work done by previous developers: verifying what types of tests exist, how well they cover the code and business logic, and how effective they are at catching real issues.

Parallel consolidation limitations

The original system’s granularity made it difficult for more than one developer to work on consolidating a single microservice simultaneously. Typically, each domain was handled by one developer, but in some cases, having multiple people working together could have helped prevent issues during such a complex process.

Backward compatibility

Every consolidated application had to be 100% backward-compatible with the pre-consolidation microservices to allow for rollbacks if needed. That meant we couldn’t introduce any breaking changes during the transition, which added extra pressure to get things right the first time.
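One way to check this kind of backward compatibility is to replay the same requests against the old and the new implementation and compare the responses. The Python sketch below is a simplified illustration of that idea, not the tooling used in the project; both pricing functions and the recorded requests are hypothetical stand-ins.

```python
def legacy_price(request: dict) -> dict:
    """Stand-in for the old chain of microservices (hypothetical)."""
    return {"total": request["qty"] * request["unit_price"], "currency": "EUR"}


def consolidated_price(request: dict) -> dict:
    """Stand-in for the consolidated application (hypothetical)."""
    total = request["qty"] * request["unit_price"]
    return {"total": total, "currency": "EUR"}


def find_mismatches(requests, old, new):
    """Return every request for which the two implementations disagree."""
    return [r for r in requests if old(r) != new(r)]


recorded_requests = [
    {"qty": 1, "unit_price": 10},
    {"qty": 3, "unit_price": 7},
    {"qty": 0, "unit_price": 99},
]
mismatches = find_mismatches(recorded_requests, legacy_price, consolidated_price)
```

An empty `mismatches` list means that, for the recorded inputs, the outputs stayed unchanged, which is exactly the guarantee a rollback depends on.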

Distributed configuration

The old system’s over-granular design scattered configuration across multiple services and a config server. Rebuilding that into a unified configuration required careful investigation to locate, align, and centralize everything in one application.

End-user impact

Since the system was customer-facing, any bug or functionality gap after consolidation could directly affect users. This raised the stakes for every change and reinforced the need for a cautious, thoughtful rollout.

Architectural refactoring comes with risks, and understanding them upfront is key to delivering both system reliability and cost efficiency.

What we gained: lower costs, higher reliability, and a sustainable system

Cloud cost reduction

After consolidation, overall cloud infrastructure costs were reduced by 82%. This was a direct result of architectural refactoring, microservices reduction, and more efficient resource usage.

Monitoring tool efficiency

The new architecture also lowered the load on external monitoring tools, leading to a drop of up to 70% in related costs.

Indirect cost savings

While we didn’t have full access to some billing metrics, we know that many tools charge based on factors like request volume, microservice count and internal traffic. Simplifying the core of the system brought savings across these areas too.

Simplified maintenance

Shrinking from 25 microservices to 5 dramatically reduced the effort required for feature development, domain-specific releases, and CI/CD pipeline management. Once we removed the facade of complexity, it became clear the system wasn’t as complicated as it seemed. Onboarding new developers is now much faster and easier — which also opens the door to rethinking how many engineers are truly needed for ongoing support.

Zero downtime deployment

Since we were working with a customer-facing system, minimizing downtime for each release was critical. By consolidating functionality into 5 clearly defined, domain-scoped applications, we made it possible to achieve zero-downtime deployments in production.

Reduced complexity

Consolidation clarified how the system works and gave developers a wider view of its components. With cohesive domains and logic housed in fewer applications, it’s now easier to follow business flows, implement efficient solutions, debug issues, and write effective tests.

---

Every decision made at a given moment usually feels like the right one — and often it is. But if something remains important over time, it’s worth revisiting that decision in light of new context and evolving circumstances. As our case clearly shows, taking the time to reevaluate can truly pay off — both literally and figuratively.

Collaboration between OEMs and cloud service providers: Driving future innovations

Collaboration between Cloud Service Providers (CSPs) and Automotive Original Equipment Manufacturers (OEMs) lies at the heart of driving innovation and progress in the automotive industry.

The partnerships bring together the respective strengths and resources of both parties to fuel advancements in software-defined vehicles and cutting-edge technologies.

This article delves into these collaborations, which represent a critical junction where automotive engineering and cloud computing technologies converge.

Why OEMs and Cloud Service Providers cooperate

CSPs are crucial in supporting the automotive industry by providing the necessary cloud infrastructure and services. This includes computing power, storage capacity, and networking capabilities to process and store the data generated by software-defined vehicles.

On the other hand, OEMs are responsible for designing and manufacturing vehicles, which heavily rely on sophisticated software systems to control various functions, ranging from safety and infotainment to navigation and autonomous driving capabilities. To seamlessly integrate software systems into the vehicles, OEMs collaborate with CSPs, leveraging the power of cloud technologies.

Collaboration between CSPs and automotive companies spans several key areas to elevate vehicle functionality and performance. These areas include:

- designing and deploying cloud infrastructure to support the requirements of connected and autonomous vehicles

- handling and analyzing the vast amounts of vehicle-generated data

- facilitating seamless communication among vehicles and with other devices and systems

- ensuring data security and privacy

- delivering over-the-air (OTA) updates swiftly and efficiently for vehicle software

- testing autonomous vehicle technology through cloud computing

Benefits of collaboration

The benefits of such collaboration are significant: continuous software innovation, improved data analysis, reduced time-to-market, cost savings, product differentiation, and a competitive edge for new entrants in the automotive industry.

Cloud services enable automotive companies to unlock new possibilities, enhance vehicle performance, and deliver a seamless driving experience for customers. Moreover, the partnership between CSPs and automotive OEMs has the potential to revolutionize transportation, as it facilitates efficient and seamless interactions between vehicles, enhancing road safety and overall convenience for drivers and passengers.

In terms of collaboration strategies, automotive OEMs have various options, such as utilizing public cloud platforms, deploying private clouds for increased data security, or adopting hybrid approaches that combine the advantages of both public and private clouds. The choice of strategy depends on each company's specific data storage and security requirements.

Real-life examples of cooperation between Cloud Service Providers and automotive OEMs

Several real-life examples demonstrate the successful collaboration between cloud service providers and automotive OEMs. It's important to note that some automotive companies collaborate with more than one cloud service provider, showcasing the industry's willingness to explore multiple partnerships and leverage different technological capabilities.

In the automotive sector, adopting a multi-cloud strategy is common but complicated due to diverse cloud usage. Carmakers employ general-purpose SaaS enterprise applications and cloud infrastructure, along with big data tools for autonomous vehicles and cloud-based resources for car design and manufacturing. They also seek to control software systems in cars, relying on cloud infrastructure for updates and data processing. Let’s have a look at how different partnerships with cloud service providers are formed depending on the various business needs.

Microsoft

Mercedes-Benz and Microsoft have joined forces to enhance efficiency, resilience, and sustainability in car production. Their collaboration involves linking Mercedes-Benz plants worldwide to the Microsoft Cloud through the MO360 Data Platform. This integration improves supply chain management and resource prioritization for electric and high-end vehicles.

Additionally, Mercedes-Benz and Microsoft are teaming up to test an in-car artificial intelligence system. This advanced AI will be available in over 900,000 vehicles in the U.S., enhancing the Hey Mercedes voice assistant for seamless audio requests. The ChatGPT-based system can interact with other applications to handle things like making restaurant reservations or purchasing movie tickets, and it will make voice commands more fluid and natural.

Renault, Nissan, and Mitsubishi have partnered with Microsoft to develop the Alliance Intelligent Cloud, a platform that connects vehicles globally, shares digital features and innovations, and provides enhanced services such as remote assistance and over-the-air updates. The Alliance Intelligent Cloud also connects cars to "smart cities" infrastructure, enabling integration with urban systems and services.

Volkswagen and Microsoft are building the Volkswagen Automotive Cloud together, powering the automaker's future digital services and mobility products, and establishing a cloud-based Automated Driving Platform (ADP) using Microsoft's Azure cloud computing platform to accelerate the introduction of fully automated vehicles.

Volkswagen Group's vehicles can share data with the cloud via Azure Edge services. Additionally, the Volkswagen Automotive Cloud will enable the updating of vehicle software.

BMW and Microsoft: BMW has joined forces with Microsoft Azure to elevate its ConnectedDrive platform, striving to deliver an interconnected and smooth driving experience for BMW customers. This collaboration capitalizes on the cloud capabilities of Microsoft Azure, empowering the ConnectedDrive platform with various services, including real-time traffic updates, remote vehicle monitoring, and engaging infotainment features.

In 2019, BMW and Microsoft announced that they were working on a project to create an open-source platform for intelligent, multimodal voice interaction.

Hyundai-Kia and Microsoft joined forces to create advanced in-car infotainment systems. The collaboration began in 2008 when they partnered to develop the next-gen in-car infotainment. Subsequently, in 2010, Kia introduced the UVO voice-controlled system, a result of their joint effort, utilizing Windows Embedded Auto software.

The UVO system incorporated speech recognition to maintain the driver's focus on the road and offered compatibility with various devices. In 2012, Kia enhanced the UVO system by adding a telematics suite with navigation capabilities. Throughout their partnership, their primary goal was to deliver cutting-edge technology to customers and prepare for the future.

In 2018, Hyundai-Kia and Microsoft announced an extended long-term partnership to continue developing the next generation of in-car infotainment systems.

Amazon

The Volkswagen Group has transformed its operations with the Volkswagen Industrial Cloud on AWS. This cloud-based platform uses AWS IoT services to connect data from machines, plants, and systems across over 120 factory sites. The goal is to revolutionize automotive manufacturing and logistics, aiming for a 30% increase in productivity, a 30% decrease in factory costs, and €1 billion in supply chain savings.

Additionally, the partnership with AWS allows the Volkswagen Group to expand into ridesharing services, connected vehicles, and immersive virtual car-shopping experiences, shaping the future of mobility.

The BMW Group has built a data lake on AWS, processing 10 TB of data daily and deriving real-time insights from the vehicle and customer telemetry data. The BMW Group utilizes its Cloud Data Hub (CDH) to consolidate and process anonymous data from vehicle sensors and various enterprise sources. This centralized system enables internal teams to access the data to develop customer-facing and internal applications effortlessly.

Rivian, an electric vehicle manufacturer, runs powerful simulations on AWS to reduce the need for expensive physical prototypes. By leveraging the speed and scalability of AWS, Rivian can iterate and optimize its vehicle designs more efficiently.

Moreover, AWS allows Rivian to scale its capacity as needed. This is crucial for handling the large amounts of data generated by Rivian's Electric Adventure Vehicles (EAVs) and for running data insights and machine learning algorithms to improve vehicle health and performance.

Toyota Connected, a subsidiary of Toyota, uses AWS for its core infrastructure on the Toyota Mobility Services Platform. AWS enables Toyota Connected to handle large datasets, scale to more vehicles and fleets, and improve safety, convenience, and mobility for individuals and fleets worldwide. Using AWS services, Toyota Connected managed to handle an 18-fold increase in traffic volume.

Back in April 2019, Ford Motor Company, Autonomic, and Amazon Web Services (AWS) joined forces to enhance vehicle connectivity and mobility experiences. The collaboration aimed to revolutionize connected vehicle cloud services, opening up new opportunities for automakers, public transit operators, and large-scale fleet operators.

During the same period, Ford collaborated with Amazon to enable members of Amazon's loyalty club, Prime, to receive package deliveries in their cars, providing a secure and convenient option for Amazon customers.

Honda and Amazon have collaborated in various ways. One significant collaboration is the development of the Honda Connected Platform, which was built on Amazon Web Services (AWS) using Amazon Elastic Compute Cloud (Amazon EC2) in 2014. This platform serves as a data connection and storage system for Honda vehicles.

Another collaboration involves Honda migrating its content delivery network to Amazon CloudFront, an AWS service. This move has resulted in cost optimization and performance improvements.

Stellantis and Amazon have announced a partnership to introduce customer-centric connected experiences across many vehicles. Working together, Stellantis and Amazon will integrate Amazon's cutting-edge technology and software know-how throughout Stellantis' organization. This will encompass various aspects, including vehicle development and the creation of connected in-vehicle experiences.

Furthermore, the collaboration will place a significant emphasis on the digital cabin platform known as STLA SmartCockpit. The joint effort will deliver innovative software solutions tailored to this platform, and the planned implementation will begin in 2024.

Kia has engaged in two collaborative efforts with Amazon. Firstly, they have integrated Amazon's AI technology, specifically Amazon Rekognition, into their vehicles to enable advanced image and video analysis. This integration facilitates personalized driver-assistance features, such as customized mirror and seat positioning for different drivers, by analyzing real-time image and video data of the driver and the surrounding environment within Kia's in-car system.

Secondly, Kia has joined forces with Amazon to offer electric vehicle charging solutions. This partnership enables Kia customers to conveniently purchase and install electric car charging stations through Amazon's wide-ranging products and services, making the process hassle-free.

Even Tesla, the electric vehicle manufacturer, collaborated with AWS to utilize its cloud infrastructure for various purposes, including over-the-air software updates, data storage, and data analysis, until the company’s cloud account was hacked and used to mine cryptocurrency.

Google

By partnering with Google Cloud, Renault Group aims to achieve cost reduction, enhanced efficiency, flexibility, and accelerated vehicle development. Additionally, they intend to deliver greater value to their customers by continuously innovating software.

Leveraging Google Cloud technology, Renault Group will focus on developing platforms and services for the future of Software Defined Vehicles (SDVs). These efforts encompass in-vehicle software for the "Software Defined Vehicle" Platform and cloud software for a Digital Twin.

The Google Maps platform, Cloud, and YouTube will be integrated into future Mercedes-Benz vehicles equipped with their next-generation operating system, MB.OS. This partnership will allow Mercedes-Benz to access Google's geospatial offering, providing detailed information about places, real-time and predictive traffic data, and automatic rerouting. The collaboration aims to create a driving experience that combines Google Maps' reliable information with Mercedes-Benz's unique luxury brand and ambience.

Volvo has partnered with Google to develop a new generation of in-car entertainment and services. Volvo will use Google's cloud computing technology to power its digital infrastructure. With this partnership, Volvo's goal is to offer hands-free assistance within their cars, enabling drivers to communicate with Google through their Volvo vehicles for navigation, entertainment, and staying connected with acquaintances.

Ford and Google have partnered to transform the connected vehicle experience, integrating Google's Android operating system into Ford and Lincoln vehicles and utilizing Google's AI technology for vehicle development and operations. Google plans to give drivers access to Google Maps, Google Assistant, and other Google services.

Furthermore, Google will assist Ford in various areas, such as in-car infotainment systems, over-the-air updates, and the utilization of artificial intelligence technology.

Toyota and Google Cloud are collaborating to bring Speech On-Device, a new AI product, to future Toyota and Lexus vehicles, providing AI-based speech recognition and synthesis without relying on internet connectivity.

Toyota intends to utilize the vehicle-native Speech On-Device in its upcoming multimedia system. By incorporating it as a key element of the next-generation Toyota Voice Assistant, a collaborative effort between Toyota Motor North America Connected Technologies and Toyota Connected organizations, the system will benefit from the advanced technology Google Cloud provides.

In 2015, Honda and Google embarked on a collaborative effort to introduce the Android platform to cars. Through this partnership, Honda integrated Google's in-vehicle connected services into its upcoming models, with the initial vehicles equipped with built-in Google features hitting the market in 2022.

As an example, the 2023 Honda Accord midsize sedan has been revamped to include Google Maps, Google Assistant, and access to the Google Play store through the integrated Google interface.

Conclusion

The collaboration between cloud service providers and industries, such as automotive, has revolutionized the way businesses operate and leverage data. Organizations can enhance efficiency, accelerate technological advancements, and unlock valuable insights by harnessing the power of scalable cloud platforms. As cloud technologies continue to evolve, the potential for innovation and growth across industries remains limitless, promising a future of improved operations, cost savings, and enhanced decision-making processes.
