Deliver your apps to Kubernetes faster

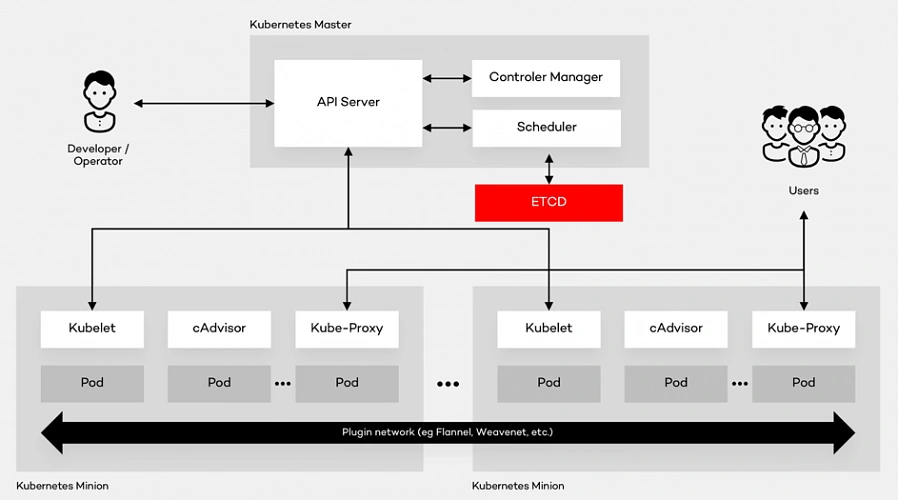

Kubernetes is currently the most popular container orchestration platform, used by enterprises, organizations and individuals to run their workloads. Kubernetes gives software developers great flexibility in how they design and architect systems and applications.

Unfortunately, its powerful capabilities come at the price of complexity, especially from the developer’s perspective. Kubernetes forces developers to understand its internals fluently before they can deploy workloads, secure them and integrate them with other systems.

Why is it so complex?

Kubernetes uses the concept of Objects, which are abstractions representing the state of the cluster. When you want to perform an operation on the cluster, e.g., deploy an application, you need to make the cluster create several Kubernetes Objects with the appropriate configuration. In the simplest scenario, deploying a web application requires you to:

- Create a deployment.

- Expose the deployment as a service.

- Configure ingress for the service.
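To make these three steps concrete, here is a minimal Python sketch that builds the corresponding Deployment, Service and Ingress objects as plain dictionaries. The app name, image reference, container port and host are hypothetical placeholders, and a real application would usually also need resource limits, health probes and TLS settings.

```python
import json


def web_app_manifests(name, image, port=8080, replicas=2, host=None):
    """Return minimal Deployment, Service and Ingress objects for one web app."""
    labels = {"app": name}  # shared label that ties the three objects together
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }
    # The Service selects the Deployment's pods by label and exposes them on port 80.
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "labels": labels},
        "spec": {"selector": labels, "ports": [{"port": 80, "targetPort": port}]},
    }
    # The Ingress routes HTTP traffic for the host to the Service.
    ingress = {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "rules": [
                {
                    "host": host or f"{name}.example.com",
                    "http": {
                        "paths": [
                            {
                                "path": "/",
                                "pathType": "Prefix",
                                "backend": {
                                    "service": {"name": name, "port": {"number": 80}}
                                },
                            }
                        ]
                    },
                }
            ]
        },
    }
    return [deployment, service, ingress]


if __name__ == "__main__":
    items = web_app_manifests("my-app", "registry.example.com/team/my-app:1.0.0")
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

Since kubectl accepts JSON as well as YAML, piping this output into kubectl apply (reading from standard input with -f -) should create all three objects at once.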

However, before you can create a deployment (i.e., command Kubernetes to run a specific number of containers with your application), you need to build a container image that includes your app and all the software components necessary to run it. “Well, that’s easy,” you say. “I just need to write a Dockerfile and build the image using docker build.” That is correct, but we are not there yet. Once you have built the image, you need to store it in a container image registry from which Kubernetes can pull it.
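The image part of that workflow boils down to two Docker CLI invocations: docker build tags the image with a fully qualified reference, and docker push uploads it to the registry. The sketch below only assembles those commands; the registry host, repository name and tag are hypothetical, and it assumes you have already authenticated with docker login.

```python
import shlex


def image_reference(registry, repository, tag):
    """Compose a fully qualified reference such as host/repository:tag."""
    return f"{registry}/{repository}:{tag}"


def build_and_push_commands(context_dir, registry, repository, tag):
    """Return the docker build and docker push commands as argument lists."""
    ref = image_reference(registry, repository, tag)
    return [
        ["docker", "build", "-t", ref, context_dir],  # build from the Dockerfile in context_dir
        ["docker", "push", ref],  # upload the tagged image to the registry
    ]


if __name__ == "__main__":
    for cmd in build_and_push_commands(".", "registry.example.com", "team/my-app", "1.0.0"):
        # Pass cmd to subprocess.run(cmd, check=True) to actually execute it.
        print(shlex.join(cmd))
```

Running it prints docker build -t registry.example.com/team/my-app:1.0.0 . followed by docker push registry.example.com/team/my-app:1.0.0.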

You might ask: why is it so complex? As a developer, I just want to write my application code and run it, without also struggling with Docker images, registries, deployments, services, ingresses and so on. But that is the price of Kubernetes’ flexibility, and it is also what makes Kubernetes so powerful.

Making deployments to Kubernetes easy

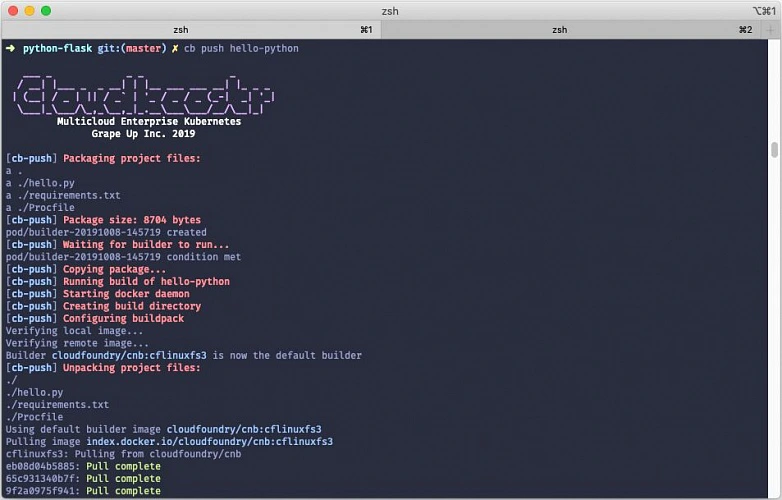

What if all the above steps were automated and combined into a single command allowing developers to deploy their app quickly to the cluster? With Cloudboostr’s latest release, that is possible!

What’s new? The Cloudboostr CLI, a new command line tool designed to simplify the developer experience when using Kubernetes. To deploy an application to the cluster, you simply execute a single command:

cb push APP_NAME

The concept of “pushing” an application to the cluster has been borrowed from the Cloud Foundry community and its famous cf push command, described by the cf push haiku:

Here is my source code

Run it on the cloud for me

I do not care how.

In Cloudboostr, the “push” command automates the app deployment process by:

- Building the container image from application sources.

- Pushing the image to the container registry.

- Deploying the image to the Kubernetes cluster.

- Configuring a service and an ingress for the app.
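What a single command buys you is the chaining of these four steps, with each one consuming the output of the previous one, most importantly the image reference. The following dry-run sketch illustrates that ordering only; it is not how the Cloudboostr CLI is implemented, and the function names and the registry path are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class PushPlan:
    """Collects a description of each step a hypothetical push would perform."""

    app: str
    registry: str
    steps: list = field(default_factory=list)


def build_image(plan, tag):
    ref = f"{plan.registry}/{plan.app}:{tag}"
    plan.steps.append(f"build {ref} from application sources")
    return ref  # later steps need the exact reference that was built


def push_image(plan, ref):
    plan.steps.append(f"push {ref} to {plan.registry}")


def deploy(plan, ref):
    plan.steps.append(f"create Deployment {plan.app} running {ref}")


def expose(plan):
    plan.steps.append(f"create Service and Ingress for {plan.app}")


def push_app(app, registry, tag="latest"):
    """Run the four steps in order; an exception in any step stops the rest."""
    plan = PushPlan(app, registry)
    ref = build_image(plan, tag)
    push_image(plan, ref)
    deploy(plan, ref)
    expose(plan)
    return plan.steps


if __name__ == "__main__":
    for step in push_app("my-app", "harbor.example.com/library", tag="1.0.0"):
        print(step)
```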

Looking under the hood

The Cloudboostr CLI uses the Cloud Native Buildpacks project to automatically detect the application type and build an OCI-compatible container image with an appropriate embedded application runtime. Cloud Native Buildpacks can autodetect the most popular application languages and frameworks, such as Java, .NET, Python, Go and Node.js.
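Cloud Native Buildpacks choose what to run during a detect phase: candidate buildpack groups are tried in a defined order, and the first group whose detection passes is used. The sketch below imitates that idea with a simple ordered list of marker files. The file names are simplified assumptions for illustration; real buildpacks apply richer checks.

```python
from pathlib import Path

# Ordered (language, marker patterns) pairs; the first match wins.
# These markers are simplified assumptions, not the buildpacks' actual rules.
DETECTORS = [
    ("java", ["pom.xml", "build.gradle", "build.gradle.kts"]),
    ("dotnet", ["*.csproj", "*.fsproj"]),
    ("go", ["go.mod"]),
    ("nodejs", ["package.json"]),
    ("python", ["requirements.txt", "pyproject.toml", "setup.py"]),
]


def detect(app_dir):
    """Return the first language whose marker file exists in app_dir, else None."""
    root = Path(app_dir)
    for language, patterns in DETECTORS:
        # Path.glob yields matching paths; any() is True if at least one exists.
        if any(any(root.glob(pattern)) for pattern in patterns):
            return language
    return None
```

For a directory containing only go.mod, detect returns "go"; adding a pom.xml makes it return "java", because Java is checked first. That is why the order of the candidates matters.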

Once the image is ready, it is automatically pushed to the Harbor container registry built into Cloudboostr. By default, Harbor is accessible from all Kubernetes clusters deployed within a given Cloudboostr installation and serves as their default registry. The image stored in the registry is then used to create a deployment in Kubernetes. The current release supports only standard Deployment objects, but support for StatefulSets is on the roadmap. As the last step, a Service object is created for the application, and a corresponding Ingress object is configured with Cloudboostr’s built-in Traefik proxy.
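Harbor groups repositories into projects, so an image stored there is addressed as host/project/repository:tag, and that reference is what ends up in the Deployment’s container spec. The hypothetical helper below splits such a reference into its parts. It assumes the host is always present and does not handle digest references (those containing @sha256).

```python
def parse_harbor_ref(ref):
    """Split host/project/repository[:tag] into its parts; the tag defaults to latest."""
    host, _, path = ref.partition("/")  # the host may include a port, e.g. host:8443
    name, tag = path, "latest"
    # The host is already split off, so any remaining colon introduces the tag.
    if ":" in path:
        name, tag = path.rsplit(":", 1)
    project, _, repository = name.partition("/")  # repositories may be nested
    return {"host": host, "project": project, "repository": repository, "tag": tag}
```

For example, parse_harbor_ref("harbor.example.com:8443/library/my-app:1.0.0") returns {'host': 'harbor.example.com:8443', 'project': 'library', 'repository': 'my-app', 'tag': '1.0.0'}.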

The whole process described above is executed in the cluster. Cloudboostr CLI triggers the creation of a temporary builder container that is responsible for pulling the appropriate buildpack, building the container image and communicating with the registry. The builder container is deleted from the cluster after the build process finishes. Building the image in the cluster eliminates the need to have Docker and pack (Cloud Native Buildpacks command line tool) installed on the local machine.
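One way to get a short-lived builder that cleans up after itself is a Kubernetes Job with ttlSecondsAfterFinished set, which lets the TTL controller delete the finished Job together with its Pod. The sketch below shows such an object. The article does not say whether Cloudboostr uses a Job, a bare Pod or another mechanism, the builder image and its arguments are hypothetical placeholders, and uploading the application sources into the builder is left out.

```python
import json


def builder_job(app, builder_image, output_ref, ttl_seconds=60):
    """Return a batch/v1 Job that runs a single image build and is then garbage-collected."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        # generateName gives every build a unique name (use kubectl create, not apply).
        "metadata": {"generateName": f"{app}-build-", "labels": {"app": app}},
        "spec": {
            "backoffLimit": 0,  # a failed build is reported, not retried
            # Delete the Job and its Pod this many seconds after it succeeds or fails.
            "ttlSecondsAfterFinished": ttl_seconds,
            "template": {
                "spec": {
                    "restartPolicy": "Never",
                    "containers": [
                        {
                            "name": "builder",
                            "image": builder_image,  # hypothetical builder image
                            "args": [output_ref],  # hypothetical: target image reference
                        }
                    ],
                }
            },
        },
    }


if __name__ == "__main__":
    job = builder_job(
        "my-app",
        "registry.example.com/tools/builder:1.0",
        "harbor.example.com/library/my-app:1.0.0",
    )
    print(json.dumps(job, indent=2))
```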

Cloudboostr CLI uses configuration defined in kubeconfig to access Kubernetes clusters. By default, images are pushed to the Harbor registry in Cloudboostr, but the CLI can also be configured to push images to an external container registry.
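A kubeconfig file lists clusters, users and contexts, and its current-context field picks the combination a client talks to. The sketch below resolves that selection from an already-parsed kubeconfig (a Python dict, so no YAML library is needed). The cluster, user and namespace names are hypothetical, and this is not the Cloudboostr CLI’s own code.

```python
def _by_name(entries):
    """Index kubeconfig list entries (clusters, contexts, users) by their name."""
    return {entry["name"]: entry for entry in entries}


def resolve_current_context(kubeconfig):
    """Return the API server, user and namespace selected by current-context."""
    context = _by_name(kubeconfig["contexts"])[kubeconfig["current-context"]]["context"]
    cluster = _by_name(kubeconfig["clusters"])[context["cluster"]]["cluster"]
    return {
        "server": cluster["server"],
        "user": context["user"],
        # kubectl falls back to the "default" namespace when none is set.
        "namespace": context.get("namespace", "default"),
    }


EXAMPLE_KUBECONFIG = {
    "apiVersion": "v1",
    "kind": "Config",
    "current-context": "dev",
    "clusters": [{"name": "dev-cluster", "cluster": {"server": "https://dev.example.com:6443"}}],
    "users": [{"name": "dev-user", "user": {"token": "REDACTED"}}],
    "contexts": [
        {"name": "dev", "context": {"cluster": "dev-cluster", "user": "dev-user", "namespace": "apps"}}
    ],
}

if __name__ == "__main__":
    print(resolve_current_context(EXAMPLE_KUBECONFIG))
```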

Why bother? (a.k.a. the benefits)

While understanding Kubernetes internals is extremely useful, especially for troubleshooting and debugging, it should not be required when you just want to run an app. Many development teams that start working with Kubernetes find it difficult, as they would prefer to operate at the application level rather than interact with containers, pods, ingresses, etc. The “cb push” command aims to help those teams by giving them a tool to deliver fast and deploy to Kubernetes efficiently.

Cloudboostr was designed to tackle common challenges that software development teams face when using Kubernetes. It became clear that we could improve the entire developer experience by giving those teams a convenient yet effective tool to migrate from Cloud Foundry to Kubernetes. A significant part of that transition was offering a feature that makes deploying apps to Kubernetes as user-friendly as it is in Cloud Foundry, allowing developers to work intuitively and with ease.

The Cloudboostr CLI significantly simplifies the process of deploying applications to a Kubernetes cluster and takes the burden of handling containers and Kubernetes-native concepts off developers’ backs. It improves overall software delivery performance and helps teams release their products to the market faster.

Grape Up guides enterprises on their data-driven transformation journey
