Generative AI

Generative AI is not hype—it's the next frontier in activation. The question is no longer whether to adopt it, but how to deploy it in ways that compound value across operations, support, and product development.

We design and deploy AI-driven assistants and platforms that augment employees, customers, and operations.

From Gen-AI copilots to automated documentation pipelines and retrieval-augmented systems — we build the AI layer that eliminates manual bottlenecks, scales expert knowledge, and transforms how your organization handles complexity.

Build copilots that support customers, engineers, and operators with natural language interaction.

Automate the creation and retrieval of technical knowledge, reducing time-to-answer and increasing efficiency.

Use GenAI to generate and maintain service manuals, technical docs, and regulatory reports.

Deliver scalable, 24/7 support experiences driven by customer touchpoints and telemetry data - powered by GenAI.

Implement advanced retrieval-augmented generation (RAG) and agent frameworks tailored for automotive use cases.

Deploy enterprise-grade GenAI platforms that integrate into OEM workflows with security and compliance in mind.

Learn how we help our customers tackle their challenges

Explore how we redefine industry standards through innovation.

How two automotive OEMs turned regulatory pressure into business opportunity.

Beyond compliance

Data Act compliance isn't about checking boxes, but about fundamentally reimagining how automotive data creates value.

Breaking the 90-day decision cycle

How a global automotive leader achieved real-time insights with Gen AI.

Production-ready AI platform

80% less code while retaining high quality and testability

24 weeks became 5: When AI transforms legacy modernization

Not sure how to turn vehicle data into business value? Let's map out your options.

Get in touch for tailored solutions and expert guidance.

Insights on automotive innovation

Exploring the future of automotive technology

Generative AI for connected cars: Solution-oriented chatbots for personalized user support

Generative AI is becoming a major player in automotive innovation. The market was valued at USD 480.22 million in 2024, and it’s expected to grow to USD 3,900.03 million by 2034, a steady compound annual growth rate of 23.3%. Moreover, by 2025, the global automobile sector is projected to invest $11.1 billion in cognitive and AI technologies. These numbers show how quickly the industry is picking up on this technology’s potential.
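As a quick sanity check, the quoted growth rate follows from the two market figures. The standard compound annual growth rate (CAGR) formula applies over the ten years from 2024 to 2034. The Python sketch below only re-derives the article's own numbers:

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1

# Market figures quoted above, in USD million (2024 -> 2034).
rate = cagr(480.22, 3900.03, 2034 - 2024)
print(f"{rate:.1%}")  # prints 23.3%
```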

GenAI is making its mark across various areas. From manufacturing optimization to autonomous driving, its impact is undeniable. Predictive maintenance systems identify issues early, AI-powered tools optimize vehicle development, and talking to in-car assistants is starting to feel like a scene out of a sci-fi movie.

Speaking of sci-fi, pop culture has always loved the idea of talking cars. There is K.I.T.T. (Knight Industries Two Thousand), of course, but also the Transformers and plenty of cartoon cars, such as Lightning McQueen. Is it just pure fiction? Not at all (except McQueen, for many reasons 😊)! Early attempts at smarter cars include a 2004 Honda offering voice-controlled navigation and Ford’s 2007 infotainment system. Fast forward to now, and we have a VW Golf with a GPT-based assistant that’s more conversational than ever.

But honestly, the most resourceful one is K.I.T.T.: it activates all onboard systems, diagnoses itself, and uses company resources (in one episode, K.I.T.T. withdraws money from the company bank account using an ATM). In 1982, when the show first aired, this was pure science fiction. But what about now? Is it more science or fiction? With Generative AI growing rapidly in automotive, we have to revisit that question.

Let’s break it down!

Prerequisites

Let’s assume we would like to create a solution-oriented chatbot connected with a car. By “solution-oriented,” I mean one that is really useful, able not only to change the attractive interior lighting but also to truly solve owners’ issues.

The idea is to use Generative AI, specifically a large language model (LLM), for its abilities in reasoning, problem-solving, and language processing.

Therefore, the first question is: where should the model run, in the cloud or in the car?

For the first option, you need a constant Internet connection (which is usually not guaranteed in cars). In contrast, the second option typically involves a smaller and less versatile model, and you still need a lot of resources (hardware, power) to run it. The truth lies, as usual, in between (cloud model if available, local one otherwise), but today we’ll focus on the cloud model only.
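The hybrid setup ("cloud model if available, local one otherwise") comes down to a simple routing decision. Below is a minimal Python sketch. The `ModelBackend` protocol, the connectivity check, and both stand-in backends are hypothetical placeholders, not a specific SDK:

```python
from typing import Callable, Protocol

class ModelBackend(Protocol):
    def complete(self, prompt: str) -> str: ...

class CloudModel:
    """Hypothetical stand-in for a large, cloud-hosted LLM."""
    def complete(self, prompt: str) -> str:
        return f"[cloud] {prompt}"

class LocalModel:
    """Hypothetical stand-in for a smaller, on-board model."""
    def complete(self, prompt: str) -> str:
        return f"[local] {prompt}"

def pick_backend(is_online: Callable[[], bool],
                 cloud: ModelBackend, local: ModelBackend) -> ModelBackend:
    # Prefer the more capable cloud model; fall back to the local one offline.
    return cloud if is_online() else local

backend = pick_backend(lambda: False, CloudModel(), LocalModel())
print(backend.complete("Why is my AC weak?"))  # prints [local] Why is my AC weak?
```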

The next step is to consider the user-facing layer. The perfect one is integrated into the car, isn’t it? Well, in most cases, yes, but there are some drawbacks.

The first issue is user-oriented: if you want to interact with your car while outside it, your mobile phone is probably the most convenient option (or a smartwatch, like Michael from Knight Rider). Also, infotainment systems are comprehensively tested and usually tightly sealed into cars, so introducing such a bot there is very time-consuming. Therefore, the mobile phone is our choice.

We don’t want to focus on this application today, however. Depending on the target operating system, it probably should use speech-to-text recognition and text-to-speech generation and stream data both ways for a better user experience.

The core part is the chatbot backend – a regular application connecting the frontend and the LLM. It should be able to call external APIs and use two sources of knowledge – live car data and company-owned data sources.

Basics

Let’s gather the components. There is a customer-facing layer – the mobile application; then there is our main backend application, the LLM, of course, and some services to provide data and functionalities.

The diagram above is conceptual, of course. The backend is probably cloud-hosted, too, and cloud services linked to car services form the essence of the “connected cars” pattern.

The main concept for the application is “tool calling”: the LLM’s ability to call predefined functions with structured arguments. That’s why the backend is surrounded by different services. In a perfect world, those should be separate microservices designed for different use cases. However, this architecture is not scenario-based. There is no “if-else-if” ladder or anything similar. The LLM determines how to use the tools based on its own decision-making process.

The sample conversation schema might look like the one presented below.

As you can see, the chatbot service calls the LLM, and the LLM returns the command “call function A.” Then, the service calls the function and returns the response to the LLM (not the user!).

This approach is very flexible as functions (a.k.a. tools) might execute actions and return useful data. Also, the LLM may decide to use a function based on another function result. In the case above, it can, for example, use one function to check the climate control system status and discover that it’s running in the “eco mode”. Then, it might decide to call the “set mode” function with the argument “max AC” to change the mode. After that, the LLM can return an answer to the user with a message like “It should be fixed now”.
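The loop described above can be sketched in a few lines of Python. The "LLM" here is a scripted stand-in that replays the AC scenario: check the status, switch to max AC, then answer. The tool functions and their return values are hypothetical. A real backend would call the model API and the vehicle services instead:

```python
import json
from typing import Any, Callable

# Hypothetical vehicle-facing tools; real ones would call cloud services.
def get_ac_status() -> dict:
    return {"mode": "ECO", "cabin_temp_c": 29}

def set_ac_mode(mode: str) -> dict:
    return {"ok": True, "mode": mode}

TOOLS: dict[str, Callable[..., Any]] = {
    "get_ac_status": get_ac_status,
    "set_ac_mode": set_ac_mode,
}

def scripted_llm(messages: list[dict]) -> dict:
    """Stand-in for the model: picks the next step from the tool results so far."""
    tool_results = [json.loads(m["content"]) for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"tool": "get_ac_status", "args": {}}
    if len(tool_results) == 1 and tool_results[0].get("mode") == "ECO":
        return {"tool": "set_ac_mode", "args": {"mode": "MAX AC"}}
    return {"answer": "It should be fixed now."}

def run_conversation(user_text: str, llm=scripted_llm, max_steps: int = 5) -> str:
    messages = [{"role": "user", "content": user_text}]
    for _ in range(max_steps):
        step = llm(messages)
        if "answer" in step:  # only the final answer goes to the user
            return step["answer"]
        # Tool call: execute it and feed the result back to the LLM, not the user.
        result = TOOLS[step["tool"]](**step["args"])
        messages.append({"role": "tool", "content": json.dumps(result)})
    return "Sorry, I couldn't resolve that."

print(run_conversation("My AC is ineffective! Fix it!"))  # prints It should be fixed now.
```

The `max_steps` cap keeps a model that keeps requesting tools from looping forever.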

To build such an application, all you need is to call the LLM like this (OpenAI GPT-4o example):

{
  "model": "gpt-4o",
  "messages": [
    {
      "role": "user",
      "content": "My AC is ineffective! Fix it!"
    }
  ],
  "tools": [
    {
      "type": "function",
      "function": {
        "name": "get_ac_status",
        "description": "Returns the current status of the climate control system"
      }
    },
    {
      "type": "function",
      "function": {
        "name": "set_ac_mode",
        "description": "Sets the specified mode for the climate control system",
        "parameters": {
          "type": "object",
          "properties": {
            "mode": {
              "type": "string",
              "description": "Desired mode",
              "enum": ["ECO", "NORMAL", "MAX AC"]
            }
          },
          "required": ["mode"]
        }
      }
    }
  ],
  "tool_choice": "auto"
}

As you can see, the response schema does not bother us here – the assumption is that the LLM is able to understand any reasonable response.
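When the model answers with a tool call, the backend runs the function and sends the result back in a follow-up request. In the OpenAI Chat Completions format, the assistant's tool call is echoed back. The result goes into a message with role `tool` that references the call's `id`. Its `content` is just a string, which is why the response schema matters so little.

The Python sketch below builds that message list. The call ID and the status payload are made-up values. Function names use underscores because the API accepts only letters, digits, underscores, and hyphens in them:

```python
import json

def follow_up_messages(history: list[dict], tool_call: dict, result: dict) -> list[dict]:
    """Append the assistant's tool call and the tool result to the conversation."""
    return history + [
        {"role": "assistant", "content": None, "tool_calls": [tool_call]},
        {
            "role": "tool",
            "tool_call_id": tool_call["id"],
            # Free-form string: the LLM interprets any reasonable payload.
            "content": json.dumps(result),
        },
    ]

history = [{"role": "user", "content": "My AC is ineffective! Fix it!"}]
call = {  # shape of a tool call returned by the model; the id is illustrative
    "id": "call_123",
    "type": "function",
    "function": {"name": "get_ac_status", "arguments": "{}"},
}
messages = follow_up_messages(history, call, {"mode": "ECO", "cabin_temp_c": 29})
print(messages[-1]["content"])  # prints {"mode": "ECO", "cabin_temp_c": 29}
```

The resulting `messages` list is sent again together with the same `tools` array, so the model can decide on the next step.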

Dive

The heading should really say “deep dive”, but honestly, we’re just scratching the surface today. Nevertheless, let’s dig a little deeper.

So far, we have the user-facing application and the backend service. Now, let’s make it useful.

The AC example mentioned above is perfectly valid, but how can it be achieved? Let’s say there is an API for interacting with the car’s AC. Such an API is typical for PHEVs and EVs, and some HEVs offer it too: it is what lets you turn on the AC remotely via the mobile app. However, the real value lies in the connected car itself.

There is no car IP address hardcoded in the application. Usually, there is a digital twin in the cloud (a cloud service that represents the car). The application calls the twin, and the twin notifies the vehicle. There should also be a pub/sub queue in between to handle connectivity disruptions. The security layer is also extremely important. We don’t want anybody to so much as play the radio at max volume during a quiet night ride, not to mention turning off the lights or engaging the brakes.
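The flow above can be sketched with an in-memory queue: the app talks to the twin, the twin talks to the vehicle, and the queue absorbs connectivity gaps. Everything here is a hypothetical simplification. `DigitalTwin`, the command format, and the token check stand in for a real pub/sub broker and a proper authentication and authorization layer:

```python
from collections import deque
from dataclasses import dataclass, field

@dataclass
class DigitalTwin:
    """Cloud-side representation of one vehicle (hypothetical sketch)."""
    vin: str
    authorized_tokens: set[str]
    pending: deque = field(default_factory=deque)  # stands in for a pub/sub topic
    state: dict = field(default_factory=dict)

    def send_command(self, token: str, command: dict) -> bool:
        # Security first: reject callers that are not authorized for this car.
        if token not in self.authorized_tokens:
            return False
        self.pending.append(command)  # queued even if the car is offline
        return True

    def on_vehicle_connected(self) -> list[dict]:
        """Deliver everything queued while the car was unreachable, in order."""
        delivered = []
        while self.pending:
            cmd = self.pending.popleft()
            self.state.update(cmd)  # assume the car applies it; the twin mirrors it
            delivered.append(cmd)
        return delivered

twin = DigitalTwin(vin="TESTVIN0000000001", authorized_tokens={"owner-token"})
print(twin.send_command("stranger", {"radio_volume": 100}))  # prints False
print(twin.send_command("owner-token", {"ac_mode": "MAX AC"}))  # prints True
print(twin.on_vehicle_connected())  # prints [{'ac_mode': 'MAX AC'}]
```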

Which brings us to the list of possible actions.

Let’s assume all systems in the car are somehow connected, maybe using a common bus or a more modern Ethernet-based network. Still, some actuators, such as the brakes, should be isolated from the system.

So, there is no “brake API” to stop a car. However, it may be beneficial for mechanics to execute some "dangerous" actions programmatically, e.g., to increase the pressure in the braking system without actually pressing the pedal. If this is the case, such functionalities should be accessible exclusively through a local connection without the need for digital twin integration. Therefore, we can assume there are two systems in the car – local and cloud-integrated, no matter the nature of the isolation (physical, network, or software). Let’s focus on the connected car aspect.

I believe the system should be able to change vehicle settings, even though there is a risk that the driver could be surprised by an unauthorized change in steering feel while taking a turn. This way, the chatbot can be genuinely useful and reduce support load by adjusting car settings based on the user's preferences. To avoid misuse, we can instruct the chatbot through prompt engineering to confirm each change with the user before execution and, of course, implement best-in-class security for all components. We can also allow certain operations only when the car is parked.
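Prompt engineering should not be the only safeguard. The backend can also enforce the same rules before any tool call reaches the car. The Python sketch below assumes a hypothetical policy table: some settings require the car to be parked, and every change requires explicit user confirmation:

```python
from dataclasses import dataclass

# Hypothetical policy: settings that may only change while the car is parked.
PARKED_ONLY = {"active_steering", "driver_assistance"}

@dataclass
class VehicleContext:
    parked: bool

def may_apply(setting: str, vehicle: VehicleContext, user_confirmed: bool) -> tuple[bool, str]:
    """Server-side check run before a settings tool call is forwarded to the car."""
    if not user_confirmed:
        return False, "ask the user to confirm the change first"
    if setting in PARKED_ONLY and not vehicle.parked:
        return False, f"{setting} can only be changed while parked"
    return True, "ok"

print(may_apply("active_steering", VehicleContext(parked=False), user_confirmed=True))
# prints (False, 'active_steering can only be changed while parked')
print(may_apply("wipers", VehicleContext(parked=False), user_confirmed=True))
# prints (True, 'ok')
```

The denial reason is meant to be returned to the LLM as the tool result, so it can explain the situation to the user.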

Which brings us back to the list of possible actions.

For the sake of this article, let’s assume the chatbot can change various car settings. Examples include:

- Climate control settings

- Driver assistance sensitivity and toggles for specific functions

- Navigation system settings, like route type or other function toggles

- 360 camera system settings, like brightness adjustment

- Sound system settings, like the equalizer

- Wiper settings

- Notification settings

- Active steering system settings

This list is not complete, and the best thing is that it doesn’t need to be: adding new functions (tool definition + API availability) can be part of a future OTA (over-the-air) system update.

What about reading real-time data? Should we connect to the car directly and read the status? Let’s leave this option for another article 😉 and focus on communication via the cloud.

There are two possibilities.

We can provide more tools to get data per source/component (a reminder: the LLM decides to call for data, which triggers an API call, and the LLM then processes the received response). Alternatively, we could implement a single tool, “get vehicle data,” that collects and merges all data available from all data sources.

The latter approach raises a further question: do we need a tool at all? Maybe we should inject the current state into each conversation, since having it is probably beneficial for solving all cases anyway?

Let me give the standard consultant reply to those questions.

It depends.

More data in the context means extended response time and a higher bill. Also, some cases don’t need the entire context or don’t need it at all. On the other hand, if you let the LLM decide which part of the context is necessary (which function to call), it will also affect the time and cost.

The next part is the “cost” of collecting data. Some data sources might be slow, or might consume valuable in-car resources to compute data that may not even be needed.

My advice would be to start collecting all data in the background during session creation and attach it to the session progressively as it becomes ready. Additionally, give the LLM the ability to wait for data that has not been supplied yet. This should be implemented as a function, to handle cases where the user requests a data-related action but the necessary data is not yet available.
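One way to implement this is to start every data fetch as a background task when the session is created. A "wait for data" tool then lets the LLM block on a source that is still loading. Below is a minimal Python `asyncio` sketch. The source names, delays, and the `Session` class are hypothetical:

```python
import asyncio
from typing import Optional

async def fetch_source(name: str, delay: float) -> dict:
    """Hypothetical data source; the sleep simulates a slow car or cloud API."""
    await asyncio.sleep(delay)
    return {"source": name, "ok": True}

class Session:
    def __init__(self, sources: dict[str, float]):
        # Kick off every fetch in the background as soon as the session exists.
        self.tasks = {name: asyncio.create_task(fetch_source(name, delay))
                      for name, delay in sources.items()}

    def available_context(self) -> dict:
        """Data collected so far; attach it to the next LLM request."""
        return {name: t.result() for name, t in self.tasks.items() if t.done()}

    async def wait_for_data(self, name: str, timeout: float = 5.0) -> Optional[dict]:
        """Exposed to the LLM as a tool for sources that are still loading."""
        try:
            # shield() keeps the fetch running even if this wait times out.
            return await asyncio.wait_for(asyncio.shield(self.tasks[name]), timeout)
        except asyncio.TimeoutError:
            return None

async def main() -> None:
    session = Session({"climate": 0.0, "diagnostics": 0.3})
    await asyncio.sleep(0.05)
    print(sorted(session.available_context()))  # prints ['climate']
    print(await session.wait_for_data("diagnostics"))

asyncio.run(main())
```

Returning `None` on timeout lets the LLM tell the user that the data is still unavailable, instead of failing the whole request.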

The second part is splitting the data. You can use a much smaller LLM to decide which context data is necessary to answer the current question and remove unnecessary information to keep it concise.
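The selection step can sit between the collected data and the main model. In the Python sketch below, `select_relevant` is where a small, cheap LLM would decide which context sections matter. To keep the example self-contained, a naive keyword lookup plays that role. The lookup is a hypothetical stand-in, not the article's method, and the section names are illustrative:

```python
# Hypothetical mapping from context sections to trigger words; a small LLM
# classifier would replace this in practice.
SECTION_KEYWORDS = {
    "climate": {"ac", "air", "heating", "temperature"},
    "driver_assistance": {"lane", "lka", "cruise", "assist"},
    "camera": {"camera", "parking", "rear"},
}

def select_relevant(question: str) -> set[str]:
    words = {w.strip("?!.,").lower() for w in question.split()}
    return {name for name, keys in SECTION_KEYWORDS.items() if words & keys}

def trim_context(full_context: dict, question: str) -> dict:
    """Keep only the sections the selector considers necessary."""
    wanted = select_relevant(question)
    return {k: v for k, v in full_context.items() if k in wanted}

context = {"climate": {"mode": "ECO"}, "camera": {"brightness": 3},
           "driver_assistance": {"lka": "fast"}}
print(trim_context(context, "My AC is ineffective!"))  # prints {'climate': {'mode': 'ECO'}}
```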

The last part is company data. LLMs are trained on vast amounts of text, including many manuals available on the Internet, but this is usually not sufficient: general knowledge is not enough, and your chatbot should have the car manual at its fingertips. Therefore, you need to provide it. You can execute the RAG procedure and add some content to the context for each prompt, but my advice would be to hide it behind a function, too. Then, the LLM may decide when to call it to get extra information if needed.
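Hiding retrieval behind a function makes the manual search just another tool the LLM may call. The Python sketch below uses a toy in-memory manual and simple word-overlap scoring. Both stand in for a real embedding-based vector search, and the tool name, manual snippets, and scoring are illustrative assumptions:

```python
import re

# Toy stand-in for chunks of the owner's manual.
MANUAL_CHUNKS = [
    "To restart the communication module, go to Settings > Connectivity > Cellular "
    "and select Restart module.",
    "Lane Keeping Assist sensitivity can be set to slow, medium, or fast.",
    "The rear camera brightness is adjustable only via a service setting.",
]

def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def search_manual(query: str, top_k: int = 1) -> list[str]:
    """Tool exposed to the LLM: returns the best-matching manual chunks."""
    q = tokenize(query)
    ranked = sorted(MANUAL_CHUNKS, key=lambda c: len(q & tokenize(c)), reverse=True)
    return ranked[:top_k]

# Tool definition the LLM sees (same format as the AC example).
SEARCH_MANUAL_TOOL = {
    "type": "function",
    "function": {
        "name": "search_manual",
        "description": "Searches the vehicle owner's manual; call it when the answer "
                       "depends on model-specific procedures.",
        "parameters": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
    },
}

print(search_manual("how to restart the communication module"))
```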

Use cases of Generative AI for connected cars

OK, we have all the components in place: the frontend, the backend, the model, and the toolbox. Can it be useful? As you would expect, yes!

In the examples below, I will skip the part where the LLM commands the backend to perform an action and the backend calls an API. I will also omit the process of the API response traveling back through the backend to the LLM. Additionally, I will assume that no new data is added to the context, so the LLM must decide which data it needs. Besides that, make sure that your context contains a system prompt that explains all the available possibilities to the assistant.

Here are some examples.

User: I think my Lane Keeping Assist activates too frequently. Can you check it?

Assistant: [get driver assistance settings]

Assistant: [diagnose sensors]

Assistant: Everything looks good, but I can see that the Lane Keeping Assist mode is set to “fast”. Do you want me to lower the setting to “medium”?

User: Yes, please.

Assistant: [change LKA settings]

Assistant: It should be better now.

In the example above, the user has a problem with one of the driver assistance components. The system proposes and, after confirmation, executes settings changes, so the driver doesn’t need to find a proper setting in the on-board computer.

Check another case.

User: Whenever I park the car in my garage, I can barely see anything in my rear camera. There is not much light there, but maybe you can help me in some other way?

Assistant: [get camera settings]

Assistant: I can increase the brightness of the camera, but then it might be too bright for other places. What do you think?

User: Yes, please do that.

Assistant: [change settings]

Assistant: Here you are; let me know if you want me to roll back the change.

Usually, there is no brightness setting available to the user. To adjust it, the user would need to book a service appointment, spend time at the dealership, and pay for the service. The assistant can do it in a few seconds for free.

User: There are so many notifications in the car, so I can’t hear the important ones!

Assistant: [check last notifications]

Assistant: If you find some of my notifications unnecessary, I can mute some of them. What do you think?

User: Yes, please turn off the speeding avoidance warning.

Assistant: I can’t turn it off due to legal requirements, but I can replace the sound with vibration feedback on the accelerator pedal. Do you want me to do that?

User: Sounds great!

Assistant: [adjust settings]

Assistant: It’s done. I hope you’ll be less distracted.

In this case, the assistant solves the issue of annoying notifications. Note the alternative solution suggestion and knowledge about the legal aspect. We can achieve it with proper function descriptions.
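For instance, the legal constraint and the haptic alternative can live in the tool definition itself. The model then learns them without any hard-coded dialogue. The Python sketch below shows such a definition in the same format as the AC example. The tool name, notification IDs, and wording are hypothetical:

```python
import json

SET_NOTIFICATION_TOOL = {
    "type": "function",
    "function": {
        "name": "set_notification_feedback",
        "description": (
            "Changes how a driver notification is delivered. "
            "'speeding_warning' is legally required and must never be set to OFF; "
            "if the user wants to silence it, offer HAPTIC (accelerator pedal "
            "vibration) instead."
        ),
        "parameters": {
            "type": "object",
            "properties": {
                "notification_id": {"type": "string"},
                "feedback": {"type": "string", "enum": ["SOUND", "HAPTIC", "OFF"]},
            },
            "required": ["notification_id", "feedback"],
        },
    },
}

def set_notification_feedback(notification_id: str, feedback: str) -> dict:
    # Enforce the rule server-side too, in case the model ignores the description.
    if notification_id == "speeding_warning" and feedback == "OFF":
        return {"ok": False, "error": "legally required; offer HAPTIC instead"}
    return {"ok": True, "notification_id": notification_id, "feedback": feedback}

print(json.dumps(set_notification_feedback("speeding_warning", "OFF")))
# prints {"ok": false, "error": "legally required; offer HAPTIC instead"}
```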

User: It seems my car can’t connect to the Internet.

Assistant: [check the connection] -> no connection to the car from the cloud service

Assistant: It seems your car is disconnected. Can you please check the cellular network signal icon on the infotainment main screen?

User: I can see the signal is good.

Assistant: [check last logs]

Assistant: I can see there were some issues with your connection before. Please try to open the settings in the car infotainment and restart the communication module. I’ll guide you through the process.

User: OK, what should I do?

Assistant: [check user manual]

Assistant: Go to “Settings”, then “Connectivity”, then “Cellular” and then click “Restart module”. Confirm when asked.

User: OK

Assistant: [check the connection]

Assistant: I can see the connection is restored. Can you confirm that? If not, I can schedule a service meeting for you.

User: No need; it works now!

In the last example, the assistant guides the user using logs, the manual, and real-time connection checks. Without the LLM, the user would need to visit the dealer or at least contact online support.

Final words

With modern technology, we can bring some science fiction concepts to real life. Maybe warp speed is not possible yet, but at least a car itself can give us solutions to problems that until recently could only be solved in much more expensive ways.

The beauty of it is – it’s really simple. Of course, there is a user-facing application to be made, the security to be ensured, and a bunch of functions to be implemented, but with modern connected cars, those elements are in place anyway. The tricky, novel part is a very simple application that uses the LLM as the brain power of the system.

As you can see, the “Attention Is All You Need” paper that started the LLM revolution has allowed humanity to bring to life concepts present in our culture for decades. On the other hand, would this article have ever been written if its authors hadn’t watched K.I.T.T. in their childhood? We will never know.

The foundation for AI success: How to build a strategy to increase ROAI

AI adoption is on the rise, but turning it into real business value is another story. 74% of companies struggle to scale AI initiatives, and only 26% develop the capabilities needed to move beyond proofs of concept. The real question on everyone's mind is: how to increase ROAI (return on AI investment)?

One of the biggest hurdles is proving the impact. In 2023, the biggest challenge for businesses was demonstrating AI’s usefulness in real operations. Many companies invest in this technology without a clear plan for how it will drive measurable results.

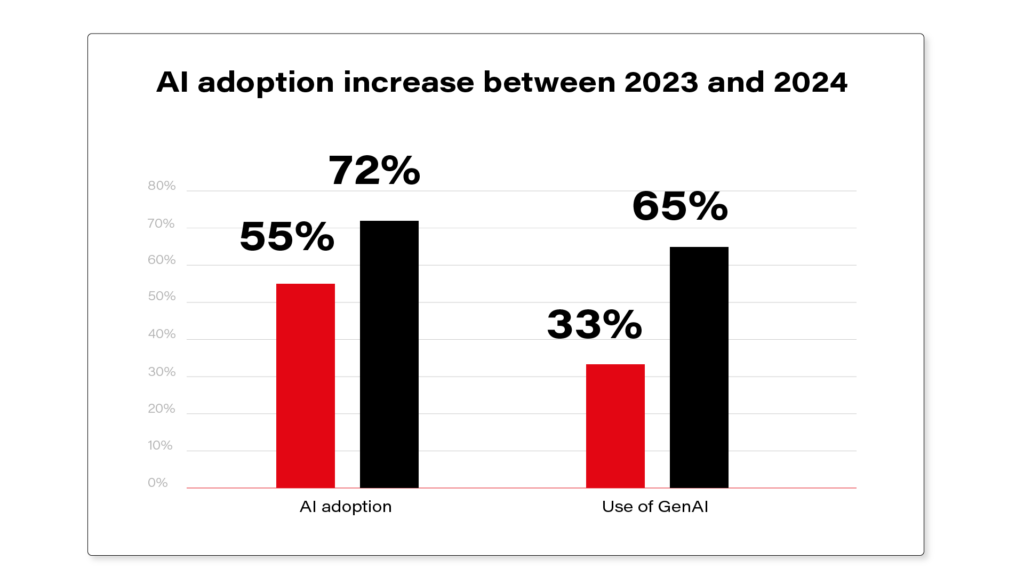

Even with these challenges, the adoption keeps growing. McKinsey's 2024 Global Survey on AI reported that 65% of respondents' organizations are regularly using Generative AI in at least one business function, nearly doubling from 33% in 2023. Businesses know its value, but making artificial intelligence work at scale takes more than just enthusiasm.

Source: https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai

That’s where the right approach makes all the difference. A holistic strategy, strong data infrastructure, and efficient use of talent can help you increase ROAI and turn technology into a competitive advantage. But you need to start with building a foundation for AI investments and implementation first.

Why AI must be aligned with business goals

Too many AI projects fail when companies focus on the technology first instead of the problem it’s meant to solve. Investing in artificial intelligence just because it’s popular leads to expensive pilots that never scale, systems that complicate workflows instead of improving them, and wasted budgets with nothing to show for it.

Start with the problem, not the technology

Before committing resources, leadership needs to ask:

- What’s the goal? Is the priority cutting maintenance costs, making faster decisions, or detecting fraud more accurately? If the objective isn’t clear, neither will the results.

- Is AI even the right solution? Some problems don’t need machine learning. Sometimes, better data management or process improvements do the job just as well, without the complexity or cost of AI.

Choosing AI use cases that deliver real value

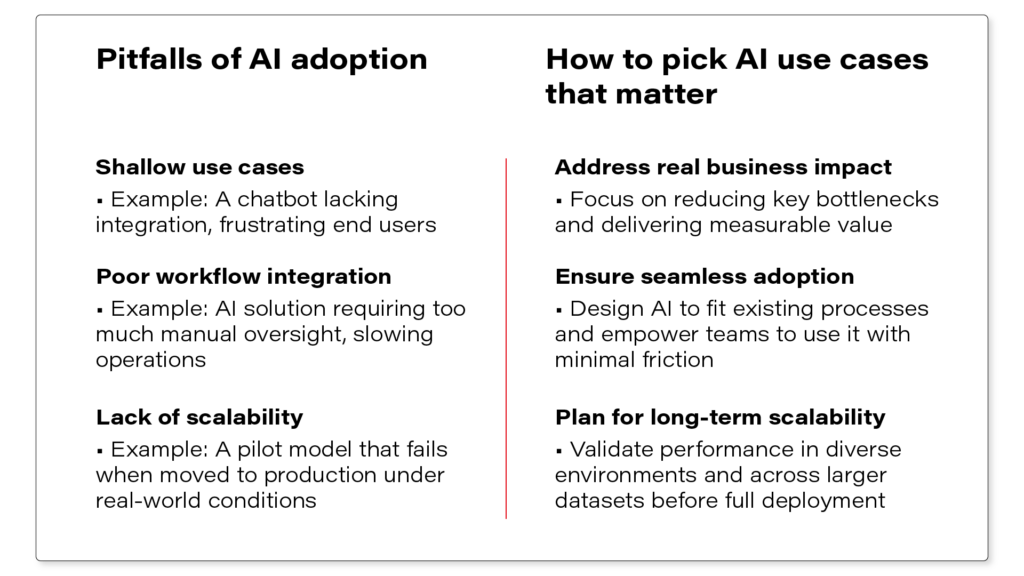

Once AI aligns with business goals, the next challenge is selecting initiatives that generate measurable impact. Companies often waste millions on projects that fail to solve real business problems, can’t scale, or disrupt workflows instead of improving them.

See which factors must align for AI to create tangible business value:

How responsible AI ties back to business results

Responsible AI protects long-term business value by creating systems that are transparent, fair, and aligned with user expectations and regulatory requirements. Organizations that take a proactive approach to AI governance minimize risks while building solutions that are both effective and trusted.

One of the biggest gaps in AI adoption is the lack of consistent oversight. Without regular audits and monitoring, models can drift, introduce bias, or generate unreliable results. Businesses need structured frameworks to keep AI reliable, adaptable, and aligned with real-world conditions. This also means actively managing ethical issues, explainability, and data security to maintain performance and trust.

As regulations evolve, compliance is no longer an afterthought. AI used in critical areas like fraud detection, risk assessment, and automated decision-making requires continuous monitoring to meet regulatory expectations. Companies that embed AI governance from the start avoid operational risks.

Another key challenge is trust. When AI-driven decisions lack transparency, scepticism grows. Users and stakeholders need clear visibility into how AI operates to build confidence. Companies that make AI decisions transparent and easy to understand improve adoption across their organization and, ultimately, increase ROAI.

Measuring AI success and proving ROAI

The real test of AI’s success is whether it improves daily operations and delivers measurable business value. When teams work more efficiently, revenue grows, and risks become easier to manage, the investment is clearly paying off.

Key indicators of AI success

Is AI reducing manual effort? Automating repetitive tasks helps employees focus on more strategic work. If delays still slow operations or fraud detection overwhelms teams with false positives, AI may not be delivering real efficiency. Faster approvals and quicker customer issue resolution indicate AI is making a difference.

Is AI improving financial outcomes? Accurate forecasting cuts waste, and AI-driven pricing boosts profit margins. If automation isn’t lowering operational costs or streamlining workflows, it may not be adding real value.

Is AI strengthening security and compliance? Fraud detection prevents financial losses when it catches real threats without unnecessary disruptions. Compliance automation eases the burden of manual oversight, while AI-driven security reduces the risk of data breaches. If risks remain high, AI may need adjustments.

To prove AI’s return on investment, companies need to establish success criteria upfront, track AI performance over time, and compare different configurations (e.g., Generative AI use cases, LLMs) to confirm the technology delivers cost savings and tangible benefits.
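Comparing configurations becomes straightforward once each one is tracked against the same baseline. The Python sketch below uses the conventional ROI formula, (benefit - cost) / cost. The configuration names and all figures are placeholders for illustration, not measured results:

```python
from dataclasses import dataclass

@dataclass
class AIConfiguration:
    name: str
    annual_cost: float     # development, infrastructure, maintenance
    annual_benefit: float  # measured savings plus attributable revenue

    @property
    def roi(self) -> float:
        return (self.annual_benefit - self.annual_cost) / self.annual_cost

# Placeholder numbers; replace them with the success criteria defined upfront.
configs = [
    AIConfiguration("large LLM", annual_cost=200_000, annual_benefit=260_000),
    AIConfiguration("small LLM + RAG", annual_cost=80_000, annual_benefit=150_000),
]
best = max(configs, key=lambda c: c.roi)
for c in configs:
    print(f"{c.name}: ROI {c.roi:.0%}")
print("best:", best.name)
```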

The hidden costs of AI initiatives and the challenge of scaling

Investing in artificial intelligence goes beyond development. Many companies focus on building and implementing models but underestimate the effort required to scale, maintain, and integrate them into existing systems. Costs accumulate over time, and without proper planning, AI projects can stall, and budgets stretch.

One of the highest ongoing costs is data. AI relies on clean, structured information, but collecting, storing, and maintaining it requires continuous effort. Over time, models need regular updates to remain accurate as well. Fraud tactics change, regulations evolve, and systems produce unreliable results without adjustments, leading to costly mistakes.

This becomes even more challenging when AI moves from a controlled pilot to full-scale implementation. A model that performs well in one department may not integrate easily across an entire organization. Expanding its use often exposes hidden costs, workflow disruptions, and technical limitations that weren’t an issue on a smaller scale.

Scaling AI successfully also requires coordination across different teams. ML engineers refine models, business teams track measurable outcomes, and compliance teams manage regulatory requirements, so these groups need to align early.

AI must also integrate with existing enterprise systems without disrupting workflows, which requires dedicated infrastructure investments. Many legacy IT environments weren’t designed for AI-driven automation, which leads to increased costs for adaptation, cloud migration, and security improvements.

Companies that navigate these challenges effectively see real gains from AI. However, aligning strategy, execution, and scaling AI efficiently isn’t always straightforward. That’s where expert guidance makes a difference.

See how Grape Up helps businesses increase ROAI

Grape Up helps business leaders turn AI from a concept into a practical tool that delivers measurable ROAI by aligning technology with real business needs.

We work with companies to define AI roadmaps, making sure every initiative has a clear purpose and contributes to strategic goals. Our team supports data infrastructure and AI integration, so new solutions fit smoothly into existing systems without adding complexity.

From strategy to execution, Grape Up helps you increase ROAI. Make technology a real business asset adapted for long-term success.

The key to ROAI: Why high-quality data is the real engine of AI success

Data might not literally be “the new oil,” but it’s hard to ignore its growing impact on companies' operations. By some estimates, the world will generate over 180 zettabytes of data by the end of 2025. Yet, many organizations still struggle to turn that massive volume into meaningful insights for their AI projects.

According to IBM, poor data quality already costs the US economy alone $3.1 trillion per year - a staggering figure that underscores just how critical proper governance is for any initiative, AI included.

On the flip side, well-prepared data can dramatically boost the accuracy of AI models, shorten the time it takes to get results, and reduce compliance risks. That’s why information quality is increasingly recognized as the biggest factor in an AI project’s success or failure and a key to ROAI.

In this article, we’ll explore why good data practices are so vital for AI performance, what common pitfalls often derail organizations, and how usage transparency can earn customer trust while delivering a real return on AI investment.

Why data quality dictates AI outcomes

An AI model’s accuracy and reliability depend on the breadth, depth, and cleanliness of the data it’s trained on. If critical information is missing, duplicated, or riddled with errors, the model won’t deliver meaningful results, no matter how advanced it is. It’s increasingly recognized that poor data quality leads to inaccurate predictions, inefficiencies, and lost opportunities.

For example, when records contain missing values or inconsistencies, AI models generate results that don’t reflect reality. This affects everything from customer recommendations to fraud detection, making AI unreliable in real-world applications. Additionally, poor documentation makes it harder to trace data sources, increasing compliance risks and reducing trust in AI-driven decisions.
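Problems like these can be measured before any training starts. The Python sketch below computes two basic indicators over a toy list of records: per-field completeness and the share of duplicate records. The field names and values are hypothetical:

```python
def completeness(records: list[dict], fields: list[str]) -> dict[str, float]:
    """Share of records in which each field is present and non-empty."""
    return {
        f: sum(1 for r in records if r.get(f) not in (None, "")) / len(records)
        for f in fields
    }

def duplicate_rate(records: list[dict]) -> float:
    """Share of records that exactly repeat an earlier record."""
    seen, dupes = set(), 0
    for r in records:
        key = tuple(sorted(r.items()))
        dupes += key in seen
        seen.add(key)
    return dupes / len(records)

records = [
    {"vin": "A1", "mileage": 12000, "region": "EU"},
    {"vin": "A2", "mileage": None, "region": "EU"},
    {"vin": "A1", "mileage": 12000, "region": "EU"},
    {"vin": "A3", "mileage": 8000, "region": ""},
]
print(completeness(records, ["vin", "mileage", "region"]))
# prints {'vin': 1.0, 'mileage': 0.75, 'region': 0.75}
print(duplicate_rate(records))  # prints 0.25
```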

The growing awareness has made data governance a top priority across industries as businesses recognize its direct impact on AI performance and long-term value.

Metrics for success: Tracking the impact of quality data on AI

Even with the right data preparation processes in place, organizations benefit most when they track clear metrics that tie data quality to AI performance. Here are key indicators to consider:

Monitoring these metrics lets organizations gain visibility into how effectively their information supports AI outcomes. The bottom line is that quality data should lead to measurable gains in operational efficiency, predictive accuracy, and overall business value. In other words - it's the key to ROAI.

However, even with strong data quality controls, many companies struggle with deeper structural issues that impact AI effectiveness.

AI works best with well-prepared data infrastructures

Even the cleanest sets won’t produce value if data infrastructure issues slow down AI workflows. Without a strong data foundation, teams spend more time fixing errors than training AI models.

Let's first talk about the people - they too are, after all, key to ROAI.

The right talent makes all the difference

Fixing data challenges is about tools as much as it is about people.

- Data engineers make sure AI models work with structured, reliable datasets.

- Data scientists refine data quality, improve model accuracy, and reduce bias.

- AI ethicists help organizations build responsible, fair AI systems.

Companies that invest in data expertise can prevent costly mistakes and instead focus on increasing ROAI.

However, even with the right people, AI development still faces a major roadblock: disorganized, unstructured data.

Disorganized data slows AI development

Businesses generate massive amounts of data from IoT devices, customer interactions, and internal systems. Without proper classification and structure, valuable information gets buried in raw, unprocessed formats. This forces data teams to spend more time cleaning and organizing instead of implementing AI in their operations.

- How to improve it: Standardized pipelines automatically format, sort, and clean data before it reaches AI systems. A well-maintained data catalog makes information easier to locate and use, speeding up development.
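A standardized pipeline is essentially a fixed sequence of small, reusable steps applied to every batch before it reaches an AI system. The Python sketch below illustrates this. The steps (lowercasing and trimming text fields, dropping records without an ID, deduplicating, sorting) and the field names are illustrative assumptions:

```python
from typing import Callable, Iterable

Record = dict
Step = Callable[[list[Record]], list[Record]]

def normalize(records: list[Record]) -> list[Record]:
    # Format: trim whitespace and lowercase all string values.
    return [{k: v.strip().lower() if isinstance(v, str) else v for k, v in r.items()}
            for r in records]

def drop_incomplete(records: list[Record]) -> list[Record]:
    # Clean: discard records that lack the primary identifier.
    return [r for r in records if r.get("id")]

def deduplicate(records: list[Record]) -> list[Record]:
    seen, out = set(), []
    for r in records:
        key = tuple(sorted(r.items()))
        if key not in seen:
            seen.add(key)
            out.append(r)
    return out

def sort_by_id(records: list[Record]) -> list[Record]:
    return sorted(records, key=lambda r: r["id"])

def run_pipeline(records: list[Record], steps: Iterable[Step]) -> list[Record]:
    for step in steps:
        records = step(records)
    return records

raw = [{"id": "B2", "status": " Active "}, {"id": "a1", "status": "ACTIVE"},
       {"id": "", "status": "active"}, {"id": "b2", "status": "active"}]
clean = run_pipeline(raw, [normalize, drop_incomplete, deduplicate, sort_by_id])
print(clean)  # prints [{'id': 'a1', 'status': 'active'}, {'id': 'b2', 'status': 'active'}]
```

Keeping each step as a separate function makes the pipeline easy to reorder, test, and reuse across data sources.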

Older systems struggle with AI workloads

Many legacy systems were not built to process the volume and complexity of modern AI workloads. Slow query speeds, storage limitations, and a lack of integration with AI tools create bottlenecks. These issues make it harder to scale AI projects and get insights when they are needed.

- How to improve it: Upgrading to scalable cloud storage and high-performance computing helps AI process data faster. Moreover, integrating AI-friendly databases improves retrieval speeds and ensures models have access to structured, high-quality inputs.

Beyond upgrading to cloud solutions, businesses are exploring new ways to process and use information.

- Edge computing moves data processing closer to where it’s generated to reduce the need to send large volumes of information to centralized systems. This is critical in IoT applications, real-time analytics, and AI models that require fast decision-making.

- Federated learning allows AI models to train across decentralized datasets without sharing raw data between locations. This improves security and is particularly valuable in regulated industries like healthcare and finance, where data privacy is a priority.
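The core idea of federated learning can be shown with federated averaging (FedAvg), one common aggregation scheme. Each site trains locally and shares only its model weights. A coordinator then averages those weights in proportion to each site's dataset size. The Python sketch below represents weights as plain lists. The site values are hypothetical, and local training is omitted:

```python
def federated_average(site_weights: list[list[float]], site_sizes: list[int]) -> list[float]:
    """Weighted average of model parameters; raw training data never leaves a site."""
    total = sum(site_sizes)
    n_params = len(site_weights[0])
    return [
        sum(w[i] * n for w, n in zip(site_weights, site_sizes)) / total
        for i in range(n_params)
    ]

# Two hypothetical sites share only their locally trained weights.
global_model = federated_average([[1.0, 2.0], [3.0, 4.0]], site_sizes=[100, 300])
print(global_model)  # prints [2.5, 3.5]
```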

Siloed data limits AI accuracy

Even when companies maintain high-quality data, access restrictions and fragmented storage prevent teams from using it effectively. AI models trained on incomplete datasets miss essential context, which in turn leads to biased or inaccurate predictions. When different departments store data in separate formats or systems, AI cannot generate a full picture of the business.

- How to improve it: Breaking down data silos allows AI to learn from complete datasets. Role-based access controls provide teams with the right level of data availability without compromising security or compliance.
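In its simplest form, role-based access control maps each role to the datasets it may read and checks that mapping on every request. A minimal Python sketch; the roles and dataset names are hypothetical:

```python
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "data_scientist": {"telemetry", "service_history"},
    "marketing_analyst": {"campaign_results"},
    "compliance_officer": {"telemetry", "service_history", "campaign_results", "audit_log"},
}

def can_read(role: str, dataset: str) -> bool:
    """Deny by default: unknown roles or datasets get no access."""
    return dataset in ROLE_PERMISSIONS.get(role, set())

def shared_view(role: str, catalog: dict[str, list]) -> dict[str, list]:
    """One central catalog (no silos), filtered per role at access time."""
    return {name: rows for name, rows in catalog.items() if can_read(role, name)}

catalog = {"telemetry": [1, 2], "campaign_results": [3], "audit_log": [4]}
print(sorted(shared_view("data_scientist", catalog)))  # prints ['telemetry']
```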

Fixing fragmented data systems and modernizing infrastructure is key to ROAI, but technical improvements alone aren’t enough. Trust, compliance, and transparency play just as critical a role in making AI both effective and sustainable.

Transparency, privacy, and security: The trust trifecta

AI relies on responsible data handling. Transparency builds trust and improves outcomes, while privacy and security keep organizations compliant and protect both customers and businesses from unnecessary risks. When these three elements align, people are more willing to share data, AI models become more effective, and companies gain an edge.

Why transparency matters

82% of consumers report being "highly concerned" about how companies collect and use their data, with 57% worrying about data being used beyond its intended purpose. When customers understand what information is collected and why, they’re more comfortable sharing it. This leads to richer datasets, more accurate AI models, and smarter decisions. Internally, transparency helps teams collaborate more effectively by clarifying data sources and reducing duplication.

Privacy and security from the start - a key to ROAI

While transparency is about openness, privacy and security focus on protecting data. Main practices include:

Compliance as a competitive advantage

Clear records and responsible data practices reduce legal risks and allow teams to focus on innovation instead of compliance issues. Customers who feel their privacy is respected are more willing to engage, while strong data practices can also attract partners, investors, and new business opportunities.

Use data as the strategic foundation for AI

The real value of AI comes from turning data into real insights and innovation - but none of that happens without a solid data foundation.

Outdated systems, fragmented records, and governance gaps hold back AI performance. Fixing these issues ensures AI models are faster, smarter, and more reliable.

Are your AI models struggling with data bottlenecks?

Do you need to modernize your data infrastructure to support AI at scale?

We specialize in building, integrating, and optimizing data architectures for AI-driven businesses.

Let’s discuss what’s holding your AI back and how to fix it.

Contact us to explore solutions tailored to your needs.
