How to choose a technological partner to develop data-driven innovation

Insurance companies, especially those focused on life and car insurance, are placing more and more emphasis in their offers on big data analytics and propositions based on driving behavior. We should expect this trend to gain ground in the future, which raises further questions. For instance, what should be taken into account when choosing a technology partner for insurance-technology-vehicle cooperation?

Challenges in selecting a technology partner

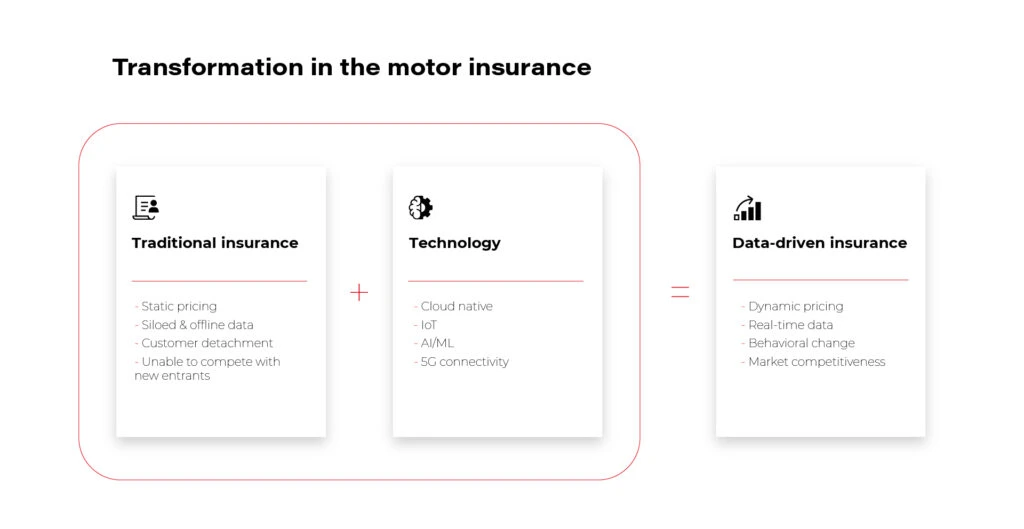

The potential of telematics insurance programs encourages auto insurers to move away from traditional car insurance and build a competitive advantage on the data they collect.

No wonder technology partners are sought to support and develop increasingly innovative projects. Such synergistic collaboration brings tangible benefits to both parties.

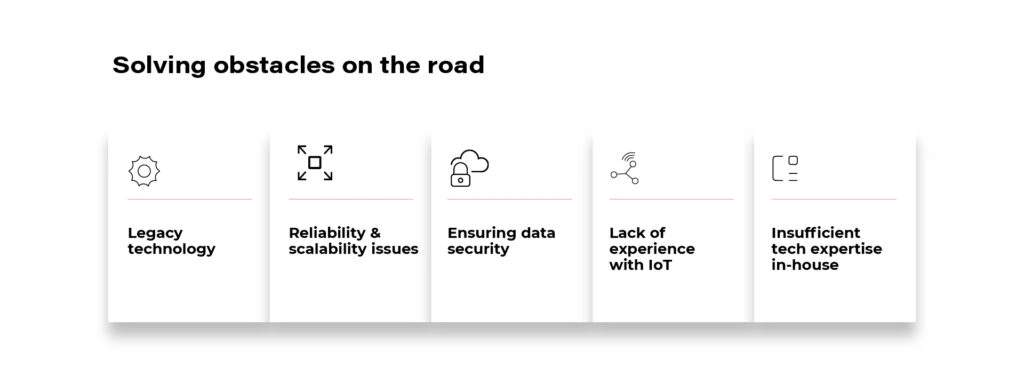

As we explained in the article How to enable data-driven innovation for the mobility insurance, the right technology partner will ensure:

- data security;

- cloud and IoT technology selection;

- the reliability and scalability of the proposed solutions.

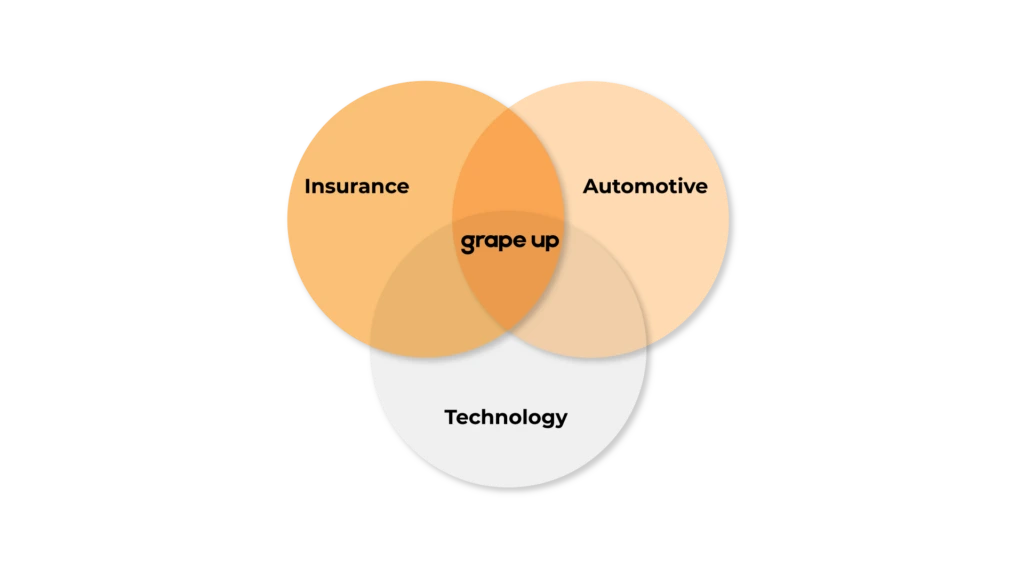

Finding such a partner, however, is not easy, because it must be a company that navigates efficiently in as many as three areas: AI/cloud technology, automotive, and insurance. You need a team of specialists who operate naturally in the software-defined vehicle ecosystem and who are familiar with the characteristics of the P&C insurance market and the challenges faced by insurance clients.

Aim for the cloud. The relevance of AI and data collection and analytics technologies

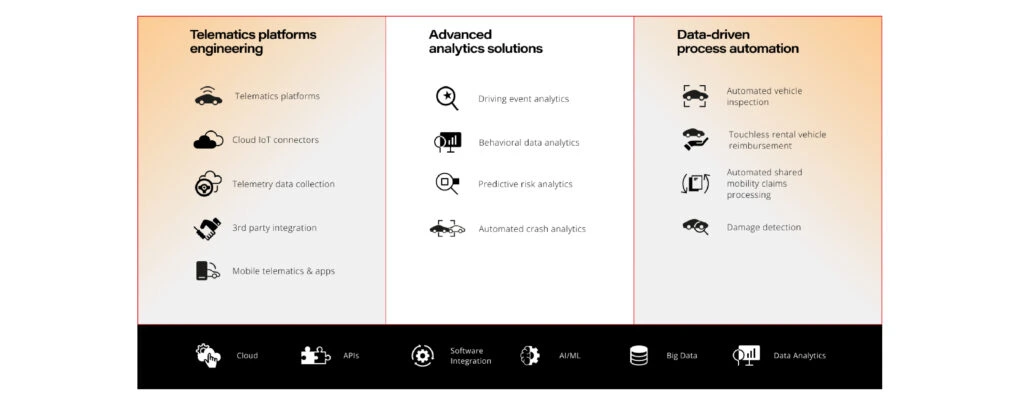

Information is the most important asset of the 21st century. The global data collection market in 2021 was valued at $1.66 billion. No service based on the Internet of Things and AI could operate without a space to collect and analyze data. Therefore, the ideal insurance industry partner must deliver proprietary, field-tested, and dependable cloud solutions. Cloud services offered these days by insurance partners include:

- cloud R&D,

- cloud and edge computing,

- system integration,

- software engineering,

- cloud platform development.

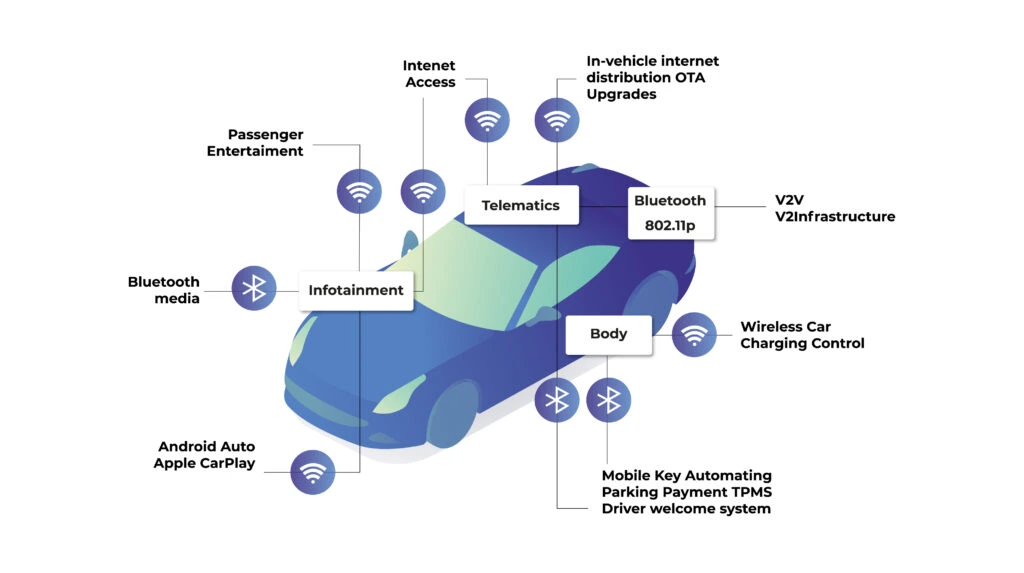

Connectivity between the edge device and the cloud must be stable and fast. Mobility devices often operate in limited connectivity conditions, so car insurance businesses should leverage multiple methods to ensure an uninterrupted connection. Dynamic switching between cellular, satellite, and Wi-Fi communications, combined with globally distributed cloud infrastructure, results in reliable transmission and low latency.
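The dynamic switching described above can be illustrated with a minimal Python sketch. It assumes a hypothetical `Link` record and a `pick_link` helper that are not part of any specific product, and the 1500 ms latency cap is an arbitrary illustrative value. The idea is only that the edge device keeps a list of channels and picks the usable one with the lowest measured latency.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Link:
    """One communication channel available to the edge device (hypothetical model)."""
    name: str
    available: bool
    latency_ms: float


def pick_link(links: list[Link], max_latency_ms: float = 1500.0) -> Optional[Link]:
    """Return the available link with the lowest latency, or None if none is usable."""
    usable = [link for link in links if link.available and link.latency_ms <= max_latency_ms]
    return min(usable, key=lambda link: link.latency_ms, default=None)


# Example state: Wi-Fi is out of range, cellular and satellite are reachable.
links = [
    Link("wifi", available=False, latency_ms=20.0),
    Link("cellular", available=True, latency_ms=80.0),
    Link("satellite", available=True, latency_ms=600.0),
]
best = pick_link(links)
print(best.name if best else "buffer locally until a link returns")
```

A real device would re-run such a selection whenever link quality changes and would buffer telemetry locally while no link is usable.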

A secure cloud platform is capable of handling an increasing number of connected devices and providing them all with the required APIs while maintaining high observability.

As a result, the data collected is precise, valid, and reliable. It provides full insight into what is happening on the road, allowing you to develop better insurance quotes. No smart data-driven automation is possible without it.

Data quality also depends on the technologies implemented inside the vehicle (which we will discuss further below) and on all intermediate devices, such as the smartphone. The capabilities of a potential technology partner must therefore reach far beyond basic IT skills and the most common technologies.

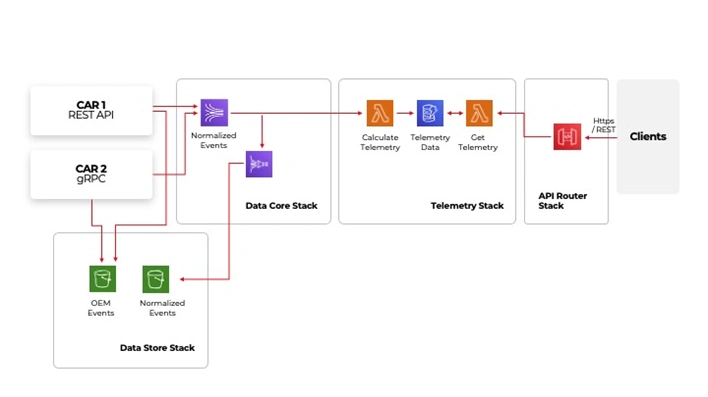

Telemetry data collection

Data acquisition and collection alone are not enough, because information about what is happening on the road and about the usage and operation of components is, in itself, just a "record on paper". To make such a project a reality, you still need to implement advanced analytical tools and telematics solutions.

Real-time data streaming from telematics devices, mobile apps, and connected car systems gives access to driving data, driver behavior analysis, and car status. It enables companies to provide insurance policies based on customer driving habits.
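As an illustration of how streamed driving data can turn into driver behavior analysis, the following Python sketch flags harsh braking in a sequence of speed readings. The `Sample` schema, the function name, and the -3.0 m/s² threshold are assumptions made for this example, not values taken from any particular telematics platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    """One telemetry reading (hypothetical schema)."""
    t_s: float        # timestamp in seconds
    speed_kmh: float  # vehicle speed in km/h


def harsh_braking_events(samples, threshold_ms2=-3.0):
    """Return timestamps where deceleration between consecutive samples reaches the threshold."""
    events = []
    for prev, cur in zip(samples, samples[1:]):
        dt = cur.t_s - prev.t_s
        if dt <= 0:
            continue  # skip out-of-order or duplicate readings
        accel = (cur.speed_kmh - prev.speed_kmh) / 3.6 / dt  # km/h converted to m/s^2
        if accel <= threshold_ms2:
            events.append(cur.t_s)
    return events


# A short trip: steady speed, one sharp slowdown, then gentle braking.
trip = [Sample(0, 50), Sample(1, 50), Sample(2, 30), Sample(3, 28)]
print(harsh_braking_events(trip))
```

In this sample trip only the drop from 50 to 30 km/h within one second crosses the assumed threshold. A production pipeline would typically apply the same kind of rule to a continuous stream and combine it with other signals such as speeding or cornering.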

Distributed AI

AI models are an integral part of modern vehicles. They predict front and rear collisions, control suspension damping based on the road ahead, and recognize road signs and lanes. Modern infotainment applications suggest routes and settings depending on driver behavior and driving conditions.

Empowering the automotive industry to build software-defined vehicles. Automotive aspect

Today, any strategy has to take modern, software-defined vehicles into account. According to Daimler AG, this can be expressed by the letters “CASE”:

- Connected.

- Autonomous.

- Shared.

- Electric.

This means the major focus will be on making cars seamlessly connected to the cloud and on supporting advancements in autonomous driving based on electric power.

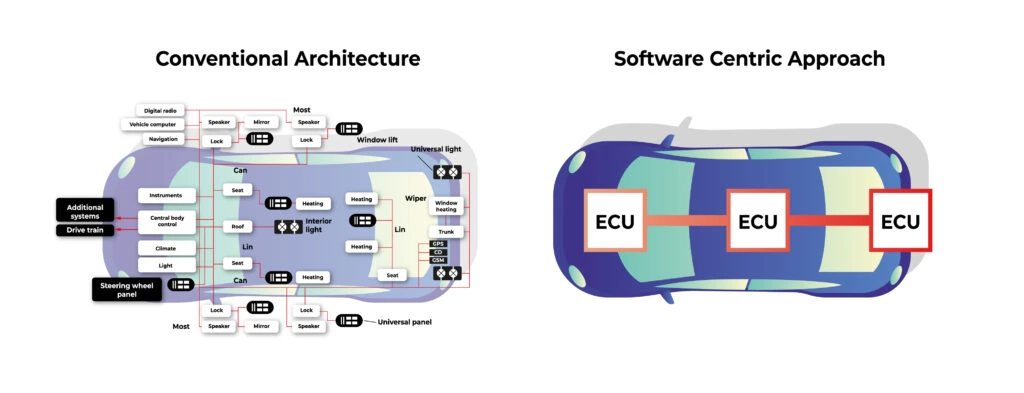

Digitalization and the evolution of computer hardware have driven a natural evolution of the vehicle. New SoCs (System on a Chip: an integrated circuit containing a CPU, memory, and peripherals) are multipurpose and powerful enough to handle not just a single task but several simultaneously. It would not be an exaggeration to say that the cars of the future are smart spaces that combine external solutions (e.g. cloud computing, 5G) with components that work internally (IoT sensors). Technology solution providers must therefore work in two directions, understanding the specifics of both these ecosystems. Today, they cannot be separated.

The partner must be able to operate at the intersection of cloud technologies, AI and telemetry data collection. Ideally, they should know how these technologies can be practically used in the car. Such a service provider should also be aware of the so-called bottlenecks and potential discrepancies between the actual state and the results of the analysis. This knowledge comes from experience and implementation of complex software-defined vehicle projects.

Enabling data-driven innovation for mobility insurance. Insurance context

There are companies on the market that are banking on the innovative combination of automotive and automation. However, the demands of OEMs and drivers have to be separated from those of the insurance industry.

It's vital that the technology partner chosen by an insurance company is aware of this. This naturally requires experience, backed by a portfolio of work for similar clients and specific industry know-how. The right partner will understand the insurer's expectations and correctly define their needs, combining them with the capabilities of a software-defined vehicle.

From an insurer's standpoint, the key solutions will be the following:

- Roadside assistance. To accurately determine the location of an emergency (this is important when establishing the details of an incident in which stakeholders give conflicting versions).

- Crash detection. To take proactive measures geared toward mitigating the consequences.

- UBI and BBI (usage-based and behavior-based insurance). The data gathered from mobile devices, plug-in dongles, or the vehicle's embedded onboard systems can be processed and used to build risk profiles and tailored policies based on customers’ driving styles and patterns.
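To make the idea of building risk profiles from driving data more concrete, here is a minimal Python sketch of how trip aggregates could be turned into a risk score and a premium adjustment. The function names, the weights, and the 0.8 to 1.3 multiplier range are purely illustrative assumptions. Real pricing relies on actuarial models and regulatory constraints that are outside the scope of this example.

```python
def risk_score(distance_km, harsh_brakes, speeding_minutes, night_share):
    """Combine trip aggregates into a 0-100 risk score (illustrative weights only)."""
    if distance_km <= 0:
        raise ValueError("distance_km must be positive")
    # Normalize event counts per 100 km so short and long periods are comparable.
    brakes_per_100km = harsh_brakes / distance_km * 100
    speeding_per_100km = speeding_minutes / distance_km * 100
    raw = 4.0 * brakes_per_100km + 1.5 * speeding_per_100km + 20.0 * night_share
    return min(100.0, raw)


def premium_multiplier(score):
    """Map a risk score to a premium multiplier between 0.8 and 1.3 (assumed range)."""
    return round(0.8 + 0.5 * score / 100.0, 3)


# One month of driving: 500 km, 10 harsh brakes, 20 minutes speeding, 10% at night.
score = risk_score(distance_km=500, harsh_brakes=10, speeding_minutes=20, night_share=0.1)
print(score, premium_multiplier(score))
```

Normalizing by distance is one possible design choice: it keeps a high-mileage but careful driver from being penalized simply for driving more.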

Technology and safety combined

The future of technology-based insurance policies is just around the corner. Simplified roadside assistance, driving safety support, stolen vehicle identification, personalized driving feedback, and crash detection all enhance service delivery, benefit customers, and increase profitability in the insurance industry.

Once again, it is worth highlighting that the real challenge, as well as opportunity, is to choose a partner that can handle different, yet consistent, areas of expertise.

If you also want to develop data-driven innovation in your insurance company, contact GrapeUp. Browse our portfolio of automotive & insurance projects.
