Parking is plain sailing... Provided that your car is equipped with automated valet parking

Among the many vehicle functions that intelligent software increasingly performs for us, parking is arguably the one that the majority of us would be most willing to leave to algorithms. A ride on the highway can be seamless and a long road trip smooth, but once the car slows down and the search for a parking space begins, a significant number of drivers face a real test of skill. How about automating it? This would benefit not only drivers but also OEMs, who can use such technology in factories and when loading and unloading vehicles onto ships or trains. Automated Valet Parking, developed for the BMW iX, shows that this process has already started.

Parking is made difficult not only by the dynamically changing circumstances of each maneuver and the large number of factors that must be monitored, but also by overcrowded parking lots and the constant race against time. A large share of collisions and accidents occur in parking lots, and drivers often point to parking as the part of driving that causes them the most trouble.

According to National Safety Council statistics, over 60,000 people are injured in parking lots every year, and more than 50,000 crashes occur in parking lots and garages annually. In addition, according to insurer Moneybarn, 60 percent of drivers find parallel parking stressful.

Leaving security in the hands of technology

It's no wonder that car companies around the world are looking for a foothold in exactly this part of automation, which could allow them to convince users to place their confidence in fully autonomous vehicles.

Increased safety - which such solutions can clearly improve - has always been at the forefront of all ratings showing driver approval of SDV (software-defined vehicle) technology. With automated parking, the driver also gains time savings, convenience, and reduced stress, because they do not have to waste energy searching for a free spot or remembering where they parked. An algorithm and a system of networked sensors make the parking decisions for the driver. All the driver has to do is leave the car in a special drop-off/pick-up zone and confirm parking in the application. After shopping at the mall or a meeting, the user confirms the vehicle pick-up in the app and proceeds to the zone, where their vehicle is already waiting.

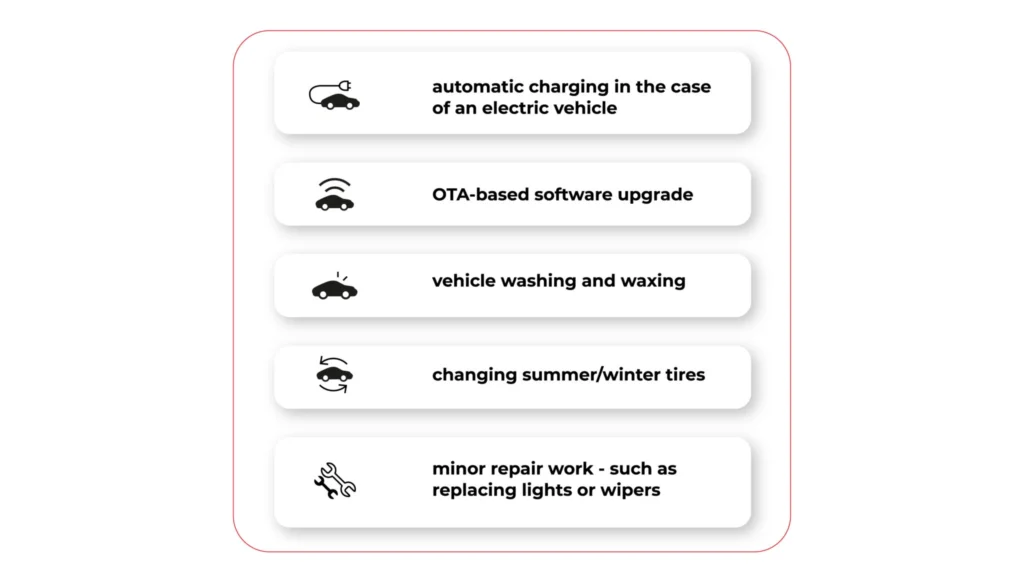
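The drop-off and pick-up flow described above can be pictured as a small state machine. The sketch below is purely illustrative: the state names, event names, and the `run` helper are assumptions made for this example and do not come from any OEM's actual application.

```python
from enum import Enum, auto


class ValetState(Enum):
    """Hypothetical stages of one automated valet parking session."""
    WITH_DRIVER = auto()
    IN_DROP_OFF_ZONE = auto()
    PARKING = auto()
    PARKED = auto()
    RETURNING = auto()
    READY_FOR_PICK_UP = auto()


# Allowed transitions, keyed by (current state, event).
# The event names are invented for illustration.
TRANSITIONS = {
    (ValetState.WITH_DRIVER, "arrive_at_zone"): ValetState.IN_DROP_OFF_ZONE,
    (ValetState.IN_DROP_OFF_ZONE, "confirm_parking"): ValetState.PARKING,
    (ValetState.PARKING, "spot_reached"): ValetState.PARKED,
    (ValetState.PARKED, "confirm_pick_up"): ValetState.RETURNING,
    (ValetState.RETURNING, "zone_reached"): ValetState.READY_FOR_PICK_UP,
    (ValetState.READY_FOR_PICK_UP, "driver_enters"): ValetState.WITH_DRIVER,
}


def next_state(state, event):
    """Return the state that follows `event`, or raise if it is not allowed."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(
            f"event {event!r} is not valid in state {state.name}"
        ) from None


def run(events, state=ValetState.WITH_DRIVER):
    """Apply a sequence of events in order and return the final state."""
    for event in events:
        state = next_state(state, event)
    return state
```

Modeling the flow this way makes the two app confirmations explicit: the car only starts moving on its own after `confirm_parking`, and only leaves its spot after `confirm_pick_up`.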

This stress-free handover of the car into the trusted hands of a "digital butler" also opens up new service opportunities for OEMs and companies cooperating with the automotive industry. While the driver goes shopping or to the movies in peace, the vehicle can be serviced. Potential applications include services such as:

- automatic charging in the case of an electric vehicle;

- OTA-based software upgrade;

- vehicle washing and waxing;

- changing summer/winter tires;

- minor repair work - such as replacing lights or wipers.

Let's take a look at two of the most impressive recent use cases in this area. The first is the Automated Valet Parking project, implemented in partnership with top car manufacturers and technology providers, with BMW leading the way. The second is Nvidia's offering, developed in cooperation with Mercedes-Benz.

BMW Automated Valet Parking

Futurists of the 20th century predicted that the next century would bring us an era of robots able to perform most daily human activities on their own, in an intelligent, autonomous, and efficient way. Although this vision was a gross exaggeration, there are solutions on the market today that can clearly be described as innovative or ahead of their time.

An example? BMW and its all-electric flagship SUV, the BMW iX, which communicates with external infrastructure and parks entirely without the driver’s input. The owner of the vehicle simply steps out of the car, handing it over to the "technological guardian".

Data is exchanged among three parties: the vehicle, the smartphone app, and the underground garage infrastructure (cameras and sensors). The driver activates the Automated Valet Parking (AVP) option in the application, after which the vehicle can maneuver around the garage independently, without the driver's participation. All this happens with maximum safety, both in terms of collision avoidance and protection of expensive items inside the vehicle.

This project would be much harder without the modern 5G network equipment provided by Deutsche Telekom. Why a fifth-generation network? Because, compared to traditional WLAN solutions, it allows network capabilities to be dynamically enabled, disabled, and updated through an API.

The flexible configuration and very low latency make it possible to shape the bandwidth and prioritize vehicle connectivity traffic, making the connection stable, fast, and reliable. This is one of the key requirements for any connected car system coupled with autonomous vehicle capabilities: if the connection is unreliable, latency is too high, or another device takes over the bandwidth, data from external sensors arrives late, which may result in a jerky, stuttering ride.
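To illustrate why late sensor data matters, here is a minimal sketch of a freshness check a vehicle-side controller might apply to messages from garage sensors. Everything in it is an assumption made for illustration: the `SensorMessage` fields, the 100 ms budget, and the premise that the vehicle and infrastructure clocks are synchronized. It does not describe BMW's or Deutsche Telekom's actual system.

```python
from dataclasses import dataclass

# Hypothetical freshness budget: older messages are treated as unusable.
MAX_AGE_S = 0.1


@dataclass(frozen=True)
class SensorMessage:
    """Illustrative message from a garage camera or sensor."""
    sent_at: float        # send time in seconds, on a clock shared with the car
    obstacle_ahead: bool  # whether the infrastructure sees an obstacle


def decide(message, now, max_age_s=MAX_AGE_S):
    """Return "proceed" only for fresh data that reports a clear path."""
    age = now - message.sent_at
    if age < 0 or age > max_age_s:
        # Stale (or implausibly timestamped) data: stop rather than guess.
        return "stop"
    if message.obstacle_ahead:
        return "stop"
    return "proceed"
```

With a check like this, every delayed message turns into a stop, so a congested or high-latency link shows up directly as the stop-and-go motion described above. That is why prioritized, low-latency connectivity matters for a smooth ride.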

However, these are not all the surprises that the BMW Group has in store for its customers. In addition to parking, the driver can also benefit from other automated service functions such as washing or intelligent refueling. The solution is universal and can also be used by other OEMs.

https://youtu.be/iz_yKaa8QgM

Nvidia cooperates with Mercedes-Benz

There are many indications that the use of voice assistants will keep growing. For example, in 2020 in the U.S. alone, about 20 million people were expected to make purchases via smartphone using voice-activated features [statista.com]. This trend isn't sparing the automotive industry, either, with technology providers racing to create software that would revolutionize such cumbersome tasks as parking. One of the forerunners is the semiconductor giant Nvidia, which created the Nvidia Drive Concierge (NDC) service. It's an artificial intelligence-based software assistant that - literally - gives the floor to the driver, but also lets technology come to the fore.

"Hey Nvidia!" What does this voice command remind you of? Most often it is associated with another conversational voice assistance system, namely Siri. You are on the right track, because NDC works on a similar principle. The driver gives a command, and the assistant is able to recognize a specific voice, assign it to the vehicle owner and respond.

By far the most interesting functionality is the integration of the software with Nvidia Drive AV autonomous driving technology, which enables on-demand parking. It works in a very intuitive way. All you have to do is get out of the vehicle, activate the function, and watch as the "four wheels" steer themselves towards a parking space. And they do it in a collision-free manner, regardless of whether it's parallel, perpendicular, or angled parking. It works the same way in the reverse direction: if you want to leave a parking space, you simply hail the car, and it pulls up on its own, ready to continue its journey.

Sounds like pure fantasy? It's already happening. Nvidia has teamed up with one of the world's leading OEMs, Mercedes-Benz. Starting in 2024, all next-generation Mercedes-Benz vehicles will be powered by Nvidia Drive AGX Orin technology, along with sensors and software. For the German company, automated parking services will therefore soon become commonplace.

This is what Jensen Huang, founder and CEO of Nvidia, said about the collaboration: "Together, we're going to revolutionize the car ownership experience, making the vehicle software programmable and continuously upgradable via over-the-air updates. Every future Mercedes-Benz with the Nvidia Drive system will come with a team of expert AI and software engineers continuously developing, refining and enhancing the car over its lifetime."

Automated Valet Parking: innovation at the cutting edge of technology

Vehicle automation, and the resulting cooperation between OEMs and suppliers of new technologies, is now entering new dimensions. This includes parking, an area that many drivers find cumbersome and that often generates anxiety.

The integration of Nvidia Orin systems at Mercedes-Benz and the comprehensive AVP at BMW are prime examples of how new solutions at the intersection of AI, IoT, and 5G are becoming, to some extent, guardians of safety and guarantors of comfort from start to finish. They are also a good springboard for talking about fully automated vehicles.
