Big picture of Spring Cloud Gateway

I once had the opportunity to work on a team that maintained an API gateway system. Development of this system began more than 15 years ago, which is a long time given the pace of technological change. The system had been upgraded to Java 8, ran on a lightweight server (Apache Tomcat), and was covered by several kinds of tests: integration, performance, end-to-end, and unit tests. Although the gateway was maintained with diligence, its core contained a lot of hand-written request-processing logic, such as routing, header modification, and request payload conversion, which nowadays can be delivered by a framework like Spring Cloud Gateway. In this article, I am going to show the benefits of this framework.

The major benefits delivered by Spring Cloud Gateway are:

- support for the reactive programming model: reactive HTTP endpoints, reactive WebSockets

- configuration of request processing (routes, filters, predicates) in Java code or in YAML

- dynamic reloading of configuration without restarting the server (integration with Spring Cloud Config Server)

- support for SSL

- Actuator API

- integration of the gateway with a service discovery mechanism

- load-balancing mechanisms

- rate-limiting (throttling) mechanisms

- circuit breaker mechanism

- integration with OAuth2 to provide security features

These features make building an API gateway system much faster and easier. In this article, I am going to describe a few of them.

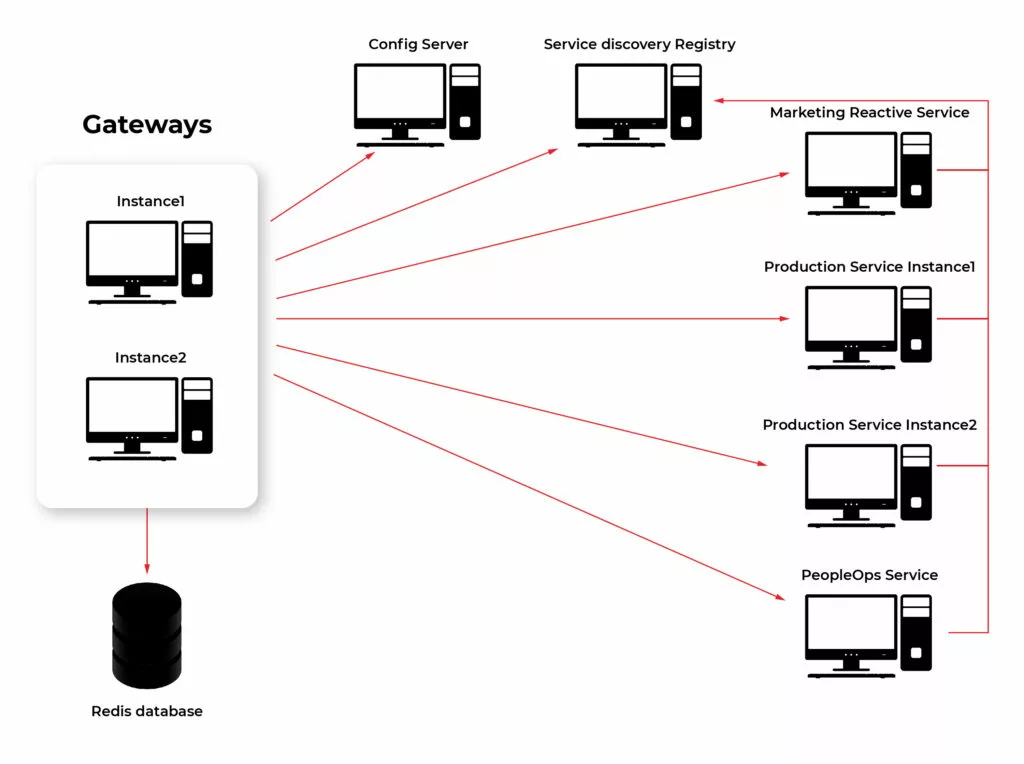

Because software is a practical discipline and systems cannot work only in theory, I decided to create a lab environment to demonstrate the practical value of the Spring Cloud Gateway ecosystem. The architecture of the lab environment is shown below:

Building elements of Spring Cloud Gateway

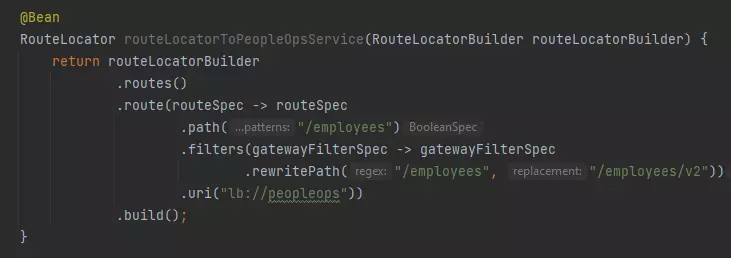

The first feature of Spring Cloud Gateway I am going to describe is the configuration of request processing. It can be considered the heart of the gateway and one of its major responsibilities. As I mentioned earlier, this logic can be defined in Java code or in YAML files. Below I include an example configuration in both YAML and Java. The basic building blocks used to create processing logic are:

- Predicates – match requests based on their attributes (path, hostname, headers, cookies, query)

- Filters – process and modify requests in a variety of ways. They can be divided by purpose:

  - gateway filter - modifies the incoming HTTP request or outgoing HTTP response

  - global filter - a special filter applied to all routes, as long as some conditions are fulfilled

Details about the different implementations of gateway building components can be found in the docs: https://cloud.spring.io/spring-cloud-gateway/reference/html/ .
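To make the roles of predicates and filters more concrete, here is a minimal, framework-free sketch of the idea in plain Java. It is not the Spring Cloud Gateway API: the classes `SketchRequest`, `SketchRoute`, and `SketchRouter`, the header names, and the `http://peopleops:8080` address are all hypothetical, and the filter ordering is simplified compared to the real framework.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.function.Predicate;
import java.util.function.UnaryOperator;

// Hypothetical, framework-free request model (not a Spring class).
final class SketchRequest {
    final String path;
    final Map<String, String> headers;

    SketchRequest(String path, Map<String, String> headers) {
        this.path = path;
        this.headers = new LinkedHashMap<>(headers); // defensive copy
    }
}

// A route couples a predicate (does it match?) with filters (how to change the request).
final class SketchRoute {
    final String id;
    final String targetUri;
    final Predicate<SketchRequest> predicate;
    final List<UnaryOperator<SketchRequest>> filters;

    SketchRoute(String id, String targetUri, Predicate<SketchRequest> predicate,
                List<UnaryOperator<SketchRequest>> filters) {
        this.id = id;
        this.targetUri = targetUri;
        this.predicate = predicate;
        this.filters = filters;
    }
}

final class SketchRouter {
    private final List<SketchRoute> routes = new ArrayList<>();
    private final List<UnaryOperator<SketchRequest>> globalFilters = new ArrayList<>();

    void addRoute(SketchRoute route) { routes.add(route); }

    void addGlobalFilter(UnaryOperator<SketchRequest> filter) { globalFilters.add(filter); }

    // Predicate step: the first route whose predicate accepts the request wins.
    Optional<SketchRoute> match(SketchRequest request) {
        return routes.stream().filter(r -> r.predicate.test(request)).findFirst();
    }

    // Filter step: route-specific filters first, then global ones (ordering simplified).
    SketchRequest applyFilters(SketchRoute route, SketchRequest request) {
        SketchRequest current = request;
        for (UnaryOperator<SketchRequest> f : route.filters) current = f.apply(current);
        for (UnaryOperator<SketchRequest> f : globalFilters) current = f.apply(current);
        return current;
    }

    // Example predicate factory: match on a path prefix.
    static Predicate<SketchRequest> pathStartsWith(String prefix) {
        return req -> req.path.startsWith(prefix);
    }

    // Example filter factory: return a copy of the request with one more header.
    static UnaryOperator<SketchRequest> addHeader(String name, String value) {
        return req -> {
            Map<String, String> copy = new LinkedHashMap<>(req.headers);
            copy.put(name, value);
            return new SketchRequest(req.path, copy);
        };
    }

    public static void main(String[] args) {
        SketchRouter router = new SketchRouter();
        router.addRoute(new SketchRoute("employees", "http://peopleops:8080",
                pathStartsWith("/employees/"),
                Collections.singletonList(addHeader("X-Route", "employees"))));
        router.addGlobalFilter(addHeader("X-Gateway", "lab"));

        SketchRequest in = new SketchRequest("/employees/v1", new LinkedHashMap<>());
        router.match(in).ifPresent(route -> {
            SketchRequest out = router.applyFilters(route, in);
            System.out.println(route.targetUri + out.path + " " + out.headers);
        });
    }
}
```

The point of the sketch is the separation of concerns: predicates only decide whether a route applies, while filters only transform the request, so both can be combined freely in configuration.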

Example of configuring a route with the Java DSL:

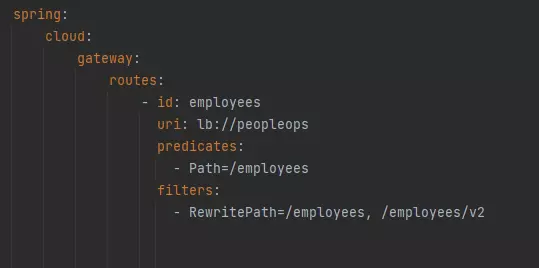

The same route configured in YAML:

Spring Cloud Config Server integrated with Gateway

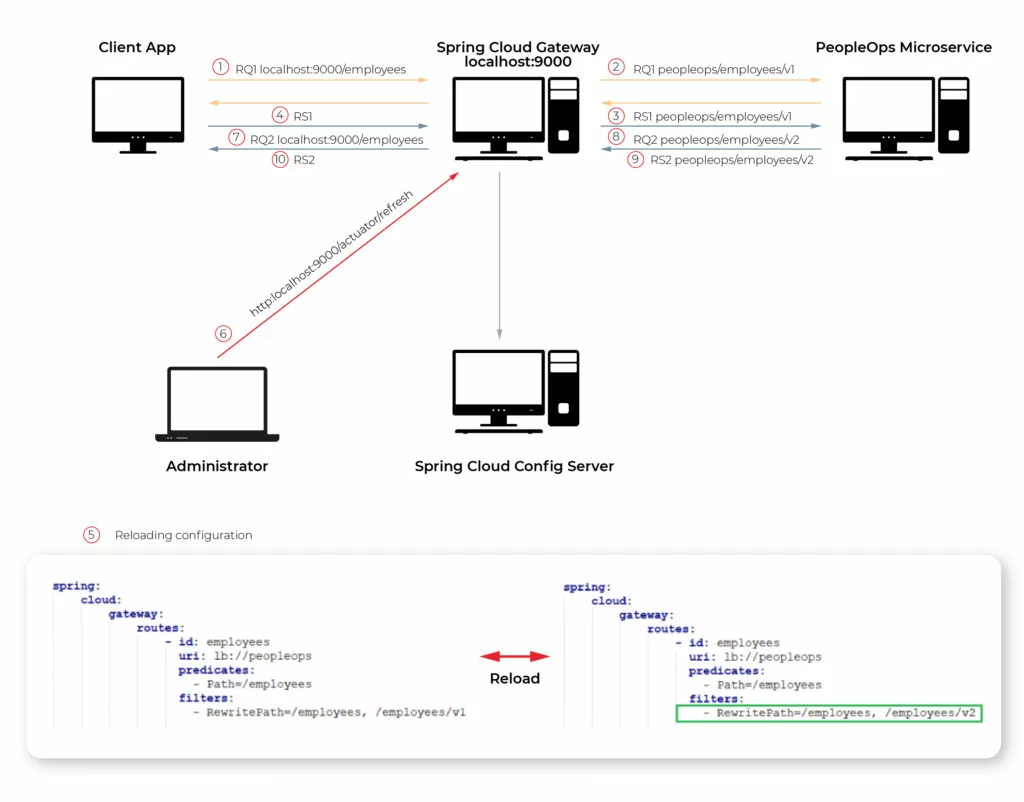

Not everyone is a huge fan of YAML, but using it here has a big advantage. Configuration files can be stored in Spring Cloud Config Server, and once the configuration changes, it can be reloaded dynamically. To trigger the reload, we need to call an Actuator API endpoint.

The picture below shows dynamic reloading of the gateway configuration. The first four steps show request processing according to the current configuration. The gateway passes requests from the client to the “employees/v1” endpoint of the PeopleOps microservice (step 2). Then the gateway passes the response from the PeopleOps microservice back to the client app (step 4). The next step is updating the configuration. Since Config Server uses a Git repository to store configuration, updating means committing the recent changes made in the application.yaml file (step 5 in the picture). After pushing the new commits to the repo, it is necessary to send a request to the proper Actuator endpoint (step 6); the standard Spring refresh endpoints, such as /actuator/refresh, expect a POST request. These two steps are enough for client requests to be passed to a new endpoint in the PeopleOps microservice (steps 7, 8, 9, 10).
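The reload flow described above can be modelled in a few lines of plain Java. This is only a sketch of the idea (swap the route table at runtime, with no restart), not how Spring Cloud Config or the Actuator are implemented. The class `ReloadableRoutes`, the in-memory map standing in for the Git repository, and the `/employees/v2` path are assumptions made for illustration.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.atomic.AtomicReference;
import java.util.function.Supplier;

// Hypothetical sketch: a route table that can be swapped at runtime without a restart.
final class ReloadableRoutes {
    private final Supplier<Map<String, String>> configSource; // stands in for the config server
    private final AtomicReference<Map<String, String>> routes = new AtomicReference<>();

    ReloadableRoutes(Supplier<Map<String, String>> configSource) {
        this.configSource = configSource;
        refresh(); // load the initial configuration
    }

    // Plays the role of the refresh call (step 6): re-read the config and swap it atomically.
    void refresh() {
        routes.set(Collections.unmodifiableMap(new HashMap<>(configSource.get())));
    }

    // Resolves an incoming path to a downstream path using the current snapshot.
    String resolve(String incomingPath) {
        return routes.get().get(incomingPath);
    }

    public static void main(String[] args) {
        Map<String, String> repo = new HashMap<>(); // stands in for the Git repository
        repo.put("/employees", "/employees/v1");
        ReloadableRoutes gateway = new ReloadableRoutes(() -> repo);
        System.out.println(gateway.resolve("/employees")); // served from the initial snapshot

        repo.put("/employees", "/employees/v2"); // "commit and push" (step 5)
        System.out.println(gateway.resolve("/employees")); // snapshot not refreshed yet

        gateway.refresh(); // "call the Actuator endpoint" (step 6)
        System.out.println(gateway.resolve("/employees")); // new snapshot in use
    }
}
```

Keeping an immutable snapshot behind an `AtomicReference` means requests in flight always see one consistent configuration, which is why a change only takes effect after the explicit refresh.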

Reactive web flow in Api Gateway

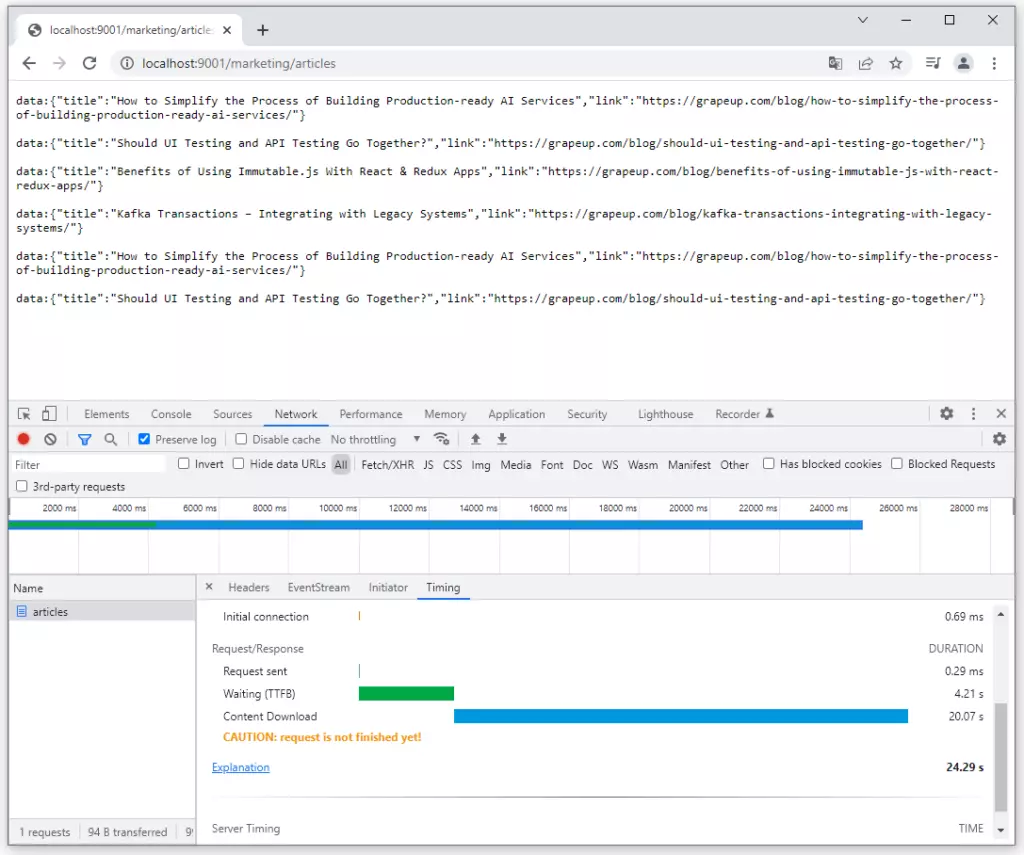

As the documentation says, Spring Cloud Gateway is built on top of Spring WebFlux. Reactive programming is gaining popularity among Java developers, and Spring Cloud Gateway makes it possible to build fully reactive applications. In my lab, I created a controller in the Marketing microservice that generates article data repeatedly, every 4 seconds. The browser observes this stream of data. The picture below shows that 6 chunks of data were received in 24 seconds.

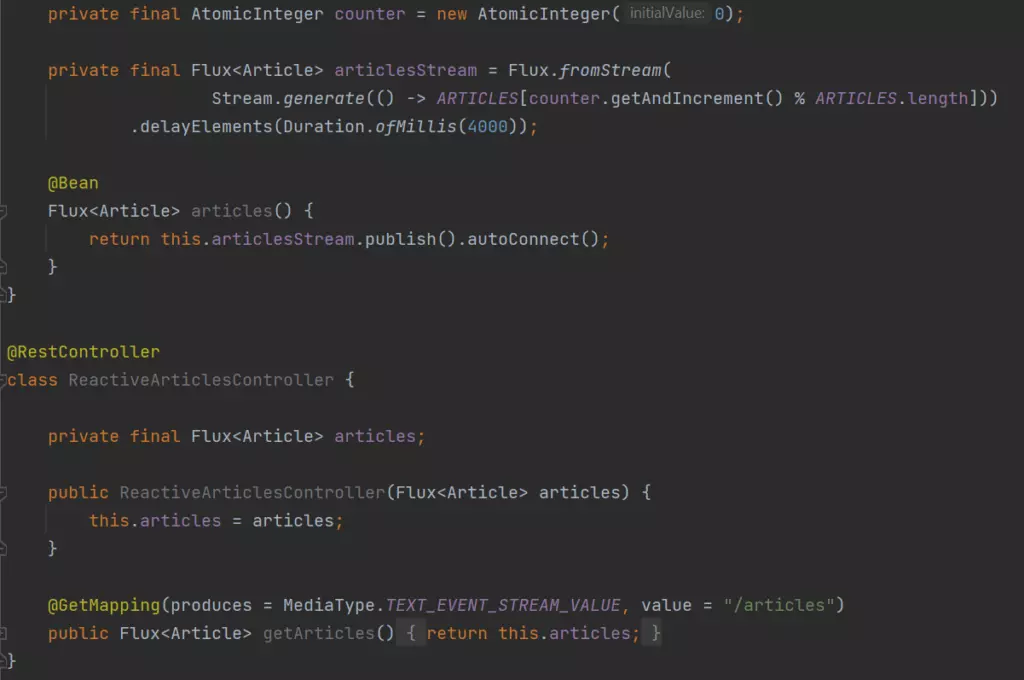

I will not dive deeply into the reactive programming style; there are plenty of articles about its benefits and how it differs from other programming styles. I just include the implementation of a simple reactive endpoint in the Marketing microservice below. It is also available on GitHub: https://github.com/chrrono/Spring-Cloud-Gateway-lab/blob/master/Marketing/src/main/java/com/grapeup/reactive/marketing/MarketingApplication.java
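Separately from the lab's WebFlux code, the publish/subscribe idea behind such a stream can be sketched with the JDK's own `java.util.concurrent.Flow` API (Java 9+), which needs no extra dependencies. The class `ArticleStreamSketch`, the `article-N` payloads, and the short interval are assumptions for illustration. The lab endpoint emits every 4 seconds, whereas the demo uses a much shorter interval so it finishes quickly.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Flow;
import java.util.concurrent.SubmissionPublisher;
import java.util.concurrent.TimeUnit;

final class ArticleStreamSketch {

    // Subscriber that collects every chunk it receives, like the browser in the lab.
    static final class CollectingSubscriber implements Flow.Subscriber<String> {
        final List<String> received = new CopyOnWriteArrayList<>();
        final CountDownLatch completed = new CountDownLatch(1);
        private Flow.Subscription subscription;

        @Override public void onSubscribe(Flow.Subscription s) {
            subscription = s;
            s.request(1); // backpressure: ask for one item at a time
        }
        @Override public void onNext(String item) {
            received.add(item);
            subscription.request(1);
        }
        @Override public void onError(Throwable t) { completed.countDown(); }
        @Override public void onComplete() { completed.countDown(); }
    }

    // Publishes `count` articles, one per interval, then completes the stream.
    static List<String> stream(int count, long intervalMillis) {
        CollectingSubscriber subscriber = new CollectingSubscriber();
        try (SubmissionPublisher<String> publisher = new SubmissionPublisher<>()) {
            publisher.subscribe(subscriber);
            for (int i = 1; i <= count; i++) {
                publisher.submit("article-" + i);
                Thread.sleep(intervalMillis);
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        // Closing the publisher signals onComplete; wait until the subscriber has seen it.
        try {
            subscriber.completed.await(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return new ArrayList<>(subscriber.received);
    }

    public static void main(String[] args) {
        // 6 chunks, as in the lab screenshot, but with a 50 ms interval instead of 4 s.
        System.out.println(stream(6, 50));
    }
}
```

Unlike a WebFlux endpoint, the producer loop here blocks its own thread with `Thread.sleep`. The sketch only illustrates the subscriber side: items arrive one by one as they are produced, instead of as one complete response.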

Rate limiting possibilities of Gateway

The next feature of Spring Cloud Gateway is the implementation of rate-limiting (throttling) mechanisms. This mechanism is designed to protect gateways from harmful traffic. One example is a distributed denial-of-service (DDoS) attack, which floods the system with more requests per second than it can handle.

The filtering of requests may be based on the user principal, special fields in headers, or other rules. In production environments, several gateway instances are usually up and running, but this is not an obstacle for Spring Cloud Gateway, because it uses Redis to store the number of requests per key. All instances are connected to one Redis instance, so throttling works correctly in a multi-instance environment.

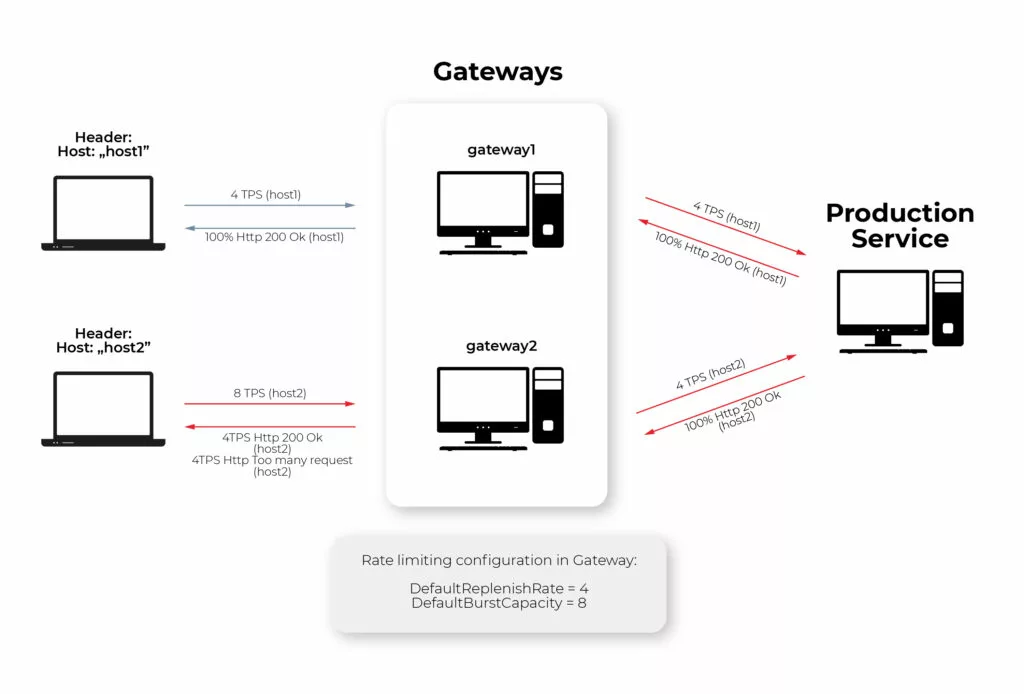

To demonstrate the advantages of this functionality, I configured rate limiting in the gateway in the lab environment and created an end-to-end test, which is illustrated in the picture below.

The parameters configured for throttling are as follows: DefaultReplenishRate = 4, DefaultBurstCapacity = 8. This means the gateway allows 4 transactions (requests) per second (TPS) for a given key, with short bursts of up to 8 requests. The key in my example is the value of the “Host” header, which means that the first and second clients each have a separate limit of 4 TPS. If the limit is exceeded, the gateway replies with an HTTP response with status code 429. As a result, all requests from the first client are passed to the production service, but for the second client only half of the requests are passed to the production service by the gateway, and the other half are answered immediately with HTTP 429.
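The effect of these two parameters is easier to see in code. Below is a minimal in-memory token bucket sketch in plain Java, using the same values (replenish rate 4, burst capacity 8). It is an illustration only: the gateway's limiter keeps its state in Redis, while the class `TokenBucketLimiter`, the key names, and the explicit `nowMillis` argument (used to keep the example deterministic) are assumptions.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical in-memory token bucket keyed by client; the lab keeps this state in Redis.
final class TokenBucketLimiter {
    private final int replenishRate;  // tokens added per second
    private final int burstCapacity;  // maximum number of tokens a bucket can hold
    // key -> {available tokens, time of last refill in milliseconds}
    private final Map<String, double[]> buckets = new HashMap<>();

    TokenBucketLimiter(int replenishRate, int burstCapacity) {
        this.replenishRate = replenishRate;
        this.burstCapacity = burstCapacity;
    }

    // Returns true if the request may pass, false if it should be answered with HTTP 429.
    synchronized boolean tryAcquire(String key, long nowMillis) {
        // A new key starts with a full bucket.
        double[] bucket = buckets.computeIfAbsent(key, k -> new double[] {burstCapacity, nowMillis});
        double elapsedSeconds = Math.max(0, nowMillis - bucket[1]) / 1000.0;
        // Refill according to the elapsed time, but never above the burst capacity.
        bucket[0] = Math.min(burstCapacity, bucket[0] + elapsedSeconds * replenishRate);
        bucket[1] = nowMillis;
        if (bucket[0] >= 1) {
            bucket[0] -= 1; // each allowed request consumes one token
            return true;
        }
        return false;
    }

    public static void main(String[] args) {
        TokenBucketLimiter limiter = new TokenBucketLimiter(4, 8);
        // Ten requests from one key at the same instant: some pass, the rest get 429.
        for (int i = 1; i <= 10; i++) {
            boolean allowed = limiter.tryAcquire("client-2.example", 0L);
            System.out.println("request " + i + " -> " + (allowed ? 200 : 429));
        }
    }
}
```

Because each key has its own bucket, one client exhausting its tokens has no effect on another client, which matches the separate limits for the two clients in the test.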

If you are interested in how I tested it using Rest Assured, JUnit, and ExecutorService in Java, the test is available here: https://github.com/chrrono/Spring-Cloud-Gateway-lab/blob/master/Gateway/src/test/java/com/grapeup/gateway/demo/GatewayApplicationTests.java

Service Discovery

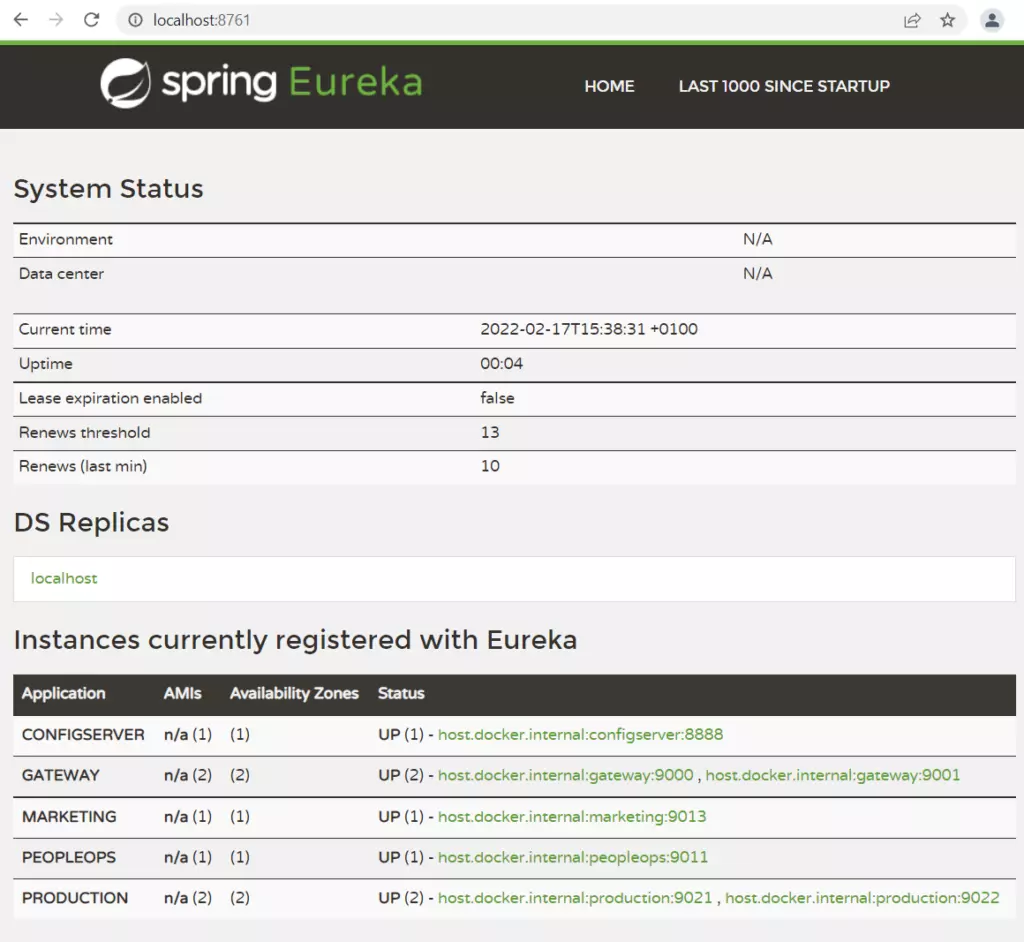

The next integration concerns the service discovery mechanism. Service discovery is a service registry. When a microservice starts, it registers itself with service discovery, and other applications can use its entry to find and communicate with this microservice. Integrating Spring Cloud Gateway with Eureka service discovery is simple. Without any request-processing configuration, requests can be passed from the gateway to a specific microservice and its specific endpoint.

The picture below shows all the applications registered in my lab architecture, which I created to test Spring Cloud Gateway in practice. The “Production” microservice has one entry for two instances. This is a special configuration that enables load balancing by the gateway.
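The idea of one registry entry with two instances can be sketched in plain Java as a tiny registry with round-robin selection. This is not the Eureka or Spring Cloud LoadBalancer API; the class `RegistrySketch`, the service name, and the instance URLs are hypothetical, and round-robin is just one possible balancing strategy.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical sketch of a service registry with client-side round-robin load balancing.
final class RegistrySketch {
    private final Map<String, List<String>> instances = new ConcurrentHashMap<>();
    private final Map<String, AtomicInteger> counters = new ConcurrentHashMap<>();

    // A starting microservice registers itself under its service name.
    void register(String serviceName, String instanceUrl) {
        instances.computeIfAbsent(serviceName, k -> new CopyOnWriteArrayList<>()).add(instanceUrl);
    }

    // The gateway looks the service up by name and rotates through its instances.
    String choose(String serviceName) {
        List<String> urls = instances.get(serviceName);
        if (urls == null || urls.isEmpty()) {
            throw new IllegalStateException("No instances registered for " + serviceName);
        }
        int next = counters.computeIfAbsent(serviceName, k -> new AtomicInteger()).getAndIncrement();
        return urls.get(Math.floorMod(next, urls.size()));
    }

    public static void main(String[] args) {
        RegistrySketch registry = new RegistrySketch();
        registry.register("PRODUCTION", "http://production-1:8080");
        registry.register("PRODUCTION", "http://production-2:8080");
        for (int i = 0; i < 4; i++) {
            System.out.println(registry.choose("PRODUCTION")); // alternates between both instances
        }
    }
}
```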

Circuit Breaker mention

The circuit breaker is a pattern used when the connection to a specific microservice fails. All we need to do is define fallback procedures in Spring Cloud Gateway. Once the connection breaks down, the request is forwarded to a fallback route. The circuit breaker offers more possibilities, for example a special action in case of network delays, and it can be configured in the gateway.
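A minimal sketch of the pattern in plain Java may help here. It is not the gateway's circuit breaker filter or any resilience library. The class `CircuitBreakerSketch`, the failure threshold, and the open interval are assumptions, and time is passed in explicitly to keep the example deterministic.

```java
import java.util.function.Supplier;

// Hypothetical circuit breaker: after repeated failures it skips the primary call
// for a while and goes straight to the fallback.
final class CircuitBreakerSketch<T> {
    private final int failureThreshold; // consecutive failures that open the circuit
    private final long openMillis;      // how long the circuit stays open
    private int consecutiveFailures;
    private long openedAt = -1;         // -1 means the circuit is closed

    CircuitBreakerSketch(int failureThreshold, long openMillis) {
        this.failureThreshold = failureThreshold;
        this.openMillis = openMillis;
    }

    synchronized T call(Supplier<T> primary, Supplier<T> fallback, long nowMillis) {
        // Open circuit: do not even try the failing service.
        if (openedAt >= 0 && nowMillis - openedAt < openMillis) {
            return fallback.get();
        }
        try {
            T result = primary.get(); // closed, or trial call after the open interval
            consecutiveFailures = 0;
            openedAt = -1;
            return result;
        } catch (RuntimeException e) {
            if (++consecutiveFailures >= failureThreshold) {
                openedAt = nowMillis; // (re)open the circuit
            }
            return fallback.get();
        }
    }

    public static void main(String[] args) {
        CircuitBreakerSketch<String> breaker = new CircuitBreakerSketch<>(2, 1000L);
        Supplier<String> broken = () -> { throw new IllegalStateException("service down"); };
        Supplier<String> fallback = () -> "fallback response";
        System.out.println(breaker.call(broken, fallback, 0L));
        System.out.println(breaker.call(broken, fallback, 10L));
        System.out.println(breaker.call(() -> "live response", fallback, 2000L));
    }
}
```

The design choice worth noting is that an open circuit protects both sides: clients get a fast fallback instead of waiting for timeouts, and the struggling service gets time to recover.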

Experiment on your own

I encourage you to conduct your own tests or to take the system I built in your own direction. Below are two links to GitHub repositories:

- https://github.com/chrrono/config-for-Config-server (repo that keeps the configuration for Spring Cloud Config Server)

- https://github.com/chrrono/Spring-Cloud-Gateway-lab (All microservices code and docker-compose configuration)

To make setting up a local environment straightforward, I created a docker-compose configuration. Here is the link to the docker-compose.yml file: https://github.com/chrrono/Spring-Cloud-Gateway-lab/blob/master/docker-compose.yml

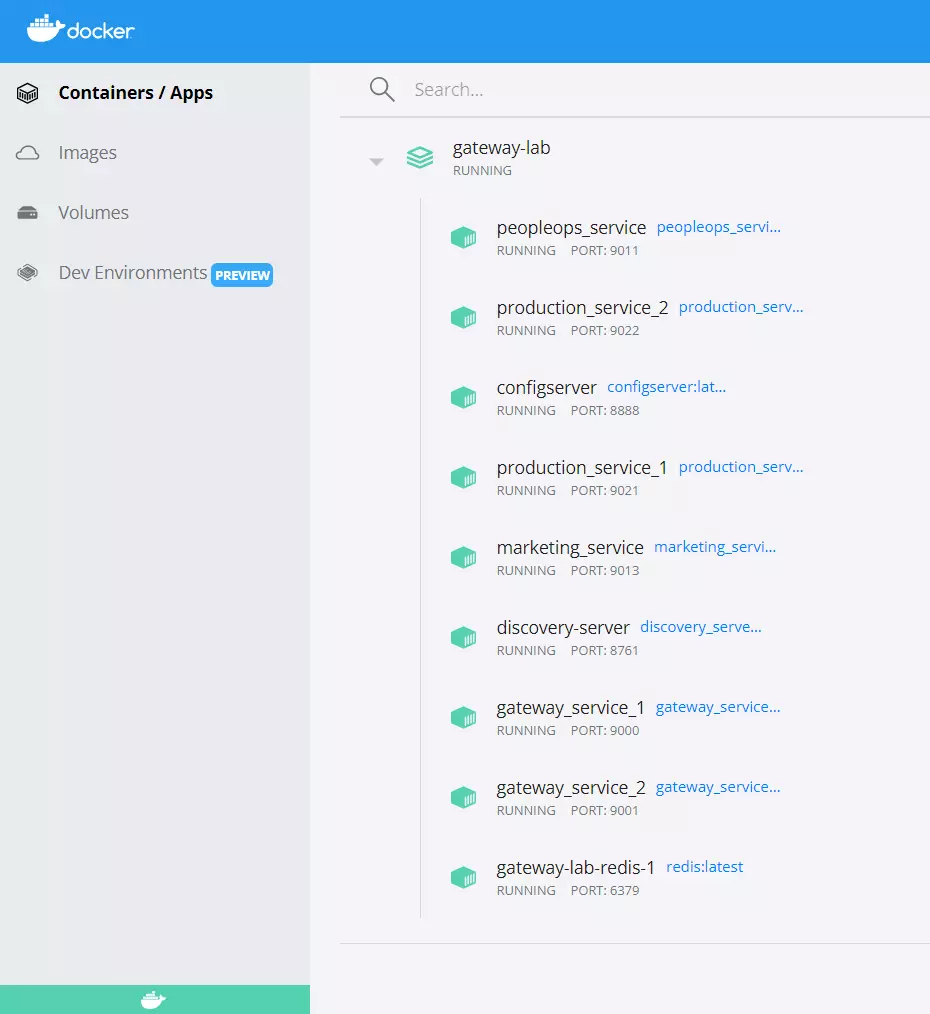

All you need to do is install Docker on your machine. I used Docker Desktop on my Windows machine. After executing the “docker-compose up” command in the proper location, you should see all servers up and running:

To conduct tests, I used Postman, Google Chrome, and my end-to-end tests (Rest Assured, JUnit, and ExecutorService in Java). Do whatever you want with this code, and let your imagination be your only limitation 😊

Summary

Spring Cloud Gateway is undoubtedly a huge topic. In this article, I focused on showing some basic building components, the overall purpose of the gateway, and its interaction with other Spring Cloud services. I hope readers appreciate the possibilities the framework offers. If you are interested in exploring Spring Cloud Gateway on your own, I have added links to a repo that can be used as a template project for your explorations.
