In-app purchases in iOS apps – a tester’s perspective

Year after year, Apple’s new mobile devices gain considerable traction in tech media coverage and keep attracting customers to the company’s rather pricey products. Promises of superior quality, a straightforward integrated ecosystem, and new, cutting-edge technologies urge longtime fans and new customers alike to upgrade to California-designed phones, tablets and computers.

Resurgence

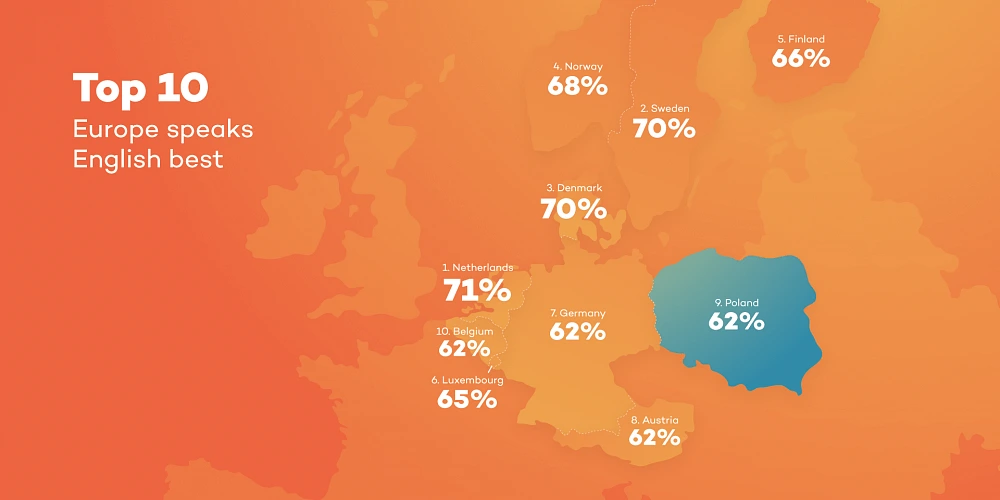

Focusing on the mobile market alone, it is impossible to overlook the significant rise in iOS’s share of the mobile operating system market. Its major competitor, Google’s Android, held 70.68% of the mobile market in April 2020, which is around 6 percentage points less than in October 2019. iOS, on the other hand, which held 22.09% of the market around the same time, has recently risen to 28.79%. This trend surely pleases Apple’s board, along with anyone who strives to monetize their app ideas in the App Store.

Generating revenue through in-app purchases sounds like a brilliant idea, but it requires plenty of planning, risk calculation, and budgeting for the project. Before a software product is published, the idea has to be conceived, marketed, developed, and tested. Each step in making an app that offers paid content differs from the corresponding step in creating custom-ordered software, and that includes testing.

At what cost?

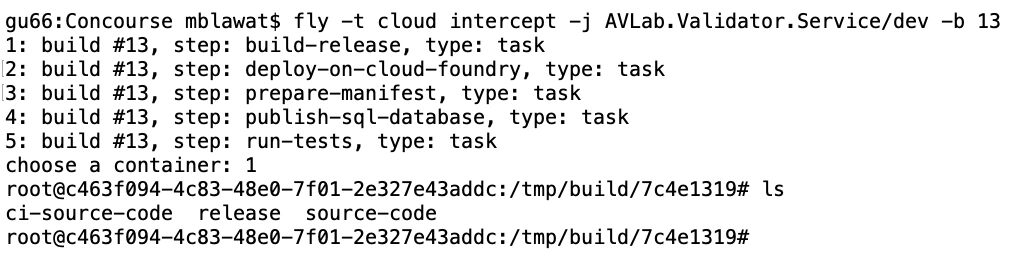

But wait! Testing usually involves lots of repetition, which would mean testers have to go through many transactions. Doesn’t that entail spending lots of money? Well, not exactly. Apple provides development teams with its own in-app purchase testing tool, Sandbox. But using it doesn’t make testing all fun and games.

Sandbox allows in-app purchases to be developed and tested without spending a dime on them. This works by supplementing the ‘real’ App Store account with a Sandbox one. Sounds fantastic, doesn’t it? Unfortunately, there are some inconveniences behind that.

If it ain’t broke...

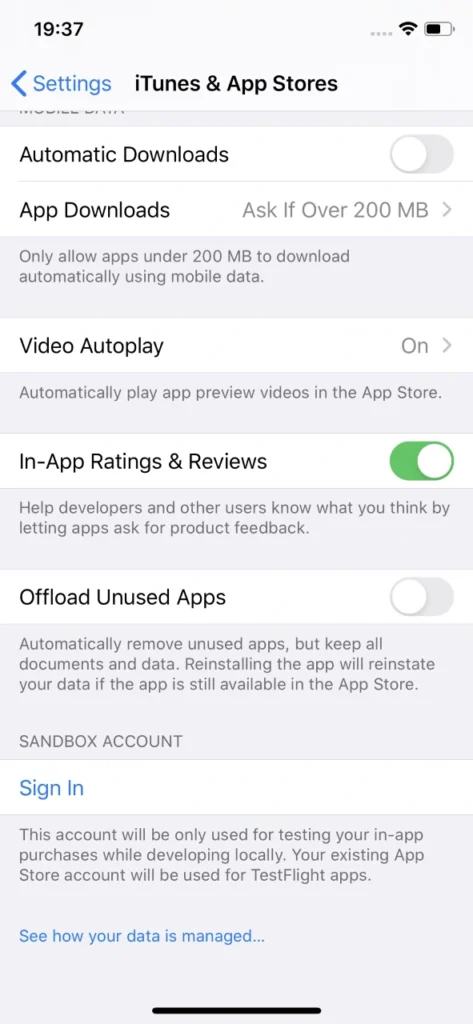

First of all, Sandbox accounts have to be created manually via iTunes Connect (now App Store Connect), which leaves much to be desired in terms of performance. Each account requires an email address in a valid format. Testers will need plenty of Sandbox accounts because it is quite easy to ‘use them up’, especially when the tested software has its own sign-in system (not related to Apple ID). If, by design, an app account is also associated with an in-app purchase, each app account will require a new Sandbox account.

Unfortunately, Apple’s Sandbox accounts can get really tricky to log into. When you’re trying to sign in to yet another Sandbox account, probably named similarly to all the previous ones for convenience, you’d think muscle memory would let you type in the password without looking at the screen. Nothing could be further from the truth. Sometimes you type in the credentials (an email and a password), check them twice, hit the Sign In button in the Sandbox login popover, and nothing happens.

The user is not logged in, and not even a sign-in error is displayed. So you try again, with every character exactly the same as before, and eventually you manage to log in. In manual testing it’s not really a big deal unless you lose your temper easily, but a simple message explaining why the Sandbox login failed would be much more user-friendly. In automated tests, you could write code that keeps trying to log in until the email address used as the login is displayed in the Sandbox account section of the iOS settings, which means that the login was successful. It’s not something testers can’t live with, but if Apple addressed the issue, it would greatly improve the experience of working in iOS development.
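The retry idea for automated tests could look roughly like the sketch below. It is only an illustration: `attempt_sign_in` and `signed_in_email` are hypothetical callables that your own test framework would have to supply (for example, one that drives the Sandbox login popover and one that reads the Sandbox account section in iOS settings), and the attempt limit and delay are arbitrary assumptions.

```python
import time
from typing import Callable, Optional


def sign_in_with_retry(
    attempt_sign_in: Callable[[], None],          # hypothetical: fills in and submits the Sandbox login popover
    signed_in_email: Callable[[], Optional[str]],  # hypothetical: reads the email shown in iOS settings, if any
    expected_email: str,
    max_attempts: int = 5,                         # assumed limit, tune to your environment
    delay_seconds: float = 2.0,                    # assumed pause between attempts
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Repeat the sign-in until the expected email is displayed.

    Returns the number of attempts that were needed.
    Raises RuntimeError if the email never shows up.
    """
    for attempt in range(1, max_attempts + 1):
        attempt_sign_in()
        # The login counts as successful only once the Sandbox account
        # section shows the address that was used as the login.
        if signed_in_email() == expected_email:
            return attempt
        if attempt < max_attempts:
            sleep(delay_seconds)
    raise RuntimeError(
        f"Sandbox sign-in for {expected_email} did not succeed "
        f"after {max_attempts} attempts"
    )
```

Capping the number of attempts keeps a genuinely broken account (a wrong password, for instance) from looping forever, since the silent failure looks the same in both cases.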

Cryptic writings

Problems arise when Apple fails to deliver the notifications informing that a particular Sandbox user is subscribed to an auto-renewable subscription. As a result, many subscription purchase attempts have to be made to confirm that the app was developed correctly and that the fault lies in Apple’s own system rather than in a bug inside the app.

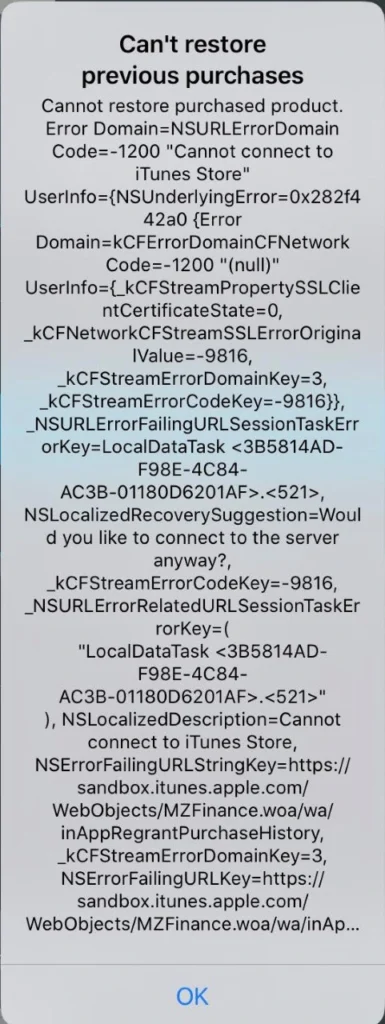

Speaking of errors: while testing in-app purchase features, it can become really difficult to show developers what went wrong and help them debug the problem. The errors displayed are often long and cryptic, so investigating the root cause can consume a substantial amount of time. There are two main reasons for that: either Apple provides no documentation for those long error messages, or the message displayed is very generic.

Combine this with performance drops in ‘prime time’, problems with receiving server notifications (e.g. for auto-renewable subscriptions), or a simple inability to connect to the iTunes Store, and the simple task of testing a monthly subscription can turn into a major regression testing suite.

Hey, Siri...

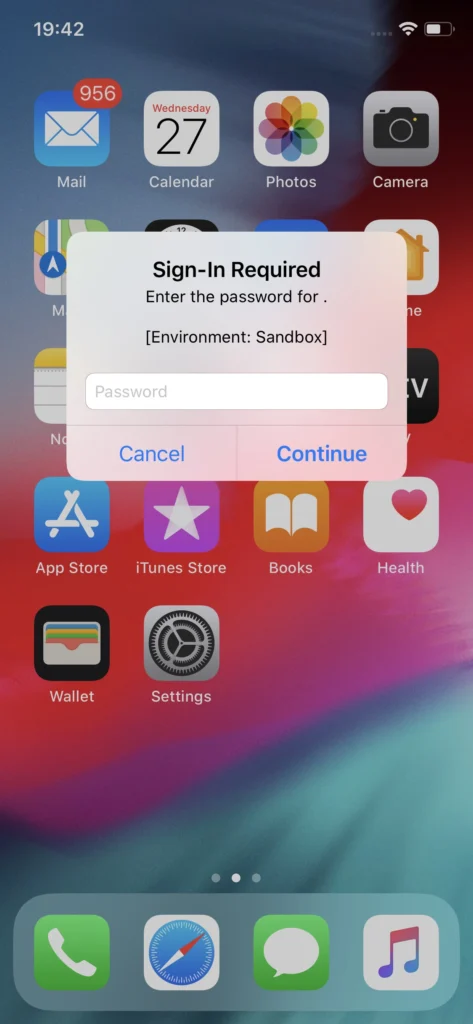

Another aspect of Sandbox testing that is inconvenient to work with, and not obvious to work around, is the irritating Sandbox login prompts. They occur randomly for the entirety of your app’s development cycle if the in-app purchase feature in the app under test includes auto-renewable subscriptions. What makes them problematic is that these login prompts pop up at any given time, not just when the app is in use or moved to the background. If you’re patient, you can learn to live with them and dismiss them when they show up. But problems may occur when the device used for testing the app also serves as a real device in automated tests, e.g. in conjunction with Appium.

This can be addressed by setting Appium properties in the testing framework to dismiss system popups automatically. That could prove somewhat helpful as long as the test suite doesn’t include any other interactions with system popups. Deleting the application that includes auto-renewable subscriptions from the device gets rid of the random Sandbox login prompts, but that’s not how testing works. Another workaround might be building the app with the subscription part removed, which requires additional work on the developers’ side. These login prompts are surely a major problem that Apple should address.
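As a rough illustration of the Appium approach, the sketch below builds a capabilities dictionary for the XCUITest driver with `appium:autoDismissAlerts` switched on. The helper name `build_ios_capabilities` and the UDID and bundle ID values are made up for this example, and whether the setting catches every Sandbox login prompt on a given iOS version is an assumption you would need to verify on your own devices.

```python
from typing import Any, Dict


def build_ios_capabilities(
    udid: str,
    bundle_id: str,
    dismiss_system_alerts: bool = True,
) -> Dict[str, Any]:
    """Build Appium capabilities for a real iOS device (hypothetical helper)."""
    capabilities: Dict[str, Any] = {
        "platformName": "iOS",
        "appium:automationName": "XCUITest",
        "appium:udid": udid,
        "appium:bundleId": bundle_id,
    }
    if dismiss_system_alerts:
        # Asks the XCUITest driver to dismiss system alerts as they appear.
        # This affects ALL system alerts, so leave it off for suites that
        # need to interact with permission dialogs or other popups.
        capabilities["appium:autoDismissAlerts"] = True
    return capabilities


# Placeholder identifiers, not real devices or apps.
caps = build_ios_capabilities("00008030-EXAMPLE", "com.example.shop")
```

Keeping the flag behind a parameter makes it easy to run the suites that deliberately exercise system popups with automatic dismissal turned off.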

Send reinforcements

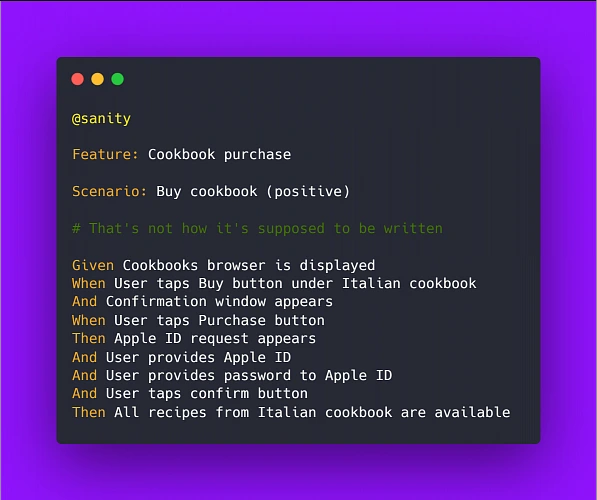

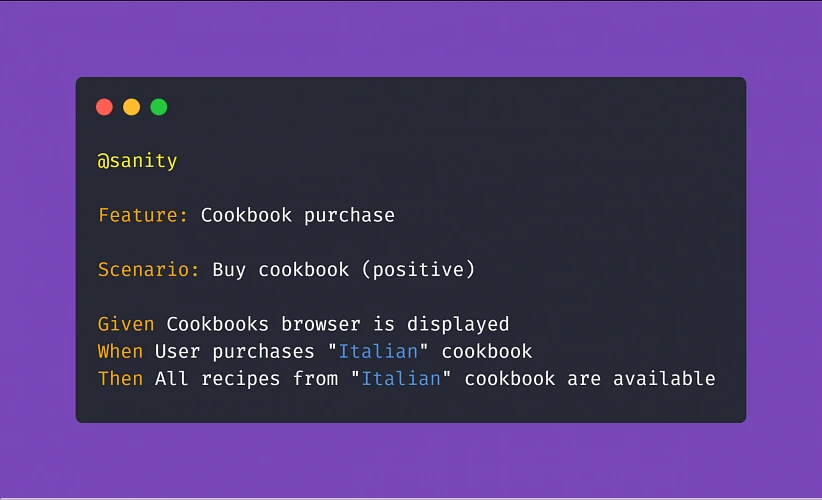

Despite all that, developers and testers alike can and eventually will get through the tedious process of developing in-app purchases in Apple’s ecosystem and ensuring their quality. A good tactic in manual testing is to work out a solid testing routine, which will allow for quicker troubleshooting. The key is to be cautious about each step in the testing scenario and to monitor the differences in the environment: whether you are logged in with the proper Sandbox account instead of a regular Apple ID, whether the app account is combined with the appropriate Sandbox account, and what state the app is in with regard to purchases (whether an in-app purchase has already been made within a particular installation or not). Only then can you tell whether the application does what is expected and whether transactions are successful.
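One way to make such a routine explicit is to write the environment checks down as data and verify them before every scenario. The sketch below is only an illustration: the `PurchaseTestSetup` fields and the `setup_problems` helper are hypothetical names, and how each value is obtained (by hand or from an automation framework) is left to the team.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PurchaseTestSetup:
    signed_in_store_account: Optional[str]  # account currently used for purchases on the device
    expected_sandbox_account: str           # Sandbox account this scenario should use
    app_account: str                        # the app's own account used in this scenario
    app_account_paired_with_sandbox: str    # app account this Sandbox account is meant to be paired with
    purchase_made_on_install: bool          # has a purchase already been made in this installation?
    scenario_expects_prior_purchase: bool   # does the scenario assume an existing purchase?


def setup_problems(setup: PurchaseTestSetup) -> List[str]:
    """Return human-readable mismatches; an empty list means the setup looks right."""
    problems: List[str] = []
    if setup.signed_in_store_account != setup.expected_sandbox_account:
        problems.append("device is not signed in with the expected Sandbox account")
    if setup.app_account != setup.app_account_paired_with_sandbox:
        problems.append("app account does not match the one paired with this Sandbox account")
    if setup.purchase_made_on_install != setup.scenario_expects_prior_purchase:
        problems.append("purchase state of this installation differs from what the scenario expects")
    return problems
```

Listing every mismatch rather than stopping at the first one gives the tester the full picture before a transaction is attempted.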

As Silicon Valley’s giant rises in the mobile market again, more and more ideas will be monetized in the App Store, making profits not only for developers but also directly for Apple, which collects a hefty portion of the money spent on apps and paid extras. Let’s hope that sooner rather than later Apple will address the issues that have been annoying development teams for years and make their jobs a bit easier.
