G.Tx - Agentic AI platform for application modernization at scale

The G.Tx Platform is purpose-built to combine Agentic AI with a proven methodology for modernizing complex legacy systems, delivering speed, confidence, and predictable outcomes.

G.Tx Methodology ensures confident modernization

Accelerate enterprise legacy transformation

G.Tx Platform delivers up to 70% automated code generation

Minimize transformation risks end-to-end

G.Tx ensures 100% logic preservation, automated testing, and validated deployment strategies

Transform with financial confidence

AI-driven G.Tx Feasibility Analysis helps to map your entire modernization journey – including accurate costs and deadlines

Shorten time to market

G.Tx accelerates the modernization of your product, enabling you to deliver new features to production at the speed of market changes

G.Tx Platform: The assembly line for legacy code transformation

G.Tx provides Solution Architects with tooling that enables them to design code transformation strategies based on pre-defined templates and AI-driven code development.

Pre-defined and proven transformation templates that significantly accelerate delivery timelines

Complete transparency and control over every step of the code transformation process

Enterprise-scale capabilities for handling large and complex system transformations

Knowledge sharing enablement that supports development teams throughout the modernization journey

Key features

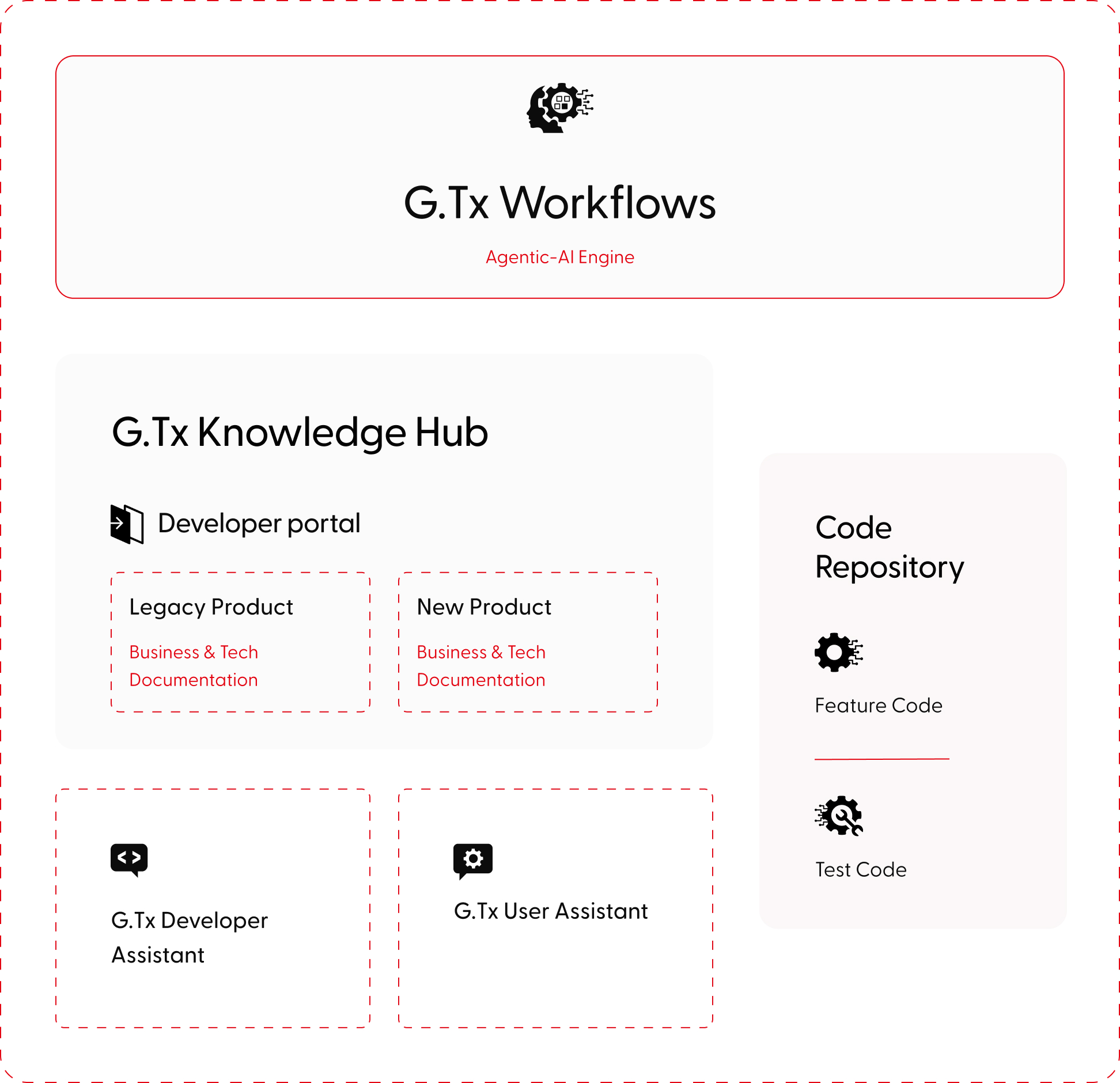

G.Tx workflows – agentic AI engine

Our Agentic AI engine automatically generates up to 70% of new code and 80% of test code, reducing manual effort while ensuring speed, consistency, and validated system behavior.

G.Tx transformation templates

Ready-to-use, proven G.Tx workflows designed for the most common code transformation scenarios (e.g., unit test generation).

G.Tx scaled transformation

G.Tx is engineered for complex, enterprise-grade transformations with bulk file processing and quick scaling to cover wider codebases without manual effort.

G.Tx Knowledge Hub

A Knowledge Hub with all project-related information, from the BRD through technical design to user guide instructions.

G.Tx Developer Assistant

A developer chatbot connected to the Project Knowledge Base, capable of answering developer questions about legacy code, design, and dependencies.

G.Tx User Assistant

A User Guide chatbot connected to the Project Knowledge Base, capable of explaining how the product works and how to operate it.

Working with G.Tx: Our proven modernization process

From understanding your legacy system to running modernized applications in production, our G.Tx Platform assists every step of transformation.

Understand

AI-powered deep analysis

Generate business & tech documentation from code

Design

Design transformation strategy

Model transformation for Agentic AI processing

Build

Generate & refine code

Agentic AI generates feature and test code automatically. A developer stays in the loop to verify and refine it.

Run

Phased rollout to production

Enterprise-grade data protection

Gain complete AI transparency and control by ensuring secure modernization through client-approved models, full process visibility, and expert code verification to eliminate hallucination errors.

Complete model control

Choose from various LLMs tailored to specific requirements, with the freedom to deploy on public or private infrastructure according to your enterprise governance standards.

Enterprise security & transparency

Retain complete authority over your data while benefiting from full visibility into every data flow. G.Tx ensures encrypted AI communications and collaborative data governance that aligns with your security standards.

Zero data training risk

Your sensitive data never trains AI models. G.Tx exclusively uses pre-approved models in full compliance with your enterprise data policies, ensuring complete data privacy.

Human-verified quality

G.Tx combines AI efficiency with human expertise: every piece of generated code is verified and refined by the engineering team. Smart validation suites ensure your transformed system behaves exactly like the original one.

Insights on AI-driven legacy modernization

Learn more about how we found a way to migrate smarter.

Challenges of the legacy migration process and best practices to mitigate them

Legacy software is the backbone of many organizations, but as technology advances, these systems can become more of a burden than a benefit. Migrating from a legacy system to a modern solution is a daunting task fraught with challenges, from grappling with outdated code and conflicting stakeholder interests to managing dependencies on third-party vendors and ensuring compliance with stringent regulatory standards.

However, with the right strategies and leveraging advanced technologies like Generative AI, these challenges can be effectively mitigated.

Challenge #1: Limited knowledge of the legacy solution

The average lifespan of business software can vary widely depending on several factors, such as the type of software or the industry it serves. Nevertheless, no matter if the software is 5 or 25 years old, it is highly possible its creators and subject matter experts are not accessible anymore (or they barely remember what they built and how it really works), the documentation is incomplete, the code messy and the technology forgotten a long time ago.

Lack of knowledge of the legacy solution not only blocks its further development and maintenance but also negatively affects its migration – it significantly slows down the analysis and replacement process.

Mitigation:

The only way to understand what kind of functionality, processes and dependencies are covered by the legacy software and what really needs to get migrated is in-depth analysis. An extensive discovery phase initiating every migration project should cover:

- interviews with the key users and knowledge keepers,

- observations of the employees and daily operations performed within the system,

- study of all the available documentation and resources,

- source code examination.

The discovery phase, although long (and boring!), demanding, and very costly, is crucial for the migration project’s success. Therefore, it is not recommended to give in to the temptation to take any shortcuts there.

At Grape Up, we do not. We make sure we learn the legacy software in detail, optimizing the analytical efforts at the same time. We support the discovery process by leveraging Generative AI tools. They help us to understand the legacy spaghetti code, forgotten purpose, dependencies, and limitations. GenAI enables us to make use of existing incomplete documentation or to go through technologies that nobody has expertise in anymore. This approach significantly speeds the discovery phase up, making it smoother and more efficient.

Challenge #2: Blurry idea of the target solution & conflicting interests

Unfortunately, understanding the legacy software and having a complete idea of the target replacement are two separate things. A decision to build a new solution, especially in a corporate environment, usually encourages multiple stakeholders (representing different groups of interests) to promote their visions and ideas. Often conflicting, to be precise.

This nonlinear stream of contradicting requirements leads to an uncontrollable growth of the product backlog, which becomes extremely difficult to manage and prioritize. In consequence, efficient decision-making (essential for the product’s success) is barely possible.

Mitigation:

A strong Product Management community with a single product leader, empowered to make decisions and respected by the entire organization, is the key factor here. Combined with a matching delivery model (which may vary depending on product and project specifics), it sets the goals and frames for the mission and guides its crew.

For huge legacy migration projects with a blurry scope, requiring constant validation and prioritization, an Agile-based, continuous discovery & delivery process is the only possible way to go. With a flexible product roadmap (adjusted on the fly), both creative and development teams work simultaneously, and regular feedback loops are established.

High pressure from stakeholders always makes the Product Leader’s job difficult. Bold scope decisions become easier when an MVP/MDP (Minimum Viable / Desirable Product) approach and the MoSCoW prioritization technique (must-have, should-have, could-have, and won't-have, or will not have right now) are in place.
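To make the MoSCoW idea concrete, here is a minimal sketch of how a backlog could be ordered and cut to an MVP scope. The item names, effort estimates, and the `plan_mvp` helper are hypothetical and only illustrate the technique; real prioritization involves far more than a sort.

```python
from dataclasses import dataclass

# MoSCoW categories in priority order (lower rank = higher priority).
MOSCOW_RANK = {"must": 0, "should": 1, "could": 2, "wont": 3}


@dataclass
class BacklogItem:
    title: str
    priority: str      # one of the MOSCOW_RANK keys
    effort_days: int   # hypothetical estimate


def plan_mvp(backlog, capacity_days):
    """Pick items in MoSCoW order while they fit the capacity.

    Won't-have items are never selected, regardless of capacity.
    """
    selected = []
    remaining = capacity_days
    # sorted() is stable, so items keep their backlog order within a category.
    for item in sorted(backlog, key=lambda i: MOSCOW_RANK[i.priority]):
        if item.priority == "wont":
            continue
        if item.effort_days <= remaining:
            selected.append(item)
            remaining -= item.effort_days
    return selected


backlog = [
    BacklogItem("Export to PDF", "could", 5),
    BacklogItem("User login", "must", 8),
    BacklogItem("Audit trail", "should", 6),
    BacklogItem("Legacy fax gateway", "wont", 10),
    BacklogItem("Order entry", "must", 10),
]
mvp = plan_mvp(backlog, capacity_days=25)
print([item.title for item in mvp])
```

With a capacity of 25 days, both must-haves and the should-have fit, while the could-have no longer does.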

At Grape Up, we assist our clients with establishing and maintaining efficient product & project governance, supporting the in-house management team with our experienced consultants such as Business Analysts, Scrum Masters, Project Managers, or Proxy Product Owners.

Challenge #3: Strategic decisions impacting the future

Migrating the legacy software gives the organization a unique opportunity to sunset outdated technologies, remove all the infrastructural pain points, reach out for modern solutions, and sketch a completely new architecture.

However, these are very heavy decisions. They must not only address the current needs but also be adaptable to future growth. Wrong choices can result in technical debt, forcing another costly migration – much sooner than planned.

Mitigation:

A careful evaluation of the current and future needs is a good starting point for drafting the first technical roadmap and architecture. Conducting a SWOT analysis (Strengths, Weaknesses, Opportunities, Threats) for potential technologies and infrastructural choices provides a balanced view, helping to identify the most suitable options that align with the organization's long-term plan. For Grape Up, one of the key aspects of such an analysis is always industry trends.

Another crucial factor that supports this difficult decision-making process is maintaining technical documentation through Architectural Decision Records (ADRs). ADRs capture the rationale behind key decisions, ensuring that all stakeholders understand the choices made regarding technologies, frameworks, or architectures. This documentation serves as a valuable reference for future decisions and discussions, helping to avoid repeating past mistakes or unnecessary changes (e.g. when a new architect joins the team and pushes for their own technical preferences).

Challenge #4: Dependencies and legacy third parties

When migrating from a legacy system, one of the significant challenges is managing dependencies on the numerous other applications and services that are integrated with the old solution and need to remain connected to the new one. Many of these are provided by third-party vendors that may not be willing or able to respond quickly to the project’s needs and adapt to changes, posing a significant risk to the migration process. Unfortunately, some of the dependencies are likely to be hidden and spotted too late, affecting the project’s budget and timeline.

Mitigation:

To mitigate this risk, it's essential to establish strong governance over third-party relationships before the project really begins. This includes forming solid partnerships and ensuring that clear contracts are in place, detailing the rules of cooperation and responsibilities. Prioritizing demands related to third-party integrations (such as API modifications, providing test environments, SLA, etc.), testing the connections early, and building time buffers into the migration plan are also crucial steps to reduce the impact of potential delays or issues.

Furthermore, leveraging Generative AI, which Grape Up does when migrating the legacy solution, can be a powerful tool in identifying and analyzing the complexities of these dependencies. Our consultants can also help to spot potential risks and suggest strategies to minimize disruptions, ensuring that third-party systems continue to function seamlessly during and after the migration.

Challenge #5: Lack of experience and sufficient resources

A legacy migration requires expertise and resources that most organizations lack internally. It is 100% natural. These kinds of tasks occur rarely; therefore, in most cases, owning a huge in-house IT department would be irrational.

Without prior experience in legacy migrations, internal teams may struggle with project initiation; for that reason, external support becomes necessary. Unfortunately, quite often, the involvement of vendors and contractors results in new challenges for the company by increasing its vulnerability (e.g., becoming dependent on externals, having data protection issues, etc.).

Mitigation:

To boost insufficient internal capabilities, it's essential to partner with experienced and trusted vendors who have a proven track record in legacy migrations. Their expertise can help navigate the complexities of the process while ensuring best practices are followed.

However, it's recommended to maintain a balance between internal and external resources to keep control over the project and avoid over-reliance on external parties. Involving multiple vendors can diversify the risk and prevent dependency on a single provider.

By leveraging Generative AI, Grape Up manages to optimize resource use, reducing the amount of manual work that consultants and developers do when migrating the legacy software. With a smaller external headcount involved, it is much easier for organizations to manage their projects and keep a healthy balance between their own resources and their partners.

Challenge #6: Budget and time pressure

Because of their size, complexity, and importance to the business, legacy migration projects commonly face budget constraints and time pressure. Resources are typically insufficient to cover all the requirements (that keep on growing), unexpected expenses (that always pop up), and the need to meet hard deadlines. These pressures can result in compromised quality, incomplete migrations, or even the entire project’s failure if not managed effectively.

Mitigation:

These are further challenges where strong governance and effective product ownership help. Implementing an iterative approach with a focus on delivering an MVP (Minimum Viable Product) or MDP (Minimum Desirable Product) can help prioritize essential features and manage scope within the available budget and time.

For tracking convenience, it is useful to budget each feature or part of the system separately. It’s also important to build realistic time and financial buffers and continuously update estimates as the project progresses to account for unforeseen issues. There are multiple quick and sufficiently accurate estimation methods (so-called “magic” estimation) that your team may use for that purpose, such as silent grouping.
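As a small worked example of budgeting each feature separately with a buffer, consider the sketch below. The feature names, amounts, and the 20% buffer are purely illustrative assumptions, not figures from any real project.

```python
def budget_with_buffer(estimates, buffer_percent):
    """Return per-feature budgets padded by buffer_percent, plus their total.

    Amounts are whole currency units, so integer arithmetic avoids
    floating-point rounding surprises.
    """
    budgets = {
        feature: cost * (100 + buffer_percent) // 100
        for feature, cost in estimates.items()
    }
    return budgets, sum(budgets.values())


# Hypothetical base estimates per feature.
estimates = {
    "data migration": 40_000,
    "order module": 60_000,
    "reporting": 25_000,
}
budgets, total = budget_with_buffer(estimates, buffer_percent=20)
print(budgets)
print(total)
```

Re-running the calculation whenever an estimate changes keeps the per-feature figures and the total in sync as the project progresses.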

As stated before, at Grape Up, we use Generative AI to reduce the workload on teams by analyzing the old solution and generating significant parts of the new one automatically. This helps to keep the project on track, even under tight budget and time constraints.

Challenge #7: Demanding validation process

A critical but typically disregarded and forgotten aspect of legacy migration is ensuring the new system meets not only all the business demands but also compliance, security, performance, and accessibility requirements. What if some of the implemented features appear to be illegal? Or our new system lets only a few concurrent users log in?

Without proper planning and continuous validation, these non-functional requirements can become major issues shortly before or after the release, putting the entire project at risk.

Mitigation:

Implementation of comprehensive validation, monitoring, and testing strategies from the project's early stages is a must. This should encompass both functional and non-functional requirements to ensure all aspects of the system are covered.

Validation must not be a one-time activity but rather a regular occurrence. It also needs to involve a broad range of stakeholders and experts, such as:

- representatives of different user groups (to verify if the system covers all the critical business functions and is adjusted to their specific needs – e.g. accessibility-related),

- the legal department (to examine whether all the planned features are legally compliant),

- quality assurance experts (to continuously perform all the necessary tests, including security and performance testing).

Prioritizing non-functional requirements, such as performance and security, is essential to prevent potential issues from undermining the project’s success. For each legacy migration, there are also individual, very project-specific dimensions of validation. At Grape Up, during the discovery phase our analysts empowered by GenAI take their time to recognize all the critical aspects of the new solution’s quality, proposing the right thresholds, testing tools, and validation methods.

Challenge #8: Data migration & rollout strategy

Migrating data from a legacy system is one of the most challenging tasks of a migration project, particularly when dealing with vast amounts of historical data accumulated over many years. It is complex and costly, requiring meticulous planning to avoid data loss, corruption, or inconsistency.

Additionally, the release of the new system can have a significant impact on customers, especially if not handled smoothly. The risk of encountering unforeseen issues during the rollout phase is high, which can lead to extended downtime, customer dissatisfaction, and a prolonged stabilization period.

Mitigation:

Firstly, it is essential to establish comprehensive data migration and rollout strategies early in the project. Perhaps migrating all historical data is not necessary? Selective migration can significantly reduce the complexity, cost, and time involved.

A base plan for the rollout is equally important to minimize customer impact. This includes careful scheduling of releases, thorough testing in staging environments that closely mimic production, and phased rollouts that allow for gradual transition rather than a big-bang approach.
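One common way to implement a phased rollout is to assign each user to a stable bucket and route only a growing percentage of buckets to the new system. The sketch below assumes users are identified by a string ID; the function names and the hash-based bucketing are illustrative choices, not a description of any specific product's rollout mechanism.

```python
import hashlib


def in_rollout(user_id: str, percent: int) -> bool:
    """Deterministically place a user in one of 100 buckets.

    Users in buckets below `percent` are served by the new system.
    The same user always lands in the same bucket, so raising
    `percent` only ever adds users to the new system.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    return bucket < percent


def route(user_id: str, percent: int) -> str:
    """Return which system should serve this user at the current phase."""
    return "new" if in_rollout(user_id, percent) else "legacy"


# Example phases: 5% of users first, then 25%, then everyone.
for phase_percent in (5, 25, 100):
    print(phase_percent, route("customer-42", phase_percent))
```

Because the bucketing is deterministic, a user who has already been moved to the new system is not bounced back to the legacy one when the percentage increases.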

At Grape Up, we strongly recommend investing in Continuous Integration and Continuous Delivery (CI/CD) pipelines that can streamline the release process, enabling automated testing, deployment, and quick iterations. Test automation ensures that any changes or fixes (that are always numerous when rolling out) are rapidly validated, reducing the risk of introducing new issues during subsequent releases.

Post-release, a hypercare phase is crucial to provide dedicated support and rapid response to any problems that arise. It involves close monitoring of the system’s performance, user feedback, and quick deployment of fixes as needed. By having a hypercare plan in place, the organization can ensure that any issues are addressed promptly, reducing the overall impact on customers and business operations.

Summary

Legacy migration is undoubtedly a complex and challenging process, but with careful planning, strong governance, and the right blend of internal and external expertise, it can be navigated successfully. By prioritizing critical aspects such as in-depth analysis, strategic decision-making, and robust validation processes, organizations can mitigate the risks involved and avoid common pitfalls.

Managing budgets and expenses effectively is crucial, as unforeseen costs can quickly escalate. Leveraging advanced technologies like Generative AI not only enhances the efficiency and accuracy of the migration process but also helps control costs by streamlining tasks and reducing the overall burden on resources.

At Grape Up, we understand the intricacies of legacy migration and are committed to helping our clients transition smoothly to modern solutions that support future growth and innovation. With the right strategies in place, your organization can move beyond the limitations of legacy systems, achieving a successful migration within budget while embracing a future of improved performance, scalability, and flexibility.

Modernizing legacy applications with generative AI: Lessons from R&D Projects

As digital transformation accelerates, modernizing legacy applications has become essential for businesses to stay competitive. The application modernization market size, valued at USD 21.32 billion in 2023, is projected to reach USD 74.63 billion by 2031 (1), reflecting the growing importance of updating outdated systems.

With 94% of business executives viewing AI as key to future success and 76% increasing their investments in Generative AI due to its proven value (2), it's clear that AI is becoming a critical driver of innovation. One key area where AI is making a significant impact is application modernization - an essential step for businesses aiming to improve scalability, performance, and efficiency.

Based on two projects conducted by our R&D team, we've seen firsthand how Generative AI can streamline the process of rewriting legacy systems.

Let’s start by discussing the importance of rewriting legacy systems and how GenAI-driven solutions are transforming this process.

Why re-write applications?

In the rapidly evolving software development landscape, keeping applications up-to-date with the latest programming languages and technologies is crucial. Rewriting applications to new languages and frameworks can significantly enhance performance, security, and maintainability. However, this process is often labor-intensive and prone to human error.

Generative AI offers a transformative approach to code translation by:

- leveraging advanced machine learning models to automate the rewriting process

- ensuring consistency and efficiency

- accelerating modernization of legacy systems

- facilitating cross-platform development and code refactoring

As businesses strive to stay competitive, adopting Generative AI for code translation becomes increasingly important. It enables them to harness the full potential of modern technologies while minimizing risks associated with manual rewrites.

Legacy systems, often built on outdated technologies, pose significant challenges in terms of maintenance and scalability. Modernizing legacy applications with Generative AI provides a viable solution for rewriting these systems into modern programming languages, thereby extending their lifespan and improving their integration with contemporary software ecosystems.

This automated approach not only preserves core functionality but also enhances performance and security, making it easier for organizations to adapt to changing technological landscapes without the need for extensive manual intervention.

Why Generative AI?

Generative AI offers a powerful solution for rewriting applications, providing several key benefits that streamline the modernization process.

Modernizing legacy applications with Generative AI proves especially beneficial in this context for the following reasons:

- Identifying relationships and business rules: Generative AI can analyze legacy code to uncover complex dependencies and embedded business rules, ensuring critical functionalities are preserved and enhanced in the new system.

- Enhanced accuracy: Automating tasks like code analysis and documentation, Generative AI reduces human errors and ensures precise translation of legacy functionalities, resulting in a more reliable application.

- Reduced development time and cost: Automation significantly cuts down the time and resources needed for rewriting systems. Faster development cycles and fewer human hours required for coding and testing lower the overall project cost.

- Improved security: Generative AI aids in implementing advanced security measures in the new system, reducing the risk of threats and identifying vulnerabilities, which is crucial for modern applications.

- Performance optimization: Generative AI enables the creation of optimized code from the start, integrating advanced algorithms that improve efficiency and adaptability, often missing in older systems.

By leveraging Generative AI, organizations can achieve a smooth transition to modern system architectures, ensuring substantial returns in performance, scalability, and maintenance costs.

In this article, we will explore:

- the use of Generative AI for rewriting a simple CRUD application

- the use of Generative AI for rewriting a microservice-based application

- the challenges associated with using Generative AI

For these case studies, we used OpenAI's ChatGPT-4 with a context of 32k tokens to automate the rewriting process, demonstrating its advanced capabilities in understanding and generating code across different application architectures.

We'll also present the benefits of using a data analytics platform designed by Grape Up's experts. The platform utilizes Generative AI and neural graphs to enhance its data analysis capabilities, particularly in data integration, analytics, visualization, and insights automation.

Project 1: Simple CRUD application

The source CRUD project served as an example of a simple CRUD application: one written with .NET Core as the framework, Entity Framework Core as the ORM, and SQL Server as the relational database. The target project contains a backend application created using Java 17 and Spring Boot 3.

Steps taken to conclude the project

Rewriting a simple CRUD application using Generative AI involves a series of methodical steps to ensure a smooth transition from the old codebase to the new one. Below are the key actions undertaken during this process:

- initial architecture and data flow investigation - conducting a thorough analysis of the existing application's architecture and data flow.

- generating target application skeleton - creating the initial skeleton of the new application in the target language and framework.

- converting components - translating individual components from the original codebase to the new environment, ensuring that all CRUD operations were accurately replicated.

- generating tests - creating automated tests for the backend to ensure functionality and reliability.

Throughout each step, some manual intervention by developers was required to address code errors, compilation issues, and other problems encountered after using OpenAI's tools.

Initial architecture and data flow investigation

The first stage in rewriting a simple CRUD application using Generative AI is to conduct a thorough investigation of the existing architecture and data flow. This foundational step is crucial for understanding the current system's structure, dependencies, and business logic.

This involved:

- codebase analysis

- data flow mapping – from user inputs to database operations and back

- dependency identification

- business logic extraction – documenting the core business logic embedded within the application

While OpenAI's ChatGPT-4 is powerful, it has some limitations when dealing with large inputs or generating comprehensive explanations of entire projects. For example:

- OpenAI couldn’t read files directly from the file system

- Inputting several project files at once often resulted in unclear or overly general outputs

However, OpenAI excels at explaining large pieces of code or individual components. This capability aids in understanding the responsibilities of different components and their data flows. Despite this, developers had to conduct detailed investigations and analyses manually to ensure a complete and accurate understanding of the existing system.
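Since feeding many files at once gave vague results while individual components were explained well, one practical approach is to group files into small batches that stay within a token budget. The sketch below is an assumption about how such batching could look: the four-characters-per-token estimate is a rough heuristic, and the file names and budget are hypothetical. It makes no calls to any model API.

```python
def estimate_tokens(text: str) -> int:
    """Rough heuristic (assumption): about 4 characters per token."""
    return max(1, len(text) // 4)


def batch_by_budget(files: dict[str, str], budget: int) -> list[list[str]]:
    """Group file names into batches whose estimated tokens fit the budget.

    A single file larger than the budget still gets its own batch,
    so it can be handled (or split further) separately.
    """
    batches: list[list[str]] = []
    current: list[str] = []
    used = 0
    for name, source in files.items():
        cost = estimate_tokens(source)
        # Start a new batch when adding this file would exceed the budget.
        if current and used + cost > budget:
            batches.append(current)
            current, used = [], 0
        current.append(name)
        used += cost
    if current:
        batches.append(current)
    return batches


# Hypothetical component files with placeholder contents.
files = {
    "OrdersController.cs": "x" * 400,   # ~100 tokens
    "OrdersService.cs": "x" * 400,      # ~100 tokens
    "OrdersRepository.cs": "x" * 800,   # ~200 tokens
}
print(batch_by_budget(files, budget=250))
```

In practice the budget would be set well below the model's context limit to leave room for the prompt text and the generated answer.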

This is the point at which we used our data analytics platform. In comparison to OpenAI, it focuses on data analysis. It's especially useful for analyzing data flows and project architecture, particularly thanks to its ability to process and visualize complex datasets. While it does not directly analyze source code, it can provide valuable insights into how data moves through a system and how different components interact.

Moreover, the platform excels at visualizing and analyzing data flows within your application. This can help identify inefficiencies, bottlenecks, and opportunities for optimization in the architecture.

Generating target application skeleton

Just as OpenAI could not analyze the entire project, its attempt to generate the skeleton of the target application was also unsuccessful, so the developer had to create it manually. To facilitate this, Spring Initializr was used with the following configuration:

- Java: 17

- Spring Boot: 3.2.2

- Gradle: 8.5

Attempts to query OpenAI for the necessary Spring dependencies faced challenges due to significant differences between dependencies for C# and Java projects. Consequently, all required dependencies were added manually.

Additionally, the project included a database setup. While OpenAI provided a series of steps for adding database configuration to a Spring Boot application, these steps needed to be verified and implemented manually.

Converting components

After setting up the backend, the next step involved converting all project files - Controllers, Services, and Data Access layers - from C# to Java Spring Boot using OpenAI.

The AI proved effective in converting endpoints and data access layers, producing accurate translations with only minor errors, such as misspelled function names or calls to non-existent functions.

In cases where non-existent functions were generated, OpenAI was able to create the function bodies based on prompts describing their intended functionality. Additionally, OpenAI efficiently generated documentation for classes and functions.

However, it faced challenges when converting components with extensive framework-specific code. Due to differences between frameworks in various languages, the AI sometimes lost context and produced unusable code.

Overall, OpenAI excelled at:

- converting data access components

- generating REST APIs

However, it struggled with:

- service-layer components

- framework-specific code where direct mapping between programming languages was not possible

Despite these limitations, OpenAI significantly accelerated the conversion process, although manual intervention was required to address specific issues and ensure high-quality code.

Generating tests

Generating tests for the new code is a crucial step in ensuring the reliability and correctness of the rewritten application. This involves creating both unit tests and integration tests to validate individual components and their interactions within the system.

To create a new test, the entire component code was passed to OpenAI with the query: "Write Spring Boot test class for selected code."

OpenAI performed well at generating both integration tests and unit tests; however, there were some distinctions:

- For unit tests, OpenAI generated a new test for each if-clause in the method under test by default.

- For integration tests, only happy-path scenarios were generated with the given query.

- Error scenarios could also be generated by OpenAI, but these required more manual fixes due to a higher number of code issues.

When the test name was self-descriptive, OpenAI generated unit tests with fewer errors.
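The test-generation query described in this section can be assembled per component with a few lines of code. This is only a sketch: the `build_test_prompt` helper and the wording of the optional error-scenario instruction are assumptions, and no model API is called here.

```python
# The query quoted in the text above.
QUERY = "Write Spring Boot test class for selected code."

# Hypothetical extra instruction for requesting error scenarios;
# the exact wording used in the project is not known.
ERROR_SCENARIOS_HINT = "Also cover error scenarios, not only the happy path."


def build_test_prompt(component_source: str, include_error_scenarios: bool = False) -> str:
    """Combine the query with the full source of one component."""
    parts = [QUERY]
    if include_error_scenarios:
        parts.append(ERROR_SCENARIOS_HINT)
    parts.append(component_source)
    return "\n\n".join(parts)


# Placeholder component source, for illustration only.
sample = "public class OrderService { /* ... */ }"
print(build_test_prompt(sample))
```

Keeping the prompt to a single component matches the observation that the model handled individual components better than several files at once.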

Project 2: Microservice-based application

As an example of a microservice-based application, we used the source microservice project: an application built using .NET Core as the framework, Entity Framework Core as the ORM, and a Command Query Responsibility Segregation (CQRS) approach for managing and querying entities. RabbitMQ was used to implement the CQRS approach and EventStore to store events and entity objects. Each microservice could be built using Docker, with docker-compose managing the dependencies between microservices and running them together.

The target project includes:

- a microservice-based backend application created with Java 17 and Spring Boot 3

- a frontend application using the React framework

- Docker support for each microservice

- docker-compose to run all microservices at once

Project stages

Similarly to the CRUD application rewriting project, converting a microservice-based application using Generative AI requires a series of steps to ensure a seamless transition from the old codebase to the new one. Below are the key steps undertaken during this process:

- investigating the initial architecture and data flows - conducting a thorough analysis of the existing application's architecture and data flow.

- rewriting backend microservices - selecting an appropriate framework for implementing CQRS in Java, setting up a microservice skeleton, and translating the core business logic from the original language to Java Spring Boot.

- generating a new frontend application - developing a new frontend application using React to communicate with the backend microservices via REST APIs.

- generating tests for the frontend application - creating unit tests and integration tests to validate its functionality and interactions with the backend.

- containerizing new applications - generating Docker files for each microservice and a docker-compose file to manage the deployment and orchestration of the entire application stack.

Throughout each step, developers were required to intervene manually to address code errors, compilation issues, and other problems encountered after using OpenAI's tools. This approach ensured that the new application retained the functionality and reliability of the original system while leveraging modern technologies and best practices.

Investigating the initial architecture and data flows

The first step in converting a microservice-based application using Generative AI is to conduct a thorough investigation of the existing architecture and data flows. This foundational step is crucial for understanding:

- the system’s structure

- its dependencies

- interactions between microservices

Challenges with OpenAI

Similar to the process for a simple CRUD application, at the time, OpenAI struggled with larger inputs and failed to generate a comprehensive explanation of the entire project. Attempts to describe the project or its data flows were unsuccessful because inputting several project files at once often resulted in unclear and overly general outputs.

OpenAI’s strengths

Despite these limitations, OpenAI proved effective in explaining large pieces of code or individual components. This capability helped in understanding:

- the responsibilities of different components

- their respective data flows

Developers can create a comprehensive blueprint for the new application by thoroughly investigating the initial architecture and data flows. This step ensures that all critical aspects of the existing system are understood and accounted for, paving the way for a successful transition to a modern microservice-based architecture using Generative AI.

Again, our data analytics platform was used in project architecture analysis. By identifying integration points between different application components, the platform helps ensure that the new application maintains necessary connections and data exchanges.

It can also provide a comprehensive view of your current architecture, highlighting interactions between different modules and services. This aids in planning the new architecture for efficiency and scalability. Furthermore, the platform's analytics capabilities support identifying potential risks in the rewriting process.

Rewriting backend microservices

Rewriting the backend of a microservice-based application involves several intricate steps, especially when working with specific architectural patterns like CQRS (Command Query Responsibility Segregation) and event sourcing. The source C# project uses the CQRS approach, implemented with frameworks such as NServiceBus and Aggregates, which facilitate message handling and event sourcing in the .NET ecosystem.

Challenges with OpenAI

Unfortunately, OpenAI struggled with converting framework-specific logic from C# to Java. When asked to convert components using NServiceBus, OpenAI responded:

"The provided C# code is using NServiceBus, a service bus for .NET, to handle messages. In Java Spring Boot, we don't have an exact equivalent of NServiceBus, but here's how you might convert the given C# code to Java Spring Boot..."

However, the generated code did not adequately cover the CQRS approach or event-sourcing mechanisms.
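For readers less familiar with the pattern that had no direct mapping, here is a framework-free sketch of CQRS with event sourcing in Java 17. It uses neither the NServiceBus nor the Axon API. The banking-style names (`DepositMoney`, `MoneyDeposited`) and the in-memory store are assumptions made only for illustration.

```java
import java.util.ArrayList;
import java.util.List;

class CqrsEventSourcingSketch {
    // Command: an intent to change state (write side). Hypothetical example.
    record DepositMoney(String accountId, long amountInCents) {}

    // Event: a fact that has already happened. Events are stored, not the current state.
    record MoneyDeposited(String accountId, long amountInCents) {}

    // Append-only, in-memory stand-in for a real event store.
    static final class InMemoryEventStore {
        private final List<MoneyDeposited> events = new ArrayList<>();

        void append(MoneyDeposited event) {
            events.add(event);
        }

        List<MoneyDeposited> eventsFor(String accountId) {
            return events.stream()
                    .filter(e -> e.accountId().equals(accountId))
                    .toList();
        }
    }

    // Write side: validates the command and records the resulting event.
    static final class CommandHandler {
        private final InMemoryEventStore store;

        CommandHandler(InMemoryEventStore store) {
            this.store = store;
        }

        void handle(DepositMoney command) {
            if (command.amountInCents() <= 0) {
                throw new IllegalArgumentException("amount must be positive");
            }
            store.append(new MoneyDeposited(command.accountId(), command.amountInCents()));
        }
    }

    // Read side: derives the answer by replaying events.
    // A real system would usually keep a separately updated read model instead.
    static final class BalanceQuery {
        private final InMemoryEventStore store;

        BalanceQuery(InMemoryEventStore store) {
            this.store = store;
        }

        long balanceOf(String accountId) {
            return store.eventsFor(accountId).stream()
                    .mapToLong(MoneyDeposited::amountInCents)
                    .sum();
        }
    }

    public static void main(String[] args) {
        InMemoryEventStore store = new InMemoryEventStore();
        CommandHandler commands = new CommandHandler(store);
        commands.handle(new DepositMoney("acc-1", 500));
        commands.handle(new DepositMoney("acc-1", 250));
        System.out.println(new BalanceQuery(store).balanceOf("acc-1"));
    }
}
```

The separation between the command handler, the stored events, and the query is the part that a line-by-line translation tends to miss, because in the source project it lives in framework conventions rather than in the business code.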

Choosing Axon framework

Due to these limitations, developers needed to investigate suitable Java frameworks. After thorough research, the Axon Framework was selected, as it offers comprehensive support for:

- domain-driven design

- CQRS

- event sourcing

Moreover, Axon provides out-of-the-box solutions for message brokering and event handling and has a Spring Boot integration library, making it a popular choice for building Java microservices based on CQRS.

Converting microservices

Each microservice from the source project could be converted to Java Spring Boot using a systematic approach, similar to converting a simple CRUD application. The process included:

- analyzing the data flow within each microservice to understand interactions and dependencies

- using Spring Initializr to create the initial skeleton for each microservice

- translating the core business logic, API endpoints, and data access layers from C# to Java

- creating unit and integration tests to validate each microservice’s functionality

- setting up the event sourcing mechanism and CQRS using the Axon Framework, including configuring Axon components and repositories for event sourcing

Manual intervention

Due to the lack of direct mapping between the source project's CQRS framework and the Axon Framework, manual intervention was necessary. Developers had to implement framework-specific logic manually to ensure the new system retained the original's functionality and reliability.

Generating a new frontend application

The source project included a frontend component written using aspnetcore-https and aspnetcore-react libraries, allowing for the development of frontend components in both C# and React.

However, OpenAI struggled to convert this mixed codebase into a React-only application due to the extensive use of C#.

Consequently, it proved faster and more efficient to generate a new frontend application from scratch, leveraging the existing REST endpoints on the backend.

Similar to the process for a simple CRUD application, when prompted with “Generate React application which is calling a given endpoint”, OpenAI provided a series of steps to create a React application from a template and offered sample code for the frontend.

- OpenAI successfully generated React components for each endpoint

- The CSS files from the source project were reusable in the new frontend to maintain the same styling of the web application.

- However, the overall structure and architecture of the frontend application remained the developer's responsibility.

Despite its capabilities, OpenAI-generated components often exhibited issues such as:

- mixing up code from different React versions, leading to code failures.

- infinite rendering loops.

Additionally, there were challenges related to CORS policy and web security:

- OpenAI could not resolve CORS issues autonomously but provided explanations and possible steps for configuring CORS policies on both the backend and frontend

- It was unable to configure web security correctly.

- Moreover, since web security involves configurations on the frontend and multiple backend services, OpenAI could only suggest common patterns and approaches for handling these cases, which ultimately required manual intervention.
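As background for the CORS issues mentioned above, the sketch below shows what a backend's CORS policy ultimately decides: which response headers to send for a given request origin. It is plain Java and is not Spring configuration; in a Spring Boot service the policy would normally be declared through the framework's own CORS support. The origins, methods, and header lists are assumptions made for illustration.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;

class CorsPolicySketch {
    // Returns the CORS response headers for a request origin.
    // An empty map means no CORS headers are sent, so the browser
    // refuses to expose the response to the calling page.
    static Map<String, String> corsHeadersFor(String requestOrigin, Set<String> allowedOrigins) {
        Map<String, String> headers = new LinkedHashMap<>();
        if (requestOrigin == null || !allowedOrigins.contains(requestOrigin)) {
            return headers;
        }
        // Echo the specific origin rather than "*" so credentials remain possible.
        headers.put("Access-Control-Allow-Origin", requestOrigin);
        // Tell caches that the response depends on the Origin request header.
        headers.put("Vary", "Origin");
        // Sent in answer to preflight (OPTIONS) requests. Values are illustrative.
        headers.put("Access-Control-Allow-Methods", "GET, POST, PUT, DELETE, OPTIONS");
        headers.put("Access-Control-Allow-Headers", "Content-Type, Authorization");
        return headers;
    }

    public static void main(String[] args) {
        // Hypothetical frontend origin of a locally running React application.
        Set<String> allowed = Set.of("http://localhost:3000");
        System.out.println(corsHeadersFor("http://localhost:3000", allowed));
        System.out.println(corsHeadersFor("http://evil.example", allowed));
    }
}
```

In a microservice setup, every backend service that the browser calls directly has to apply a consistent policy, which is one reason this kind of configuration could not be generated for a single file in isolation.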

Generating tests for the frontend application

Once the frontend components were completed, the next task was to generate tests for these components. OpenAI proved to be quite effective in this area. When provided with the component code, OpenAI could generate simple unit tests using the Jest library.

OpenAI was also capable of generating integration tests for the frontend application, which are crucial for verifying that different components work together as expected and that the application interacts correctly with backend services.

However, some manual intervention was required to fix issues in the generated test code. The common problems encountered included:

- mixing up code from different React versions, leading to code failures.

- dependency management conflicts, such as mixing up code from different test libraries.

Containerizing new application

The source application contained Dockerfiles that built images for C# applications. OpenAI successfully converted these Dockerfiles to a new approach using Java 17, Spring Boot, and Gradle build tools by responding to the query:

"Could you convert selected code to run the same application but written in Java 17 Spring Boot with Gradle and Docker?"

Some manual updates, however, were needed to fix the actual jar name and file paths.

Once the React frontend application was implemented, OpenAI was able to generate a Dockerfile by responding to the query:

"How to dockerize a React application?"

Still, manual fixes were required to:

- replace paths to files and folders

- correct mistakes that emerged when generating multi-stage Dockerfiles

While OpenAI was effective in converting individual Dockerfiles, it struggled with writing docker-compose files due to a lack of context regarding all services and their dependencies.

For instance, some microservices depend on database services, and OpenAI could not fully understand these relationships. As a result, the docker-compose file required significant manual intervention.
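The relationships that were missing from the model's context can be viewed as a dependency graph: each service lists what must be running before it starts. The sketch below derives a valid startup order from such a graph in plain Java. It does not generate a docker-compose file, and the service names are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

class ComposeStartupOrderSketch {
    // Returns the services ordered so that every dependency precedes its dependents.
    static List<String> startupOrder(Map<String, List<String>> dependsOn) {
        List<String> order = new ArrayList<>();
        Set<String> done = new HashSet<>();
        Set<String> inProgress = new HashSet<>();
        for (String service : dependsOn.keySet()) {
            visit(service, dependsOn, done, inProgress, order);
        }
        return order;
    }

    // Depth-first traversal: start all dependencies first, then the service itself.
    private static void visit(String service, Map<String, List<String>> dependsOn,
                              Set<String> done, Set<String> inProgress, List<String> order) {
        if (done.contains(service)) {
            return;
        }
        if (!inProgress.add(service)) {
            throw new IllegalStateException("circular dependency involving " + service);
        }
        for (String dependency : dependsOn.getOrDefault(service, List.of())) {
            visit(dependency, dependsOn, done, inProgress, order);
        }
        inProgress.remove(service);
        done.add(service);
        order.add(service);
    }

    public static void main(String[] args) {
        // Hypothetical services; a database and a message broker must start first.
        Map<String, List<String>> dependsOn = new LinkedHashMap<>();
        dependsOn.put("orders-service", List.of("orders-db", "rabbitmq"));
        dependsOn.put("frontend", List.of("orders-service"));
        System.out.println(startupOrder(dependsOn));
    }
}
```

Writing this graph down explicitly, by hand or from the original docker-compose file, and including it in the prompt is one way to supply the cross-service context that a single Dockerfile does not carry.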

Conclusion

Modern tools like OpenAI's ChatGPT can significantly enhance software development productivity by automating various aspects of code writing and problem-solving. Large language models, such as the OpenAI models behind ChatGPT, can help generate large pieces of code, solve problems, and streamline certain tasks.

However, for complex projects based on microservices and specialized frameworks, developers still need to do considerable work manually, particularly in areas related to architecture, framework selection, and framework-specific code writing.

What Generative AI is good at:

- converting pieces of code from one language to another - Generative AI excels at translating individual code snippets between different programming languages, making it easier to migrate specific functionalities.

- generating large pieces of new code from scratch - OpenAI can generate substantial portions of new code, providing a solid foundation for further development.

- generating unit and integration tests - OpenAI is proficient in creating unit tests and integration tests, which are essential for validating the application's functionality and reliability.

- describing what code does - Generative AI can effectively explain the purpose and functionality of given code snippets, aiding in understanding and documentation.

- investigating code issues and proposing possible solutions - Generative AI can quickly analyze code issues and suggest potential fixes, speeding up the debugging process.

- containerizing application - OpenAI can create Dockerfiles for containerizing applications, facilitating consistent deployment environments.

At the time of project implementation, Generative AI still had several limitations:

- OpenAI struggled to provide comprehensive descriptions of an application's overall architecture and data flow, which are crucial for understanding complex systems.

- It also had difficulty identifying equivalent frameworks when migrating applications, requiring developers to conduct manual research.

- Setting up the foundational structure for microservices and configuring databases were tasks that still required significant developer intervention.

- Additionally, OpenAI struggled with managing dependencies, configuring web security (including CORS policies), and establishing a proper project structure, often needing manual adjustments to ensure functionality.

Benefits of using the data analytics platform:

- data flow visualization: It provides detailed visualizations of data movement within applications, helping to map out critical pathways and dependencies that need attention during re-writing.

- architectural insights: The platform offers a comprehensive analysis of system architecture, identifying interactions between components to aid in designing an efficient new structure.

- integration mapping: It highlights integration points with other systems or components, ensuring that necessary integrations are maintained in the re-written application.

- risk assessment: The platform's analytics capabilities help identify potential risks in the transition process, allowing for proactive management and mitigation.

By leveraging Generative AI’s strengths and addressing its limitations through manual intervention, developers can achieve a more efficient and accurate transition to modern programming languages and technologies. This hybrid approach to modernizing legacy applications with Generative AI currently ensures that the new application retains the functionality and reliability of the original system while benefiting from the advancements in modern software development practices.

It's worth remembering that Generative AI technologies are rapidly advancing, with improvements in processing capabilities. As Generative AI becomes more powerful, it is increasingly able to understand and manage complex project architectures and data flows. This evolution suggests that in the future, it will play a pivotal role in rewriting projects.

Do you need support in modernizing your legacy systems with expert-driven solutions?


Sources:

- https://www.verifiedmarketresearch.com/product/application-modernization-market/

- https://www2.deloitte.com/content/dam/Deloitte/us/Documents/deloitte-analytics/us-ai-institute-state-of-ai-fifth-edition.pdf

LLM comparison: Find the best fit for legacy system rewrites

Legacy systems often struggle with performance, are vulnerable to security issues, and are expensive to maintain. Despite these challenges, over 65% of enterprises still rely on them for critical operations.

At the same time, modernization is becoming a pressing business need, with the application modernization services market valued at $17.8 billion in 2023 and expected to grow at a CAGR of 16.7%.

This growth highlights a clear trend: businesses recognize the need to update outdated systems to keep pace with industry demands.

The journey toward modernization varies widely. While 75% of organizations have started modernization projects, only 18% have reached a state of continuous improvement.

Data source: https://www.redhat.com/en/resources/app-modernization-report

For many, the process remains challenging, with a staggering 74% of companies failing to complete their legacy modernization efforts. Security and efficiency are the primary drivers, with over half of surveyed companies citing these as key motivators.

Given these complexities, the question arises: Could Generative AI simplify and accelerate this process?

With the surging adoption rates of AI technology, it’s worth exploring if Generative AI has a role in rewriting legacy systems.

This article compares several LLMs, evaluating the strengths, weaknesses, and potential risks of each GenAI tool. The decision to use them ultimately lies with you.

Here's what we'll discuss:

- Why Generative AI?

- The research methodology

- Generative AI tools: six contenders for LLM comparison

- OpenAI's ChatGPT backed by GPT-4o

- Claude-3-sonnet

- Claude-3-opus

- Claude-3-haiku

- Gemini 1.5 Flash

- Gemini 1.5 Pro

- Comparison summary

Why Generative AI?

Traditionally, updating outdated systems has been a labor-intensive and error-prone process. Generative AI offers a solution by automating code translation, ensuring consistency and efficiency. This accelerates the modernization of legacy systems and supports cross-platform development and refactoring.

As businesses aim to remain competitive, using Generative AI for code transformation is crucial, allowing them to fully use modern technologies while reducing manual rewrite risks.

Here are key reasons to consider its use:

- Uncovering dependencies and business logic - Generative AI can dissect legacy code to reveal dependencies and embedded business logic, ensuring essential functionalities are retained and improved in the updated system.

- Decreased development time and expenses - automation drastically reduces the time and resources required for system re-writing. Quicker development cycles and fewer human hours needed for coding and testing decrease the overall project cost.

- Consistency and accuracy - manual code translation is prone to human error. AI models ensure consistent and accurate code conversion, minimizing bugs and enhancing reliability.

- Optimized performance - Generative AI facilitates the creation of optimized code from the beginning, incorporating advanced algorithms that enhance efficiency and adaptability, often lacking in older systems.

The LLM comparison research methodology

Comparing Generative AI models with each other can be difficult because it is hard to find criteria that apply to all available tools. Some are web-based, some are restricted to a specific IDE, some offer a “chat” feature, and others only propose code.

As our goal was the re-writing of existing projects, we aimed to create an LLM comparison based on the following six main challenges while working with existing code:

- Analyzing project architecture - understanding the architecture is crucial for maintaining the system's integrity during re-writing. It ensures the new code aligns with the original design principles and system structure.

- Analyzing data flows - proper analysis of data flows is essential to ensure that data is processed correctly and efficiently in the re-written application. This helps maintain functionality and performance.

- Generating historical backlog - this involves querying the Generative AI to create Jira (or any other tracking system) tickets that could potentially be used to rebuild the system from scratch. The aim is to replicate the workflow of the initial project implementation. These "tickets" should include component descriptions and acceptance criteria.

- Converting code from one programming language to another - language conversion is often necessary to leverage modern technologies. Accurate translation preserves functionality and enables integration with contemporary systems.

- Generating new code - the ability to generate new code, such as test cases or additional features, is important for enhancing the application's capabilities and ensuring comprehensive testing.

- Privacy and security of a Generative AI tool - businesses are concerned about sharing their source codebase with the public internet. Therefore, work with Generative AI must occur in an isolated environment to protect sensitive data.

Source projects overview

To test the capabilities of Generative AI, we used two projects:

- Simple CRUD application - The project utilizes .NET Core as its framework, with Entity Framework Core serving as the ORM and SQL Server as the relational database. The target application is a backend system built with Java 17 and Spring Boot 3.

- Microservice-based application - The application is developed with .NET Core as its framework, Entity Framework Core as the ORM, and the Command Query Responsibility Segregation (CQRS) pattern for handling entity operations. The target system includes a microservice-based backend built with Java 17 and Spring Boot 3, alongside a frontend developed using the React framework.

Generative AI tools: six contenders for LLM comparison

In this article, we will compare six different Generative AI tools used in these example projects:

- OpenAI's ChatGPT backed by GPT-4o - context of 128k tokens

- Claude-3-sonnet - context of 200k tokens

- Claude-3-opus - context of 200k tokens

- Claude-3-haiku - context of 200k tokens

- Gemini 1.5 Flash - context of 1M tokens

- Gemini 1.5 Pro - context of 2M tokens
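The context sizes listed above determine how much source code can be passed to each tool in a single prompt. The sketch below gives a rough fit check in Java. The window sizes are taken from the list; the four-characters-per-token ratio is only a common rule of thumb and an assumption here, since real token counts depend on each model's tokenizer.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class ContextWindowSketch {
    // Context sizes in tokens, as listed in the article.
    static final Map<String, Integer> CONTEXT_TOKENS = new LinkedHashMap<>();

    static {
        CONTEXT_TOKENS.put("GPT-4o", 128_000);
        CONTEXT_TOKENS.put("Claude-3-sonnet", 200_000);
        CONTEXT_TOKENS.put("Claude-3-opus", 200_000);
        CONTEXT_TOKENS.put("Claude-3-haiku", 200_000);
        CONTEXT_TOKENS.put("Gemini 1.5 Flash", 1_000_000);
        CONTEXT_TOKENS.put("Gemini 1.5 Pro", 2_000_000);
    }

    // ASSUMPTION: roughly 4 characters per token. Treat the result as an estimate only.
    static long estimateTokens(long characterCount) {
        return (characterCount + 3) / 4;
    }

    // Names of the models whose context window can hold the estimated input.
    static List<String> modelsThatFit(long characterCount) {
        long tokens = estimateTokens(characterCount);
        List<String> fitting = new ArrayList<>();
        for (Map.Entry<String, Integer> entry : CONTEXT_TOKENS.entrySet()) {
            if (tokens <= entry.getValue()) {
                fitting.add(entry.getKey());
            }
        }
        return fitting;
    }

    public static void main(String[] args) {
        // A hypothetical codebase of 600,000 characters, about 150,000 estimated tokens.
        System.out.println(modelsThatFit(600_000));
    }
}
```

The prompt text and the generated answer also consume tokens from the same window, so in practice some headroom is needed beyond the size of the source files.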

OpenAI

OpenAI's ChatGPT-4o represents an advanced language model that showcases the leading edge of artificial intelligence technology. Known for its conversational prowess and ability to manage extensive contexts, it offers great potential for explaining and generating code.

- Analyzing project architecture

ChatGPT faces challenges in analyzing project architecture due to its abstract nature and the high-level understanding required. The model struggles with grasping the full context and intricacies of architectural design, as it lacks the ability to comprehend abstract concepts and relationships not explicitly defined in the code.

- Analyzing data flows

ChatGPT performs better at analyzing data flows within a program. It can effectively trace how data moves through a program by examining function calls, variable assignments, and other code structures. This task aligns well with ChatGPT's pattern recognition capabilities, making it a suitable application for the model.

- Generating historical backlog

When given a project architecture as input, OpenAI can generate high-level epics that capture the project's overall goals and objectives. However, it struggles to produce detailed user stories suitable for project management tools like Jira, often lacking the necessary detail and precision for effective use.

- Converting code from one programming language to another

ChatGPT performs reasonably well in converting code, such as from C# to Java Spring Boot, by mapping similar constructs and generating syntactically correct code. However, it encounters limitations when there is no direct mapping between frameworks, as it lacks the deep semantic understanding needed to translate unique framework-specific features.

- Generating new code

ChatGPT excels in generating new code, particularly for unit tests and integration tests. Given a piece of code and a prompt, it can generate tests that accurately verify the code's functionality, showcasing its strength in this area.

- Privacy and security of the Generative AI tool

OpenAI's ChatGPT, like many cloud-based AI services, typically operates over the internet. However, it is possible to use OpenAI models in an isolated private environment without sharing code or sensitive data on the public internet. One option is Azure OpenAI, a service offered by Microsoft through which OpenAI models can be accessed within Azure's secure cloud environment.

Best tip

Use Reinforcement Learning from Human Feedback (RLHF): If possible, use RLHF to fine-tune GPT-4. This involves providing feedback on the AI's outputs, which it can then use to improve future outputs. This can be particularly useful for complex tasks like code migration.

Overall

OpenAI's ChatGPT-4o is a mature and robust language model that provides substantial support to developers in complex scenarios. It excels in tasks like code conversion between programming languages, ensuring accurate translation while maintaining functionality.

- Possibilities 3/5

- Correctness 3/5

- Privacy 5/5

- Maturity 4/5

Overall score: 4/5

Claude-3-sonnet

Claude-3-Sonnet is a language model developed by Anthropic, designed to provide advanced natural language processing capabilities. Its architecture is optimized for maintaining context over extended interactions, offering a balance of intelligence and speed.

- Analyzing project architecture

Claude-3-Sonnet excels in analyzing and comprehending the architecture of existing projects. When presented with a codebase, it provides detailed insights into the project's structure, identifying components, modules, and their interdependencies. Claude-3-Sonnet offers a comprehensive breakdown of project architecture, including class hierarchies, design patterns, and architectural principles employed.

- Analyzing data flows

Claude-3-Sonnet struggles to grasp the full context and nuances of data flows, particularly in complex systems with sophisticated data transformations and conditional logic. This limitation can pose challenges when rewriting projects that heavily rely on intricate data flows or involve sophisticated data processing pipelines, necessitating manual intervention and verification by human developers.

- Generating historical backlog

Claude-3-Sonnet can provide high-level epics that cover main functions and components when prompted with a project's architecture. However, these epics lack detailed acceptance criteria and business requirements. While it may propose user stories to map to the epics, these stories also lack the details needed to create backlog items. It can help capture some user goals, but without clear criteria for confirming completion.

- Converting code from one programming language to another

Claude-3-Sonnet showcases impressive capabilities in converting code, such as translating C# code to Java Spring Boot applications. It effectively translates the logic and functionality of the original codebase into a new implementation, leveraging framework conventions and best practices. However, limitations arise when there is no direct mapping between frameworks, requiring additional manual adjustments and optimizations by developers.

- Generating new code

Claude-3-Sonnet demonstrates remarkable proficiency in generating new code, particularly in unit and integration tests. The AI tool can analyze existing codebases and automatically generate comprehensive test suites covering various scenarios and edge cases.

- Privacy and security of the Generative AI tool

Unfortunately, Anthropic's privacy policy is quite confusing. Before January 2024, they used clients’ data to train their models. The updated legal document ostensibly provides protections and transparency for Anthropic's commercial clients, but it’s recommended to consider the privacy of your data while using Claude.

Best tip

Be specific and detailed: provide the Generative AI with specific and detailed prompts to ensure it understands the task accurately. This includes clear descriptions of what needs to be rewritten, any constraints, and desired outcomes.

Overall

The model's ability to generate coherent and contextually relevant content makes it a valuable tool for developers and businesses seeking to enhance their AI-driven solutions. However, the model might have difficulty fully grasping intricate data flows, especially in systems with complex transformations and conditional logic.

- Possibilities 3/5

- Correctness 3/5

- Privacy 3/5

- Maturity 3/5

Overall score: 3/5

Claude-3-opus

Claude-3-Opus is another language model by Anthropic, designed for handling more extensive and complex interactions. This version of Claude models focuses on delivering high-quality code generation and analysis with high precision.

- Analyzing project architecture

With its advanced natural language processing capabilities, Claude-3-Opus thoroughly examines the codebase, identifying various components, their relationships, and the overall structure. This analysis provides valuable insights into the project's design, enabling developers to understand the system's organization better and make decisions about potential refactoring or optimization efforts.

- Analyzing data flows

While Claude-3-Opus performs reasonably well in analyzing data flows within a project, it may lack the context necessary to fully comprehend all possible scenarios. However, compared to Claude-3-Sonnet, it demonstrates improved capabilities in this area. By examining the flow of data through the application, it can identify potential bottlenecks, inefficiencies, or areas where data integrity might be compromised.

- Generating historical backlog

Given the project architecture as an input prompt, Claude-3-Opus effectively creates high-level epics that encapsulate essential features and functionalities. One of its key strengths is generating detailed and precise acceptance criteria for each epic. However, it may struggle to create granular Jira user stories. Compared to other Claude models, Claude-3-Opus demonstrates superior performance in generating historical backlog based on project architecture.

- Converting code from one programming language to another

Claude-3-Opus shows promising capabilities in converting code from one programming language to another, particularly in converting C# code to Java Spring Boot, a popular Java framework for building web applications. However, it has limitations when there is no direct mapping between frameworks in different programming languages.

- Generating new code

The AI tool demonstrates proficiency in generating both unit tests and integration tests for existing codebases. By leveraging its understanding of the project's architecture and data flows, Claude-3-Opus generates comprehensive test suites, ensuring thorough coverage and improving the overall quality of the codebase.

- Privacy and security of the Generative AI tool

Like other Anthropic models, you need to consider the privacy of your data. For specific details about Anthropic's data privacy and security practices, it would be better to contact them directly.

Best tip

Break down the existing project into components and functionality that need to be recreated. Reducing input complexity minimizes the risk of errors in output.
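One simple way to act on this tip is to group components into batches that each stay under a chosen token budget and to send one batch per prompt. The Java sketch below does this greedily. The component names, the token estimates, and the budget are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class PromptBatchingSketch {
    // Greedily packs components, in their given order, into batches whose
    // estimated token total stays within the budget. A component that alone
    // exceeds the budget ends up in a batch of its own.
    static List<List<String>> batchByTokenBudget(Map<String, Integer> componentTokens, int budget) {
        List<List<String>> batches = new ArrayList<>();
        List<String> current = new ArrayList<>();
        int used = 0;
        for (Map.Entry<String, Integer> entry : componentTokens.entrySet()) {
            int size = entry.getValue();
            if (!current.isEmpty() && used + size > budget) {
                batches.add(current);
                current = new ArrayList<>();
                used = 0;
            }
            current.add(entry.getKey());
            used += size;
        }
        if (!current.isEmpty()) {
            batches.add(current);
        }
        return batches;
    }

    public static void main(String[] args) {
        // Hypothetical components with estimated token counts.
        Map<String, Integer> components = new LinkedHashMap<>();
        components.put("OrderController", 3_000);
        components.put("OrderService", 4_000);
        components.put("OrderRepository", 2_000);
        components.put("OrderEntity", 1_000);
        System.out.println(batchByTokenBudget(components, 6_000));
    }
}
```

Keeping the input order means that components that belong together, such as a service and its repository, can be listed next to each other so they tend to land in the same batch.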

Overall

Claude-3-Opus's strengths are analyzing project architecture and data flows, converting code between languages, and generating new code, which makes the development process easier and improves code quality. This tool empowers developers to quickly deliver high-quality software solutions.

- Possibilities 4/5

- Correctness 4/5

- Privacy 3/5

- Maturity 4/5

Overall score: 4/5

Claude-3-haiku

Claude-3-Haiku is part of Anthropic's suite of Generative AI models, declared as the fastest and most compact model in the Claude family for near-instant responsiveness. It excels in answering simple queries and requests with exceptional speed.

- Analyzing project architecture

Claude-3-Haiku struggles with analyzing project architecture. The model tends to generate overly general responses that closely resemble the input data, limiting its ability to provide meaningful insights into a project's overall structure and organization.

- Analyzing data flows

Similar to its limitations in project architecture analysis, Claude-3-Haiku fails to effectively group components based on their data flow relationships. This lack of precision makes it difficult to clearly understand how data moves throughout the system.

- Generating historical backlog

Claude-3-Haiku is unable to generate Jira user stories effectively. It struggles to produce user stories that meet the standard format and detail required for project management. Additionally, its performance in generating high-level epics is unsatisfactory: the epics lack detailed acceptance criteria and business requirements. These limitations likely stem from its training data, which focused on short forms and concise prompts, restricting its ability to handle more extensive and detailed inputs.

- Converting code from one programming language to another

Claude-3-Haiku proved good at converting code between programming languages, demonstrating an impressive ability to accurately translate code snippets while preserving original functionality and structure.

- Generating new code

Claude-3-Haiku performs well in generating new code, comparable to other Claude-3 models. It can produce code snippets based on given requirements or specifications, providing a useful starting point for developers.

- Privacy and security of the Generative AI tool

Similar to other Anthropic models, you need to consider the privacy of your data, although according to official documentation, Claude 3 Haiku prioritizes enterprise-grade security and robustness. Also, keep in mind that security policies may vary for different Anthropic models.

Best tip

Be aware of Claude-3-Haiku's capabilities: Claude-3-Haiku is a natural language processing model trained on short-form content. It is not designed for complex tasks such as converting an entire project from one programming language to another.

Overall

Its fast response time is a notable advantage, but its performance suffers when dealing with larger prompts and more intricate tasks. Other tools or manual analysis may prove more effective in analyzing project architecture and data flows. However, Claude-3-Haiku can be a valuable asset in a developer's toolkit for straightforward code conversion and generation tasks.

- Possibilities 2/5

- Correctness 2/5

- Privacy 3/5

- Maturity 2/5

Overall score: 2/5

Gemini 1.5 Flash

Gemini 1.5 Flash represents Google's commitment to advancing AI technology; it is designed to handle a wide range of natural language processing tasks, from text generation to complex data analysis. Google presents Gemini Flash as a lightweight, fast, and cost-efficient model featuring multimodal reasoning and a breakthrough long context window of up to one million tokens.

- Analyzing project architecture

Gemini Flash's performance in analyzing project architecture was found to be suboptimal. The AI tool struggled to provide concrete and actionable insights, often generating abstract and high-level observations instead.

- Analyzing data flows

Gemini Flash effectively identified and traced the flow of data between different components and modules, offering developers valuable insights into how information is processed and transformed throughout the system. This capability aids in understanding the existing codebase and identifying potential bottlenecks or inefficiencies. However, the effectiveness of data flow analysis may vary depending on the project's complexity and size.

- Generating historical backlog

Gemini Flash can synthesize meaningful epics that capture overarching goals and functionalities required for the project by analyzing architectural components, dependencies, and interactions within a software system. However, it may fall short of providing granular acceptance criteria and detailed business requirements. The generated epics often lack the precision and specificity needed for effective backlog management and task execution, and it struggles to generate Jira user stories.

- Converting code from one programming language to another

Gemini Flash showed promising results in converting code from one programming language to another, particularly when translating from C# to Java Spring Boot. It successfully mapped and transformed language-specific constructs, such as syntax, data types, and control structures. However, limitations exist, especially when dealing with frameworks or libraries that do not have direct equivalents in the target language.

- Generating new code

Gemini Flash excels in generating new code, including test cases and additional features, enhancing application reliability and functionality. It analyzed the existing codebase and generated test cases that cover various scenarios and edge cases.

- Privacy and security of the Generative AI tool

Google was one of the first in the industry to publish an AI/ML privacy commitment, which outlines its belief that customers should have the highest level of security and control over their data stored in the cloud. That commitment extends to Google Cloud Generative AI products. You can set up a Gemini AI model in Google Cloud and use an encrypted TLS connection over the internet to connect from your on-premises environment to Google Cloud.

Best tip

Use prompt engineering: Start by providing the necessary background information or context within the prompt so the model understands the task's scope and nuances. Experiment with different phrasing and structures, and refine prompts iteratively based on the quality of the outputs. Specifying any constraints or requirements directly in the prompt can further tailor the model's output to meet your needs.
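The tip above can be illustrated with a small sketch. It is not tied to any Gemini SDK; `PromptSpec`, `build_prompt`, and the example task text are hypothetical names introduced here only to show one way of placing context first, then the task, then explicit constraints, and of keeping several phrasings of the same task side by side for comparison.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the prompt parts named in the tip."""
    task: str
    context: str = ""
    constraints: list[str] = field(default_factory=list)


def build_prompt(spec: PromptSpec) -> str:
    """Assemble context first, then the task, then explicit constraints."""
    sections = []
    if spec.context.strip():
        sections.append("Context:\n" + spec.context.strip())
    sections.append("Task:\n" + spec.task.strip())
    if spec.constraints:
        bullets = "\n".join(f"- {c}" for c in spec.constraints)
        sections.append("Constraints:\n" + bullets)
    return "\n\n".join(sections)


# Two phrasings of the same (made-up) task, so outputs can be compared.
variants = [
    PromptSpec(
        task="Convert the OrderService class from C# to Java Spring Boot.",
        context="Legacy .NET order-management service being migrated.",
        constraints=["Preserve public method names", "Target Java 17"],
    ),
    PromptSpec(
        task="Rewrite OrderService in Java Spring Boot, keeping behavior identical.",
        context="Legacy .NET order-management service being migrated.",
        constraints=["Preserve public method names", "Target Java 17"],
    ),
]
prompts = [build_prompt(v) for v in variants]
```

Each string in `prompts` would then be sent to the model separately, and the variant that yields the better output becomes the starting point for the next refinement.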

Overall

By using Gemini Flash's capabilities in data flow analysis, code translation, and test creation, developers can optimize their workflow and concentrate on strategic tasks. However, it is important to remember that Gemini Flash is optimized for high-speed processing, which makes it less effective for complex tasks.

- Possibilities 2/5

- Correctness 2/5

- Privacy 5/5

- Maturity 2/5

Overall score: 2/5

Gemini 1.5 Pro

Gemini 1.5 Pro is the largest and most capable model created by Google, designed for handling highly complex tasks. While it is the slowest among its counterparts, it offers significant capabilities. The model targets professionals and developers needing a reliable assistant for intricate tasks.

- Analyzing project architecture

Gemini Pro is highly effective in analyzing and understanding the architecture of existing programming projects, surpassing Gemini Flash in this area. It provides detailed insights into project structure and component relationships.

- Analyzing data flows

The model demonstrates proficiency in analyzing data flows, similar to its performance in project architecture analysis. It accurately traces and understands data movement throughout the codebase, identifying how information is processed and exchanged between modules.

- Generating historical backlog

Using project architecture as input, Gemini Pro creates high-level epics that encapsulate main features and functionalities. While it may not generate specific Jira user stories, it excels at providing detailed acceptance criteria for each epic.

- Converting code from one programming language to another

The model shows impressive results in code conversion, particularly from C# to Java Spring Boot. It effectively maps and transforms syntax, data structures, and constructs between languages. However, limitations exist when there is no direct mapping between frameworks or libraries.

- Generating new code

Gemini Pro excels in generating new code, especially for unit and integration tests. It analyzes the existing codebase, understands functionality and requirements, and automatically generates comprehensive test cases.

- Privacy and security of the Generative AI tool

Like other Gemini models, Gemini Pro is packed with advanced security and data governance features, making it ideal for organizations with strict data security requirements.

Best tip

Manage context: Gemini Pro incorporates previous prompts into its input when generating responses. This use of historical context can significantly influence the model's output and lead to different responses. Include only the necessary information in your input to avoid overwhelming the model with irrelevant details.
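One possible way to apply this tip is to trim the conversation history before each request. The sketch below is an assumption-laden illustration, not part of any Gemini API: `trim_history` is a hypothetical helper, the history is a plain list of dictionaries, and a character budget stands in as a crude proxy for a token limit.

```python
def trim_history(history: list[dict], max_chars: int) -> list[dict]:
    """Keep the most recent turns whose combined text fits in max_chars.

    Walks from the newest turn backwards and stops at the first turn
    that would exceed the budget, then restores chronological order.
    """
    kept = []
    used = 0
    for turn in reversed(history):
        size = len(turn["text"])
        if used + size > max_chars:
            break
        kept.append(turn)
        used += size
    kept.reverse()
    return kept


# Made-up conversation used only for illustration.
history = [
    {"role": "user", "text": "Summarize the billing module."},
    {"role": "model", "text": "It computes invoices and taxes."},
    {"role": "user", "text": "Now list its data flows."},
]

# With a 60-character budget, only the two most recent turns are kept.
recent = trim_history(history, max_chars=60)
```

Dropping older turns by recency is only one strategy; selecting turns by relevance to the current question is an alternative when earlier context still matters.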

Overall

Gemini Pro shows remarkable capabilities in areas such as project architecture analysis, data flow understanding, code conversion, and new code generation. However, there may be instances where the AI encounters challenges or limitations, especially with complex or highly specialized codebases. As such, while Gemini Pro offers significant advantages, developers should remain mindful of its current boundaries and use human expertise when necessary.

- Possibilities 4/5

- Correctness 3/5

- Privacy 5/5

- Maturity 3/5

Overall score: 4/5

LLM comparison summary

Embrace an AI-driven approach to legacy code modernization

Generative AI offers practical support for rewriting legacy systems. While tools like GPT-4o and Claude-3-Opus can’t fully automate the process, they excel in tasks like analyzing codebases and refining requirements. Combined with advanced platforms for data analysis and workflows, they help create a more efficient and precise redevelopment process.

This synergy allows developers to focus on essential tasks, reducing project timelines and improving outcomes.